Abstract

In this paper, we address the problems of contour detection, bottom-up grouping, object detection and semantic segmentation on RGB-D data. We focus on the challenging setting of cluttered indoor scenes, and evaluate our approach on the recently introduced NYU-Depth V2 (NYUD2) dataset (Silberman et al., ECCV, 2012). We propose algorithms for object boundary detection and hierarchical segmentation that generalize the \(gPb-ucm\) approach of Arbelaez et al. (TPAMI, 2011) by making effective use of depth information. We show that our system can label each contour with its type (depth, normal or albedo). We also propose a generic method for long-range amodal completion of surfaces and show its effectiveness in grouping. We train RGB-D object detectors by analyzing and computing histograms of oriented gradients on the depth image and using them with deformable part models (Felzenszwalb et al., TPAMI, 2010). We observe that this simple strategy for training object detectors significantly outperforms more complicated models in the literature. We then turn to the problem of semantic segmentation, for which we propose an approach that classifies superpixels into the dominant object categories in the NYUD2 dataset. We design generic and class-specific features to encode the appearance and geometry of objects. We also show that additional features computed from RGB-D object detectors and scene classifiers further improve semantic segmentation accuracy. In all of these tasks, we report significant improvements over the state-of-the-art.

Notes

In recent work, Girshick et al. (2014) report much better object detection performance by using features from a Convolutional Neural Network (CNN) trained on a large image classification dataset (Deng et al. 2009). In our more recent work (Gupta et al. 2014), we experimented with these CNN-based features and observed similar improvements in object detection performance; we also showed how CNNs can be used to learn features from depth images. We refer the reader to Gupta et al. (2014) for more details.

ODS refers to optimal dataset scale, OIS refers to optimal image scale, and bestC is the average overlap between each ground-truth region and its best-matching segment in the segmentation hierarchy. We refer the reader to Arbelaez et al. (2011) for more details about these metrics.
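To illustrate how ODS and OIS differ, the sketch below computes both from per-image boundary-matching counts evaluated at a shared list of thresholds. It assumes the counts have already been produced by a boundary-matching step such as the one in the BSDS benchmark; the array names (`cnt_r`, `sum_r`, `cnt_p`, `sum_p`) and the pooling rule follow our reading of that benchmark and are not the authors' code.

```python
import numpy as np

def f_measure(p, r):
    """Harmonic mean of precision and recall, defined as 0 when both are 0."""
    p, r = np.asarray(p, float), np.asarray(r, float)
    denom = p + r
    return np.where(denom > 0, 2 * p * r / np.where(denom > 0, denom, 1), 0.0)

def ods_ois(cnt_r, sum_r, cnt_p, sum_p):
    """All inputs have shape (n_images, n_thresholds).

    cnt_r: matched ground-truth boundary pixels; sum_r: all ground-truth boundary pixels.
    cnt_p: matched predicted boundary pixels;    sum_p: all predicted boundary pixels.
    """
    cnt_r, sum_r, cnt_p, sum_p = (np.asarray(a, float) for a in (cnt_r, sum_r, cnt_p, sum_p))
    # ODS: pool counts over the whole dataset at each threshold, keep the single best threshold.
    R = cnt_r.sum(axis=0) / np.maximum(sum_r.sum(axis=0), 1)
    P = cnt_p.sum(axis=0) / np.maximum(sum_p.sum(axis=0), 1)
    ods = f_measure(P, R).max()
    # OIS: choose the best threshold separately for each image, then pool those counts.
    r_img = cnt_r / np.maximum(sum_r, 1)
    p_img = cnt_p / np.maximum(sum_p, 1)
    best = f_measure(p_img, r_img).argmax(axis=1)
    rows = np.arange(cnt_r.shape[0])
    R_ois = cnt_r[rows, best].sum() / max(sum_r[rows, best].sum(), 1)
    P_ois = cnt_p[rows, best].sum() / max(sum_p[rows, best].sum(), 1)
    return float(ods), float(f_measure(P_ois, R_ois))
```

Because OIS may pick a different threshold for every image, it is never lower than ODS on the same counts; images with no predicted boundaries get precision 0 here, which is a simplifying choice.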

We run their code on NYUD2 with our bottom-up segmentation hierarchy using the same classifier hyper-parameters as specified in their code.

References

Arbelaez, P., Hariharan, B., Gu, C., Gupta, S., Bourdev, L., & Malik, J. (2012). Semantic segmentation using regions and parts. In CVPR.

Arbelaez, P., Maire, M., Fowlkes, C., & Malik, J. (2011). Contour detection and hierarchical image segmentation. In TPAMI.

Barron, J. T., & Malik, J. (2013). Intrinsic scene properties from a single RGB-D image. In CVPR.

Bourdev, L., Maji, S., Brox, T., & Malik, J. (2010). Detecting people using mutually consistent poselet activations. In ECCV.

Breiman, L. (2001). Random forests. Machine Learning, 45, 5–32.

Carreira, J., Caseiro, R., Batista, J., & Sminchisescu, C. (2012). Semantic segmentation with second-order pooling. In ECCV.

Carreira, J., Li, F., & Sminchisescu, C. (2012). Object recognition by sequential figure-ground ranking. In IJCV.

Criminisi, A., Shotton, J., & Konukoglu, E. (2012). Decision forests: A unified framework for classification, regression, density estimation, manifold learning and semi-supervised learning. Foundations and Trends in Computer Graphics and Vision.

Dalal, N., & Triggs, B. (2005). Histograms of oriented gradients for human detection. In CVPR.

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). ImageNet: A large-scale hierarchical image database. In CVPR.

Dollár, P., & Zitnick, C. L. (2013). Structured forests for fast edge detection. In ICCV.

Endres, I., Shih, K. J., Jiaa, J., & Hoiem, D. (2013). Learning collections of part models for object recognition. In CVPR.

Everingham, M., Van Gool, L., Williams, C. K. I., Winn, J., & Zisserman, A. (2010). The PASCAL Visual Object Classes (VOC) Challenge. In IJCV.

Everingham, M., Van Gool, L., Williams, C. K. I., Winn, J., & Zisserman, A. (2012). The PASCAL Visual Object Classes Challenge (VOC2012) Results. http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html

Felzenszwalb, P., Girshick, R., McAllester, D., & Ramanan, D. (2010). Object detection with discriminatively trained part based models. In TPAMI.

Frome, A., Huber, D., Kolluri, R., Bülow, T., & Malik, J. (2004). Recognizing objects in range data using regional point descriptors. In ECCV.

Girshick, R., Donahue, J., Darrell, T., & Malik, J. (2014). Rich feature hierarchies for accurate object detection and semantic segmentation. In CVPR.

Gupta, S., Arbelaez, P., & Malik, J. (2013). Perceptual organization and recognition of indoor scenes from RGB-D images. In CVPR.

Gupta, A., Efros, A., & Hebert, M. (2010). Blocks world revisited: Image understanding using qualitative geometry and mechanics. In ECCV.

Gupta, S., Girshick, R., Arbeláez, P., & Malik, J. (2014). Learning rich features from RGB-D images for Object detection and segmentation. In ECCV.

Gupta, A., Satkin, S., Efros, A., & Hebert, M. (2011). From 3D scene geometry to human workspace. In CVPR.

Hedau, V., Hoiem, D., & Forsyth, D. (2012). Recovering free space of indoor scenes from a single image. In CVPR.

Hoiem, D., Efros, A., & Hebert, M. (2007). Recovering surface layout from an image. In IJCV.

Hoiem, D., Efros, A., & Hebert, M. (2011). Recovering occlusion boundaries from an image. In IJCV.

Izadi, S., Kim, D., Hilliges, O., Molyneaux, D., Newcombe, R., Kohli, P., Shotton, J., Hodges, S., Freeman, D., Davison, A., & Fitzgibbon, A. (2011). KinectFusion: Real-time 3D reconstruction and interaction using a moving depth camera. In UIST.

Janoch, A., Karayev, S., Jia, Y., Barron, J. T., Fritz, M., Saenko, K., et al. (2013). A category-level 3D object dataset: Putting the Kinect to work. Consumer Depth Cameras for Computer Vision (pp. 141–165). Berlin: Springer.

Johnson, A., & Hebert, M. (1999). Using spin images for efficient object recognition in cluttered 3D scenes. In TPAMI.

Kanizsa, G. (1979). Organization in Vision: Essays on Gestalt Perception. New York: Praeger Publishers.

Koppula, H., Anand, A., Joachims, T., & Saxena, A. (2011). Semantic labeling of 3D point clouds for indoor scenes. In NIPS.

Ladicky, L., Russell, C., Kohli, P., & Torr, P. H. S. (2010). Graph cut based inference with co-occurrence statistics. In ECCV.

Lai, K., Bo, L., Ren, X., & Fox, D. (2011). A large-scale hierarchical multi-view RGB-D object dataset. In ICRA.

Lai, K., Bo, L., Ren, X., & Fox, D. (2013). RGB-D object recognition: Features, algorithms, and a large scale benchmark. In A. Fossati, J. Gall, H. Grabner, X. Ren, & K. Konolige (Eds.), Consumer Depth Cameras for Computer Vision: Research Topics and Applications (pp. 167–192). Berlin: Springer.

Lazebnik, S., Schmid, C., & Ponce, J. (2006). Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. In CVPR.

Lee, D., Gupta, A., Hebert, M., & Kanade, T. (2010). Estimating spatial layout of rooms using volumetric reasoning about objects and surfaces. In NIPS.

Lee, D., Hebert, M., & Kanade, T. (2009). Geometric reasoning for single image structure recovery. In CVPR.

Maji, S., Berg, A. C., & Malik, J. (2013). Efficient classification for additive kernel SVMs. In TPAMI.

Martin, D., Fowlkes, C., & Malik, J. (2004). Learning to detect natural image boundaries using local brightness, color and texture cues. In TPAMI.

Reconstruction Meets Recognition Challenge, ICCV 2013. (2013). http://ttic.uchicago.edu/~rurtasun/rmrc/index.php

Ren, X., & Bo, L. (2012). Discriminatively trained sparse code gradients for contour detection. In NIPS.

Ren, X., Bo, L., & Fox, D. (2012). RGB-(D) scene labeling: Features and algorithms. In CVPR.

Rusu, R. B., Blodow, N., & Beetz, M. (2009). Fast point feature histograms (FPFH) for 3D registration. In ICRA.

Savitzky, A., & Golay, M. (1964). Smoothing and differentiation of data by simplified least squares procedures. Analytical Chemistry, 36(8), 1627–1639.

Saxena, A., Chung, S., & Ng, A. (2008). 3-D depth reconstruction from a single still image. In IJCV.

Shotton, J., Fitzgibbon, A. W., Cook, M., Sharp, T., Finocchio, M., Moore, R., Kipman, A., & Blake, A. (2011). Real-time human pose recognition in parts from single depth images. In CVPR.

Silberman, N., Hoiem, D., Kohli, P., & Fergus, R. (2012). Indoor segmentation and support inference from RGBD images. In ECCV.

Kim, B. S., Xu, S., & Savarese, S. (2013). Accurate localization of 3D objects from RGB-D data using segmentation hypotheses. In CVPR.

Tang, S., Wang, X., Lv, X., Han, T. X., Keller, J., He, Z., Skubic, M., & Lao, S. (2012). Histogram of oriented normal vectors for object recognition with a depth sensor. In ACCV.

van de Sande, K. E. A., Gevers, T., & Snoek, C. G. M. (2010). Evaluating color descriptors for object and scene recognition. In TPAMI.

Viola, P., & Jones, M. (2001). Rapid object detection using a boosted cascade of simple features. In CVPR.

Ye, E. S. (2013). Object detection in RGB-D indoor scenes. Master’s thesis, EECS Department, University of California, Berkeley.

Acknowledgments

We are thankful to Jon Barron, Bharath Hariharan, and Pulkit Agrawal for the useful discussions. This work was sponsored by ONR SMARTS MURI N00014-09-1-1051, ONR MURI N00014-10-10933, and a Berkeley Fellowship.

Additional information

Communicated by Derek Hoiem, James Hays, Jianxiong Xiao and Aditya Khosla.

Appendices

Appendix 1: Extracting a Geocentric Coordinate Frame

The direction of gravity imposes strong structure on how the real world looks: the floor and other supporting surfaces are horizontal, and walls are vertical. To leverage this structure, we develop a simple algorithm to determine the direction of gravity.

Note that this differs from the Manhattan World assumption made in past work, e.g., by Gupta et al. (2011). The assumption that there are three mutually orthogonal principal directions is not universally valid. On the other hand, the role of the gravity vector in architectural design is equally important for a hut in Zimbabwe or an apartment in Manhattan.

Since depth data is available, we propose a simple yet robust algorithm to estimate the direction of gravity. Intuitively, the algorithm finds the direction that is most aligned with, or most orthogonal to, the locally estimated surface normals at as many points as possible. It starts with an initial estimate of the gravity vector and iteratively refines it with the following two steps.

1. Using the current estimate of the gravity direction \(\varvec{g}_{i-1}\), make hard assignments of the local surface normals to an aligned set \(\mathcal {N}_{\Vert }\) and an orthogonal set \(\mathcal {N}_{\bot }\), based on a threshold \(d\) on the angle between each local surface normal and \(\varvec{g}_{i-1}\). Stack the vectors in \(\mathcal {N}_{\Vert }\) as the columns of a matrix \(N_{\Vert }\), and similarly those in \(\mathcal {N}_{\bot }\) to form \(N_{\bot }\).

$$\begin{aligned}&\mathcal {N}_{\Vert } = \{\varvec{n}: \theta (\varvec{n},\varvec{g}_{i-1}) < d \text{ or } \theta (\varvec{n},\varvec{g}_{i-1}) > 180^\circ -d\} \\&\mathcal {N}_{\bot } = \{\varvec{n}: 90^\circ -d < \theta (\varvec{n},\varvec{g}_{i-1}) < 90^\circ + d\} \\&\text{ where, } \theta (\varvec{a},\varvec{b}) = \text{ Angle } \text{ between } \varvec{a} \text{ and } \varvec{b}\text{. } \end{aligned}$$

Typically, \(\mathcal {N}_{\Vert }\) contains normals from points on the floor and table-tops, and \(\mathcal {N}_{\bot }\) contains normals from points on the walls.

2. Solve for a new estimate of the gravity vector \(\varvec{g}_i\) that is as aligned as possible with the normals in the aligned set and as orthogonal as possible to the normals in the orthogonal set. This corresponds to solving the following optimization problem:

$$\begin{aligned} \min _{\varvec{g}:\Vert \varvec{g}\Vert _2 = 1} {\sum _{\varvec{n}\in \mathcal {N}_{\bot }} {\cos ^2(\theta (\varvec{n},\varvec{g}))} + \sum _{\varvec{n}\in \mathcal {N}_{\Vert }} {\sin ^2(\theta (\varvec{n},\varvec{g}))}} \end{aligned}$$

For unit vectors, \(\cos ^2(\theta (\varvec{n},\varvec{g})) = (\varvec{n}^t\varvec{g})^2\) and \(\sin ^2(\theta (\varvec{n},\varvec{g})) = 1 - (\varvec{n}^t\varvec{g})^2\), so the objective equals \(\varvec{g}^t(N_{\bot }N^t_{\bot } - N_{\Vert }N^t_{\Vert })\varvec{g} + |\mathcal {N}_{\Vert }|\). The minimizer is therefore the eigenvector with the smallest eigenvalue of the \(3\times 3\) matrix \(N_{\bot }N^t_{\bot } - N_{\Vert }N^t_{\Vert }\).

Our initial estimate for the gravity vector \(\varvec{g}_0\) is the Y-axis, and we run 5 iterations with \(d = 45^\circ \) followed by 5 iterations with \(d = 15^\circ \).
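The two-step procedure above can be sketched in NumPy as follows. This is a minimal sketch, not the authors' implementation: it assumes the local surface normals have already been estimated and are passed as an `(n, 3)` array (rows, so \(N N^t\) in the text becomes `A.T @ A` here), and the function names are hypothetical. The initial Y-axis estimate and the 45°/15° schedule follow the text.

```python
import numpy as np

def angle_deg(normals, g):
    """Angle in degrees between each unit normal (a row of `normals`) and the unit vector g."""
    return np.degrees(np.arccos(np.clip(normals @ g, -1.0, 1.0)))

def refine_gravity(normals, g, d):
    """One iteration: hard-assign normals with threshold d, then solve the eigenproblem."""
    theta = angle_deg(normals, g)
    aligned = normals[(theta < d) | (theta > 180.0 - d)]
    ortho = normals[(theta > 90.0 - d) & (theta < 90.0 + d)]
    # Rows are normals, so N_perp N_perp^t - N_par N_par^t becomes the 3x3 matrix below.
    M = ortho.T @ ortho - aligned.T @ aligned
    _, evecs = np.linalg.eigh(M)  # eigh returns eigenvalues in ascending order
    g_new = evecs[:, 0]           # eigenvector of the smallest eigenvalue
    # An eigenvector's sign is arbitrary; keep it consistent with the previous estimate.
    return -g_new if g_new @ g < 0 else g_new

def estimate_gravity(normals, g0=(0.0, 1.0, 0.0), schedule=((45.0, 5), (15.0, 5))):
    """Iterate refine_gravity: 5 iterations with d = 45 deg, then 5 with d = 15 deg."""
    normals = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    g = np.asarray(g0, dtype=float)
    g = g / np.linalg.norm(g)
    for d, iters in schedule:
        for _ in range(iters):
            g = refine_gravity(normals, g, d)
    return g
```

Using `np.linalg.eigh` rather than a general eigensolver exploits the fact that the matrix is symmetric, which guarantees real eigenvalues sorted in ascending order.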

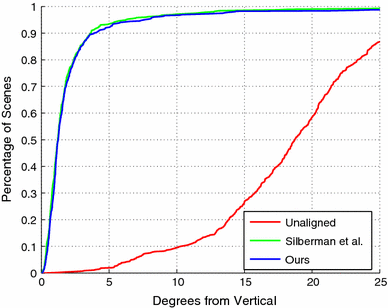

To benchmark the accuracy of our gravity estimate, we use the metric of Silberman et al. (2012): we rotate the point cloud to align the Y-axis with the estimated gravity direction and measure the angle the floor makes with the Y-axis. Fig. 5 shows the cumulative distribution of this angle. Our gravity estimate is within \(5^\circ \) of the actual direction for 90% of the images, and works as well as the method of Silberman et al. (2012) while being significantly simpler.
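A minimal sketch of this benchmark, under stated assumptions: we interpret "the angle the floor makes with the Y-axis" as the angle between the floor's normal and the Y-axis, and assume a floor normal per image is available (e.g., from a plane fit to pixels labeled floor). The rotation uses Rodrigues' formula; all function names are hypothetical.

```python
import numpy as np

def rotation_aligning(a, b):
    """Rotation matrix R with R @ a = b for vectors a, b (Rodrigues' formula)."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    v = np.cross(a, b)
    c = float(a @ b)
    if np.isclose(c, -1.0):
        raise ValueError("antiparallel vectors: the rotation axis is ambiguous")
    K = np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])
    return np.eye(3) + K + K @ K / (1.0 + c)

def floor_angle_deg(g_est, floor_normal):
    """Angle between the Y-axis and the floor normal after rotating g_est onto the Y-axis."""
    y = np.array([0.0, 1.0, 0.0])
    n = rotation_aligning(g_est, y) @ (floor_normal / np.linalg.norm(floor_normal))
    # Floor normals may point up or down; fold the angle into [0, 90] degrees.
    return float(np.degrees(np.arccos(np.clip(abs(n @ y), 0.0, 1.0))))

def fraction_within(angles_deg, tol_deg=5.0):
    """One point of the cumulative distribution: share of images with error <= tol_deg."""
    return float(np.mean(np.asarray(angles_deg) <= tol_deg))
```

Because the rotation preserves angles, the result equals the angle between the floor normal and `g_est` directly; the explicit rotation only mirrors the procedure described above. Evaluating `fraction_within` over a range of tolerances traces out the cumulative distribution.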

Appendix 2: Histogram of Depth Gradients

Suppose we are looking at a plane \(N_XX+ N_YY+N_ZZ + d = 0\) in space, with unit normal \((N_X, N_Y, N_Z)\). A point \(\left( X,Y,Z\right) \) in the world is imaged at the point \(\left( x=\frac{fX}{Z},y=\frac{fY}{Z}\right) \), where \(f\) is the focal length of the camera. Substituting into the plane equation gives \(N_X\frac{Zx}{f}+ N_Y\frac{Zy}{f}+N_ZZ + d = 0\), which simplifies to \(Z = \frac{-fd}{fN_Z+N_Yy+N_Xx}\). Differentiating with respect to the image coordinates \(x\) and \(y\) gives

$$\begin{aligned} \frac{\partial Z}{\partial x} = \frac{fdN_X}{(fN_Z+N_Yy+N_Xx)^2} = \frac{Z^2N_X}{fd}, \qquad \frac{\partial Z}{\partial y} = \frac{fdN_Y}{(fN_Z+N_Yy+N_Xx)^2} = \frac{Z^2N_Y}{fd}. \end{aligned}$$

Combining this with the relation between disparity \(\delta \) and depth \(Z\), \(\delta = \frac{bf}{Z}\), where \(b\) is the baseline of the Kinect, gives the derivatives of the disparity \(\delta \):

$$\begin{aligned} \frac{\partial \delta }{\partial x} = -\frac{bf}{Z^2}\frac{\partial Z}{\partial x} = -\frac{bN_X}{d}, \qquad \frac{\partial \delta }{\partial y} = -\frac{bf}{Z^2}\frac{\partial Z}{\partial y} = -\frac{bN_Y}{d}. \end{aligned}$$

Thus, the gradient orientation of both the disparity and the depth image is \(\tan ^{-1}(\frac{N_Y}{N_X})\), although the contrast is swapped (the two gradients have opposite signs). The gradient magnitude for the depth image is \(\frac{Z^2\sqrt{1-N_Z^2}}{df} = \frac{Z^2\sin (\theta )}{df}\), and for the disparity image is \(\frac{b\sqrt{1-N_Z^2}}{d} = \frac{b\sin (\theta )}{d}\), where \(\theta \) is the angle between the surface normal and the camera's optical (Z) axis, i.e., the angle between the surface and the image plane.
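The closed-form gradients above can be checked numerically by rendering a synthetic plane and differentiating it with finite differences. The focal length, baseline, plane offset and normal below are hypothetical, roughly Kinect-like values chosen only for illustration; pixel coordinates are measured from the principal point.

```python
import numpy as np

def plane_depth(normal, d, f, xs, ys):
    """Depth Z(x, y) of the plane N_X X + N_Y Y + N_Z Z + d = 0 under a pinhole camera."""
    nx, ny, nz = normal
    return -f * d / (f * nz + ny * ys + nx * xs)

def analytic_gradients(normal, d, f, b, Z):
    """Closed-form gradients of depth and of disparity (delta = b f / Z) from the derivation."""
    nx, ny, _ = normal
    dZ = (Z ** 2 * nx / (f * d), Z ** 2 * ny / (f * d))
    ddelta = (-b * nx / d, -b * ny / d)
    return dZ, ddelta

# Hypothetical parameters: focal length (px), baseline (m), plane offset (m), unit normal.
f, b, d = 525.0, 0.075, -2.0
normal = np.array([0.3, 0.4, np.sqrt(1.0 - 0.3 ** 2 - 0.4 ** 2)])
ys, xs = np.mgrid[-50:51, -50:51].astype(float)
Z = plane_depth(normal, d, f, xs, ys)
delta = b * f / Z

# np.gradient returns the derivative along axis 0 (y) first, then along axis 1 (x).
dZ_dy, dZ_dx = np.gradient(Z)
ddelta_dy, ddelta_dx = np.gradient(delta)
(aZx, aZy), (aDx, aDy) = analytic_gradients(normal, d, f, b, Z)

inner = (slice(1, -1), slice(1, -1))  # skip borders, where np.gradient is one-sided
print("max relative error of dZ/dx:", np.max(np.abs(dZ_dx[inner] / aZx[inner] - 1.0)))
print("disparity gradient matches -b N / d:", np.allclose(ddelta_dx, aDx), np.allclose(ddelta_dy, aDy))
```

The disparity of a plane is linear in \(x\) and \(y\), so its finite-difference gradient is constant, while the depth gradient grows with \(Z^2\) across the same plane.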

Note that the gradient magnitudes of the disparity and depth images differ in that the depth gradient carries an extra factor of \(Z^2\), which gives points farther away a larger gradient magnitude. This agrees well with the noise model of the Kinect: disparity values are quantized, which leads to an error in \(Z\) proportional to \(Z^2\). In this sense, the disparity gradient is much better behaved than the gradient of the depth image.
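To make the \(Z^2\) dependence of the noise concrete, one can propagate a disparity quantization step through \(\delta = \frac{bf}{Z}\) to first order. The step size \(\Delta \delta \) is a symbol introduced here for illustration, not a value reported for the Kinect:

$$\begin{aligned} Z = \frac{bf}{\delta } \;\Rightarrow \; \left| \frac{\partial Z}{\partial \delta }\right| = \frac{bf}{\delta ^2} = \frac{Z^2}{bf} \;\Rightarrow \; \Delta Z \approx \frac{Z^2}{bf}\,\Delta \delta . \end{aligned}$$

For a fixed quantization step, doubling the distance to a surface therefore roughly quadruples the depth error, mirroring the \(Z^2\) factor in the depth gradient.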

Note that the subsequent contrast normalization step in standard HOG computation essentially removes this difference between the two quantities (assuming that nearby cells have comparable \(Z\) values).
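A minimal sketch of why normalization removes the difference, assuming Dalal and Triggs (2005)-style unsigned orientation histograms (0 to 180°) with joint L2 normalization over a block; the DPM features actually used may differ. If \(Z\) is constant over a block, the depth gradient equals the disparity gradient times \(-Z^2/(bf)\), and the normalized descriptors coincide. The parameter values and function names are hypothetical.

```python
import numpy as np

def cell_histogram(gx, gy, n_bins=9):
    """Unsigned-orientation (0-180 deg) histogram of one cell, votes weighted by magnitude."""
    mag = np.hypot(gx, gy)
    ang = np.degrees(np.arctan2(gy, gx)) % 180.0  # opposite gradients share a bin
    bins = np.minimum((ang // (180.0 / n_bins)).astype(int), n_bins - 1)
    return np.bincount(bins.ravel(), weights=mag.ravel(), minlength=n_bins)

def block_descriptor(gx, gy, cell=8, n_bins=9, eps=1e-6):
    """Concatenate the cell histograms of one block and L2-normalize them jointly.

    Assumes the block height and width are multiples of `cell`.
    """
    h, w = gx.shape
    hists = [cell_histogram(gx[i:i + cell, j:j + cell], gy[i:i + cell, j:j + cell], n_bins)
             for i in range(0, h, cell) for j in range(0, w, cell)]
    v = np.concatenate(hists)
    return v / np.sqrt(np.sum(v ** 2) + eps ** 2)

# Hypothetical 2x2-cell block of disparity gradients, and the corresponding depth gradients
# for a block at constant depth Z: grad Z = -(Z^2 / (b f)) * grad delta.
rng = np.random.default_rng(1)
gx, gy = rng.standard_normal((2, 16, 16))
Z, b, f = 2.5, 0.075, 525.0
k = Z ** 2 / (b * f)
desc_disparity = block_descriptor(gx, gy)
desc_depth = block_descriptor(-k * gx, -k * gy)
print("descriptors match:", np.allclose(desc_disparity, desc_depth))
```

When \(Z\) varies within a block, each pixel's vote is reweighted by its own \(Z^2\) and the two descriptors drift apart, which is why the text assumes comparable \(Z\) in nearby cells.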

Appendix 3: Visualization for RGB-D Detector Parts

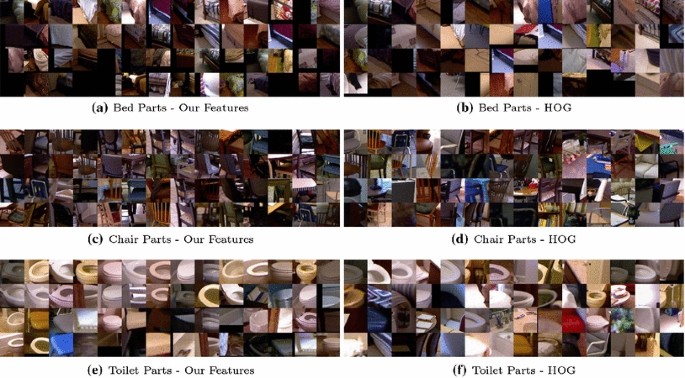

One interesting thing to visualize is what the DPM learns with these features. The question we ask here is whether the parts we obtain are semantically meaningful. The hope is that, with access to depth data, the discovered parts should be more meaningful than those obtained from appearance data alone.

In Fig. 6, we visualize the DPM parts for the bed, chair and toilet detectors. We run each detector on a set of images not seen during training and pick the top few detections by detector score. We then crop the image region where each DPM part was placed, and visualize these image patches for the different DPM parts.
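The patch-collection step can be sketched as follows. The `Detection` record and `part_patches` helper are hypothetical: they assume the detector output has already been converted to an image index, a score, and one pixel-coordinate box per DPM part. Running the DPM itself is not shown.

```python
from dataclasses import dataclass, field
import numpy as np

@dataclass
class Detection:
    """Hypothetical detection record: image index, score, and one (x0, y0, x1, y1) box per part."""
    image_id: int
    score: float
    part_boxes: list = field(default_factory=list)

def part_patches(detections, images, top_k=5):
    """Return patches[p], the crops for part p from the top_k detections by score."""
    top = sorted(detections, key=lambda det: det.score, reverse=True)[:top_k]
    n_parts = len(top[0].part_boxes) if top else 0
    patches = [[] for _ in range(n_parts)]
    for det in top:
        img = images[det.image_id]
        h, w = img.shape[:2]
        for p, (x0, y0, x1, y1) in enumerate(det.part_boxes):
            # Clip each part box to the image, since parts can extend past the border.
            x0, y0 = max(int(x0), 0), max(int(y0), 0)
            x1, y1 = min(int(x1), w), min(int(y1), h)
            if x1 > x0 and y1 > y0:
                patches[p].append(img[y0:y1, x0:x1])
    return patches
```

Grouping crops by part index rather than by detection makes it easy to lay out one row per part, which is the arrangement needed to judge whether a part fires on semantically consistent regions.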

We observe that the parts are semantically tight, that is, a given part fires on semantically similar regions of the object class. For comparison, we also provide visualizations of the parts learned by an appearance-only DPM. As expected, the parts from our DPM are semantically tighter than those from the appearance-only DPM. In the context of intensity images, there has recently been much work on obtaining mid-level parts, either in an unsupervised manner from weak annotations such as bounding boxes (Endres et al. 2013), or in a supervised manner from strong annotations such as keypoints (Bourdev et al. 2010). These visualizations suggest that it may be possible to obtain very reasonable mid-level parts from weak annotations in RGB-D images, which could then be used to train appearance-only part detectors.

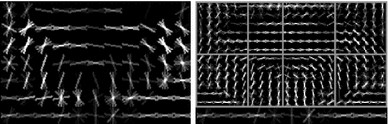

We also visualize what the HHG features learn. In Fig. 7, we see that, as expected, the model picks up on shape cues: there are flat horizontal surfaces along the sides and in the middle, corresponding to the floor and the top of the bed, and vertical surfaces rising from the floor to the top of the bed.

Appendix 4: Scene Classification

We address the task of indoor scene classification based on the idea that a scene can be recognized by identifying the objects in it. We therefore use our predicted semantic segmentation maps as features for this task. We use the spatial pyramid matching (SPM) formulation of Lazebnik et al. (2006), but instead of histograms of vector-quantized SIFT descriptors, our feature is the average presence of each semantic class (as predicted by our algorithm) in each pyramid cell.

To evaluate our performance, we use the scene labels provided with NYUD2. The dataset has 27 scene categories, but only a few are well represented. Hence, we merge the 27 categories into 10: the 9 most common categories and one category for the rest. As before, we train on the 795 images of the train set and test on the remaining 654 images. We report the diagonal of the confusion matrix for each scene class, and use the mean of the diagonal and the overall accuracy as aggregate measures of performance.
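A minimal sketch of this evaluation protocol. The choices below are assumptions, not details from the paper: the 9 most common categories are counted on the training labels, merged categories are renamed `"other"`, and the confusion matrix is row-normalized so that its diagonal gives per-class accuracy.

```python
from collections import Counter
import numpy as np

def collapse_labels(train_labels, labels, n_keep=9, other="other"):
    """Keep the n_keep most frequent training scene labels; map everything else to `other`."""
    keep = {name for name, _ in Counter(train_labels).most_common(n_keep)}
    return [lab if lab in keep else other for lab in labels]

def scene_metrics(y_true, y_pred, classes):
    """Per-class accuracy (confusion-matrix diagonal), its mean, and overall accuracy."""
    idx = {c: i for i, c in enumerate(classes)}
    C = np.zeros((len(classes), len(classes)))
    for t, p in zip(y_true, y_pred):
        C[idx[t], idx[p]] += 1
    # Row-normalize so that entry (i, i) is the fraction of class-i images predicted as i.
    per_class = np.diag(C) / np.maximum(C.sum(axis=1), 1)
    overall = np.trace(C) / max(C.sum(), 1)
    return per_class, float(per_class.mean()), float(overall)
```

The two aggregates differ when classes are imbalanced: overall accuracy is dominated by frequent scenes, while the mean of the diagonal weights every class equally.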

We use a \(1, 2\times 2, 4\times 4\) spatial pyramid and an SVM with an additive kernel as our classifier (Maji et al. 2013). We use the 40-category output of our algorithm. We compare against an appearance-only baseline based on SPM on vector-quantized color SIFT descriptors (van de Sande et al. 2010), a geometry-only baseline based on SPM on geocentric textons (introduced in Sect. 5.1.2), and a third baseline that uses both SIFT and geocentric textons in the SPM.
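The pyramid feature described above can be sketched as follows: for each cell of a \(1, 2\times 2, 4\times 4\) pyramid, compute the fraction of pixels assigned to each predicted class. This is an illustrative reading, not the authors' code; it assumes an integer label map with at least 4 rows and columns, and it omits any per-level weighting, which the text does not specify. The additive-kernel SVM is not shown.

```python
import numpy as np

def spm_class_features(label_map, n_classes, levels=(1, 2, 4)):
    """Average presence of each class in every cell of a spatial pyramid.

    label_map: (H, W) integer array of predicted class ids in [0, n_classes).
    Returns a vector of length n_classes * sum(l * l for l in levels).
    """
    H, W = label_map.shape
    one_hot = np.eye(n_classes)[label_map]  # shape (H, W, n_classes)
    feats = []
    for l in levels:
        # Cell boundaries of an l x l grid; cells may differ in size by one pixel.
        ys = np.linspace(0, H, l + 1).astype(int)
        xs = np.linspace(0, W, l + 1).astype(int)
        for i in range(l):
            for j in range(l):
                cell = one_hot[ys[i]:ys[i + 1], xs[j]:xs[j + 1]]
                feats.append(cell.reshape(-1, n_classes).mean(axis=0))
    return np.concatenate(feats)
```

With the 40-category output, this yields a vector of \(40 \times (1 + 4 + 16) = 840\) dimensions per image.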

We report the performance we achieve in Table 9.

Cite this article

Gupta, S., Arbeláez, P., Girshick, R. et al. Indoor Scene Understanding with RGB-D Images: Bottom-up Segmentation, Object Detection and Semantic Segmentation. Int J Comput Vis 112, 133–149 (2015). https://doi.org/10.1007/s11263-014-0777-6

DOI: https://doi.org/10.1007/s11263-014-0777-6