In my spare time I’ve been working on a database of Japanese prints for a little over 3.5 years now. I’m fully aware that I’ve never actually written about this, personally very important, project on my blog — until now. Unfortunately this isn’t a post explaining that project. I do still hope to write more about the intricacies of it, some day, but until that time you can watch this talk I gave at OpenVisConf 2014 about the site and the tech behind it.

I’ve been doing a lot of work exploring different computer vision, and machine learning, algorithms to see how they might apply to the world of art history study. I’m especially interested in finding novel uses of technology that could greatly benefit art historians in their work and also help individuals better appreciate art.

One tool that I came across yesterday is called Waifu2x. It’s a convolutional neural network (CNN) that is designed to optimally “upscale” images (taking small images and generating a larger image). The creator of this tool built it to better upscale poorly-sized Anime images and video. This is an effort that I can massively cheer on – while I’m not a purveyor of Anime, I love applying algorithmic overkill to non-tech hobbies.

Waifu2x also provides a live demo site that you can use to test it on other images. When I saw this I became immediately intrigued. Anime has direct stylistic influences drawn from the “old” world of Japanese woodblock printing, which was popular from the late 1600s to the late 1800s. Maybe the pre-trained upscaler could also work well for photos of Japanese prints? (Naturally I could train a new CNN to do this, but it may not even be necessary!)

Now the first questions that should come up, before even attempting upscale Japanese prints, are simply: Are there enough tiny images of prints that need to be made bigger? Who will benefit from this?

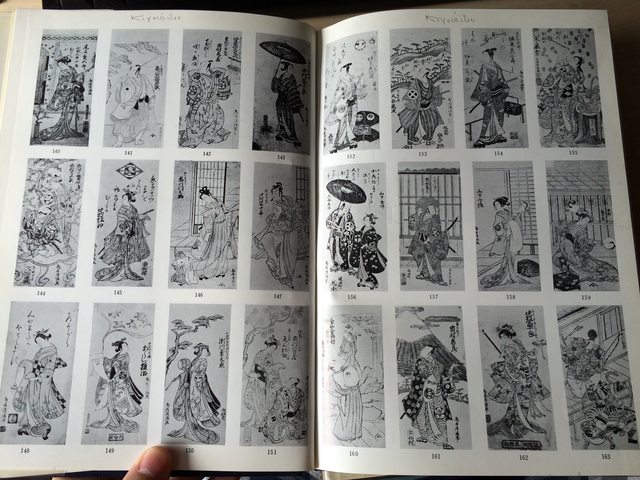

To answer those questions: Unfortunately there are tons of tiny pictures of Japanese prints in the world. To provide one very real example: The Tokyo National Museum has one of the greatest collections of Japanese prints in the world… none of which are (publicly) digitized. If a researcher wants to see if a the TNM has a particular copy of a print they’ll need to use the following 3 volume set of books (which I own):

Inside the book is 3,926 small, black-and-white, scans of every print in their collection:

I plan on digitizing these books and bringing these (not-ideal) prints online as they will be of the utmost use to scholars. However given their tiny size it will be very hard for most researchers to be able to make out what exactly the print is depicting. Thus any technology that is able to upscale the images to make them a bit easier to view would be greatly appreciated.

I began by experimenting with a few different, existing, print images and was really intrigued by the results.

I started with a primitive Ukiyo-e print by Hishikawa Moronobu:

(Source Image)

And here is the image scaled 2x (OSX Preview) and then using Waifu2x using high noise reduction (be sure to click the images to see them full size):

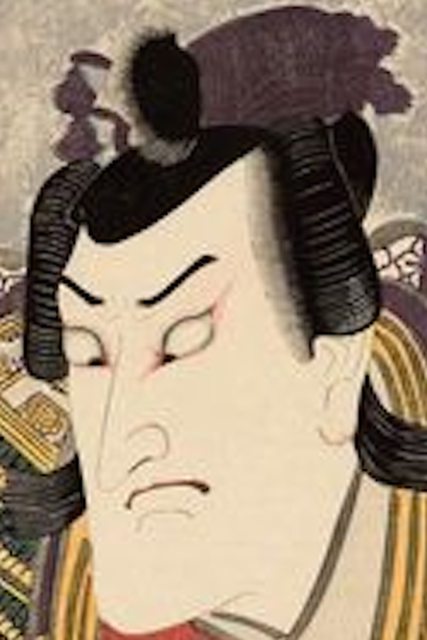

And here is another early actor print by Utagawa Kunisada:

(Source Image)

And here is the image scaled 2x (OSX Preview) and then using Waifu2x with low noise reduction and high noise reduction (be sure to click the images to see them full size):

I also have a few blown-up details comparing the results between these three (OSX Preview 2x, Waifu2x with low noise reduction, Waifu2x with high noise reduction):

It’s immediately apparent that the lines are still quite “crisp” in both of the Waifu2x versions. This is extremely compelling as being able to see those details can be quite important. In fact seeing these upscaled images like this is seriously quite impressive. It definitely reinforces the fact that the algorithms Anime training data has suited this subject matter well!

To my eye it almost looks like they entire image has also become more “smooth”, it seems much more mottled, almost as if someone spilled water on it (Japanese prints are printed with watercolors and thus are quite susceptible to water damage that actually creates similar results as to what’s seen here). This is especially true when using Waifu2x’s high noise reduction. It’s not clear that this would become any better with better training data as the original source image really only has so much data to begin with.

Waifu2x’s high noise reduction also causes the background to become much more tumultuous, as if it was crumpled wrapping paper. And the details in the signature, that make it readable, tend to be lost with that much processing. I suspect that using just the low noise reduction may be a better sweet spot.

Helping researchers be able to better see the lines of a print from a tiny image can be a double-edged sword. They will certainly appreciate being able to have a larger and semi-crisp image. However the lack of precision is a massive problem (not that the original image at 2x would’ve been any better). Researchers rely upon being able to spot minute differences between print impressions in order to be able to understand when a print could’ve been created. I suspect that using this technique will need to be reserved to only extremely-small images and come with a massive caveat warning the viewer as to the nature of the image and its appearance.

Regardless, this technology is quite exciting and it’s extremely serendipitous that the subject matter that was used just so happens to correlate nicely with one of my areas of study. I’ll be very curious to see where else people have success with this particular utility and in what context.

Dave (May 20, 2015 at 6:23 pm)

hahaha… John Resig is browsing 4chan!

tom c. (May 20, 2015 at 6:23 pm)

I have trouble believing you’re not an anime fan, I read your Javascript Ninja book and, aside from learning a lot about Javascript that I didn’t know before, there are tons of embarrassingly enjoyable references to “Ichigo”, “Naruto”, Death Note, and other such anime that had been popular. Even if you are no longer an anime fan today, at one point in your life, you probably did enjoy them (possibly a little too much). And old sins have long shadows.

John Resig (May 20, 2015 at 6:30 pm)

@Dave: Hmm – no? I came across this from Hacker News, I believe. It’s also one of the top trending projects on Github right now.

@Tom: That’s quite amusing – all of those references are actually from my co-author Bear! Unfortunately I haven’t seen any of the Animes that you mention. Not out of contempt, but simply that I haven’t managed to get around to it! Some day!

joel (May 20, 2015 at 6:33 pm)

Most of the noise in your images seem to be from jpeg compression. The enlarging software is impressive but mostly it seems to be removing the jpeg artifacts. Maybe your source scans are compressed?

John Resig (May 20, 2015 at 6:42 pm)

@Joel: That’s sort of the point I was attempting to make. Taking a normal small (jpeg) image and simply scaling it by 2x on both the width and height results in an image that is riddled with jpeg artifacts. Using Waifu2x you end up with something that is “smoother” and certainly has less noise.

mikemikemikemikemiks (May 20, 2015 at 6:42 pm)

I would call this a JPEG cleanerupperer, since it does not seem to help with resolution, but it does make the image look very clean and vivid.

John Resig (May 20, 2015 at 6:44 pm)

@mike: IMO, inherently that’s the best that any upscaler can hope to achieve, unless the source material is effectively treated as a series of scalable vectors and just sized-up as is.

Tom Wulf (May 20, 2015 at 7:13 pm)

Very cool John. I teach technology but have strong interests in humanities especially Old Norse and Viking culture. (Using JQM to create rich hypertext versions of the Sagas.) Recently, we had a THAT camp unconference at the University of Cincinnati. This is a national thing which I actually don’t know a lot about but I think you might find it a great place to create a bridge between tech folks and Humanities folks.

thatcamp.org although site seems to be down right now. Also a Wikipedia entry on this.

Cheers

John Resig (May 20, 2015 at 7:37 pm)

@Tom Wulf: I’m so glad jQM has been able to help you with your own digital humanities work! I’m actually quite familiar with THATCamp – I’ve actually attended 3 of them now! (two in NYC, one in Chicago). I find them to be a fantastic environment for learning and cross-pollination. Thank you for the suggestion!

Scott (May 20, 2015 at 8:38 pm)

Would it not be easier to go to the Museum and take pics of the pics or get permission to scan them at hi-res or perhaps, they have the positives or negatives in storage?

You are scanning out of a book, which means you are scanning 4 plates, Cyan, Magenta, Yellow, and black, making a rosette pattern, unless they were printed stochastically which would improve your scanning greatly. Though I doubt they are, that means you will scan a ton of dots, probably nowhere near the 150 lines per inch you will need to get even decent results.

Back in the days of pre-press, film, drum scanners, etc, they would take the films or plates used to print the book, scan the individual films, and put them back together, apply some dithering, and get pretty good results.

Basically, the better the input, the less work you will have to do to the output, so I would look to getting better input. Scanning out of book is going to be a very hard way to make a hi-res print. If the colors are solid spot colors, or additions of CMYK, like Cyan and Yellow equaling 100% green, those are all solid colors and you will have excellent results. If they blend 50% of Cyan and 90% yellow to make a slightly greenish blue, that is where trouble is going to come in.

Happy to talk offline of need be. I have been told that in that Museum, or some other one, is a photo headshot of every Kamikaze pilot before they went out. @ pics were taken, one for the museum and one to send to their parents. Apparently that was my grandfather that took this pictures, though I can’t be sure as my father passes before I had a chance to officially confirm what has been told to me since I was a child.

John Resig (May 20, 2015 at 8:53 pm)

@Scott: One would think an institution would be amenable to something like this! However, in practice, it seems to be anything but. I have some contacts over in Japan (in the museum/digitization world) but none of them have mentioned the possibility of this becoming possible, which makes me very skeptical as to its potential. Obviously having material directly from the source would be best, but barring that, scanning a book is definitely preferable to nothing.

Leif (May 21, 2015 at 1:04 am)

This reminds me of some work I did years ago in processing Japanese internee records from WWII.

During the war, many Japanese, or people with Japanese backgrounds (born in Australia, but of Japanese parents) were generally arrested and placed in internment camps. Whilst it must have been horrific to have been arrested and sent to a camp in the middle of nowhere just because your parent were Japanese, in some ways it also removed them from the immediate danger of someone seeking revenge for the death of a son or brother fighting in the pacific. Many were shipped back to Japan at the end of the war, regardless of where they were born or how long they had been in Australia.

The work I had to do was to help improve the quality of images from scans from the internee’s records, created by the Army and now part of the Australian War Memorials collection. Incidentally I had to be briefed on the terms of the Geneva Convention for the treatment of POW records (these we considered a type of POW record), so the sealed records could not be opened, as they had been deemed embarassing for the POW – stuff like medical treatment for an STD, or a single mother giving birth – things judged by the standards of the day.

The paper records were on very low quality pulp-ish paper, almost a rag paper. When scanned using an HD digital camera setup, the faint grid pattern from the wire racks the paper rested on, when drying after coming through the paper press, came out in strong relief, almost like a moire pattern.

I spent a week playing with plugging in new algorithms, or tweaking the structure and parameters of existing ones, with Perl and ImageMagick. I had best results with Gaussian and I think cubic filters of different sorts (it was over 10 years ago!). Most of my work was just extending the existing Perl API to expose more internals of filters (start conditions, radius control etc) than actually coding any new filter types. In the end we were able to suppress the wire grid pattern significantly, allowing war researchers a much easier time looking at archived images for their work.

Maybe there is something in http://www.imagemagick.org/Usage/filter/ to help you. Not as sophisticated as waifu2x learning algorithms, but another path for thought.

asindu (May 21, 2015 at 1:06 am)

lol. I didn’t even know that this project had computer vision technology applied to it. you need to write that post that explains it fully!

Bambax (May 21, 2015 at 3:20 am)

If you do the scanning yourself you won’t have the problem of JPEG compression, since you can choose to work with uncompressed images as a source.

(The problems outlined in Scott’s post remain, but at least the specific point about JPEG compression is moot).

Also, the noise reduction applied by the tool seems to be a very standard / aggressive type; there are commercial solutions specialized in all types of noise reduction that do amazing jobs. You could just use waifu2x to upscale with little to no noise reduction, and then process the images with other tools to get a better final image.

Matt Morgan (May 21, 2015 at 8:13 am)

John, this is cool.

It would be great to see some comparisons to actual 2x size images, too. E.g., get some hi-res images from the Met,

http://metmuseum.org/collection/the-collection-online/search?&where=Japan&what=Prints&pg=1

(which you know I’m sure but others may like the link!), scale them down by 50%, then compare the original to the Waifu2x scaled-up version. Have you tried it?

Anonymous (May 21, 2015 at 3:56 pm)

It looks quite similar to warpsharp filter that has been a fad in anime video encoding a couple of years ago (we sorted that; its proponents now lay peacefully under the dark waters of the bay).

It may be suitable for the described task, but lost features (thin lines on bended brows) and changes in artistic style (smudged textures, flattened volume and vectorized lines) may misguide and enrage the professional.

Greg (May 21, 2015 at 5:31 pm)

This blog post on the Flipboard Engineering blog had a lot of information about using neural networks for upscaling images: http://engineering.flipboard.com/2015/05/scaling-convnets/

Matt Morgan (May 22, 2015 at 6:30 pm)

John, have you seen how the doubled images compare to the originals? Probably not with these, but it wouldn’t be hard to do a comparison using images for which you do have higher-res versions (i.e., scale them down, then back up with Waifu2x and compare to the originals).