AutoMM Detection - Finetune on COCO Format Dataset with Customized Settings

In this section, our goal is to quickly finetune and evaluate a pretrained model on the Pothole dataset in COCO format with customized settings. Pothole is a single-class object detection dataset (the only class is pothole) containing 665 images with bounding box annotations. It is intended for building detection models and can serve as a POC/POV for road maintenance.

See AutoMM Detection - Prepare Pothole Dataset for how to prepare the Pothole dataset.

To start, let’s import MultiModalPredictor and make sure mmcv and mmdet are installed:

from autogluon.multimodal import MultiModalPredictor

We also import some other packages that will be used in this tutorial:

import os

from autogluon.core.utils.loaders import load_zip

We have the sample dataset ready in the cloud. Let’s download it and store the paths for each data split:

zip_file = "https://automl-mm-bench.s3.amazonaws.com/object_detection/dataset/pothole.zip"

download_dir = "./pothole"

load_zip.unzip(zip_file, unzip_dir=download_dir)

data_dir = os.path.join(download_dir, "pothole")

train_path = os.path.join(data_dir, "Annotations", "usersplit_train_cocoformat.json")

val_path = os.path.join(data_dir, "Annotations", "usersplit_val_cocoformat.json")

test_path = os.path.join(data_dir, "Annotations", "usersplit_test_cocoformat.json")

Downloading ./pothole/file.zip from https://automl-mm-bench.s3.amazonaws.com/object_detection/dataset/pothole.zip...

100%|██████████| 351M/351M [00:07<00:00, 47.7MiB/s]

When using a COCO format dataset, the input is the JSON annotation file of a dataset split. In this example, usersplit_train_cocoformat.json is the annotation file of the train split, usersplit_val_cocoformat.json is the annotation file of the validation split, and usersplit_test_cocoformat.json is the annotation file of the test split.
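For orientation, a COCO format annotation file is a JSON object with images, annotations, and categories lists. The sketch below builds a tiny made-up example in memory and inspects it the way one might inspect usersplit_train_cocoformat.json. The file name, ids, and box values are illustrative assumptions and are not taken from the Pothole dataset.

```python
import json

# A tiny, made-up COCO-style annotation object (all values are illustrative only).
coco = {
    "images": [
        {"id": 1, "file_name": "example_0001.jpg", "width": 640, "height": 480},
    ],
    "annotations": [
        # bbox follows the COCO convention: [x_min, y_min, width, height]
        {"id": 10, "image_id": 1, "category_id": 1,
         "bbox": [100.0, 150.0, 80.0, 40.0], "area": 3200.0, "iscrowd": 0},
    ],
    "categories": [{"id": 1, "name": "pothole"}],
}

# Round-trip through JSON text, as if the file had been read from disk.
loaded = json.loads(json.dumps(coco))

# Category names are what a detector needs to know about the label space.
category_names = [c["name"] for c in loaded["categories"]]

# Count how many boxes each image has.
boxes_per_image = {}
for ann in loaded["annotations"]:
    boxes_per_image[ann["image_id"]] = boxes_per_image.get(ann["image_id"], 0) + 1

print(category_names)
print(boxes_per_image)
```

To inspect a real split, the same lookups can be applied to the object returned by json.load on the annotation file.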

We select the YOLOX-small model pretrained on the COCO dataset. With this setting, finetuning and inference are fast, and the model is easy to deploy. Note that you can use a larger model for better performance (but usually slower speed) by setting checkpoint_name to the corresponding checkpoint name. You may also need to change learning_rate and per_gpu_batch_size for a different model.

An easier way is to use one of our predefined presets: "medium_quality", "high_quality", or "best_quality". For more about using presets, see Quick Start Coco.

checkpoint_name = "yolox_s"

num_gpus = 1 # only use one GPU

We create the MultiModalPredictor with the selected checkpoint name and number of GPUs. We need to set problem_type to "object_detection" and also provide a sample_data_path so that the predictor can infer the categories of the dataset. Here we provide train_path, but any other split of this dataset works as well.

predictor = MultiModalPredictor(
    hyperparameters={
        "model.mmdet_image.checkpoint_name": checkpoint_name,
        "env.num_gpus": num_gpus,
    },
    problem_type="object_detection",
    sample_data_path=train_path,
)

We set the learning rate to 1e-4. Note that finetuning uses a two-stage learning rate by default, in which the model head gets a 100x learning rate. Applying the high learning rate only to the head layers makes the model converge faster during finetuning. It usually gives better performance as well, especially on small datasets with hundreds or thousands of images.
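As a rough illustration of the arithmetic, the sketch below assigns learning rates to two parameter groups. The helper function and the group names "backbone" and "head" are hypothetical and do not reflect AutoMM's internal implementation; only the base rate of 1e-4 and the 100x head multiplier come from the text above.

```python
def two_stage_learning_rates(base_lr, head_multiplier=100.0):
    """Return per-group learning rates: the head uses a multiple of the base rate."""
    return {
        "backbone": base_lr,                # pretrained layers move slowly
        "head": base_lr * head_multiplier,  # freshly initialized head moves faster
    }


rates = two_stage_learning_rates(1e-4)
print(rates)
```

With a base rate of 1e-4, the head group ends up at roughly 1e-2, which is why the head can adapt to the new single-class label space quickly while the pretrained layers change little.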

We set the batch size to 16; you can increase or decrease it based on your available GPU memory. For fast finetuning, we set the maximum number of epochs to 30, val_check_interval to 1.0, and check_val_every_n_epoch to 3, so validation runs once at the end of every third epoch. To get a better sense of the speed, you can also time the fit call, for example by recording time.time() before and after it.
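One minimal way to time a call is sketched here. The helper timed_call and the stand-in function fake_fit are hypothetical names used only for illustration; in practice the same pattern would wrap the predictor.fit call.

```python
import time


def timed_call(fn, *args, **kwargs):
    """Run fn(*args, **kwargs) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def fake_fit(n_steps):
    """Hypothetical stand-in for a training call; it only sums some numbers."""
    return sum(range(n_steps))


result, elapsed = timed_call(fake_fit, 100_000)
print(f"fake_fit returned {result} in {elapsed:.4f} seconds")
```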

predictor.fit(
    train_path,
    tuning_data=val_path,
    hyperparameters={
        "optimization.learning_rate": 1e-4,  # two-stage learning rate: the detection head uses 100x this value
        "env.per_gpu_batch_size": 16,  # decrease it when the model is large or GPU memory is small
        "optimization.max_epochs": 30,  # max number of training epochs; early stopping may end training sooner
        "optimization.val_check_interval": 1.0,  # when validation runs, run it once at the end of the epoch
        "optimization.check_val_every_n_epoch": 3,  # run validation only every 3 epochs
        "optimization.patience": 3,  # early stop after 3 consecutive validations without a new best
    },
)

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

Downloading yolox_s_8x8_300e_coco_20211121_095711-4592a793.pth from https://download.openmmlab.com/mmdetection/v2.0/yolox/yolox_s_8x8_300e_coco/yolox_s_8x8_300e_coco_20211121_095711-4592a793.pth...

Loads checkpoint by local backend from path: yolox_s_8x8_300e_coco_20211121_095711-4592a793.pth

The model and loaded state dict do not match exactly

size mismatch for bbox_head.multi_level_conv_cls.0.weight: copying a param with shape torch.Size([80, 128, 1, 1]) from checkpoint, the shape in current model is torch.Size([1, 128, 1, 1]).

size mismatch for bbox_head.multi_level_conv_cls.0.bias: copying a param with shape torch.Size([80]) from checkpoint, the shape in current model is torch.Size([1]).

size mismatch for bbox_head.multi_level_conv_cls.1.weight: copying a param with shape torch.Size([80, 128, 1, 1]) from checkpoint, the shape in current model is torch.Size([1, 128, 1, 1]).

size mismatch for bbox_head.multi_level_conv_cls.1.bias: copying a param with shape torch.Size([80]) from checkpoint, the shape in current model is torch.Size([1]).

size mismatch for bbox_head.multi_level_conv_cls.2.weight: copying a param with shape torch.Size([80, 128, 1, 1]) from checkpoint, the shape in current model is torch.Size([1, 128, 1, 1]).

size mismatch for bbox_head.multi_level_conv_cls.2.bias: copying a param with shape torch.Size([80]) from checkpoint, the shape in current model is torch.Size([1]).

=================== System Info ===================

AutoGluon Version: 1.1.1b20240716

Python Version: 3.10.13

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP Fri May 17 18:07:48 UTC 2024

CPU Count: 8

Pytorch Version: 2.3.1+cu121

CUDA Version: 12.1

Memory Avail: 28.68 GB / 30.95 GB (92.7%)

===================================================

No path specified. Models will be saved in: "AutogluonModels/ag-20240716_223653"

Using default root folder: ./pothole/pothole/Annotations/... Specify `root=...` if you feel it is wrong...

Using default root folder: ./pothole/pothole/Annotations/... Specify `root=...` if you feel it is wrong...

AutoMM starts to create your model. ✨✨✨

To track the learning progress, you can open a terminal and launch Tensorboard:

```shell

# Assume you have installed tensorboard

tensorboard --logdir /home/ci/autogluon/docs/tutorials/multimodal/object_detection/advanced/AutogluonModels/ag-20240716_223653

```

Seed set to 0

GPU Count: 1

GPU Count to be Used: 1

GPU 0 Name: Tesla T4

GPU 0 Memory: 0.42GB/15.0GB (Used/Total)

Using 16bit Automatic Mixed Precision (AMP)

GPU available: True (cuda), used: True

TPU available: False, using: 0 TPU cores

HPU available: False, using: 0 HPUs

`Trainer(val_check_interval=1.0)` was configured so validation will run at the end of the training epoch..

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

| Name | Type | Params | Mode

-------------------------------------------------------------------------------

0 | model | MMDetAutoModelForObjectDetection | 8.9 M | train

1 | validation_metric | MeanAveragePrecision | 0 | train

-------------------------------------------------------------------------------

8.9 M Trainable params

0 Non-trainable params

8.9 M Total params

35.751 Total estimated model params size (MB)

/home/ci/opt/venv/lib/python3.10/site-packages/torch/functional.py:512: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3587.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

/home/ci/opt/venv/lib/python3.10/site-packages/torchmetrics/utilities/prints.py:43: UserWarning: Encountered more than 100 detections in a single image. This means that certain detections with the lowest scores will be ignored, that may have an undesirable impact on performance. Please consider adjusting the `max_detection_threshold` to suit your use case. To disable this warning, set attribute class `warn_on_many_detections=False`, after initializing the metric.

warnings.warn(*args, **kwargs) # noqa: B028

Epoch 2, global step 39: 'val_map' reached 0.31094 (best 0.31094), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/object_detection/advanced/AutogluonModels/ag-20240716_223653/epoch=2-step=39.ckpt' as top 1

Epoch 5, global step 78: 'val_map' reached 0.34476 (best 0.34476), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/object_detection/advanced/AutogluonModels/ag-20240716_223653/epoch=5-step=78.ckpt' as top 1

Epoch 8, global step 117: 'val_map' was not in top 1

Epoch 11, global step 156: 'val_map' was not in top 1

Epoch 14, global step 195: 'val_map' was not in top 1

AutoMM has created your model. 🎉🎉🎉

To load the model, use the code below:

```python

from autogluon.multimodal import MultiModalPredictor

predictor = MultiModalPredictor.load("/home/ci/autogluon/docs/tutorials/multimodal/object_detection/advanced/AutogluonModels/ag-20240716_223653")

```

If you are not satisfied with the model, try to increase the training time,

adjust the hyperparameters (https://auto.gluon.ai/stable/tutorials/multimodal/advanced_topics/customization.html),

or post issues on GitHub (https://github.com/autogluon/autogluon/issues).

<autogluon.multimodal.predictor.MultiModalPredictor at 0x7f6a587aebf0>
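The training log above shows validation at epochs 2, 5, 8, 11, and 14 (0-indexed), with the best val_map at epoch 5 and training ending after epoch 14. The sketch below is a simplified model of how check_val_every_n_epoch and patience interact. It is not the actual Lightning or AutoMM implementation, and the last three scores are made up, since the log only reports that they were not the best.

```python
def simulate_early_stopping(val_scores, max_epochs=30, every_n_epoch=3, patience=3):
    """Return (stop_epoch, best_score); stop_epoch is None if patience never runs out."""
    # Epochs (0-indexed) whose end triggers a validation run: 2, 5, 8, ...
    val_epochs = [e for e in range(max_epochs) if (e + 1) % every_n_epoch == 0]
    best = float("-inf")
    bad_checks = 0
    for epoch, score in zip(val_epochs, val_scores):
        if score > best:
            best, bad_checks = score, 0  # new best: reset the counter
        else:
            bad_checks += 1              # one more validation without improvement
        if bad_checks >= patience:
            return epoch, best           # early stop
    return None, best


# First two values come from the log; the remaining three are hypothetical.
scores = [0.31094, 0.34476, 0.33, 0.32, 0.34]
stop_epoch, best = simulate_early_stopping(scores)
print(stop_epoch, best)
```

Under these assumptions the simulation stops at epoch 14, well before the 30-epoch limit, which is consistent with the log.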

To evaluate the model we just trained, run:

predictor.evaluate(test_path)

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

saving file at /home/ci/autogluon/docs/tutorials/multimodal/object_detection/advanced/AutogluonModels/ag-20240716_224316/object_detection_result_cache.json

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

Loading and preparing results...

DONE (t=0.31s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=0.41s).

Accumulating evaluation results...

DONE (t=0.07s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.364

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.650

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.364

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.190

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.394

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.468

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.213

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.487

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.590

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.503

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.587

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.667

Using default root folder: ./pothole/pothole/Annotations/... Specify `root=...` if you feel it is wrong...

A new predictor save path is created. This is to prevent you to overwrite previous predictor saved here. You could check current save path at predictor._save_path. If you still want to use this path, set resume=True

No path specified. Models will be saved in: "AutogluonModels/ag-20240716_224316"

{'map': 0.36394616092854915,

'mean_average_precision': 0.36394616092854915,

'map_50': 0.6502237188397358,

'map_75': 0.36449099428234055,

'map_small': 0.18979637635013016,

'map_medium': 0.39388683737191943,

'map_large': 0.4682430066230392,

'mar_1': 0.21327433628318587,

'mar_10': 0.4867256637168141,

'mar_100': 0.5899705014749262,

'mar_small': 0.5028169014084507,

'mar_medium': 0.587292817679558,

'mar_large': 0.6666666666666667}

Note that it’s always recommended to use our predefined presets to save customization time, as in the following script:

predictor = MultiModalPredictor(
    problem_type="object_detection",
    sample_data_path=train_path,
    presets="medium_quality",
)

predictor.fit(train_path, tuning_data=val_path)

predictor.evaluate(test_path)

For more about using presets, see Quick Start Coco.

The evaluation results are shown in the command line output. The first value is the mAP in the COCO standard (averaged over IoU thresholds 0.50:0.95), and the second one is the mAP in the VOC standard (mAP50, at IoU 0.50). For more details about these metrics, see COCO’s evaluation guideline.
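To make the IoU thresholds concrete, here is a minimal sketch of Intersection over Union for two boxes and of the ten thresholds used by the COCO-style mAP. The boxes are made up, and real evaluation also matches detections to ground truth in score order, which this sketch leaves out.

```python
def iou_xyxy(box_a, box_b):
    """Intersection over Union for two boxes given as [x_min, y_min, x_max, y_max]."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# COCO-style mAP averages AP over IoU thresholds 0.50, 0.55, ..., 0.95.
coco_thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]

ground_truth = [0.0, 0.0, 100.0, 100.0]  # made-up box
prediction = [20.0, 0.0, 120.0, 100.0]   # made-up box, shifted 20 px to the right

iou = iou_xyxy(ground_truth, prediction)
# Thresholds at which this prediction overlaps the ground truth enough to count.
matched_at = [t for t in coco_thresholds if iou >= t]
print(iou, matched_at)
```

A box that is slightly misplaced still counts at the looser thresholds but not at the stricter ones, which is why mAP50 is typically higher than the COCO-standard mAP.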

We can get the prediction on test set:

pred = predictor.predict(test_path)

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

Using default root folder: ./pothole/pothole/Annotations/... Specify `root=...` if you feel it is wrong...

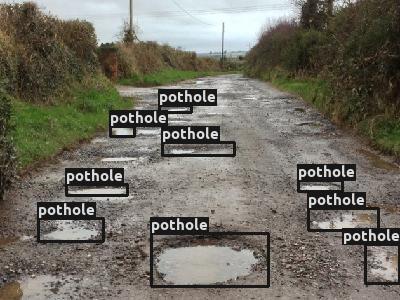

Let’s also visualize the prediction result:

!pip install opencv-python

Requirement already satisfied: opencv-python in /home/ci/opt/venv/lib/python3.10/site-packages (4.10.0.84)

Requirement already satisfied: numpy>=1.21.2 in /home/ci/opt/venv/lib/python3.10/site-packages (from opencv-python) (1.26.4)

from autogluon.multimodal.utils import visualize_detection

conf_threshold = 0.25 # Specify a confidence threshold to filter out unwanted boxes

visualization_result_dir = "./" # Use the pwd as result dir to save the visualized image

visualized = visualize_detection(
    pred=pred[12:13],
    detection_classes=predictor.classes,
    conf_threshold=conf_threshold,
    visualization_result_dir=visualization_result_dir,
)

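from PIL import Image
from IPython.display import display

# visualized[0] is assumed to be in BGR channel order (OpenCV convention); [:, :, ::-1] reverses it to RGB
img = Image.fromarray(visualized[0][:, :, ::-1], 'RGB')
display(img)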

Saved visualizations to ./
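The conf_threshold argument filters out low-confidence boxes before drawing. A minimal sketch of that filtering step follows, assuming each detection is a dict with the hypothetical keys "class", "bbox", and "score"; the actual structure returned by predictor.predict and the exact comparison used by visualize_detection may differ.

```python
def filter_by_confidence(detections, conf_threshold=0.25):
    """Keep only detections whose score reaches the threshold."""
    return [d for d in detections if d["score"] >= conf_threshold]


# Made-up detections for a single image (boxes as [x_min, y_min, x_max, y_max]).
detections = [
    {"class": "pothole", "bbox": [10, 20, 110, 90], "score": 0.91},
    {"class": "pothole", "bbox": [200, 40, 260, 80], "score": 0.30},
    {"class": "pothole", "bbox": [5, 5, 15, 15], "score": 0.08},
]

kept = filter_by_confidence(detections, conf_threshold=0.25)
print(len(kept))
```

Raising the threshold trades recall for cleaner visualizations; lowering it shows more candidate boxes, including likely false positives.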

Under this fast finetune setting, we reached mAP = 0.364 and mAP50 = 0.650 on a new dataset in a few hundred seconds! To learn how to finetune for higher performance, see AutoMM Detection - High Performance Finetune on COCO Format Dataset, where we finetuned a VFNet model for 5 hours and reached mAP = 0.450 and mAP50 = 0.718 on this dataset.

Other Examples

You may go to AutoMM Examples to explore other examples about AutoMM.

Customization

To learn how to customize AutoMM, please refer to Customize AutoMM.

Citation

@article{DBLP:journals/corr/abs-2107-08430,
  author     = {Zheng Ge and
                Songtao Liu and
                Feng Wang and
                Zeming Li and
                Jian Sun},
  title      = {{YOLOX:} Exceeding {YOLO} Series in 2021},
  journal    = {CoRR},
  volume     = {abs/2107.08430},
  year       = {2021},
  url        = {https://arxiv.org/abs/2107.08430},
  eprinttype = {arXiv},
  eprint     = {2107.08430},
  timestamp  = {Tue, 05 Apr 2022 14:09:44 +0200},
  biburl     = {https://dblp.org/rec/journals/corr/abs-2107-08430.bib},
  bibsource  = {dblp computer science bibliography, https://dblp.org},
}