Preface

This book is not about how to write correct and beautiful code, I am assuming that you already know how to do that. This book isn’t really about profiling and performance tuning either. Although, there is a chapter in this book on tracing and profiling which can help you find bottlenecks and unnecessary usage of resources. There also is a chapter on performance tuning.

These two chapters are the last chapters in the book, and the whole book is building up to those chapters, but the real goal with this book is to give you all the information, all the gory details, that you need in order to really understand the performance of your Erlang application.

About this book

For anyone who: Want to tune an Erlang installation. Want to know how to debug VM crashes. Want to improve performance of Erlang applications. Want to understand how Erlang really works. Want to learn how to build your own runtime environment.

If you want to debug the VM If you want to extend the VM If you want to do performance tweaking—jump to the last chapter … but to really understand that chapter, you need to read the book.

How to read this book

The Erlang RunTime System (ERTS) is a complex system with many interdependent components. It is written in a very portable way so that it can run on anything from a gum-stick computer to the largest multicore system with terabytes of memory. In order to be able to optimize the performance of such a system for your application, you need to not only know your application, but you also need to have a thorough understanding of ERTS itself.

With this knowledge of how ERTS works, you will be able to understand how your application behaves when running on ERTS, and you will also be able to find and fix problems with the performance of your application. In the second part of this book, we will go through how you successfully run, monitor, and scale your ERTS application.

You don’t need to be an Erlang programmer to read this book, but you will need some basic understanding of what Erlang is. This following section will give you some Erlang background.

Erlang

In this section, we will look at some basic Erlang concepts that are vital to understanding the rest of the book.

Erlang has been called, especially by one of Erlang’s creators, Joe Armstrong, a concurrency oriented language. Concurrency is definitely at the heart of Erlang, and to be able to understand how an Erlang system works you need to understand the concurrency model of Erlang.

First of all, we need to make a distinction between concurrency and parallelism. In this book, concurrency is the concept of having two or more processes that can execute independently of each other, this can be done by first executing one process then the other or by interleaving the execution, or by executing the processes in parallel. With parallel executions, we mean that the processes actually execute at the exact same time by using several physical execution units. Parallelism can be achieved on different levels. Through multiple execution units in the execution pipeline in one core, in several cores on one CPU, by several CPUs in one machine or through several machines.

Erlang uses processes to achieve concurrency. Conceptually Erlang processes are similar to most OS processes, they execute in parallel and can communicate through signals. In practice, there is a huge difference in that Erlang processes are much more lightweight than most OS processes. Many other concurrent programming languages call their equivalent to Erlang processes agents.

Erlang achieves concurrency by interleaving the execution of processes on the Erlang virtual machine, the BEAM. On a multi-core processor the BEAM can also achieve parallelism by running one scheduler per core and executing one Erlang process per scheduler. The designer of an Erlang system can achieve further parallelism by distributing the system on several computers.

A typical Erlang system (a server or service built in Erlang) consists of a number of Erlang applications, corresponding to a directory on disk. Each application is made up of several Erlang modules corresponding to files in the directory. Each module contains a number of functions, and each function is made up of expressions.

Since Erlang is a functional language, it has no statements, only expressions. Erlang expressions can be combined into an Erlang function. A function takes a number of arguments and returns a value. In Erlang Code Examples we can see some examples of Erlang expressions and functions.

%% Some Erlang expressions:

true.

1+1.

if (X > Y) -> X; true -> Y end.

%% An Erlang function:

max(X, Y) ->

if (X > Y) -> X;

true -> Y

end.Erlang has a number of built in functions (or BIFs) which are implemented by the VM. This is either for efficiency reasons, like the implementation of lists:append (which could be implemented in Erlang). It could also be to provide some low level functionality, which would be hard or impossible to implement in Erlang itself, like list_to_atom.

Since Erlang/OTP R13B03 you can also provide your own functions implemented in C by using the Native Implemented Functions (NIF) interface.

Acknowledgments

First of all I want to thank the whole OTP team at Ericsson both for maintaining Erlang and the Erlang runtime system, and also for patiently answering all my questions. In particular I want to thank Kenneth Lundin, Björn Gustavsson, Lukas Larsson, Rickard Green and Raimo Niskanen.

I would also like to thank Yoshihiro Tanaka, Roberto Aloi and Dmytro Lytovchenko for major contributions to the book, and HappiHacking and TubiTV for sponsoring work on the book.

Finally, a big thank you to everyone who has contributed with edits and fixes:

Erik Stenman Yoshihiro Tanaka Roberto Aloi Dmytro Lytovchenko Anthony Molinaro Alexandre Rodrigues Yoshihiro TANAKA Ken Causey Kim Shrier Lukas Larsson Tobias Lindahl Andrea Leopardi Anton N Ryabkov DuskyElf Greg Baraghimian Lincoln Bryant Marc van Woerkom Michał Piotrowski Ramkumar Rajagopalan Trevor Brown Yves Müller techgaun Alex Fu Alex Jiao Amir Moulavi Antonio Nikishaev Benjamin Tan Wei Hao Borja o'Cook Buddhika Chathuranga Cameron Price Chris Yunker Davide Bettio Eric Yu Erick Dennis Humberto Rodríguez A Jan Lehnardt Juan Facorro Karl Hallsby Kian-Meng, Ang Kyle Baker Luke Imhoff Michael Kohl Milton Inostroza PlatinumThinker Richard Carlsson ShalokShalom Simon Johansson Stefan Hagen Thales Macedo Garitezi Yago Riveiro fred happi tomdos yoshi

I: Understanding ERTS

1. Introducing the Erlang Runtime System

The Erlang RunTime System (ERTS) is a complex system with many interdependent components. It is written in a very portable way so that it can run on anything from a gum stick computer to the largest multicore system with terabytes of memory. In order to be able to optimize the performance of such a system for your application, you need to not only know your application, but you also need to have a thorough understanding of ERTS itself.

1.1. ERTS and the Erlang Runtime System

There is a difference between any Erlang Runtime System and a specific implementation of an Erlang Runtime System. "Erlang/OTP" by Ericsson is the de facto standard implementation of Erlang and the Erlang Runtime System. In this book I will refer to this implementation as ERTS or spelled out Erlang RunTime System with a capital T. (See Section 1.3 for a definition of OTP).

There is no official definition of what an Erlang Runtime System is, or what an Erlang Virtual Machine is. You could sort of imagine what such an ideal Platonic system would look like by taking ERTS and removing all the implementation specific details. This is unfortunately a circular definition, since you need to know the general definition to be able to identify an implementation specific detail. In the Erlang world we are usually too pragmatic to worry about this.

We will try to use the term Erlang Runtime System to refer to the general idea of any Erlang Runtime System as opposed to the specific implementation by Ericsson which we call the Erlang RunTime System or usually just ERTS.

Note This book is mostly a book about ERTS in particular and only to a small extent about any general Erlang Runtime System. If you assume that we talk about the Ericsson implementation unless it is clearly stated that we are talking about a general principle you will probably be right.

1.2. How to read this book

In Part II of this book we will look at how to tune the runtime system for your application and how to profile and debug your application and the runtime system. In order to really know how to tune the system you also need to know the system. In Part I of this book you will get a deep understanding of how the runtime system works.

The following chapters of Part I will go over each component of the system by itself. You should be able to read any one of these chapters without having a full understanding of how the other components are implemented, but you will need a basic understanding of what each component is. The rest of this introductory chapter should give you enough basic understanding and vocabulary to be able to jump between the rest of the chapters in part one in any order you like.

However, if you have the time, read the book in order the first time. Words that are specific to Erlang and ERTS or used in a specific way in this book are usually explained at their first occurrence. Then, when you know the vocabulary, you can come back and use Part I as a reference whenever you have a problem with a particular component.

1.3. ERTS

In this there is a basic overview of the main components of ERTS and some vocabulary needed to understand the more detailed descriptions of each component in the following chapters.

1.3.1. The Erlang Node (ERTS)

When you start an Elixir or Erlang application or system, what you really start is an Erlang node. The node runs the Erlang RunTime System and the virtual machine BEAM. (or possibly another implementation of Erlang (see Section 1.4)).

Your application code will run in an Erlang node, and all the layers of the node will affect the performance of your application. We will look at the stack of layers that makes up a node. This will help you understand your options for running your system in different environments.

In OO terminology one could say that an Erlang node is an object of the Erlang Runtime System class. The equivalent in the Java world is a JVM instance.

All execution of Elixir/Erlang code is done within a node. An Erlang node runs in one OS process, and you can have several Erlang nodes running on one machine.

To be completely correct according to the Erlang OTP documentation a

node is actually an executing runtime system that has been given a

name. That is, if you start Elixir without giving a name through one

of the command line switches --name NAME@HOST or --sname NAME (or

-name and -sname for an Erlang runtime.) you will have a runtime

but not a node. In such a system the function Node.alive?

(or in Erlang is_alive()) returns false.

$ iex

Erlang/OTP 19 [erts-8.1] [source-0567896] [64-bit] [smp:4:4]

[async-threads:10] [hipe] [kernel-poll:false]

Interactive Elixir (1.4.0) - press Ctrl+C to exit (type h() ENTER for help)

iex(1)> Node.alive?

false

iex(2)>

The runtime system itself is not that strict in its use

of the terminology. You can ask for the name of the node even

if you didn’t give it a name. In Elixir you use the function

Node.list with the argument :this, and in Erlang you

call nodes(this).:

iex(2)> Node.list :this [:nonode@nohost] iex(3)>

In this book we will use the term node for any running instance of the runtime whether it is given a name or not.

1.3.2. Layers in the Execution Environment

Your program (application) will run in one or more nodes, and the performance of your program will depend not only on your application code but also on all the layers below your code in the ERTS stack. In Figure 1 you can see the ERTS Stack illustrated with two Erlang nodes running on one machine.

If you are using Elixir there is yet another layer to the stack.

Let’s look at each layer of the stack and see how you can tune them to your application’s need.

At the bottom of the stack there is the hardware you are running on. The easiest way to improve the performance of your app is probably to run it on better hardware. You might need to start exploring higher levels of the stack if economical or physical constraints or environmental concerns won’t let you upgrade your hardware.

The two most important choices for your hardware is whether it is multicore and whether it is 32-bit or 64-bit. You need different builds of ERTS depending on whether you want to use multicore or not and whether you want to use 32-bit or 64-bit.

The second layer in the stack is the OS level. ERTS runs on most versions of Windows and most POSIX "compliant" operating systems, including Linux, VxWorks, FreeBSD, Solaris, and Mac OS X. Today most of the development of ERTS is done on Linux and OS X, and you can expect the best performance on these platforms. However, Ericsson has been using Solaris internally in many projects and ERTS has been tuned for Solaris for many years. Depending on your use case you might actually get the best performance on a Solaris system. The OS choice is usually not based on performance requirements, but is restricted by other factors. If you are building an embedded application you might be restricted to Raspbian or VxWork, and if you for some reason are building an end user or client application you might have to use Windows. The Windows port of ERTS has so far not had the highest priority and might not be the best choice from a performance or maintenance perspective. If you want to use a 64-bit ERTS you of course need to have both a 64-bit machine and a 64-bit OS. We will not cover many OS specific questions in this book, and most examples will be assuming that you run on Linux.

The third layer in the stack is the Erlang Runtime System. In our case this will be ERTS. This and the fourth layer, the Erlang Virtual Machine (BEAM), is what this book is all about.

The fifth layer, OTP, supplies the Erlang standard libraries. OTP

originally stood for "Open Telecom Platform" and was a number of

Erlang libraries supplying building blocks (such as supervisor,

gen_server and gen_tcp) for building robust applications (such as

telephony exchanges). Early on, the libraries and the meaning of OTP

got intermingled with all the other standard libraries shipped with

ERTS. Nowadays most people use OTP together with Erlang in

"Erlang/OTP" as the name for ERTS and all Erlang libraries shipped by

Ericsson. Knowing these standard libraries and how and when to use

them can greatly improve the performance of your application. This

book will not go into any details of the standard libraries and

OTP, there are many other books that cover these aspects.

If you are running an Elixir program the sixth layer provides the Elixir environment and the Elixir libraries.

Finally, the seventh layer (APP) is your application, and any third party libraries you use. The application can use all the functionality provided by the underlying layers. Apart from upgrading your hardware this is probably the place where you most easily can improve your application’s performance. In Chapter 19 there are some hints and some tools that can help you profile and optimize your application. In Chapter 20 we will look at how to find the cause of crashing applications and how to find bugs in your application.

For information on how to build and run an Erlang node see Appendix A, and read the rest of the book to learn all about the components of an Erlang node.

1.3.3. Distribution

One of the key insights by the Erlang language designers was that in order to build a system that works 24/7 you need to be able to handle hardware failure. Therefore you need to distribute your system over at least two physical machines. You do this by starting a node on each machine and then you can connect the nodes to each other so that processes can communicate with each other across the nodes just as if they were running in the same node.

1.3.4. The Erlang Compiler

The Erlang Compiler is responsible for compiling Erlang source code, from .erl files into virtual machine code for BEAM (the virtual machine). The compiler itself is written in Erlang and compiled by itself to BEAM code and usually available in a running Erlang node. To bootstrap the runtime system there are a number of precompiled BEAM files, including the compiler, in the bootstrap directory.

For more information about the compiler see Chapter 2.

1.3.5. The Erlang Virtual Machine: BEAM

BEAM is the Erlang virtual machine used for executing Erlang code, just like the JVM is used for executing Java code. BEAM runs in an Erlang Node.

Just as ERTS is an implementation of a more general concept of a Erlang Runtime System so is BEAM an implementation of a more general Erlang Virtual Machine (EVM). There is no definition of what constitutes an EVM but BEAM actually has two levels of instructions Generic Instructions and Specific Instructions. The generic instruction set could be seen as a blueprint for an EVM.

1.3.6. Processes

An Erlang process basically works like an OS process. Each process has its own memory (a mailbox, a heap and a stack) and a process control block (PCB) with information about the process.

All Erlang code execution is done within the context of a process. One Erlang node can have many processes, which can communicate through message passing and signals. Erlang processes can also communicate with processes on other Erlang nodes as long as the nodes are connected.

To learn more about processes and the PCB see Chapter 3.

1.3.7. Scheduling

The Scheduler is responsible for choosing the Erlang process to execute. Basically the scheduler keeps two queues, a ready queue of processes ready to run, and a waiting queue of processes waiting to receive a message. When a process in the waiting queue receives a message or gets a time out it is moved to the ready queue.

The scheduler picks the first process from the ready queue and hands it to BEAM for execution of one time slice. BEAM preempts the running process when the time slice is used up and adds the process to the end of the ready queue. If the process is blocked in a receive before the time slice is used up, it gets added to the waiting queue instead.

Erlang is concurrent by nature, that is, each process is conceptually running at the same time as all other processes, but in reality there is just one process running in the VM. On a multicore machine Erlang actually runs more than one scheduler, usually one per physical core, each having their own queues. This way Erlang achieves true parallelism. To utilize more than one core ERTS has to be built (see Appendix A) in SMP mode. SMP stands for Symmetric MultiProcessing, that is, the ability to execute a processes on any one of multiple CPUs.

In reality the picture is more complicated with priorities among processes and the waiting queue is implemented through a timing wheel. All this and more is described in detail in Chapter 11.

1.3.8. The Erlang Tag Scheme

Erlang is a dynamically typed language, and the runtime system needs a way to keep track of the type of each data object. This is done with a tagging scheme. Each data object or pointer to a data object also has a tag with information about the data type of the object.

Basically some bits of a pointer are reserved for the tag, and the emulator can then determine the type of the object by looking at the bit pattern of the tag.

These tags are used for pattern matching and for type test and for primitive operations as well as by the garbage collector.

The complete tagging scheme is described in Chapter 4.

1.3.9. Memory Handling

Erlang uses automatic memory management and the programmer does not have to worry about memory allocation and deallocation. Each process has a heap and a stack which both can grow, and shrink, as needed.

When a process runs out of heap space, the VM will first try to reclaim free heap space through garbage collection. The garbage collector will then go through the process stack and heap and copy live data to a new heap while throwing away all the data that is dead. If there still isn’t enough heap space, a new larger heap will be allocated and the live data is moved there.

The details of the current generational copying garbage collector, including the handling of reference counted binaries can be found in Chapter 12.

In a system which uses HiPE compiled native code, each process actually has two stacks, a BEAM stack and a native stack, the details can be found in Chapter 18.

1.3.10. The Interpreter and the Command Line Interface

When you start an Erlang node with erl you get a command prompt. This is the Erlang read eval print loop (REPL) or the command line interface (CLI) or simply the Erlang shell.

You can actually type in Erlang code and execute it directly from the shell. In this case the code is not compiled to BEAM code and executed by the BEAM, instead the code is parsed and interpreted by the Erlang interpreter. In general the interpreted code behaves exactly as compiled code, but there are a few subtle differences, these differences and all other aspects of the shell are explained in Chapter 21.

1.4. Other Erlang Implementations

This book is mainly concerned with the "standard" Erlang implementation by Ericsson/OTP called ERTS, but there are a few other implementations available and in this section we will look at some of them briefly.

1.4.1. Erlang on Xen

Erlang on Xen (https://github.com/cloudozer/ling) is an Erlang implementation running directly on server hardware with no OS layer in between, only a thin Xen client.

Ling, the virtual machine of Erlang on Xen is almost 100% binary compatible with BEAM. In xref:the_eox_stack you can see how the Erlang on Xen implementation of the Erlang Solution Stack differs from the ERTS Stack. The thing to note here is that there is no operating system in the Erlang on Xen stack.

Since Ling implements the generic instruction set of BEAM, it can reuse the BEAM compiler from the OTP layer to compile Erlang to Ling.

1.4.2. Erjang

Erjang (https://github.com/trifork/erjang) is an Erlang implementation which runs on the JVM. It loads .beam files and recompiles the code to Java .class files. Erjang is almost 100% binary compatible with (generic) BEAM.

In xref:the_erjang_stack you can see how the Erjang implementation of the Erlang Solution Stack differs from the ERTS Stack. The thing to note here is that JVM has replaced BEAM as the virtual machine and that Erjang provides the services of ERTS by implementing them in Java on top of the VM.

Now that you have a basic understanding of all the major pieces of ERTS, and the necessary vocabulary you can dive into the details of each component. If you are eager to understand a certain component, you can jump directly to that chapter. Or if you are really eager to find a solution to a specific problem you could jump to the right chapter in Part II, and try the different methods to tune, tweak, or debug your system.

2. The Compiler

This book will not cover the programming language Erlang, but since the goal of the ERTS is to run Erlang code you will need to know how to compile Erlang code. In this chapter we will cover the compiler options needed to generate readable beam code and how to add debug information to the generated beam file. At the end of the chapter there is also a section on the Elixir compiler.

For those readers interested in compiling their own favorite language to ERTS this chapter will also contain detailed information about the different intermediate formats in the compiler and how to plug your compiler into the beam compiler backend. I will also present parse transforms and give examples of how to use them to tweak the Erlang language.

2.1. Compiling Erlang

Erlang is compiled from source code modules in .erl files to fat binary .beam files.

The compiler can be run from the OS shell with the erlc command:

> erlc foo.erlAlternatively the compiler can be invoked from the Erlang shell with the default shell command c or by calling compile:file/{1,2}

1> c(foo).or

1> compile:file(foo).The optional second argument to compile:file is a list of compiler options. A full list of the options can be found in the documentation of the compile module: see http://www.erlang.org/doc/man/compile.html.

Normally the compiler will compile Erlang source code from a .erl file and write the resulting binary beam code to a .beam file. You can also get the resulting binary back as an Erlang term by giving the option binary to the compiler. This option has then been overloaded to mean return any intermediate format as a term instead of writing to a file. If you for example want the compiler to return Core Erlang code you can give the options [core, binary].

The compiler is made up of a number of passes as illustrated in Figure 6.

If you want to see a complete and up to date list of compiler passes you can run the function compile:options/0 in an Erlang shell. The definitive source for information about the compiler is of course the source: compile.erl

2.2. Generating Intermediate Output

Looking at the code produced by the compiler is a great help in trying to understand how the virtual machine works. Fortunately, the compiler can show us the intermediate code after each compiler pass and the final beam code.

Let us try out our newfound knowledge to look at the generated code.

1> compile:options().

dpp - Generate .pp file

'P' - Generate .P source listing file...

'E' - Generate .E source listing file

...

'S' - Generate .S file

Let us try with a small example program "world.erl":

-module(world).

-export([hello/0]).

-include("world.hrl").

hello() -> ?GREETING.And the include file "world.hrl"

-define(GREETING, "hello world").If you now compile this with the 'P' option to get the parsed file you get a file "world.P":

2> c(world, ['P']).

** Warning: No object file created - nothing loaded **

okIn the resulting .P file you can see a pretty printed version of the code after the preprocessor (and parse transformation) has been applied:

-file("world.erl", 1).

-module(world).

-export([hello/0]).

-file("world.hrl", 1).

-file("world.erl", 4).

hello() ->

"hello world".To see how the code looks after all source code transformations are done, you can compile the code with the 'E'-flag.

3> c(world, ['E']).

** Warning: No object file created - nothing loaded **

okThis gives us an .E file, in this case all compiler directives have been removed and the built in functions module_info/{1,2} have been added to the source:

-vsn("\002").

-file("world.erl", 1).

-file("world.hrl", 1).

-file("world.erl", 5).

hello() ->

"hello world".

module_info() ->

erlang:get_module_info(world).

module_info(X) ->

erlang:get_module_info(world, X).We will make use of the 'P' and 'E' options when we look at parse transforms in Section 2.3.2, but first we will take a look at an "assembler" view of generated BEAM code. By giving the option 'S' to the compiler you get a .S file with Erlang terms for each BEAM instruction in the code.

3> c(world, ['S']).

** Warning: No object file created - nothing loaded **

okThe file world.S should look like this:

{module, world}. %% version = 0

{exports, [{hello,0},{module_info,0},{module_info,1}]}.

{attributes, []}.

{labels, 7}.

{function, hello, 0, 2}.

{label,1}.

{line,[{location,"world.erl",6}]}.

{func_info,{atom,world},{atom,hello},0}.

{label,2}.

{move,{literal,"hello world"},{x,0}}.

return.

{function, module_info, 0, 4}.

{label,3}.

{line,[]}.

{func_info,{atom,world},{atom,module_info},0}.

{label,4}.

{move,{atom,world},{x,0}}.

{line,[]}.

{call_ext_only,1,{extfunc,erlang,get_module_info,1}}.

{function, module_info, 1, 6}.

{label,5}.

{line,[]}.

{func_info,{atom,world},{atom,module_info},1}.

{label,6}.

{move,{x,0},{x,1}}.

{move,{atom,world},{x,0}}.

{line,[]}.

{call_ext_only,2,{extfunc,erlang,get_module_info,2}}.Since this is a file with dot (".") separated Erlang terms, you can read the file back into the Erlang shell with:

{ok, BEAM_Code} = file:consult("world.S").

The assembler code mostly follows the layout of the original source code.

The first instruction defines the module name of the code. The version mentioned in the comment (%% version = 0) is the version of the beam opcode format (as given by beam_opcodes:format_number/0).

Then comes a list of exports and any compiler attributes (none in this example) much like in any Erlang source module.

The first real beam-like instruction is {labels, 7} which tells the VM the number of labels in the code to make it possible to allocate room for all labels in one pass over the code.

After that there is the actual code for each function. The first instruction gives us the function name, the arity and the entry point as a label number.

You can use the 'S' option with great effect to help you understand how the BEAM works, and we will use it like that in later chapters. It is also invaluable if you develop your own language that you compile to the BEAM through Core Erlang, to see the generated code.

2.3. Compiler Passes

In the following sections we will go through most of the compiler passes shown in Figure 6. For a language designer targeting the BEAM this is interesting since it will show you what you can accomplish with the different approaches: macros, parse transforms, core erlang, and BEAM code, and how they depend on each other.

When tuning Erlang code, it is good to know what optimizations are applied when, and how you can look at generated code before and after optimizations.

2.3.1. Compiler Pass: The Erlang Preprocessor (epp)

The compilation starts with a combined tokenizer (or scanner) and preprocessor. That is, the preprosessor drives the tokenizer. This means that macros are expanded as tokens, so it is not a pure string replacement (as for example m4 or cpp). You can not use Erlang macros to define your own syntax, a macro will expand as a separate token from its surrounding characters. You can not concatenate a macro and a character to a token:

-define(plus,+).

t(A,B) -> A?plus+B.This will expand to

t(A,B) -> A + + B.

and not

t(A,B) -> A ++ B.

On the other hand since macro expansion is done on the token level, you do not need to have a valid Erlang term in the right hand side of the macro, as long as you use it in a way that gives you a valid term. E.g.:

-define(p,o, o]). t() -> [f,?p.

There are few real usages for this other than to win the obfuscated Erlang code contest. The main point to remember is that you can not really use the Erlang preprocessor to define a language with a syntax that differs from Erlang. Fortunately there are other ways to do this, as you shall see later.

2.3.2. Compiler Pass: Parse Transformations

The easiest way to tweak the Erlang language is through Parse Transformations (or parse transforms). Parse Transformations come with all sorts of warnings, like this note in the OTP documentation:

| Programmers are strongly advised not to engage in parse transformations and no support is offered for problems encountered. |

When you use a parse transform you are basically writing an extra pass in the compiler and that can if you are not careful lead to very unexpected results. But to use a parse transform you have to declare the usage in the module using it, and it will be local to that module, so as far as compiler tweaks goes this one is quite safe.

The biggest problem with parse transforms as I see it is that you are inventing your own syntax, and it will make it more difficult for anyone else reading your code. At least until your parse transform has become as popular and widely used as e.g. QLC.

OK, so you know you shouldn’t use it, but if you have to, here is what you need to know. A parse transforms is a function that works on the abstract syntax tree (AST) (see http://www.erlang.org/doc/apps/erts/absform.html ). The compiler does preprocessing, tokenization and parsing and then it will call the parse transform function with the AST and expects to get back a new AST.

This means that you can’t change the Erlang syntax fundamentally, but you can change the semantics. Lets say for example that you for some reason would like to write json code directly in your Erlang code, then you are in luck since the tokens of json and of Erlang are basically the same. Also, since the Erlang compiler does most of its sanity checks in the linter pass which follows the parse transform pass, you can allow an AST which does not represent valid Erlang.

To write a parse transform you need to write an Erlang module (lets call it p) which exports the function parse_transform/2. This function is called by the compiler during the parse transform pass if the module being compiled (lets call it m) contains the compiler option {parse_transform, p}. The arguments to the function is the AST of the module m and the compiler options given to the call to the compiler.

|

Note that you will not get any compiler options given in the file, this is a bit of a nuisance since you can’t give options to the parse transform from the code. The compiler does not expand compiler options until the expand pass which occurs after the parse transform pass. |

The documentation of the abstract format is somewhat dense and it is quite hard to get a grip on the abstract format by reading the documentation. I encourage you to use the syntax_tools and especially erl_syntax_lib for any serious work on the AST.

Here we will develop a simple parse transform just to get an understanding of the AST. Therefore we will work directly on the AST and use the old reliable io:format approach instead of syntax_tools.

First we create an example of what we would like to be able to compile json_test.erl:

-module(json_test).

-compile({parse_transform, json_parser}).

-export([test/1]).

test(V) ->

<<{{

"name" : "Jack (\"Bee\") Nimble",

"format": {

"type" : "rect",

"widths" : [1920,1600],

"height" : (-1080),

"interlace" : false,

"frame rate": V

}

}}>>.Then we create a minimal parse transform module json_parser.erl:

-module(json_parser).

-export([parse_transform/2]).

parse_transform(AST, _Options) ->

io:format("~p~n", [AST]),

AST.This identity parse transform returns an unchanged AST but it also prints it out so that you can see what an AST looks like.

> c(json_parser).

{ok,json_parser}

2> c(json_test).

[{attribute,1,file,{"./json_test.erl",1}},

{attribute,1,module,json_test},

{attribute,3,export,[{test,1}]},

{function,5,test,1,

[{clause,5,

[{var,5,'V'}],

[],

[{bin,6,

[{bin_element,6,

{tuple,6,

[{tuple,6,

[{remote,7,{string,7,"name"},{string,7,"Jack (\"Bee\") Nimble"}},

{remote,8,

{string,8,"format"},

{tuple,8,

[{remote,9,{string,9,"type"},{string,9,"rect"}},

{remote,10,

{string,10,"widths"},

{cons,10,

{integer,10,1920},

{cons,10,{integer,10,1600},{nil,10}}}},

{remote,11,{string,11,"height"},{op,11,'-',{integer,11,1080}}},

{remote,12,{string,12,"interlace"},{atom,12,false}},

{remote,13,{string,13,"frame rate"},{var,13,'V'}}]}}]}]},

default,default}]}]}]},

{eof,16}]

./json_test.erl:7: illegal expression

./json_test.erl:8: illegal expression

./json_test.erl:5: Warning: variable 'V' is unused

error

The compilation of json_test fails since the module contains invalid Erlang syntax, but you get to see what the AST looks like. Now we can just write some functions to traverse the AST and rewrite the json code into Erlang code.[1]

-module(json_parser).

-export([parse_transform/2]).

parse_transform(AST, _Options) ->

json(AST, []).

-define(FUNCTION(Clauses), {function, Label, Name, Arity, Clauses}).

%% We are only interested in code inside functions.

json([?FUNCTION(Clauses) | Elements], Res) ->

json(Elements, [?FUNCTION(json_clauses(Clauses)) | Res]);

json([Other|Elements], Res) -> json(Elements, [Other | Res]);

json([], Res) -> lists:reverse(Res).

%% We are interested in the code in the body of a function.

json_clauses([{clause, CLine, A1, A2, Code} | Clauses]) ->

[{clause, CLine, A1, A2, json_code(Code)} | json_clauses(Clauses)];

json_clauses([]) -> [].

-define(JSON(Json), {bin, _, [{bin_element

, _

, {tuple, _, [Json]}

, _

, _}]}).

%% We look for: <<"json">> = Json-Term

json_code([]) -> [];

json_code([?JSON(Json)|MoreCode]) -> [parse_json(Json) | json_code(MoreCode)];

json_code(Code) -> Code.

%% Json Object -> [{}] | [{Label, Term}]

parse_json({tuple,Line,[]}) -> {cons, Line, {tuple, Line, []}};

parse_json({tuple,Line,Fields}) -> parse_json_fields(Fields,Line);

%% Json Array -> List

parse_json({cons, Line, Head, Tail}) -> {cons, Line, parse_json(Head),

parse_json(Tail)};

parse_json({nil, Line}) -> {nil, Line};

%% Json String -> <<String>>

parse_json({string, Line, String}) -> str_to_bin(String, Line);

%% Json Integer -> Intger

parse_json({integer, Line, Integer}) -> {integer, Line, Integer};

%% Json Float -> Float

parse_json({float, Line, Float}) -> {float, Line, Float};

%% Json Constant -> true | false | null

parse_json({atom, Line, true}) -> {atom, Line, true};

parse_json({atom, Line, false}) -> {atom, Line, false};

parse_json({atom, Line, null}) -> {atom, Line, null};

%% Variables, should contain Erlang encoded Json

parse_json({var, Line, Var}) -> {var, Line, Var};

%% Json Negative Integer or Float

parse_json({op, Line, '-', {Type, _, N}}) when Type =:= integer

; Type =:= float ->

{Type, Line, -N}.

%% parse_json(Code) -> io:format("Code: ~p~n",[Code]), Code.

-define(FIELD(Label, Code), {remote, L, {string, _, Label}, Code}).

parse_json_fields([], L) -> {nil, L};

%% Label : Json-Term --> [{<<Label>>, Term} | Rest]

parse_json_fields([?FIELD(Label, Code) | Rest], _) ->

cons(tuple(str_to_bin(Label, L), parse_json(Code), L)

, parse_json_fields(Rest, L)

, L).

tuple(E1, E2, Line) -> {tuple, Line, [E1, E2]}.

cons(Head, Tail, Line) -> {cons, Line, Head, Tail}.

str_to_bin(String, Line) ->

{bin

, Line

, [{bin_element

, Line

, {string, Line, String}

, default

, default

}

]

}.And now we can compile json_test without errors:

1> c(json_parser).

{ok,json_parser}

2> c(json_test).

{ok,json_test}

3> json_test:test(42).

[{<<"name">>,<<"Jack (\"Bee\") Nimble">>},

{<<"format">>,

[{<<"type">>,<<"rect">>},

{<<"widths">>,[1920,1600]},

{<<"height">>,-1080},

{<<"interlace">>,false},

{<<"frame rate">>,42}]}]The AST generated by parse_transform/2 must correspond to valid Erlang code unless you apply several parse transforms, which is possible. The validity of the code is checked by the following compiler pass.

2.3.3. Compiler Pass: Linter

The linter (erl_lint.erl) generates warnings for syntactically correct but otherwise bad code, like "export_all flag enabled".

2.3.4. Compiler Pass: Save AST

In order to enable debugging of a module, you can "debug compile" the module, that is to pass the option debug_info to the compiler. The abstract syntax tree will then be saved by the "Save AST" until the end of the compilation, where it will be written to the .beam file.

It is important to note that the code is saved before any optimisations are applied, so if there is a bug in an optimisation pass in the compiler and you run code in the debugger you will get a different behavior. If you are implementing your own compiler optimisations this can trick you up badly.

2.3.5. Compiler Pass: Expand

In the expand phase source erlang constructs, such as records, are expanded to lower level erlang constructs. Compiler options, "-compile(...)", are also expanded to meta data.

2.3.6. Compiler Pass: Core Erlang

Core Erlang is a strict functional language suitable for compiler optimizations. It makes code transformations easier by reducing the number of ways to express the same operation. One way it does this is by introducing let and letrec expressions to make scoping more explicit.

Core Erlang is the best target for a language you want to run in ERTS. It changes very seldom and it contains all aspects of Erlang in a clean way. If you target the beam instruction set directly you will have to deal with much more details, and that instruction set usually changes slightly between each major release of ERTS. If you on the other hand target Erlang directly you will be more restricted in what you can describe, and you will also have to deal with more details, since Core Erlang is a cleaner language.

To compile an Erlang file to core you can give the option "to_core", note though that this writes the Erlang core program to a file with the ".core" extension. To compile an Erlang core program from a ".core" file you can give the option "from_core" to the compiler.

1> c(world, to_core).

** Warning: No object file created - nothing loaded **

ok

2> c(world, from_core).

{ok,world}

Note that the .core files are text files written in the human readable core format. To get the core program as an Erlang term you can add the binary option to the compilation.

2.3.7. Compiler Pass: Kernel Erlang

Kernel Erlang is a flat version of Core Erlang with a few differences. For example, each variable is unique and the scope is a whole function. Pattern matching is compiled to more primitive operations.

2.3.8. Compiler Pass: BEAM Code

The last step of a normal compilation is the external beam code format. Some low level optimizations such as dead code elimination and peep hole optimisations are done on this level.

The BEAM code is described in detail in Chapter 7 and Appendix B

2.3.9. Compiler Pass: Native Code

If you add the flag native to the compilation, and you have a HiPE enabled runtime system, then the compiler will generate native code for your module and store the native code along with the beam code in the .beam. file.

2.4. Other Compiler Tools

There are a number of tools available to help you work with code generation and code manipulation. These tools are written in Erlang and not really part of the runtime system but they are very nice to know about if you are implementing another language on top of the BEAM.

In this section we will cover three of the most useful code tools: the lexer — Leex, the parser generator — Yecc, and a general set of functions to manipulate abstract forms — Syntax Tools.

2.4.1. Leex

Leex is the Erlang lexer generator. The lexer generator takes a description of a DFA from a definitions file xrl and produces an Erlang program that matches tokens described by the DFA.

The details of how to write a DFA definition for a tokenizer is beyond the scope of this book. For a thorough explanation I recommend the "Dragon book" (Compiler … by Aho, Sethi and Ullman). Other good resources are the man and info entry for "flex" the lexer program that inspired leex, and the leex documentation itself. If you have info and flex installed you can read the full manual by typing:

> info flex

The online Erlang documentation also has the leex manual (see yecc.html).

We can use the lexer generator to create an Erlang program which recognizes JSON tokens. By looking at the JSON definition http://www.ecma-international.org/publications/files/ECMA-ST/ECMA-404.pdf we can see that there are only a handful of tokens that we need to handle.

Definitions.

Digit = [0-9]

Digit1to9 = [1-9]

HexDigit = [0-9a-f]

UnescapedChar = [^\"\\]

EscapedChar = (\\\\)|(\\\")|(\\b)|(\\f)|(\\n)|(\\r)|(\\t)|(\\/)

Unicode = (\\u{HexDigit}{HexDigit}{HexDigit}{HexDigit})

Quote = [\"]

Delim = [\[\]:,{}]

Space = [\n\s\t\r]

Rules.

{Quote}{Quote} : {token, {string, TokenLine, ""}}.

{Quote}({EscapedChar}|({UnescapedChar})|({Unicode}))+{Quote} :

{token, {string, TokenLine, drop_quotes(TokenChars)}}.

null : {token, {null, TokenLine}}.

true : {token, {true, TokenLine}}.

false : {token, {false, TokenLine}}.

{Delim} : {token, {list_to_atom(TokenChars), TokenLine}}.

{Space} : skip_token.

-?{Digit1to9}+{Digit}*\.{Digit}+((E|e)(\+|\-)?{Digit}+)? :

{token, {number, TokenLine, list_to_float(TokenChars)}}.

-?{Digit1to9}+{Digit}* :

{token, {number, TokenLine, list_to_integer(TokenChars)+0.0}}.

Erlang code.

-export([t/0]).

drop_quotes([$" | QuotedString]) -> literal(lists:droplast(QuotedString)).

literal([$\\,$" | Rest]) ->

[$"|literal(Rest)];

literal([$\\,$\\ | Rest]) ->

[$\\|literal(Rest)];

literal([$\\,$/ | Rest]) ->

[$/|literal(Rest)];

literal([$\\,$b | Rest]) ->

[$\b|literal(Rest)];

literal([$\\,$f | Rest]) ->

[$\f|literal(Rest)];

literal([$\\,$n | Rest]) ->

[$\n|literal(Rest)];

literal([$\\,$r | Rest]) ->

[$\r|literal(Rest)];

literal([$\\,$t | Rest]) ->

[$\t|literal(Rest)];

literal([$\\,$u,D0,D1,D2,D3|Rest]) ->

Char = list_to_integer([D0,D1,D2,D3],16),

[Char|literal(Rest)];

literal([C|Rest]) ->

[C|literal(Rest)];

literal([]) ->[].

t() ->

{ok,

[{'{',1},

{string,2,"no"},

{':',2},

{number,2,1.0},

{'}',3}

],

4}.By using the Leex compiler we can compile this DFA to Erlang code, and by giving the option dfa_graph we also produce a dot-file which can be viewed with e.g. Graphviz.

1> leex:file(json_tokens, [dfa_graph]).

{ok, "./json_tokens.erl"}

2>You can view the DFA graph using for example dotty.

> dotty json_tokens.dot

We can try our tokenizer on an example json file (test.json).

{

"no" : 1,

"name" : "Jack \"Bee\" Nimble",

"escapes" : "\b\n\r\t\f\//\\",

"format": {

"type" : "rect",

"widths" : [1920,1600],

"height" : -1080,

"interlace" : false,

"unicode" : "\u002f",

"frame rate": 4.5

}

}

First we need to compile our tokenizer, then we read the file and convert it to a string. Finally we can use the string/1 function that leex generates to tokenize the test file.

2> c(json_tokens).

{ok,json_tokens}.

3> f(File), f(L), {ok, File} = file:read_file("test.json"), L = binary_to_list(File), ok.

ok

4> f(Tokens), {ok, Tokens,_} = json_tokens:string(L), hd(Tokens).

{'{',1}

5>The shell function f/1 tells the shell to forget a variable binding. This is useful if you want to try a command that binds a variable multiple times, for example as you are writing the lexer and want to try it out after each rewrite. We will look at the shell commands in detail in the later chapter.

Armed with a tokenizer for JSON we can now write a json parser using the parser generator Yecc.

2.4.2. Yecc

Yecc is a parser generator for Erlang. The name comes from Yacc (Yet another compiler compiler) the canonical parser generator for C.

Now that we have a lexer for JSON terms we can write a parser using yecc.

Nonterminals value values object array pair pairs.

Terminals number string true false null '[' ']' '{' '}' ',' ':'.

Rootsymbol value.

value -> object : '$1'.

value -> array : '$1'.

value -> number : get_val('$1').

value -> string : get_val('$1').

value -> 'true' : get_val('$1').

value -> 'null' : get_val('$1').

value -> 'false' : get_val('$1').

object -> '{' '}' : #{}.

object -> '{' pairs '}' : '$2'.

pairs -> pair : '$1'.

pairs -> pair ',' pairs : maps:merge('$1', '$3').

pair -> string ':' value : #{ get_val('$1') => '$3' }.

array -> '[' ']' : {}.

array -> '[' values ']' : list_to_tuple('$2').

values -> value : [ '$1' ].

values -> value ',' values : [ '$1' | '$3' ].

Erlang code.

get_val({_,_,Val}) -> Val;

get_val({Val, _}) -> Val.Then we can use yecc to generate an Erlang program that implements the parser, and call the parse/1 function provided with the tokens generated by the tokenizer as an argument.

5> yecc:file(yecc_json_parser), c(yecc_json_parser).

{ok,yexx_json_parser}

6> f(Json), {ok, Json} = yecc_json_parser:parse(Tokens).

{ok,#{"escapes" => "\b\n\r\t\f////",

"format" => #{"frame rate" => 4.5,

"height" => -1080.0,

"interlace" => false,

"type" => "rect",

"unicode" => "/",

"widths" => {1920.0,1.6e3}},

"name" => "Jack \"Bee\" Nimble",

"no" => 1.0}}The tools Leex and Yecc are nice when you want to compile your own complete language to the Erlang virtual machine. By combining them with Syntax tools and specifically Merl you can manipulate the Erlang Abstract Syntax tree, either to generate Erlang code or to change the behaviour of Erlang code.

2.5. Syntax Tools and Merl

Syntax Tools is a set of libraries for manipulating the internal representation of Erlang’s Abstract Syntax Trees (ASTs).

The syntax tools applications also includes the tool Merl since Erlang 18.0. With Merl you can very easily manipulate the syntax tree and write parse transforms in Erlang code.

You can find the documentation for Syntax Tools on the Erlang.org site: http://erlang.org/doc/apps/syntax_tools/chapter.html.

2.6. Compiling Elixir

Another approach to writing your own language on top of the Beam is to use the meta programming tools in Elixir. Elixir compiles to Beam code through the Erlang abstraxt syntax tree.

With Elixir’s defmacro you can define your own Domain Specific Language, directly in Elixir.

3. Processes

The concept of lightweight processes is the essence of Erlang and the BEAM; they are what makes BEAM stand out from other virtual machines. In order to understand how the BEAM (and Erlang and Elixir) works you need to know the details of how processes work, which will help you understand the central concept of the BEAM, including what is easy and cheap for a process and what is hard and expensive.

Almost everything in the BEAM is connected to the concept of processes and in this chapter we will learn more about these connections. We will expand on what we learned in the introduction and take a deeper look at concepts such as memory management, message passing, and in particular scheduling.

An Erlang process is very similar to an OS process. It has its own address space, it can communicate with other processes through signals and messages, and the execution is controlled by a preemptive scheduler.

When you have a performance problem in an Erlang or Elixir system the problem is very often stemming from a problem within a particular process or from an imbalance between processes. There are of course other common problems such as bad algorithms or memory problems which we will look at in other chapters. Still, being able to pinpoint the process which is causing the problem is always important, therefore we will look at the tools available in the Erlang RunTime System for process inspection.

We will introduce the tools throughout the chapter as we go through how a process and the scheduler works, and then we will bring all tools together for an exercise at the end.

3.1. What is a Process?

A process is an isolated entity where code execution occurs. A process protects your system from errors in your code by isolating the effect of the error to the process executing the faulty code.

The runtime comes with a number of tools for inspecting processes to help us find bottlenecks, problems and overuse of resources. These tools will help you identify and inspect problematic processes.

3.1.1. Listing Processes from the Shell

Let us dive right in and look at which processes we have

in a running system. The easiest way to do that is to

just start an Erlang shell and issue the shell command i().

In Elixir you can call the function in the shell_default module as

:shell_default.i.

$ erl

Erlang/OTP 19 [erts-8.1] [source] [64-bit] [smp:4:4] [async-threads:10]

[hipe] [kernel-poll:false]

Eshell V8.1 (abort with ^G)

1> i().

Pid Initial Call Heap Reds Msgs

Registered Current Function Stack

<0.0.0> otp_ring0:start/2 376 579 0

init init:loop/1 2

<0.1.0> erts_code_purger:start/0 233 4 0

erts_code_purger erts_code_purger:loop/0 3

<0.4.0> erlang:apply/2 987 100084 0

erl_prim_loader erl_prim_loader:loop/3 5

<0.30.0> gen_event:init_it/6 610 226 0

error_logger gen_event:fetch_msg/5 8

<0.31.0> erlang:apply/2 1598 416 0

application_controlle gen_server:loop/6 7

<0.33.0> application_master:init/4 233 64 0

application_master:main_loop/2 6

<0.34.0> application_master:start_it/4 233 59 0

application_master:loop_it/4 5

<0.35.0> supervisor:kernel/1 610 1767 0

kernel_sup gen_server:loop/6 9

<0.36.0> erlang:apply/2 6772 73914 0

code_server code_server:loop/1 3

<0.38.0> rpc:init/1 233 21 0

rex gen_server:loop/6 9

<0.39.0> global:init/1 233 44 0

global_name_server gen_server:loop/6 9

<0.40.0> erlang:apply/2 233 21 0

global:loop_the_locker/1 5

<0.41.0> erlang:apply/2 233 3 0

global:loop_the_registrar/0 2

<0.42.0> inet_db:init/1 233 209 0

inet_db gen_server:loop/6 9

<0.44.0> global_group:init/1 233 55 0

global_group gen_server:loop/6 9

<0.45.0> file_server:init/1 233 79 0

file_server_2 gen_server:loop/6 9

<0.46.0> supervisor_bridge:standard_error/ 233 34 0

standard_error_sup gen_server:loop/6 9

<0.47.0> erlang:apply/2 233 10 0

standard_error standard_error:server_loop/1 2

<0.48.0> supervisor_bridge:user_sup/1 233 54 0

gen_server:loop/6 9

<0.49.0> user_drv:server/2 987 1975 0

user_drv user_drv:server_loop/6 9

<0.50.0> group:server/3 233 40 0

user group:server_loop/3 4

<0.51.0> group:server/3 987 12508 0

group:server_loop/3 4

<0.52.0> erlang:apply/2 4185 9537 0

shell:shell_rep/4 17

<0.53.0> kernel_config:init/1 233 255 0

gen_server:loop/6 9

<0.54.0> supervisor:kernel/1 233 56 0

kernel_safe_sup gen_server:loop/6 9

<0.58.0> erlang:apply/2 2586 18849 0

c:pinfo/1 50

Total 23426 220863 0

222

okThe i/0 function prints out a list of all processes in the system.

Each process gets two lines of information. The first two lines

of the printout are the headers telling you what the information

means. As you can see you get the Process ID (Pid) and the name of the

process if any, as well as information about the code the process

is started with and is executing. You also get information about the

heap and stack size and the number of reductions and messages in

the process. In the rest of this chapter we will learn in detail

what a stack, a heap, a reduction and a message are. For now we

can just assume that if there is a large number for the heap size,

then the process uses a lot of memory and if there is a large number

for the reductions then the process has executed a lot of code.

We can further examine a process with the i/3 function. Let

us take a look at the code_server process. We can see in the

previous list that the process identifier (pid) of the code_server

is <0.36.0>. By calling i/3 with the three numbers of

the pid we get this information:

2> i(0,36,0).

[{registered_name,code_server},

{current_function,{code_server,loop,1}},

{initial_call,{erlang,apply,2}},

{status,waiting},

{message_queue_len,0},

{messages,[]},

{links,[<0.35.0>]},

{dictionary,[]},

{trap_exit,true},

{error_handler,error_handler},

{priority,normal},

{group_leader,<0.33.0>},

{total_heap_size,46422},

{heap_size,46422},

{stack_size,3},

{reductions,93418},

{garbage_collection,[{max_heap_size,#{error_logger => true,

kill => true,

size => 0}},

{min_bin_vheap_size,46422},

{min_heap_size,233},

{fullsweep_after,65535},

{minor_gcs,0}]},

{suspending,[]}]

3>We got a lot of information from this call and in the rest of this

chapter we will learn in detail what most of these items mean. The

first line tells us that the process has been given a name

code_server. Next we can see which function the process is

currently executing or suspended in (current_function)

and the name of the function that the process started executing in

(initial_call).

We can also see that the process is suspended waiting for messages

({status,waiting}) and that there are no messages in the

mailbox ({message_queue_len,0}, {messages,[]}). We will look

closer at how message passing works later in this chapter.

The fields priority, suspending, reductions, links,

trap_exit, error_handler, and group_leader control the process

execution, error handling, and IO. We will look into this a bit more

when we introduce the Observer.

The last few fields (dictionary, total_heap_size, heap_size,

stack_size, and garbage_collection) give us information about the

process memory usage. We will look at the process memory areas in

detail in chapter Chapter 12.

Another, even more intrusive way of getting information

about processes is to use the process information given

by the BREAK menu: ctrl+c p [enter]. Note that while

you are in the BREAK state the whole node freezes.

3.1.2. Programmatic Process Probing

The shell functions just print the information about the

process but you can actually get this information as data,

so you can write your own tools for inspecting processes.

You can get a list of all processes with erlang:processes/0,

and more information about a process with

erlang:process_info/1. We can also use the function

whereis/1 to get a pid from a name:

1> Ps = erlang:processes().

[<0.0.0>,<0.1.0>,<0.4.0>,<0.30.0>,<0.31.0>,<0.33.0>,

<0.34.0>,<0.35.0>,<0.36.0>,<0.38.0>,<0.39.0>,<0.40.0>,

<0.41.0>,<0.42.0>,<0.44.0>,<0.45.0>,<0.46.0>,<0.47.0>,

<0.48.0>,<0.49.0>,<0.50.0>,<0.51.0>,<0.52.0>,<0.53.0>,

<0.54.0>,<0.60.0>]

2> CodeServerPid = whereis(code_server).

<0.36.0>

3> erlang:process_info(CodeServerPid).

[{registered_name,code_server},

{current_function,{code_server,loop,1}},

{initial_call,{erlang,apply,2}},

{status,waiting},

{message_queue_len,0},

{messages,[]},

{links,[<0.35.0>]},

{dictionary,[]},

{trap_exit,true},

{error_handler,error_handler},

{priority,normal},

{group_leader,<0.33.0>},

{total_heap_size,24503},

{heap_size,6772},

{stack_size,3},

{reductions,74260},

{garbage_collection,[{max_heap_size,#{error_logger => true,

kill => true,

size => 0}},

{min_bin_vheap_size,46422},

{min_heap_size,233},

{fullsweep_after,65535},

{minor_gcs,33}]},

{suspending,[]}]By getting process information as data we can write code

to analyze or sort the data as we please. If we grab all

processes in the system (with erlang:processes/0) and

then get information about the heap size of each process

(with erlang:process_info(P,total_heap_size)) we can

then construct a list with pid and heap size and sort

it on heap size:

1> lists:reverse(lists:keysort(2,[{P,element(2,

erlang:process_info(P,total_heap_size))}

|| P <- erlang:processes()])).

[{<0.36.0>,24503},

{<0.52.0>,21916},

{<0.4.0>,12556},

{<0.58.0>,4184},

{<0.51.0>,4184},

{<0.31.0>,3196},

{<0.49.0>,2586},

{<0.35.0>,1597},

{<0.30.0>,986},

{<0.0.0>,752},

{<0.33.0>,609},

{<0.54.0>,233},

{<0.53.0>,233},

{<0.50.0>,233},

{<0.48.0>,233},

{<0.47.0>,233},

{<0.46.0>,233},

{<0.45.0>,233},

{<0.44.0>,233},

{<0.42.0>,233},

{<0.41.0>,233},

{<0.40.0>,233},

{<0.39.0>,233},

{<0.38.0>,233},

{<0.34.0>,233},

{<0.1.0>,233}]

2>You might notice that many processes have a heap size of 233, that is because it is the default starting heap size of a process.

See the documentation of the module erlang for a full description of

the information available with process_info.

Notice how the process_info/1 function only returns a subset of all

the information available for the process and how the process_info/2

function can be used to fetch extra information. As an example, to

extract the backtrace for the code_server process above, we could

run:

3> process_info(whereis(code_server), backtrace).

{backtrace,<<"Program counter: 0x00000000161de900 (code_server:loop/1 + 152)\nCP: 0x0000000000000000 (invalid)\narity = 0\n\n0"...>>}See the three dots at the end of the binary above? That means that the

output has been truncated. A useful trick to see the whole value is to

wrap the above function call using the rp/1 function:

4> rp(process_info(whereis(code_server), backtrace)).An alternative is to use the io:put_chars/1 function, as follows:

5> {backtrace, Backtrace} = process_info(whereis(code_server), backtrace).

{backtrace,<<"Program counter: 0x00000000161de900 (code_server:loop/1 + 152)\nCP: 0x0000000000000000 (invalid)\narity = 0\n\n0"...>>}

6> io:put_chars(Backtrace).Due to its verbosity, the output for commands 4> and 6> has not

been included here, but feel free to try the above commands in your

Erlang shell.

3.1.3. Using the Observer to Inspect Processes

A third way of examining processes is with the Observer. The Observer is an extensive graphical interface for inspecting the Erlang RunTime System. We will use the Observer throughout this book to examine different aspects of the system.

The Observer can either be started from the OS shell and attach itself

to an node or directly from an Elixir or Erlang shell. For now we will

just start the Observer from the Elixir shell with :observer.start

or from the Erlang shell with:

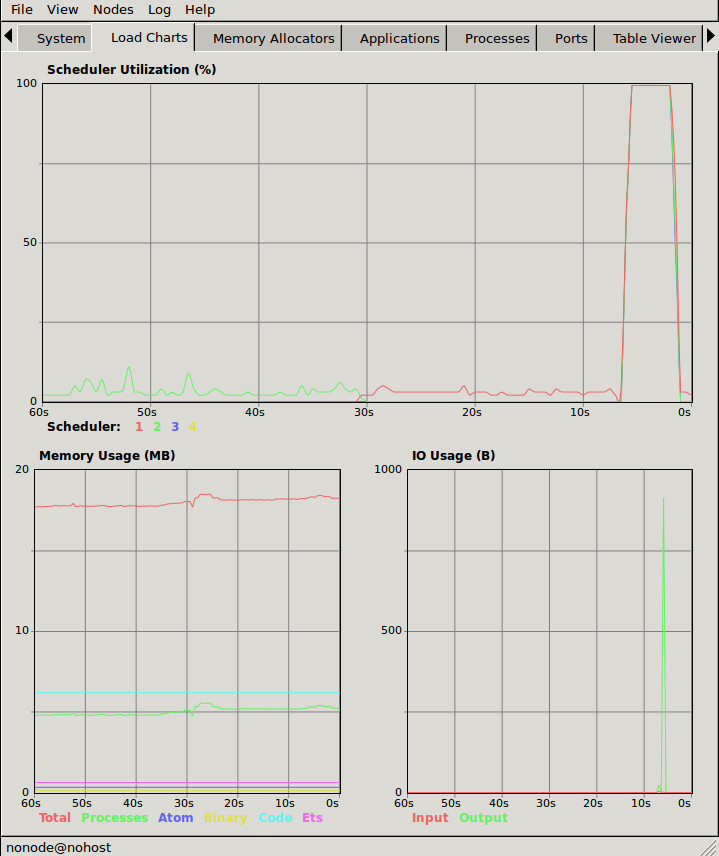

7> observer:start().When the Observer is started it will show you a system overview, see the following screen shot:

We will go over some of this information in detail later in

this and the next chapter. For now we will just use the Observer to look

at the running processes. First we take a look at the

Applications tab which shows the supervision

tree of the running system:

Here we get a graphical view of how the processes are linked. This is a very nice way to get an overview of how a system is structured. You also get a nice feeling of processes as isolated entities floating in space connected to each other through links.

To actually get some useful information about the processes

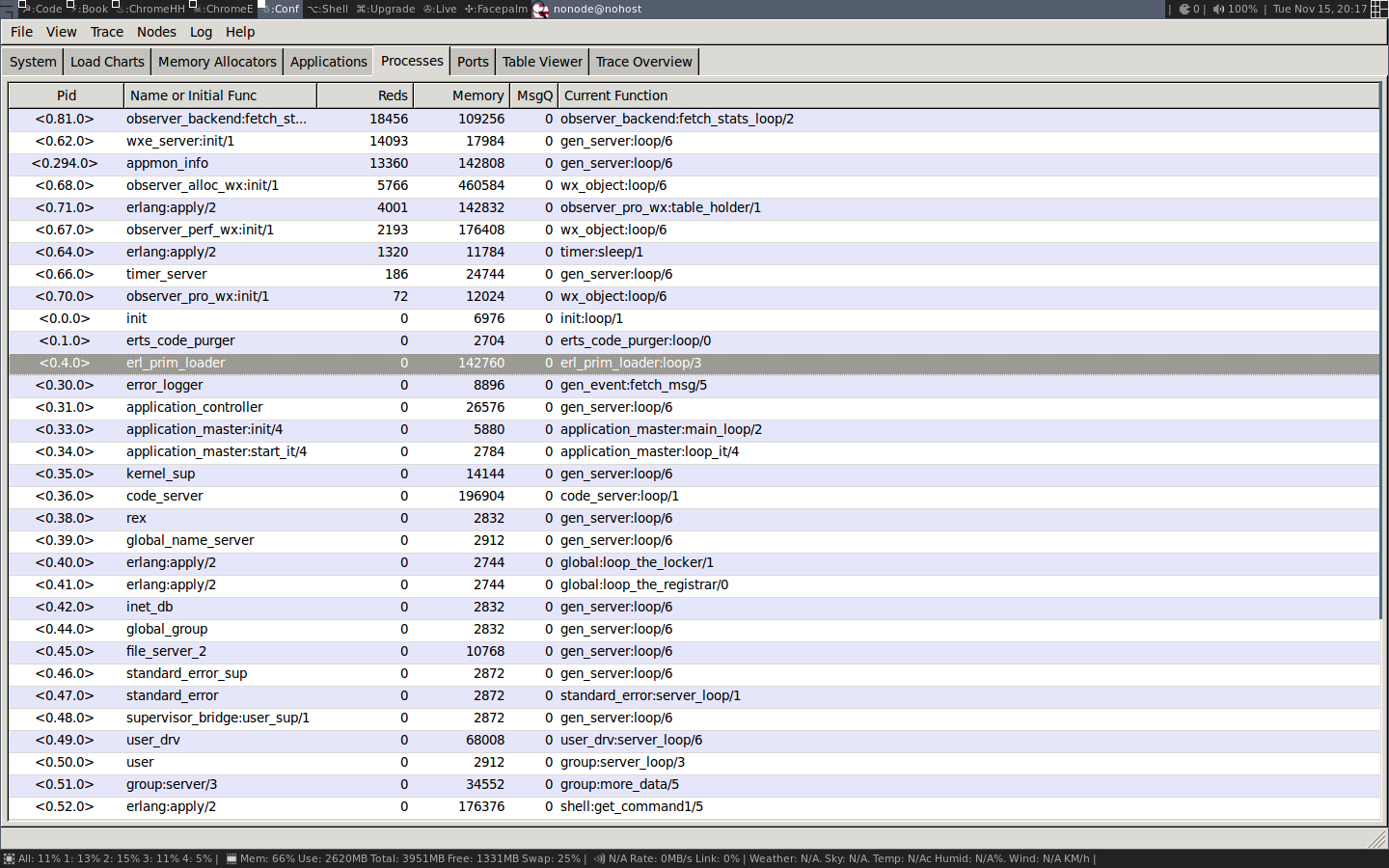

we switch to the Processes tab:

In this view we get basically the same information as with

i/0 in the shell. We see the pid, the registered name,

number of reductions, memory usage and number of messages

and the current function.

We can also look into a process by double clicking on its

row, for example on the code server, to get the kind of

information you can get with process_info/2:

We will not go through what all this information means right now, but if you keep on reading all will eventually be revealed.

Now that we have a basic understanding of what a process is and some tools to find and inspect processes in a system we are ready to dive deeper to learn how a process is implemented.

3.2. Processes Are Just Memory

A process is basically four blocks of memory: a stack, a heap, a message area, and the Process Control Block (the PCB).

The stack is used for keeping track of program execution by storing return addresses, for passing arguments to functions, and for keeping local variables. Larger structures, such as lists and tuples are stored on the heap.

The message area, also called the mailbox, is used to store messages sent to the process from other processes. The process control block is used to keep track of the state of the process.

See the following figure for an illustration of a process as memory:

This picture of a process is very much simplified, and we will go through a number of iterations of more refined versions to get to a more accurate picture.

The stack, the heap, and the mailbox are all dynamically allocated and can grow and shrink as needed. We will see exactly how this works in later chapters. The PCB on the other hand is statically allocated and contains a number of fields that controls the process.

We can actually inspect some of these memory areas by using HiPE’s Built In Functions (HiPE BIFs) for introspection. With these BIFs we can print out the memory content of stacks, heaps, and the PCB. The raw data is printed and in most cases a human readable version is pretty printed alongside the data. To really understand everything that we see when we inspect the memory we will need to know more about the Erlang tagging scheme (which we will go through in Chapter 4 and about the execution model and error handling which we will go through in Chapter 5, but using these tools will give us a nice view of how a process really is just memory.

We can see the context of the stack of a process with hipe_bifs:show_estack/1:

1> hipe_bifs:show_estack(self()).

| BEAM STACK |

| Address | Contents |

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc3238310 | 0x00007f9cc2ea6fe8 | BEAM PC shell:exprs/7 + 0x4e

| 0x00007f9cc3238318 | 0xfffffffffffffffb | []

| 0x00007f9cc3238320 | 0x000000000000644b | none

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc3238328 | 0x00007f9cc2ea6708 | BEAM PC shell:eval_exprs/7 + 0xf

| 0x00007f9cc3238330 | 0xfffffffffffffffb | []

| 0x00007f9cc3238338 | 0xfffffffffffffffb | []

| 0x00007f9cc3238340 | 0x000000000004f3cb | cmd

| 0x00007f9cc3238348 | 0xfffffffffffffffb | []

| 0x00007f9cc3238350 | 0x00007f9cc3237102 | {value,#Fun<shell.5.104321512>}

| 0x00007f9cc3238358 | 0x00007f9cc323711a | {eval,#Fun<shell.21.104321512>}

| 0x00007f9cc3238360 | 0x00000000000200ff | 8207

| 0x00007f9cc3238368 | 0xfffffffffffffffb | []

| 0x00007f9cc3238370 | 0xfffffffffffffffb | []

| 0x00007f9cc3238378 | 0xfffffffffffffffb | []

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc3238380 | 0x00007f9cc2ea6300 | BEAM PC shell:eval_loop/3 + 0x47

| 0x00007f9cc3238388 | 0xfffffffffffffffb | []

| 0x00007f9cc3238390 | 0xfffffffffffffffb | []

| 0x00007f9cc3238398 | 0xfffffffffffffffb | []

| 0x00007f9cc32383a0 | 0xfffffffffffffffb | []

| 0x00007f9cc32383a8 | 0x000001a000000343 | <0.52.0>

|....................|....................| BEAM CATCH FRAME

| 0x00007f9cc32383b0 | 0x0000000000005a9b | CATCH 0x00007f9cc2ea67d8

| | | (BEAM shell:eval_exprs/7 + 0x29)

|********************|********************|

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc32383b8 | 0x000000000093aeb8 | BEAM PC normal-process-exit

| 0x00007f9cc32383c0 | 0x00000000000200ff | 8207

| 0x00007f9cc32383c8 | 0x000001a000000343 | <0.52.0>

|--------------------|--------------------|

true

2>We will look closer at the values on the stack and the

heap in Chapter 4.

The content of the heap is printed by hipe_bifs:show_heap/1.

Since we do not want to list a large heap here we’ll just

spawn a new process that does nothing and show that heap:

2> hipe_bifs:show_heap(spawn(fun () -> ok end)).

From: 0x00007f7f33ec9588 to 0x00007f7f33ec9848

| H E A P |

| Address | Contents |

|--------------------|--------------------|

| 0x00007f7f33ec9588 | 0x00007f7f33ec959a | #Fun<erl_eval.20.52032458>

| 0x00007f7f33ec9590 | 0x00007f7f33ec9839 | [[]]

| 0x00007f7f33ec9598 | 0x0000000000000154 | Thing Arity(5) Tag(20)

| 0x00007f7f33ec95a0 | 0x00007f7f3d3833d0 | THING

| 0x00007f7f33ec95a8 | 0x0000000000000000 | THING

| 0x00007f7f33ec95b0 | 0x0000000000600324 | THING

| 0x00007f7f33ec95b8 | 0x0000000000000000 | THING

| 0x00007f7f33ec95c0 | 0x0000000000000001 | THING

| 0x00007f7f33ec95c8 | 0x000001d0000003a3 | <0.58.0>

| 0x00007f7f33ec95d0 | 0x00007f7f33ec95da | {[],{eval...

| 0x00007f7f33ec95d8 | 0x0000000000000100 | Arity(4)

| 0x00007f7f33ec95e0 | 0xfffffffffffffffb | []

| 0x00007f7f33ec95e8 | 0x00007f7f33ec9602 | {eval,#Fun<shell.21.104321512>}

| 0x00007f7f33ec95f0 | 0x00007f7f33ec961a | {value,#Fun<shell.5.104321512>}...

| 0x00007f7f33ec95f8 | 0x00007f7f33ec9631 | [{clause...

...

| 0x00007f7f33ec97d0 | 0x00007f7f33ec97fa | #Fun<shell.5.104321512>

| 0x00007f7f33ec97d8 | 0x00000000000000c0 | Arity(3)

| 0x00007f7f33ec97e0 | 0x0000000000000e4b | atom

| 0x00007f7f33ec97e8 | 0x000000000000001f | 1

| 0x00007f7f33ec97f0 | 0x0000000000006d0b | ok

| 0x00007f7f33ec97f8 | 0x0000000000000154 | Thing Arity(5) Tag(20)

| 0x00007f7f33ec9800 | 0x00007f7f33bde0c8 | THING

| 0x00007f7f33ec9808 | 0x00007f7f33ec9780 | THING

| 0x00007f7f33ec9810 | 0x000000000060030c | THING

| 0x00007f7f33ec9818 | 0x0000000000000002 | THING

| 0x00007f7f33ec9820 | 0x0000000000000001 | THING

| 0x00007f7f33ec9828 | 0x000001d0000003a3 | <0.58.0>

| 0x00007f7f33ec9830 | 0x000001a000000343 | <0.52.0>

| 0x00007f7f33ec9838 | 0xfffffffffffffffb | []

| 0x00007f7f33ec9840 | 0xfffffffffffffffb | []

|--------------------|--------------------|

true

3>We can also print the content of some of the fields in

the PCB with hipe_bifs:show_pcb/1:

3> hipe_bifs:show_pcb(self()).

P: 0x00007f7f3cbc0400

---------------------------------------------------------------

Offset| Name | Value | *Value |

0 | id | 0x000001d0000003a3 | |

72 | htop | 0x00007f7f33f15298 | |

96 | hend | 0x00007f7f33f16540 | |

88 | heap | 0x00007f7f33f11470 | |

104 | heap_sz | 0x0000000000000a1a | |

80 | stop | 0x00007f7f33f16480 | |

592 | gen_gcs | 0x0000000000000012 | |

594 | max_gen_gcs | 0x000000000000ffff | |

552 | high_water | 0x00007f7f33f11c50 | |

560 | old_hend | 0x00007f7f33e90648 | |

568 | old_htop | 0x00007f7f33e8f8e8 | |

576 | old_head | 0x00007f7f33e8e770 | |

112 | min_heap_.. | 0x00000000000000e9 | |

328 | rcount | 0x0000000000000000 | |

336 | reds | 0x0000000000002270 | |

16 | tracer | 0xfffffffffffffffb | |

24 | trace_fla.. | 0x0000000000000000 | |

344 | group_lea.. | 0x0000019800000333 | |

352 | flags | 0x0000000000002000 | |

360 | fvalue | 0xfffffffffffffffb | |

368 | freason | 0x0000000000000000 | |

320 | fcalls | 0x00000000000005a2 | |

384 | next | 0x0000000000000000 | |

48 | reg | 0x0000000000000000 | |

56 | nlinks | 0x00007f7f3cbc0750 | |

616 | mbuf | 0x0000000000000000 | |

640 | mbuf_sz | 0x0000000000000000 | |

464 | dictionary | 0x0000000000000000 | |

472 | seq..clock | 0x0000000000000000 | |

480 | seq..astcnt | 0x0000000000000000 | |

488 | seq..token | 0xfffffffffffffffb | |

496 | intial[0] | 0x000000000000320b | |

504 | intial[1] | 0x0000000000000c8b | |

512 | intial[2] | 0x0000000000000002 | |

520 | current | 0x00007f7f3be87c20 | 0x000000000000ed8b |

296 | cp | 0x00007f7f3d3a5100 | 0x0000000000440848 |

304 | i | 0x00007f7f3be87c38 | 0x000000000044353a |

312 | catches | 0x0000000000000001 | |

224 | arity | 0x0000000000000000 | |

232 | arg_reg | 0x00007f7f3cbc04f8 | 0x000000000000320b |

240 | max_arg_reg | 0x0000000000000006 | |

248 | def..reg[0] | 0x000000000000320b | |

256 | def..reg[1] | 0x0000000000000c8b | |

264 | def..reg[2] | 0x00007f7f33ec9589 | |

272 | def..reg[3] | 0x0000000000000000 | |

280 | def..reg[4] | 0x0000000000000000 | |

288 | def..reg[5] | 0x00000000000007d0 | |

136 | nsp | 0x0000000000000000 | |

144 | nstack | 0x0000000000000000 | |

152 | nstend | 0x0000000000000000 | |

160 | ncallee | 0x0000000000000000 | |

56 | ncsp | 0x0000000000000000 | |

64 | narity | 0x0000000000000000 | |

---------------------------------------------------------------

true

4>Now armed with these inspection tools we are ready to look at what these fields in the PCB mean.

3.3. The PCB

The Process Control Block contains all the fields that control the behaviour and current state of a process. In this section and the rest of the chapter we will go through the most important fields. We will leave out some fields that have to do with execution and tracing from this chapter, instead we will cover those in Chapter 5.

If you want to dig even deeper than we will go in this chapter you can

look at the C source code. The PCB is implemented as a C struct called process in the file

erl_process.h.

The field id contains the process ID (or PID).

0 | id | 0x000001d0000003a3 | |

The process ID is an Erlang term and hence tagged (See Chapter 4). This means that the 4 least significant bits are a tag (0011). In the code section there is a module for inspecting Erlang terms (see show.erl) which we will cover in the chapter on types. We can use it now to to examine the type of a tagged word though.

4> show:tag_to_type(16#0000001d0000003a3).

pid

5>The fields htop and stop are pointers to the top of the heap and

the stack, that is, they are pointing to the next free slots on the heap

or stack. The fields heap (start) and hend points to the start and

the stop of the whole heap, and heap_sz gives the size of the heap

in words. That is hend - heap = heap_sz * 8 on a 64 bit machine and

hend - heap = heap_sz * 4 on a 32 bit machine.

The field min_heap_size is the size, in words, that the heap starts

with and which it will not shrink smaller than, the default value is

233.

We can now refine the picture of the process heap with the fields from the PCB that controls the shape of the heap:

But wait, how come we have a heap start and a heap end, but no start and stop for the stack? That is because the BEAM uses a trick to save space and pointers by allocating the heap and the stack together. It is time for our first revision of our process as memory picture. The heap and the stack are actually just one memory area:

The stack grows towards lower memory addresses and the heap towards higher memory, so we can also refine the picture of the heap by adding the stack top pointer to the picture:

If the pointers htop and stop were to meet, the

process would run out of free memory and would have to do a garbage

collection to free up memory.

3.4. The Garbage Collector (GC)

The heap memory management schema is to use a per process copying generational garbage collector. When there is no more space on the heap (or the stack, since they share the allocated memory block), the garbage collector kicks in to free up memory.

The GC allocates a new memory area called the to space. Then it goes through the stack to find all live roots and follows each root and copies the data on the heap to the new heap. Finally it also copies the stack to the new heap and frees up the old memory area.

The GC is controlled by these fields in the PCB:

Eterm *high_water;

Eterm *old_hend; /* Heap pointers for generational GC. */

Eterm *old_htop;

Eterm *old_heap;

Uint max_heap_size; /* Maximum size of heap (in words). */

Uint16 gen_gcs; /* Number of (minor) generational GCs. */

Uint16 max_gen_gcs; /* Max minor gen GCs before fullsweep. */Since the garbage collector is generational it will use a heuristic to just look at

new data most of the time. That is, in what is called a minor

collection, the GC

only looks at the top part of the stack and moves new data to the new

heap. Old data, that is data allocated below the high_water mark

(see the figure below) on the heap, is moved to a special area called the old

heap.

Most of the time, then, there is

another heap area for

each process: the old heap, handled by the fields old_heap,

old_htop and old_hend in the PCB. This almost brings us back to

our original picture of a process as four memory areas:

When a process starts there is no old heap, but as soon as young data has matured to old data and there is a garbage collection, the old heap is allocated. The old heap is garbage collected when there is a major collection, also called a full sweep. See Chapter 12 for more details of how garbage collection works. In that chapter we will also look at how to track down and fix memory related problems.

3.5. Mailboxes and Message Passing