In the first part of this article we examined the types of conflicts and their causes. In this part, we will analyse some of the methods available to deal with conflicts.

Pessimistic locking (or: conflicts won't happen)

Applicability: synchronous clusters with 2pc

We've covered this topic in the previous article, but it's worth repeating. If you use a synchronous cluster, you don't have conflicts. For example, MySQL Cluster ensures consistent data with updates coming from different nodes. However, MySQL Cluster is not a replacement for a MySQL server, and it has severe limitations.

Optimistic locking

Applicability: synchronous clusters without 2pc (Galera)

Conflicting transactions proceed on different nodes with local locking. The last one then rolls back when it discovers that a prior transaction got in first on the same data. For a more detailed analysis of this handling method, see this article by Jay Janssen.
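The behaviour described above can be illustrated with a toy model. This is only a sketch of the "first committer wins" idea, not Galera's actual certification algorithm; the names `ToyCluster`, `begin`, and `commit` are invented for the example, and row versions stand in for whatever the real cluster uses to detect overlapping writes.

```python
class ConflictError(Exception):
    """Raised when a transaction loses the race at commit time."""


class ToyCluster:
    """Toy model of optimistic locking: each row carries a version number."""

    def __init__(self):
        self.versions = {}   # row key -> version of the last committed write
        self.data = {}       # row key -> committed value

    def begin(self, keys):
        # A transaction remembers the row versions it saw when it started.
        return {key: self.versions.get(key, 0) for key in keys}

    def commit(self, snapshot, changes):
        # Check first: fail if any row changed since the snapshot was taken.
        for key in changes:
            if self.versions.get(key, 0) != snapshot.get(key, 0):
                raise ConflictError(f"row {key!r} was modified by a prior transaction")
        # No conflict: apply the changes and bump the versions.
        for key, value in changes.items():
            self.data[key] = value
            self.versions[key] = self.versions.get(key, 0) + 1


cluster = ToyCluster()
tx_a = cluster.begin(["employee:1"])   # started on node A
tx_b = cluster.begin(["employee:1"])   # started on node B, same row

cluster.commit(tx_a, {"employee:1": "salary=1000"})   # gets in first
try:
    cluster.commit(tx_b, {"employee:1": "salary=1200"})
    outcome = "committed"
except ConflictError:
    outcome = "rolled back"   # the application must retry the transaction
```

Both transactions proceed without waiting for each other, and the loser finds out only at commit time. That is why applications on such clusters need to be ready to retry.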

Conflict resolution after the fact

Applicability: EnterpriseDB (none so far for MySQL)

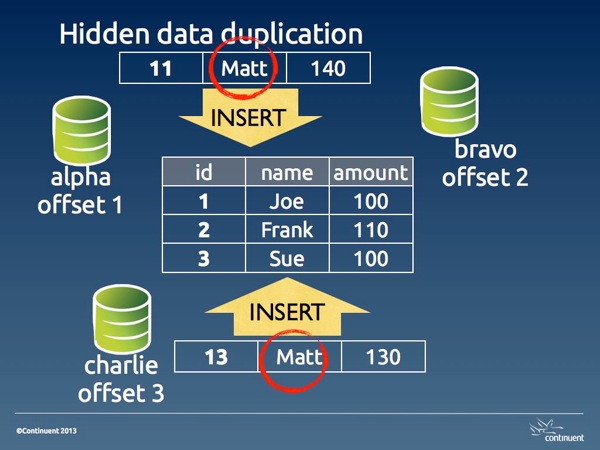

Conflicts are hard to handle in asynchronous replication. A conflict in this state means that the data has already been applied to the wrong node or to the wrong object, and something must be done to solve the issue.

Typical remedies offered for conflict resolution are:

- Earliest or Latest Timestamp: This method says that the oldest or the latest record prevails when a conflict happens. This is hardly a reliable resolution. It's the easiest method to implement, and thus it is offered. But it often results in a hidden data inconsistency problem, where we may find data that we don't expect. The current data was applied simply because it was updated later than the correct record. Also, timestamp calculation requires time synchronization across servers, and possibly across timezones, which calls for extra effort to keep the system functioning.

- Node Priority: There is a hierarchy of nodes, with different ranks. When a conflict occurs, the node with the highest rank prevails. This method requires the data origin to be stored alongside the contents, and to be easily searchable when conflicts occur. It must also take into account offline nodes, and therefore it should keep the conflict resolution metadata until the offline nodes are back in sync.
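To make the difference between the two remedies concrete, here is a minimal sketch. It does not reproduce any product's implementation: the `RowVersion` structure, the function names, and the `ranks` mapping are all hypothetical, and the timestamps are plain numbers supplied by each node.

```python
from dataclasses import dataclass


@dataclass
class RowVersion:
    value: str
    origin: str        # node where the change was made
    timestamp: float   # seconds since the epoch, as recorded by the origin


def latest_timestamp_wins(a, b):
    # Trusts the clocks of both nodes: clock skew silently picks the wrong row.
    return a if a.timestamp >= b.timestamp else b


def node_priority_wins(a, b, ranks):
    # ranks maps node name -> rank; a lower number means a higher priority.
    return a if ranks[a.origin] <= ranks[b.origin] else b


ranks = {"node1": 1, "node2": 2}
correct = RowVersion("price=100", origin="node1", timestamp=1000.0)
stale = RowVersion("price=90", origin="node2", timestamp=1005.0)

by_time = latest_timestamp_wins(correct, stale)      # picks the later write
by_rank = node_priority_wins(correct, stale, ranks)  # picks the higher-ranked node
```

Here the timestamp rule keeps `price=90` only because it arrived five seconds later, which is the hidden inconsistency mentioned above. The priority rule keeps `price=100`, but it works only because every row carries its origin.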

Methods that could be implemented in a more advanced technology may include:

- Origin enforcement: data coming from authorized nodes will be preserved. Data from the wrong origin will be dropped, and a consolidation event will be generated and sent to the other nodes. This method would be possible in systems (like Tungsten) that keep track of the event origin.

- Data merge: If possible and desirable, data from two different sources can be preserved, and merged in the destination table. This rule should also originate a new event to fix the data in the other nodes.

Schema renaming

Applicability: fan-in topologies

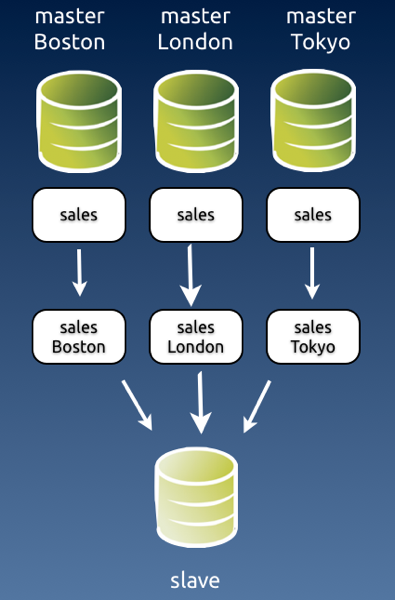

Image #1 - Fan-in topology with likely conflicts.

A fan-in topology is easy to implement with Tungsten Replicator, but not easy to maintain. By its nature, fan-in is a conflict waiting to happen. Assuming that all the masters have the same structure, they will replicate multiple changes into the same schema, and it is quite likely that some changes will clash. For this reason, the simple solution often adopted is renaming the schema before the data reaches the slave.

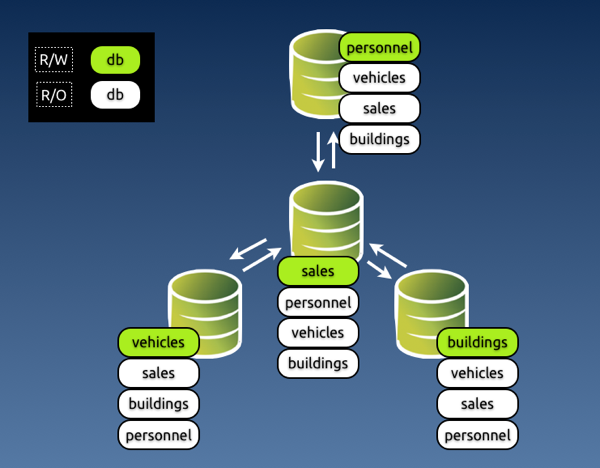

Image #2 - Fan-in topology with schema renaming.

I know of at least one user who has successfully applied this technique for a cluster made of 70 masters and one slave.
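The effect of schema renaming can be sketched in a few lines. This is only an illustration of the idea, not Tungsten's filter code: events are modelled as plain dictionaries, and the `service_schema` naming scheme is an assumption made for the example.

```python
def rename_schema(event, source_service):
    """Return a copy of the event with its schema prefixed by the source service."""
    renamed = dict(event)
    renamed["schema"] = f"{source_service}_{event['schema']}"
    return renamed


# Two masters insert a row with the same primary key in the same schema.
event_from_alpha = {"schema": "sales", "table": "orders", "pk": 1}
event_from_bravo = {"schema": "sales", "table": "orders", "pk": 1}

slave = {}   # (schema, table, pk) -> service that wrote the row
for service, event in (("alpha", event_from_alpha), ("bravo", event_from_bravo)):
    renamed = rename_schema(event, service)
    slave[(renamed["schema"], renamed["table"], renamed["pk"])] = service
```

Without the renaming step, the second insert would clash with the first. With it, the slave ends up with two separate rows, one in `alpha_sales` and one in `bravo_sales`.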

Conflict prevention: Discipline

Applicability: all topologies

A simple way of preventing conflicts, and one that would make life easier for all, is discipline. The organization decides which entry points can update which data, and conflicts are not possible, because the data is inserted or modified only in the places where it is supposed to be.

Image #3 - Preventing conflicts with discipline in a star topology.

Conflict prevention: Enforced discipline

Applicability: all topologies

If you have worked in any large organization, either public or private, you know that discipline alone is the worst method you can rely on for something as delicate and valuable as your data. The reasons why this paradigm could fail are many: some people dislike discipline, someone makes a mistake, there are too many rules to remember, or an application bug lets you update what you shouldn't.

Either way, you end up with a system that has conflicts, and nobody knows what happened or how to fix them. However, there is a way of enforcing this system based on discipline.

This is the "poor-man's" conflict avoidance system. It is based on simple technology, available in most database servers. If you can install a multi-master topology, using either native MySQL (circular) replication or Tungsten Replicator topologies, you can also apply this method.

The key to the system is to grant different privileges for every master. Looking at image #3, you can enforce discipline by granting different privileges to the application user in every master.

In master #1, where we can update personnel, app_user will have SELECT privileges on all databases, and all privileges on personnel.

In master #2, where we can update sales, app_user will have all privileges on sales and read-only access to the other databases, and so on.

The key to making this system work well is that you should assign the privileges yourself on each master, and not let the GRANT statement be replicated. It should work like this:

    # master 1
    GRANT SELECT on *.* to app_user identified by 'my password';
    # This is good for all masters. Let it replicate

    # master 1
    SET SQL_LOG_BIN=OFF;
    GRANT ALL on personnel.* to app_user;
    # This won't replicate

    # master 2
    SET SQL_LOG_BIN=OFF;
    GRANT ALL on sales.* to app_user;

    # master 3
    SET SQL_LOG_BIN=OFF;
    GRANT ALL on vehicles.* to app_user;

    # master 4
    SET SQL_LOG_BIN=OFF;
    GRANT ALL on buildings.* to app_user;

This method works quite well. Since updates for a given schema can be applied only in one master, there is little chance of any mischief happening. Conflicts are not completely removed, though. There are super users and maintenance users who can, consciously or not, introduce errors. For these cases, you may want to look at the next section.
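With more than a handful of masters, it may be convenient to generate the per-master statements from a single mapping, so that the "who can write where" rules live in one place. The following is a sketch under stated assumptions: the host labels `master1` to `master4` and the helper `grants_for` are hypothetical, and the generated statements still have to be run locally on each master by whatever means you normally use.

```python
# Which schema each master is allowed to update (labels are illustrative).
WRITABLE_SCHEMA = {
    "master1": "personnel",
    "master2": "sales",
    "master3": "vehicles",
    "master4": "buildings",
}


def grants_for(master, user="app_user"):
    """Return the statements to run locally on one master, in order."""
    schema = WRITABLE_SCHEMA[master]
    return [
        "SET SQL_LOG_BIN=OFF;",                 # keep the GRANT out of the binary log
        f"GRANT ALL ON {schema}.* TO {user};",  # write access to one schema only
    ]


for master in WRITABLE_SCHEMA:
    print(f"# {master}")
    print("\n".join(grants_for(master)))
```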

Enforced discipline with certified origin

Applicability: all Tungsten topologies

Discipline based on granted privileges is often robust enough for your needs. However, if you want to keep track of where the data comes from, you should look at a System of Records technology, where each piece of data can be traced to its origin.

Tungsten Replicator implements this technology with several topologies. The theory of this matter is beautifully explained by Robert Hodges in an article written some time ago. Here I would like to look at the practical stuff.

To implement a System of Records in Tungsten, you decide where you want to update each schema (which is defined as a shard in our lingo), assign that schema to a service, and the replicator will enforce your rules.

Once you have defined the shards, you can set the rules. When an event comes to a slave from an UNKNOWN shard, i.e. a shard that was not among the defined rules, you can:

- Accept the event (not recommended, really);

- Drop the event silently;

- Drop the event with a warning in the logs;

- Generate an error that will break replication (recommended).

You can choose among the same actions when setting a rule for events that come from UNWANTED shards, i.e. events for a schema that arrive from a service other than the one designated to update it.

Here's an example of a shard definition based on an all-masters topology with three nodes:

Image #4 - Sample conflict prevention in an all-masters topology

    # Options to add during installation
    --svc-extractor-filters=shardfilter

    # policy for unknown shards
    --property=replicator.filter.shardfilter.unknownShardPolicy=error

    # policy for unwanted shards
    --property=replicator.filter.shardfilter.unwantedShardPolicy=error

    # Whether the policy for unwanted shards is activated or not
    --property=replicator.filter.shardfilter.enforceHomes=false

    # whether we allow whitelists to be created
    --property=replicator.filter.shardfilter.allowWhitelisted=false

    # Loading the rules set
    $ trepctl -host host1 -service charlie shard -insert < shards.map

    $ cat shards.map
    shard_id   master       critical
    employees  alpha        false
    buildings  bravo        false
    vehicles   charlie      false
    test       whitelisted  false

The rules are set by service, rather than host name. The schema 'employees' can be updated by the service named 'alpha', which has its master in host #1. Similarly, 'buildings' can be updated by 'bravo', with a master in host #2, and 'vehicles' is updated by the 'charlie' master service in host #3. Remember that in Tungsten each replication stream from one master to many slaves is a separate service. This way we can keep track of each event's origin. Even if the event is routed through a hub in a star topology, it retains its origin in the metadata.

The last line of the rules says that the schema 'test' is whitelisted, i.e. it can be freely updated by any master. And this means that conflicts can happen there, so be careful if you use this feature!
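The decision that these rules describe can be summarised in a short sketch. This is not Tungsten's shardfilter code, and it deliberately ignores the `enforceHomes` and `allowWhitelisted` switches. The function `check_event` is hypothetical, and the strings it returns are illustrative labels for the four actions listed earlier.

```python
# Same content as the shards.map file above: shard (schema) -> home service.
SHARD_MAP = {
    "employees": "alpha",
    "buildings": "bravo",
    "vehicles": "charlie",
    "test": "whitelisted",
}


def check_event(shard_id, service, unknown_policy="error", unwanted_policy="error"):
    """Return 'accept', or the policy to apply to an event coming from `service`."""
    home = SHARD_MAP.get(shard_id)
    if home is None:
        return unknown_policy    # shard not mentioned in the rules
    if home == "whitelisted" or home == service:
        return "accept"          # any master may write, or this is the designated one
    return unwanted_policy       # known shard, wrong origin


print(check_event("employees", "alpha"))   # legitimate event
print(check_event("employees", "bravo"))   # wrong origin
print(check_event("test", "bravo"))        # whitelisted schema
```

The check needs only two pieces of metadata from each event: the shard it touches and the service it originated from.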

Image #5 - Example of a legitimate event coming through

When an expected event comes through, all is well. Each node checks that the event originated from the authorised master, and the event is applied to the slave service.

Image #6 - Example of an event originated from an unauthorised node

When the event comes from a node that was not authorised, Tungsten looks at the rules for such a case. In our setup, the rule says 'error', and therefore replication will break at the receiving end of the service 'bravo' in host #1 and host #3.

    mysql #2> create table employees.nicknames( ... )

    # Only server #2 creates the table
    # slave service 'bravo' in host1 and host3 get an error
    # No table is created in hosts #1 and #3

To detect the error, we can ask for the list of services in host #1 and host #3. What we will see is something like this.

    #3 $ trepctl services | simple_services
    alpha    [slave]
    seqno:          7 - latency:   0.136 - ONLINE

    bravo    [slave]
    seqno:         -1 - latency:  -1.000 - OFFLINE:ERROR

    charlie  [master]
    seqno:         66 - latency:   0.440 - ONLINE

This listing shows that replication was stopped with an error in slave service 'bravo'. To determine what happened exactly, we ask for the status of that service:

    #3 $ trepctl -service bravo status
    NAME                     VALUE
    ----                     -----
    appliedLastEventId     : NONE
    appliedLastSeqno       : -1
    appliedLatency         : -1.0
    (...)
    offlineRequests        : NONE
    pendingError           : Stage task failed: q-to-dbms
    pendingErrorCode       : NONE
    pendingErrorEventId    : mysql-bin.000002:0000000000001241;0
    pendingErrorSeqno      : 7
    pendingExceptionMessage: Rejected event from wrong shard: seqno=7 shard ID=employees shard master=alpha service=bravo
    (...)

This status gives us quite a lot of useful information:

- The event with global transaction ID (GTID) #7 was rejected;

- The reason for the rejection is that it came from the wrong shard;

- The expected shard master (i.e. the authorized service) was alpha;

- The event originated instead from service bravo.
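If you monitor many services, the fields listed above can be pulled out of the exception message automatically. The sketch below assumes that the message always has the shape shown in the sample status output, which may not hold for other versions or other error types. The helper `parse_rejection` and its field names are invented for the example.

```python
import re

# Message copied from the status output above.
MESSAGE = ("Rejected event from wrong shard: seqno=7 shard ID=employees "
           "shard master=alpha service=bravo")

PATTERN = re.compile(
    r"seqno=(?P<seqno>\d+) shard ID=(?P<shard>\S+) "
    r"shard master=(?P<expected>\S+) service=(?P<actual>\S+)"
)


def parse_rejection(message):
    """Extract the fields of a 'wrong shard' message, or None if it doesn't match."""
    match = PATTERN.search(message)
    if match is None:
        return None
    fields = match.groupdict()
    fields["seqno"] = int(fields["seqno"])   # the sequence number to skip
    return fields


info = parse_rejection(MESSAGE)
```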

With the above information, we can take action to fix the event. We know that GTID 7 is wrong, so we can skip it in both servers where the error occurred. To clean up the error, we can simply generate the correct event in the authorized master:

    #1 $ trepctl -service bravo online -skip-seqno 7

    mysql #1> drop table if exists employees.nicknames;
    mysql #1> create table if not exists employees.nicknames ( ... ) ;

    #3 $ trepctl -service bravo online -skip-seqno 7

Statement-based vs row-based replication

As a general note about conflict solving, I need to mention that, in most cases, using row-based replication rather than statement-based replication will help identify conflicts, making them easier to clean up.

Even when the conflict involves a deleted row, row-based events will contain enough information to let us identify the critical data needed to recover it.

Be aware that, if you use binlog-row-image=minimal in MySQL 5.6, the binary log entry for a DELETE event will only include the primary key.

More about filters

We have seen in at least two examples (schema renaming and conflict prevention) that you can help avoid conflicts with filters. This is a powerful feature that should be taken into account when planning a multi-master topology.

MySQL native replication offers very little in the way of data transformation through filtering. Tungsten Replicator, instead, allows you to define filters at several stages of the replication process: when extracting the data, after transporting it to the slaves, and before applying it. You can write your own filters in JavaScript, and do pretty much everything you want with the data. If you have creative ideas about solving conflicts by manipulating data in transit, there is a good chance that you can implement them using filters. This topic deserves more than a paragraph, and I will probably come back to it soon with a full-fledged article.

Parting thoughts

Multi-master topologies are much coveted features. However, they often introduce the risk of conflicts.

Dealing with conflicts becomes somewhat easier if you understand how they happen and what kind of problems they generate.

There is no silver bullet solution for conflicts, but recent technology and good organization can help you ease the pain.