Abstract

The perspective camera and the isometric surface prior have recently gathered increased attention for Non-Rigid Structure-from-Motion (NRSfM). Despite the recent progress, several challenges remain, particularly the computational complexity and the unknown camera focal length. In this paper we present a method for incremental NRSfM with the perspective camera model and the isometric surface prior with unknown focal length. In the template-based case, we provide a method to estimate four parameters of the camera intrinsics. For the template-less scenario of NRSfM, we propose a method to upgrade reconstructions obtained for one focal length to another based on local rigidity and the so-called Maximum Depth Heuristics (MDH). On this basis, we propose a method to simultaneously recover the focal length and the non-rigid shapes. We further address the problems of incorporating a large number of points and adding more views in MDH-based NRSfM, and solve them efficiently with Second-Order Cone Programming (SOCP). This does not require any shape initialization and produces results orders of magnitude faster than many methods. We provide evaluations on standard sequences with ground truth and qualitative reconstructions on challenging YouTube videos. These evaluations show that our method outperforms the state of the art in both speed and accuracy.



1 Introduction

Given images of a rigid object from different views, Structure-from-Motion (SfM) [1,2,3] allows the computation of the object’s 3D structure. However, many objects of interest are non-rigid, and the rigidity constraints of SfM do not hold for them. The ever-increasing number of monocular videos of deforming objects provides a strong incentive to reconstruct such scenes. These reconstruction problems can be solved with Non-Rigid Structure-from-Motion (NRSfM), which uses multiple images of a deforming object, taken by a single camera, to reconstruct its 3D shape. A related approach, termed Shape-from-Template (SfT), computes the shape from the object’s template shape and an image of its deformed state. While SfM is well-posed and already used in several commercial software products [4, 5], non-rigid reconstruction has inherent theoretical problems. It is severely under-constrained without prior knowledge of the deformation or the shapes: for any number of images, infinitely many deformations produce the same image projections. Therefore, one of the major challenges in NRSfM is to efficiently combine a realistic deformation constraint with the camera projection model to reduce the solution ambiguity.

A large majority of previous methods tackle NRSfM with an affine camera model and a low-rank approximation of the deforming shapes [7,8,9,10,11,12,13,14]. However, such methods handle neither perspective effects nor nonlinear deformations well. In this paper we study the use of the uncalibrated perspective camera and the isometric deformation prior for non-rigid reconstruction. Isometry is a geometric prior which implies that geodesic distances on the surface are preserved under deformation. This is a good approximation for many real objects such as the human body, paper-like surfaces, or cloth. In SfT, combining the isometric deformation prior with the perspective camera is considered the state of the art [15,16,17] among parameter-free approaches. In particular, [15, 18] also estimate the focal length while recovering the deformation. In NRSfM, some recent methods [6, 19] provide a convex formulation with the inextensibility prior for a calibrated perspective camera. The reconstruction is achieved by maximizing depth along the sightlines, a strategy introduced in [20, 21] for template-based reconstruction. Although these methods use the perspective camera model and geometric priors for non-rigid reconstruction, their computational complexity prevents them from reconstructing a large number of points. On the other hand, some recent dense methods using the perspective camera model have shown promising results, but they rely on piecewise rigidity constraints [22, 23] and shape initialization, which may be too constraining for several applications. Furthermore, methods using the perspective camera either rely on known intrinsics or cannot handle significant non-rigidity [28]. To the best of our knowledge, estimation of an unknown focal length has not been investigated in NRSfM for deforming surfaces.

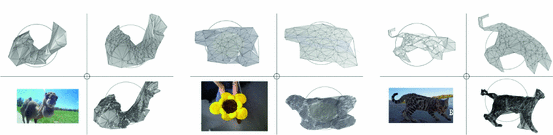

In this paper we address the aforementioned issues with methods based on the convex relaxation of isometry. More precisely, we make the following contributions: (a) a method to ‘upgrade’ a non-rigid reconstruction obtained with incorrect camera intrinsics to the reconstruction for the correct ones, (b) a method to estimate the intrinsics, namely all five entries in the case of SfT and the unknown focal length in the case of template-less NRSfM, (c) an incremental method to add more points to sparse 3D point sets for consistent semi-dense reconstruction, and (d) an online reconstruction method that adds images incrementally. Besides being of considerable practical and theoretical importance, problems (a) and (b) have not previously been addressed in NRSfM of deforming objects. We provide a unified framework that solves problems (a) through (d) using depth maximization and relaxations of the isometry prior. We provide theoretical justification along with practical methods for intrinsics/focal length estimation, as well as densification and online reconstruction strategies. Although these problems are extremely challenging, we obtain compelling results, a few of which are shown in Fig. 1.

1.1 Related Work

We briefly discuss methods based on the isometry prior and the perspective camera model. This combination has been widely explored in template-based methods [20, 21, 24]. In particular, [21] uses inextensibility as a relaxation of the isometry prior in order to formulate non-rigid reconstruction as a convex problem that maximizes the depth point-wise. Several recent NRSfM methods [6, 19, 25,26,27] also use isometry or inextensibility with the perspective camera model. [26, 27] require the correspondence mapping function along with its first- and second-order derivatives, which limits their practical application. [19] improved upon [25] by providing a convex solution to NRSfM. It achieves this by maximizing point-wise depth in all views under the inextensibility cone constraints of [21], while also computing the template geodesics. Very recently, a method [6] improving upon [19] suggested maximizing the sightline lengths rather than the point-wise depth. Both methods have shown that moving the surface away from the camera under inextensibility constraints can be formulated as a convex problem that effectively reconstructs non-rigid as well as rigid objects. A different class of methods, which apply energy minimization to an initial solution, also use the perspective camera model but with a piecewise rigidity prior [22, 23]. However, all of the methods discussed here require a calibrated camera for reconstruction and provide no insight into how they could be extended to an uncalibrated camera. One notable exception is [28]; however, this approach is limited to dynamic scenes featuring a few independently moving objects [29, 30]. Another problem not addressed in [6, 19] is the incremental reconstruction of a large number of points: semi-dense or dense reconstruction is not possible with these methods due to their high computational complexity.

2 Problem Modelling

We pose the NRSfM problem as that of finding point-wise depth in each view. We write the unknown depth as \(\lambda _i^l\) and the known homogeneous image coordinates as \(\mathsf {u}_i^l\), for point i in the l-th image. The set of neighboring points of i is denoted by \(\mathcal {N}(i)\). \(d_{ij}\) represents the template geodesic distance between points i and j, which is unknown in the NRSfM problem and known in the SfT problem. We define the nearest-neighbor graph by connecting each point i to a fixed number of its nearest neighbors [19]. To represent the exact isometric NRSfM problem, we also introduce a geodesic distance function between two 3D points on the surface \(\mathcal {S}\), \(g_\mathcal {S}(x,y): \mathbb {R}^3 \times \mathbb {R}^3 \rightarrow \mathbb {R}\). Given the camera intrinsics \(\mathsf {K}\), the isometric NRSfM problem can be written as:

Problem (1) is non-convex and, in its given form, also intractable. It has been shown that, with various relaxations [6, 19, 25], problem (1) can be solved for a known \(\mathsf {K}\) when different views and deformations are observed. To tackle the NRSfM problem with an unknown focal length, we start from the observation that not all such solutions, i.e. those obtained with different intrinsics, yield isometrically consistent shapes across all views. We formulate our methods in the following sections.
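The neighborhoods \(\mathcal {N}(i)\) of the nearest-neighbor graph can be sketched as follows. This is a minimal illustration, assuming Euclidean distances between the image points of a single reference view and a fixed k; the function names `knn_graph` and `edge_list` are hypothetical.

```python
import numpy as np

def knn_graph(points_2d, k):
    """Return, for every point i, the indices of its k nearest neighbors N(i).

    points_2d: (n, 2) image coordinates in one (assumed reference) view.
    Neighbors are chosen purely from image measurements.
    """
    pts = np.asarray(points_2d, dtype=float)
    diff = pts[:, None, :] - pts[None, :, :]
    d2 = np.einsum("ijk,ijk->ij", diff, diff)   # pairwise squared distances
    np.fill_diagonal(d2, np.inf)                # a point is not its own neighbor
    return np.argsort(d2, axis=1, kind="stable")[:, :k]

def edge_list(nbrs):
    """Undirected edges (i, j), i < j, induced by the neighbor lists."""
    edges = set()
    for i, row in enumerate(nbrs):
        for j in row:
            edges.add((min(i, int(j)), max(i, int(j))))
    return sorted(edges)
```

Each undirected edge is a pair on which an inextensibility (or isometry) constraint would be imposed.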

3 Uncalibrated NRSfM

Given a known object template and a calibrated camera, the NRSfM problem in (1) can be formulated as a convex problem by relaxing the isometry constraint to an inextensibility constraint [21], as below:

We are, however, interested in solving the same problem when both \(d_{ij}\) and \(\mathsf {K}\) are unknown. Unfortunately, this problem is not only non-convex but also unbounded. Therefore, we add two constraints on the variables \(\mathsf {K}\) and \(d_{ij}\) so that problem (2), with unknown \(d_{ij}\) and \(\mathsf {K}\), becomes bounded.

Despite being bounded with the addition of (3), the reconstruction problem is still non-convex. More importantly, maximizing the objective function favors solutions in which \(\mathsf {K}\) is as close as possible to \(\overline{\mathsf {K}}\). Therefore, we instead solve the reconstruction problem (2) with a fixed initial guess \(\mathsf {\hat{K}}\) and seek the upgrade of both the intrinsics and the reconstruction afterwards. Note that fixing the intrinsics makes the problem convex and identical to that of [19].

We now wish to upgrade the solution of (4) such that the upgraded reconstruction correctly describes the deformed object in 3D space. In this work, the upgrade is carried out with a point-wise upgrade equation. In the following, we first derive this equation assuming that the correct focal length is known, and then provide the theory and practical approaches to recover the unknown focal length.

3.1 Upgrade Equation

Let \(\lambda _i^l\) and \(\hat{\lambda }_i^l\) be the depths of the point represented by \(\mathsf {u}_i^l\), obtained from (2) and (4), respectively. The following proposition, which relates \(\hat{\lambda }_i^l\) to \(\lambda _i^l\) for the reconstruction upgrade, is the key ingredient of our work.

Proposition 1

For \(\mathsf {u}_i^l\approx \mathsf {u}_{\mathcal {N}(i)}^l\), \(\hat{\lambda }_i^l\) can be upgraded to \(\lambda _i^l\) with the known \(\mathsf {K}\) using,

Proof

It is sufficient to show that every \(j\in \mathcal {N}(i)\) satisfies \(\left\| \mathsf {\hat{K}}^{-1}(\hat{\lambda }_{i}^l\mathsf {u}_{i}^l - \hat{\lambda }_{j}^l\mathsf {u}_{j}^l)\right\| \approx \left\| \mathsf {K}^{-1}(\lambda _{i}^l\mathsf {u}_{i}^l - \lambda _{j}^l\mathsf {u}_{j}^l)\right\| \). From (5), for any \(\mathsf {u}_i^l\approx \mathsf {u}_{\mathcal {N}(i)}^l\), \(\left\| \mathsf {\hat{K}}^{-1}(\hat{\lambda }_{i}^l\mathsf {u}_{i}^l - \hat{\lambda }_{j}^l\mathsf {u}_{j}^l)\right\| ^2\) can be expressed as,

\(\square \)

Note that the condition \(\mathsf {u}_i^l\approx \mathsf {u}_{\mathcal {N}(i)}^l\) holds for any two sufficiently close neighbors, and such neighbors can be chosen using only the image measurements. More importantly, the assumption \(\mathsf {u}_i^l\approx \mathsf {u}_{\mathcal {N}(i)}^l\) still allows the depths \(\lambda _i^l\) and \(\lambda _{\mathcal {N}(i)}^l\) to differ. This plays a vital role when close neighboring points differ distinctly in depth, either due to perspective or due to high-frequency structural changes. Although (5) is only an approximation of the reconstruction upgrade, we observed the upgraded reconstructions to be accurate in practice. The following remark concerns Proposition 1.
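The displayed form of (5) is not reproduced in this text. The sketch below assumes that the upgrade keeps each point’s distance from the camera center along its sightline, \(\hat{a}_i=\hat{\lambda }_i\|\mathsf {\hat{K}}^{-1}\mathsf {u}_i\|\) (the quantity defined in Sect. 3.2), and re-expresses it as a depth under \(\mathsf {K}\), i.e. \(\lambda _i=\hat{a}_i/\|\mathsf {K}^{-1}\mathsf {u}_i\|\). Under this assumption, two nearby points whose sightlines nearly coincide for both intrinsics keep their mutual distance, which matches the intuition behind Proposition 1. `upgrade_depths` and `points_3d` are hypothetical helper names.

```python
import numpy as np

def upgrade_depths(lam_hat, u, K_hat, K):
    """Assumed form of the upgrade (5).

    Keeps a_i = lam_hat_i * ||K_hat^{-1} u_i|| (distance from the camera
    center along the sightline) and converts it into a depth under K.
    lam_hat: (n,) depths obtained with the guess K_hat.
    u:       (n, 3) homogeneous image points with last coordinate 1.
    """
    rays_hat = np.linalg.solve(K_hat, u.T).T   # K_hat^{-1} u_i
    rays = np.linalg.solve(K, u.T).T           # K^{-1} u_i
    a_hat = lam_hat * np.linalg.norm(rays_hat, axis=1)
    return a_hat / np.linalg.norm(rays, axis=1)

def points_3d(lam, u, K):
    """Back-projection X_i = lam_i * K^{-1} u_i."""
    return lam[:, None] * np.linalg.solve(K, u.T).T
```

The upgrade is a closed-form, per-point rescaling and needs no new optimization. If the camera-to-point distances \(\hat{a}_i\) happen to be exact, upgrading to the true intrinsics recovers the true depths; for a real reconstruction from (4), only local distances are approximately preserved.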

Remark 1

As the guess \(\mathsf {\hat{K}}\) on the intrinsics tends to the real intrinsics \(\mathsf {K}\), the upgrade equation (5) holds with exact equality even when \(\mathsf {u}_i^l\not \approx \mathsf {u}_{\mathcal {N}(i)}^l\). In other words,

3.2 Upgrade Strategies

The upgrade equation of Proposition 1 assumes that the exact intrinsics \(\mathsf {K}\) are known. However, in uncalibrated NRSfM, \(\mathsf {K}\) is unknown. While the principal point can be assumed to lie at the image center for most cameras [31], nothing can be said a priori about the focal length. We now present strategies to estimate \(\mathsf {K}\) in two scenarios: known and unknown shape template. We rely on the fact that isometric deformation largely preserves local rigidity. This is partly reflected in the reconstruction obtained from (4). However, due to changes in perspective and the extension of points along incorrect sightlines, reconstructions obtained with incorrect intrinsics are much less likely to remain isometric across different views. Conversely, an upgrade towards the correct intrinsics produces reconstructions that better satisfy isometry. This is also supported by the results in Sect. 6. There are various ways to use the isometry of the reconstructed surfaces to determine the correct intrinsics. A very simple one exploits the fact that, for sufficiently dense reconstructed points, the correct intrinsics must preserve local Euclidean distances. For \(\hat{a}_{i}=\hat{\lambda }_i\left\| \mathsf {\hat{K}}^{-1}\mathsf {u}_i\right\| \), the Euclidean distance between two upgraded neighboring 3D points in any view, as a function of the intrinsics, can be expressed as,

We now present techniques to estimate \(\mathsf {K}\) when the shape template is known (SfT), followed by a method to estimate the focal length in the template-less case of NRSfM.

Template-Based Calibration. For simplicity, we present the calibration theory using only one image, which is also sufficient for reconstruction when the shape template is known [21]. Recall that for SfT, the \(d_{ij}\) in (4) are known during the reconstruction process. Given the known template distances \(d_{ij}\) and the Euclidean distances \(\hat{d}_{ij}(\mathsf {K})\) estimated after the reconstruction upgrade, the intrinsics \(\mathsf {K}\) can be estimated by minimizing,

Alternatively, one can also derive polynomial equations on the entries of the so-called Image of the Absolute Conic (IAC), defined as \(\mathsf {\Omega }=\mathsf {K^{-\intercal }}\mathsf {K^{-1}}\).

Proposition 2

As long as the rigidity between any pair \(\{\mathsf {u}_i, \mathsf {u}_j\}\) is maintained, either for any \(\mathsf {\hat{K}}\) and \(\mathsf {u}_i\approx \mathsf {u}_j\) or for any pair \(\{\mathsf {u}_i, \mathsf {u}_j\}\) as \(\mathsf {\hat{K}}\rightarrow \mathsf {K}\), the IAC can be approximated by solving,

for sufficiently many pairs, where,

We provide the proof in the supplementary material.

Note that (10) is a degree-2 polynomial in the entries of \(\mathsf {\Omega }\). Since \(\mathsf {\Omega }\) has 5 degrees of freedom, it can be estimated numerically from 5 pairs of image points.

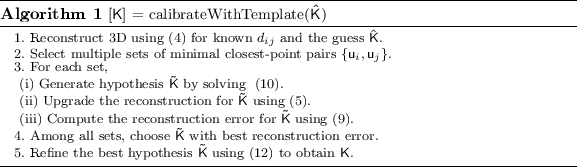

The core idea of our template-based calibration consists of three steps: (i) generation of a fixed number of hypotheses, (ii) hypothesis validation using the quality of the upgraded reconstruction, and (iii) refinement of the best hypothesis.

Hypothesis Generation: Given the template-based uncalibrated reconstruction from (4), we generate a set of hypotheses for the camera intrinsics from randomly selected minimal sets of closest-point pairs. For every minimal set, we solve (10) for \(\mathsf {\Omega }\) to obtain a hypothesis. The camera intrinsics \(\mathsf {K}\) are then recovered by Cholesky decomposition of \(\mathsf {\Omega }\).
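Recovering \(\mathsf {K}\) from a hypothesis \(\mathsf {\Omega }=\mathsf {K^{-\intercal }}\mathsf {K^{-1}}\) can be sketched with NumPy’s Cholesky factorization. The sketch assumes \(\mathsf {\Omega }\) is known only up to a (possibly negative) scale and normalizes \(\mathsf {K}\) so that its bottom-right entry is 1. Discarding hypotheses whose \(\mathsf {\Omega }\) is not positive definite (NumPy then raises `LinAlgError`) is our assumption, not a step stated in the text; the function names are hypothetical.

```python
import numpy as np

def iac_from_K(K):
    """Image of the Absolute Conic, Omega = K^{-T} K^{-1}."""
    Kinv = np.linalg.inv(K)
    return Kinv.T @ Kinv

def K_from_iac(omega):
    """Recover upper-triangular K from Omega via Cholesky.

    numpy returns lower-triangular L with Omega = L L^T. Because K^{-1} is
    upper triangular with a positive diagonal, L = K^{-T} (up to scale),
    hence K = (L^T)^{-1}, normalized so that K[2, 2] = 1.
    """
    omega = 0.5 * (omega + omega.T)      # symmetrize against round-off
    if omega[0, 0] < 0:                  # undo an arbitrary negative scale
        omega = -omega
    L = np.linalg.cholesky(omega)        # raises LinAlgError if not PD
    K = np.linalg.inv(L.T)
    return K / K[2, 2]
```

Because the scale of \(\mathsf {\Omega }\) cancels in the final normalization, any nonzero multiple of a valid IAC yields the same \(\mathsf {K}\).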

Hypothesis Validation: Each hypothesis is validated by computing its 3D reconstruction error. To do so, we first upgrade the initial reconstruction with the upgrade equation (5) for the current hypothesis. The reconstruction error is then computed using (9). The hypothesis that results in the minimum reconstruction error is chosen for further refinement.
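Hypothesis validation can be sketched as follows. The sketch assumes the same distance-preserving form of the upgrade as discussed for Proposition 1 (each point moves along its new sightline while keeping its distance \(\hat{a}_i\) from the camera center), and assumes that the error (9) is the mean squared difference between the upgraded neighbor distances \(\hat{d}_{ij}(\mathsf {K})\) and the template distances \(d_{ij}\). Both forms and the names `phi_T` and `select_hypothesis` are illustrative.

```python
import numpy as np

def upgraded_points(a_hat, u, K):
    """Assumed upgrade: X_i = a_i * r_i(K), r_i the unit sightline of u_i."""
    rays = np.linalg.solve(K, u.T).T
    return a_hat[:, None] * rays / np.linalg.norm(rays, axis=1, keepdims=True)

def phi_T(K, a_hat, u, pairs, d_template):
    """Hypothetical template error: mean squared gap between upgraded
    neighbor distances d_hat_ij(K) and known template distances d_ij."""
    X = upgraded_points(a_hat, u, K)
    d_hat = np.linalg.norm(X[pairs[:, 0]] - X[pairs[:, 1]], axis=1)
    return float(np.mean((d_hat - d_template) ** 2))

def select_hypothesis(hypotheses, a_hat, u, pairs, d_template):
    """Index and intrinsics of the hypothesis with the smallest error."""
    errors = [phi_T(K, a_hat, u, pairs, d_template) for K in hypotheses]
    best = int(np.argmin(errors))
    return best, hypotheses[best]
```

On synthetic data where the \(\hat{a}_i\) are exact camera-to-point distances, the ground-truth intrinsics give zero error and are therefore selected among the hypotheses.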

Intrinsics Refinement: Starting from the best hypothesis, we refine the intrinsics by minimizing the following objective function:

where \(k_{(i,j)}\) is the entry in the \(i^{th}\) row and \(j^{th}\) column of the normalized intrinsic matrix \(\mathsf {K}\). Note that we regularize the 3D reconstruction error \(\varPhi _T(\mathsf {K})\) with the expected structure of \(\mathsf {K}\) (i.e. principal point close to the center and unit aspect ratio). Such a regularization term is often the main objective of existing autocalibration methods [31, 32]. The objective \(\mathcal {E}(\mathsf {K})\) can be minimized efficiently with local iterative refinement methods.

Now, we summarize our calibration method in Algorithm 1.

Template-Less Calibration. As self-calibration with an unknown template is extremely challenging, we simplify it by assuming that the principal point is at the image center and that the two focal lengths are equal. We further assume that the intrinsics are constant across views. We then measure the consistency of the upgraded local Euclidean distances, defined by (8), across different views. More precisely, we estimate the focal length in \(\mathsf {K}\) by minimizing the following objective function,

In principle, it is also possible to derive polynomials in \(\mathsf {\Omega }\), analogous to (10), by eliminating the unknown variable \(d_{ij}\) from the two equations given by two views of the same pair. Unfortunately, the equation derived in this manner is not easily tractable. Alternatively, one can attempt to solve the polynomials without eliminating \(d_{ij}\), i.e. in both \(\mathsf {\Omega }\) and \(d_{ij}\). However, for practical reasons (Footnote 1), we design a method assuming that only one entry of \(\mathsf {\Omega }\), corresponding to the focal length, is unknown. Under this assumption, we show in the supplementary material that a univariate polynomial of degree 4, analogous to (10), can also be derived.

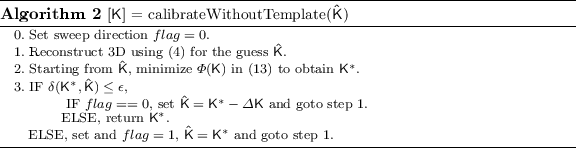

In this paper, we avoid generating hypotheses for the focal length, since it is not necessary. Unlike template-based calibration, we address template-less calibration iteratively in two steps: (i) focal length refinement and (ii) focal length validation. Henceforth, for template-less calibration, we slightly abuse notation by using \(\mathsf {K}\) also for intrinsics with only the focal length unknown, unless mentioned otherwise.

Focal Length Refinement: Given an initial guess of the focal length, we refine it by minimizing the objective function \(\varPhi (\mathsf {K})\) of (13) (optionally over the full intrinsics). This refinement yields a \(\mathsf {K}\) that results in better isometric consistency of the reconstructions across views.

Focal Length Validation: The main problem of template-less calibration is to assess the validity of a given pair of intrinsics and reconstruction. In other words, given the reconstructions for all possible focal lengths, it is not trivial to identify the correct one. In particular, when reconstructing with overestimated intrinsics under MDH, the overestimated \(\mathsf {K}\) allows the average depth to dominate the objective while isometry is preserved. This usually leads to a flat, small-scale reconstruction [17]. Therefore, an overestimated guess \(\mathsf {\hat{K}}\) favors its own reconstruction over any upgraded one when minimizing \(\varPhi (\mathsf {K})\). Based on this observation, we seek the isometrically consistent reconstruction with the smallest focal length, which works very well in practice. An algebraic analysis of this reasoning is provided in the supplementary material.

To search for the focal length, we use a sweeping procedure. If the reconstruction with the given focal length does not favor any upgrade, we sweep towards lower focal lengths with a predefined step size until the upgrade becomes favorable. If, on the other hand, the reconstruction favors the upgrade, we follow the suggested focal length update until no further upgrade is suggested. The sought focal length is the one below which the upgrade is favorable and above which it is not. Let \(\delta (\mathsf {K}_1,\mathsf {K}_2)\) be the gap between the focal lengths of two intrinsics \(\mathsf {K}_1\) and \(\mathsf {K}_2\), and let \(\varDelta \mathsf {K}\) be a small step which, when added to an intrinsic matrix \(\mathsf {K}\), increases its focal length by that step size. Our template-less calibration method is summarized in Algorithm 2.
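The sweeping logic can be sketched independently of the reconstruction itself. Here `suggest(f)` is a hypothetical callable that reconstructs with focal length f, minimizes \(\varPhi \) locally and returns the focal length it suggests; an upgrade counts as “favored” when the suggestion moves by more than a tolerance. This is one reading of the procedure described above, not a transcription of Algorithm 2.

```python
def sweep_focal(f0, suggest, step, tol=1e-6, max_iter=1000):
    """Find the focal length below which an upgrade is favored and at
    which it is not (hypothetical sketch of the sweeping procedure)."""
    f = f0
    for _ in range(max_iter):
        f_s = suggest(f)
        if abs(f_s - f) > tol:        # reconstruction at f favors an upgrade
            f = f_s                   # follow the suggested update
            continue
        f_low = f - step              # no upgrade favored: probe one step lower
        if f_low <= 0:
            return f
        if abs(suggest(f_low) - f_low) > tol:
            return f                  # boundary found: favored below f, not at f
        f = f_low
    return f
```

For instance, a toy `suggest` that returns f unchanged for f ≥ 500 and 500 otherwise mimics the behavior described under Focal Length Validation; the sweep then stops at 500 whether it starts above or below it.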

We show in the experiments that Algorithm 2 converges in very few iterations. In every iteration, besides the reconstruction itself, the major computation is the minimization of \(\varPhi (\mathsf {K})\). Recall that \(\varPhi (\mathsf {K})\) is minimized iteratively with a local method, and every update during the local search requires a reconstruction to evaluate \(\varPhi (\mathsf {K})\). Thanks to the upgrade equation, \(\varPhi (\mathsf {K})\) can be computed instantly, without going through the computationally expensive reconstruction process.
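To illustrate why \(\varPhi (\mathsf {K})\) is cheap to evaluate, the sketch below computes an isometric-consistency cost purely from cached per-view quantities \(\hat{a}_i^l\) and image points, so changing \(\mathsf {K}\) never triggers a new SOCP. The exact form of (13) is not given in this text: we assume per-view normalization of the upgraded neighbor distances (to fix the scale, in the spirit of Sect. 3.3) followed by the summed variance of each pair’s distance across views. The upgrade form is the distance-preserving one assumed for Proposition 1, and the function names are hypothetical.

```python
import numpy as np

def upgraded_distances(a_hat, u, K, pairs):
    """Neighbor distances after the assumed upgrade X_i = a_i * r_i(K)."""
    rays = np.linalg.solve(K, u.T).T
    X = a_hat[:, None] * rays / np.linalg.norm(rays, axis=1, keepdims=True)
    return np.linalg.norm(X[pairs[:, 0]] - X[pairs[:, 1]], axis=1)

def phi(K, a_hat_views, u_views, pairs):
    """Hypothetical isometric-consistency cost across views.

    a_hat_views[l], u_views[l]: cached distances and image points of view l.
    Distances are rescaled per view to sum to one, then the variance of
    each pair's distance across views is summed.
    """
    D = np.stack([upgraded_distances(a, u, K, pairs)
                  for a, u in zip(a_hat_views, u_views)])   # (views, pairs)
    D = D / D.sum(axis=1, keepdims=True)
    return float(np.sum(np.var(D, axis=0)))
```

For a rigidly moving object imaged with the true intrinsics, this cost vanishes, while a wrong focal length distorts each view differently and yields a positive cost.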

3.3 Intrinsics Recovery in Practice

Although our reconstruction method makes the inextensibility assumption, the upgrade strategies use a piecewise rigidity constraint. While piecewise rigidity mostly holds for inextensible shapes, it can be problematic in certain cases, for example when the reconstructed points are too sparse. Therefore, special care needs to be taken for robust calibration.

Distance Normalization and Geodesics: Recall that the upgrade equation (5) is an approximation that assumes either that neighboring image points are sufficiently close to each other or that a good guess \(\hat{\mathsf {K}}\) is provided. When neither condition is satisfied, the intrinsics obtained from energy minimization may not be sufficiently accurate. While a larger focal length may reduce the residual error, it also shrinks individual distances, creating disparities in the reconstruction scale of different views. Therefore, during each refinement iteration, we fix the scale by enforcing,

Another important practical aspect is the use of geodesics \(\hat{g}^l(i,j)\) instead of \(\hat{d}^l_{ij}\) in Eq. (13) or Eq. (9). When the scene points are sparse, using geodesics instead of local Euclidean distances may be necessary. We therefore use geodesics computed with Dijkstra’s algorithm [33] instead of local Euclidean distances for stability.
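Geodesics on a reconstructed point set can be approximated by shortest paths in a k-nearest-neighbor graph over the 3D points, computed with Dijkstra’s algorithm. This is a minimal sketch using Python’s `heapq`; the choice of k and of a symmetrized k-NN graph, as well as the names `knn_edges` and `geodesics_from`, are assumptions for illustration.

```python
import heapq
import numpy as np

def knn_edges(X, k):
    """Symmetric k-nearest-neighbor graph on 3D points: {i: {j: w_ij}}."""
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(D, np.inf)
    graph = {i: {} for i in range(len(X))}
    for i in range(len(X)):
        for j in np.argsort(D[i], kind="stable")[:k]:
            j = int(j)
            graph[i][j] = graph[j][i] = float(D[i, j])
    return graph

def geodesics_from(graph, source):
    """Dijkstra's algorithm: shortest-path lengths from `source`."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, i = heapq.heappop(heap)
        if d > dist.get(i, np.inf):
            continue                     # stale heap entry
        for j, w in graph[i].items():
            nd = d + w
            if nd < dist.get(j, np.inf):
                dist[j] = nd
                heapq.heappush(heap, (nd, j))
    return dist
```

On an L-shaped strip of points, the geodesic between the two ends follows the bend and is longer than the straight-line Euclidean distance, which is exactly the difference that matters for sparse, curved surfaces.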

Re-reconstruction and Re-calibration: For better calibration accuracy, especially when the initial guess \(\mathsf {\hat{K}}\) is highly inaccurate, we iterate re-reconstruction and re-calibration, starting from the newly estimated intrinsics, until convergence. This loop is already part of Algorithm 2, and in our implementation we also wrap it around Algorithm 1. In practice, only a few such iterations are needed to converge, even when the initial guess of the intrinsics is far off.

4 Incremental Semi-dense NRSfM

The SOCP problem (4) has a time complexity of \(O(n^3)\). Therefore, in practice only a sparse set of points can be reconstructed in this manner. Here, we present a method to iteratively densify the initial sparse reconstruction, followed by a strategy for adding new views/cameras online. Besides its obvious benefits, incremental reconstruction is also necessary in our context (a) to allow the selection of the closest image point pairs for camera calibration, and (b) to compute 3D geodesic distances for single-view reconstruction.

4.1 Adding New Points

Let \(\mathcal {P}\) represent the set of sparse points reconstructed using (4). We would like to reconstruct a set of new points \(\mathcal {Q}\), with \(\mathcal {Q}\cap \mathcal {P}=\emptyset \) and depths \(\zeta ^l_i\), consistently with the existing reconstruction. This can be achieved by solving the following convex optimization problem,

where \(\varLambda =\sum _{l} \sum _{i\in \mathcal {P}} \lambda _{i}^l\), \(\mathcal {N}_p(i)=\mathcal {N}(i)\cap \mathcal {P}\), and \({\mathcal {N}_q(i)=\mathcal {N}(i)\cap \mathcal {Q}}\). The scalars \(\alpha \) and \(1-\alpha \) weight the contributions of the initial reconstruction \(\mathcal {P}\) and the new reconstruction \(\mathcal {Q}\), respectively. Note that the newly reconstructed points respect the inextensibility constraints not only among themselves but also with respect to the initial reconstruction, which maintains consistency between \(\mathcal {P}\) and \(\mathcal {Q}\). The incremental dense reconstruction process iteratively adds disjoint sets \(\mathcal {Q}_1,\mathcal {Q}_2,\ldots ,\mathcal {Q}_r\) to the initial reconstruction \(\mathcal {P}\), where \(\mathcal {P}\) encodes the overall shape and the added sets represent the details.
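The bookkeeping of the incremental scheme, i.e. choosing the initial set \(\mathcal {P}\), splitting the remaining points into disjoint batches \(\mathcal {Q}_1,\ldots ,\mathcal {Q}_r\), and splitting each neighborhood into \(\mathcal {N}_p(i)\) and \(\mathcal {N}_q(i)\), can be sketched as below. The SOCP (15) itself is not shown. The random initial subset mirrors the size \(\max \{150, \frac{N}{4}\}\) used in Sect. 6.2, whereas the batch size and the helper names are hypothetical.

```python
import numpy as np

def split_points(n, n_init, batch_size, seed=0):
    """Randomly choose the initial sparse set P and disjoint batches Q_1..Q_r."""
    order = np.random.default_rng(seed).permutation(n)
    P = np.sort(order[:n_init])
    rest = order[n_init:]
    Qs = [np.sort(rest[s:s + batch_size]) for s in range(0, len(rest), batch_size)]
    return P, Qs

def split_neighbors(nbrs_i, P_set, Q_set):
    """N_p(i) = N(i) ∩ P and N_q(i) = N(i) ∩ Q for a single point i."""
    Np = [j for j in nbrs_i if j in P_set]
    Nq = [j for j in nbrs_i if j in Q_set]
    return Np, Nq
```

In such a loop, each solved batch would presumably be merged into \(\mathcal {P}\) before the next batch is processed, so later batches are constrained by all earlier reconstructions.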

4.2 Adding New Cameras

Adding a new camera to an NRSfM reconstruction is fundamentally a template-based reconstruction problem. If the camera is calibrated, the reconstruction can be obtained directly from (2). In the uncalibrated case, the camera can first be calibrated using (10), and the reconstruction from (4) then upgraded using (5). Note that the accurate template geodesic distances \(d_{ij}\) required for template-based reconstruction can only be computed when the reconstruction is dense enough. This requirement is met by the proposed incremental reconstruction method.

5 Discussion

Initial Guess \(\mathsf {\hat{K}}\): In all our experiments, we choose the initial guess \(\mathsf {\hat{K}}\) by setting both focal lengths to half the mean image size and the principal point to the image center.
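A sketch of this initialization, assuming that “mean image size” means the mean of width and height and that the image center lies at (width/2, height/2) in pixel coordinates; `initial_intrinsics` is a hypothetical name.

```python
import numpy as np

def initial_intrinsics(width, height):
    """Initial guess K_hat: both focal lengths equal half the mean image
    size, principal point at the (assumed) image center."""
    f = 0.5 * (width + height) / 2.0
    return np.array([[f, 0.0, width / 2.0],
                     [0.0, f, height / 2.0],
                     [0.0, 0.0, 1.0]])
```

For a 640 × 480 image this gives a focal length of 280 pixels and a principal point at (320, 240).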

Missing Features: Feature points may be missing from some images due to occlusion or matching failure. This problem can be addressed during reconstruction by discarding all the variables corresponding to missing points together with all the inextensible constraints involving them as done in [19, 25].

Reconstruction Consistency: As an alternative to (15), one could independently reconstruct two overlapping sets \(\mathcal {P}\) and \(\mathcal {Q}\) with \(\mathcal {P}\cap \mathcal {Q}=\mathcal {R}\), and then register them using the shared points \(\mathcal {R}\). However, this is not only computationally inefficient due to the overlap, but also geometrically inconsistent.

6 Experimental Results

We conduct extensive experiments in order to validate the presented theory and to evaluate the performance, run time and practicality of the proposed methods.

Datasets. We first briefly describe the datasets we use to analyze our algorithms.

KINECT Paper. This VGA-resolution image sequence shows a textured paper deforming smoothly [34]. The tracks contain about 1500 semi-dense but noisy points.

Hulk & T-Shirt. These datasets contain a comic book cover in 21 different deformations and a textured T-shirt in 10 different deformations [35], in high-resolution images. Although the number of points is low (122 and 85, respectively), the tracks have very little noise, and we therefore obtain a very accurate auto-calibration.

Flag. This semi-synthetic dataset is created from mocap recordings of deforming cloth [36]. We generate 250 points in 30 views using a virtual 640 \(\times \) 480 perspective camera.

Newspaper. This sequence (Footnote 2) shows the deformation and tearing of a double-page newspaper, recorded with a KINECT in HD resolution [19].

Hand. The Hand dataset [19] features medium-resolution images. Dense tracking [37] of image points yields up to 1500 tracks in 88 views. The dataset provides ground-truth 3D for the first and the last image of the sequence.

Minion & Sunflower. These sequences are recorded with a static Kinect sensor [38]. Minion contains a stuffed animal undergoing folding and squeezing deformations, whereas Sunflower features only small translations w.r.t. the camera. We incrementally reconstruct more than 10,000 points for Minion and 5,000 for Sunflower, as shown in Fig. 1. We are able to reconstruct the global deformation and mid-level details such as the glasses of Minion. Unfortunately, due to failures of the optical flow tracking, we fail to reconstruct homogeneous areas and fine details. In Sunflower we capture the deformation of the outer leaves, whereas finer details in the center of the blossom are not recovered due to insufficient change in viewpoint.

Camel (Footnote 3) & Kitten (Footnote 4). We took two sequences from YouTube videos to demonstrate incremental semi-dense NRSfM from uncalibrated cameras. The camel turns its head towards the moving camera, providing enough motion to faithfully reconstruct the 3D motion of the animal. Fig. 1 shows the 3D structure of more than 3,000 points in one of the 61 reconstructed views. In the Kitten sequence (18,000 points in each of 36 views), a cat performs both articulated and deforming motion with its body and tail. Again, state-of-the-art optical flow methods struggle to maintain stable point tracks, especially on the head. Nevertheless, our method captures the general motion to a very good extent. On all of the above datasets, DLH fails to recover the correct shape, while MaxRig cannot reconstruct the shape faithfully because it cannot handle enough points.

Cap. This dataset contains wide-baseline views of a cap in two different deformations [18]. The 3D template of the undeformed cap was obtained with an SfM pipeline from the images of the first camera. The second camera is then calibrated using our template-based method.

6.1 Camera Calibration from a Non-rigid Scene

To measure the quality of our calibration results, we report the 3D root mean square error (RMSE) as well as the relative focal length and principal point estimation errors. Furthermore, we provide the number of iterations and the corresponding run times in Table 1.
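For reference, the two simplest metrics can be computed as below. The sketch assumes that the 3D RMSE is computed after a least-squares scale alignment (NRSfM reconstructions are defined only up to scale) and that the relative focal length error is \(|f-f_{GT}|/f_{GT}\); the text does not spell out either definition, and the principal point error is omitted. Both function names are hypothetical.

```python
import numpy as np

def rmse_3d(X_est, X_gt, align_scale=True):
    """3D RMSE; optionally first apply the scale s minimizing
    ||s * X_est - X_gt||^2 (assumed alignment step)."""
    if align_scale:
        s = np.sum(X_est * X_gt) / np.sum(X_est * X_est)
        X_est = s * X_est
    return float(np.sqrt(np.mean(np.sum((X_est - X_gt) ** 2, axis=1))))

def relative_focal_error(f_est, f_gt):
    """Relative focal length error |f_est - f_gt| / f_gt."""
    return abs(f_est - f_gt) / f_gt
```

With the scale alignment, a reconstruction that differs from the ground truth only by a global scale has zero RMSE, which is the behavior one wants for an up-to-scale method.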

Template-Based Camera Calibration. In the first part of Algorithm 1, we generate hypotheses for \(\mathsf {K}\) and choose the one with the best isometric match to the template. We perform experiments on the KINECT Paper, Hulk and Flag datasets and report the results in Table 1. We observe a consistent improvement in reconstruction accuracy with the estimated intrinsics. The second part of Algorithm 1 involves gradient-based refinement of the intrinsics by minimizing Eq. (12). To analyze this part, we conduct two experiments. First, we perform refinement on the initially estimated intrinsics \(f_{\text {poly}}\). Here, the refined intrinsics consistently improve the reconstruction errors. On the Hulk and Flag datasets, we also obtain a better estimate of the focal length. On KINECT Paper, however, the focal length estimate deteriorates while the reconstruction accuracy improves, most probably due to the noisy tracks in the sequence. Thanks to the effective regularization, the error in the principal point is consistently low. In the second experiment, we gauge the robustness of our refinement method. To this end, we simulate initial intrinsics by adding \({\pm }20\%\) uniform noise independently to each entry of \(\mathsf {K}_{GT}\), and report the reconstruction errors and refined intrinsics in Table 2. We compare to Bartoli et al. [18] on the Cap dataset using the numbers reported in their paper, since the method itself is non-trivial to implement. We observed an error \(E_{\text {f}}\) of about 13% with our method, compared to 3.8%–7.3% reported by [18]. The somewhat higher error on the Cap dataset can be partly attributed to the repeating texture, which makes our image matches non-ideal. Overall, we observe a consistent improvement in almost all metrics, validating the robustness of the method and the assumptions it is based on.

Template-Less Camera Calibration. To visualize the dynamics of Algorithm 2, we plot the error in isometry \(\varPhi (\mathsf {K})\) over the focal length for each iteration on the Hulk dataset in Fig. 3(a). Typically, fewer than 10 iterations are needed for the method to converge. As hypothesized above, Fig. 3(b) empirically verifies that the termination criterion of our sweeping strategy can be obtained by thresholding the focal length change \(\delta (\mathsf {K}^*,\mathsf {\hat{K}})\). As reported in Table 1, our method consistently recovers an accurate estimate of the intrinsics. Moreover, the better reconstruction accuracy obtained on almost all datasets validates our approach of using the isometric consistency \(\varPhi (\mathsf {K})\).
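The termination logic of the sweep can be illustrated with a toy sketch. Here `isometry_error(f, f_current)` is a hypothetical stand-in for \(\varPhi (\mathsf {K})\) that scores a candidate focal length using the reconstruction obtained at the current estimate \(\hat{f}\). The candidate window \([0.5\hat{f}, 2\hat{f}]\), the number of samples, and the 1% tolerance on the relative change \(\delta = |f^* - \hat{f}|/\hat{f}\) are illustrative assumptions, not values taken from Algorithm 2. The surrogate `toy_isometry_error` is purely synthetic: its minimum moves halfway towards a focal length of 800 at every iteration.

```python
import numpy as np


def sweep_focal_length(isometry_error, f_init, tol=0.01, max_iter=20,
                       window=(0.5, 2.0), n_samples=401):
    """Repeat a focal length sweep until the relative change drops below tol.

    isometry_error(f, f_current) is a hypothetical stand-in for Phi(K): it
    scores candidate focal length f using the reconstruction at f_current.
    Returns the final estimate and a list of (f_star, delta) per iteration.
    """
    f_hat = float(f_init)
    history = []
    for _ in range(max_iter):
        # Sample candidates in a multiplicative window around the current estimate.
        candidates = f_hat * np.linspace(window[0], window[1], n_samples)
        errors = np.array([isometry_error(f, f_hat) for f in candidates])
        f_star = float(candidates[np.argmin(errors)])
        delta = abs(f_star - f_hat) / f_hat  # relative focal length change
        history.append((f_star, delta))
        f_hat = f_star
        if delta < tol:  # termination: thresholded focal length change
            break
    return f_hat, history


def toy_isometry_error(f, f_current, f_true=800.0):
    """Synthetic surrogate whose minimum lies halfway between f_current and f_true."""
    target = f_current + 0.5 * (f_true - f_current)
    return (f - target) ** 2


if __name__ == "__main__":
    f_est, history = sweep_focal_length(toy_isometry_error, f_init=400.0)
    for it, (f_star, delta) in enumerate(history, start=1):
        print(f"iteration {it}: f* = {f_star:.1f}, delta = {delta:.4f}")
```

Started from 400, the toy sweep stops after a handful of iterations close to 800. In the actual method, each outer iteration would require a new reconstruction at \(\hat{f}\), which is why a cheap termination test on \(\delta\) matters.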

6.2 Incremental Reconstruction

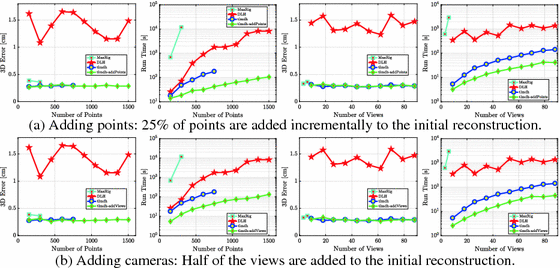

We first present experiments on the dense Hand dataset in Fig. 2. We compare to two state-of-the-art NRSfM approaches, MaxRig [6] and DLH [7], as well as to the batch version of our approach, tlmdh [19]. In the first row, we plot the performance of tlmdh-addPoints: we start by reconstructing a random subset of \(\max \{150, \frac{N}{4}\}\) points and incrementally add the remaining points in subsequent iterations according to Eq. (15). While achieving reconstruction accuracy on par with tlmdh, this variant has a remarkable run time advantage over all other methods. MaxRig shows good accuracy but suffers from serious run time and memory problems. DLH, on the other hand, is slow and exhibits poor accuracy on this dataset due to perspective effects and non-linear deformations. The second row of Fig. 2 shows the same experimental setup with tlmdh-addViews. Here, we reconstruct all points at once, but incrementally add the remaining 50% of the views to the reconstruction of the first half. To this end, we compute the template from the first reconstruction and employ SfT. The graphs clearly show that tlmdh-addViews exhibits a favorable run time complexity without impairing the reconstruction accuracy. More results are provided in the supplementary material. Furthermore, we perform extensive experiments on a variety of additional datasets and compare in Table 3 with the reconstructions of p-isomet [27], p-isolh [35], DLH [7] and o-kfc [39], as obtained from [40]. Overall, we observe a significant advantage in accuracy and run time, in particular compared to the best-performing baseline, tlmdh.
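The schedules of the two incremental variants can be sketched as index bookkeeping. The initial subset size \(\max \{150, \frac{N}{4}\}\) and the 50% view split follow the text; \(N/4\) is rounded down here. The fixed batch size for the remaining points is a hypothetical stand-in for Eq. (15), which decides which points are added in each iteration. The reconstruction steps themselves (SOCP, SfT) are outside the scope of this sketch.

```python
import numpy as np


def point_schedule(n_points, batch_size, rng=None):
    """Index schedule for adding points incrementally (addPoints-style).

    The first batch is a random subset of max(150, N // 4) points; the remaining
    points follow in fixed-size random batches. The fixed batch size is a
    hypothetical stand-in for the selection rule of Eq. (15).
    """
    rng = np.random.default_rng() if rng is None else rng
    order = rng.permutation(n_points)
    n_init = min(n_points, max(150, n_points // 4))
    batches = [order[:n_init]]
    rest = order[n_init:]
    for start in range(0, len(rest), batch_size):
        batches.append(rest[start:start + batch_size])
    return batches


def view_schedule(n_views):
    """Split view indices into a first half (batch reconstruction) and the
    remaining views, which are added afterwards (e.g. by SfT against the
    template computed from the first reconstruction)."""
    n_first = (n_views + 1) // 2  # rounding up for odd counts is an arbitrary choice
    return list(range(n_first)), list(range(n_first, n_views))


if __name__ == "__main__":
    batches = point_schedule(1000, batch_size=200, rng=np.random.default_rng(0))
    print([len(b) for b in batches])
    print(view_schedule(10))
```

For \(N = 1000\) and a batch size of 200, the schedule yields an initial batch of 250 points followed by batches of 200, 200, 200 and 150 points.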

7 Conclusions

In this paper, we formulated a method that addresses the unknown focal length in NRSfM and the unknown intrinsics in SfT. To cope with the computational complexity of convex NRSfM, we formulated an incremental framework that obtains semi-dense reconstructions and reconstructs new views. We developed our theory based on the surface isometry prior in the context of the perspective camera, and we developed and verified our approach for intrinsics/focal-length recovery for both template-based and template-less non-rigid reconstruction. Essential to our method is a novel upgrade equation that analytically relates reconstructions for different intrinsics. We performed extensive quantitative and qualitative analyses of our methods on different datasets, which show that the proposed methods perform well on these very challenging problems.

Notes

- 1.

For most cameras, it is safe to assume that the intrinsics have zero skew, unit aspect ratio, and a principal point close to the image center.

- 2.

The dataset was provided by the authors.

- 3.

- 4.

References

Longuet-Higgins, H.: A computer algorithm for reconstructing a scene from two projections. Nature 293, 133–135 (1981)

Nistér, D.: An efficient solution to the five-point relative pose problem. IEEE Trans. Pattern Anal. Mach. Intell. 26(6), 756–777 (2004)

Hartley, R.I., Zisserman, A.: Multiple View Geometry in Computer Vision, 2nd edn. Cambridge University Press, New York (2004). ISBN 0521540518

Agisoft PhotoScan: Agisoft PhotoScan User Manual Professional Edition, Version 1.2 (2017)

Autodesk ReCap: ReCap 360 - Advanced Workflows (2015)

Ji, P., Li, H., Dai, Y., Reid, I.: “Maximizing Rigidity” revisited: a convex programming approach for generic 3D shape reconstruction from multiple perspective views. In: ICCV (2017)

Dai, Y., Li, H., He, M.: A simple prior-free method for non-rigid structure-from-motion factorization. In: CVPR (2012)

Bregler, C., Hertzmann, A., Biermann, H.: Recovering non-rigid 3D shape from image streams. In: CVPR (2000)

Torresani, L., Hertzmann, A., Bregler, C.: Nonrigid structure-from-motion: estimating shape and motion with hierarchical priors. IEEE Trans. Pattern Anal. Mach. Intell. 30(5), 878–892 (2008)

Del Bue, A.: A factorization approach to structure from motion with shape priors. In: CVPR (2008)

Garg, R., Roussos, A., Agapito, L.: Dense variational reconstruction of non-rigid surfaces from monocular video. In: CVPR (2013)

Fayad, J., Agapito, L., Del Bue, A.: Piecewise quadratic reconstruction of non-rigid surfaces from monocular sequences. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6314, pp. 297–310. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15561-1_22

Agudo, A., Montiel, J., Agapito, L., Calvo, B.: Online dense non-rigid 3D shape and camera motion recovery. In: BMVC (2014)

Taylor, J., Jepson, A.D., Kutulakos, K.N.: Non-rigid structure from locally-rigid motion. In: CVPR (2010)

Bartoli, A., Pizarro, D., Collins, T.: A robust analytical solution to isometric shape-from-template with focal length calibration. In: ICCV (2013)

Ngo, T.D., Östlund, J.O., Fua, P.: Template-based monocular 3D shape recovery using Laplacian meshes. IEEE Trans. Pattern Anal. Mach. Intell. 38(1), 172–187 (2016)

Chhatkuli, A., Pizarro, D., Bartoli, A., Collins, T.: A stable analytical framework for isometric shape-from-template by surface integration. IEEE Trans. Pattern Anal. Mach. Intell. 39(5), 833–850 (2017)

Bartoli, A., Collins, T.: Template-based isometric deformable 3D reconstruction with sampling-based focal length self-calibration. In: CVPR (2013)

Chhatkuli, A., Pizarro, D., Collins, T., Bartoli, A.: Inextensible non-rigid shape-from-motion by second-order cone programming. In: CVPR (2016)

Perriollat, M., Hartley, R., Bartoli, A.: Monocular template-based reconstruction of inextensible surfaces. Int. J. Comput. Vis. 95(2), 124–137 (2011)

Salzmann, M., Fua, P.: Linear local models for monocular reconstruction of deformable surfaces. IEEE Trans. Pattern Anal. Mach. Intell. 33(5), 931–944 (2011)

Kumar, S., Dai, Y., Li, H.: Monocular dense 3D reconstruction of a complex dynamic scene from two perspective frames. In: ICCV (2017)

Russell, C., Yu, R., Agapito, L.: Video pop-up: monocular 3D reconstruction of dynamic scenes. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8695, pp. 583–598. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10584-0_38

Bartoli, A., Gérard, Y., Chadebecq, F., Collins, T., Pizarro, D.: Shape-from-template. IEEE Trans. Pattern Anal. Mach. Intell. 37(10), 2099–2118 (2015)

Vicente, S., Agapito, L.: Soft inextensibility constraints for template-free non-rigid reconstruction. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7574, pp. 426–440. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33712-3_31

Chhatkuli, A., Pizarro, D., Bartoli, A.: Stable template-based isometric 3D reconstruction in all imaging conditions by linear least-squares. In: CVPR (2014)

Parashar, S., Pizarro, D., Bartoli, A.: Isometric non-rigid shape-from-motion in linear time. In: CVPR (2016)

Xiao, J., Kanade, T.: Uncalibrated perspective reconstruction of deformable structures. In: ICCV, pp. 1075–1082 (2005)

Salzmann, M., Hartley, R., Fua, P.: Convex optimization for deformable surface 3-D tracking. In: ICCV (2007)

Akhter, I., Sheikh, Y., Khan, S., Kanade, T.: Trajectory space: a dual representation for nonrigid structure from motion. IEEE Trans. Pattern Anal. Mach. Intell. 33(7), 1442–1456 (2011)

Nistér, D.: Untwisting a projective reconstruction. Int. J. Comput. Vis. 60(2), 165–183 (2004)

Chandraker, M., Agarwal, S., Kahl, F., Nistér, D., Kriegman, D.: Autocalibration via rank-constrained estimation of the absolute quadric. In: CVPR, pp. 1–8 (2007)

Dijkstra, E.W.: A note on two problems in connexion with graphs. Numer. Math. 1(1), 269–271 (1959)

Varol, A., Salzmann, M., Fua, P., Urtasun, R.: A constrained latent variable model. In: CVPR (2012)

Chhatkuli, A., Pizarro, D., Bartoli, A.: Non-rigid shape-from-motion for isometric surfaces using infinitesimal planarity. In: BMVC (2014)

White, R., Crane, K., Forsyth, D.: Capturing and animating occluded cloth. In: SIGGRAPH (2007)

Sundaram, N., Brox, T., Keutzer, K.: Dense point trajectories by GPU-accelerated large displacement optical flow. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6311, pp. 438–451. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15549-9_32

Innmann, M., Zollhöfer, M., Nießner, M., Theobalt, C., Stamminger, M.: VolumeDeform: real-time volumetric non-rigid reconstruction. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9912, pp. 362–379. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46484-8_22

Gotardo, P.F., Martinez, A.M.: Computing smooth time trajectories for camera and deformable shape in structure from motion with occlusion. IEEE Trans. Pattern Anal. Mach. Intell. 33(10), 2051–2065 (2011)

Chhatkuli, A., Pizarro, D., Collins, T., Bartoli, A.: Inextensible non-rigid structure-from-motion by second-order cone programming. IEEE Trans. Pattern Anal. Mach. Intell. 1(99), PP (2017)

Acknowledgements

Research was funded by the EU’s Horizon 2020 programme under grant No. 645331 (EurEyeCase) and grant No. 687757 (REPLICATE), and by the Swiss Commission for Technology and Innovation (CTI, Grant No. 26253.1 PFES-ES, EXASOLVED).


1 Electronic supplementary material


Supplementary material 2 (mp4 21436 KB)


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Probst, T., Paudel, D.P., Chhatkuli, A., Van Gool, L. (2018). Incremental Non-Rigid Structure-from-Motion with Unknown Focal Length. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11217. Springer, Cham. https://doi.org/10.1007/978-3-030-01261-8_46


DOI: https://doi.org/10.1007/978-3-030-01261-8_46

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01260-1

Online ISBN: 978-3-030-01261-8

eBook Packages: Computer Science, Computer Science (R0)