Abstract

Gaze is reported to have important functions in communication, such as expressing emotional states, exercising social control, highlighting the informational structure of speech, and coordinating floor-apportionment. For these reasons, studying these communicative functions is expected to contribute to HCI systems by identifying communication characteristics and the role of each participant. This study analyzes how the communicative functions of utterances affect the listener’s gazing activities from the viewpoint of grounding, based on a triadic corpus with newly labeled grounding tags. The results showed that the duration of a listener’s gaze is longer in second language (L2) conversations, in goal-oriented conversations, and during utterances presenting new information. These results suggest that linguistic proficiency, conversation topic, and grounding factors all affect a listener’s gazing activities, providing us with some information that could assist in the design of HCI, HRI, and CSCW systems that better reflect the interaction contexts and linguistic proficiency of users.

1 Introduction

Nonverbal cues play important roles in everyday communication. Studies have examined their importance not only for the affective and attitudinal aspects of communication (Mehrabian and Ferris 1967; Mehrabian and Wiener 1967) but also for the coordination of communication and for “grounding”, i.e., constructing a shared understanding of the communication context (Clark and Brennan 1991; Clark 1996; Clark and Krych 2004). These findings suggest potential for expanding and augmenting HCI (human-computer interaction) systems.

Among nonverbal modalities in communication, gaze has been considered fundamental and has attracted considerable attention from researchers working on multi-modal communication. Studies have reported that gaze has important communicative functions, including expressing emotional states, exercising social control, highlighting the informational structure of speech, and organizing the speech floor (Argyle et al. 1968; Duncan 1972; Holler and Kendrick 2015; Kendon 1967). From the viewpoint of interaction organization, studies have reported that gaze can be a cue for speech floor coordination not only in dyadic (Kendon 1967) but also in multi-party conversations (Kalma 1992; Lerner 2003). Although the findings of some other studies are not entirely consistent with these (Beattie 1978; Rutter et al. 1978), the discrepancy can probably be attributed to the multi-functional nature of gaze in communication (Kleinke 1986), and recent studies have confirmed the speech floor coordination function of gaze in dyadic (Ho et al. 2015) and multi-party conversations (Jokinen et al. 2013; Ishii et al. 2016; Vertegaal et al. 2001; Ijuin et al. 2018). Another study indicated that gaze can be a collaborative signal that serves as a cue for coordinating the insertion of listener responses (Bavelas et al. 2002). Furthermore, it has been reported that even uninvolved observers of dyadic interactions followed the interactants’ speaking turns with their gaze (Hirvenkari et al. 2013).

Inspired by studies of the functions of social gaze, researchers in the HCI and CSCW (computer-supported cooperative work) fields have proposed systems that incorporate gaze modalities. These systems include not only conversational agents (Cassell et al. 1994; Vertegaal et al. 2001; Garau et al. 2001; Heylen et al. 2005; Rehm and André 2005) but also robots and devices with simulated gaze expression (Sidner et al. 2004; Bennewitz et al. 2005; Kuno et al. 2007; Foster et al. 2012; Lala et al. 2019; Jaber et al. 2019; McMillan et al. 2019).

Although HCI, HRI (human-robot interaction) and CSCW systems have to some extent been able to take gazing cues into account and integrate gaze functions, they have been less successful in incorporating linguistic proficiency that may affect gazing activities. A remote work study in the HCI field argued that video transmission of facial information and gesture helped non-native pairs to negotiate a common ground, whereas this did not provide significant help for native pairs (Veinott et al. 1999). An analysis of second language conversation reported that eye gazes and facial expressions play an important role in monitoring both partners’ understanding in the repair process (i.e. a modification to the content or presentation of the current proposition under consideration (Schegloff et al. 1977; Traum 1994)) where participants with different levels of linguistic proficiency are involved (Hosoda 2006).

Some quantitative studies have also examined the effect of linguistic proficiency on the speech floor coordination function of gaze. Analyses of listeners’ gaze during utterances have shown that listeners look at the speaker for significantly longer in second language (L2) conversations than in first language (L1) conversations (Yamamoto et al. 2013; Umata et al. 2013; Yamamoto et al. 2015). These studies, however, did not consider the effects of communicative context. Kleinke pointed out that the conditions of a conversational setup may affect the relative importance of the multiple functions of gaze in communication (Kleinke 1986). Holler and Kendrick analyzed three-party conversations among native English speakers, showing that unaddressed participants were able to anticipate next turns in question-response sequences involving just two of the participants (Holler and Kendrick 2015). Other studies have also shown effects of interaction context on gazing behavior in social interactions (Rossano 2013; Kendrick and Holler 2017; Rossano et al. 2009; Stivers and Rossano 2010). Because the role of gaze in communication depends on the context, it is important to analyze the function of gaze during utterances while taking the communicative function of those utterances into consideration.

The current study examined the effects of linguistic proficiency on the listener’s gaze in triadic communication, considering the communicative function of utterances from the viewpoint of grounding. Each utterance was categorized according to its grounding act in the dialogue, and the gazing activities of the listeners were compared between native (L1) and second language (L2) conversations. Because we anticipated that conversation topics could also affect a listener’s gazing activities, we included the topic factor in our analysis. The results suggest that language proficiency and topic independently affect the duration of a listener’s gaze during utterances in which the speaker provides new information, but not during utterances that merely acknowledge the previous speaker’s utterance.

2 Corpus

Our analysis is based on a multimodal triadic interaction corpus with eye-gaze data collected and analyzed in previous studies (Yamamoto et al. 2015; Ijuin et al. 2018; Umata et al. 2018).

The corpus consists of triadic conversations in the participants’ mother tongue (L1) and in a second language (L2), held by the same interlocutors in the same groups (for details, refer to Yamamoto et al. 2015). For the current study, the grounding act annotation was completed so that all utterances carry grounding act tags (details are provided below in this section), and all the conversation data were analyzed. A total of 60 subjects (23 females and 37 males in 20 groups) between the ages of 18 and 24 participated in data collection, and each conversational group consisted of three participants. All participants were native Japanese speakers.

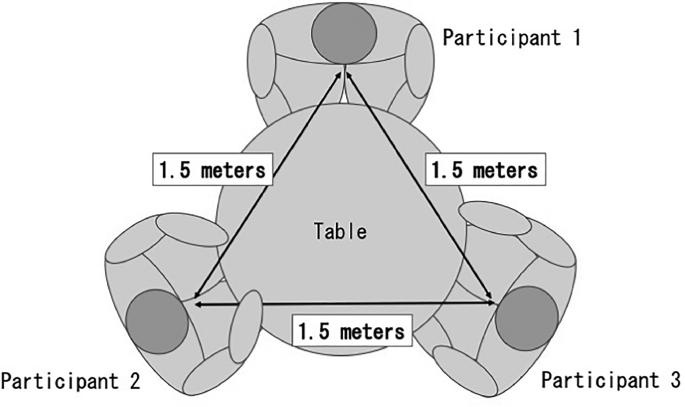

Their seats were placed about 1.5 m apart from each other in a triangular formation around a round table (see Fig. 1 and Fig. 2). The corpus covers two conversation types, so that we can examine whether the type of conversation affects interaction behavior.

The first type is free-flowing, natural chatting that ranges over various topics such as hobbies, weekend plans, studies, and travels. The other type is goal-oriented, in which participants collaboratively decided what to take with them on trips to uninhabited islands or mountains. All the participants would be under pressure to contribute to the conversation to reach an agreement in the goal-oriented conversations, whereas such pressure would not be so strong in free-flowing conversations where reaching an agreement was not obligatory.

We expected that conversational flow would be more predictable in the goal-oriented conversations where the vocabulary was more limited and the domain of the discourse was defined more narrowly by the task than in the free-flowing conversations.

The order of the conversation types was arranged randomly to counterbalance any order effect. The order of the languages used in the conversations was also arranged randomly. Each group had approximately six-minute conversations of the two types in both Japanese and English. We collected multimodal data from 80 three-party conversations in L1 (Japanese) and in L2 (English) languages (20 free-flowing in Japanese, 20 free-flowing in English, 20 goal-oriented in Japanese, and 20 goal-oriented in English). Twenty groups engaged in all four conversation types. All the participants except those in the first three groups answered a questionnaire evaluating their conversation after each conversation condition. This material is to be analyzed in other studies (see Umata et al. 2013).
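The 2 × 2 within-group design described above (language × conversation type, with a randomized order for each group) can be sketched as follows. This is an illustration under assumptions, not the procedure used to build the corpus: the helper name `assign_session_orders`, shuffling all four conditions jointly, and the fixed seed are hypothetical choices.

```python
import itertools
import random

LANGUAGES = ("L1 (Japanese)", "L2 (English)")
TYPES = ("free-flowing", "goal-oriented")

def assign_session_orders(n_groups: int, seed: int = 0) -> dict[int, list[tuple[str, str]]]:
    """Give each group all four language x type conditions in a random order.

    Hypothetical helper: every group takes part in every condition once,
    so the design is fully within-group and only the order varies.
    """
    rng = random.Random(seed)
    conditions = list(itertools.product(LANGUAGES, TYPES))
    orders = {}
    for group in range(1, n_groups + 1):
        order = conditions[:]  # copy so each group is shuffled independently
        rng.shuffle(order)
        orders[group] = order
    return orders

orders = assign_session_orders(n_groups=20)
n_conversations = sum(len(o) for o in orders.values())
print(n_conversations)           # 80 (20 groups x 4 conditions)
print(n_conversations * 6 / 60)  # 8.0 (rough total hours at ~6 min each)
```

Shuffling the four conditions per group is only one way to randomize; randomizing language order and type order separately would be an equally plausible reading of the description.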

Participants’ eye gaze and voices were recorded with three NAC EMR-9 head-mounted eye trackers and headsets with microphones. The viewing angle of the EMR-9 was 62°, and the sampling rate was 60 frames per second. We used the EUDICO Linguistic Annotator (ELAN), developed by the Max Planck Institute, as the tool for gaze and utterance annotation (see Fig. 3). Utterances were segmented from speech at pauses of more than 500 ms, and the corpus was manually annotated in terms of the time spans of utterances, backchannels, laughter, and eye movements. The goal-oriented conversations of all 20 groups had already been annotated with grounding act tags following the categories established by Traum (1994). For the current study, we trained a university student to annotate the free-flowing conversations of the 20 groups with the same categories. She annotated the tags in ELAN using the video, gaze, and utterance transcription data, in the same manner as in the previous study (Umata et al. 2019). Table 1 shows the grounding act tags and their descriptions, and Fig. 1 shows the frequency of grounding acts in L1 and L2 conversations.
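The 500 ms pause criterion can be illustrated with a short sketch that merges voiced intervals into utterances. The corpus itself was annotated manually in ELAN, so this is only an illustration under assumptions: the input format (start and end times in milliseconds, e.g. from a voice activity detector), the function names, and treating a pause of exactly 500 ms as non-splitting are hypothetical. The helper `frames_to_ms` converts counts of 60 fps eye-tracker samples into durations.

```python
PAUSE_THRESHOLD_MS = 500  # pauses longer than this start a new utterance
SAMPLING_HZ = 60          # EMR-9 sampling rate (frames per second)

def segment_utterances(speech_intervals, pause_ms=PAUSE_THRESHOLD_MS):
    """Merge voiced intervals (start_ms, end_ms) into utterances.

    Intervals separated by a pause of at most `pause_ms` are joined;
    a longer pause starts a new utterance.
    """
    utterances = []
    for start, end in sorted(speech_intervals):
        if utterances and start - utterances[-1][1] <= pause_ms:
            prev_start, prev_end = utterances[-1]
            utterances[-1] = (prev_start, max(prev_end, end))
        else:
            utterances.append((start, end))
    return utterances

def frames_to_ms(n_frames, hz=SAMPLING_HZ):
    """Convert a count of gaze samples into milliseconds."""
    return n_frames * 1000 / hz

voiced = [(0, 1200), (1500, 2600), (3400, 4000)]
print(segment_utterances(voiced))  # [(0, 2600), (3400, 4000)]
print(frames_to_ms(30))            # 500.0
```

Here the 300 ms gap is absorbed into the first utterance, while the 800 ms gap starts a second one.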

3 Analyses of Gazes in Utterances

We analyzed the gazing activities of listeners in triadic conversation, taking the factors of linguistic proficiency, topic, and grounding into account. Previous studies have shown that listeners gaze at the speaker for significantly longer during utterances in second language (L2) conversations than in first language (L1) conversations (Yamamoto et al. 2013; Umata et al. 2013; Yamamoto et al. 2015), suggesting that listeners use visual information to compensate for their lack of linguistic proficiency in L2 conversations. We therefore assumed that the linguistic proficiency factor would affect the listener’s gazing activity. We also assumed that listeners would rely more heavily on visual information in a collaborative task where the requirement for communication organization is strong. Finally, the grounding act factor was expected to affect the listener’s gazing activity: listeners would rely more on visual information during utterances that present new information. Our hypotheses are as follows:

- H1: The linguistic proficiency factor would affect the duration of a listener’s gaze: listeners would gaze at the speaker for longer in second language conversations, where they compensate for their lack of linguistic proficiency with gazing cues.
- H2: The topic factor would affect the duration of a listener’s gaze: listeners would gaze at the speaker for longer in goal-oriented conversations, where the requirement for communication organization is stronger because an agreement has to be reached.
- H3: The grounding act factor would affect the duration of a listener’s gaze: listeners would gaze at the speaker for longer during utterances presenting new information (namely, init, cont, and ack init) than during utterances that just acknowledge the previous utterance.

We compared the duration of each listener’s gaze during the four major categories of grounding acts (i.e., init, ack init, cont, and ack) between L1 and L2 conversations. We used the average listener’s gazing ratio to analyze how long the speaker was gazed at by the other participants [4]. This ratio is defined in terms of two quantities: D(i), the duration of the ith utterance, and DLGj(i), the total duration for which the jth participant (j = 1, 2, 3) in each group gazed at the speaker during the ith utterance.
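As a rough illustration, the sketch below assumes that a listener’s gazing ratio for utterance i is DLGj(i)/D(i) and that the average is taken over all utterances in which participant j is a listener, optionally restricted to one grounding act. The `Utterance` record, its field names, and per-utterance (rather than pooled) averaging are assumptions for illustration, not the authors’ implementation; a pooled variant would divide the summed gaze time by the summed utterance time instead.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Utterance:
    speaker: int                         # participant index 1..3 in the group
    duration_ms: float                   # D(i)
    gaze_at_speaker_ms: dict[int, float] # listener j -> DLG_j(i)
    grounding_act: str                   # e.g. "init", "cont", "ack init", "ack"

def average_lgr(utterances, listener, act=None):
    """Mean over utterances of DLG_j(i) / D(i) for one listener j.

    Only utterances spoken by someone else (and, optionally, carrying the
    given grounding act) are included. Returns None if nothing qualifies.
    """
    ratios = [
        u.gaze_at_speaker_ms.get(listener, 0.0) / u.duration_ms
        for u in utterances
        if u.speaker != listener
        and u.duration_ms > 0
        and (act is None or u.grounding_act == act)
    ]
    return mean(ratios) if ratios else None

# Hypothetical example data, not taken from the corpus.
utts = [
    Utterance(1, 2000, {2: 1500, 3: 500}, "init"),
    Utterance(2, 1000, {1: 200, 3: 900}, "ack"),
    Utterance(1, 4000, {2: 1000, 3: 3000}, "cont"),
]
print(average_lgr(utts, 2))              # 0.5
print(average_lgr(utts, 3, act="cont"))  # 0.75
```

Restricting by `act` yields one value per listener and grounding act, which is the kind of cell mean a within-subject analysis by grounding act would need.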

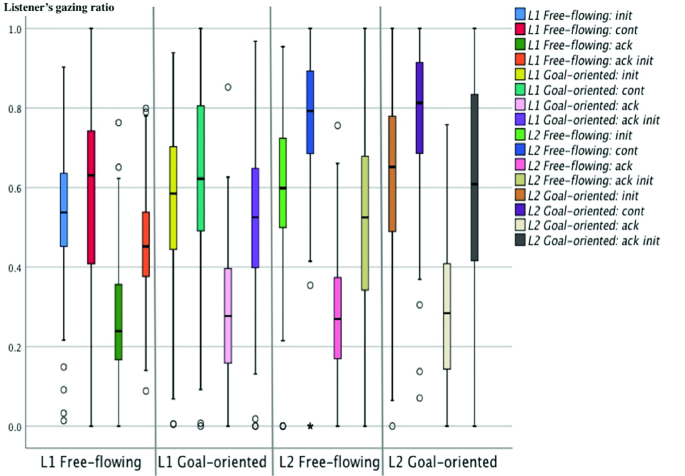

We expected the topic factor to affect the duration of the listener’s gaze: listeners would gaze at the speaker for longer in goal-oriented conversations, where they collaboratively decided what to take with them on a trip to a deserted island or to the mountains. We also expected the linguistic proficiency factor to affect it: listeners would gaze at the speaker for longer in second language conversations, where they compensate for their lack of linguistic proficiency with gazing cues, especially in speech turn organization.

We conducted an analysis of variance (ANOVA) with language, topic, and grounding act as within-subject factors. The results revealed significant main effects of language (F(1, 113) = 45.875, p < .001), topic (F(1, 113) = 16.416, p < .001), and grounding act (F(2.589, 292.612) = 204.8, p < .01), and multiple comparisons showed that the differences among the four major grounding acts were all significant (p < .001). We also observed significant first-order interactions between language and grounding act (F(2.702, 305.327) = 24.551, p < .001) and between topic and grounding act (F(3, 339) = 4.516, p < .005).

Simple main effect tests showed significant effects of language for the grounding acts “init” (F(1, 113) = 15.81, p < .001), “cont” (F(1, 113) = 78.20, p < .001), and “ack init” (F(1, 113) = 12.20, p < .01), and of topic for “cont” (F(1, 113) = 6.22, p < .05) and “ack init” (F(1, 113) = 12.20, p < .01), as well as a marginally significant simple main effect of topic for “init” (F(1, 113) = 3.18, p < .1). Neither language nor topic had a significant simple main effect for “ack”. The distribution of listeners’ gazing ratios (LGRs) is shown in Fig. 3.
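Two pieces of arithmetic behind these statistics can be checked with a short sketch. First, for a two-level within-subject factor such as language or topic, the repeated-measures main-effect F(1, n − 1) equals the squared paired t statistic computed on each unit’s condition means (averaged over the other factors). Second, the non-integer degrees of freedom reported for the grounding act effect are consistent with a sphericity correction (for example Greenhouse–Geisser or Huynh–Feldt; the text does not say which), in which both df are multiplied by an estimated ε: 2.589 / 3 ≈ 0.863, and 292.612 / 2.589 ≈ 113 recovers the error df of the one-df tests. The function names and the synthetic numbers are illustrative assumptions; the sketch does not reproduce the authors’ analysis.

```python
import math
from statistics import mean, stdev

def paired_f(level_a, level_b):
    """Return (F, (1, n - 1)) for a two-level within-subject factor.

    level_a[k] and level_b[k] are unit k's scores in the two levels, each
    already averaged over the other within-subject factors. With one
    numerator degree of freedom, the repeated-measures F equals the square
    of the paired t statistic on the per-unit differences.
    """
    diffs = [b - a for a, b in zip(level_a, level_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)

def implied_epsilon(df1_corrected, n_levels):
    """Sphericity epsilon implied by a corrected numerator df: df1 = eps * (k - 1)."""
    return df1_corrected / (n_levels - 1)

# Synthetic per-unit mean LGRs (not data from this study).
l1 = [1.0, 2.0, 3.0]
l2 = [2.0, 4.0, 6.0]
f, df = paired_f(l1, l2)
print(round(f, 6), df)                      # 12.0 (1, 2)

# Reported grounding act effect: F(2.589, 292.612) over four categories.
print(round(implied_epsilon(2.589, 4), 3))  # 0.863
print(round(292.612 / 2.589))               # 113
```

The same `implied_epsilon` check applied to the language × grounding act interaction gives 2.702 / 3 ≈ 0.901, and 305.327 / 2.702 ≈ 113 again.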

As shown in Fig. 3, listeners gazed at the speaker for longer in L2 conversations than in L1 conversations, and for longer in goal-oriented conversations than in free-flowing conversations. Listeners also gazed at the speaker for longer during init, cont, and ack init utterances than during ack utterances.

4 Discussion

We compared the duration of the listener’s gaze in triadic conversations to examine the effects of linguistic proficiency, topic and grounding on gazing activities. The results of ANOVA revealed significant main effects of language difference, topic and grounding, supporting our hypotheses H1, H2, and H3: the duration of a listener’s gaze is longer in L2 conversations, in goal-oriented conversations, and in init, cont and ack init utterances. The grounding factor had the greatest effect, followed by that of language proficiency.

The multiple comparison analysis showed that the differences among four major grounding acts were also all significant, and cont showed the longest duration for the listener’s gaze among all the grounding act categories. With cont utterances, the speakers were adding new pieces of information to their own previous utterances, and in doing so, they were sometimes observed using a filled pause to hold the speech floor while bringing order to their ideas. Such characteristics of cont utterances might have drawn the listener’s attention to the speaker. In contrast, ack showed the shortest duration for the listener’s gaze, suggesting that utterances just acknowledging the previous utterance without adding new information did not draw the listener’s visual attention to the speaker.

We observed a significant first-order interaction between language and grounding act, and simple main effect tests showed significant effects of language in all the major grounding act categories except ack. This suggests that linguistic proficiency affected the listener’s gazing activities only for utterances presenting new information. Similarly, we observed a significant first-order interaction between topic and grounding act, and simple main effect tests showed significant or marginally significant effects of topic in all the major grounding act categories except ack. This suggests that topic also affected the listener’s gazing activities, but only when utterances presented new information.

Another interesting finding is that there was no significant interaction between language and topic. This suggests that, in the current corpus settings, linguistic proficiency and topic affected the listener’s gazing activities independently.

These findings suggest that linguistic proficiency, conversation topic, and grounding all affect the listener’s gazing activities, and that these factors should be considered when attempting to design better HCI, HRI, and CSCW systems. It is also likely that the effects of these factors may not be just simple and independent but rather interlaced: our experimental results suggest that linguistic proficiency and grounding factors affect each other, and so do topic and grounding factors. Further detailed analyses are necessary to establish system design guidelines that reflect these factors.

5 Summary

We analyzed the effect of linguistic proficiency and conversation topic on the listener’s gaze in four major grounding acts. The results showed that the duration of a listener’s gaze is longer in second language (L2) conversations, in goal-oriented conversations, and during utterances presenting new information. The results also showed that both language proficiency and topic independently affect the duration of the listener’s gaze in utterances presenting new information. These results suggest that linguistic proficiency, conversation topic, and grounding factors all affect a listener’s gazing activities, supporting our hypotheses. The results are expected to contribute to HCI, HRI, and CSCW system design that reflects the interaction context and the linguistic proficiency of users.

References

Mehrabian, A., Ferris, S.R.: Inference of attitudes from nonverbal communication in two channels. J. Consult. Psychol. 31(3), 248–252 (1967)

Mehrabian, A., Wiener, M.: Decoding of inconsistent communications. J. Pers. Soc. Psychol. 6(1), 109–114 (1967)

Clark, H.H., Brennan, S.E.: Grounding in communication. In: Resnick, L.B., Levine, J.M., Teasley, S.D. (eds.) Perspectives on Socially Shared Cognition, pp. 503–512. APA Books (1991)

Clark, H.H.: Using Language. Cambridge University Press, Cambridge (1996)

Clark, H.H., Krych, M.A.: Speaking while monitoring addressees for understanding. J. Mem. Lang. 50, 62–81 (2004)

Argyle, M., Lalljee, M., Cook, M.: The effects of visibility on interaction in a dyad. Hum. Relat. 21, 3–17 (1968)

Duncan, S.: Some signals and rules for taking speaking turns in conversations. J. Pers. Soc. Psychol. 23, 283–292 (1972)

Holler, J., Kendrick, K.H.: Unaddressed participants’ gaze in multi-person interaction: optimizing recipiency. Front. Psychol. 6, 14, Article no. 98 (2015). https://doi.org/10.3389/fpsyg.2015.00098

Kendon, A.: Some functions of gaze-direction in social interaction. Acta Psychol. 26, 22–63 (1967)

Kalma, A.: Gazing in triads: a powerful signal in floor apportionment. Br. J. Soc. Psychol. 31(1), 21–39 (1992)

Lerner, G.H.: Selecting next speaker: the context-sensitive operation of a context-free organization. Lang. Soc. 32(2), 177–201 (2003)

Beattie, G.W.: Floor apportionment and gaze in conversational dyads. Br. J. Soc. Clin. Psychol. 17, 7–15 (1978)

Rutter, D.R., Stephenson, G.M., Ayling, K., White, P.A.: The timing of looks in dyadic conversation. Br. J. Soc. Clin. Psychol. 17, 17–21 (1978)

Kleinke, C.L.: Gaze and eye contact: a research review. Psychol. Bull. 100, 78–100 (1986)

Ho, S., Foulsham, T., Kingstone, A.: Speaking and listening with the eyes: gaze signaling during dyadic interactions. PLoS ONE 10(8), e0136905 (2015). https://doi.org/10.1371/journal.pone.0136905

Jokinen, K., Furukawa, H., Nishida, M., Yamamoto, S.: Gaze and turn-taking behavior in casual conversational interactions. ACM Trans. Interact. Intell. Syst. (TiiS) 3(2), 1–30 (2013)

Ishii, R., Otsuka, K., Kumano, S., Yamato, J.: Prediction of who will be the next speaker and when using gaze behavior in multiparty meetings. ACM Trans. Interact. Intell. Syst. 6(1), 31, Article no. 4 (2016). https://doi.org/10.1145/2757284

Vertegaal, R., Slagter, R., van der Veer, G., Nijholt, A.: Eye gaze patterns in conversations: there is more to conversational agents than meets the eyes. In: CHI 2001 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 301–308. ACM Press, Seattle (2001)

Ijuin, K., Umata, I., Kato, T., Yamamoto, S.: Difference in eye gaze for floor apportionment in native- and second-language conversations. J. Nonverbal Behav. 42, 113–128 (2018)

Bavelas, J.B., Coates, L., Johnson, T.: Listener responses as a collaborative process: the role of gaze. J. Commun. 52(3), 566–580 (2002). https://doi.org/10.1111/j.1460-2466.2002.tb02562.x

Hirvenkari, L., Ruusuvuori, J., Saarinen, V.M., Kivioja, M., Peräkylä, A., Hari, R.: Influence of turn-taking in a two-person conversation on the gaze of a viewer. PloS One 8(8), e71569 (2013). https://doi.org/10.1371/journal.pone.0071569

Cassell, J., et al.: Animated conversation: rule-based generation of facial expression, gesture & spoken intonation for multiple conversational agents. In: Proceedings of the 21st Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH 1994), pp. 413–420. ACM, New York (1994). https://doi.org/10.1145/192161.192272

Garau, M., Slater, M., Bee, S., Sasse, M.A.: The impact of eye gaze on communication using humanoid avatars. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI 2001), pp. 309–316. ACM, New York (2001). https://doi.org/10.1145/365024.365121

Heylen, D., van Es, I., Nijholt, A., van Dijk, B.: Controlling the gaze of conversational agents. In: van Kuppevelt, J.C.J., Dybkjær, L., Bernsen, N.O. (eds.) Advances in Natural Multimodal Dialogue Systems. TLTB, pp. 245–262. Springer, Netherlands (2005). https://doi.org/10.1007/1-4020-3933-6_11

Rehm, M., André, E.: Where do they look? Gaze behaviors of multiple users interacting with an embodied conversational agent. In: Panayiotopoulos, T., Gratch, J., Aylett, R., Ballin, D., Olivier, P., Rist, T. (eds.) IVA 2005. LNCS (LNAI), vol. 3661, pp. 241–252. Springer, Heidelberg (2005). https://doi.org/10.1007/11550617_21

Sidner, C.L., Kidd, C.D., Lee, C., Lesh, N.: Where to look: a study of human-robot engagement. In: IUI 2004: Proceedings of the 9th International Conference on Intelligent User Interfaces, pp. 78–84 (2004). https://doi.org/10.1145/964456.964458

Bennewitz, M., Faber, F., Joho, D., Schreiber, M., Behnke, S.: Integrating vision and speech for conversations with multiple persons. In: 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 2523–2528 (2005). https://doi.org/10.1109/IROS.2005.1545158

Kuno, Y., Sadazuka, K., Kawashima, M., Yamazaki, K., Yamazaki, A., Kuzuoka, H.: Museum guide robot based on sociological interaction analysis. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI 2007), pp. 1191–1194. ACM, New York (2007). https://doi.org/10.1145/1240624.1240804

Foster, M.E., Gaschler, A., Giuliani, M., Isard, A., Pateraki, M., Petrick, R.P.A.: Two people walk into a bar: dynamic multi-party social interaction with a robot agent. In: Proceedings of the 14th ACM International Conference on Multimodal Interaction (ICMI 2012), pp. 3–10. ACM, New York (2012). https://doi.org/10.1145/2388676.2388680

Lala, D., Inoue, K., Kawahara, T.: Smooth turn-taking by a robot using an online continuous model to generate turn-taking cues. In: 2019 International Conference on Multimodal Interaction (ICMI 2019), Suzhou, China, 14–18 October 2019, 9 p. ACM, New York (2019). https://doi.org/10.1145/3340555.3353727

Jaber, R., McMillan, D., Belenguer, J.S., Brown, B.: Patterns of gaze in speech agent interaction. In: 1st International Conference on Conversational User Interfaces (CUI 2019), Dublin, Ireland, 22–23 August 2019, 10 p. ACM, New York (2019). https://doi.org/10.1145/3342775.3342791

Veinott, E., Olson, J., Olson, G., Fu, X.: Video helps remote work: speakers who need to negotiate common ground benefit from seeing each other. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI 1999), pp. 302–309. ACM, New York (1999)

Schegloff, E.A., Jefferson, G., Sacks, H.: The preference for self-correction in the organization of repair in conversation. Language 53(2), 361–382 (1977)

Traum, D.R.: A computational theory of grounding in natural language conversation. Ph.D. dissertation, University of Rochester (1994)

Hosoda, Y.: Repair and relevance of differential language expertise. Appl. Linguist. 27, 25–50 (2006)

Yamamoto, S., Taguchi, K., Umata, I., Kabashima, K., Nishida, M.: Differences in interactional attitudes in native and second language conversations: quantitative analyses of multimodal three-party corpus. In: Proceedings of the 35th Annual Meeting of the Cognitive Science Society, pp. 3823–3828 (2013)

Umata, I., Yamamoto, S., Ijuin, K., Nishida, M.: Effects of language proficiency on eye-gaze in second language conversations: toward supporting second language collaboration. In: Proceedings of the International Conference on Multimodal Interaction (ICMI 2013), pp. 413–419 (2013)

Yamamoto, S., Taguchi, K., Ijuin, K., Umata, I., Nishida, M.: Multimodal corpus of multiparty conversations in L1 and L2 languages and findings obtained from it. Lang. Resour. Eval. 49, 857–882 (2015). https://doi.org/10.1007/s10579-015-9299-2

Rossano, F.: Gaze in conversation. In: Stivers, T., Sidnell, J. (eds.) The Handbook of Conversation Analysis, pp. 308–329. Wiley-Blackwell, Malden (2013). https://doi.org/10.1002/9781118325001.ch15

Kendrick, K.H., Holler, J.: Gaze direction signals response preference in conversation (2017)

Rossano, F., Brown, P., Levinson, S.C.: Gaze, Questioning, and Culture, pp. 187–249. Cambridge University Press, Cambridge (2009). https://doi.org/10.1017/CBO9780511635670.008

Stivers, T., Rossano, F.: Mobilizing response. Res. Lang. Soc. Interact. 43, 3–31 (2010)

Umata, I., Ijuin, K., Kato, T., Yamamoto, S.: Floor apportionment function of speaker’s gaze in grounding acts. In: Proceedings of ICMI 2019: International Conference on Multimodal Interaction (ICMI 2019 Adjunct), Suzhou, China, 14–18 October 2019, 7 p. ACM, New York (2019). https://doi.org/10.1145/3351529.3360660

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Umata, I., Ijuin, K., Kato, T., Yamamoto, S. (2020). Effects of Linguistic Proficiency and Conversation Topic on Listener’s Gaze in Triadic Conversation. In: Meiselwitz, G. (ed.) Social Computing and Social Media. Design, Ethics, User Behavior, and Social Network Analysis. HCII 2020. Lecture Notes in Computer Science, vol. 12194. Springer, Cham. https://doi.org/10.1007/978-3-030-49570-1_47

DOI: https://doi.org/10.1007/978-3-030-49570-1_47

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-49569-5

Online ISBN: 978-3-030-49570-1

eBook Packages: Computer Science, Computer Science (R0)