Abstract

With the rapid development of AR technology, interaction between humans and computers has become increasingly complex and frequent. However, many interaction techniques in AR still lack a mature interaction mode and face major challenges in design and technical implementation. In particular, AR gesture interaction methods have not yet been established, and there is no universal gesture vocabulary in currently developed AR applications. Identifying appropriate gestures for mid-air interaction is an important design decision based on criteria such as ease of learning, metaphor, memorability, subjective fatigue, and effort [1]. This decision must be made and confirmed in the early stages of system development, and it strongly affects the development process of every mid-air application project as well as the user experience (UX) of the intended users [2]. Using a user-centric, user-defined role-playing method, this paper sets up a suitable car-purchase simulation scenario in which users define gestures for a 3D spatial information interaction system in an AR environment according to their own habits and cultural backgrounds, focusing on the gestures needed during a guided tour, and proposes a mental model of gesture preference.

1 Introduction

1.1 Augmented Reality (AR)

Augmented reality is a technology that seamlessly fuses real-world information with virtual information [3]. It can present physical information (visual, sound, taste, touch, etc.) that is difficult to experience within a given span of space and time in the real world. Using computers and related technologies, virtual information is simulated and then superimposed on the real world, where it is perceived by the human senses, producing a sensory experience beyond reality. AR overlays real and virtual information on the same screen or in the same space in real time. The integration of the virtual and the real is the core of AR technology [4]. This integration lets AR extend and enhance the real environment by merging virtual information seamlessly with it. Achieving it depends on tracking and recognizing markers and on the associated complex coordinate transformations, so that the position of virtual information in 3D space is mapped in real time and displayed on the screen.

1.2 Mid-Air Gesture Interaction

Mid-air gesture interaction has evolved into a feasible mode of human-computer interaction (HCI). As an auxiliary interaction mode, it has been applied in many fields, such as home entertainment [5], in-vehicle systems [6], and human-robot interaction [7]. Although mid-air gesture interaction on AR/VR glasses is closer to natural interaction, it is still not ideal: the problems of low recognition efficiency [8] and insufficient function-mapping design [9] have not been completely solved. Existing designs focus on specific scenarios but ignore their spatiotemporal characteristics. The interaction systems serve a single application [10], and the interaction dimension is relatively simple [11]. Moreover, an improperly designed mapping between gestures and interaction functions places a heavy cognitive burden on the user, especially in 3D scenes such as information communication and virtual assembly, where the systematic design of interactive systems still needs to be improved [12].

1.3 Scene-Based Interaction

The word “scene” originally referred to the scenes that appear in dramas and movies. Goffman proposed the dramaturgical theory, which describes a behavioral region as any place in which perception is restricted to some extent [13]. Meyrowitz extended the concept of “scene” from Goffman’s dramaturgical theory [14]. Robert Scoble proposed the “five forces” of the mobile Internet: mobile devices, social media, big data, sensors, and positioning systems [15].

With the development of information technology, a scene has become a state in which people and behaviors are connected through network technology (media). Such scenes create unprecedented value and new, pleasant experiences that guide and shape users’ behavior, and they have also given rise to new lifestyles. The development of augmented reality makes it possible to fuse virtual scenes with physical scenes, which then interact with and constrain each other. Scene interaction design is design that improves the user experience for each scene. Scene-based augmented reality interaction design therefore focuses on the relationship between specific scenes and behaviors. Based on the time, place, people, or other characteristics of the scene, the system quickly retrieves relevant information and outputs content that meets users’ needs in the specific situation. Constructing a specific experience scenario means paying attention to the experience and perceptibility of the design result, establishing a connection between users and products, achieving good interaction between the two, and giving users an immersive experience or stimulating specific user behaviors [16]. In short, scene-based design starts from a specific scenario and uses experience to promote events that balance the value relationship between subject and object [17].

1.4 Guessability Studies

In user-centric design, intuitive design is the most important part. In cognitive psychology, intuition is regarded as the process by which people quickly identify and handle problems based on experience. This process is unconscious, fast, and easy. Blackler’s research also confirms this process [18]. Cooper likewise notes that intuitive interface design allows people to quickly establish a direct connection between functions and tasks based on interface guidance [19]. With the continuous development of gesture recognition devices, the main design problem has shifted to gesture elicitation research, whose purpose is to elicit gestures that meet certain design criteria, such as learnability, metaphor, memorability, subjective fatigue, and effort. One of the most widely adopted methods is the “guessability” study, which was first introduced as a unified method for assessing the ergonomic characteristics of a user’s mental model and gestures [20]. In general, guessability research concerns the cognitive quality of input actions and is performed by having participants propose, without any prior knowledge, the gesture that best matches a task. Gesture sets are derived from the data using various quantitative and qualitative indicators and are then classified [21]. Other studies have enriched the elicited gestures by applying further metrics in the analysis and have extended them to specific application systems [22]. Choice-based elicitation has been used as a variant of the guessability study [23]. By establishing guessability and agreement metrics, the results of user experience can be quantified and used to assess how intuitive an action design is [24]. Guessability-based studies to date cover interaction on large touch screens [25], smartphone gesture interaction [26], hand-foot interaction in games [27], and VR gesture interaction [28].
The user-defined method is well suited to studying gesture interaction in augmented reality and merits further research.

1.5 Role-Playing

The role-playing method was proposed mainly by the interaction designer Stephen P. Anderson. It is used chiefly in the early stage of interactive prototyping during system development. The user’s interaction is acted out, and the interaction process and its details are recorded, including the user’s mood and emotions along the way. Throughout the experiment, researchers need to control the user’s choices in order to eliminate unnecessary ones [29]. Completing the entire test helps with adjustments and decisions in the preliminary design.

2 Establishing the User-Defined Gesture Set Through a Guessability Study

2.1 Participants

A total of 12 volunteers (6 women, 6 men) were recruited. All participants were undergraduates from Beijing University of Posts and Telecommunications, aged 20 to 23 (mean = 21.6, SD = 0.76). None had experience with 3D interactive devices such as Leap Motion or Xbox, so they can be regarded as novices. All participants were right-handed and had no hand disorders or visual impairments. The experiment followed the ethical guidelines of the relevant psychological societies, and informed consent was obtained from all participants.

2.2 Tasks

The goal was to find gesture commands for guided visits that are consistent with users’ habits and to ensure that the gestures are usable in everyday visits. We first listed 21 operating instructions of the 3D spatial information interaction system in the tour-guide scene. We then invited four experts familiar with Leap Motion gestures (each using Leap Motion at least three hours a day), whose evaluation divided the scenario tasks into three difficulty levels. In addition, an online questionnaire was administered to 24 people with experience of gesture interaction in the past three months, who scored the importance of the tasks at the three difficulty levels. Finally, 6 operating instructions of first-level difficulty, 1 of second-level difficulty, and 3 of third-level difficulty were selected. While collecting guided-visit scenes, we found that the 4S shop visit scene covers the selected instructions. The 4S shop car-purchase scene was therefore taken as the example for the scenario-based command design (see Table 1).

2.3 Experiment Setup

The experimental settings are shown in Fig. 1. The 3D materials for the experiment were placed on a platform 130 cm high, and participants were required to stand 60 cm from the platform and perform the operations according to the instructions. In this setting, a function was deemed to be successfully triggered once the participant had made two different (dissimilar) gestures. Role-playing and interactive methods were used to give the participants the corresponding feedback.

During the formal experiment, users were asked to wear transparent glasses and were told that the scene required a certain degree of imagination. They were also told that they were allowed to touch the model materials in the scene at any time during the experiment. Participants were asked to propose at least two gestures for each task to reduce legacy bias. The materials used as role-playing props are shown in Fig. 2, and the feedback settings are shown in Table 2.

Based on previous studies [30], we collected subjective scores for the proposed gestures on five aspects: learnability, metaphor, memorability, subjective fatigue, and effort. Participants rated each of their two gestures on a 5-point Likert scale (1 = completely disagree, 5 = completely agree) against five statements, one per aspect: the gesture is easy to learn; the interactive gesture exists naturally in the scene; my gesture is easy to remember; the movement I perform does not easily cause fatigue; I do not feel exertion when performing this gesture.
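
The paper does not say how the five ratings are combined into one score per gesture. As a minimal sketch, assuming an unweighted mean over participants and then over aspects (all five statements are worded so that a higher score is better), the aggregation could look like this. The function name, the dictionary keys, and the sample ratings are illustrative and do not come from the study.

```python
from statistics import mean

# The five aspects rated on the 1-5 Likert scale (key names are illustrative).
ASPECTS = ("learnability", "metaphor", "memorability", "fatigue", "effort")


def summarize_ratings(ratings):
    """Return (mean score per aspect, overall mean) for one gesture.

    ratings: list of dicts, one per participant, mapping each aspect to 1..5.
    The unweighted mean is an assumption, not the paper's stated procedure.
    """
    for rating in ratings:
        for aspect in ASPECTS:
            if not 1 <= rating[aspect] <= 5:
                raise ValueError(f"{aspect} score out of range: {rating[aspect]}")
    per_aspect = {a: mean([r[a] for r in ratings]) for a in ASPECTS}
    overall = mean(list(per_aspect.values()))
    return per_aspect, overall


# Made-up ratings from two participants for a single gesture.
sample = [
    {"learnability": 5, "metaphor": 4, "memorability": 4, "fatigue": 3, "effort": 4},
    {"learnability": 3, "metaphor": 4, "memorability": 2, "fatigue": 3, "effort": 4},
]
per_aspect, overall = summarize_ratings(sample)
print(per_aspect, overall)
```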

2.4 Data Analysis

A total of 163 gestures, falling into 76 distinct gesture types, were collected.

Two experts first classified the gestures based on the videos and the participants’ verbal descriptions. Two gestures were treated as the same if they contained the same movement or pose. A movement is mainly the direction of the knuckle movement (horizontal, vertical, or diagonal); a pose is the posture of the hand at the end of the limb, such as an open or pinched hand. Disagreements between the two experts during classification were resolved by a third expert. We ended up with 163 gestures in 76 distinct types, five of which were excluded because participants misunderstood the task or because the gesture was hard to recognize.

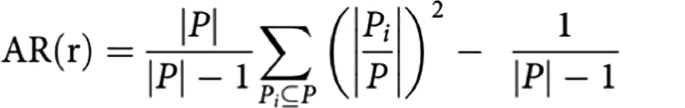

Then, the agreement rate of the gestures proposed for each command was calculated with the agreement rate formula of Vatavu and Wobbrock (see Fig. 3) [31]. The experimental data are shown in Table 3.

Here, P is the total number of gestures proposed for command r, and Pi is the number of gestures in the i-th subset of identical gestures for command r [31]. AR values range from 0 to 1. According to Vatavu and Wobbrock, AR ≤ 0.100 implies low agreement, 0.100 < AR ≤ 0.300 implies moderate agreement, 0.300 < AR ≤ 0.500 implies high agreement, and AR > 0.500 signifies very high agreement. Note that when AR = 0.500, the agreement rate equals the disagreement rate, which indicates a low agreement rate [31].
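
To make the calculation concrete, the following sketch computes the agreement rate for one command and maps it to the bands quoted above. It assumes Fig. 3 shows the formula as published in [31], that is, AR(r) = P/(P − 1) · Σ(Pi/P)² − 1/(P − 1), with P and Pi used as counts as in the text. The function names and the sample proposals are illustrative and not taken from the study data.

```python
from collections import Counter


def agreement_rate(proposals):
    """Agreement rate AR(r) for a single command r.

    proposals: list of gesture labels, one per proposed gesture
    (a hypothetical input format; identical labels mean identical gestures).
    """
    total = len(proposals)  # P in the text
    if total < 2:
        raise ValueError("AR needs at least two proposals")
    # Each Counter value is Pi, the size of one subset of identical gestures.
    sum_sq = sum((size / total) ** 2 for size in Counter(proposals).values())
    return total / (total - 1) * sum_sq - 1 / (total - 1)


def agreement_level(ar):
    """Map an AR value to the qualitative bands quoted in the text."""
    if ar <= 0.100:
        return "low"
    if ar <= 0.300:
        return "moderate"
    if ar <= 0.500:
        return "high"
    return "very high"


# Made-up example: 12 proposals for one command, in groups of 6, 4 and 2.
proposals = ["swipe"] * 6 + ["tap"] * 4 + ["pinch"] * 2
ar = agreement_rate(proposals)
print(round(ar, 3), agreement_level(ar))  # 0.333 high
```

With this definition, AR is 1 when every participant proposes the same gesture and 0 when all proposals differ, which matches the stated range of 0 to 1.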

2.5 Subjective Rating of the Proposed Gestures

Although the user-defined method is effective in revealing users’ mental models of gesture interaction, it has some limitations [1]. User subjectivity may affect the accuracy of the experimental results. An effective way to overcome this disadvantage is to show users as many gestures as possible [32] and have them re-check which gesture is the most appropriate.

2.6 Methods

Based on the participants’ scores in experiment 1, two experts selected a high-group gesture set from the gestures whose scores were in the top 50% for each instruction, and a low-group gesture set from the gestures in the bottom 50% as the control group.
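
The split that precedes the experts’ choice can be sketched as follows. This is only an illustration under stated assumptions: each gesture is assumed to have a single mean score per instruction, and with an odd number of gestures the middle one is placed in the upper half. The final pick from each half was an expert judgment in the study and is not modeled here. The function name and the sample scores are made up.

```python
def split_by_score(scores):
    """Split the gestures proposed for one instruction into two candidate pools.

    scores: dict mapping gesture label -> mean subjective score (hypothetical).
    Returns (upper_half, lower_half), each sorted from best to worst.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Assumption: with an odd count, the middle gesture joins the upper half.
    cut = (len(ranked) + 1) // 2
    return ranked[:cut], ranked[cut:]


# Made-up scores for one instruction.
scores = {"snap fingers": 4.2, "cross hands": 3.1, "wave": 3.8, "fist": 2.5}
upper, lower = split_by_score(scores)
print(upper)  # ['snap fingers', 'wave']
print(lower)  # ['cross hands', 'fist']
```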

Twelve newly recruited students (male-to-female ratio 1:1) took part in the second experiment, which was set up in the same way as the first. The students were randomly divided into two groups, each with a 1:1 male-to-female ratio. The two groups did not perform the experiment in the same environment at the same time, and the participants were required not to communicate with each other about the experimental content during the experiment.

The action corresponding to each instruction was repeated three times. After each action, the participants were given unlimited time to understand the intention behind the command. Once they understood the instruction, they were asked to rate the usability of the gesture in terms of ease of learning, metaphor, memorability, subjective fatigue, and effort. Ten minutes after all the actions had been completed, the participants were asked to recall the action for each instruction, and the staff recorded their error rates.
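
How a recall error was counted is not specified. A minimal sketch, assuming an error is any instruction for which the recalled gesture differs from the taught one (a missing answer also counts as an error), is shown below. The function name, instruction names, and gesture labels are illustrative.

```python
def recall_error_rate(taught, recalled):
    """Fraction of instructions for which a participant recalled the wrong gesture.

    taught:   dict mapping instruction -> gesture shown to the participant
    recalled: dict mapping instruction -> gesture the participant reproduced
    Both formats are assumptions made for this sketch.
    """
    if not taught:
        raise ValueError("no instructions to score")
    errors = sum(1 for instr, gesture in taught.items()
                 if recalled.get(instr) != gesture)
    return errors / len(taught)


# Made-up example with three instructions, one of them recalled incorrectly.
taught = {"wake up": "snap fingers", "hide": "snap fingers", "zoom in": "spread hands"}
recalled = {"wake up": "snap fingers", "hide": "cross hands", "zoom in": "spread hands"}
print(recall_error_rate(taught, recalled))
```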

3 Results and Discussion

As Table 4 shows, the average scores of the high group are generally higher than those of the low group.

In the single-index scores for the “hide” menu command on the AR glasses, the “hands over X” gesture scored lower than the “snapping fingers” gesture. In the subsequent recall test, moreover, only 66.67% of the participants in the low group recalled the gesture correctly. “Snapping fingers” was therefore included in the final gesture set.

Table 4 also shows that, for the pair of opposite instructions “wake up” and “hide”, the high group used the snapping-fingers gesture for both, but participants scored it lower for “hide” than for “wake up”. Think-aloud feedback suggests that users prefer differentiated gestures.

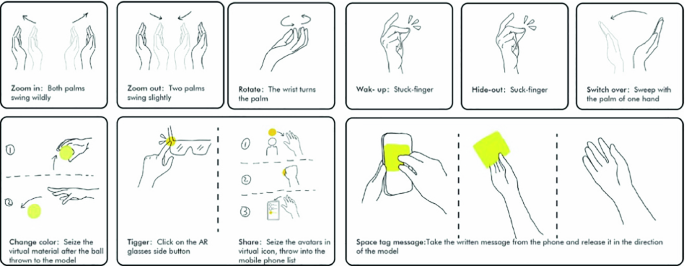

Finally, we conclude that all gestures in the high group can be included in the final gesture set, which is shown in Fig. 4. To further reduce experimental error, the effect of participants’ gender was analyzed with a one-way ANOVA, and no gender difference was found.
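
For readers unfamiliar with the test, the sketch below computes the one-way ANOVA F statistic from scratch for scores grouped by gender. It is an illustration only: the scores are made up, the function name is hypothetical, and the paper does not report which software or which dependent variable was used. Obtaining a p-value additionally requires the F distribution, which a statistics package such as SciPy (`scipy.stats.f_oneway`) provides.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for two or more groups of scores."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = mean([x for g in groups for x in g])
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variance; F is undefined")
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up mean usability scores for six female and six male participants.
female = [4, 5, 3, 4, 4, 5]
male = [4, 4, 3, 5, 4, 4]
print(one_way_anova_f(female, male))
```

A small F relative to the critical value for the given degrees of freedom is what a finding of “no gender difference” corresponds to.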

3.1 Mental Model Observations

The results of the user-defined experiments reveal the following characteristics of the users’ mental model:

- Users prefer gestures that are tightly tied to the real world.
- For a pair of opposite operation instructions, such as “on-off” and “zooming in and out”, users prefer those gestures with obvious differences.
- Users prefer to use dynamic gestures, and the interaction is not limited to the palm.
- In the face of complex scenes, users will choose multiple actions to complete the interaction.

4 Conclusion

Taking the 4S shop car-purchase scene as an example, this paper proposes the most basic and common set of interactive commands and gestures for the tour-guide scene. This was achieved by combining guessability, role-playing, and scene-based interaction. The results were verified with newly recruited participants using the guessability method. Combining the data from the two experiments with users’ think-aloud feedback, a mental model of users’ gesture interaction in the AR scene was proposed. The resulting gesture set fits the tour-guide scene, conforms to users’ cognition and usage habits, and offers some guidance for the design of 3D information interaction in tour-guide scenes.

References

Hou, W., Feng, G., Cheng, Y.: A fuzzy interaction scheme of mid-air gesture elicitation. J. Vis. Commun. Image Represent. 64, 102637 (2019)

Nair, D., Sankaran, P.: Color image dehazing using surround filter and dark channel prior. J. Vis. Commun. Image Represent. 50, 9–15 (2018)

Graham, M., Zook, M., Boulton, A.: Augmented reality in urban places: contested content and the duplicity of code. Trans. Inst. Br. Geogr. 38, 464–479 (2013)

Zhang, S., Hou, W., Wang, X.: Design and study of popular science knowledge learning method based on augmented reality virtual reality interaction. Packag. Eng. 20, 60–67 (2017)

Lea, R., Gibbs, S., Dara-Abrams, A., Eytchison, E.: Networking home entertainment devices with HAVi. Computer 33, 35–43 (2000)

Basari, Saito, K., Takahashi, M., Ito, K.: Measurement on simple vehicle antenna system using a geostationary satellite in Japan. In: 2010 7th International Symposium on Communication Systems, Networks & Digital Signal Processing (CSNDSP 2010), pp. 81–85 (2010)

Hancock, P., Billings, D., Schaefer, K., Chen, J., de Visser, E., Parasuraman, R.: A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 53, 517–527 (2011)

Atta, R., Ghanbari, M.: Low-memory requirement and efficient face recognition system based on DCT pyramid. IEEE Trans. Consum. Electron. 56, 1542–1548 (2010)

Ben-Abdennour, A., Lee, K.Y.: A decentralized controller design for a power plant using robust local controllers and functional mapping. IEEE Trans. Energy Convers. 11, 394–400 (1996)

Pramanik, R., Bag, S.: Shape decomposition-based handwritten compound character recognition for Bangla OCR. J. Vis. Commun. Image Represent. 50, 123–134 (2018)

Bai, C., Chen, J.-N., Huang, L., Kpalma, K., Chen, S.: Saliency-based multi-feature modeling for semantic image retrieval. J. Vis. Commun. Image Represent. 50, 199–204 (2018)

Han, J., et al.: Representing and retrieving video shots in human-centric brain imaging space. IEEE Trans. Image Process. 22, 2723–2736 (2013)

Goffman, E.: The Presentation of Self in Everyday Life. Doubleday, Oxford (1959)

Asheim, L.: Libr. Q.: Inf. Commun. Policy 56, 65–66 (1986)

Scoble, R., Israel, S.: Age of Context: Mobile, Sensors, Data and the Future of Privacy (2013)

Liang, K., Li, Y.: Interaction design flow based on user scenario. Packag. Eng. 39(16), 197–201 (2018)

Wei, W.: Research on digital exhibition design based on scene interaction. Design 17, 46–48 (2018)

Blackler, A., Popovic, V., Mahar, D.: Investigating users’ intuitive interaction with complex artefacts. Appl. Ergon. 41, 72–92 (2010)

Cooper, A., Reimann, R., Cronin, D.: About Face 3: The Essentials of Interaction Design. Wiley, Indiana (2007)

Xu, M., Li, M., Xu, W., Deng, Z., Yang, Y., Zhou, K.: Interactive mechanism modeling from multi-view images. ACM Trans. Graph. 35, 236:201–236:213 (2016)

Han, J., Zhang, D., Hu, X., Guo, L., Ren, J., Wu, F.: Background prior-based salient object detection via deep reconstruction residual. IEEE Trans. Circuits Syst. Video Technol. 25, 1309–1321 (2015)

Xu, M., Zhu, J., Lv, P., Zhou, B., Tappen, M.F., Ji, R.: Learning-based shadow recognition and removal from monochromatic natural images. IEEE Trans. Image Process. 26, 5811–5824 (2017)

Xu, M., Li, C., Lv, P., Lin, N., Hou, R., Zhou, B.: An efficient method of crowd aggregation computation in public areas. IEEE Trans. Circuits Syst. Video Technol. 28, 2814–2825 (2018)

Wobbrock, J.O., Aung, H.H., Rothrock, B., Myers, B.A.: Maximizing the guessability of symbolic input. In: CHI 2005 Extended Abstracts on Human Factors in Computing Systems, pp. 1869–1872. ACM, Portland (2005)

Wobbrock, J.O., Morris, M.R., Wilson, A.D.: User-defined gestures for surface computing. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 1083–1092. ACM, Boston (2009)

Ruiz, J., Li, Y., Lank, E.: User-defined motion gestures for mobile interaction. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 197–206. ACM, Vancouver (2011)

Silpasuwanchai, C., Ren, X.: Jump and shoot!: prioritizing primary and alternative body gestures for intense gameplay. In: Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems, pp. 951–954. ACM, Toronto (2014)

Leng, H.Y., Norowi, N.M., Jantan, A.H.: A user-defined gesture set for music interaction in immersive virtual environment. In: Proceedings of the 3rd International Conference on Human-Computer Interaction and User Experience in Indonesia, pp. 44–51. ACM, Jakarta (2017)

Anderson, S.: Heartbeat: A Guide to Emotional Interaction Design, Revised edn. (2015)

Chen, Z., et al.: User-defined gestures for gestural interaction: extending from hands to other body parts. Int. J. Hum.-Comput. Interact. 34, 238–250 (2018)

Vatavu, R.-D., Wobbrock, J.O.: Formalizing agreement analysis for elicitation studies: new measures, significance test, and toolkit. In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, pp. 1325–1334. ACM, Seoul (2015)

Dim, N.K., Silpasuwanchai, C., Sarcar, S., Ren, X.: Designing mid-air TV gestures for blind people using user- and choice-based elicitation approaches. In: Proceedings of the 2016 ACM Conference on Designing Interactive Systems, pp. 204–214. ACM, Brisbane (2016)

Copyright information

© 2020 Springer Nature Switzerland AG

Cite this paper

Wei, XL., Xi, R., Hou, Wj. (2020). User-Centric AR Sceneized Gesture Interaction Design. In: Chen, J.Y.C., Fragomeni, G. (eds) Virtual, Augmented and Mixed Reality. Design and Interaction. HCII 2020. Lecture Notes in Computer Science(), vol 12190. Springer, Cham. https://doi.org/10.1007/978-3-030-49695-1_24

DOI: https://doi.org/10.1007/978-3-030-49695-1_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-49694-4

Online ISBN: 978-3-030-49695-1

eBook Packages: Computer Science (R0)