Abstract

Speech is an important human capability, especially as a foundation for learning expression and communication. However, it is very difficult for children with hearing disorders to acquire this basic ability while they are young.

We see potential in using voice recognition in applications for portable devices and tablet terminals to support the speech training of children with hearing disorders from early childhood (preschool age). In this paper, we propose such an application and evaluate and discuss its practicality.

The application focuses on the enjoyment of a game and a sense of accomplishment so that children stay motivated and keep learning while using it. A tablet is used so that children can operate the system easily. The environment consists of OS: Windows 10, game engine: Unity, and voice analysis: Julius. Because the application includes voice recognition, it can be operated by speech. The voice recognition covers everything from simple vocalization to utterance accuracy: it recognizes whether the child is using their voice and whether the utterance is correct. In this way, the application can help children with hearing disorders acquire speech and reduce the effects of their disability in the future.


Keywords

- Speech training of children with hearing disorders

- Voice and speech training system

- Tablet terminal application

1 Introduction

In Japan, the widespread use of newborn hearing screening tests has led to early detection of hearing impairment and more opportunities for early treatment. However, it is well known in Japan that hearing-impaired children acquire less vocabulary than normal-hearing children and that their development lags behind their age from infancy. Because hearing-impaired children cannot readily learn speech, their ability to express affection to their families and others around them is affected and appropriate communication with others is hindered, which in turn affects their social development.

Speech guidance, which is necessary for communication using words and speech, is carried out at the kindergarten of the school for the deaf. However, such guidance requires skilled instructors, which is a problem. Another problem in educating hearing-impaired children is the difficulty of learning words: it is also well known that delays in vocabulary acquisition begin in childhood and that word misuse is noticeable. In addition, hearing disability affects not only language acquisition but also the development of other physical functions, the building of human relationships, and emotional development. With recent technological advances, research on hearing-impaired people using information technology, speech recognition, and speech synthesis has produced effective results [1, 2].

The goal of this research is to support the first steps of language acquisition and communication for hearing-impaired children by using the advantages of portable terminals for young children, so that restrictions on social activities due to disability can be reduced as much as possible.

This paper describes the details of the proposed application, together with evaluations and discussions of its practicality. The application focuses on the enjoyment of the game and a sense of accomplishment so that children stay motivated, enjoy playing, and can operate the application themselves. The game applications are built around two main checks: whether the child is producing voice, and whether the utterance is correct. Examples include the following.

Ex1) A rocket flies in response to the user's voice. The rocket's flight distance and direction vary with the user's voice volume and utterance.

Ex2) A character and the user's face appear on the display. The application asks the user to pronounce a certain vowel correctly. The on-screen character guides the user by showing how to move the mouth and what shape the mouth should take when pronouncing the vowel. When the user pronounces the vowel correctly, the sound is shown on the screen as a voice pattern.

Ex3) The user plays a character in a role-playing game and, depending on the sounds they pronounce, goes on an adventure to catch escaped animals.

The proposed system uses a tablet terminal so that young children can practice alone and so that basic pronunciation practice can be supported easily and continuously. This application can help children with hearing disorders to acquire speech.

2 Traditional Japanese Speech Training Methods

In the education of hearing-impaired children in Japan, it is important to teach spoken language, written characters, and sign language at the same time, and not only to increase vocabulary but also to promote the use of full sentences.

Language training using words and speech is carried out at the kindergarten of the school for the deaf. Speech training consists of practicing the mouth shapes used to pronounce Japanese vowel sounds and of placing fingers in the mouth to learn the number of fingers that corresponds to each sound. Many studies on hearing-impaired children have already used information technology, and they have found that information terminals (tablet terminals) are effective for language and knowledge acquisition by hearing-impaired children [3].

3 Proposed System

Delayed vocabulary acquisition up to school entry has a major impact on primary school education as well as on the development of communication.

For this reason, we propose a vocalization training system for younger hearing-impaired children that uses a camera-equipped tablet terminal together with image recognition and voice recognition. The aim of the proposed system is to check whether a hearing-impaired child can produce a sound accurately. Because the shape of the mouth is one of the important factors in producing an accurate sound, the system guides the child toward the correct mouth shape. Using the tablet's camera, the system displays the trainee's face so that the trainee can see whether “the correct sound has been produced” and “the correct mouth shape has been made for the pronunciation”. In addition, because preschoolers do not yet understand many words, we used voice recognition so that they can work toward correct pronunciation without much explanation. For the same reason, the system presents scores through cartoon animation rather than through many words, so that preschoolers can easily understand them.

4 Prototype Application

4.1 Navigation System for Opening the Mouth Shape

Implementation

The proposed application uses a face detection method supported by image recognition libraries to guide the user toward the correct mouth shape for each vowel. It uses OpenCV and Dlib to detect the shape of the mouth. The application shows the user's face captured by the camera and overlays the correct mouth shape on the user's mouth. The development environment included OS: Windows 10, IDE: Visual Studio 2017, programming language: C++, libraries: OpenCV and Dlib, and voice analysis: Julius.

During face detection, Dlib stores the coordinates of the facial landmark points in an array. The number of points, their order, and the facial part each point corresponds to are fixed in advance, so the application also knows beforehand which array positions hold the mouth coordinates needed by the proposed system. Because it is difficult to determine the mouth shape from those coordinates alone, the shape is determined by comparing the current coordinates of the mouth feature points with previously stored coordinates.
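Because the landmark layout is fixed, the mouth points can be picked out by index alone. The following minimal C++ sketch is not the authors' code: it assumes dlib's standard 68-point model (shape_predictor_68_face_landmarks.dat), in which indices 48 to 67 cover the mouth, and it uses a plain `Point` struct in place of `dlib::point` so that it compiles without dlib. The name `extractMouth` is hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Plain 2-D landmark. In the real application these values would be copied
// from dlib's full_object_detection::part(i) for each camera frame.
struct Point {
    double x;
    double y;
};

// Assumption: the 68-point iBUG 300-W layout used by dlib's standard model.
// Indices 48-59 trace the outer lip contour and 60-67 the inner contour.
constexpr std::size_t kNumLandmarks = 68;
constexpr std::size_t kMouthFirst = 48;
constexpr std::size_t kMouthLast = 67;
constexpr std::size_t kMouthCount = kMouthLast - kMouthFirst + 1;  // 20 points

// Copy the mouth landmarks out of the full landmark array. Since the
// array layout is known in advance, no search is needed.
std::array<Point, kMouthCount> extractMouth(const std::vector<Point>& face) {
    assert(face.size() == kNumLandmarks);
    std::array<Point, kMouthCount> mouth{};
    for (std::size_t i = 0; i < kMouthCount; ++i) {
        mouth[i] = face[kMouthFirst + i];
    }
    return mouth;
}
```

Only this 20-point slice then needs to be passed on to the mouth-shape comparison, which keeps the per-frame work small on a tablet.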

Consequently, the mouth shape is distinguished not by the coordinates themselves but by the amount of change in the coordinates. The system uses the coordinates recorded while the authors were producing the target vowel as the correct mouth shape. The application draws the user's mouth shape as a green line and the correct mouth shape as a red line. Users attain the correct mouth shape by changing the shape of their mouth until the green line overlaps the red line.
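The comparison by amount of change can be illustrated with a short sketch. Here the displacement recorded from a model speaker is added to the user's own baseline pose to place the red target line, and the overlap test is the mean distance between the green and red outlines. The closed-mouth baseline, the mean-distance metric, the 3-pixel tolerance, and all function names are illustrative assumptions, not details given in the paper.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point {
    double x;
    double y;
};

// Displacement of each mouth landmark from a stored baseline pose
// (hypothetically the closed mouth).
std::vector<Point> displacement(const std::vector<Point>& current,
                                const std::vector<Point>& baseline) {
    assert(current.size() == baseline.size());
    std::vector<Point> d(current.size());
    for (std::size_t i = 0; i < current.size(); ++i) {
        d[i] = {current[i].x - baseline[i].x, current[i].y - baseline[i].y};
    }
    return d;
}

// Red target line: the user's own baseline shifted by the reference
// displacement recorded while a model speaker produced the vowel.
std::vector<Point> targetOutline(const std::vector<Point>& userBaseline,
                                 const std::vector<Point>& refDisplacement) {
    assert(userBaseline.size() == refDisplacement.size());
    std::vector<Point> t(userBaseline.size());
    for (std::size_t i = 0; i < t.size(); ++i) {
        t[i] = {userBaseline[i].x + refDisplacement[i].x,
                userBaseline[i].y + refDisplacement[i].y};
    }
    return t;
}

// Mean Euclidean gap between corresponding points of two outlines.
double meanGap(const std::vector<Point>& a, const std::vector<Point>& b) {
    assert(a.size() == b.size() && !a.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        sum += std::hypot(a[i].x - b[i].x, a[i].y - b[i].y);
    }
    return sum / static_cast<double>(a.size());
}

// The green and red lines "overlap" when the mean gap is within tolerance.
bool shapesMatch(const std::vector<Point>& userNow,
                 const std::vector<Point>& target,
                 double tolerancePx = 3.0) {
    return meanGap(userNow, target) <= tolerancePx;
}
```

Because the target is built on the user's own baseline, the gap equals the difference between the user's displacement and the reference displacement, so the face's position in the frame cancels out. A fuller version would probably also normalize for face size, since a child sitting closer to the camera produces larger displacements.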

Figure 1 shows screen captures of the running application. Figure 1 (left) shows the mouth shape for the sound “A(a)” being indicated while the user's mouth is closed. Figure 1 (center) shows the mouth shape for “A(a)” being indicated while the user's mouth is shaped for the sound “I(i)”. Figure 1 (right) shows the mouth shape for “A(a)” being indicated while the user's mouth is shaped for “A(a)”. The red and green lines do not overlap in Fig. 1 (left) and (center), whereas they largely overlap in Fig. 1 (right).

Result

The application could display navigation for the mouth shape detected by Dlib, and users could correct their mouth shape toward the desired one by bringing it closer to the navigation line.

4.2 Flying Rocket

Implementation

The proposed system uses a speech recognition method to convey whether the pronounced sound is correct while using as few words as possible. Julius is used to judge the correctness of the pronunciation, and the movement of the cartoon animation changes accordingly. The development environment includes OS: Windows 10, game engine: Unity, programming language: C++, voice analysis: Julius.

Julius allows the set of words to be recognized to be edited and reports a confidence score for each recognized word. The application therefore determines the rocket's flight distance from the confidence of the target word. We defined four levels of flight distance: the higher the confidence, the closer the rocket flies to the moon. In this way, the correctness of the pronunciation is indicated without using words.
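One way to obtain that confidence from a separate program is to run Julius in module mode (the `-module` option), in which a client reads XML-like messages and each recognized word appears as a `WHYPO` element whose `CM` attribute holds its confidence between 0 and 1. The C++ sketch below assumes that message format, with one `WHYPO` element per line, and extracts the `CM` value for a target word; socket handling is omitted, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <sstream>
#include <string>

// Return the quoted value of attribute `name` in one tag line, if present.
// The leading space keeps e.g. "CM" from matching inside a longer name.
std::optional<std::string> attribute(const std::string& line,
                                     const std::string& name) {
    const std::string key = " " + name + "=\"";
    const std::size_t start = line.find(key);
    if (start == std::string::npos) return std::nullopt;
    const std::size_t valueStart = start + key.size();
    const std::size_t end = line.find('"', valueStart);
    if (end == std::string::npos) return std::nullopt;
    return line.substr(valueStart, end - valueStart);
}

// Scan a recognition message line by line and return the CM (confidence)
// of the first WHYPO element whose WORD equals `target`.
std::optional<double> confidenceFor(const std::string& message,
                                    const std::string& target) {
    std::istringstream in(message);
    std::string line;
    while (std::getline(in, line)) {
        if (line.find("<WHYPO") == std::string::npos) continue;
        const auto word = attribute(line, "WORD");
        if (!word || *word != target) continue;
        const auto cm = attribute(line, "CM");
        if (!cm) return std::nullopt;
        return std::stod(*cm);
    }
    return std::nullopt;
}
```

Returning an empty `std::optional` when the target word is absent lets the game treat "not recognized at all" separately from "recognized with low confidence".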

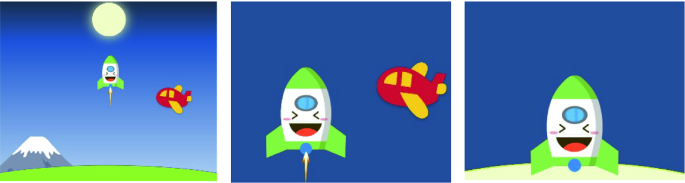

Figure 2 shows the start window. Voice input on this screen is recognized by Julius. When the target word is recognized, the animation of a flying rocket appears, as shown in Fig. 3 (left). The higher the Julius word confidence, the farther the rocket flies, and it lands on the moon when the word confidence is highest. Figure 3 (center) shows the result when the confidence is 90 to 94%, and Fig. 3 (right) shows the result when the confidence is 95 to 100%.
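The mapping from word confidence to the four flight levels might look like the sketch below. The paper gives only the 90 to 94% and 95 to 100% bands for the rocket, so the 85% boundary of the lowest nonzero level is an assumption borrowed from the capture game's first success band; `flightLevel` and `kLevelBounds` are hypothetical names.

```cpp
#include <array>
#include <cassert>

// Lower bounds of flight levels 1..3, as Julius confidences in [0, 1].
// 0.90 and 0.95 follow the bands given for Fig. 3; 0.85 is an assumption.
constexpr std::array<double, 3> kLevelBounds{0.85, 0.90, 0.95};

// Map a word confidence to a flight level from 0 to 3, where level 3 is
// the landing on the moon. Each bound that is reached adds one level.
int flightLevel(double confidence) {
    assert(confidence >= 0.0 && confidence <= 1.0);
    int level = 0;
    for (double bound : kLevelBounds) {
        if (confidence >= bound) ++level;
    }
    return level;
}
```

Keeping the bounds in one table means the bands could be retuned for a particular child by editing a single constant.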

Result

Using Julius, pronunciation could be practiced and evaluated objectively. In addition, combining the flying-rocket animation with the Julius word confidence made it possible to present the pronunciation evaluation with a minimum of words.

4.3 The Game of Capturing Animals

Implementation

We propose a game in which users clear a stage once they produce the correct pronunciation. By adding entertainment elements to the application, we aimed to have young children play repeatedly in pursuit of better results. In this game, the user must capture the animals of the Japanese zodiac. The development environment includes OS: Windows 10, game engine: Unity, programming language: C++, voice analysis: Julius.

As with the rocket application, the confidence of the target word determines whether the animal can be captured. We expect the entertainment factor to encourage repeated play.

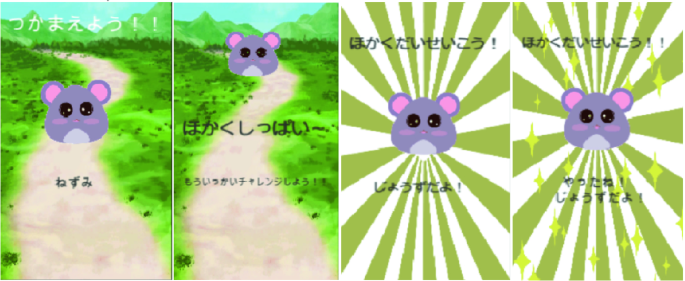

Figure 4 shows the start window. The voice input at this screen is recognized by Julius. Figure 4 (far left) shows the failure screen for when the confidence of the word is 0 to 84%. Figure 4 (center-left) shows the success screen for when the confidence is 85 to 89%. Figure 4 (center-right) shows the success screen for when the confidence is 90 to 94%. Figure 4 (far right) shows a success screen when the confidence is 95 to 100%.
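The stage logic of the capture game can be sketched as follows, using the confidence bands listed for Fig. 4 (failure below 85%, then three success screens). The class, its members, and the use of romanized animal names as target words are hypothetical; the sketch only shows how a failed attempt keeps the child on the same stage to retry, while a success advances to the next animal.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Result screens of Fig. 4, selected by Julius word confidence.
enum class Outcome { Fail, Success85, Success90, Success95 };

Outcome judge(double confidence) {
    if (confidence >= 0.95) return Outcome::Success95;
    if (confidence >= 0.90) return Outcome::Success90;
    if (confidence >= 0.85) return Outcome::Success85;
    return Outcome::Fail;
}

// Hypothetical stage sequence: one stage per zodiac animal to capture.
class CaptureGame {
public:
    explicit CaptureGame(std::vector<std::string> animals)
        : animals_(std::move(animals)) {}

    bool finished() const { return stage_ >= animals_.size(); }

    const std::string& currentAnimal() const {
        assert(!finished());
        return animals_[stage_];
    }

    std::size_t attempts() const { return attempts_; }

    // Register one utterance. On failure the child retries the same stage;
    // on any success the game moves on to the next animal.
    Outcome attempt(double confidence) {
        assert(!finished());
        ++attempts_;
        const Outcome o = judge(confidence);
        if (o != Outcome::Fail) ++stage_;
        return o;
    }

private:
    std::vector<std::string> animals_;
    std::size_t stage_ = 0;
    std::size_t attempts_ = 0;
};
```

For example, a game constructed with the animals "nezumi" (rat) and "ushi" (ox) stays on "nezumi" after `attempt(0.60)` returns `Outcome::Fail`, and moves to "ushi" after `attempt(0.87)` returns `Outcome::Success85`.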

Result

We believe that using the Julius word confidence made it possible to create a game that children will play repeatedly, thanks to the entertainment value of catching animals. However, some children may not understand the game, and more guidance would be needed in such cases.

5 Discussion

We have proposed a voice and speech training system for hearing-impaired children that uses a camera-equipped tablet terminal with image recognition and voice recognition. The prototype applications we created for practice performed the intended operations successfully. Although we could not yet have young hearing-impaired children try the applications, we received positive feedback from people who train such children.

According to feedback from teachers at a school for the deaf (who were themselves deaf), the game gave users a strong sense of accomplishment because they could control it with their own voice and retry the activity after failing. The teachers felt that it made learning speech and words more enjoyable and that it addresses the need for a way to correct speech mistakes: although it is possible to speak without the correct mouth shape, a way to teach the correct shape is still needed.

From this feedback, we believe that children can learn through this game, and that it would fill the need for a correction method when the speech is incorrect.

A speech-language-hearing therapist advised that judging and correcting speech is important for attaining correct speech. However, if the purpose of correct speech is ease of communication, adding information other than speech, such as sign language, written characters, mouth shapes, and facial expressions, will make communication easier. It is valuable for children to experience communicating with others through spoken language or other means, even when this application judges their speech to be incorrect. The application should show how to correct pronunciation when it is wrong, but it is also important to teach spoken language, written characters, and sign language at the same time. It would be worthwhile if the application played a part in that.

Therefore, we believe the system can be useful for pronunciation practice by younger children.

6 Conclusion

The proposed prototype applications can be used by hearing-impaired children to practice pronunciation through image recognition and voice recognition technology. The prototype applications have succeeded in operating as intended.

As future work, younger hearing-impaired children need to try the applications that were created, to verify whether they trigger vocabulary acquisition. It is also necessary to develop a system with which young children can be trained not only in speech but also in sign language and written language at the same time.

References

1. Ogawa, N., Hiraga, R.: A voice game to improve speech by deaf and hard of hearing persons. IPSJ SIG Technical Reports 2019-AAC-9(15), pp. 1–4 (2019)

2. Higashinoi, Y., et al.: Evaluation of the hearing environment for hearing-impaired people using an automatic voice recognition software program and the CI-2004 test battery. Audiol. Jpn 61(3), 222–231 (2018)

3. Shibata, K., Ayuha, D., Hattori, A.: Tablet-media for children with hearing-hard: language speaking and knowledge acquisition. IPSJ SIG Technical Reports 2013-DPS-156(13), pp. 1–6 (2013)


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Takagi, H. et al. (2020). Voice and Speech Training System for the Hearing-Impaired Children Using Tablet Terminal. In: Stephanidis, C., Antona, M. (eds) HCI International 2020 - Posters. HCII 2020. Communications in Computer and Information Science, vol 1226. Springer, Cham. https://doi.org/10.1007/978-3-030-50732-9_17


DOI: https://doi.org/10.1007/978-3-030-50732-9_17


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50731-2

Online ISBN: 978-3-030-50732-9

eBook Packages: Computer Science, Computer Science (R0)