Abstract

The Vision Meets Drone Object Detection in Image Challenge (VisDrone-DET 2020) is the third annual object detector benchmarking activity. Compared with the previous VisDrone-DET 2018 and VisDrone-DET 2019 challenges, many submitted object detectors exceed the recent state-of-the-art detectors. Based on the 29 selected robust detection methods, we discuss the experimental results comprehensively; they show the effectiveness of ensemble learning and data augmentation for object detection in drone-captured images. The full challenge results are publicly available at http://aiskyeye.com/leaderboard/.

References

Bochkovskiy, A., Wang, C., Liao, H.M.: Yolov4: optimal speed and accuracy of object detection. CoRR abs/2004.10934 (2020)

Bodla, N., Singh, B., Chellappa, R., Davis, L.S.: Soft-NMS - improving object detection with one line of code. In: ICCV, pp. 5562–5570 (2017)

Cai, Z., Vasconcelos, N.: Cascade R-CNN: delving into high quality object detection. In: CVPR, pp. 6154–6162 (2018)

Cao, Y., Xu, J., Lin, S., Wei, F., Hu, H.: GCNet: non-local networks meet squeeze-excitation networks and beyond. In: ICCVW, pp. 1971–1980 (2019)

Chen, C., et al.: RRNet: a hybrid detector for object detection in drone-captured images. In: ICCV, pp. 100–108 (2019)

Chen, H., Shrivastava, A.: Group ensemble: learning an ensemble of convnets in a single convnet. CoRR abs/2007.00649 (2020)

Dai, J., et al.: Deformable convolutional networks. In: ICCV, pp. 764–773 (2017)

Du, D., et al.: VisDrone-DET2019: the vision meets drone object detection in image challenge results. In: ICCVW, pp. 213–226 (2019)

Duan, K., Bai, S., Xie, L., Qi, H., Huang, Q., Tian, Q.: CenterNet: keypoint triplets for object detection. In: ICCV, pp. 6568–6577 (2019)

Dwibedi, D., Misra, I., Hebert, M.: Cut, paste and learn: surprisingly easy synthesis for instance detection. In: ICCV, pp. 1310–1319 (2017)

Gao, J., Wang, J., Dai, S., Li, L., Nevatia, R.: NOTE-RCNN: noise tolerant ensemble RCNN for semi-supervised object detection. In: ICCV, pp. 9507–9516 (2019)

Gao, S., Cheng, M., Zhao, K., Zhang, X., Yang, M., Torr, P.H.S.: Res2Net: a new multi-scale backbone architecture. TPAMI (2019)

Guo, W., Li, W., Gong, W., Cui, J.: Extended feature pyramid network with adaptive scale training strategy and anchors for object detection in aerial images. Remote. Sens. 12(5), 784 (2020)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR, pp. 770–778 (2016)

Howard, A., et al.: Searching for MobileNetV3. In: ICCV, pp. 1314–1324 (2019)

Huang, J., et al.: Speed/accuracy trade-offs for modern convolutional object detectors. In: CVPR, pp. 3296–3297 (2017)

Law, H., Deng, J.: CornerNet: detecting objects as paired keypoints. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision – ECCV 2018. LNCS, vol. 11218, pp. 765–781. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01264-9_45

Li, X., et al.: Generalized focal loss: learning qualified and distributed bounding boxes for dense object detection. CoRR abs/2006.04388 (2020)

Li, Z., Peng, C., Yu, G., Zhang, X., Deng, Y., Sun, J.: Light-head R-CNN: in defense of two-stage object detector. CoRR abs/1711.07264 (2017)

Lin, T., Dollár, P., Girshick, R.B., He, K., Hariharan, B., Belongie, S.J.: Feature pyramid networks for object detection. In: CVPR, pp. 936–944 (2017)

Lin, T., Goyal, P., Girshick, R.B., He, K., Dollár, P.: Focal loss for dense object detection. In: ICCV, pp. 2999–3007 (2017)

Lin, T.-Y., et al.: Microsoft COCO: common objects in context. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 740–755. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_48

Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Liu, Y., et al.: CBNet: a novel composite backbone network architecture for object detection. In: AAAI, pp. 11653–11660 (2020)

Liu, Z., Gao, G., Sun, L., Fang, Z.: HRDNet: high-resolution detection network for small objects. CoRR abs/2006.07607 (2020)

Pang, J., Chen, K., Shi, J., Feng, H., Ouyang, W., Lin, D.: Libra R-CNN: towards balanced learning for object detection. In: CVPR, pp. 821–830 (2019)

Qiao, S., Chen, L., Yuille, A.L.: Detectors: detecting objects with recursive feature pyramid and switchable atrous convolution. CoRR abs/2006.02334 (2020)

Redmon, J., Farhadi, A.: Yolov3: an incremental improvement. CoRR abs/1804.02767 (2018)

Ren, S., He, K., Girshick, R.B., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R. (eds.) NeurIPS, pp. 91–99 (2015)

Rezatofighi, H., Tsoi, N., Gwak, J., Sadeghian, A., Reid, I.D., Savarese, S.: Generalized intersection over union: a metric and a loss for bounding box regression. In: CVPR, pp. 658–666 (2019)

Shao, F., Wang, X., Meng, F., Zhu, J., Wang, D., Dai, J.: Improved faster R-CNN traffic sign detection based on a second region of interest and highly possible regions proposal network. Sensors 19(10), 2288 (2019)

Solovyev, R., Wang, W.: Weighted boxes fusion: ensembling boxes for object detection models. CoRR abs/1910.13302 (2019)

Sun, K., Xiao, B., Liu, D., Wang, J.: Deep high-resolution representation learning for human pose estimation. In: CVPR, pp. 5693–5703 (2019)

Sun, K., et al.: High-resolution representations for labeling pixels and regions. CoRR abs/1904.04514 (2019)

Tan, M., Pang, R., Le, Q.V.: EfficientDet: scalable and efficient object detection. In: CVPR, pp. 10778–10787 (2020)

Tarvainen, A., Valpola, H.: Mean teachers are better role models: weight-averaged consistency targets improve semi-supervised deep learning results. In: NeurIPS, pp. 1195–1204 (2017)

Wang, J., Chen, K., Xu, R., Liu, Z., Loy, C.C., Lin, D.: CARAFE: content-aware reassembly of features. In: ICCV, pp. 3007–3016 (2019)

Wang, J., et al.: Deep high-resolution representation learning for visual recognition. TPAMI (2020)

Wu, Y., et al.: Rethinking classification and localization in R-CNN. CoRR abs/1904.06493 (2019)

Xu, J., Wang, W., Wang, H., Guo, J.: Multi-model ensemble with rich spatial information for object detection. PR 99, 107098 (2020)

Yun, S., Han, D., Chun, S., Oh, S.J., Yoo, Y., Choe, J.: CutMix: regularization strategy to train strong classifiers with localizable features. In: ICCV, pp. 6022–6031 (2019)

Zhang, S., Chi, C., Yao, Y., Lei, Z., Li, S.Z.: Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In: CVPR, pp. 9756–9765 (2020)

Zhong, Z., Zheng, L., Kang, G., Li, S., Yang, Y.: Random erasing data augmentation. In: AAAI, pp. 13001–13008 (2020)

Zhou, J., Vong, C., Liu, Q., Wang, Z.: Scale adaptive image cropping for UAV object detection. Neurocomputing 366, 305–313 (2019)

Zhou, X., Wang, D., Krähenbühl, P.: Objects as points. CoRR abs/1904.07850 (2019)

Zhu, P., Wen, L., Du, D., Bian, X., Hu, Q., Ling, H.: Vision meets drones: past, present and future. CoRR abs/2001.06303 (2020)

Zhu, P., et al.: VisDrone-DET2018: the vision meets drone object detection in image challenge results. In: Leal-Taixé, L., Roth, S. (eds.) ECCV 2018. LNCS, vol. 11133, pp. 437–468. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-11021-5_27

Zlocha, M., Dou, Q., Glocker, B.: Improving RetinaNet for CT lesion detection with dense masks from weak RECIST labels. In: Shen, D., et al. (eds.) MICCAI 2019. LNCS, vol. 11769, pp. 402–410. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-32226-7_45

Zoph, B., Cubuk, E.D., Ghiasi, G., Lin, T., Shlens, J., Le, Q.V.: Learning data augmentation strategies for object detection. CoRR abs/1906.11172 (2019)

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61876127 and Grant 61732011, in part by Natural Science Foundation of Tianjin under Grant 17JCZDJC30800.

A Submitted Detectors

In this appendix, we provide a short summary of all algorithms that were considered in the VisDrone-DET2020 Challenge.

A.1 Drone Pyramid Network V3 (DPNetV3)

Heqian Qiu, Zichen Song, Minjian Zhang, Mingyu Liu, Taijin Zhao, Fanman Meng, Hongliang Li hqqiu@std.uestc.edu.cn, szc.uestc@gmail.com, jamiezhang722@outlook.com, myl8562@163.com, zhtjww@std.uestc.edu.cn, fmmeng@uestc.edu.cn, hlli@uestc.edu.cn

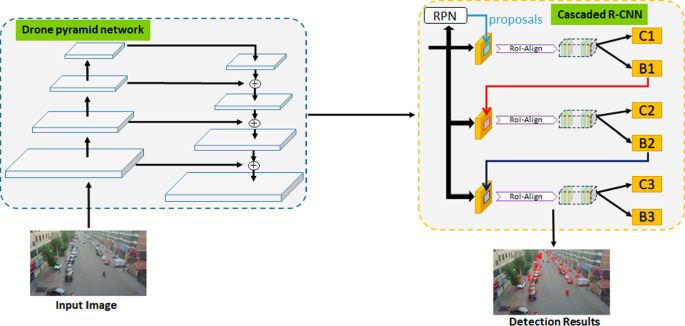

DPNetV3 is an ensemble model for object detection (see Fig. 2). First, it adopts HRNet-W40 [33], pre-trained on the ImageNet dataset, as a backbone network. HRNet starts from a high-resolution subnetwork as the first stage, gradually adds high-to-low resolution subnetworks one by one to form more stages, and connects the multi-resolution subnetworks in parallel. In addition, we use Res2Net [12] as a second backbone network. To make features more robust in complex scenes, we introduce the Balanced Feature Pyramid Network [26] with CARAFE (Content-Aware ReAssembly of FEatures) [37] and Deformable Convolution [7] into these backbone networks. Furthermore, we use the Cascade R-CNN paradigm [3] to progressively refine detection boxes for accurate object localization. We ensemble the resulting models using the weighted boxes fusion method.
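The summary does not detail the fusion step. As a rough illustration only, a simplified single-class version of weighted boxes fusion [32] might look like the sketch below. The function names, the pooled `(box, score)` input format, and the IoU threshold of 0.55 are assumptions made for this example, not the DPNetV3 settings.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse_boxes(detections, num_models, iou_thr=0.55):
    """Fuse one class of detections pooled from several models.

    detections: list of (box, score) pairs, box = (x1, y1, x2, y2).
    Returns a list of (fused_box, fused_score) pairs.
    """
    clusters = []  # each cluster keeps its members and the current fused box
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_iou = None, iou_thr
        for cluster in clusters:
            overlap = iou(cluster["box"], box)
            if overlap > best_iou:
                best, best_iou = cluster, overlap
        if best is None:
            clusters.append({"members": [(box, score)], "box": box})
            continue
        best["members"].append((box, score))
        total = sum(s for _, s in best["members"])
        # Fused coordinates are the confidence-weighted mean of the members.
        best["box"] = tuple(
            sum(b[i] * s for b, s in best["members"]) / total for i in range(4)
        )
    fused = []
    for cluster in clusters:
        n = len(cluster["members"])
        mean_score = sum(s for _, s in cluster["members"]) / n
        # Down-weight clusters that only a few of the models agree on.
        fused.append((cluster["box"], mean_score * min(n, num_models) / num_models))
    return fused
```

Unlike NMS, which keeps a single box per cluster, this scheme averages all overlapping boxes, so every model contributes to the final coordinates.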

A.2 Using Split, Mosaic and Paster Modules for Detecting Aerial Images (SMPNet)

Chengzhen Duan, Zhiwei Wei {18S151541, 19S051024}@stu.hit.edu.cn

To improve the accuracy of aerial image detection, we propose an adaptive split method, a mosaic data enhancement method and a resampling enhancement method. The adaptive split method adjusts the absolute area of each split according to the average target size in the split, so that the detector can focus on a narrower range of target scales that is conducive to detection. After that, we calculate the scaling factor required by the targets in each split and scale the split proportionally. Then we cut the four splits and splice them into mosaics [1]. To alleviate class imbalance, we use panoramic segmentation to build a target pool, and then paste appropriate targets from the pool into the training samples. Unlike the previous method of pasting the whole GT box [5], we paste only the target. We run inference with multiple models and fuse their detection results; the models are Cascade-RCNN [3] + HRNet [33], ATSS [42] + HRNet [33], and ATSS [42] + Res2Net [12] + generalized focal loss [18].

A.3 DeepBlueNet (DBNet)

Zhipeng Luo, Sai Wang, Zhenyu Xu, Yuehan Yao, Bin Dong {luozp, wangs, xuzy, yaoyh, dongb}@deepblueai.com

DBNet adopts Cascade_x101_64-4d [3] as the pipeline and adds a global context block [4] to improve the feature extractor's ability to capture information from global features. Meanwhile, we use DCN [7] to reduce the effect of feature misalignment and to provide adaptive part localization for objects with different shapes. For the R-CNN part, we use Double-Head RCNN [39]. Object classification is thus enhanced by adding a classification task in the conv-head, as it is complementary to the classification in the fc-head. In addition, bounding box regression provides auxiliary supervision for the fc-head. We ensemble multi-scale testing results as our final result.

A.4 Cascade R-CNN on Drone-Captured Scenarios (DroneEye2020)

Sungtae Moon, Joochan Lee, Jungyeop Yoo, Jonghwan Ko, Yongwoo Kim stmoon@kari.re.kr, {maincold2, soso030, jhko}@skku.edu, yongwoo.kim@smu.ac.kr

DroneEye2020 builds on Cascade R-CNN [3]. We divide each original training image into a \(2\times 2\) grid of patches and horizontally flip all patches. If a patch contains no objects, we exclude it from training. We use Cascade R-CNN [3] with a ResNet-50 backbone pretrained on the COCO dataset. Notably, we use Recursive Feature Pyramid (RFP) for the neck, and additionally use Switchable Atrous Convolution (SAC) for better performance [27].
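The patch preparation described above can be sketched as follows. This is a minimal illustration that works on box coordinates only; the helper names are hypothetical, and clipping boxes that straddle a patch border is an assumption, since the summary does not say how such boxes are handled.

```python
def split_2x2(width, height, boxes):
    """Split an image of the given size into a 2x2 grid of patches.

    boxes: list of (x1, y1, x2, y2) in image coordinates.
    Returns [(origin, size, local_boxes)] for patches that contain objects;
    empty patches are skipped, as in the description above.
    """
    pw, ph = width // 2, height // 2
    patches = []
    for row in range(2):
        for col in range(2):
            ox, oy = col * pw, row * ph
            local = []
            for x1, y1, x2, y2 in boxes:
                # Assumption: clip each box to the patch, keep it if any area remains.
                cx1, cy1 = max(x1, ox), max(y1, oy)
                cx2, cy2 = min(x2, ox + pw), min(y2, oy + ph)
                if cx2 > cx1 and cy2 > cy1:
                    local.append((cx1 - ox, cy1 - oy, cx2 - ox, cy2 - oy))
            if local:
                patches.append(((ox, oy), (pw, ph), local))
    return patches


def hflip_boxes(boxes, patch_width):
    """Mirror boxes horizontally inside a patch of the given width."""
    return [(patch_width - x2, y1, patch_width - x1, y2) for x1, y1, x2, y2 in boxes]
```

Each returned patch and its flipped copy would then be used as a separate training sample.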

A.5 Tricks are All yoU Need (TAUN)

Jingkai Zhou, Weida Qin, Zhongjie Fan, Shuqin Huang, Qiong Liu, Ming-Hsuan Yang fs.jingkaizhou@gmail.com

TAUN is based on the cascade DetectoRS [3, 27]. The backbone is HRNet-40 [38], and the neck is the original HRFPN neck. ATSS [42] is used as the assigner in the RPN [29]. We use multi-scale image crops (rather than SAIC [44], to save time and memory) to augment the training and testing data, and use mean teacher [36] to train the model. At test time, we use ratio, outside, and scale filters to remove outlier bounding boxes. The thresholds of those filters are computed from the training dataset.
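The summary does not define the three filters, so the sketch below is only one plausible reading: the ratio filter bounds the aspect ratio, the scale filter bounds the box area, and the outside filter rejects boxes that leave the image. Taking the thresholds as the minimum and maximum seen in the training boxes is likewise an assumption, and all names are hypothetical.

```python
def fit_thresholds(train_boxes):
    """Derive filter ranges from training boxes given as (x1, y1, x2, y2)."""
    ratios = [(x2 - x1) / (y2 - y1) for x1, y1, x2, y2 in train_boxes]
    areas = [(x2 - x1) * (y2 - y1) for x1, y1, x2, y2 in train_boxes]
    return {"ratio": (min(ratios), max(ratios)), "area": (min(areas), max(areas))}


def post_filter(detections, thresholds, img_w, img_h):
    """Keep detections (x1, y1, x2, y2, score) that pass all three filters."""
    kept = []
    for x1, y1, x2, y2, score in detections:
        w, h = x2 - x1, y2 - y1
        if w <= 0 or h <= 0:
            continue  # degenerate box
        lo, hi = thresholds["ratio"]
        if not lo <= w / h <= hi:
            continue  # ratio filter
        lo, hi = thresholds["area"]
        if not lo <= w * h <= hi:
            continue  # scale filter
        if x1 < 0 or y1 < 0 or x2 > img_w or y2 > img_h:
            continue  # outside filter
        kept.append((x1, y1, x2, y2, score))
    return kept
```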

A.6 Cascade RCNN with DCN (CDNet)

Shuai Li, Yang Xiao, Zhiguo Cao {shuai_li1997, yang_xiao, zgcao}@hust.edu.cn

CDNet is based on Cascade RCNN [3] with ResNeXt101. Moreover, we add deformable convolutional networks [7] for better performance. To reduce GPU memory usage and account for small objects, we split the training images into sub-images of size \(416\times 416\) and train the network at input sizes between 416 and \(416\times 2\). In the testing phase, we use Soft-NMS and multi-scale testing to achieve better accuracy.
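For readers unfamiliar with Soft-NMS [2], the following is a minimal sketch of its Gaussian variant, which decays the scores of overlapping boxes instead of discarding them. The values of `sigma` and `score_thr` are illustrative assumptions and are not taken from the CDNet configuration.

```python
import math


def iou(a, b):
    """IoU of two boxes whose first four entries are (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(detections, sigma=0.5, score_thr=0.001):
    """Gaussian Soft-NMS over detections given as (x1, y1, x2, y2, score)."""
    remaining = [list(d) for d in detections]
    kept = []
    while remaining:
        # Take the highest-scoring remaining box.
        best = max(range(len(remaining)), key=lambda i: remaining[i][4])
        current = remaining.pop(best)
        kept.append(tuple(current))
        survivors = []
        for det in remaining:
            # Decay the score smoothly with the overlap instead of zeroing it.
            det[4] *= math.exp(-(iou(current, det) ** 2) / sigma)
            if det[4] >= score_thr:
                survivors.append(det)
        remaining = survivors
    return kept
```

In dense drone scenes, heavily overlapping objects are common, which is why a soft decay tends to preserve more true positives than hard suppression.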

A.7 Cascade Network with Test Time Self-supervised Adaptation (CascadeAdapt)

Weiping Yu, Chen Chen wyu4@unc.edu, chen.chen@uncc.edu

CascadeAdapt is improved from Cascade R-CNN [3] with a ResNet backbone and deformable convolutions. We adopt several augmentation methods, such as the mosaic augmentation in YOLOv4 [1]. We use double heads instead of the traditional box head to output the detection results. We use weighted box fusion to ensemble several models and obtain predictions on the test-challenge set. After that, we obtain pseudo labels for the test-challenge set by setting a threshold, and then fine-tune the model on the pseudo labels for 2 epochs to remove a large number of false detections. The performance can be further improved by using other tricks such as GN, test-time augmentation, label smoothing and GIoU loss.
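The pseudo-labelling step can be pictured with the short sketch below. It assumes the threshold is applied to the confidence score of the fused predictions; the value 0.5, the data layout, and the function name are hypothetical.

```python
def select_pseudo_labels(predictions, score_thr=0.5):
    """Turn confident predictions into pseudo ground truth.

    predictions: {image_id: [(box, label, score), ...]}.
    Returns {image_id: [(box, label), ...]}, keeping only images that
    retain at least one confident detection.
    """
    pseudo = {}
    for image_id, dets in predictions.items():
        confident = [(box, label) for box, label, score in dets if score >= score_thr]
        if confident:
            pseudo[image_id] = confident
    return pseudo
```

The resulting dictionary would then serve as the annotation set for the short fine-tuning run.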

A.8 Enhanced Cascade R-CNN for Drone (ECascade R-CNN)

Wenxiang Lin, Yan Ding, Qizhang Lin {eutenacity, dingyan, 3120190071}@bit.edu.cn

ECascade R-CNN is an ensembling method based on the work in [32]. We additionally train detectors with HRNetv2 [34], ResNet50 [14] and ResNet101 [14] backbones. For convenience, we call the additional detectors HRDet, Res50Det and Res101Det, and call the first detector RXDet. Finally, we train two detectors (i.e., RXDet and HRDet) with the trick for class variance and four more detectors (RXDet, HRDet, Res50Det and Res101Det) without it. The final result is the output of the ensemble of all six detectors.

A.9 Cascade R-CNN with High-Resolution Network and Enhanced Feature Pyramid (HR-Cascade++)

Hansheng Chen, Lu Xiong, Dong Yin, Yuyao Huang, Wei Tian {1552083, xiong_lu, tjyd, huangyuyao}@tongji.edu.cn, tian-w@hotmail.com

HR-Cascade++ is based on the multi-stage detection architecture Cascade R-CNN [3], and is tuned specifically for dense small object detection. As drone images include many small objects, we seek to obtain high-resolution features and improve the spatial accuracy of the bounding boxes. We adopt HRNet-W40 [38] as the backbone, which maintains high-resolution representations (1/4 of the original size) through the whole process. The feature pyramid network is enhanced with an additional upsampled high-resolution level (1/2 of the original image). This enables smaller and denser anchor generation without resizing the original image. We adopt Quality Focal Loss [18] for R-CNN classification. Multi-scale and flip augmentations are applied in both training and testing. Photometric distortion is also used for training image augmentation. To reduce GPU memory consumption, the training images are cropped after resizing. If a ground truth bounding box is truncated during cropping, it is marked as an ignore region when the truncation ratio is greater than \(50\%\). Soft-NMS is used for post-processing.
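The truncation rule for cropped training images can be sketched as follows. The sketch assumes that the truncation ratio is the fraction of a box's area that falls outside the crop, which the summary does not state explicitly; the function name is hypothetical.

```python
def crop_annotations(boxes, crop, max_truncation=0.5):
    """Split ground-truth boxes into kept boxes and ignore regions for a crop.

    boxes, crop: (x1, y1, x2, y2) in image coordinates.
    Returns (kept, ignored), both expressed in crop coordinates.
    """
    cx1, cy1, cx2, cy2 = crop
    kept, ignored = [], []
    for x1, y1, x2, y2 in boxes:
        ix1, iy1 = max(x1, cx1), max(y1, cy1)
        ix2, iy2 = min(x2, cx2), min(y2, cy2)
        visible = max(0, ix2 - ix1) * max(0, iy2 - iy1)
        if visible == 0:
            continue  # box lies entirely outside the crop
        # Assumption: truncation ratio = share of the box area cut away.
        truncation = 1 - visible / ((x2 - x1) * (y2 - y1))
        clipped = (ix1 - cx1, iy1 - cy1, ix2 - cx1, iy2 - cy1)
        if truncation > max_truncation:
            ignored.append(clipped)
        else:
            kept.append(clipped)
    return kept, ignored
```

Marking heavily truncated boxes as ignore regions avoids penalizing the detector for either detecting or missing an object that is mostly cut off.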

A.10 CenterNet with Feature Pyramid and Adaptive Feature Selection (FPAFS-CenterNet)

Zehui Gong zehuigong@foxmail.com

FPAFS-CenterNet is based on CenterNet [45] because of its simplicity and high efficiency for object detection. CenterNet represents objects by their center locations. Other object properties, e.g., object size, are regressed directly from the image features at the center locations. To achieve better performance, we apply several modifications to CenterNet concerning the data augmentation, the backbone network, the feature fusion neck, and the detection loss. To extract more powerful features from the input image, we employ CBNet [24] as our backbone network. We use BiFPN [35] as the feature fusion neck to enhance the information flow between the highly semantic and the spatially finer features. We use the GIoU loss [30], which is invariant to object scale.
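For reference, the generalized IoU of [30] subtracts from the IoU the share of the smallest enclosing box that is not covered by the union, and the loss is one minus that value. A minimal sketch for axis-aligned boxes with positive area (function names are chosen for this example):

```python
def giou(a, b):
    """Generalized IoU of two boxes (x1, y1, x2, y2) with positive area."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    # Smallest axis-aligned box enclosing both inputs.
    ex1, ey1 = min(a[0], b[0]), min(a[1], b[1])
    ex2, ey2 = max(a[2], b[2]), max(a[3], b[3])
    enclose = (ex2 - ex1) * (ey2 - ey1)
    return inter / union - (enclose - union) / enclose


def giou_loss(pred, target):
    """Loss in [0, 2]; zero only when the two boxes coincide."""
    return 1.0 - giou(pred, target)
```

Because every term is a ratio of areas, scaling both boxes by the same factor leaves the value unchanged, so small and large objects contribute comparably to the loss.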

A.11 CenterNet with Multi-Scale Cropping (MSC-CenterNet)

Xuanxin Liu, Yu Sun liuxuanxin@bjfu.edu.cn, sunyv@buaa.edu.cn

MSC-CenterNet employs CenterNet [45] with Hourglass-104 as the base network and does not use pre-trained models. Considering the large scale range of intra-class and inter-class objects, the model is trained with multi-scale cropping. The input resolution is \(1024\times 1024\), and the input region is cropped from the image with a scale randomly chosen from (0.9, 1.1, 1.3, 1.5, 1.8).

A.12 CenterNet for Small Object Detection (CenterNet+)

Qiu Shi qiushi_0425@foxmail.com

CenterNet+ is CenterNet [45] with the hourglass feature extractor where there are three hourglass blocks. In order to improve the performance of our detector for small samples, we change the stride from 7 to 2 in each hourglass block. Besides, we adopt multi-scale training and multi-scale test to improve the detector performance.

A.13 Aerial Surveillance Network (ASNet)

Michael Schleiss michael.schleiss@fkie.fraunhofer.de

ASNet adopts the ATSS detector [42] based on the implementation from mmdetection v2.2. Standard settings are used unless stated otherwise. Our backbone is Res2Net [12] with 152 layers, pretrained on ImageNet. In the neck, we replace FPN with CARAFE [37]. The neck has 384 output channels instead of 256 in the original implementation. Focal loss is replaced by generalized focal loss [18]. We use multi-scale training with sizes [600, 800, 1000, 1200] for the shorter side. We train on a single GPU with batch size 4 for 12 epochs with a step-wise learning rate schedule. The learning rate starts at 0.01 and is divided by 10 after epochs 8 and 11. We use no TTA and apply a scale of 1200 for the shorter side during testing.
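The step-wise schedule described above can be written out as a small function. This is a sketch that assumes 1-indexed epochs and ignores any warm-up phase, which the summary does not mention; the function name is hypothetical.

```python
def learning_rate(epoch, base_lr=0.01, milestones=(8, 11), gamma=0.1):
    """Learning rate used during the given 1-indexed epoch.

    The rate is multiplied by gamma once for every milestone epoch
    that has already been completed.
    """
    drops = sum(1 for milestone in milestones if epoch > milestone)
    return base_lr * gamma ** drops


# Rates for the 12 training epochs: 0.01 for epochs 1-8,
# 0.001 for epochs 9-11, and 0.0001 for epoch 12 (up to float rounding).
schedule = [learning_rate(epoch) for epoch in range(1, 13)]
```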

A.14 CenterNet-Hourglass-104 (CN-FaDhSa)

Faizan Khan, Dheeraj Reddy Pailla, Sarvesh Mehta {faizan.farooq, dheerajreddy.p}@students.iiit.ac.in, sarvesh.mehta@research.iiit.ac.in

CN-FaDhSa is modified from CenterNet [45]. Instead of using the default resolution of \(512\times 512\) during training, we train the model at the resolution of \(1024\times 1024\) and test at various scales of \(2048\times 2048\).

A.15 High-Resolution Net (HRNet)

Guosheng Zhang, Zehui Gong 249200734@qq.com

HRNet is similar to CenterNet [9]. However, we detect each object as a single center point instead of a triplet, and regress the object size \(s = (h, w)\) for each object. In addition, we use the High-Resolution Net (HRNet) followed by FPN as the backbone, which is able to maintain high-resolution representations through the whole process.

A.16 Density Map Guided Object Detection (DMNet)

Changlin Li cli33@uncc.edu

We use density crops together with uniform crops to train the baseline model, and fuse the detections to obtain the final result. The baseline is Cascade R-CNN [3].

A.17 High-Resolution Detection Network (HRD-Net)

Ziming Liu, Guangyu Gao liuziming.email@gmail.com, guangyugao@bit.edu.cn

To keep the benefits of high-resolution images without introducing new problems, we propose the High-Resolution Detection Network (HRDNet) [25]. HRDNet takes inputs at multiple resolutions and processes them with backbones of multiple depths. To take full advantage of the multiple features, we propose the Multi-Depth Image Pyramid Network (MD-IPN) and the Multi-Scale Feature Pyramid Network (MS-FPN) in HRDNet. MD-IPN maintains multiple levels of position information using backbones of multiple depths. Specifically, the high-resolution input is fed into a shallow network to preserve more positional information and reduce the computational cost, while the low-resolution input is fed into a deep network to extract more semantics. By extracting various features from high to low resolutions, MD-IPN is able to improve the performance of small object detection while maintaining the performance on medium and large objects. MS-FPN is proposed to align and fuse the multi-scale feature groups generated by MD-IPN, reducing the information imbalance between these multi-scale, multi-level features.

A.18 A Slimmer Network with Polymorphic and Group Attention Modules for More Efficient Object Detection in Aerial Images (PG-YOLO)

Wei Guo, Jincai Cui {gwfemma, jinkaicui}@cqu.edu.cn

PG-YOLO is a slimmer network based on YOLOv3 [28] for more efficient object detection in aerial images. First, a polymorphic module (PM) is designed to learn multi-scale and multi-shape object features simultaneously, so as to better detect the widely varying objects in aerial images. Then, a group attention module (GAM) is designed to make better use of the diverse concatenated features in the network. By combining multiple detection heads with adaptive anchors and the two modules above, we obtain the final one-stage network, PG-YOLO, which achieves higher detection accuracy.

A.19 Extended Feature Pyramid Network with Adaptive Scale Training Strategy and Anchors for Object Detection in Aerial Images (EFPN)

Wei Guo, Jincai Cui {gwfemma, jinkaicui}@cqu.edu.cn

EFPN comes from the work in [13]. To enhance the semantic information of small objects in the deep layers of the network, the extended feature pyramid network is proposed. Specifically, we use the multi-branched dilated bottleneck module in the lateral connections and an attention pathway to improve the detection accuracy for small objects. Besides, to better locate the objects, an adaptive scale training strategy is developed to enable the network to deal with multi-scale object detection, where adaptive anchors are obtained by a novel clustering method.

A.20 Cluster Region Estimation Network (CRENet)

Yi Wang, Xi Zhao {wangyi0102, xizhao_1}@stu.xidian.edu.cn

Aerial images are increasingly used for critical tasks, such as traffic monitoring, pedestrian tracking, and infrastructure inspection. However, aerial images pose two main challenges: 1) small objects with a non-uniform distribution; 2) large differences in object size. In this paper, we propose a new network architecture, Cluster Region Estimation Network (CRENet), to address these challenges. CRENet uses a clustering algorithm to search for cluster regions containing dense objects, which makes the detector focus on these regions to reduce background interference and improve detection efficiency. However, not every cluster region brings a precision gain, so a difficulty score is calculated for each cluster region; difficult cluster regions are mined and simple cluster regions are eliminated to speed up detection. Finally, a Gaussian scaling function is used to scale the difficult cluster regions to reduce the differences in object size.

A.21 Cascade R-CNN for Drone-Captured Scenes (Cascade R-CNN++)

Ting Sun, Xingjie Zhao sunting9999@stu.xjtu.edu.cn, 1243273854@qq.com

We use Cascade R-CNN [3] as the baseline with four improvements: 1) we use group normalization instead of batch normalization; 2) we use online hard example mining to select positive and negative samples in the region proposal network; 3) we use multi-scale testing; 4) we train models with two stronger backbones and integrate them. Besides, we use ResNeXt as a backbone for training, and Soft-NMS instead of standard non-maximum suppression.

A.22 HRNet Based ATSS for Object Detection (HR-ATSS)

Jun Yu, Haonian Xie harryjun@ustc.edu.cn, xie233@mail.ustc.edu.cn

HR-ATSS is based on Adaptive Training Sample Selection (ATSS) [42], which automatically selects positive and negative samples according to the statistical characteristics of objects. We employ HRNet [33] as the backbone to improve small object performance, with HRFPN [33] as the feature pyramid. Specifically, we adopt HRNet-W32 as the backbone, HRFPN as the feature pyramid, and the ATSS detection head to regress and classify objects. We adopt Synchronized BN instead of BN.

A.23 Concat Feature Pyramid Networks (CFPN)

Yingjie Liu 1497510582@qq.com

CFPN is improved from FPN [20] and Cascade R-CNN [3]. It uses concatenation for the lateral connections rather than the addition used in FPN. Meanwhile, in the Fast R-CNN stage, the cascade architecture of Cascade R-CNN is used to refine the bounding box regression. ResNet-152 is used as the pre-trained backbone. In the training stage, we manually adjust the learning rate to optimize detection performance. In the testing stage, we use Soft-NMS for better recall on dense objects.

A.24 CenterNet+HRNet (Center-ClusterNet)

Xiaogang Jia 18846827115@163.com

The Center-ClusterNet detector is based on CenterNet [45] and HRNet [33]. We use MobileNetV3 [15] as the backbone to predict all centers of the objects. Then K-Means is used as a post-processing method to generate clusters. Both original images and cropped images are processed by the detector. Then the predicted bounding boxes are merged by standard NMS.

A.25 Deep Optimized High Resolution RetinaNet (DOHR-RetinaNet)

Ayush Jain, Rohit Ramaprasad, Murari Mandal, Pratik Narang {f20170093, f20180224}@pilani.bits-pilani.ac.in, 2015rcp9525@mnit.ac.in, pratik.narang@pilani.bits-pilani.ac.in

DOHR-RetinaNet is based on RetinaNet [21], using ResNet101 backbone for feature extraction. FPN is utilized for creating semantically strong features and focal loss to mitigate class imbalance. The ResNet101 backbone is pretrained on the ImageNet dataset. Additionally, we use a combination of anchors consisting of 5 ratios and 5 scales which are optimized using the algorithm in [48] to improve detection of smaller objects. All our input images are resized such that the minimum side is 1728px and the maximum side is less than 3072px. The training images are augmented through random rotation, random flipping and brightness variation to help detect objects captured in dark backgrounds.
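The resizing rule can be illustrated with the sketch below. It assumes the convention common in RetinaNet implementations: scale the shorter side to the minimum size, then shrink further if the longer side would exceed the maximum. The function name is hypothetical, and the exact rounding used by the authors is not specified.

```python
def resize_scale(width, height, min_side=1728, max_side=3072):
    """Scale factor that brings the shorter side to min_side,
    capped so that the longer side does not exceed max_side."""
    scale = min_side / min(width, height)
    if max(width, height) * scale > max_side:
        scale = max_side / max(width, height)
    return scale


# A 2000x1500 image is scaled by 1728 / 1500, giving about 2304x1728.
scale = resize_scale(2000, 1500)
new_size = (round(2000 * scale), round(1500 * scale))
```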

A.26 High Resolution Cascade R-CNN (HRC)

Daniel Stadler, Arne Schumann, Lars Wilko Sommer daniel.stadler@kit.edu, {arne.schumann, lars.sommer}@iosb.fraunhofer.de

HRC is based on Cascade R-CNN [3] with FPN [20] and HRNetV2p-W32 [33] as backbone. We train four detectors with different anchor scales to account for varying object scales on randomly sampled image crops (\(608 \times 608\) pixels) of the VisDrone DET train and val set and use the SSD [23] data augmentation pipeline to enhance feature representation learning. For each class, the detector with best anchor scale is utilized. During test time, we follow a multi-scale strategy and, additionally, apply horizontal flipping. The resulting detections are combined via Soft-NMS [2]. To account for the large number of objects per frame, we increase the number of proposals and the maximum number of detections per image.

A.27 Iterative Scheme for Object Detection in Crowded Environments (IterDet)

Xin He 2962575697@whut.edu.cn

IterDet is an alternative iterative scheme, where a new subset of objects is detected at each iteration. Detected boxes from the previous iterations are passed to the network at the following iterations to ensure that the same object would not be detected twice. This iterative scheme can be applied to both one-stage and two-stage object detectors with just minor modifications of the training and inference procedures.

A.28 Small-Scale Object Detection for Drone Data (SSODD)

Yan Luo, Chongyang Zhang, Hao Zhou {luoyan_bb, sunny_zhang, zhouhao_0039}@sjtu.edu.cn

SSODD is based on Cascade R-CNN [3], using ResNeXt-101 64x4d as the backbone and FPN [20] as the feature extractor. We also use deformable convolution to enhance our feature extractor. Our model is pretrained on the COCO dataset. Other techniques, such as multi-scale training and Soft-NMS, are also used in our method. To detect small-scale objects, we crop each image into four parts, which are evenly distributed over the raw image. The four cropped regions are fed into the framework with the multi-scale training technique, in which the scales are (960, 720) and (960, 640).

A.29 Cascade R-CNN Enhanced by Gabor-Based Anchoring (GabA-Cascade)

Ioannis Athanasiadis, Athanasios Psaltis, Apostolos Axenopoulos, Petros Daras {athaioan, at.psaltis, axenop, daras}@iti.gr

GabA-Cascade is built upon Cascade R-CNN [3], which is enhanced by considering an additional set of anchors targeted explicitly at small objects. Inspired by [31], a set of simplified Gabor wavelets (SGWs) is applied to the input image, resulting in an edge-enhanced version of it. Thereafter, the maximally stable extremal regions (MSERs) algorithm is applied to the edge-enhanced image, extracting regions likely to contain an object, called edge anchors. As a next step, we aim at integrating the edge anchors into the Region Proposal Network (RPN). Because edge anchors are of varying scale and have continuous center coordinates, some modifications are required to make them compatible with the RPN training procedure. In order for the Feature Pyramid Network (FPN) feature maps to remain scale-specific and for the bounding box regressor to refer to identically shaped anchors, the edge anchors are refined to match the closest available configuration of shape and size hyperparameters. The issue of edge anchor centers not being aligned with the pixel grid is addressed by rounding their centers. Furthermore, additional feature maps dedicated to the edge anchors are introduced with the purpose of minimizing the previously mentioned refinement. These feature maps correspond to different scales relevant to small objects and are identical to the feature map of the first FPN pyramid level. After the modifications described above, the RPN is able to evaluate regions given both edge and regular anchors as input. Finally, the rest of the object detection pipeline follows the cascade architecture described in [3], deploying classifiers of increasing quality.
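The refinement of edge anchors can be pictured with the following sketch, which snaps an arbitrary region to the closest predefined anchor shape and rounds its center to the pixel grid. The log-space distance used to pick the closest shape, the scale and ratio values, and the function names are assumptions made for illustration; the summary does not specify them.

```python
import math


def anchor_shapes(scales, ratios):
    """Enumerate (w, h) shapes with area scale**2 and aspect ratio h / w."""
    return [
        (scale / math.sqrt(ratio), scale * math.sqrt(ratio))
        for scale in scales
        for ratio in ratios
    ]


def snap_edge_anchor(cx, cy, w, h, shapes):
    """Refine an edge anchor to the nearest configured shape.

    Assumption: closeness is measured in log-width / log-height space.
    The center is rounded so that it lies on the pixel grid.
    """
    best_w, best_h = min(
        shapes,
        key=lambda wh: math.log(wh[0] / w) ** 2 + math.log(wh[1] / h) ** 2,
    )
    return (round(cx), round(cy), best_w, best_h)
```

For instance, with scales (8, 16) and ratios (0.5, 1.0, 2.0), a region of size 15 by 17 centered at (10.4, 20.6) is mapped to a 16 by 16 anchor centered at (10, 21).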

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Du, D. et al. (2020). VisDrone-DET2020: The Vision Meets Drone Object Detection in Image Challenge Results. In: Bartoli, A., Fusiello, A. (eds) Computer Vision – ECCV 2020 Workshops. ECCV 2020. Lecture Notes in Computer Science(), vol 12538. Springer, Cham. https://doi.org/10.1007/978-3-030-66823-5_42

DOI: https://doi.org/10.1007/978-3-030-66823-5_42

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-66822-8

Online ISBN: 978-3-030-66823-5

eBook Packages: Computer Science, Computer Science (R0)