Abstract

This paper presents efficient structure-preserving signature schemes based on simple assumptions such as decisional linear. We first give two general frameworks for constructing fully secure signature schemes from weaker building blocks such as variations of one-time signatures and random message secure signatures. They can be seen as refinements of the Even–Goldreich–Micali framework, and preserve many desirable properties of the underlying schemes such as constant signature size and structure preservation. We then instantiate them based on simple (i.e., not q-type) assumptions over symmetric and asymmetric bilinear groups. The resulting schemes are structure-preserving and yield constant-size signatures consisting of 11–14 group elements, which compares favorably to existing schemes whose security relies on q-type assumptions.


1 Introduction

A structure-preserving signature (SPS) scheme [4] is a digital signature scheme with two structural properties: (1) the verification keys, messages, and signatures are all elements of a bilinear group; and (2) the verification algorithm checks a conjunction of pairing product equations over the key, the message, and the signature. This makes them compatible with the efficient non-interactive proof system for pairing product equations by Groth and Sahai (GS) [33]. Structure-preserving cryptographic primitives promise to combine the advantages of optimized number theoretic non-black-box constructions with the modularity and insight into protocols that use only generic cryptographic building blocks.

Indeed, instantiating known generic constructions with an SPS scheme and the GS proof system has led to many new and more efficient schemes: Groth [32] showed how to construct an efficient simulation-sound zero-knowledge proof system (ss-NIZK) building on generic constructions of [20, 37, 41]. Abe et al. [4, 7] show how to obtain efficient round-optimal blind signatures by instantiating a framework by Fischlin [23]. SPS are also important building blocks for a wide range of cryptographic functionalities such as anonymous proxy signatures [25], delegatable anonymous credentials [9], transferable e-cash [26], and compact verifiable shuffles [18]. Most recently, Hofheinz and Jager [34] showed how to construct a structure-preserving tree-based signature scheme with a tight security reduction following the approach of [21, 29]. This signature scheme is then used to build an ss-NIZK, which in turn is used with the Naor–Yung [38] and Sahai [40] paradigm to build the first CCA-secure public-key encryption scheme with a tight security reduction. Examples of other schemes that benefit from efficient SPS are [8, 10, 11, 14, 24, 27, 30, 31, 35, 39].

Because properties (1) and (2) are the only dependencies on the SPS scheme made by these constructions, any structure-preserving signature scheme can be used as a drop-in replacement. Unfortunately, all known efficient instantiations of SPS [4, 5, 7] are based on so-called q-type or interactive assumptions. An open question since Groth's seminal work [32] (only partially answered by Chase and Kohlweiss [17]) is to construct an SPS scheme that is both efficient, in particular constant-size in the number of signed group elements, and based on assumptions that are as weak as those required by the GS proof system itself.

1.1 Our Contribution

We begin by presenting two new generic constructions of signature schemes that are secure against chosen message attacks (CMA) from variations of one-time signatures and signatures secure against random message attacks (RMA). Both constructions inherit the structure-preserving and constant-size properties from the underlying components. We then instantiate the building blocks with the desired properties over bilinear groups. They yield constant-size structure-preserving signature schemes whose signatures consist of only 11–14 group elements and whose security can be proven based on simple assumptions such as decisional linear (\(\text {DLIN}\)) for symmetric bilinear groups and analogues of DDH and \(\text {DLIN}\) for asymmetric bilinear groups. These are the first constant-size structure-preserving signature schemes that eliminate the use of interactive or q-type assumptions while achieving reasonable efficiency. We give more details on our generic constructions and their instantiations:

-

The first generic construction (\(\mathsf {SIG{1}}\), Sect. 4.1) combines a new variation of one-time signatures which we call tagged one-time signatures (\(\mathsf {TOS}\)) and signatures secure against random message attacks (RMA). A \(\mathsf {TOS}\) is a signature scheme that attaches a fresh tag to each signature. It is unforgeable with respect to tags used only once. In our construction, a message is signed with our \(\mathsf {TOS}\) using a fresh random tag, and then the tag is signed with the second signature scheme, denoted by \({\mathsf {{r}SIG{}}}\). Since \({\mathsf {{r}SIG{}}}\) only signs random tags, RMA security is sufficient.

In Sect. 5, we construct structure-preserving \(\mathsf {TOS}\) and \({\mathsf {{r}SIG{}}}\) based on \(\text {DLIN}\) over symmetric (Type-I) bilinear groups. Our \(\mathsf {TOS}\) yields constant-size signatures and optimally small tags that consist of only one group element. The resulting structure-preserving signature scheme produces signatures consisting of 14 group elements and relies solely on the \(\text {DLIN}\) assumption.

-

The second generic construction (\(\mathsf {SIG{2}}\), Sect. 4.2) combines partial one-time signatures and signatures secure against extended random message attacks (XRMA). The latter is a new notion that we explain below. A partial one-time signature scheme, denoted by \(\mathsf {POS}\), is a one-time signature scheme in which only a part of the key is renewed for every signing operation. The notion was first introduced by Bellare and Shoup [12] under the name of two-tier signatures. In our construction, a message is signed with \(\mathsf {POS}\) and then the one-time portion of the public-key is certified by the second signature scheme, denoted by \({\mathsf {{x}SIG{}}}\). The difference between \(\mathsf {TOS}\) and \(\mathsf {POS}\) is that in \(\mathsf {POS}\) each one-time public-key is associated with a one-time secret-key, whereas a tag has no associated secret. Since the one-time secret-key is needed for signing, it must be known to the reduction in the security proof. XRMA security guarantees that \({\mathsf {{x}SIG{}}}\) is unforgeable even if the adversary is given auxiliary information associated with the randomly chosen messages (e.g., the random coins used for selecting the messages). This auxiliary information allows the reduction that relies on the security of \({\mathsf {{x}SIG{}}}\) to use the one-time secret-keys to generate the \(\mathsf {POS}\) components correctly.

In Sect. 6, we construct structure-preserving \(\mathsf {POS}\) and \({\mathsf {{x}SIG{}}}\) signature schemes based on assumptions that are analogues of DDH and \(\text {DLIN}\) in Type-III bilinear groups. The resulting \({\mathsf {SIG{2}}}\) is structure-preserving and produces signatures consisting of 11 or 14 group elements depending on whether messages belong to either or both source groups.
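The data flow of \(\mathsf {SIG{1}}\) (sign the message with \(\mathsf {TOS}\) under a fresh random tag, then sign the tag with \({\mathsf {{r}SIG{}}}\)) can be sketched in Python as follows. This is a minimal sketch under loudly stated assumptions: `toy_key`, `toy_sign`, `toy_verify`, and `tos_tag` are hypothetical, insecure HMAC-based stand-ins in which the verification key equals the secret key; they are not the structure-preserving schemes of Sect. 5. Only the way the two components are combined follows the description above.

```python
import hashlib
import hmac
import secrets

# INSECURE toy stand-ins (assumption, not the paper's TOS/rSIG): a "signature"
# is an HMAC and the verification key equals the secret key. They only serve
# to exercise the data flow of the SIG1 composition.
def toy_key():
    k = secrets.token_bytes(32)
    return k, k  # (vk, sk)

def toy_sign(sk: bytes, *parts: bytes) -> bytes:
    h = hmac.new(sk, digestmod=hashlib.sha256)
    for part in parts:
        h.update(len(part).to_bytes(4, "big") + part)  # length-prefixed encoding
    return h.digest()

def toy_verify(vk: bytes, sig: bytes, *parts: bytes) -> bool:
    return hmac.compare_digest(sig, toy_sign(vk, *parts))

def tos_tag() -> bytes:
    # Stands in for TOS.Tag: a fresh, uniformly random tag for every signature
    return secrets.token_bytes(16)

def sig1_key():
    pk_t, sk_t = toy_key()   # long-term TOS key pair
    vk_r, sk_r = toy_key()   # rSIG key pair; it only ever signs random tags
    return (pk_t, vk_r), (sk_t, sk_r)

def sig1_sign(sk, msg: bytes):
    sk_t, sk_r = sk
    tag = tos_tag()
    sigma_t = toy_sign(sk_t, b"TOS", tag, msg)  # stands in for TOS.Sign(sk_t, msg, tag)
    sigma_r = toy_sign(sk_r, b"rSIG", tag)      # stands in for rSIG.Sign(sk_r, tag)
    return tag, sigma_t, sigma_r

def sig1_verify(vk, msg: bytes, sigma) -> bool:
    pk_t, vk_r = vk
    tag, sigma_t, sigma_r = sigma
    # Accept iff the TOS part verifies under the tag AND the tag itself is certified
    return (toy_verify(pk_t, sigma_t, b"TOS", tag, msg)
            and toy_verify(vk_r, sigma_r, b"rSIG", tag))

vk, sk = sig1_key()
sigma = sig1_sign(sk, b"hello")
assert sig1_verify(vk, b"hello", sigma)
assert not sig1_verify(vk, b"other", sigma)
```

The second component is only ever applied to outputs of `tos_tag`, which mirrors why security against random message attacks is the property the composition needs from \({\mathsf {{r}SIG{}}}\).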

The role of \(\mathsf {TOS}\) and \(\mathsf {POS}\) is to compress a message into a constant number of random group elements. This observation is interesting in light of [6], which implies the impossibility of constructing collision-resistant and shrinking structure-preserving hash functions; such hash functions would immediately yield constant-size signatures. Our (extended) RMA-secure signature schemes are structure-preserving variants of Waters' dual-signature scheme [44]. In general, the difficulty of constructing CMA-secure SPS arises from the fact that the exponents of the group elements chosen by the adversary as a message are not known to the reduction in the security proof. For RMA security, on the other hand, it is the challenger that chooses the messages, so their exponents can be known to the reduction. This is the crucial advantage for constructing (extended) RMA-secure structure-preserving signature schemes based on Waters' dual-signature scheme.

As our SPSs can be drop-in replacements for existing SPS, we only briefly introduce recent applications in Sect. 7. They include group signatures, tightly-secure structure-preserving signatures and public-key encryption, and efficient adaptive oblivious transfer.

1.2 Related Works

1.2.1 On Generic Constructions

Even et al. [22] proposed a generic framework (the EGM framework) that combines a one-time signature scheme and a signature scheme that is secure against non-adaptive chosen message attacks (NACMA) to construct a signature scheme that is secure against adaptive chosen message attacks (CMA).

In fact, our generic constructions can be seen as refinements of the EGM framework. There are two reasons why the original framework falls short for our purpose. The first is that relaxing to NACMA does not seem to help much in constructing efficient structure-preserving signatures, since the messages are still under the control of the adversary and the exponents of the messages are not known to the reduction algorithm in the security proof. As mentioned above, resorting to (extended) RMA helps considerably in this regard. Even et al. [22] also showed that CMA-secure signatures exist iff RMA-secure signatures exist. The proof, however, does not follow their framework, and the resulting construction is impractical and serves mainly as a feasibility result. In fact, we argue that RMA security alone is not sufficient for the original EGM framework. As mentioned above, the need for \(\text {XRMA}\) security arises in the reduction that relies on the \(\text {RMA}\) security of the ordinary signature scheme: the reduction needs to know not only the random one-time public-keys but also their corresponding one-time secret-keys in order to generate the one-time signature components of the signatures. The auxiliary information in the \(\text {XRMA}\) definition gives the reduction access to these secret-keys. Tagged one-time signatures, in contrast, avoid this problem, as tags have no associated secret values. This observation also applies to a variation of the EGM framework in [42] that combines a trapdoor hash function and a NACMA-secure signature scheme. The second reason that the EGM approach is not well suited to our task is that it produces signatures whose size is linear in the size of the one-time public-keys of the one-time signature scheme, and known structure-preserving one-time signature schemes have one-time public-keys that grow linearly with the number of group elements to be signed. Here, tagged or partial one-time signature schemes come in handy, as their one-time public-keys are separated from the long-term public-keys. Thus, to obtain constant-size signatures, we only require the one-time keys to be constant-size, while the long-term part may grow with the size of the message.

1.2.2 On Efficient Instantiations

All previous constructions of structure-preserving signature schemes are either inefficient, rely on strong assumptions, or do not yield constant-size signatures. In particular, few schemes are based on simple assumptions. Hofheinz and Jager [34] constructed an SPS scheme by following the EGM framework. The resulting scheme allows a tight security reduction to \(\text {DLIN}\), but the size of signatures depends logarithmically on the number of signing operations, as their NACMA-secure scheme is tree-based (like the Goldwasser–Micali–Rivest signature scheme [29]). Chase and Kohlweiss [17] and Camenisch et al. [15] constructed SPS schemes with security based on \(\text {DLIN}\) that improve the performance of Groth's scheme [32] by several orders of magnitude. The size of the resulting signatures, however, is still linear in the number of signed group elements.

2 Preliminaries

2.1 Notation

By \(X :=Y\), we denote that object Y is referred to as X. For a set X, the notation \(a \leftarrow X\) denotes sampling a uniformly from X. Multiple independent samples from the same set X are denoted by \(a_1,a_2,a_3,\ldots \leftarrow X\). By \(Y \leftarrow A(X)\), we denote the process where algorithm A is executed with X as input and its output is labeled as Y. When A is an oracle algorithm that interacts with oracle \(\mathcal O\), this is denoted as \(Y \leftarrow A^\mathcal{O}(X)\). By \(\Pr [X \,|\, A_1, A_2, \ldots , A_k ]\), we denote the probability that event X happens after executing the sequence of algorithms \(A_1, \ldots , A_k\). The probability is taken over all coin flips made in \(A_1,\ldots ,A_k\) unless otherwise noted. We say that a function \(\epsilon \) is negligible in the security parameter \(\lambda \) if \(\epsilon < \lambda ^{-c}\) holds for every constant \(c>0\) and all sufficiently large \(\lambda \). We refer to probabilistic polynomial-time algorithms as p.p.t. algorithms. Unless stated otherwise, we assume that all algorithms are potentially probabilistic.
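The definition of negligibility can be probed numerically. The following minimal Python sketch uses \(\epsilon (\lambda ) = 2^{-\lambda }\) as the example function (an illustrative choice, not taken from the text) and, for each fixed c, searches within a finite bound for the point \(\lambda _0\) from which \(2^{-\lambda } < \lambda ^{-c}\) holds. The function name `threshold` is hypothetical, and a finite search only illustrates the definition; it does not prove negligibility.

```python
def threshold(c: int, bound: int = 1000):
    """Smallest lam0 such that 2**-lam < lam**-c for every lam in [lam0, bound].

    Uses exact integers: 2**-lam < lam**-c  <=>  lam**c < 2**lam.
    """
    lam0 = None
    for lam in range(1, bound + 1):
        if lam ** c < 2 ** lam:
            if lam0 is None:
                lam0 = lam          # inequality starts holding here
        else:
            lam0 = None             # inequality failed; restart after this lam
    return lam0

print([threshold(c) for c in range(1, 5)])  # [1, 5, 10, 17]
```

Larger c pushes \(\lambda _0\) further out, but for every fixed c such a point exists, which is exactly what the definition requires.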

2.2 Bilinear Groups

Let \({\mathcal {G}}\) be a bilinear group generator that takes security parameter \(1^\lambda \) and outputs a description of bilinear groups \(\varLambda :=(p,{{\mathbb G}}_1,{{\mathbb G}}_2,{{\mathbb G}}_T,e)\), where \({{\mathbb G}}_1,\,{{\mathbb G}}_2\) and \({{\mathbb G}}_T\) are groups of prime order \(p\), and \(e\) is an efficient and non-degenerate bilinear map \({{\mathbb G}}_1\times {{\mathbb G}}_2\rightarrow {{\mathbb G}}_T\). In this paper, generators for \({{\mathbb G}}_1\) and \({{\mathbb G}}_2\) are implicit in \(\varLambda \), and default random generators \(G\) and \(\hat{G}\) are chosen explicitly and independently. Groups \({{\mathbb G}}_1\) and \({{\mathbb G}}_2\) are called the source groups and \({{\mathbb G}}_T\) is called the target group. We use multiplicative notation for \({{\mathbb G}}_1,\,{{\mathbb G}}_2\) and \({{\mathbb G}}_T\). By \({{\mathbb G}}_1^*\), we denote \({{\mathbb G}}_1{\setminus } \{1\}\), which is the set of all elements in \({{\mathbb G}}_1\) except the identity. The same applies to \({{\mathbb G}}_2\) and \({{\mathbb G}}_T\) as well. Following the terminology in [28], we say that \(\varLambda \) is Type-III when there is no efficient mapping between \({{\mathbb G}}_1\) and \({{\mathbb G}}_2\) in either direction.

In the Type-III setting, we denote elements in \({{\mathbb G}}_2\) by putting a tilde on a variable, like \(\tilde{X}\), as a visual aid. By using the same letter for elements in \({{\mathbb G}}_1\) and \({{\mathbb G}}_2\) with a hat on the \({{\mathbb G}}_2\) element, e.g., X and \(\hat{X}\), we denote a pair of elements in the relation \(\log _{G} X= \log _{\hat{G}} \hat{X}\). When this relation needs to be stated explicitly, we write \(X \sim \hat{X}\). Note that the default random generators \(G\) and \(\hat{G}\) are independent of each other, but the notation remains consistent, since \(\log _{G} G = \log _{\hat{G}} \hat{G} = 1\), i.e., \(G \sim \hat{G}\).

We count the number of group elements to measure the size of cryptographic objects such as keys, messages, and signatures. For Type-III groups, we denote the size by (x, y) when it consists of x and y elements from \({{\mathbb G}}_1\) and \({{\mathbb G}}_2\), respectively. We refer to the setting as Type-I when \({{\mathbb G}}_1= {{\mathbb G}}_2\) (i.e., there are efficient mappings in both directions). This is also called the symmetric setting. In this case, we define \(\varLambda :=(p, {{\mathbb G}}, {{\mathbb G}}_T, e)\). When we need to be specific, the group description yielded by \({\mathcal {G}}\) will be written as \(\varLambda _{\mathsf {asym}}\) or \(\varLambda _\mathsf{sym }\).
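To make the pairing notation concrete, here is a toy Python model of a bilinear group in which every element is stored by its discrete logarithm with respect to a fixed reference generator, so the pairing becomes multiplication of exponents modulo p. This representation is an assumption made only for illustration: it exposes discrete logarithms (so it is completely insecure and does not capture the absence of efficient maps between the source groups), and the order `P = 1019` is far too small. The names `Elem`, `e`, and `related` are hypothetical; the model merely lets one check bilinearity and the relation \(X \sim \hat{X}\).

```python
from dataclasses import dataclass

P = 1019  # toy prime group order (assumption; real groups use ~256-bit primes)

@dataclass(frozen=True)
class Elem:
    group: str  # "G1", "G2" or "GT"
    log: int    # discrete log w.r.t. the group's fixed reference generator

    def __mul__(self, other: "Elem") -> "Elem":   # group operation
        assert self.group == other.group
        return Elem(self.group, (self.log + other.log) % P)

    def __pow__(self, k: int) -> "Elem":          # exponentiation X^k
        return Elem(self.group, (self.log * k) % P)

def e(a: Elem, b: Elem) -> Elem:
    """Toy pairing G1 x G2 -> GT in exponent form: (a, b) -> a*b mod P."""
    assert a.group == "G1" and b.group == "G2"
    return Elem("GT", (a.log * b.log) % P)

def related(x: Elem, x_hat: Elem, g: Elem, g_hat: Elem) -> bool:
    """X ~ X_hat iff log_G X == log_{G_hat} X_hat (needs the logs; toy only)."""
    lhs = (x.log * pow(g.log, -1, P)) % P
    rhs = (x_hat.log * pow(g_hat.log, -1, P)) % P
    return lhs == rhs

G, G_hat = Elem("G1", 5), Elem("G2", 7)   # independent "random" generators
a, b = 12, 34
assert e(G ** a, G_hat ** b) == e(G, G_hat) ** (a * b)   # bilinearity
assert related(G ** 99, G_hat ** 99, G, G_hat)           # X ~ X_hat
```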

2.3 Assumptions

Let \({\mathcal {G}}\) be a generator of bilinear groups. All hardness assumptions we deal with are defined relative to \({\mathcal {G}}\). We first define the computational and decisional Diffie–Hellman assumptions (\(\text {CDH}_1,\,\text {DDH}_{1} \)) and decisional linear assumption (\(\text {DLIN}_{1} \)) for Type-III bilinear groups. The corresponding more standard assumptions, \(\text {CDH},\,\text {DDH} \), and \(\text {DLIN} \), in Type-I groups are obtained by setting \({{\mathbb G}}_1= {{\mathbb G}}_2\) and \(G= \hat{G}\) in the respective definitions.

Definition 1

(Computational co-Diffie–Hellman assumption: \(\text {CDH}_1\)) Given \(\varLambda \!\leftarrow \! {\mathcal {G}}(1^{\lambda }),G\leftarrow {{\mathbb G}}_1^*,\,\hat{G}\leftarrow {{\mathbb G}}_2^*,\,G^{x},\,G^{y},\,\hat{G}^x\), and \(\hat{G}^y\) for \(x,y \leftarrow \mathbb {Z}_p\), any p.p.t. algorithm \(\mathcal{A}\) outputs \(G^{x y}\) with negligible probability \( \text {Adv} ^{\mathsf {co}\text {-}\mathsf {cdh}}_{{\mathcal {G}},\mathcal{A}}(\lambda )\) in \(\lambda \).

Definition 2

(Decisional Diffie–Hellman assumption in \({{\mathbb G}}_1\): \(\text {DDH}_{1}\)) Given \(\varLambda \leftarrow {\mathcal {G}}(1^\lambda ),\,G \leftarrow {{\mathbb G}}_1^*\), and \((G^x, G^y, Z_b)\) where \(Z_1 = G^{x y}\) and \(Z_0 = G^z\) for random \(x,y,z\leftarrow {{\mathbb Z}}_p\) and random bit b, any p.p.t. algorithm \(\mathcal{A}\) decides whether \(b=1\) or 0 with negligible advantage \( \text {Adv} ^{\mathsf {{\mathsf {ddh}}1}}_{{\mathcal {G}},\mathcal{A}} (\lambda )\) in \(\lambda \).

Definition 3

(Decisional linear assumption in \({{\mathbb G}}_1\): \(\text {DLIN}_{1}\)) Given \(\varLambda \leftarrow {\mathcal {G}}(1^\lambda ),({G_1},{G_2},{G_3})\leftarrow ({{\mathbb G}}_1^*)^3\) and \((G_1^x, G_2^y, Z_b)\) where \(Z_1 = G_3^{x+y}\) and \(Z_0 = G_3^z\) for random \(x,y,z \leftarrow \mathbb {Z}_p\) and random bit b, any p.p.t. algorithm \(\mathcal{A}\) decides whether \(b=1\) or 0 with negligible advantage \( \text {Adv} ^{\mathsf {{\mathsf {dlin}}1}}_{{\mathcal {G}},\mathcal{A}}(\lambda )\) in \(\lambda \).
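A \(\text {DLIN}_{1}\) instance, and why the decision becomes easy once the discrete logarithms of \(G_1, G_2, G_3\) are known, can be illustrated in a toy prime-order group. This Python sketch assumes the subgroup of quadratic residues modulo the safe prime `Q = 2039` (order `P = 1019`, generator 4); these sizes are for illustration only and offer no security. The hypothetical helper `is_linear` uses the trapdoor \(a_1, a_2, a_3\) (with \(G_i = G^{a_i}\)) to test \(Z = G_3^{x+y}\) via \(G_3^{x+y} = (G_1^x)^{a_3/a_1} (G_2^y)^{a_3/a_2}\).

```python
import secrets

Q = 2039  # safe prime Q = 2*P + 1 (toy size, assumption)
P = 1019  # prime order of the subgroup of quadratic residues mod Q
G = 4     # 4 = 2^2 is a quadratic residue != 1, hence it generates that subgroup

def rand_exp() -> int:
    return secrets.randbelow(P - 1) + 1  # uniform in [1, P-1], so G^a != 1

def dlin_instance(b: int):
    """Return (G1, G2, G3, G1^x, G2^y, Z_b) and the trapdoor logs (a1, a2, a3)."""
    a1, a2, a3 = rand_exp(), rand_exp(), rand_exp()
    g1, g2, g3 = pow(G, a1, Q), pow(G, a2, Q), pow(G, a3, Q)
    x, y, z = secrets.randbelow(P), secrets.randbelow(P), secrets.randbelow(P)
    zb = pow(g3, x + y, Q) if b == 1 else pow(g3, z, Q)
    return (g1, g2, g3, pow(g1, x, Q), pow(g2, y, Q), zb), (a1, a2, a3)

def is_linear(inst, trapdoor) -> bool:
    """Decide Z == G3^(x+y) using the logs of G1, G2, G3 (exponents live mod P)."""
    g1, g2, g3, u, v, z = inst
    a1, a2, a3 = trapdoor
    e1 = a3 * pow(a1, -1, P) % P
    e2 = a3 * pow(a2, -1, P) % P
    return z == pow(u, e1, Q) * pow(v, e2, Q) % Q

inst, td = dlin_instance(1)
assert is_linear(inst, td)
```

Without the trapdoor, distinguishing \(Z_1\) from \(Z_0\) is exactly the \(\text {DLIN}_{1}\) problem; in a group this small it is of course easy by brute force.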

For \(\text {DDH}_{1}\) and \(\text {DLIN}_{1}\), we define the analogous assumptions in \({{\mathbb G}}_2\) (\(\text {DDH}_{2}\) and \(\text {DLIN}_{2}\)) by swapping \({{\mathbb G}}_1\) and \({{\mathbb G}}_2\) in the respective definitions. In Type-III bilinear groups, it is assumed that both \(\text {DDH}_{1}\) and \(\text {DDH}_{2}\) hold simultaneously. The assumption is called the symmetric external Diffie–Hellman assumption (\(\text {SXDH} \)), and we define advantage \( \text {Adv} ^{\mathsf {sxdh}}_{{\mathcal {G}},\mathcal{C}}\) by \( \text {Adv} ^{\mathsf {sxdh}}_{{\mathcal {G}},\mathcal{C}}(\lambda ) := \text {Adv} ^{\mathsf {{\mathsf {ddh}}1}}_{{\mathcal {G}},\mathcal{A}}(\lambda ) + \text {Adv} ^{\mathsf {{\mathsf {ddh}}2}}_{{\mathcal {G}},\mathcal{B}}(\lambda )\). We extend \(\text {DLIN}\) in a similar manner:

Definition 4

(External decisional linear assumption in \({{\mathbb G}}_1\): \(\text {XDLIN}_{1}\)) Given \(\varLambda \leftarrow {\mathcal {G}}(1^\lambda ),({G_1},{G_2},{G_3})\leftarrow ({{\mathbb G}}_1^*)^3\) and \((G_1^x, G_2^y, {\hat{G}_{1}},{\hat{G}_{2}},{\hat{G}_{3}},\hat{G}_{1}^x, \hat{G}_{2}^{y},Z_{b})\) where \(({G_{1}},{G_{2}},{G_{3}}) \sim ({\hat{G}_{1}},{\hat{G}_{2}},{\hat{G}_{3}}),\,Z_1 = G_3^{x+y}\), and \(Z_0 = G_3^z\) for random \(x,y,z \leftarrow \mathbb {Z}_p\) and random bit b, any p.p.t. algorithm \(\mathcal{A}\) decides whether \(b=1\) or 0 with negligible advantage \( \text {Adv} ^{\mathsf {xdlin} 1}_{{\mathcal {G}},\mathcal{A}}(\lambda )\) in \(\lambda \).

The \(\text {XDLIN}_{1}\) assumption is equivalent to the \(\text {DLIN}_{1}\) assumption in the generic bilinear group model [13, 43], where one can simulate the extra elements \({\hat{G}_1},{\hat{G}_2},{\hat{G}_3},\hat{G}_1^x, \hat{G}_2^y\) in \(\text {XDLIN}_{1}\) from \({G_1},{G_2},{G_3},G_1^x, G_2^y\) in \(\text {DLIN}_{1}\). We define the \(\text {XDLIN}_{2}\) assumption analogously by instead giving \(\hat{G}_3^{x+y}\) or \(\hat{G}_3^z\) to \(\mathcal{A}\) as \(Z_b\). We then define the simultaneous external \(\text {DLIN}\) assumption, \(\text {SXDLIN} \), which assumes that both \(\text {XDLIN}_{1} \) and \(\text {XDLIN}_{2} \) hold at the same time. By \( \text {Adv} ^{\mathsf {{\mathsf {xdlin}}2}}_{{\mathcal {G}},\mathcal{A}}\) (\( \text {Adv} ^{\mathsf {sxdlin}}_{{\mathcal {G}},\mathcal{A}}\), resp.), we denote the advantage function for \(\text {XDLIN}_{2}\) (SXDLIN, resp.).

Definition 5

(Double pairing assumption in \({{\mathbb G}}_1\) [4]: \(\text {DBP} _1\)) Given \(\varLambda \leftarrow {\mathcal {G}}(1^\lambda )\) and \((G_z, G_r) \leftarrow ({{\mathbb G}}_1^*)^2\), any p.p.t. algorithm \(\mathcal{A}\) outputs \((Z,R) \in ({{\mathbb G}}_2^*)^2\) that satisfies \(1 = e(G_z,Z)\; e(G_r,R)\) with negligible probability \( \text {Adv} ^{\mathsf {{\mathsf {dbp}}1}}_{{\mathcal {G}},\mathcal{A}}(\lambda )\) in \(\lambda \).

The double pairing assumption in \({{\mathbb G}}_2\) (\(\text {DBP} _2\)) is defined in the same manner by swapping \({{\mathbb G}}_1\) and \({{\mathbb G}}_2\). It is known that \(\text {DBP} _1\) (\(\text {DBP} _2\), resp.) is implied by \(\text {DDH}_{1}\) (\(\text {DDH}_{2}\), resp.) and the reduction is tight [7]. Note that the double pairing assumption does not hold in Type-I groups since \(Z=G_r,\,R=G_{z}^{-1}\) is a trivial solution. Thus in Type-I groups we will instead use the following extension:

Definition 6

(Simultaneous double pairing assumption [16]: \(\text {SDP}\)) Given \(\varLambda \leftarrow {\mathcal {G}}(1^\lambda )\) and \((G_z, G_r,H_z,H_s) \leftarrow ({{\mathbb G}}^*)^4\), any p.p.t. algorithm \(\mathcal{A}\) outputs \((Z,R,S) \in ({{\mathbb G}}^*)^3\) that satisfies \(1 = e(G_z,Z)\; e(G_r,R) {\;\textstyle \wedge }\;1 = e(H_z,Z)\; e(H_s,S)\) with negligible probability \( \text {Adv} ^{\mathsf {sdp}}_{{\mathcal {G}},\mathcal{A}}(\lambda )\) in \(\lambda \).

As shown in [16], for the Type-I setting the simultaneous double pairing assumption holds relative to \({\mathcal {G}}\) if the decisional linear assumption holds for \({\mathcal {G}}\).
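The trivial Type-I solution to the double pairing equation, and the way the second equation of \(\text {SDP}\) blocks it, can be checked in exponent form. In this Python sketch (an illustrative assumption), each element of a symmetric toy group is represented by its discrete logarithm modulo the toy order `P = 1019`, so \(e(g^a, g^b)\) corresponds to \(a b \bmod P\) and a pairing product equation becomes a linear equation over exponents. The helper names `pair`, `dbp_holds`, and `sdp_holds` are hypothetical.

```python
import secrets

P = 1019  # toy prime group order (assumption)

def pair(a: int, b: int) -> int:
    """Symmetric toy pairing in exponent form: e(g^a, g^b) = gt^(a*b)."""
    return a * b % P

def dbp_holds(gz: int, gr: int, z: int, r: int) -> bool:
    # 1 = e(G_z, Z) e(G_r, R)  <=>  gz*z + gr*r == 0 (mod P)
    return (pair(gz, z) + pair(gr, r)) % P == 0

def sdp_holds(gz, gr, hz, hs, z, r, s) -> bool:
    # Both equations share the same Z
    return dbp_holds(gz, gr, z, r) and (pair(hz, z) + pair(hs, s)) % P == 0

def nonzero() -> int:
    return secrets.randbelow(P - 1) + 1

gz, gr = nonzero(), nonzero()
# Type-I trivial DBP solution: Z = G_r, R = G_z^{-1}. It only copies and
# inverts instance elements and works because the pairing is symmetric.
Z, R = gr, (-gz) % P
assert dbp_holds(gz, gr, Z, R)

# SDP adds a second equation with the same Z. With Z = G_r fixed, S must have
# log -hz*gr/hs; this sketch computes it from the discrete logs, which a real
# adversary does not have.
hz, hs = nonzero(), nonzero()
S_with_logs = (-hz * gr * pow(hs, -1, P)) % P
assert sdp_holds(gz, gr, hz, hs, Z, R, S_with_logs)
```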

3 Definitions

3.1 Common Setup

All building blocks make use of a common setup algorithm \(\mathsf {Setup}\) that takes the security parameter \(1^\lambda \) and outputs a global parameter \(gk\) that is given to all other algorithms. Usually \(gk\) consists of a description \(\varLambda \) of a bilinear group setup and a default generator for each group. In this paper, we include several additional generators in \(gk\) for technical reasons. Note that when the resulting signature scheme is used in multi-user applications, either each user must be assigned its own additional generators or one needs to fall back on the common reference string model, whereas \(\varLambda \) and the default generators can be shared. We therefore include the size of \(gk\) when we assess the efficiency of concrete instantiations. For ease of notation, we keep \(gk\) implicit except in key generation algorithms.

3.2 Signature Schemes

We use the following syntax for signature schemes, which is suited to the multi-user and multi-algorithm setting. We follow the standard syntax with the following modifications: the key generation algorithm takes as input the global parameter \(gk\) generated by \(\mathsf {Setup}\) (instead of the security parameter \(1^\lambda \)), and the message space \(\mathcal{M}\) is determined solely by \(gk\) (instead of being determined by the public-key).

Definition 7

(Signature scheme) A signature scheme \(\mathsf {SIG}\) is a triple of polynomial-time algorithms \((\mathsf {Key}, \mathsf {Sign},\mathsf {Vrf})\):

-

\(\mathsf {SIG}.\mathsf {Key} (gk)\) generates a public-key \(vk \) and a secret-key \(sk \).

-

\(\mathsf {SIG}.\mathsf {Sign} (sk, msg )\) takes \(sk \) and message \( msg \) and outputs a signature \(\sigma \).

-

\(\mathsf {SIG}.\mathsf {Vrf} (vk, msg , \sigma )\) outputs 1 for acceptance or 0 for rejection.

Correctness requires that \(1 = \mathsf {SIG}.\mathsf {Vrf} (vk, msg , \sigma )\) holds for any \(gk \) generated by \(\mathsf {Setup}\), any keys generated as \((vk,sk) \leftarrow \mathsf {SIG}.\mathsf {Key} (gk)\), any message \( msg \in \mathcal{M}\), and any signature \(\sigma \leftarrow \mathsf {SIG}.\mathsf {Sign} (sk, msg )\).

Definition 8

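(Unforgeability against adaptive chosen message attacks) A signature scheme is unforgeable against adaptive chosen message attacks (UF-CMA) if for any probabilistic polynomial-time oracle algorithm \(\mathcal{A}\) the following advantage function is bounded by a negligible function in \(\lambda \):

\[ \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {cma}}_{\mathsf {SIG},\mathcal{A}}(\lambda ) := \Pr \left[ msg ^{\dagger } \notin Q_m \;\wedge \; 1 = \mathsf {SIG}.\mathsf {Vrf} (vk, msg ^{\dagger }, \sigma ^{\dagger }) \;\middle |\; gk \leftarrow \mathsf {Setup}(1^\lambda ),\; (vk,sk) \leftarrow \mathsf {SIG}.\mathsf {Key} (gk),\; ( msg ^{\dagger }, \sigma ^{\dagger }) \leftarrow \mathcal{A}^{\mathcal{O}_s}(vk) \right]. \]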

\(\mathcal{O}_s\) is a signing oracle that, on receiving message \( msg _j\), performs \(\sigma _j \leftarrow \mathsf {SIG}.\mathsf {Sign} (sk, msg _j)\), returns \(\sigma _j\) to \(\mathcal{A}\), and records \( msg _j\) to \(Q_m\), which is an initially empty list.
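The UF-CMA experiment of Definition 8 can be written as a small test harness. The following Python sketch is illustrative only: the function `uf_cma_experiment` and the callback conventions `key()`, `sign(sk, msg)`, `verify(vk, msg, sigma)` are assumptions of this sketch, and the placeholder scheme and the two adversaries exist only to show how the winning condition \( msg ^{\dagger } \notin Q_m\) is enforced.

```python
def uf_cma_experiment(key, sign, verify, adversary) -> bool:
    """Run the UF-CMA game once; return True iff the adversary wins.

    key() -> (vk, sk); sign(sk, msg) -> sigma; verify(vk, msg, sigma) -> bool;
    adversary(vk, oracle) -> (msg_dagger, sigma_dagger).
    """
    vk, sk = key()
    q_m = []                         # Q_m: every message submitted to O_s

    def signing_oracle(msg):         # O_s
        q_m.append(msg)
        return sign(sk, msg)

    msg_d, sigma_d = adversary(vk, signing_oracle)
    return msg_d not in q_m and verify(vk, msg_d, sigma_d)

# Placeholder scheme that accepts every signature (trivially forgeable; illustration only)
def broken_key():
    return "vk", "sk"

def broken_sign(sk, msg):
    return b""

def broken_verify(vk, msg, sigma):
    return True

def replay_adversary(vk, oracle):
    sigma = oracle(b"m")
    return b"m", sigma               # msg is in Q_m, so this is not a forgery

def fresh_adversary(vk, oracle):
    return b"never queried", b""     # msg not in Q_m and accepted: a forgery

print(uf_cma_experiment(broken_key, broken_sign, broken_verify, replay_adversary))  # False
print(uf_cma_experiment(broken_key, broken_sign, broken_verify, fresh_adversary))   # True
```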

Definition 9

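(Unforgeability against non-adaptive chosen message attacks) A signature scheme is unforgeable against non-adaptive chosen message attacks (UF-NACMA) if for any probabilistic polynomial-time algorithm \(\mathcal{A}\) and any polynomial n in \(\lambda \), the following advantage function is bounded by a negligible function in \(\lambda \):

\[ \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {nacma}}_{\mathsf {SIG},\mathcal{A}}(\lambda ) := \Pr \left[ msg ^{\dagger } \notin \{ msg _1,\ldots , msg _n\} \;\wedge \; 1 = \mathsf {SIG}.\mathsf {Vrf} (vk, msg ^{\dagger }, \sigma ^{\dagger }) \;\middle |\; gk \leftarrow \mathsf {Setup}(1^\lambda ),\; ( msg _1,\ldots , msg _n) \leftarrow \mathcal{A}(gk),\; (vk,sk) \leftarrow \mathsf {SIG}.\mathsf {Key} (gk),\; \sigma _j \leftarrow \mathsf {SIG}.\mathsf {Sign} (sk, msg _j) \text { for } j=1,\ldots ,n,\; ( msg ^{\dagger }, \sigma ^{\dagger }) \leftarrow \mathcal{A}(vk, \sigma _1,\ldots ,\sigma _n) \right]. \]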

It is implicit that \(\mathcal{A}\) in the first run hands over an internal state to that in the second run.

Definition 10

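(Unforgeability against random message attacks (\(\text {UF}\text {-}\text {RMA}\)) [22]) A signature scheme is unforgeable against random message attacks (UF-RMA) if for any probabilistic polynomial-time algorithm \(\mathcal{A}\) and any positive integer n bounded by a polynomial in \(\lambda \), the following advantage function is negligible in \(\lambda \):

\[ \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {rma}}_{\mathsf {SIG},\mathcal{A}}(\lambda ) := \Pr \left[ msg ^{\dagger } \notin \{ msg _1,\ldots , msg _n\} \;\wedge \; 1 = \mathsf {SIG}.\mathsf {Vrf} (vk, msg ^{\dagger }, \sigma ^{\dagger }) \;\middle |\; gk \leftarrow \mathsf {Setup}(1^\lambda ),\; (vk,sk) \leftarrow \mathsf {SIG}.\mathsf {Key} (gk),\; msg _j \leftarrow \mathcal{M},\; \sigma _j \leftarrow \mathsf {SIG}.\mathsf {Sign} (sk, msg _j) \text { for } j=1,\ldots ,n,\; ( msg ^{\dagger }, \sigma ^{\dagger }) \leftarrow \mathcal{A}(vk, \{(\sigma _j, msg _j)\}_{j=1}^{n}) \right]. \]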

We consider a variation of random message attacks in which the adversary is given, for example, the random coins used to sample the random messages. Our formal definition covers the more general notion of auxiliary information about the message generator, as follows. Let \(\mathsf {MSGGen}\) be a message generation algorithm that takes \(gk\) (and random coins) as input and outputs \( msg \in \mathcal{M}\). Furthermore, \(\mathsf {MSGGen}\) outputs auxiliary information \(\omega \), which may give some hint about the random coins used for selecting \( msg \). The extended random message attack is defined relative to the message generator \(\mathsf {MSGGen}\) as follows.

The above syntax and security notions apply to one-time signature schemes by restricting the oracle to a single query, or by setting parameter n to 1.

Definition 11

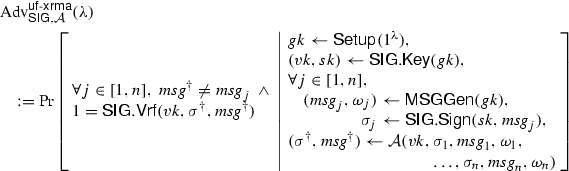

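(Unforgeability against extended random message attacks (\(\text {UF}\text {-}\text {XRMA}\))) A signature scheme is unforgeable against extended random message attacks (UF-XRMA) with respect to message sampler \(\mathsf {MSGGen}\) if for any probabilistic polynomial-time algorithm \(\mathcal{A}\) and any positive integer n bounded by a polynomial in \(\lambda \), the following advantage function is bounded by a negligible function in \(\lambda \):

\[ \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {xrma}}_{\mathsf {SIG},\mathcal{A}}(\lambda ) := \Pr \left[ msg ^{\dagger } \notin \{ msg _1,\ldots , msg _n\} \;\wedge \; 1 = \mathsf {SIG}.\mathsf {Vrf} (vk, msg ^{\dagger }, \sigma ^{\dagger }) \;\middle |\; gk \leftarrow \mathsf {Setup}(1^\lambda ),\; (vk,sk) \leftarrow \mathsf {SIG}.\mathsf {Key} (gk),\; ( msg _j, \omega _j) \leftarrow \mathsf {MSGGen}(gk),\; \sigma _j \leftarrow \mathsf {SIG}.\mathsf {Sign} (sk, msg _j) \text { for } j=1,\ldots ,n,\; ( msg ^{\dagger }, \sigma ^{\dagger }) \leftarrow \mathcal{A}(vk, \{(\sigma _j, msg _j, \omega _j)\}_{j=1}^{n}) \right]. \]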
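The difference between RMA and XRMA lies only in what the adversary receives. The Python sketch below assumes a hypothetical message generator `msggen_with_coins` that samples an exponent w and outputs the toy group element \(G^w\) together with \(\omega = w\), i.e., the strongest possible auxiliary information; the toy group (the order-1019 subgroup of \(\mathbb {Z}_{2039}^*\) generated by 4) is illustrative and far too small to be secure. Wrapping the generator with the hypothetical `rma_view` drops \(\omega \) and yields the plain RMA view.

```python
import secrets

Q, P, G = 2039, 1019, 4  # toy group: order-P subgroup of Z_Q^*, generator G (assumption)

def msggen_with_coins():
    """Hypothetical MSGGen: returns (msg, omega) with msg = G^w and omega = w."""
    w = secrets.randbelow(P)
    return pow(G, w, Q), w

def rma_view(msggen):
    """Plain UF-RMA: same message distribution, but omega is withheld."""
    def wrapped():
        msg, _ = msggen()
        return msg, None
    return wrapped

def uf_xrma_experiment(key, sign, verify, adversary, msggen, n: int) -> bool:
    """One run of the UF-XRMA game; True iff the adversary wins."""
    vk, sk = key()
    transcript = []
    for _ in range(n):
        msg, omega = msggen()                    # the challenger picks the message
        transcript.append((msg, sign(sk, msg), omega))
    msg_d, sigma_d = adversary(vk, transcript)
    queried = [m for m, _, _ in transcript]
    return msg_d not in queried and verify(vk, msg_d, sigma_d)

# Usage with a placeholder scheme that accepts every signature (illustration only)
def accept_all_key():
    return None, None

def accept_all_sign(sk, msg):
    return b""

def accept_all_verify(vk, msg, sigma):
    return True

def replay(vk, transcript):
    msg, sigma, omega = transcript[0]
    assert msg == pow(G, omega, Q)   # omega reveals the message's exponent
    return msg, sigma                # a received message never counts as a forgery

print(uf_xrma_experiment(accept_all_key, accept_all_sign, accept_all_verify,
                         replay, msggen_with_coins, n=3))  # False
```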

For the above security notions, \(\text {UF}\text {-}\text {CMA}\Rightarrow \text {UF}\text {-}\text {XRMA}\Rightarrow \text {UF}\text {-}\text {RMA}\) holds. More precisely, for any signature scheme \(\mathsf {SIG}\), for any \(\mathcal{A}'\) there exists \(\mathcal{A}\) such that \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {cma}}_{\mathsf {SIG},\mathcal{A}}(\lambda ) \ge \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {xrma}}_{\mathsf {SIG},\mathcal{A}'}(\lambda )\), and for any \(\mathcal{A}''\) there exists \(\mathcal{A}'\) such that \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {xrma}}_{\mathsf {SIG},\mathcal{A}'}(\lambda ) \ge \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {rma}}_{\mathsf {SIG},\mathcal{A}''}(\lambda ) \).

3.3 Partial One-Time and Tagged One-Time Signatures

Partial one-time signatures, also known as two-tier signatures [12], are a variation of one-time signatures where only part of the public-key and secret-key must be updated for every signing, while the remaining part can be persistent.

Definition 12

(Partial one-time signature scheme [12]) A partial one-time signature scheme \(\mathsf {POS}\) is a set of polynomial-time algorithms \(\mathsf {POS}.\{\mathsf {Key},\mathsf {Update}, \mathsf {Sign}, \mathsf {Vrf}\}\).

-

\(\mathsf {POS}.\mathsf {Key} (gk)\) generates a long-term public-key \( pk \) and secret-key \( sk \), and sets the associated message space to be \(\mathcal{M}_{o}\) as defined by \(gk\) (Recall that we require that \(\mathcal{M}_{o}\) be completely defined by \(gk\)).

-

\(\mathsf {POS}.\mathsf {Update} (gk)\) takes \(gk\) as input, and outputs a one-time key pair \(( opk , osk )\). We denote the space for \( opk \) by \(\mathcal{K}_{ opk }\).

-

\(\mathsf {POS}.\mathsf {Sign} ( sk , msg , osk )\) outputs a signature \(\sigma \) on message \( msg \) based on \( sk \) and \( osk \).

-

\(\mathsf {POS}.\mathsf {Vrf} ( pk , opk , msg , \sigma )\) outputs 1 for acceptance, or 0 for rejection.

Correctness requires that \(1 = \mathsf {POS}.\mathsf {Vrf} ( pk , opk , msg , \sigma )\) holds except for negligible probability for any \(gk,\, pk ,\, opk ,\,\sigma \), and \( msg \in \mathcal{M}_{o}\), such that \( gk \leftarrow \mathsf {Setup}(1^{\lambda }),\,( pk , sk ) \leftarrow \mathsf {POS}.\mathsf {Key} (gk),\,( opk , osk ) \leftarrow \mathsf {POS}.\mathsf {Update} (gk),\,\sigma \leftarrow \mathsf {POS}.\mathsf {Sign} ( sk , msg , osk )\).

A tagged one-time signature scheme is a signature scheme whose signing algorithm takes a tag as input in addition to the long-term secret-key. A tag is one-time, i.e., it must be different for every signing operation.

Definition 13

(Tagged one-time signature scheme) A tagged one-time signature scheme \(\mathsf {TOS}\) is a set of polynomial-time algorithms \(\mathsf {TOS}.\{\mathsf {Key},\mathsf {Tag}, \mathsf {Sign}, \mathsf {Vrf}\}\).

-

\(\mathsf {TOS}.\mathsf {Key} (gk)\) generates a long-term public-key \( pk \) and secret-key \( sk \), and sets the associated message space to be \(\mathcal{M}_{t}\) as defined by \(gk\).

-

\(\mathsf {TOS}.\mathsf {Tag} (gk)\) takes \(gk\) as input and outputs \( tag \). By \(\mathcal{T}\), we denote the space for \( tag \).

-

\(\mathsf {TOS}.\mathsf {Sign} ( sk , msg , tag )\) outputs signature \(\sigma \) for message \( msg \) based on \( sk \) and \( tag \).

-

\(\mathsf {TOS}.\mathsf {Vrf} ( pk , tag , msg , \sigma )\) outputs 1 for acceptance, or 0 for rejection.

Correctness requires that \(1 = \mathsf {TOS}.\mathsf {Vrf} ( pk , tag , msg , \sigma )\) holds except for negligible probability for any \(gk,\, pk ,\, tag ,\,\sigma \), and \( msg \in \mathcal{M}_{t}\), such that \( gk \leftarrow \mathsf {Setup}(1^{\lambda }),\,( pk , sk ) \leftarrow \mathsf {TOS}.\mathsf {Key} (gk),\, tag \leftarrow \mathsf {TOS}.\mathsf {Tag} (gk),\,\sigma \leftarrow \mathsf {TOS}.\mathsf {Sign} ( sk , msg , tag )\).

A \(\mathsf {TOS}\) scheme is a \(\mathsf {POS}\) scheme for which \( tag = osk = opk \). We can thus give a security notion for \(\mathsf {POS}\) schemes that also applies to \(\mathsf {TOS}\) schemes by reading \(\mathsf {Update}= \mathsf {Tag}\) and \( tag = osk = opk \).
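The observation that a \(\mathsf {TOS}\) is a \(\mathsf {POS}\) with \( tag = osk = opk \) can be expressed as an adapter. In this Python sketch, the `NamedTuple` interfaces `TOS` and `POS` and the function `tos_as_pos` are hypothetical names mirroring Definitions 12 and 13; since the argument orders of \(\mathsf {TOS}.\mathsf {Sign}\)/\(\mathsf {POS}.\mathsf {Sign}\) and \(\mathsf {TOS}.\mathsf {Vrf}\)/\(\mathsf {POS}.\mathsf {Vrf}\) coincide, only \(\mathsf {Update}\) needs new code. The placeholder TOS in the usage part is insecure and uses a fixed tag purely to keep the example deterministic.

```python
from typing import Any, Callable, NamedTuple

class TOS(NamedTuple):
    key: Callable[[Any], Any]                  # gk -> (pk, sk)
    tag: Callable[[Any], Any]                  # gk -> tag
    sign: Callable[[Any, Any, Any], Any]       # (sk, msg, tag) -> sigma
    vrf: Callable[[Any, Any, Any, Any], bool]  # (pk, tag, msg, sigma) -> accept?

class POS(NamedTuple):
    key: Callable[[Any], Any]                  # gk -> (pk, sk)
    update: Callable[[Any], Any]               # gk -> (opk, osk)
    sign: Callable[[Any, Any, Any], Any]       # (sk, msg, osk) -> sigma
    vrf: Callable[[Any, Any, Any, Any], bool]  # (pk, opk, msg, sigma) -> accept?

def tos_as_pos(t: TOS) -> POS:
    def update(gk):
        tag = t.tag(gk)
        return tag, tag                        # opk = osk = tag
    # Sign/Vrf argument orders already coincide, so they are reused unchanged.
    return POS(key=t.key, update=update, sign=t.sign, vrf=t.vrf)

# Usage with an INSECURE placeholder TOS over Z_1019 (assumption, illustration only)
P = 1019
toy_tos = TOS(key=lambda gk: (7, 7),
              tag=lambda gk: 3,                # fixed tag only for determinism
              sign=lambda sk, msg, tag: (sk * msg + tag) % P,
              vrf=lambda pk, tag, msg, sigma: sigma == (pk * msg + tag) % P)
pos = tos_as_pos(toy_tos)
pk, sk = pos.key(None)
opk, osk = pos.update(None)
sigma = pos.sign(sk, 5, osk)
print(opk == osk, pos.vrf(pk, opk, 5, sigma))  # True True
```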

Definition 14

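(Unforgeability against one-time adaptive chosen message attacks) A partial one-time signature scheme is unforgeable against one-time adaptive chosen message attacks (OT-CMA) if for any probabilistic polynomial-time oracle algorithm \(\mathcal{A}\) the following advantage function is negligible in \(\lambda \):

\[ \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {cma}}_{\mathsf {POS},\mathcal{A}}(\lambda ) := \Pr \left[ \exists \, ( opk , msg , \sigma ) \in Q_m \text { s.t. } opk ^{\dagger } = opk \;\wedge \; msg ^{\dagger } \ne msg \;\wedge \; 1 = \mathsf {POS}.\mathsf {Vrf} ( pk , opk ^{\dagger }, msg ^{\dagger }, \sigma ^{\dagger }) \;\middle |\; gk \leftarrow \mathsf {Setup}(1^\lambda ),\; ( pk , sk ) \leftarrow \mathsf {POS}.\mathsf {Key} (gk),\; ( opk ^{\dagger }, \sigma ^{\dagger }, msg ^{\dagger }) \leftarrow \mathcal{A}^{\mathcal{O}_t,\mathcal{O}_s}( pk ) \right]. \]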

\(Q_m\) is initially an empty list. \(\mathcal{O}_t\) is the one-time key generation oracle that on receiving a request invokes a fresh session j, performs \(( opk _j, osk _j)\leftarrow \mathsf {POS}.\mathsf {Update} (gk)\), and returns \( opk _j\). \(\mathcal{O}_s\) is the signing oracle that, on receiving a message \( msg _j\) for session j, performs \(\sigma _j \!\!\leftarrow \! \mathsf {POS}.\mathsf {Sign} ( sk , msg _j, osk _j)\), returns \(\sigma _j\) to \(\mathcal{A}\), and records \(( opk _j, msg _j, \sigma _j)\) to the list \(Q_m\). \(\mathcal{O}_s\) works only once for each session. Strong unforgeability is defined by replacing condition \( msg ^{\dagger } \ne msg \) with \(( msg ^{\dagger }, \sigma ^{\dagger }) \ne ( msg , \sigma )\).

We define a non-adaptive variant (OT-NACMA) of the above notion by integrating \(\mathcal{O}_t\) into \(\mathcal{O}_s\) so that \( opk _j\) and \(\sigma _j\) are returned to \(\mathcal{A}\) at the same time. Namely, \(\mathcal{A}\) must submit \( msg _j\) before seeing \( opk _j\). If a scheme is secure in the sense of \(\text {OT}\text {-}\text {CMA}\), the scheme is also secure in the sense of \(\text {OT}\text {-}\text {NACMA}\). By \( \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {nacma}}_{\mathsf {POS},\mathcal{A}}(\lambda )\) we denote the advantage of \(\mathcal{A}\) in this non-adaptive case. For \(\mathsf {TOS}\), we use the same notation, OT-CMA and OT-NACMA, and define advantage functions \( \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {cma}}_{\mathsf {TOS},\mathcal{A}}\) and \( \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {nacma}}_{\mathsf {TOS},\mathcal{A}}\) accordingly. We will also consider strong unforgeability, for which we use labels \(\mathsf {sot}\text {-}\mathsf {cma}\) and \(\mathsf {sot}\text {-}\mathsf {nacma}\). Recall that if a scheme is strongly unforgeable, it is unforgeable as well.

We define a condition that is relevant for coupling random message secure signature schemes with partial one-time and tagged one-time signature schemes in later sections.

Definition 15

(Tag/one-time public-key uniformity) A \(\mathsf {TOS}\) is called uniform tag if \(\mathsf {TOS}.\mathsf {Tag} \) outputs a \( tag \) that is uniformly distributed over the tag space \(\mathcal{T}\). Similarly, a \(\mathsf {POS}\) is called uniform-key if \(\mathsf {POS}.\mathsf {Update} \) outputs an \( opk \) that is uniformly distributed over the key space \(\mathcal{K}_{ opk }\).

3.4 Structure-Preserving Signatures

A signature scheme is structure-preserving over a bilinear group \(\varLambda \), if public-keys, signatures, and messages are all source group elements of \(\varLambda \), and the verification only evaluates pairing product equations. Similarly, \(\mathsf {POS}\) and \(\mathsf {TOS}\) schemes are structure-preserving if their public-keys, signatures, messages, and tags or one-time public-keys consist of source group elements and the verification only evaluates pairing product equations.

4 Generic Constructions

4.1 \(\mathsf {SIG{1}}\): Combining Tagged One-Time and RMA-Secure Signatures

Let \(\mathsf {{r}SIG{}}\) be a signature scheme with message space \(\mathcal{M}_{\mathsf {{r}}}\), and \({{\mathsf {TOS}}{}}\) be a tagged one-time signature scheme with tag space \(\mathcal{T}\) such that \(\mathcal{M}_{\mathsf {{r}}} = \mathcal{T}\) and both schemes use the same \(\mathsf {Setup}\). We construct a signature scheme \(\mathsf {SIG{1}}\) from \(\mathsf {{r}SIG{}}\) and \({{\mathsf {TOS}}{}}\). Let \(gk\) be the global parameter generated by \(\mathsf {Setup}(1^\lambda )\). It is assumed that a secret-key of \(\mathsf {{r}SIG{}}\) includes \(gk\).

[Generic Construction 1: \(\mathsf {SIG{1}}\) ]

- \(\mathsf {SIG{1}}.\mathsf {Key} (gk)\): Run \(( pk _{t}, sk _{t}) \leftarrow \mathsf {TOS}.\mathsf {Key} (gk),\,(vk _{r},sk _{r}) \leftarrow \mathsf {{r}SIG{}}.\mathsf {Key} (gk)\). Output \(vk :=( pk _{t},vk _{r})\) and \(sk :=( sk _{t},sk _{r})\).

- \(\mathsf {SIG{1}}.\mathsf {Sign} (sk, msg )\): Parse \(sk \) into \(( sk _{t},sk _{r})\) and take \(gk\) from \(sk _{r}\). Run \( tag \leftarrow \mathsf {TOS}.\mathsf {Tag} (gk),\,\sigma _{t} \leftarrow \mathsf {TOS}.\mathsf {Sign} ( sk _{t}, msg , tag ),\,\sigma _{r} \leftarrow \mathsf {{r}SIG{}}.\mathsf {Sign} (sk _{r}, tag )\). Output \(\sigma :=( tag , \sigma _{t}, \sigma _{r})\).

- \(\mathsf {SIG{1}}.\mathsf {Vrf} (vk, msg , \sigma )\): Parse \(vk \) and \(\sigma \) accordingly. Output 1 if \(1 = \mathsf {TOS}.\mathsf {Vrf} ( pk _{t}, tag , msg , \sigma _{t})\) and \(1 = \mathsf {{r}SIG{}}.\mathsf {Vrf} (vk _{r}, tag , \sigma _{r})\). Output 0 otherwise.
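The wiring of Generic Construction 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not an implementation of the schemes in this paper: the interface names (`TaggedOneTime`, `RmaSig`, `make_sig1`) are hypothetical, and the two component schemes are deliberately INSECURE HMAC stand-ins whose verification key equals the signing key. They exist only so that the composition (fresh tag per signature, TOS on the message, rSIG on the tag) can be run end to end.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class TaggedOneTime:
    """Interface of a TOS as used by SIG1 (illustrative names)."""
    key: Callable[[Any], Tuple[Any, Any]]          # key(gk) -> (pk_t, sk_t)
    tag: Callable[[Any], bytes]                    # tag(gk) -> tag
    sign: Callable[[Any, bytes, bytes], Any]       # sign(sk_t, msg, tag) -> sigma_t
    vrf: Callable[[Any, bytes, bytes, Any], bool]  # vrf(pk_t, tag, msg, sigma_t)


@dataclass
class RmaSig:
    """Interface of rSIG; its message space must equal the TOS tag space."""
    key: Callable[[Any], Tuple[Any, Any]]          # key(gk) -> (vk_r, sk_r)
    sign: Callable[[Any, bytes], Any]              # sign(sk_r, msg) -> sigma_r
    vrf: Callable[[Any, bytes, Any], bool]         # vrf(vk_r, msg, sigma_r)


def make_sig1(tos: TaggedOneTime, rsig: RmaSig):
    """Generic Construction 1: TOS-sign msg under a fresh tag, then rSIG-sign the tag."""
    def key(gk):
        pk_t, sk_t = tos.key(gk)
        vk_r, sk_r = rsig.key(gk)
        # The paper takes gk from sk_r; here gk is simply kept next to the two keys.
        return (pk_t, vk_r), (sk_t, sk_r, gk)

    def sign(sk, msg):
        sk_t, sk_r, gk = sk
        tag = tos.tag(gk)
        return tag, tos.sign(sk_t, msg, tag), rsig.sign(sk_r, tag)

    def vrf(vk, msg, sigma):
        pk_t, vk_r = vk
        tag, sigma_t, sigma_r = sigma
        return tos.vrf(pk_t, tag, msg, sigma_t) and rsig.vrf(vk_r, tag, sigma_r)

    return key, sign, vrf


# INSECURE stand-ins (verification key == signing key), only to exercise the wiring.
def _mac(key: bytes, *parts: bytes) -> bytes:
    h = hmac.new(key, digestmod=hashlib.sha256)
    for part in parts:
        h.update(len(part).to_bytes(4, "big") + part)  # length-prefix each part
    return h.digest()


def _shared_key(gk):
    k = secrets.token_bytes(32)
    return k, k


toy_tos = TaggedOneTime(
    key=_shared_key,
    tag=lambda gk: secrets.token_bytes(16),            # uniformly random tags
    sign=lambda sk, msg, tag: _mac(sk, tag, msg),
    vrf=lambda pk, tag, msg, s: hmac.compare_digest(_mac(pk, tag, msg), s),
)
toy_rsig = RmaSig(
    key=_shared_key,
    sign=lambda sk, msg: _mac(sk, msg),
    vrf=lambda vk, msg, s: hmac.compare_digest(_mac(vk, msg), s),
)

key, sign, vrf = make_sig1(toy_tos, toy_rsig)
vk, sk = key(None)           # gk is unused by the stand-ins
sigma = sign(sk, b"hello")
```

Because a fresh tag is drawn for every signature, the rSIG key only ever signs tags produced by `tos.tag`, which mirrors why RMA security suffices when the TOS is uniform tag.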

We prove that \(\mathsf {SIG{1}}\) is secure by showing a reduction to the security of each component. As our reductions are efficient in their running time, we only relate success probabilities.

Theorem 1

\(\mathsf {SIG{1}}\) is UF-CMA if \(\mathsf {TOS}\) is uniform tag and OT-NACMA, and \(\mathsf {{r}SIG{}}\) is UF-RMA. In particular, for any p.p.t. algorithm \(\mathcal{A}\) there exist p.p.t. algorithms \(\mathcal{B}\) and \(\mathcal{C}\) such that \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf cma }_{\mathsf {SIG{1}},\mathcal{A}}(\lambda ) \le \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {nacma}}_{\mathsf {TOS},\mathcal{B}}(\lambda ) + \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {rma}}_{\mathsf {{r}SIG{}},\mathcal{C}}(\lambda )\).

Security against random message attacks is sufficient for \(\mathsf {{r}SIG{}}\) as it is used only to sign uniformly chosen tags. To prove this formally, however, we use the important fact that the signing function of \(\mathsf {TOS}\) does not require any secret associated with the tags. Starting from the \(\text {UF}\text {-}\text {CMA}\) game for \(\mathsf {SIG{1}}\), the security proof evaluates two game transitions. The first transition is based on the \(\text {OT}\text {-}\text {NACMA}\) security of \(\mathsf {TOS}\). This part is rather simple, as we can construct a simulator in a straightforward manner by following the key generation and signing of \(\mathsf {{r}SIG{}}\). The second transition is based on the \(\text {UF}\text {-}\text {RMA}\) security of \(\mathsf {{r}SIG{}}\). We construct a simulator that, given signatures of \(\mathsf {{r}SIG{}}\) on uniformly chosen tags as messages, simulates signatures of \(\mathsf {SIG{1}}\) for messages provided by the adversary. For this, the simulator needs to compute one-time signatures of \(\mathsf {TOS}\) for the given uniform tags. This is possible because the simulator holds the legitimate signing key \( sk _{t}\), which suffices to run the signing function of \(\mathsf {TOS}\) on any tag.

Proof

Any signature that is accepted as a successful forgery must either reuse an existing tag or sign a new tag. We show that the former case reduces to attacking \(\mathsf {TOS}\) and the latter case reduces to attacking \(\mathsf {{r}SIG{}}\). Thus the success probability \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf cma }_{\mathsf {SIG{1}},\mathcal{A}}(\lambda )\) of an attacker on \(\mathsf {SIG{1}}\) is bounded by the sum of the success probability \( \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {nacma}}_{\mathsf {TOS},\mathcal{B}}(\lambda )\) of an attacker on \(\mathsf {TOS}\) and the success probability \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {rma}}_{\mathsf {{r}SIG{}},\mathcal{C}}(\lambda )\) of an attacker on \(\mathsf {{r}SIG{}}\).

- Game 0: The actual unforgeability game. \(\Pr [\mathbf{Game \,0}] = \text {Adv} ^{\mathsf {uf}\text {-}\mathsf cma }_{\mathsf {SIG{1}},\mathcal{A}}(\lambda )\).

- Game 1: Identical to Game 0, except that the winning condition no longer accepts forgeries that reuse a tag from a signing query.

Lemma 1

\(|\Pr [\mathbf{Game \,0}]- \Pr [\mathbf{Game\, 1}]| \le \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {nacma}}_{\mathsf {TOS},\mathcal{B}}(\lambda )\)

Proof

Attacker \(\mathcal{A}\) wins in Game 0 but loses in Game 1 iff it produces a forgery that reuses a tag from a signing query. We describe a reduction \(\mathcal{B}\) that uses such an attacker to break the OT-NACMA security of \(\mathsf {TOS}\). The reduction \(\mathcal{B}\) receives \(gk\) and \( pk _{t}\) from the challenger of \(\mathsf {TOS}\), sets up \(vk _{r}\) and \(sk _{r}\) honestly by running \(\mathsf {{r}SIG{}}.\mathsf {Key} (gk)\), and provides \(gk\) and \(vk = ( pk _{t}, vk _{r})\) to \(\mathcal{A}\).

To answer a signing query on \( msg \), \(\mathcal{B}\) submits \( msg \) to the signing oracle of \(\mathsf {TOS}\) to get \( tag \) and \(\sigma _{t}\), signs \( tag \) using \(sk _{r}\) to produce \(\sigma _{r}\), and returns \(( tag , \sigma _{t}, \sigma _{r})\). When \(\mathcal{A}\) produces a forgery \(( tag ^{\dagger }, \sigma _{t}^{\dagger }, \sigma _{r}^{\dagger })\) on message \( msg ^{\dagger }\), \(\mathcal{B}\) outputs \(( msg ^{\dagger }, tag ^{\dagger }, \sigma _{t}^{\dagger })\) as a forgery for \(\mathsf {TOS}\). This is a valid forgery, since \( tag ^{\dagger }\) was issued in a session for a queried message, whereas \( msg ^{\dagger }\) was never queried.

- Game 2: The fully idealized game, in which the winning condition rejects all signatures. Hence \(\Pr [\mathbf{Game \,2}] = 0\).

Lemma 2

\(|\Pr [\mathbf{Game \,1}] - \Pr [\mathbf{Game \,2}]| \le \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {rma}}_{\mathsf {{r}SIG{}},\mathcal{C}}(\lambda )\)

Proof

Attacker \(\mathcal{A}\) wins in Game 1 iff it produces a forgery with a fresh tag. We describe a reduction algorithm \(\mathcal{C}\) that uses \(\mathcal{A}\) to break the \(\text {UF}\text {-}\text {RMA}\) security of \(\mathsf {{r}SIG{}}\). Algorithm \(\mathcal{C}\) receives \(gk\) and \(vk _{r}\), runs \(( pk _{t}, sk _{t}) \leftarrow \mathsf {TOS}.\mathsf {Key} (gk)\), and provides \(gk\) and \(vk = ( pk _{t}, vk _{r})\) to \(\mathcal{A}\).

To answer a signing query on message \( msg \), algorithm \(\mathcal{C}\) consults \(\mathcal{O}_s\) and receives a random message \( msg _{r}\leftarrow \mathcal{T}\) and a signature \(\sigma _{r}\). Algorithm \(\mathcal{C}\) then uses \( msg _{r}\) as a tag, i.e., \( tag = msg _{r}\), and creates signature \(\sigma _{t}\) on \( msg \) by running \(\mathsf {TOS}.\mathsf {Sign} ( sk _{t}, msg , tag )\). It then returns \(( tag , \sigma _{t}, \sigma _{r})\). Since \(\mathsf {TOS}\) is uniform tag, \(\mathsf {TOS}.\mathsf {Tag} (gk)\) also generates tags distributed uniformly over the tag space \(\mathcal{T}\), so the simulation is perfect. When \(\mathcal{A}\) produces a forgery \(( tag ^{\dagger }, \sigma _{t}^{\dagger }, \sigma _{r}^{\dagger })\) on \( msg ^{\dagger }\), algorithm \(\mathcal{C}\) outputs \(( tag ^{\dagger }, \sigma _{r}^{\dagger })\) as a forgery, which is valid because \( tag ^{\dagger }\) is fresh.

Thus \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf cma }_{\mathsf {SIG{1}},\mathcal{A}}(\lambda ) = \Pr [\mathbf{Game \,0}] \le \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {nacma}}_{\mathsf {TOS},\mathcal{B}}(\lambda ) + \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {rma}}_{\mathsf {{r}SIG{}},\mathcal{C}}(\lambda )\) as claimed.

The following theorem is immediately obtained from the construction.

Theorem 2

If \(\mathsf {TOS}.\mathsf {Tag} \) produces constant-size tags and \(\mathsf {TOS}\) produces signatures whose size does not depend on the size of input messages, the resulting \(\mathsf {SIG{1}}\) produces constant-size signatures as well. Furthermore, if \(\mathsf {TOS}\) and \(\mathsf {{r}SIG{}}\) are structure-preserving, so is \(\mathsf {SIG{1}}\).

4.2 \(\mathsf {SIG{2}}\): Combining Partial One-Time and XRMA-Secure Signatures

Let \(\mathsf {{x}SIG{}}\) be a signature scheme with message space \(\mathcal{M}_{\mathsf {{x}}}\), and \(\mathsf {POS}\) be a partial one-time signature scheme with one-time public-key space \(\mathcal{K}_{ opk }\) such that \(\mathcal{M}_{\mathsf {{x}}} = \mathcal{K}_{ opk }\) and both schemes use the same \(\mathsf {Setup}\). We construct a signature scheme \(\mathsf {SIG{2}}\) from \(\mathsf {{x}SIG{}}\) and \(\mathsf {POS}\). Let \(gk\) be a global parameter generated by \(\mathsf {Setup}(1^\lambda )\). It is assumed that a secret-key for \(\mathsf {{x}SIG{}}\) contains \(gk\).

[Generic Construction 2: \(\mathsf {SIG{2}}\) ]

- \(\mathsf {SIG{2}}.\mathsf {Key} (gk)\): Run \(( pk _{p}, sk _{p}) \leftarrow \mathsf {POS}.\mathsf {Key} (gk),\,(vk _{x},sk _{x}) \leftarrow \mathsf {{x}SIG{}}.\mathsf {Key} (gk)\). Output \(vk :=( pk _{p},vk _{x})\) and \(sk :=( sk _{p},sk _{x})\).

- \(\mathsf {SIG{2}}.\mathsf {Sign} (sk, msg )\): Parse \(sk \) into \(( sk _{p},sk _{x})\) and take \(gk\) from \(sk _{x}\). Run \(( opk , osk ) \leftarrow \mathsf {POS}.\mathsf {Update} (gk),\,\sigma _{p} \leftarrow \mathsf {POS}.\mathsf {Sign} ( sk _{p}, msg , osk ),\,\sigma _{x} \leftarrow \mathsf {{x}SIG{}}.\mathsf {Sign} (sk _{x}, opk )\). Output \(\sigma :=( opk , \sigma _{p}, \sigma _{x})\).

- \(\mathsf {SIG{2}}.\mathsf {Vrf} (vk, msg , \sigma )\): Parse \(vk \) and \(\sigma \) accordingly. Output 1 if \(1 = \mathsf {POS}.\mathsf {Vrf} ( pk _{p}, opk , msg , \sigma _{p})\), and \(1 = \mathsf {{x}SIG{}}.\mathsf {Vrf} (vk _{x}, opk ,\sigma _{x})\). Output 0 otherwise.
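A similar sketch for Generic Construction 2 highlights the one structural difference: each signature uses a fresh one-time key pair from \(\mathsf {POS}.\mathsf {Update} \), the one-time secret key \( osk \) is used only to sign the message, and only \( opk \) is signed by \(\mathsf {{x}SIG{}}\). All function names are hypothetical, and the components are INSECURE HMAC stand-ins (verification key equals signing key, and \( opk = osk \)), included only so that the composition runs; in the sense of the remark that a \(\mathsf {TOS}\) is a \(\mathsf {POS}\) with \( tag = osk = opk \), this stand-in behaves like a tagged scheme.

```python
import hashlib
import hmac
import secrets


# INSECURE stand-ins (verification key == signing key), only to exercise the wiring.
def _mac(key: bytes, *parts: bytes) -> bytes:
    h = hmac.new(key, digestmod=hashlib.sha256)
    for part in parts:
        h.update(len(part).to_bytes(4, "big") + part)  # length-prefix each part
    return h.digest()


def pos_key(gk):
    k = secrets.token_bytes(32)
    return k, k                                   # (pk_p, sk_p)


def pos_update(gk):
    # Stand-in one-time key pair; a real POS derives opk from osk (here they coincide).
    osk = secrets.token_bytes(16)
    return osk, osk                               # (opk, osk)


def pos_sign(sk_p, msg, osk):
    return _mac(sk_p, osk, msg)


def pos_vrf(pk_p, opk, msg, sigma_p):
    return hmac.compare_digest(_mac(pk_p, opk, msg), sigma_p)


def xsig_key(gk):
    return pos_key(gk)                            # independent fresh key (vk_x, sk_x)


def xsig_sign(sk_x, opk):
    return _mac(sk_x, b"xsig", opk)


def xsig_vrf(vk_x, opk, sigma_x):
    return hmac.compare_digest(_mac(vk_x, b"xsig", opk), sigma_x)


# Generic Construction 2 (SIG2), written against the stand-ins above.
def sig2_key(gk):
    pk_p, sk_p = pos_key(gk)
    vk_x, sk_x = xsig_key(gk)
    return (pk_p, vk_x), (sk_p, sk_x, gk)


def sig2_sign(sk, msg):
    sk_p, sk_x, gk = sk
    opk, osk = pos_update(gk)                     # fresh one-time key per signature
    return opk, pos_sign(sk_p, msg, osk), xsig_sign(sk_x, opk)


def sig2_vrf(vk, msg, sigma):
    pk_p, vk_x = vk
    opk, sigma_p, sigma_x = sigma
    return pos_vrf(pk_p, opk, msg, sigma_p) and xsig_vrf(vk_x, opk, sigma_x)
```

In the reduction to \(\text {UF}\text {-}\text {XRMA}\) security, it is exactly the pair returned by `pos_update` that the message generator supplies, which is why the reduction can still produce the \(\mathsf {POS}\) part of each signature.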

Theorem 3

\(\mathsf {SIG{2}}\) is UF-CMA if \(\mathsf {POS}\) is uniform-key and OT-NACMA, and \(\mathsf {{x}SIG{}}\) is \(\text {UF}\text {-}\text {XRMA}\) relative to \(\mathsf {POS}.\mathsf {Update} \) as a message generator. In particular, for any p.p.t. algorithm \(\mathcal{A}\), there exist p.p.t. algorithms \(\mathcal{B}\) and \(\mathcal{C}\) such that \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf cma }_{\mathsf {SIG{2}},\mathcal{A}}(\lambda ) \le \text {Adv} ^{\mathsf {ot}\text {-}\mathsf {nacma}}_{\mathsf {POS},\mathcal{B}}(\lambda ) + \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {xrma}}_{\mathsf {{x}SIG{}},\mathcal{C}}(\lambda )\).

Proof

The proof is almost the same as that for Theorem 1. The only difference appears in constructing \(\mathcal{C}\) in the second step. Since \(\mathsf {POS}.\mathsf {Update} \) is used as the extended random message generator, the pair \(( msg ,\omega )\) is in fact \(( opk , osk )\). Given \(( opk , osk )\), reduction \(\mathcal{C}\) can run \(\mathsf {POS}.\mathsf {Sign} ( sk _{p}, \cdot , osk )\) on the message queried by \(\mathcal{A}\) to yield legitimate signatures.

As with our first generic construction, the following theorem follows directly from the construction.

Theorem 4

If \(\mathsf {POS}\) produces constant-size one-time public-keys and signatures whose size does not depend on the size of input messages, the resulting \(\mathsf {SIG{2}}\) produces constant-size signatures as well. Furthermore, if \(\mathsf {POS}\) and \(\mathsf {{x}SIG{}}\) are structure-preserving, so is \(\mathsf {SIG{2}}\).

5 Instantiating \(\mathsf {SIG{1}}\)

We instantiate the building blocks \(\mathsf {TOS}\) and \(\mathsf {{r}SIG{}}\) of our first generic construction to obtain our first SPS scheme. We do so in the Type-I bilinear group setting. The resulting \(\mathsf {SIG{1}}\) scheme is an efficient structure-preserving signature scheme based only on the \(\text {DLIN}\) assumption.

5.1 Setup for Type-I Groups

The following setup procedure is common to all instantiations in this section. The global parameter \(gk\) is given to all functions implicitly.

- \(\mathsf {Setup}(1^\lambda )\): Run \(\varLambda =(p,{{\mathbb G}},{{\mathbb G}}_T,e) \leftarrow {\mathcal {G}}(1^\lambda )\) and pick random generators \((G, C, F, U) \leftarrow ({{\mathbb G}}^*)^4\). Output \(gk:=(\varLambda , G, C, F,U)\).

The parameter \(gk\) fixes the message space \(\mathcal{M}_{\mathsf {{r}}} :=\{(C^m,F^m,U^m) \in {{\mathbb G}}^3 \;|\; m \in \mathbb {Z}_p\}\) for the RMA-secure signature scheme presented in Sect. 5.3. For our generic framework to work, the tagged one-time signature scheme must have the same tag space.

5.2 Tagged One-Time Signature Scheme

Our scheme generates tags consisting of only one group element, \(C^t\), which is optimal in size. However, as mentioned above, we need to adjust the tag space to match the message space of \(\mathsf {{r}SIG{}}\). We thus describe the scheme with a tag in the extended form \((C^t, F^t, U^t)\). The extended elements \(F^t\) and \(U^t\) can be dropped when unnecessary.

Our concrete construction of \(\mathsf {TOS}\) can be seen as an adaptation of a one-time signature scheme in [7] so that it enjoys an optimally short one-time public key (i.e., the tag) with no corresponding one-time secret key. We note that, given a \(\mathsf {TOS}\), one can construct a one-time signature scheme, but the converse is not known in general.

[Scheme \({{\mathsf {TOS}}{}}\) ]

- \({{\mathsf {TOS}}{}}.\mathsf {Key} ( gk )\): Parse \( gk = (\varLambda , G, C,F,U)\). Choose \(w_z,\,w_r, \mu _z,\, \mu _s, \tau \) uniformly from \(\mathbb {Z}_p^*\) and compute \(G_z :=G^{w_z},\,G_r :=G^{w_r},\,H_z :=G^{\mu _z},\,H_s :=G^{\mu _s}\), and \(G_t :=G^{\tau }\). For \(i=1,\ldots ,k\), uniformly choose \(\chi _i,\,\gamma _i,\,\delta _i\) from \(\mathbb {Z}_p\) and compute

$$\begin{aligned} G_i :=G_z^{\chi _i} G_r^{\gamma _i}, \quad \text {and} \quad H_i :=H_z^{\chi _i} H_s^{\delta _i}. \end{aligned}$$(1)

Output \( pk :=(G_z,\, G_r, H_z,\, H_s, G_t,\, G_1, \ldots ,G_k, H_1, \ldots ,H_k )\in {{\mathbb G}}^{2k+5}\) and \( sk :=(w_r, \mu _s, \tau , \chi _1,\gamma _1,\delta _1, \ldots , \chi _k,\gamma _k,\delta _k) \in \mathbb {Z}_p^{3k+3}\).

- \({{\mathsf {TOS}}{}}.\mathsf {Tag} (gk)\): Choose \(t \leftarrow \mathbb {Z}_p^*\) and compute \(T :=C^t\). Output \( tag :=(T, T', T'') = (C^{t},F^t,U^t) \in {{\mathbb G}}^3\).

- \({{\mathsf {TOS}}{}}.\mathsf {Sign} ( sk , msg , tag )\): Parse \( msg \) as \((\tilde{M}_1,\ldots ,\tilde{M}_k)\) and \( tag \) as \((T,T', T'')\). Parse \( sk \) accordingly. Choose \(\zeta \leftarrow \mathbb {Z}_p\) and output \(\sigma :=(\tilde{Z}, \tilde{R}, S) \in {{\mathbb G}}^3\) where

$$\begin{aligned} \begin{array}{ll} \tilde{Z}:=G^{\zeta } \prod \limits _{i=1}^{k} \tilde{M}_i^{-\chi _i},\quad \tilde{R}:=\left( T^{\tau } G_z^{-\zeta }\right) ^{\frac{1}{w_r}} \prod \limits _{i=1}^{k} \tilde{M}_i^{-\gamma _i}, \text{ and } S\!:=\! \left( H_z^{-\zeta }\right) ^{\frac{1}{\mu _s}} \prod \limits _{i=1}^{k} \tilde{M}_i^{-\delta _i}. \end{array} \end{aligned}$$

- \({{\mathsf {TOS}}{}}.\mathsf {Vrf} ( pk , tag , msg ,\sigma )\): Parse \(\sigma \) as \((\tilde{Z},\tilde{R}, S) \in {{\mathbb G}}^3,\, msg \) as \((\tilde{M}_1,\ldots ,\tilde{M}_k) \in {{\mathbb G}}^k\), and \( tag \) as \((T,T', T'')\). Return 1 if the following equations hold, and 0 otherwise.

$$\begin{aligned} e(T, G_t)&= e\left( G_z, \tilde{Z}\right) \; e\left( G_r, \tilde{R}\right) \; \prod _{i=1}^{k} e(G_i, \tilde{M}_i) \end{aligned}$$(2)
$$\begin{aligned} 1&= e\left( H_z, \tilde{Z}\right) \; e\left( H_s, S\right) \; \prod _{i=1}^{k} e\left( H_i, \tilde{M}_i\right) \end{aligned}$$(3)

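Correctness is verified by inspecting the following relations, which use \(G_r = G^{w_r}\), \(H_s = G^{\mu _s}\), \(G_t = G^{\tau }\), and (1), so that each product over i equals 1:

$$\begin{aligned} e\left( G_z, \tilde{Z}\right) \, e\left( G_r, \tilde{R}\right) \prod _{i=1}^{k} e(G_i, \tilde{M}_i)&= e\left( G_z, G^{\zeta }\right) \, e\left( G, T^{\tau } G_z^{-\zeta }\right) \prod _{i=1}^{k} e\left( G_z^{-\chi _i} G_r^{-\gamma _i} G_i, \tilde{M}_i\right) = e(T, G_t),\\ e\left( H_z, \tilde{Z}\right) \, e\left( H_s, S\right) \prod _{i=1}^{k} e(H_i, \tilde{M}_i)&= e\left( H_z, G^{\zeta }\right) \, e\left( G, H_z^{-\zeta }\right) \prod _{i=1}^{k} e\left( H_z^{-\chi _i} H_s^{-\delta _i} H_i, \tilde{M}_i\right) = 1. \end{aligned}$$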
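The correctness relations above can also be checked numerically. The sketch below assumes a toy "exponent model" that is not from the paper: a group element \(G^x\) is stored as \(x \bmod P\), the group law becomes addition, and \(e(G^a, G^b) = e(G,G)^{ab}\) is stored as \(ab \bmod P\). This model reveals every discrete logarithm and is therefore completely insecure; it only confirms that Eqs. (2) and (3) hold for honestly generated signatures. The prime \(P = 2^{61}-1\) and all function names are illustrative choices.

```python
import random

# Toy exponent model of a Type-I group of prime order P: G^x is stored as x mod P, and
# e(G^a, G^b) = e(G, G)^(a*b) is stored as a*b mod P. INSECURE: it checks algebra only.
P = 2**61 - 1  # a Mersenne prime, chosen here for illustration


def pair(a, b):
    return a * b % P


def tos_key(k, rng):
    wz, wr, muz, mus, tau = (rng.randrange(1, P) for _ in range(5))
    chi, gam, dlt = ([rng.randrange(P) for _ in range(k)] for _ in range(3))
    pk = {"Gz": wz, "Gr": wr, "Hz": muz, "Hs": mus, "Gt": tau,
          "Gi": [(wz * c + wr * g) % P for c, g in zip(chi, gam)],   # G_i = G_z^chi G_r^gamma
          "Hi": [(muz * c + mus * d) % P for c, d in zip(chi, dlt)]}  # H_i = H_z^chi H_s^delta
    sk = {"wr": wr, "mus": mus, "tau": tau, "chi": chi, "gam": gam, "dlt": dlt}
    return pk, sk


def tos_tag(C, rng):
    return C * rng.randrange(1, P) % P       # T = C^t (extended elements F^t, U^t omitted)


def tos_sign(pk, sk, msg, T, rng):
    """TOS.Sign; it also uses the public elements G_z and H_z."""
    zeta = rng.randrange(P)

    def lin(coeffs):
        return sum(c * m for c, m in zip(coeffs, msg))

    Z = (zeta - lin(sk["chi"])) % P
    R = ((T * sk["tau"] - pk["Gz"] * zeta) * pow(sk["wr"], -1, P) - lin(sk["gam"])) % P
    S = (-pk["Hz"] * zeta * pow(sk["mus"], -1, P) - lin(sk["dlt"])) % P
    return Z, R, S


def tos_vrf(pk, T, msg, sig):
    Z, R, S = sig
    eq2 = (pair(pk["Gz"], Z) + pair(pk["Gr"], R)
           + sum(pair(g, m) for g, m in zip(pk["Gi"], msg))) % P == pair(T, pk["Gt"])
    eq3 = (pair(pk["Hz"], Z) + pair(pk["Hs"], S)
           + sum(pair(h, m) for h, m in zip(pk["Hi"], msg))) % P == 0
    return eq2 and eq3
```

A signature produced by `tos_sign` verifies, while changing one message element or the tag makes the check fail (except with probability about \(1/P\) for the message change).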

We state the following theorems, of which the first one is immediate from the construction.

Theorem 5

The above \(\mathsf {TOS}\) is structure-preserving, and yields uniform tags and constant-size signatures.

Theorem 6

The above \({{\mathsf {TOS}}{}}\) is strongly unforgeable against one-time tag adaptive chosen message attacks (SOT-CMA) if the \(\text {SDP}\) assumption holds. In particular, for all p.p.t. algorithms \(\mathcal{A}\), there exists a p.p.t. algorithm \(\mathcal{B}\) such that \( \text {Adv} ^{\mathsf {sot}\text {-}\mathsf {cma}}_{{{\mathsf {TOS}}{,}}\mathcal{A}}(\lambda )\le \text {Adv} ^{\mathsf {sdp}}_{{\mathcal {G}},\mathcal{B}}(\lambda ) + 1/p(\lambda )\), where \(p(\lambda )\) is the order of the groups produced by \({\mathcal {G}}\). Moreover, the run-time overhead of the reduction \(\mathcal{B}\) is a small number of multi-exponentiations per signing or tag query.

Proof

Given a successful forger \(\mathcal{A}\) against \({{\mathsf {TOS}}{}}\) as a black box, we construct \(\mathcal{B}\) that breaks \(\text {SDP}\) as follows. Let \(I_{\mathsf {sdp}}=(\varLambda , G_z, G_r, H_z, H_s)\) be an instance of \({\text {SDP}}\). Algorithm \(\mathcal{B}\) simulates the attack game against \({{\mathsf {TOS}}{}}\) as follows. It first builds \(gk:=(\varLambda , G, C,F,U)\) by choosing \(G\) randomly from \({{\mathbb G}}^*\), choosing \(c,f,u\leftarrow \mathbb {Z}_p^*\), and computing \(C= G^c, F = G^f\), and \(U = G^u\). This yields a \(gk\) in the same distribution as produced by \(\mathsf {Setup}\). Next, \(\mathcal{B}\) simulates \({{\mathsf {TOS}}{}}.\mathsf {Key} \) by taking \((G_z, G_r, H_z, H_s)\) from \(I_{\mathsf {sdp}}\) and computing \(G_t :=H_s^{\tau }\) for random \(\tau \) in \(\mathbb {Z}_p^*\). It then chooses \(\chi _i,\gamma _i,\delta _i\) and generates \(G_i\) and \(H_i\) according to (1). This perfectly simulates \({{\mathsf {TOS}}{}}.\mathsf {Key} \).

On receiving the jth query to \(\mathcal{O}_t\), algorithm \(\mathcal{B}\) computes

$$\begin{aligned} T :=\left( G_z^{\zeta } G_r^{\rho }\right) ^{\frac{1}{\tau }} \end{aligned}$$(4)

for \(\zeta , \rho \leftarrow \mathbb {Z}_p^*\). If \(T=1,\,\mathcal{B}\) sets \(Z^{\star } :=H_s,\,S^{\star } :=H_z^{-1}\), and \(R^{\star } :=(Z^{\star })^{\rho /\zeta }\), outputs \((Z^{\star },R^{\star },S^{\star })\) and stops. Otherwise, \(\mathcal{B}\) stores \((\zeta , \rho )\) and returns \( tag _j :=(T, T^{f/c}, T^{u/c})\) to \(\mathcal{A}\).

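On receiving signing query \( msg _j = (\tilde{M}_1,\ldots ,\tilde{M}_k)\), algorithm \(\mathcal{B}\) takes the \(\zeta \) and \(\rho \) used for computing \( tag _j\) (if \( tag _j\) is not yet defined, it first executes the above procedure for generating \( tag _j\) with fresh \(\zeta \) and \(\rho \)) and computes

$$\begin{aligned} \tilde{Z}:=H_s^{\zeta } \prod _{i=1}^{k} \tilde{M}_i^{-\chi _i},\quad \tilde{R}:=H_s^{\rho } \prod _{i=1}^{k} \tilde{M}_i^{-\gamma _i}, \quad \text {and} \quad S:=H_z^{-\zeta } \prod _{i=1}^{k} \tilde{M}_i^{-\delta _i}. \end{aligned}$$(5)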

Then \(\mathcal{B}\) returns \(\sigma _j :=(\tilde{Z},\tilde{R},S)\) to \(\mathcal{A}\) and records \(( tag _j, \sigma _j, msg _j)\).

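When \(\mathcal{A}\) outputs a forgery \(( tag ^{\dagger },\sigma ^{\dagger }, msg ^{\dagger })\), algorithm \(\mathcal{B}\) searches the records for \(( tag , \sigma , msg )\) such that \( tag ^{\dagger } = tag \) and \(( msg ^{\dagger },\sigma ^{\dagger }) \ne ( msg , \sigma )\). If no such entry exists, \(\mathcal{B}\) aborts. Otherwise, \(\mathcal{B}\) computes

$$\begin{aligned} \tilde{Z}^{\star } :=\frac{\tilde{Z}^{\dagger }}{\tilde{Z}} \prod _{i=1}^{k} \left( \frac{\tilde{M}_i^{\dagger }}{\tilde{M}_i}\right) ^{\chi _i},\quad \tilde{R}^{\star } :=\frac{\tilde{R}^{\dagger }}{\tilde{R}} \prod _{i=1}^{k} \left( \frac{\tilde{M}_i^{\dagger }}{\tilde{M}_i}\right) ^{\gamma _i}, \quad \text {and} \quad S^{\star } :=\frac{S^{\dagger }}{S} \prod _{i=1}^{k} \left( \frac{\tilde{M}_i^{\dagger }}{\tilde{M}_i}\right) ^{\delta _i}, \end{aligned}$$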

where \((\tilde{Z},\tilde{R},S),\,(\tilde{M}_1,\ldots ,\tilde{M}_k)\) and their dagger counterparts are taken from \((\sigma , msg )\) and \((\sigma ^{\dagger }, msg ^{\dagger })\), respectively. \(\mathcal{B}\) finally outputs \((\tilde{Z}^{\star },\tilde{R}^{\star }, S^{\star })\) and stops. This completes the description of \(\mathcal{B}\).

We claim that \(\mathcal{B}\) solves the problem by itself or the view of \(\mathcal{A}\) is perfectly simulated. The correctness of key generation has been already inspected. In the simulation of \(\mathcal{O}_t\), there is a case of \(T=1\) that happens with probability 1 / p. If it happens, \(\mathcal{B}\) outputs a correct answer to \(I_{\mathsf {sdp}}\), which is clear by observing \(G_z=G_r^{-\rho /\zeta },\,Z^{\star } = H_s \ne 1,\,e(G_z, Z^{\star })e(G_r, R^{\star }) = e(G_r^{-\rho /\zeta }, Z^{\star })e(G_r, (Z^{\star })^{\rho /\zeta })=1\) and \(e(H_z, Z^{\star }) e(H_s, S^{\star }) = e(H_z, H_s) e(H_s, H_z^{-1}) = 1\). Otherwise, tag T is uniformly distributed over \({{\mathbb G}}^*\) and the simulation is perfect.

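Oracle \(\mathcal{O}_s\) is simulated perfectly as well. Correctness of simulated \(\sigma _j = (\tilde{Z},\, \tilde{R},\, S)\) can be verified by inspecting the following relations, which use (1), (4), and \(G_t = H_s^{\tau }\):

$$\begin{aligned} e\left( G_z, \tilde{Z}\right) \, e\left( G_r, \tilde{R}\right) \prod _{i=1}^{k} e(G_i, \tilde{M}_i)&= e\left( G_z, H_s^{\zeta }\right) \, e\left( G_r, H_s^{\rho }\right) = e\left( G_z^{\zeta } G_r^{\rho }, H_s\right) = e\left( T^{\tau }, H_s\right) = e(T, G_t),\\ e\left( H_z, \tilde{Z}\right) \, e\left( H_s, S\right) \prod _{i=1}^{k} e(H_i, \tilde{M}_i)&= e\left( H_z, H_s^{\zeta }\right) \, e\left( H_s, H_z^{-\zeta }\right) = 1. \end{aligned}$$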

Every \(\tilde{Z}\) is uniformly distributed over \({{\mathbb G}}\) due to the uniform choice of \(\zeta \). Given \(\tilde{Z}\), the tag, and the message, \(\tilde{R}\) and \(S\) are uniquely determined by Eqs. (2) and (3), so the simulated signatures follow the same distribution as real ones.

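Accordingly, whenever \(\mathcal{A}\) outputs a successful forgery, \(\mathcal{B}\) finds a corresponding record \(( tag ,\sigma , msg )\). We show that output \((\tilde{Z}^{\star }, \tilde{R}^{\star }, S^{\star })\) from \(\mathcal{B}\) is a valid solution to \(I_{\mathsf {sdp}}\). First, \(e(G_z, \tilde{Z}^{\star })\, e(G_r, \tilde{R}^{\star }) = 1\) is satisfied because, by (1) and by Eq. (2) applied to both signatures under the common tag T,

$$\begin{aligned} e\left( G_z, \tilde{Z}^{\star }\right) \, e\left( G_r, \tilde{R}^{\star }\right) = \frac{e\left( G_z, \tilde{Z}^{\dagger }\right) \, e\left( G_r, \tilde{R}^{\dagger }\right) \prod _{i=1}^{k} e\left( G_i, \tilde{M}_i^{\dagger }\right) }{e\left( G_z, \tilde{Z}\right) \, e\left( G_r, \tilde{R}\right) \prod _{i=1}^{k} e\left( G_i, \tilde{M}_i\right) } = \frac{e(T, G_t)}{e(T, G_t)} = 1 \end{aligned}$$

holds. The relation \(e(H_z, \tilde{Z}^{\star })\, e(H_s, S^{\star }) = 1\) follows similarly from Eq. (3).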

It remains to prove that \(\tilde{Z}^{\star } \ne 1\). Note that, if \( msg = msg ^{\dagger }\) but this is still a valid forgery, then it must be the case that \(\sigma \ne \sigma ^{\dagger }\). Since \(\tilde{R}\) and \(S\) (resp. \(\tilde{R}^{\dagger }\) and \(S^{\dagger }\)) are uniquely determined by \(\tilde{Z}\) and \( msg \) (resp. \(\tilde{Z}^{\dagger }\) and \( msg ^{\dagger }\)) for the common tag, this means \(\tilde{Z}\ne \tilde{Z}^{\dagger }\), and hence \(\tilde{Z}^{\star } = \tilde{Z}^{\dagger }/\tilde{Z}\ne 1\). Alternatively, if \( msg ^{\dagger } \ne msg \), then there exists \(\ell \in \{1,\ldots ,k\}\) such that \(\tilde{M}^{\dagger }_{\ell }/\tilde{M}_{\ell }\ne 1\).

We claim that parameters \(\chi _1,\ldots ,\chi _k\) are independent of the view of \(\mathcal{A}\). We prove it by showing that, for every possible assignment to \(\chi _1,\ldots ,\chi _k\), there exists an assignment to the other coins, i.e., \((\gamma _1,\ldots ,\gamma _k,\, \delta _1,\ldots ,\delta _k)\) and \((\zeta ^{(1)}, \rho ^{(1)},\ldots , \zeta ^{(q_s)}, \rho ^{(q_s)})\) for \(q_s\) queries, that is consistent with the view of \(\mathcal{A}\). (By \(\zeta ^{(j)}\), we denote \(\zeta \) with respect to the jth query, and we follow this convention hereafter. Without loss of generality, we assume that \(\mathcal{A}\) makes \(q_s\) tag queries and the same number of signing queries.)

Observe that the view of \(\mathcal{A}\) consists of independent group elements \((G, G_z, G_r, H_z, H_s, G_t, G_1, H_1,\ldots ,G_k, H_k)\) and \((T^{(j)}, \tilde{Z}^{(j)}, \tilde{M}^{(j)}_1, \ldots , \tilde{M}^{(j)}_k)\) for \(j=1,\ldots ,q_s\). (We omit \(\tilde{R}^{(j)}\) and \(S^{(j)}\) from the view since they are uniquely determined by the other components.) We represent the view by the discrete logarithms of these group elements with respect to base \(G\). Namely, the view is represented by \((1, w_z, w_r, \mu _z, \mu _s, \tau , w_1, \mu _1,\ldots , w_k, \mu _k)\) and \((t^{(j)}, z^{(j)}, m^{(j)}_1,\ldots ,m^{(j)}_k)\) for \(j=1,\ldots ,q_s\).
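The view and the random coins satisfy the relations from (1), (4), and (5), which translate to

$$\begin{aligned} w_i&= w_z \chi _i + w_r \gamma _i, \quad \mu _i = \mu _z \chi _i + \mu _s \delta _i \quad (i = 1,\ldots ,k), \end{aligned}$$(6)
$$\begin{aligned} \tau \, t^{(j)}&= w_z \zeta ^{(j)} + w_r \rho ^{(j)} \quad (j = 1,\ldots ,q_s), \end{aligned}$$(7)
$$\begin{aligned} z^{(j)}&= \mu _s \zeta ^{(j)} - \sum _{i=1}^{k} \chi _i\, m^{(j)}_i \quad (j = 1,\ldots ,q_s). \end{aligned}$$(8)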

For any \(\ell \in \{1,\ldots ,k\}\), fix \(\chi _1,\ldots , \chi _{\ell -1},\chi _{\ell +1},\ldots , \chi _k\), and consider \(\chi _\ell \). For every value of \(\chi _\ell \) in \(\mathbb {Z}_p\), the linear equations in (6) determine \(\gamma _\ell \) and \(\delta _\ell \). Then, if \(m^{(j)}_\ell \ne 0\), equation (8) determines \(\zeta ^{(j)}\), and \(\rho ^{(j)}\) follows from equation (7). If \(m^{(j)}_\ell = 0\), then \(\zeta ^{(j)},\,\rho ^{(j)}\) can be assigned independently from \(\chi _\ell \). The above holds for every \(\ell \) in \(\{1,\ldots ,k\}\). Thus, if \((\chi _1,\ldots ,\chi _k)\) is distributed uniformly over \(\mathbb {Z}_p^k\), then other coins are distributed uniformly as well and the view of \(\mathcal{A}\) is still consistent.

Now we see that given \(\mathcal{A}\)’s view, \(\left( {\tilde{M}^{\dagger }_{\ell }}/{\tilde{M}_{\ell }}\right) ^{\chi _{\ell }}\) is distributed uniformly over \({{\mathbb G}}\) and independent of the other \(\{\chi _i\}_{i\ne \ell }\). Therefore \(\tilde{Z}^{\star } = 1\) happens only with probability 1 / p. Thus, \(\mathcal{B}\) outputs a valid \((\tilde{Z}^{\star }, \tilde{R}^{\star },S^{\star })\) with probability \( \text {Adv} ^{\mathsf {sdp}}_{{\mathcal {G}},\mathcal{B}} = 1/p + (1-1/p) (1-1/p) \text {Adv} ^{\mathsf {sot}\text {-}\mathsf {cma}}_{{{\mathsf {TOS}}{}},\mathcal{A}}\), which leads to \( \text {Adv} ^{\mathsf {sot}\text {-}\mathsf {cma}}_{{{\mathsf {TOS}}{}},\mathcal{A}} \le \text {Adv} ^{\mathsf {sdp}}_{{\mathcal {G}},\mathcal{B}} + 1/p\) as claimed. \(\square \)
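The algebra of this reduction can be exercised in the same toy exponent model (\(G^x\) stored as \(x \bmod P\), pairings as products of exponents; insecure and for illustration only). In the sketch below, the hypothetical class `Reduction` touches the SDP instance \((G_z, G_r, H_z, H_s)\) only through group operations, as \(\mathcal{B}\) does: it sets \(G_t = H_s^{\tau }\), produces tags by (4) and signatures by (5), and extracts \((\tilde{Z}^{\star },\tilde{R}^{\star },S^{\star })\). The forger `toy_forger` is an assumption of the sketch: it can produce valid signatures only because the toy model exposes discrete logarithms, and it stands in for an arbitrary successful \(\mathcal{A}\). The case \(T = 1\) is not handled.

```python
import random

# Toy exponent model: G^x stored as x mod P, e(G^a, G^b) as a*b mod P. INSECURE; algebra only.
P = 2**61 - 1


def pair(a, b):
    return a * b % P


def vrf(pk, T, msg, sig):
    """TOS verification, Eqs. (2) and (3)."""
    Z, R, S = sig
    eq2 = (pair(pk["Gz"], Z) + pair(pk["Gr"], R)
           + sum(pair(g, m) for g, m in zip(pk["Gi"], msg))) % P == pair(T, pk["Gt"])
    eq3 = (pair(pk["Hz"], Z) + pair(pk["Hs"], S)
           + sum(pair(h, m) for h, m in zip(pk["Hi"], msg))) % P == 0
    return eq2 and eq3


class Reduction:
    """Sketch of B: uses the SDP instance only through group operations."""

    def __init__(self, inst, k, rng):
        self.inst, self.rng = inst, rng
        Gz, Gr, Hz, Hs = inst
        self.tau = rng.randrange(1, P)
        self.chi, self.gam, self.dlt = ([rng.randrange(P) for _ in range(k)] for _ in range(3))
        self.pk = {"Gz": Gz, "Gr": Gr, "Hz": Hz, "Hs": Hs, "Gt": Hs * self.tau % P,  # H_s^tau
                   "Gi": [(Gz * c + Gr * g) % P for c, g in zip(self.chi, self.gam)],
                   "Hi": [(Hz * c + Hs * d) % P for c, d in zip(self.chi, self.dlt)]}

    def tag(self):
        Gz, Gr, _, _ = self.inst
        self.zeta, self.rho = self.rng.randrange(1, P), self.rng.randrange(1, P)
        return (Gz * self.zeta + Gr * self.rho) * pow(self.tau, -1, P) % P   # Eq. (4)

    def _lin(self, coeffs, msg):
        return sum(c * m for c, m in zip(coeffs, msg))

    def sign(self, msg):
        _, _, Hz, Hs = self.inst
        Z = (Hs * self.zeta - self._lin(self.chi, msg)) % P                    # Eq. (5)
        R = (Hs * self.rho - self._lin(self.gam, msg)) % P
        S = (-Hz * self.zeta - self._lin(self.dlt, msg)) % P
        return Z, R, S

    def extract(self, msg, sig, msg_f, sig_f):
        diff = [(mf - m) % P for m, mf in zip(msg, msg_f)]    # exponents of M_i^dagger / M_i
        return tuple((sf - s + self._lin(coeffs, diff)) % P
                     for s, sf, coeffs in zip(sig, sig_f, (self.chi, self.gam, self.dlt)))


def toy_forger(pk, T, msg, rng):
    # Only possible because the toy model exposes discrete logs: pick Z, solve Eqs. (2), (3).
    Z = rng.randrange(P)
    a = sum(pair(g, m) for g, m in zip(pk["Gi"], msg))
    b = sum(pair(h, m) for h, m in zip(pk["Hi"], msg))
    R = (pair(T, pk["Gt"]) - pair(pk["Gz"], Z) - a) * pow(pk["Gr"], -1, P) % P
    S = (-pair(pk["Hz"], Z) - b) * pow(pk["Hs"], -1, P) % P
    return Z, R, S


def run(seed, k=3):
    rng = random.Random(seed)
    inst = tuple(rng.randrange(1, P) for _ in range(4))       # SDP instance (G_z, G_r, H_z, H_s)
    B = Reduction(inst, k, rng)
    T = B.tag()
    msg = [rng.randrange(P) for _ in range(k)]
    sig = B.sign(msg)
    msg_f = [rng.randrange(P) for _ in range(k)]
    sig_f = toy_forger(B.pk, T, msg_f, rng)
    Zs, Rs, Ss = B.extract(msg, sig, msg_f, sig_f)
    Gz, Gr, Hz, Hs = inst
    return {"sim_ok": vrf(B.pk, T, msg, sig), "forgery_ok": vrf(B.pk, T, msg_f, sig_f),
            "sdp_ok": Zs != 0 and (pair(Gz, Zs) + pair(Gr, Rs)) % P == 0
                      and (pair(Hz, Zs) + pair(Hs, Ss)) % P == 0}
```

Note that if the "forgery" were produced by `B.sign` itself with the same \((\zeta ,\rho )\), the extracted \(\tilde{Z}^{\star }\) would be trivial; the argument relies on the forger's \(\tilde{Z}^{\dagger }\) being independent of \(\chi _1,\ldots ,\chi _k\).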

Remark 1

The above TOS does not trivially work in the Type-III setting, since computing \(\tilde{R}\) from T in signing, simulating T using \(G_r\) in the reduction, and computing the pairing \(e(G_r,\tilde{R})\) in verification cannot be made consistent. In a very recent paper [AGOT14], it is claimed that it can work if some extra group elements are included in the public key and in the instance of the underlying assumption, though the resulting scheme would be slightly less efficient than our dedicated construction in the Type-III setting.

Remark 2

The \(\mathsf {TOS}\) can be used to sign messages of unbounded length by chaining the signatures. Every message block except for the last one is followed by a tag used to sign the next block. The signature consists of all internal signatures and tags, and the initial tag serves as the tag for the entire signature. For a message consisting of m group elements, signing invokes \(\mathsf {TOS}.\mathsf {Sign} \) \(\tau :=1 + \max (0,\lceil \frac{m-k}{k-1} \rceil )\) times and the resulting signature consists of \(4\tau -1\) elements.
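The counting in Remark 2 can be checked with a few lines of code. The sketch assumes one reading of the chaining layout (hypothetical helper `split_blocks`): every non-final block holds \(k-1\) message elements followed by the tag for the next block, and the final block holds at most k elements. Under that layout the number of blocks matches \(\tau \), and the signature size is \(4\tau - 1\) as stated.

```python
def ceil_div(a: int, b: int) -> int:
    return -(-a // b)          # integer ceiling, also correct for a <= 0


def chain_length(m: int, k: int) -> int:
    """tau = 1 + max(0, ceil((m - k) / (k - 1))) invocations of TOS.Sign."""
    if k < 2:
        raise ValueError("chaining needs k >= 2 (one slot per block carries the next tag)")
    return 1 + max(0, ceil_div(m - k, k - 1))


def chained_signature_size(m: int, k: int) -> int:
    """Group elements in the chained signature, 4*tau - 1, as stated in Remark 2."""
    return 4 * chain_length(m, k) - 1


def split_blocks(msg, k):
    """One possible layout: non-final blocks hold k - 1 message elements plus the next tag."""
    blocks, rest = [], list(msg)
    while len(rest) > k:
        blocks.append(rest[: k - 1] + ["<next tag>"])
        rest = rest[k - 1:]
    blocks.append(rest)
    return blocks
```

For example, with k = 3 a 6-element message needs \(\tau = 3\) chained signatures, i.e., 11 group elements.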

5.3 RMA-Secure Signature Scheme

To sign random group elements, we will use a construction based on the dual system signature scheme of Waters [44]. For readers unfamiliar with Waters’ scheme we recall it in “Appendix.” Our intuition for making the original scheme structure-preserving is as follows. While the original scheme is CMA-secure under the \(\text {DLIN}\) assumption, the security proof makes use of a trapdoor commitment to elements in \(\mathbb {Z}_p\) and consequently messages are elements in \(\mathbb {Z}_p\) rather than \({{\mathbb G}}\). Our construction below resorts to RMA-security and removes this commitment to allow messages to be a sequence of random group elements satisfying a particular relation. Concretely, the message space \(\mathcal{M}_{\mathsf {{r}}} :=\{(C^{m},F^{m},U^{m}) \in {{\mathbb G}}^3 \;|\; m \in \mathbb {Z}_p\}\) is defined by generators (C, F, U) in \(gk\). Moreover, the tag elements of Waters’ scheme are removed in our RMA-secure scheme as they were primarily required for (adaptive) \(\text {CMA}\)-security.

Other minor modifications are needed for the structure-preserving property. First, we modify the verification algorithm: our verification algorithm is deterministic and uses five verification equations. Two equations, (9) and (10), are for signature elements that are not related to the message part; this is a consequence of deterministic verification. Three equations, (11) to (13), are for the (extended) message part. Second, we slightly modify the verification key: because an SPS verification key must consist of source-group elements, the element \(e(G,G)^{\alpha a_1 b}\) of \({{\mathbb G}}_T\) is divided into two elements \(X_1, X_2\) of \({{\mathbb G}}\), randomized by \(\rho \), such that \(e(X_1, X_2) = e(G,G)^{\alpha a_1 b}\).

[Scheme \(\mathsf {{r}SIG{}}\) ]

- \(\mathsf {{r}SIG{}}.\mathsf {Key} (gk)\): Given \(gk:=(\varLambda , G, C, F, U)\) as input, uniformly select \(V, V_1, V_2, H\) from \({{\mathbb G}}^*\) and \(a_1,a_2,b,\alpha \), and \(\rho \) from \(\mathbb {Z}_p^*\). Then compute and output \(vk :=(B, A_1, A_2\), \(B_1, B_2, R_1, R_2\), \(W_1, W_2, H, X_1, X_2)\) and \(sk \!:=\! (vk, K_1, K_2,V,V_1,V_2)\) where

$$\begin{aligned}&B:=G^b,&A_1:=G^{a_1},&A_2:=G^{a_2},&B_1:=G^{b \cdot a_1},&B_2:=G^{b \cdot a_2}\\&R_1:=VV_1^{a_1},&R_2:=VV_2^{a_2},&W_1:=R_1^b,&W_2:=R_2^b,\\&X_1:=G^{\rho },&X_2:=G^{\alpha \cdot a_1 \cdot b/\rho },&K_1:=G^\alpha ,&K_2:=G^{\alpha \cdot a_1}. \end{aligned}$$

- \(\mathsf {{r}SIG{}}.\mathsf {Sign} (sk, msg )\): Parse \( msg \) into \((M_1, M_2, M_3)\). Pick random \(r_1, r_2, z_1, z_2 \in \mathbb {Z}_p\). Let \(r= r_1+r_2\). Compute and output signature \(\sigma :=(S_0,S_1, \ldots ,S_7)\) where

$$\begin{aligned}&S_0 :=(M_3H)^{r_1},&S_1 :=K_2V^{r},&S_2 :=K_1^{-1} V_1^{r} G^{z_1},&S_3 :=B^{-z_1},\\&S_4 :=V_2^{r} G^{z_2},&S_5 :=B^{-z_2},&S_6 :=B^{r_2},&S_7 :=G^{r_1}. \end{aligned}$$

- \(\mathsf {{r}SIG{}}.\mathsf {Vrf} (vk, msg , \sigma )\): Parse \( msg \) into \((M_1, M_2, M_3)\) and \(\sigma \) into \((S_0,S_1, \ldots , S_7)\). Also parse \(vk \) accordingly. Output 1 if all of the following pairing product equations hold, and 0 otherwise:

$$\begin{aligned}&e(S_1,B)\, e(S_2,B_1)\, e(S_3,A_1) =e(S_6,R_1)\, e(S_7,W_1), \end{aligned}$$(9)
$$\begin{aligned}&e(S_1,B)\, e(S_4,B_2)\, e(S_5, A_2) = e(S_6, R_2)\, e(S_7, W_2)\, e(X_1,X_2), \end{aligned}$$(10)
$$\begin{aligned}&e(S_7, M_3 H) = e(G, S_0), \end{aligned}$$(11)
$$\begin{aligned}&e(F,M_1)=e(C,M_2), \end{aligned}$$(12)
$$\begin{aligned}&e(U, M_1)=e(C, M_3). \end{aligned}$$(13)

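The scheme is structure-preserving by construction, and correctness is easily verified as follows. For (9), using \(R_1 = VV_1^{a_1}\),

$$\begin{aligned} e(S_1,B)\, e(S_2,B_1)\, e(S_3,A_1)&= e(G,G)^{\alpha a_1 b}\, e(V,G)^{rb}\, e(G,G)^{-\alpha a_1 b}\, e(V_1,G)^{r a_1 b}\, e(G,G)^{z_1 a_1 b}\, e(G,G)^{-z_1 a_1 b}\\&= e(R_1, G)^{rb} = e(S_6,R_1)\, e(S_7,W_1), \end{aligned}$$

and (10) follows in the same way, except that the factor \(e(G,G)^{\alpha a_1 b}\) contributed by \(S_1\) is not cancelled and is matched by \(e(X_1,X_2)\). Both equations hold since \(r= r_1 + r_2\). For a message \((M_1,M_2,M_3) = (C^m, F^m, U^m)\), the following also hold:

$$\begin{aligned} e(S_7, M_3 H)&= e(G^{r_1}, M_3 H) = e(G, (M_3H)^{r_1}) = e(G, S_0),\\ e(F, M_1)&= e(F, C^{m}) = e(C, F^{m}) = e(C, M_2),\\ e(U, M_1)&= e(U, C^{m}) = e(C, U^{m}) = e(C, M_3). \end{aligned}$$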

Theorem 7

The above \(\mathsf {{r}SIG{}}\) scheme is UF-RMA under the \(\text {DLIN}\) assumption. In particular, for any p.p.t. algorithm \(\mathcal{A}\) against \(\mathsf {{r}SIG{}}\) that makes at most \(q_s(\lambda )\) signing queries, there exists p.p.t. algorithm \(\mathcal{B}\) for \(\text {DLIN}\) such that \( \text {Adv} ^{\mathsf {uf}\text {-}\mathsf {rma}}_{\mathsf {{r}SIG{}},\mathcal{A}}(\lambda ) \le (q_s(\lambda )+2) \cdot \text {Adv} ^{\mathsf {dlin}}_{{\mathcal {G}},\mathcal{B}}(\lambda )\).

Proof

We refer to the signatures output by the signing algorithm as normal signatures. In the proof we will consider an additional type of signatures which we refer to as simulation-type signatures; these will be computationally indistinguishable but easier to simulate. For \(\gamma \in \mathbb {Z}_p\), simulation-type signatures are of the form \(\sigma = (S_0,S_1'=S_1 \cdot G^{-a_1 a_2 \gamma }, S_2'=S_2 \cdot G^{a_2 \gamma }, S_3, S_4' = S_4 \cdot G^{a_1 \gamma }, S_5, \ldots , S_7)\) where \((S_0,\ldots , S_7)\) is a normal signature. We give the outline of the proof using some lemmas. Proofs for the lemmas are given after the outline.
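As a quick check (a short derivation from the definitions \(B = G^b\), \(B_1 = G^{b a_1}\), \(B_2 = G^{b a_2}\), not part of the original argument), the extra factors of a simulation-type signature cancel in both (9) and (10):

$$\begin{aligned} e(S_1',B)\, e(S_2',B_1)\, e(S_3,A_1)&= e(S_1,B)\, e(S_2,B_1)\, e(S_3,A_1) \cdot e(G,G)^{-a_1 a_2 b \gamma }\, e(G,G)^{a_2 \gamma \cdot b a_1} = e(S_1,B)\, e(S_2,B_1)\, e(S_3,A_1),\\ e(S_1',B)\, e(S_4',B_2)\, e(S_5,A_2)&= e(S_1,B)\, e(S_4,B_2)\, e(S_5,A_2) \cdot e(G,G)^{-a_1 a_2 b \gamma }\, e(G,G)^{a_1 \gamma \cdot b a_2} = e(S_1,B)\, e(S_4,B_2)\, e(S_5,A_2). \end{aligned}$$

Equations (11) to (13) involve none of \(S_1, S_2, S_4\), so a simulation-type signature passes verification exactly when the underlying normal signature does.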

Lemma 3

Any signature that is accepted by the verification algorithm must be either a normal-type signature or a simulation-type signature.

To make this lemma hold, the scheme includes two verification equations, (9) and (10), over the signature elements that are not related to the message. We consider a sequence of games. Let \(p_i(\lambda )\) be the probability that the adversary succeeds in Game i, and let \(p_{i}^\text {norm}(\lambda )\) and \(p_i^\text {sim}(\lambda )\) be the probabilities that it succeeds with a normal-type or a simulation-type forgery, respectively. Then, by Lemma 3, \(p_i(\lambda )=p_{i}^\text {norm}(\lambda )+p_i^\text {sim}(\lambda )\) for all i.

- Game 0: The actual unforgeability under random message attacks (UF-RMA) game.

Lemma 4

There exists an adversary \(\mathcal{B}_1\) such that \(p_0^\text {sim}(\lambda ) \le \text {Adv} ^{\mathsf {dlin}}_{{\mathcal {G}},\mathcal{B}_1}(\lambda )\).

- Game i: The real security game, except that the first i signatures given by the oracle are simulation-type signatures.

Lemma 5

For every \(i \in \{1, \ldots , q\}\), there exists an adversary \(\mathcal{B}_2\) such that \(|p_{i-1}^\text {norm}(\lambda )-p_{i}^\text {norm}(\lambda )| \le \text {Adv} ^{\mathsf {dlin}}_{{\mathcal {G}},\mathcal{B}_2}(\lambda )\).

- Game q: All \(q = q_s(\lambda )\) signatures given by the oracle are simulation-type signatures.

Lemma 6

There exists an adversary \(\mathcal{B}_3\) such that \(p_q^\text {norm}(\lambda ) \le \text {Adv} ^{\mathsf {cdh}}_{{\mathcal {G}},\mathcal{B}_3}(\lambda )\).

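By Lemma 6, in Game q \(\mathcal{A}\) can output a normal-type forgery with at most negligible probability. Thus, applying Lemma 5 for each \(i = 1, \ldots , q\), we conclude that the same is true in Game 0, and together with Lemma 4 this bounds \(p_0(\lambda ) = p_0^\text {norm}(\lambda ) + p_0^\text {sim}(\lambda )\).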
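The way the three lemmas combine can be sketched as the following hybrid bound, assuming \(q = q_s(\lambda )\) and using the triangle inequality over Game 0, ..., Game q:

$$\begin{aligned} p_0(\lambda ) &= p_0^\text {norm}(\lambda ) + p_0^\text {sim}(\lambda ) \\ &\le p_q^\text {norm}(\lambda ) + \sum _{i=1}^{q} \left| p_{i-1}^\text {norm}(\lambda ) - p_i^\text {norm}(\lambda )\right| + p_0^\text {sim}(\lambda ) \\ &\le \text {Adv} ^{\mathsf {cdh}}_{{\mathcal {G}},\mathcal{B}_3}(\lambda ) + q \cdot \text {Adv} ^{\mathsf {dlin}}_{{\mathcal {G}},\mathcal{B}_2}(\lambda ) + \text {Adv} ^{\mathsf {dlin}}_{{\mathcal {G}},\mathcal{B}_1}(\lambda ). \end{aligned}$$

Reaching the single term \((q_s(\lambda )+2) \cdot \text {Adv} ^{\mathsf {dlin}}_{{\mathcal {G}},\mathcal{B}}(\lambda )\) of Theorem 7 additionally relies on the standard fact that an algorithm solving CDH can be turned into a DLIN distinguisher; that step is only indicated here, not spelled out.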

Proof of Lemma 3

We have to show that only normal and simulation-type signatures can satisfy the verification equations. We ignore verification equations (12) and (13), which only establish that \( msg \) is well formed. A normal signature has four random exponents, \(r_1, r_2, z_1, z_2\); a simulation-type signature has an additional exponent \(\gamma \).

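We interpret \(S_7\) as \(G^{r_1}\); verification equation (11) then implies that \(S_0 = (M_3 H)^{r_1}\). We interpret \(S_3\) as \(G^{- b z_1}\), \(S_5\) as \(G^{- b z_2}\), and \(S_6\) as \(G^{r_2 b}\). This fixes all exponents of a normal signature. Substituting these values, the remaining two verification equations, (9) and (10), become

$$\begin{aligned}&e(S_1,B)\, e(S_2,B_1) = e(G^{b z_1},A_1)\, e(G^{r_2 b},R_1)\, e(G^{r_1},W_1),\\&e(S_1,B)\, e(S_4,B_2) = e(G^{b z_2},A_2)\, e(G^{r_2 b},R_2)\, e(G^{r_1},W_2)\, e(X_1,X_2). \end{aligned}$$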

We interpret \(S_1\) as \(G^{\alpha \cdot a_1} V^{r} G^{-a_1 a_2 \gamma }\) for some \(\gamma \in \mathbb {Z}_p\). Since the pairing is non-degenerate, these two equations then uniquely fix the two unknowns: \(S_2\) to \(G^{-\alpha } V_1^rG^{z_1} G^{a_2 \gamma }\) and \(S_4\) to \(V_2^{r} G^{z_2} G^{a_1 \gamma }\), respectively. If \(\gamma =0\) we have a normal signature; otherwise we have a simulation-type signature.
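The uniqueness step can be checked in a toy exponent model (illustration only: group elements are replaced by their discrete logarithms mod a prime, which no real reduction could compute). The sketch assumes the hypothetical key layout \(A_i = G^{a_i}\), \(B = G^{b}\), \(B_i = G^{b a_i}\), \(R_i = V V_i^{a_i}\), \(W_i = R_i^{b}\), \(e(X_1,X_2) = e(G,G)^{\alpha a_1 b}\). It fixes \(S_3, S_5, S_6, S_7\) as in the proof, picks an arbitrary \(S_1\), solves (9) and (10) for the exponents of \(S_2\) and \(S_4\), and confirms they have the claimed form with \(\gamma \) read off from \(S_1\).

```python
import random

p = 2**61 - 1  # prime modulus of the toy exponent model (illustrative only)


def rnd():
    return random.randrange(1, p)


def inv(x):
    return pow(x, -1, p)  # modular inverse (Python 3.8+)


# Secret exponents under the hypothetical key layout described above.
b, a1, a2, alpha, v, v1, v2 = (rnd() for _ in range(7))
R1, R2 = (v + a1 * v1) % p, (v + a2 * v2) % p

# Exponents fixed by S_3, S_5, S_6, S_7 as in the proof.
r1, r2, z1, z2 = rnd(), rnd(), rnd(), rnd()
r = (r1 + r2) % p
S3, S5, S6, S7 = -b * z1 % p, -b * z2 % p, b * r2 % p, r1

s1 = rnd()  # an arbitrary (adversarially chosen) exponent for S_1

# Solve equation (9) for the exponent s2 of S_2 and equation (10) for s4:
#   s1*b + s2*(b*a1) + S3*a1 = S6*R1 + S7*(b*R1)
#   s1*b + s4*(b*a2) + S5*a2 = S6*R2 + S7*(b*R2) + alpha*a1*b
s2 = (S6 * R1 + S7 * b * R1 - s1 * b - S3 * a1) * inv(b * a1) % p
s4 = (S6 * R2 + S7 * b * R2 + alpha * a1 * b - s1 * b - S5 * a2) * inv(b * a2) % p

# Writing S_1 = G^{alpha a1} V^r G^{-a1 a2 gamma} defines gamma.
gamma = (alpha * a1 + v * r - s1) * inv(a1 * a2) % p

# Lemma 3: the solutions have exactly the claimed form.
assert s2 == (-alpha + v1 * r + z1 + a2 * gamma) % p
assert s4 == (v2 * r + z2 + a1 * gamma) % p
print("normal-type" if gamma == 0 else "simulation-type")
```

With a random \(S_1\), the forgery is almost always of simulation type; choosing \(S_1\) with exponent \(\alpha a_1 + v r\) gives \(\gamma = 0\), that is, a normal signature.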

Proof of Lemma 4

Suppose for contradiction that there is an adversary \(\mathcal{A}\), which, when playing Game 0 (and thus receiving only normal signatures), produces forgeries which are formed like simulation-type signatures. Then we can construct an adversary \(\mathcal{B}_1\) for DLIN as follows.

Let \(I_{\mathsf {dlin}} = (\varLambda , G_1, G_2, G_3, X, Y, Z)\) be an instance of \(\text {DLIN}\), where \(\varLambda = (p, {{\mathbb G}}, {{\mathbb G}}_T, e)\) is a Type-I bilinear group setting, \(G_1, G_2, G_3\) are chosen at random from \({{\mathbb G}}^*\), and there exist random \(x,y,z \in \mathbb {Z}_p\) such that \(X= G_1^x\), \(Y=G_2^y\), and either \(Z= G_3^{x+y}\) or \(Z = G_3^z\). Given \(I_{\mathsf {dlin}}\), adversary \(\mathcal{B}_1\) works as follows. It first sets \(G:=G_3\), chooses C, F, U at random from \({{\mathbb G}}^*\), and puts them into gk. Next, it chooses \(v, v_1, v_2 \in \mathbb {Z}_p^*\) and computes \(V:=G_3^{v}\), \(V_1:=G_3^{v_1}\), and \(V_2:=G_3^{v_2}\), so that it knows the discrete logarithms of these values w.r.t. \(G_3\). Then it chooses random \(H \in {{\mathbb G}}^*\) and \(b, \alpha , \rho \in \mathbb {Z}_p^*\) and computes:

and sets them into vk and sk accordingly. Note that the distributions of the public and secret keys are statistically close to those produced by \(\mathsf {Setup}\) and \(\mathsf {{r}SIG{}}.\mathsf {Key} \). Moreover, to sign random messages, \(\mathcal{B}_1\) can simply run the real signing algorithm using \(sk \).

Suppose that \(\mathcal{A}\) produces a valid forgery \(\sigma ^{\dagger }\) on \( msg ^{\dagger }\). Then \(\mathcal{B}_1\) proceeds as follows. It parses \(\sigma ^{\dagger }\) as \((S_0,\ldots , S_7)\). By Lemma 3, if the verification equations hold, then \(S_1 = G^{\alpha a_1} V^{r} G^{-a_1a_2\gamma }\), \(S_2 = G^{-\alpha }V_1^{r} G^{z_1} G^{a_2 \gamma }\), and \(S_4 = V_2^{r} G^{z_2} G^{a_1 \gamma }\), and if the forgery is a simulation-type signature, then \(\gamma \ne 0\). By our choice of public key, we can rewrite these as \(S_1 = G_1^{\alpha } V^{r} G_2^{- f \gamma }\), \(S_2 = G_3^{-\alpha } V_1^{r} G_3^{z_1} G_2^{ \gamma }\), and \(S_4 = V_2^{r} G_3^{z_2} G_1^{\gamma }\), where f is the discrete log of \(G_1\) w.r.t. \(G_3\). Thus, if \(\mathcal{B}_1\) can extract \(G_2^{-f \gamma }, G_2^{\gamma }, G_1^{\gamma }\), it can break the DLIN instance by testing whether \(1 = e(Z, G_2^{-f \gamma }) \cdot e(G_2^\gamma , X) \cdot e(G_1^{\gamma }, Y)\); since \(\gamma \ne 0\), this holds if and only if \(Z = G_3^{x+y}\). \(\mathcal{B}_1\) can extract these values because the signature includes \(S_3 = G_3^{-bz_1}\), \(S_5 = G_3^{-bz_2}\), \(S_6 = G_3^{br_2}\), and \(S_7 = G_3^{r_1}\), and it knows \(b\), \(\alpha \), and the discrete logarithms \(v, v_1, v_2\) of \(V, V_1, V_2\) w.r.t. \(G_3\). Concretely, \(G_3^{r} = S_7 S_6^{1/b}\), \(G_3^{-z_1} = S_3^{1/b}\), and \(G_3^{-z_2} = S_5^{1/b}\), so \(G_2^{-f\gamma } = S_1 G_1^{-\alpha } (G_3^{r})^{-v}\), \(G_2^{\gamma } = S_2 G_3^{\alpha } (G_3^{r})^{-v_1} S_3^{1/b}\), and \(G_1^{\gamma } = S_4 (G_3^{r})^{-v_2} S_5^{1/b}\).
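The extraction and the final DLIN test can be traced in a toy exponent model (illustration only). Every value is the exponent of an element w.r.t. \(G = G_3\), with hypothetical \(G_1 = G_3^{f}\) and \(G_2 = G_3^{g}\); \(\mathcal{B}_1\) never learns f or g, and `f * alpha` below just stands for the group element \(G_1^{\alpha }\), which it computes from \(G_1\). The helper `dlin_test` is a hypothetical name for the pairing check above.

```python
import random

p = 2**61 - 1  # prime modulus of the toy exponent model (illustrative only)


def rnd():
    return random.randrange(1, p)


# All values are exponents w.r.t. G_3; hypothetically G_1 = G_3^f, G_2 = G_3^g.
f, g = rnd(), rnd()
x, y = rnd(), rnd()
X, Y = f * x % p, g * y % p          # X = G_1^x, Y = G_2^y

# B_1's own secrets, and a simulation-type forgery in the form given by Lemma 3.
b, alpha, v, v1, v2 = (rnd() for _ in range(5))
r1, r2, z1, z2, gamma = (rnd() for _ in range(5))   # gamma != 0
r = (r1 + r2) % p
S1 = (f * alpha + v * r - g * f * gamma) % p        # G_1^alpha V^r G_2^{-f gamma}
S2 = (-alpha + v1 * r + z1 + g * gamma) % p         # G_3^{-alpha} V_1^r G_3^{z1} G_2^gamma
S3, S5, S6, S7 = -b * z1 % p, -b * z2 % p, b * r2 % p, r1
S4 = (v2 * r + z2 + f * gamma) % p                  # V_2^r G_3^{z2} G_1^gamma

# Extraction, using only b, alpha, v, v1, v2 and the group element G_1^alpha.
inv_b = pow(b, -1, p)
G3_r = (S7 + S6 * inv_b) % p                        # G_3^r = S_7 * S_6^{1/b}
t1 = (S1 - f * alpha - v * G3_r) % p                # G_2^{-f gamma}
t2 = (S2 + alpha - v1 * G3_r + S3 * inv_b) % p      # G_2^{gamma}
t3 = (S4 - v2 * G3_r + S5 * inv_b) % p              # G_1^{gamma}


def dlin_test(Z):
    """True iff 1 = e(Z, t1) e(t2, X) e(t3, Y), i.e. the exponent sum is 0."""
    return (Z * t1 + t2 * X + t3 * Y) % p == 0


print(dlin_test((x + y) % p), dlin_test((x + y + 1) % p))
```

In exponent form the test evaluates \(fg\gamma ((x+y) - z)\), which is zero exactly when \(z = x+y\), because \(f, g, \gamma \) are all nonzero.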

Proof of Lemma 5

Suppose for contradiction that there exists an adversary \(\mathcal{A}\) such that the probabilities that \(\mathcal{A}\) outputs a normal-type forgery in Game \(i-1\) and Game \(i\) differ by a non-negligible amount. We use \(\mathcal{A}\) to construct an algorithm \(\mathcal{B}_2\) that breaks the DLIN assumption.

\(\mathcal{B}_2\) is given an instance of \(\text {DLIN}\), \(I_{\mathsf {dlin}} = (\varLambda , G_1, G_2, G_3, X, Y, Z)\). Note that determining whether a signature is of normal type or simulation type naturally corresponds to a DLIN problem: each signature contains \(S_7=G^{r_1}\), \(S_6= (G^{b})^{r_2}\), and \(S_1\), which includes \(V^{r_1+r_2}\) or \(V^{r_1+r_2} G^{-a_1a_2\gamma }\) depending on whether it is a normal- or simulation-type signature (recall that \(r=r_1+r_2\)). If \(\mathcal{B}_2\) sets \(G= G_2\), \(G^{b} = G_1\), and \(V= G_3\), then it seems fairly straightforward to argue, based on the DLIN assumption, that the adversary cannot distinguish normal and simulation-type signatures. However, in this simulation \(\mathcal{B}_2\) cannot tell whether \(\mathcal{A}\)’s forgery is of normal or simulation type, so it has no way to take advantage of a change in \(\mathcal{A}\)’s success probability to solve the DLIN challenge.

The solution is to set things up so that, with high probability, \(\mathcal{B}_2\) can take \(S_0\) from the adversary’s forgery and extract something that looks like \(G_3^{r_1}\) (which will allow \(\mathcal{B}_2\) to distinguish DLIN tuples and consequently detect simulation-type signatures), while at the same time it is guaranteed that for the ith message the \(G_3\) component of \(S_0\) cancels out, leaving only a \(G_2^{r_1}\) component, which does not allow \(\mathcal{B}_2\) itself to tell whether the signature it simulated is of normal or simulation type.

More specifically, the idea is to choose secret values \(\xi ,\beta , \chi , \eta \) and embed them in the parameters so that for a message \((C^w, F^w, U^w)\) we get \(U^{w} H = G_2^{\chi w + \eta } G_3^{\xi w +\beta }\). Then \(S_0 = (U^{w} H)^{r_1} = G_2^{(\chi w+\eta ) r_1}G_3^{(\xi w +\beta )r_1}\). If \(\xi w + \beta \ne 0\), this gives useful information on \(G_3^{r_1}\) (in particular, it allows \(\mathcal{B}_2\) to test candidate values), while if \(\xi w +\beta =0\), it has no \(G_3\) component and thus does not help at all with finding \(G_3^{r_1}\). \(\mathcal{B}_2\) chooses \(\xi , \beta \) so that \(\xi w + \beta =0\) for the w used to generate the ith message. Furthermore, \(\xi , \beta \) are information-theoretically hidden even given w, so the adversary has only a negligible chance of producing another message with \(U^{w^*}\) such that \(\xi w^*+\beta =0\) as well.
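The cancellation that \(\mathcal{B}_2\) relies on can be checked in a toy exponent model (an illustration only): \(U = G_2^{\chi } G_3^{\xi }\) and \(H = G_2^{\eta } G_3^{\beta }\) are stored as pairs of exponents (of \(G_2\), of \(G_3\)), and the hypothetical helper `u_w_h` returns the two exponents of \(U^{w}H\). Choosing \(w = -\beta /\xi \bmod p\) removes the \(G_3\) component, while any other w leaves one.

```python
import random

p = 2**61 - 1  # prime modulus of the toy exponent model (illustrative only)


def rnd():
    return random.randrange(1, p)


# B_2's secret values; U = G_2^chi G_3^xi and H = G_2^eta G_3^beta are stored as
# pairs (exponent of G_2, exponent of G_3).
xi, beta, chi, eta = rnd(), rnd(), rnd(), rnd()
U, H = (chi, xi), (eta, beta)


def u_w_h(w):
    """Exponents (of G_2, of G_3) of U^w H = G_2^{chi w + eta} G_3^{xi w + beta}."""
    return ((U[0] * w + H[0]) % p, (U[1] * w + H[1]) % p)


# For the i-th message, B_2 picks w so that xi*w + beta = 0 (mod p).
w_i = -beta * pow(xi, -1, p) % p
assert u_w_h(w_i)[1] == 0          # no G_3 component: S_0 = Y^{chi w_i + eta}

# Any other w leaves a nonzero G_3 component, since xi != 0.
w_other = (w_i + 1) % p
assert u_w_h(w_other)[1] != 0
```

With \(r_1 = y\) implicitly, the \(G_2\) exponent \(\chi w_i + \eta \) is all that \(\mathcal{B}_2\) needs to form \(S_0 = Y^{\chi w_i + \eta }\) for the ith message.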

Now we show details of the algorithm for \(\mathcal{B}_2\). First of all, \(\mathcal{B}_2\) sets up the message space and generates the public-key in the following manner. \(\mathcal{B}_2\) sets \((C, F)\), used to define message space \(\mathcal{M}\), to \((G_1^\varphi ,G_3)\) by choosing random \(\varphi \leftarrow \mathbb {Z}_p^*\). It chooses random \(\xi ,\beta , \chi ,\eta \leftarrow \mathbb {Z}_p^*\), and computes \(U:=G_2^{\chi } G_3^{\xi }\), and \(H:=G_2^{\eta } G_3^\beta \). These values will be uniformly distributed, and independent of \(\xi ,\beta \). \(\mathcal{B}_2\) then sets

\(\mathcal{B}_2\) also sets \(B:=G_1\), and chooses \(V,V_1,V_2\). It must choose these values carefully so that it can compute both \(W_i\) and \(W_i^b\), and at the same time so that the component \(V^{r}\) of a signature value \(S_1\) gives \(\mathcal{B}_2\) some useful information (in particular it will allow \(\mathcal{B}_2\) to derive \(G_3^{r}\)). It does this by choosing \(v_1, v_2, \delta \leftarrow \mathbb {Z}_p^*\), and computing \(V:=G_3^{-a_1a_2\delta },\,V_1:=G_2^{v_1}G_3^{a_2\delta }\), and \(V_2:=G_2^{v_2}G_3^{a_1\delta }\).

Next, \(\mathcal{B}_2\) chooses \(a_1, a_2, \alpha ,\rho \leftarrow \mathbb {Z}_p^*\) and computes

and sets them into vk and sk, accordingly. Note that both of these tuples are distributed statistically close to those produced by \(\mathsf {Setup}\) and \(\mathsf {{r}SIG{}}.\mathsf {Key} \).

Next \(\mathcal{B}_2\) simulates signatures for the jth random message as follows.

- Case \(j < i\): It chooses \(w_{j}\) at random and computes \((M_1, M_2, M_3) = (C^{w_{j}}, F^{w_{j}}, U^{w_{j}} )\). It can compute a simulation-type signature for this message since it has sk and \(G^{a_{1} a_{2}} = G_2^{a_{1} a_{2}}\).

- Case \(j=i\): It chooses w such that \(\xi w +\beta = 0\) and computes \((M_1, M_2, M_3) = (C^{w}, F^{w}, U^{w})\). Since no information about \(\xi , \beta \) is revealed, this message looks appropriately random to the adversary. It implicitly holds that \(r_1 = y\) and \(r_2 = x\). \(\mathcal{B}_2\) computes \(S_6 = G^{br_2} = G_1^{x} =X\) and \(S_7 = G^{r_1} = G_2^y = Y\). Recall that, for this w, \(U^{w} H= G_2^{\chi w+\eta }\). Thus, \(\mathcal{B}_2\) can compute \(S_0 = (M_3 H)^{r_1} = Y^{\chi w + \eta }\).

What remains is to compute \(S_1, S_2, S_4\), which involves computing \(V^r\), \(V_1^r\), and \(V_2^r\), respectively. This is where \(\mathcal{B}_2\) embeds its challenge. Recall that \(V= G_3^{-a_1a_2\delta }\). Thus, it computes \(V^r= (G_3^{r_1+r_2})^{-a_1a_2\delta }\) as \(Z^{-a_1a_2\delta }\). If \(Z= G_3^{x+y}\), this is correct; if \(Z= G_3^z\) for random z, there is an extra factor of \(G_3^{-a_1a_2\delta (z-(x+y))}\). If \(\mathcal{B}_2\) lets \(G^\gamma = G_3^{\delta (z-(x+y))}\) (which is uniformly random from the adversary’s point of view), then this is distributed exactly as in a simulation-type signature. Thus, \(\mathcal{B}_2\) computes \(S_1\) as \(G_2^{\alpha a_1}Z^{-a_1a_2\delta }\), which equals \(G^{\alpha a_1}V^r\) in the first case and \(G^{\alpha a_1}V^rG^{-a_1a_2\gamma }\) in the second.

\(\mathcal{B}_2\) can try to apply the same approach to compute \(V_1^r\) to get \(S_2\). However, recall that \(\mathcal{B}_2\) sets \(V_1= G_2^{v_1}G_3^{a_2\delta }\). Thus, computing \(V_1^r\) involves computing \(G_2^r\), which \(\mathcal{B}_2\) cannot do (if it could, it could use that to break the \(\text {DLIN}\) assumption). To get around this, \(\mathcal{B}_2\) uses \(z_1, z_2\). It chooses random \(s_1,s_2\) and implicitly sets \(G^{z_1} = G_2^{-v_1 r_2 +s_1}\) and \(G^{z_2} = G_2^{-v_2 r_2 + s_2}\). While it cannot compute these values, it can compute \(G^{-z_1 b} = G_1^{v_1 r_2 -s_1}=X^{v_1}G_1^{-s_1}\) and \(G^{-z_2 b} = X^{v_2}G_1^{-s_2}\). Then, to generate \(S_2\), \(\mathcal{B}_2\) can compute

$$\begin{aligned} G_2^{-\alpha }Y^{v_1}Z^{a_2\delta }G_2^{s_1}&= G^{-\alpha }G_2^{r_1v_1}Z^{a_2\delta }G_2^{s_1} G_2^{r_2v_1}G_2^{-r_2v_1} \\&= G^{-\alpha }G_2^{(r_1+r_2)v_1}Z^{a_2\delta }G_2^{s_1-r_2v_1}\\&= G^{-\alpha }G_2^{rv_1}Z^{a_2\delta }G^{z_1}. \end{aligned}$$If \(Z= G_3^{x+y} = G_3^r\), then this will be

$$\begin{aligned} G^{-\alpha }G_2^{rv_1}G_3^{ra_2\delta }G^{z_1}&=G^{-\alpha }\left( G_2^{v_1}G_3^{a_2\delta }\right) ^rG^{z_1}\\&=G^{-\alpha }V_1^rG^{z_1}. \end{aligned}$$If \(Z=G_3^{z\ne x+y}\), then this will be

$$\begin{aligned} G^{-\alpha }G_2^{rv_1}G_3^{za_2\delta }G^{z_1}&= G^{-\alpha }G_2^{rv_1}G_3^{ra_2\delta }G_3^{a_2\delta (z-(x+y))}G^{z_1} \\&= G^{-\alpha }G_2^{rv_1}G_3^{ra_2\delta }G^{a_2 \gamma }G^{z_1} \\&= G^{-\alpha }V_1^rG^{a_2\gamma }G^{z_1} \end{aligned}$$where the second to last equality follows from our choice of \(\gamma \) above. By a similar argument, \(\mathcal{B}_2\) computes \(S_4\) as \(Y^{v_2}Z^{a_1\delta }G_2^{s_2}\) and this will be either \(V_2^rG^{z_2}\) or \(V_2^rG^{z_2}G^{a_1\gamma }\) as desired. \(\mathcal{B}_2\) sets \(S:=(S_0, S_1,S_2,S_3,S_4,S_5,S_6,S_7)\) where

$$\begin{aligned}&S_0 = Y^{\chi w_{i} + \eta }&\quad&S_1 = G_2^{\alpha a_1}Z^{-a_1a_2\delta }&\quad&S_2 = G_2^{-\alpha }Y^{v_1}Z^{a_2\delta }G_2^{s_1}\\&S_3 = X^{v_1}G_1^{-s_1}&\quad&S_4= Y^{v_2}Z^{a_1\delta }G_2^{s_2}&\quad&S_5 = X^{v_2}G_1^{-s_2}\\&S_6 = X&\quad&S_7 = Y. \end{aligned}$$

- Case \(j > i\): It chooses w and computes \(m_j = (M_1, M_2, M_3) = (C^{w}, F^{w}, U^{w})\) and a signature \(\sigma \) according to \(\mathsf {{r}SIG{}}.\mathsf {Sign} (sk,m_j)\). It outputs \(\sigma , m_j\).
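The simulation of the ith signature (case \(j=i\)) can be traced in a toy exponent model (illustration only). All values are exponents w.r.t. \(G_3\), with hypothetical \(G_1 = G_3^{f}\), \(G_2 = G_3^{g}\); in this reduction \(G = G_2\), \(B = G_1\), \(r_1 = y\), and \(r_2 = x\). The hypothetical helper `simulate` computes \(S_1, \ldots , S_7\) exactly as \(\mathcal{B}_2\) does from Z, and `intended` computes the real signature with \(V = G_3^{-a_1a_2\delta }\), \(V_1 = G_2^{v_1}G_3^{a_2\delta }\), \(V_2 = G_2^{v_2}G_3^{a_1\delta }\), and the implicit \(z_1, z_2\). The sketch checks that the two agree with \(\gamma = 0\) for a real DLIN tuple, and with \(G^{\gamma } = G_3^{\delta (z-(x+y))}\) otherwise.

```python
import random

p = 2**61 - 1  # prime modulus of the toy exponent model (illustrative only)


def rnd():
    return random.randrange(1, p)


# Exponents w.r.t. G_3. Hypothetically G_1 = G_3^f and G_2 = G_3^g; in this
# reduction G := G_2 and B := G_1, and implicitly r_1 = y, r_2 = x.
f, g = rnd(), rnd()
x, y = rnd(), rnd()
X, Y = f * x % p, g * y % p                          # X = G_1^x, Y = G_2^y
a1, a2, alpha, delta, v1, v2, s1, s2 = (rnd() for _ in range(8))


def simulate(z):
    """S_1..S_7 exactly as B_2 computes them for the i-th query, given Z = G_3^z."""
    return ((g * alpha * a1 - z * a1 * a2 * delta) % p,           # G_2^{alpha a1} Z^{-a1 a2 delta}
            (-g * alpha + Y * v1 + z * a2 * delta + g * s1) % p,  # G_2^{-alpha} Y^{v1} Z^{a2 delta} G_2^{s1}
            (X * v1 - f * s1) % p,                                # X^{v1} G_1^{-s1}
            (Y * v2 + z * a1 * delta + g * s2) % p,               # Y^{v2} Z^{a1 delta} G_2^{s2}
            (X * v2 - f * s2) % p,                                # X^{v2} G_1^{-s2}
            X, Y)


def intended(gamma_g):
    """Real S_1..S_7 with r = x + y; gamma_g is the G_3-exponent of G^gamma."""
    r = x + y
    V, V1, V2 = -a1 * a2 * delta, g * v1 + a2 * delta, g * v2 + a1 * delta
    z1, z2 = -v1 * x + s1, -v2 * x + s2                  # G^{z_i} = G_2^{-v_i r_2 + s_i}
    return ((g * alpha * a1 + V * r - a1 * a2 * gamma_g) % p,
            (-g * alpha + V1 * r + g * z1 + a2 * gamma_g) % p,
            (-f * z1) % p, (V2 * r + g * z2 + a1 * gamma_g) % p,
            (-f * z2) % p, f * x % p, g * y % p)


# Real DLIN tuple: a normal signature (gamma = 0).
assert simulate((x + y) % p) == intended(0)
# Random Z = G_3^z: a simulation-type signature with G^gamma = G_3^{delta (z - (x + y))}.
z = rnd()
assert simulate(z) == intended(delta * (z - x - y) % p)
```

The agreement is an identity in the exponents, so it holds for every choice of the random values; this is the algebra behind the three displayed derivations for \(S_2\) and the analogous one for \(S_4\).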

On receiving a forgery \(S= (S_0, S_1,\ldots , S_7)\) on \((M_1, M_2, M_3) = (C^{w}, F^{w}, U^{w})\) for some \(w\), \(\mathcal{B}_2\) outputs 1 if and only if