Abstract

Multi-objective Bayesian optimization (MOBO) is widely used in applications with costly observations, such as materials discovery. In BO, a derivative-free optimization algorithm is generally employed to maximize the acquisition function. In this study, we present a method for maximizing the acquisition function in MOBO for materials discovery based on a \((1 + 1)\)-evolution strategy. The method is simple, easy to use, and has lower computational complexity than conventional algorithms. In MOBO, weight vectors are typically used to scalarize the multiple objective functions, which converts the multi-objective optimization problem into a single-objective one. The weight vectors at each round of MOBO are generally obtained either stochastically (by random sampling) or deterministically, based on the results searched so far. To clarify how these two types of scalarizing methods affect MOBO, we compare random sampling with two deterministic methods: reference-vector-based and self-organizing map-based decomposition. Experimental results on four test functions and, as a concrete application, a hydrogen storage material database demonstrate the effectiveness of the proposed method and of the random sampling method. These results suggest that the proposed method is useful for real-world MOBO experiments in materials discovery.
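
The following Python sketch illustrates, under stated assumptions, the ingredients named in the abstract: a weight vector drawn at random from the probability simplex, a ParEGO-style augmented Chebyshev scalarization (one common choice; the scalarizing function used in this study is not given in this section), and a textbook (1 + 1)-ES with the 1/5 success rule for maximizing a function over a box. It is not the author's Algorithm 2; the function names, step-size constants, and box bounds are illustrative assumptions.

```python
import numpy as np

# Illustrative sketch: names, constants, and bounds are assumptions,
# not the paper's Algorithm 2.


def sample_weight(n_obj, rng):
    """Draw a weight vector uniformly at random from the probability simplex."""
    # Normalized i.i.d. Exp(1) draws follow Dirichlet(1, ..., 1), i.e. uniform on the simplex.
    w = rng.exponential(scale=1.0, size=n_obj)
    return w / w.sum()


def augmented_chebyshev(f, w, rho=0.05):
    """ParEGO-style augmented Chebyshev scalarization of objectives f (minimization)."""
    wf = np.asarray(w) * np.asarray(f, dtype=float)
    return float(np.max(wf) + rho * np.sum(wf))


def one_plus_one_es(objective, x0, lower, upper, sigma0=0.1, max_iter=200, rng=None):
    """Maximize `objective` over the box [lower, upper] with a (1+1)-ES."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.clip(np.asarray(x0, dtype=float), lower, upper)
    fx = objective(x)
    sigma = sigma0
    for _ in range(max_iter):
        # One offspring: Gaussian mutation of the parent, clipped to the box.
        y = np.clip(x + sigma * rng.standard_normal(x.shape), lower, upper)
        fy = objective(y)
        if fy >= fx:
            # Success: keep the offspring and enlarge the step size.
            x, fx = y, fy
            sigma *= np.exp(1.0 / 3.0)
        else:
            # Failure: shrink the step size. The two factors balance exactly
            # at a success rate of 1/5 (the 1/5 success rule).
            sigma *= np.exp(-1.0 / 12.0)
    return x, fx


# Example: maximize a toy stand-in for an acquisition function on [0, 1]^2.
rng = np.random.default_rng(0)
w = sample_weight(2, rng)
x_best, f_best = one_plus_one_es(lambda x: -np.sum((x - 0.3) ** 2),
                                 x0=[0.9, 0.9], lower=0.0, upper=1.0, rng=rng)
```

In a ParEGO-style loop, a new weight vector would be drawn each round, the observed objective vectors scalarized with it, a Gaussian process fitted to the scalarized values, and the resulting acquisition function passed to `one_plus_one_es` as `objective`. The (1 + 1)-ES needs only one function evaluation and one Gaussian draw per iteration, which is the source of its low overhead.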

Data availability

Enquiries about data availability should be directed to the authors.

Notes

The database was constructed from the datasets obtained from http://hydrogenmaterialssearch.govtools.us (data last accessed on Apr., 2021).

To improve the convergence of the algorithms, the initial values were adjusted as follows: for L-BFGS-B, the initial value was 0.1 for Tf1 and Tf2; for CMA-ES, the initial value was 0.01 for Tf1. CMA-ES was implemented with reference to https://github.com/CMA-ES/c-cmaes. For L-BFGS-B, we used the implementation in the SciPy (1.9.3) library for Python.
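
As a hedged illustration of the L-BFGS-B setting described in this note, the sketch below maximizes a hypothetical stand-in acquisition function with `scipy.optimize.minimize(method="L-BFGS-B")` by minimizing its negative, starting every coordinate at 0.1. The toy function, the dimension, and the unit-box bounds are assumptions; the actual acquisition functions and search domains are defined elsewhere in the paper.

```python
import numpy as np
from scipy.optimize import minimize


def toy_acquisition(x):
    """Hypothetical smooth stand-in for an acquisition function (peak at 0.7)."""
    return float(np.exp(-np.sum((np.asarray(x) - 0.7) ** 2)))


def maximize_with_lbfgsb(acq, dim, x0_value=0.1, lower=0.0, upper=1.0):
    """Maximize `acq` on [lower, upper]^dim with SciPy's L-BFGS-B."""
    # Same initial value in every coordinate (0.1 here, as in the note).
    x0 = np.full(dim, x0_value)
    # L-BFGS-B minimizes, so minimize the negated acquisition function;
    # gradients are approximated by finite differences since none is supplied.
    res = minimize(lambda x: -acq(x), x0, method="L-BFGS-B",
                   bounds=[(lower, upper)] * dim)
    return res.x, -res.fun


x_opt, acq_opt = maximize_with_lbfgsb(toy_acquisition, dim=3)
```

Because L-BFGS-B is a local, gradient-based method, its result depends on the starting point, which is why adjusting the initial values can change convergence.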

For Tf2 and Tf3, there were significant differences (at the \( 5 \% \) significance level) between ES and L-BFGS-B; for Tf2, there was also a significant difference between ES and CMA-ES; for Tf4, CMA-ES and L-BFGS-B significantly outperformed ES.
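
The statistical test behind these significance statements is not named in this note. Purely as an assumption, the sketch below uses the two-sided Mann-Whitney U test from SciPy, a common choice for comparing per-run results of stochastic optimizers, and declares a difference significant when p < 0.05. The function name and the run values are hypothetical.

```python
from scipy.stats import mannwhitneyu


def significantly_different(results_a, results_b, alpha=0.05):
    """Two-sided Mann-Whitney U test on per-run results of two methods.

    Assumption: the test choice is illustrative; the paper's test may differ.
    """
    res = mannwhitneyu(results_a, results_b, alternative="two-sided")
    return bool(res.pvalue < alpha), float(res.pvalue)


# Hypothetical final objective values from 10 independent runs per method.
es_runs = [0.10, 0.12, 0.11, 0.13, 0.09, 0.10, 0.12, 0.11, 0.14, 0.10]
other_runs = [0.20, 0.22, 0.21, 0.19, 0.23, 0.20, 0.21, 0.22, 0.24, 0.20]
significant, p_value = significantly_different(es_runs, other_runs)
```

A rank-based test makes no normality assumption about the per-run results, which are often skewed for stochastic optimizers.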

For qParEGO, qEHVI, and qNEHVI, we used BoTorch (0.8.4) with Python (3.10.9), PyTorch (2.0.0), and CUDA (11.7).

In these comparisons, we used the HVR metric because two of the state-of-the-art algorithms (qEHVI and qNEHVI) are based on the hypervolume.
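
Assuming HVR denotes the hypervolume ratio, i.e., the hypervolume of the obtained non-dominated set divided by that of the true Pareto front with respect to a common reference point, the pure-Python sketch below computes it for two minimized objectives. The reference point and the example fronts are illustrative assumptions; the paper's exact settings are not given in this note.

```python
def hypervolume_2d(points, ref):
    """Area dominated by `points` and bounded by `ref` (both objectives minimized)."""
    # Keep only points that strictly dominate the reference point, sorted by f1.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    hv, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:
        if f2 < best_f2:
            # New non-dominated point: add the slab between f2 and the previous best f2.
            hv += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return hv


def hypervolume_ratio(approx_front, true_front, ref):
    """HVR = HV(approximation) / HV(true Pareto front); reaches 1.0 when the two are equal."""
    return hypervolume_2d(approx_front, ref) / hypervolume_2d(true_front, ref)


true_pf = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
approx = [(2.0, 2.0)]
hvr = hypervolume_ratio(approx, true_pf, ref=(4.0, 4.0))  # 4.0 / 6.0
```

The sweep visits points in increasing order of the first objective and adds one horizontal slab per non-dominated point, so it runs in O(n log n) time for two objectives.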

For F3, the state-of-the-art algorithms significantly outperformed ES. However, for F1, F2, and F4, there were no significant differences between ES and these algorithms at the \( 5 \% \) significance level.

Acknowledgements

The author would like to thank Dr. Kazutoshi Miwa for helpful discussions about hydrogen storage materials.

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interest regarding the publication of this article.

Human and animal rights

This article does not contain any studies with human participants or animals performed by the author.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

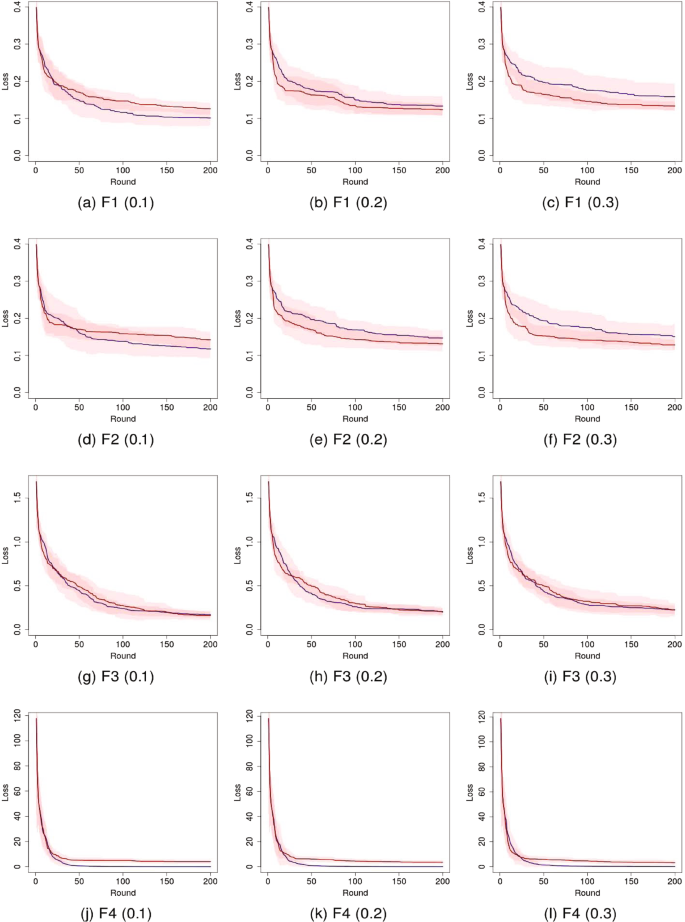

Loss curves of ES and DIR

In Fig. 16, the shaded areas indicate the standard deviation. As the F1 and F2 results show, the convergence of ES became slower as the noise level increased.

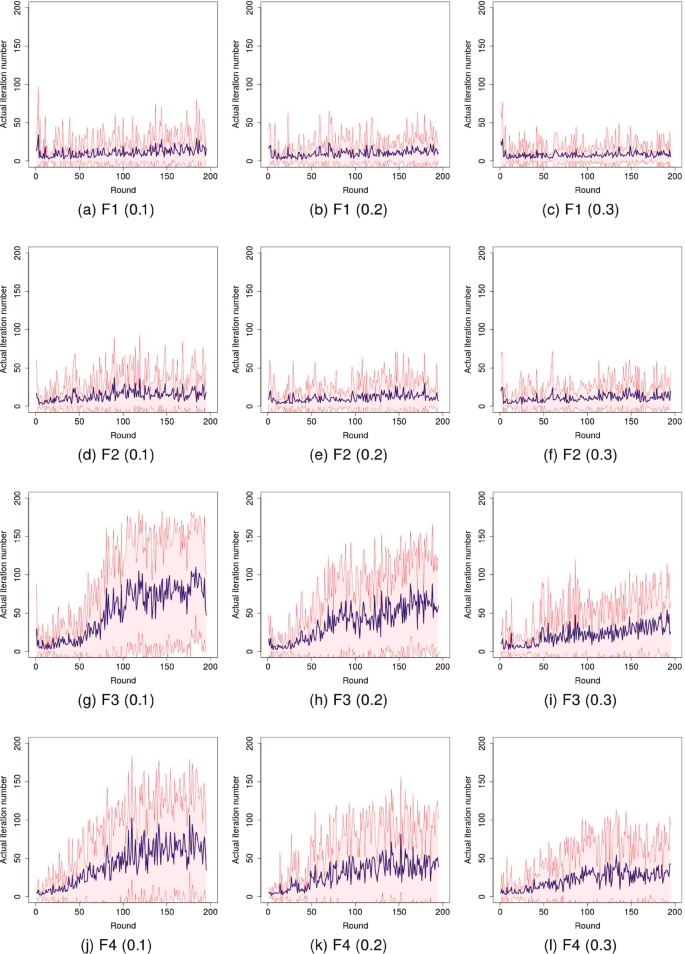

Actual iteration number of ES

For the test-function experiments, the actual number of ES iterations (Algorithm 2) in each round is shown in Fig. 17. The solid line denotes the average number of iterations, and the shaded areas indicate the standard deviation. The noise level is given in parentheses in each sub-caption. As can be seen, the standard deviations were relatively large because \( M = 200 \).
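
The solid lines and shaded bands in Figs. 16 and 17 summarize repeated runs. A minimal NumPy sketch of how such a band can be computed follows, assuming the recorded values are stored as an array of shape (number of runs, number of rounds). Whether the paper uses the population or the sample standard deviation is not stated here, so `ddof` is left as a parameter; the function name is hypothetical.

```python
import numpy as np


def mean_std_band(curves, ddof=0):
    """Per-round mean and mean +/- one standard deviation across independent runs.

    curves: array-like of shape (n_runs, n_rounds), e.g. the ES iteration count
    or the loss recorded at each BO round in each run.
    """
    arr = np.asarray(curves, dtype=float)
    mean = arr.mean(axis=0)
    std = arr.std(axis=0, ddof=ddof)  # ddof=0: population std; ddof=1: sample std
    return mean, mean - std, mean + std


mean, lower, upper = mean_std_band([[1.0, 2.0], [3.0, 4.0]])
```

The band can then be drawn with Matplotlib, for example `ax.plot(rounds, mean)` followed by `ax.fill_between(rounds, lower, upper, alpha=0.3)`.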

Wall time comparison between ES and DIR

The wall time results (mean and standard deviation) for the test functions are shown in Table 9. We implemented the algorithms in C and ran them on a Linux machine with an Intel Core i7-5830K processor (3.3 GHz) and 64 GB of memory. As the table shows, the average speedup of ES over DIR was about \( 15 \% \), whereas the wall time of ES varied more than that of DIR.
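
The measurements above were made with C implementations. The Python sketch below only illustrates the bookkeeping: it times a callable over repeated runs with `time.perf_counter`, reports the mean and sample standard deviation, and expresses a speedup as the relative reduction in mean wall time. That speedup definition is an assumption, since the section does not state how the 15% figure was computed; the function names are hypothetical.

```python
import statistics
import time


def wall_time_stats(fn, n_repeats=10):
    """Mean and sample standard deviation (seconds) of fn()'s wall time."""
    times = []
    for _ in range(n_repeats):
        start = time.perf_counter()
        fn()
        times.append(time.perf_counter() - start)
    return statistics.mean(times), statistics.stdev(times)  # stdev needs n_repeats >= 2


def relative_speedup(t_baseline, t_new):
    """Assumed definition: relative reduction in wall time versus a baseline (e.g. DIR)."""
    return (t_baseline - t_new) / t_baseline


mean_t, std_t = wall_time_stats(lambda: sum(range(10_000)), n_repeats=5)
```

Reporting the standard deviation alongside the mean matters here because, as noted above, the wall time of ES varied more than that of DIR.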

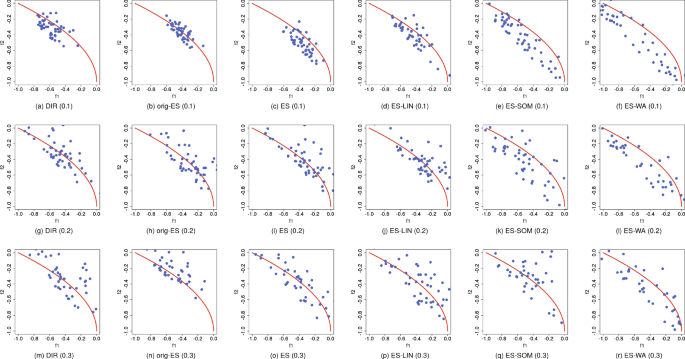

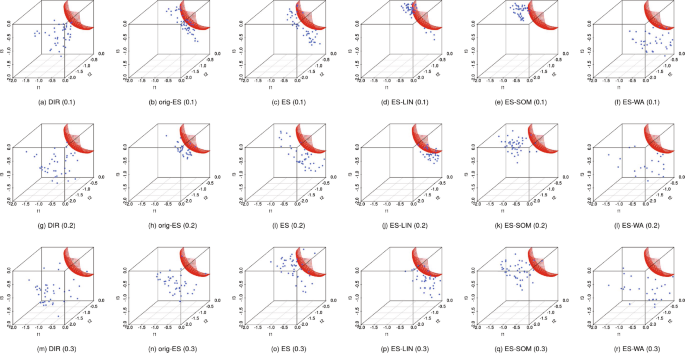

Approximated PFs

Test functions for function optimization

See Table 10.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ohno, H. Empirical study of evolutionary computation-based multi-objective Bayesian optimization for materials discovery. Soft Comput 28, 8807–8834 (2024). https://doi.org/10.1007/s00500-023-09058-z

DOI: https://doi.org/10.1007/s00500-023-09058-z