Abstract

Denoising is the problem of estimating a signal \(\mathbf {x}_0\) from its noisy observations \(\mathbf {y}=\mathbf {x}_0+\mathbf {z}\). In this paper, we focus on the “structured denoising problem,” where the signal \(\mathbf {x}_0\) possesses a certain structure and \(\mathbf {z}\) has independent normally distributed entries with mean zero and variance \(\sigma ^2\). We employ a structure-inducing convex function \(f(\cdot )\) and solve \(\min _\mathbf {x}\{\frac{1}{2}\Vert \mathbf {y}-\mathbf {x}\Vert _2^2+\sigma {\lambda }f(\mathbf {x})\}\) to estimate \(\mathbf {x}_0\), for some \(\lambda >0\). Common choices for \(f(\cdot )\) include the \(\ell _1\) norm for sparse vectors, the \(\ell _1-\ell _2\) norm for block-sparse signals and the nuclear norm for low-rank matrices. The metric we use to evaluate the performance of an estimate \(\mathbf {x}^*\) is the normalized mean-squared error \(\text {NMSE}(\sigma )=\frac{{\mathbb {E}}\Vert \mathbf {x}^*-\mathbf {x}_0\Vert _2^2}{\sigma ^2}\). We show that the NMSE is maximized as \(\sigma \rightarrow 0\) and find the exact worst-case NMSE, which has a simple geometric interpretation: the mean-squared distance of a standard normal vector to the \({\lambda }\)-scaled subdifferential \({\lambda }\partial f(\mathbf {x}_0)\). When \({\lambda }\) is optimally tuned to minimize the worst-case NMSE, our results can be related to the constrained denoising problem \(\min _{f(\mathbf {x})\le f(\mathbf {x}_0)}\{\Vert \mathbf {y}-\mathbf {x}\Vert _2\}\). The paper also connects these results to the generalized LASSO problem, in which one solves \(\min _{f(\mathbf {x})\le f(\mathbf {x}_0)}\{\Vert \mathbf {y}-{\mathbf {A}}\mathbf {x}\Vert _2\}\) to estimate \(\mathbf {x}_0\) from noisy linear observations \(\mathbf {y}={\mathbf {A}}\mathbf {x}_0+\mathbf {z}\). We show that certain properties of the LASSO problem are closely related to the denoising problem.
In particular, we characterize the normalized LASSO cost and show that it exhibits a “phase transition” as a function of the number of observations. We also provide an order-optimal bound for the LASSO error in terms of the mean-squared distance. Our results are significant in two ways. First, we find a simple formula for the performance of a general convex estimator. Second, we establish a connection between the denoising and linear inverse problems.
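As a minimal sketch of the main claim, assuming \(f=\ell _1\) and a k-sparse \(\mathbf {x}_0\) with unit nonzero entries (both illustrative choices, not the paper's experiments): the penalized estimator is then entrywise soft thresholding at \(\sigma \lambda \), and the mean-squared distance to \(\lambda \partial \Vert \mathbf {x}_0\Vert _1\) has the closed form \(k(1+\lambda ^2)+(n-k)\cdot 2[(1+\lambda ^2)Q(\lambda )-\lambda \phi (\lambda )]\), where Q and \(\phi \) are the standard normal tail and density. At small \(\sigma \) the Monte Carlo NMSE should match this value. Function names are ours.

```python
import math
import random


def soft_threshold(y, t):
    """Prox of t*||.||_1, i.e. argmin_x 0.5*||y - x||^2 + t*||x||_1 (entrywise shrinkage)."""
    return [math.copysign(max(abs(yi) - t, 0.0), yi) for yi in y]


def gaussian_tail(t):
    """Q(t) = P(g > t) for a standard normal g."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))


def mean_sq_dist_l1(n, k, lam):
    """E[dist(g, lam * subdiff||x0||_1)^2] for a k-sparse x0 in R^n (closed form)."""
    phi = math.exp(-lam ** 2 / 2.0) / math.sqrt(2.0 * math.pi)
    # off-support coordinates: E[(|g| - lam)_+^2] = 2((1 + lam^2) Q(lam) - lam * phi(lam))
    off_support = 2.0 * ((1.0 + lam ** 2) * gaussian_tail(lam) - lam * phi)
    # support coordinates: E[(g - lam * sign(x0_i))^2] = 1 + lam^2
    return k * (1.0 + lam ** 2) + (n - k) * off_support


def empirical_nmse(n, k, lam, sigma, trials, seed=0):
    """Monte Carlo estimate of E||x* - x0||^2 / sigma^2 for the l1 proximal denoiser."""
    rng = random.Random(seed)
    x0 = [1.0] * k + [0.0] * (n - k)  # illustrative k-sparse signal with unit entries
    total = 0.0
    for _ in range(trials):
        y = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x0]
        x_star = soft_threshold(y, sigma * lam)  # solves the penalized problem for f = l1
        total += sum((a - b) ** 2 for a, b in zip(x_star, x0)) / sigma ** 2
    return total / trials


if __name__ == "__main__":
    n, k, lam = 200, 20, 1.5
    print("mean-squared distance D:", mean_sq_dist_l1(n, k, lam))
    print("empirical NMSE at sigma=1e-3:", empirical_nmse(n, k, lam, 1e-3, trials=1000))
```

Because the threshold scales with \(\sigma \), the per-coordinate errors divided by \(\sigma \) do not depend on \(\sigma \) once \(\sigma \) is small relative to the nonzero entries, which is why the small-\(\sigma \) NMSE lines up with the mean-squared distance.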

Notes

Observe that if \(\mathbf {z}\in {\mathbb {R}}^m\) has independent zero-mean entries with variance \(\sigma ^2\), then \( \Vert \mathbf {z}\Vert _2 ^2\) concentrates around \(\sigma ^2m\).
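A quick numerical illustration of this concentration, assuming i.i.d. \({\mathcal {N}}(0,\sigma ^2)\) entries; the function name is ours and the sizes are arbitrary.

```python
import random


def normalized_energy(m, sigma, seed=0):
    """||z||_2^2 / (sigma^2 * m) for one draw of z with i.i.d. N(0, sigma^2) entries."""
    rng = random.Random(seed)
    energy = sum(rng.gauss(0.0, sigma) ** 2 for _ in range(m))
    return energy / (sigma ** 2 * m)


for m in (10, 1_000, 100_000):
    # relative fluctuations shrink roughly like sqrt(2 / m)
    print(m, normalized_energy(m, sigma=0.3))
```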

These works appeared after the initial submission of this manuscript.

References

A. Agarwal, S. Negahban, M. J. Wainwright, et al. Noisy matrix decomposition via convex relaxation: Optimal rates in high dimensions. The Annals of Statistics, 40(2):1171–1197, 2012.

D. Amelunxen, M. Lotz, M. B. McCoy, and J. A. Tropp. Living on the edge: Phase transitions in convex programs with random data. Inform. Inference, 2014.

F. R. Bach. Structured sparsity-inducing norms through submodular functions. In Advances in Neural Information Processing Systems, pages 118–126, 2010.

A. Banerjee, S. Chen, F. Fazayeli, and V. Sivakumar. Estimation with norm regularization. In Advances in Neural Information Processing Systems, pages 1556–1564, 2014.

R. G. Baraniuk, V. Cevher, M. F. Duarte, and C. Hegde. Model-based compressive sensing. Information Theory, IEEE Transactions on, 56(4):1982–2001, 2010.

M. Bayati, M. Lelarge, and A. Montanari. Universality in polytope phase transitions and message passing algorithms. arXiv preprint arXiv:1207.7321, 2012.

M. Bayati and A. Montanari. The dynamics of message passing on dense graphs, with applications to compressed sensing. Information Theory, IEEE Transactions on, 57(2):764–785, 2011.

M. Bayati and A. Montanari. The lasso risk for gaussian matrices. Information Theory, IEEE Transactions on, 58(4):1997–2017, 2012.

A. Belloni, V. Chernozhukov, and L. Wang. Square-root lasso: pivotal recovery of sparse signals via conic programming. Biometrika, 98(4):791–806, 2011.

D. P. Bertsekas, A. Nedić, and A. E. Ozdaglar. Convex analysis and optimization. Athena Scientific, Belmont, 2003.

B. N. Bhaskar, G. Tang, and B. Recht. Atomic norm denoising with applications to line spectral estimation. Signal Processing, IEEE Transactions on, 61(23):5987–5999, 2013.

P. J. Bickel, Y. Ritov, and A. B. Tsybakov. Simultaneous analysis of lasso and dantzig selector. The Annals of Statistics, pages 1705–1732, 2009.

V. I. Bogachev. Gaussian measures. American Mathematical Society, Providence, 1998.

S. Boyd and L. Vandenberghe. Convex optimization. Cambridge University Press, Cambridge, 2009.

F. Bunea, A. Tsybakov, M. Wegkamp, et al. Sparsity oracle inequalities for the lasso. Electronic Journal of Statistics, 1:169–194, 2007.

J.-F. Cai, E. J. Candès, and Z. Shen. A singular value thresholding algorithm for matrix completion. SIAM Journal on Optimization, 20(4):1956–1982, 2010.

J.-F. Cai and W. Xu. Guarantees of total variation minimization for signal recovery. arXiv preprint arXiv:1301.6791, 2013.

T. T. Cai, T. Liang, and A. Rakhlin. Geometrizing local rates of convergence for linear inverse problems. arXiv preprint arXiv:1404.4408, 2014.

E. Candès and B. Recht. Simple bounds for recovering low-complexity models. Mathematical Programming, 141(1-2):577–589, 2013.

E. J. Candès, X. Li, Y. Ma, and J. Wright. Robust principal component analysis? Journal of the ACM (JACM), 58(3):11, 2011.

E. J. Candès and Y. Plan. Tight oracle inequalities for low-rank matrix recovery from a minimal number of noisy random measurements. Information Theory, IEEE Transactions on, 57(4):2342–2359, 2011.

E. J. Candès and B. Recht. Exact matrix completion via convex optimization. Foundations of Computational Mathematics, 9(6):717–772, 2009.

E. J. Candès, J. Romberg, and T. Tao. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. Information Theory, IEEE Transactions on, 52(2):489–509, 2006.

E. J. Candès, J. K. Romberg, and T. Tao. Stable signal recovery from incomplete and inaccurate measurements. Communications on Pure and Applied Mathematics, 59(8):1207–1223, 2006.

E. J. Candès, M. B. Wakin, and S. P. Boyd. Enhancing sparsity by reweighted \(\ell _1\) minimization. Journal of Fourier Analysis and Applications, 14(5-6):877–905, 2008.

V. Chandrasekaran and M. I. Jordan. Computational and statistical tradeoffs via convex relaxation. Proceedings of the National Academy of Sciences, 110(13):E1181–E1190, 2013.

V. Chandrasekaran, B. Recht, P. A. Parrilo, and A. S. Willsky. The convex geometry of linear inverse problems. Foundations of Computational Mathematics, 12(6):805–849, 2012.

S. Chen and D. L. Donoho. Examples of basis pursuit. In SPIE’s 1995 International Symposium on Optical Science, Engineering, and Instrumentation, pages 564–574. International Society for Optics and Photonics, 1995.

P. L. Combettes and J.-C. Pesquet. Proximal splitting methods in signal processing. In Fixed-point algorithms for inverse problems in science and engineering, pages 185–212. Springer, Berlin, 2011.

P. L. Combettes and V. R. Wajs. Signal recovery by proximal forward-backward splitting. Multiscale Modeling & Simulation, 4(4):1168–1200, 2005.

D. Donoho, I. Johnstone, and A. Montanari. Accurate prediction of phase transitions in compressed sensing via a connection to minimax denoising. arXiv preprint arXiv:1111.1041, 2011.

D. Donoho and J. Tanner. Observed universality of phase transitions in high-dimensional geometry, with implications for modern data analysis and signal processing. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 367(1906):4273–4293, 2009.

D. L. Donoho. De-noising by soft-thresholding. Information Theory, IEEE Transactions on, 41(3):613–627, 1995.

D. L. Donoho. Compressed sensing. Information Theory, IEEE Transactions on, 52(4):1289–1306, 2006.

D. L. Donoho. High-dimensional centrally symmetric polytopes with neighborliness proportional to dimension. Discrete & Computational Geometry, 35(4):617–652, 2006.

D. L. Donoho and M. Gavish. Minimax risk of matrix denoising by singular value thresholding. arXiv preprint arXiv:1304.2085, 2013.

D. L. Donoho, M. Gavish, and A. Montanari. The phase transition of matrix recovery from gaussian measurements matches the minimax mse of matrix denoising. Proceedings of the National Academy of Sciences, 110(21):8405–8410, 2013.

D. L. Donoho, A. Maleki, and A. Montanari. Message-passing algorithms for compressed sensing. Proceedings of the National Academy of Sciences, 106(45):18914–18919, 2009.

D. L. Donoho, A. Maleki, and A. Montanari. The noise-sensitivity phase transition in compressed sensing. Information Theory, IEEE Transactions on, 57(10):6920–6941, 2011.

D. L. Donoho and J. Tanner. Neighborliness of randomly projected simplices in high dimensions. Proceedings of the National Academy of Sciences of the United States of America, 102(27):9452–9457, 2005.

D. L. Donoho and J. Tanner. Thresholds for the recovery of sparse solutions via l1 minimization. In Information Sciences and Systems, 2006 40th Annual Conference on, pages 202–206. IEEE, 2006.

Y. C. Eldar, P. Kuppinger, and H. Bolcskei. Block-sparse signals: Uncertainty relations and efficient recovery. Signal Processing, IEEE Transactions on, 58(6):3042–3054, 2010.

M. Fazel. Matrix rank minimization with applications. PhD thesis, Stanford University, 2002.

R. Foygel and L. Mackey. Corrupted sensing: Novel guarantees for separating structured signals. Information Theory, IEEE Transactions on, 60(2):1223–1247, 2014.

Y. Gordon. On Milman’s inequality and random subspaces which escape through a mesh in \({\mathbb{R}}^n\). Springer, Berlin, 1988.

O. Güler. On the convergence of the proximal point algorithm for convex minimization. SIAM Journal on Control and Optimization, 29(2):403–419, 1991.

E. T. Hale, W. Yin, and Y. Zhang. A fixed-point continuation method for l1-regularized minimization with applications to compressed sensing. CAAM TR07-07, Rice University, 2007.

J.-B. Hiriart-Urruty and C. Lemaréchal. Convex Analysis and Minimization Algorithms I: Part 1: Fundamentals, volume 305. Springer, Berlin, 1996.

R. Jenatton, J. Mairal, F. R. Bach, and G. R. Obozinski. Proximal methods for sparse hierarchical dictionary learning. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), pages 487–494, 2010.

M. A. Khajehnejad, A. G. Dimakis, W. Xu, and B. Hassibi. Sparse recovery of nonnegative signals with minimal expansion. Signal Processing, IEEE Transactions on, 59(1):196–208, 2011.

M. A. Khajehnejad, W. Xu, A. S. Avestimehr, and B. Hassibi. Weighted \(\ell _1\) minimization for sparse recovery with prior information. In Information Theory, 2009. ISIT 2009. IEEE International Symposium on, pages 483–487. IEEE, 2009.

V. Koltchinskii, K. Lounici, A. B. Tsybakov, et al. Nuclear-norm penalization and optimal rates for noisy low-rank matrix completion. The Annals of Statistics, 39(5):2302–2329, 2011.

M. Ledoux. The concentration of measure phenomenon, volume 89. American Mathematical Society, Providence, 2005.

M. Ledoux and M. Talagrand. Probability in Banach Spaces: isoperimetry and processes, volume 23. Springer, Berlin, 1991.

J.-J. Moreau. Fonctions convexes duales et points proximaux dans un espace hilbertien. CR Acad. Sci. Paris Sér. A Math, 255:2897–2899, 1962.

D. Needell and R. Ward. Stable image reconstruction using total variation minimization. SIAM Journal on Imaging Sciences, 6(2):1035–1058, 2013.

S. Negahban, B. Yu, M. J. Wainwright, and P. K. Ravikumar. A unified framework for high-dimensional analysis of \( m \)-estimators with decomposable regularizers. In Advances in Neural Information Processing Systems, pages 1348–1356, 2009.

S. Oymak and B. Hassibi. New null space results and recovery thresholds for matrix rank minimization. arXiv preprint arXiv:1011.6326, 2010.

S. Oymak and B. Hassibi. Tight recovery thresholds and robustness analysis for nuclear norm minimization. In Information Theory Proceedings (ISIT), 2011 IEEE International Symposium on, pages 2323–2327. IEEE, 2011.

S. Oymak, A. Jalali, M. Fazel, Y. C. Eldar, and B. Hassibi. Simultaneously structured models with application to sparse and low-rank matrices. arXiv preprint arXiv:1212.3753, 2012.

S. Oymak, C. Thrampoulidis, and B. Hassibi. The squared-error of generalized lasso: A precise analysis. arXiv preprint arXiv:1311.0830, 2013.

N. Parikh and S. Boyd. Proximal algorithms. Foundations and Trends in Optimization, 1(3):123–231, 2013.

N. Rao, B. Recht, and R. Nowak. Tight measurement bounds for exact recovery of structured sparse signals. arXiv preprint arXiv:1106.4355, 2011.

B. Recht, M. Fazel, and P. A. Parrilo. Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Review, 52(3):471–501, 2010.

B. Recht, W. Xu, and B. Hassibi. Necessary and sufficient conditions for success of the nuclear norm heuristic for rank minimization. In Decision and Control, 2008. CDC 2008. 47th IEEE Conference on, pages 3065–3070. IEEE, 2008.

E. Richard, F. Bach, and J.-P. Vert. Intersecting singularities for multi-structured estimation. In ICML 2013-30th International Conference on Machine Learning, 2013.

E. Richard, P.-A. Savalle, and N. Vayatis. Estimation of simultaneously sparse and low rank matrices. arXiv preprint arXiv:1206.6474, 2012.

R. T. Rockafellar. Monotone operators and the proximal point algorithm. SIAM Journal on Control and Optimization, 14(5):877–898, 1976.

R. T. Rockafellar. Convex analysis. Princeton University Press, Princeton, 1997.

L. I. Rudin, S. Osher, and E. Fatemi. Nonlinear total variation based noise removal algorithms. Physica D: Nonlinear Phenomena, 60(1):259–268, 1992.

A. A. Shabalin and A. B. Nobel. Reconstruction of a low-rank matrix in the presence of gaussian noise. Journal of Multivariate Analysis, 118:67–76, 2013.

M. Stojnic. Various thresholds for \(\ell _1\)-optimization in compressed sensing. arXiv preprint arXiv:0907.3666, 2009.

M. Stojnic. A framework to characterize performance of lasso algorithms. arXiv preprint arXiv:1303.7291, 2013.

M. Stojnic. A performance analysis framework for socp algorithms in noisy compressed sensing. arXiv preprint arXiv:1304.0002, 2013.

M. Stojnic, F. Parvaresh, and B. Hassibi. On the reconstruction of block-sparse signals with an optimal number of measurements. Signal Processing, IEEE Transactions on, 57(8):3075–3085, 2009.

M. Tao and X. Yuan. Recovering low-rank and sparse components of matrices from incomplete and noisy observations. SIAM Journal on Optimization, 21(1):57–81, 2011.

C. Thrampoulidis, A. Panahi, and B. Hassibi. Asymptotically exact error analysis for the generalized \(\ell _2^2\)-lasso. arXiv preprint arXiv:1502.06287, 2015.

R. Tibshirani. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological), pages 267–288, 1996.

N. Vaswani and W. Lu. Modified-cs: Modifying compressive sensing for problems with partially known support. Signal Processing, IEEE Transactions on, 58(9):4595–4607, 2010.

J. Wright, A. Ganesh, K. Min, and Y. Ma. Compressive principal component pursuit. Information and Inference, 2(1):32–68, 2013.

Z. Zhou, X. Li, J. Wright, E. Candès, and Y. Ma. Stable principal component pursuit. In Information Theory Proceedings (ISIT), 2010 IEEE International Symposium on, pages 1518–1522. IEEE, 2010.

Acknowledgments

This work was supported in part by the National Science Foundation under Grants CCF-0729203, CNS-0932428 and CIF-1018927, by the Office of Naval Research under the MURI Grant N00014-08-1-0747, and by a Grant from Qualcomm Inc. The authors would like to thank Michael McCoy and Joel Tropp for stimulating discussions and helpful comments. Michael McCoy pointed out Lemma 12.1 and informed us of various recent results, most importantly Theorem 7.1. S.O. would also like to thank his colleagues Kishore Jaganathan and Christos Thrampoulidis for their support, and the anonymous reviewers for their valuable suggestions.

Additional information

Communicated by Michael Todd.

Appendices

Auxiliary Results

Fact 10.1

(Hyperplane separation theorem [10]) Assume \({\mathcal {C}}_1,{\mathcal {C}}_2\subseteq {\mathbb {R}}^n\) are disjoint, closed and convex sets, at least one of which is compact. Then there exists a hyperplane H such that \({\mathcal {C}}_1\) and \({\mathcal {C}}_2\) lie in different open half-spaces induced by H.

Fact 10.2

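(Properties of the projection [10, 14]) Assume \({\mathcal {C}}\subseteq {\mathbb {R}}^n\) is a nonempty, closed and convex set and \(\mathbf {a},\mathbf {b}\in {\mathbb {R}}^n\) are arbitrary points. Then,

-

The projection \(\text {Proj}(\mathbf {a},{\mathcal {C}})\) is the unique vector satisfying
$$\text {Proj}(\mathbf {a},{\mathcal {C}})=\arg \min _{\mathbf {s}\in {\mathcal {C}}}\Vert \mathbf {a}-\mathbf {s}\Vert _2.$$

-

The projection \(\text {Proj}(\mathbf {a},{\mathcal {C}})\) is also the unique vector \(\mathbf {s}_0\in {\mathcal {C}}\) that satisfies
$$\left<\mathbf {s}-\mathbf {s}_0,\mathbf {a}-\mathbf {s}_0\right>\le 0\quad \text {for all }\mathbf {s}\in {\mathcal {C}}.$$
In other words, \(\mathbf {a}\) and \({\mathcal {C}}\) lie in different closed half-spaces induced by the hyperplane that passes through \(\text {Proj}(\mathbf {a},{\mathcal {C}})\) and is orthogonal to \(\mathbf {a}-\text {Proj}(\mathbf {a},{\mathcal {C}})\).

-

The projection is non-expansive: \(\Vert \text {Proj}(\mathbf {a},{\mathcal {C}})-\text {Proj}(\mathbf {b},{\mathcal {C}})\Vert _2\le \Vert \mathbf {a}-\mathbf {b}\Vert _2\).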
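A small numerical check of the two characterizations of the projection, assuming \({\mathcal {C}}\) is the box \([-1,1]^n\), where the projection is coordinatewise clipping (an illustrative choice of \({\mathcal {C}}\)). It samples points \(\mathbf {a}\) and \(\mathbf {s}\in {\mathcal {C}}\), and records the largest value of \(\left<\mathbf {a}-\text {Proj}(\mathbf {a},{\mathcal {C}}),\mathbf {s}-\text {Proj}(\mathbf {a},{\mathcal {C}})\right>\), which should never be positive, and the smallest excess \(\Vert \mathbf {a}-\mathbf {s}\Vert _2^2-\Vert \mathbf {a}-\text {Proj}(\mathbf {a},{\mathcal {C}})\Vert _2^2\), which should never be negative.

```python
import random


def proj_box(a, lo=-1.0, hi=1.0):
    """Euclidean projection onto the box [lo, hi]^n: clip each coordinate."""
    return [min(max(ai, lo), hi) for ai in a]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def check_projection(n=5, trials=2000, seed=1):
    """Largest <a - P(a), s - P(a)> and smallest ||a - s||^2 - ||a - P(a)||^2 over samples."""
    rng = random.Random(seed)
    worst_inner, worst_gap = -float("inf"), float("inf")
    for _ in range(trials):
        a = [rng.gauss(0.0, 2.0) for _ in range(n)]
        p = proj_box(a)
        s = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # an arbitrary point of C
        r = [ai - pi for ai, pi in zip(a, p)]           # residual a - P(a)
        d = [si - pi for si, pi in zip(s, p)]
        worst_inner = max(worst_inner, dot(r, d))       # variational inequality: never > 0
        a_minus_s = [ai - si for ai, si in zip(a, s)]
        worst_gap = min(worst_gap, dot(a_minus_s, a_minus_s) - dot(r, r))  # never < 0
    return worst_inner, worst_gap


print(check_projection())
```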

Fact 10.3

(Moreau’s decomposition theorem [55]) Let \({\mathcal {C}}\) be a closed and convex cone in \({\mathbb {R}}^n\) with polar cone \({\mathcal {C}}^*\). For any \(\mathbf {v}\in {\mathbb {R}}^n\), the following are equivalent:

-

\(\mathbf {v}=\mathbf {a}+\mathbf {b}\), \(\mathbf {a}\in {\mathcal {C}},\mathbf {b}\in {\mathcal {C}}^*\) and \(\mathbf {a}^T\mathbf {b}=0\).

-

\(\mathbf {a}=\text {Proj}(\mathbf {v},{\mathcal {C}}),\mathbf {b}=\text {Proj}(\mathbf {v},{\mathcal {C}}^*)\).
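For the nonnegative orthant \({\mathcal {C}}={\mathbb {R}}^n_+\), whose polar cone is the nonpositive orthant, the decomposition in Fact 10.3 is simply the split of \(\mathbf {v}\) into its positive and negative parts. A minimal sketch (the helper name is hypothetical):

```python
import random


def moreau_orthant(v):
    """Split v into Proj(v, C) + Proj(v, C*) for C = nonnegative orthant, C* = nonpositive orthant."""
    a = [max(vi, 0.0) for vi in v]  # projection onto C
    b = [min(vi, 0.0) for vi in v]  # projection onto the polar cone C*
    return a, b


rng = random.Random(0)
v = [rng.gauss(0.0, 1.0) for _ in range(8)]
a, b = moreau_orthant(v)
print(all(ai + bi == vi for ai, bi, vi in zip(a, b, v)))  # checks v = a + b
print(sum(ai * bi for ai, bi in zip(a, b)))               # checks a^T b = 0
```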

Definition 10.1

(Lipschitz function) \(h(\cdot ):{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is called L-Lipschitz if for all \(\mathbf {x},\mathbf {y}\in {\mathbb {R}}^n\), \(|h(\mathbf {x})-h(\mathbf {y})|\le L\Vert \mathbf {x}-\mathbf {y}\Vert _2\).

The next fact provides a concentration inequality for Lipschitz functions of Gaussian vectors [54].

Fact 10.4

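Let \(\mathbf {g}\sim {\mathcal {N}}(0,I)\) and \(h(\cdot ):{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be an L-Lipschitz function. Then for all \(t\ge 0\):
$${\mathbb {P}}\left( |h(\mathbf {g})-{\mathbb {E}}[h(\mathbf {g})]|\ge t\right) \le 2\exp \left( -\frac{t^2}{2L^2}\right) .$$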

Lemma 10.1

For any \(\mathbf {g}\sim {\mathcal {N}}(0,I)\), \(c>1\), we have that

Proof

First, by Jensen's inequality, \({\mathbb {E}}[\Vert \mathbf {g}\Vert _2]\le \sqrt{{\mathbb {E}}[\Vert \mathbf {g}\Vert _2^2]}=\sqrt{n}\). Second, the \(\ell _2\) norm is a 1-Lipschitz function due to the triangle inequality. Hence

\(\square \)
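The ingredients of Lemma 10.1 can be checked numerically: \(h(\mathbf {g})=\Vert \mathbf {g}\Vert _2\) is 1-Lipschitz, so Fact 10.4 with \(L=1\) bounds its two-sided tail by \(2\exp (-t^2/2)\), and \({\mathbb {E}}\Vert \mathbf {g}\Vert _2\le \sqrt{n}\). A Monte Carlo sketch, with the empirical mean standing in for the expectation (dimension and sample sizes are arbitrary choices):

```python
import math
import random


def norm_samples(n, trials, seed=2):
    """Draw ||g||_2 for `trials` independent g ~ N(0, I_n)."""
    rng = random.Random(seed)
    return [math.sqrt(sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n))) for _ in range(trials)]


def tail_vs_bound(n=20, trials=10_000, ts=(1.0, 1.5, 2.0, 2.5)):
    norms = norm_samples(n, trials)
    mean = sum(norms) / trials  # empirical stand-in for E||g||_2
    rows = []
    for t in ts:
        empirical = sum(abs(x - mean) >= t for x in norms) / trials
        bound = 2.0 * math.exp(-t ** 2 / 2.0)  # Fact 10.4 with L = 1
        rows.append((t, empirical, bound))
    return mean, rows


mean, rows = tail_vs_bound()
print("E||g|| estimate:", mean, "sqrt(n):", math.sqrt(20))
for t, emp, bnd in rows:
    print(f"t={t}: empirical tail {emp:.4f}, bound {bnd:.4f}")
```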

Lemma 10.2

Let \({\mathcal {C}}\) be a closed and convex cone in \({\mathbb {R}}^n\). Then, \({\mathbf{D}}({\mathcal {C}})+{\mathbf{D}}({\mathcal {C}}^*)=n\).

Proof

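Using Fact 10.3, any \(\mathbf {v}\in {\mathbb {R}}^n\) can be written as \(\text {Proj}(\mathbf {v},{\mathcal {C}})+\text {Proj}(\mathbf {v},{\mathcal {C}}^*)=\mathbf {v}\) with \(\left<\text {Proj}(\mathbf {v},{\mathcal {C}}),\text {Proj}(\mathbf {v},{\mathcal {C}}^*)\right>=0\). Hence,
$$\Vert \mathbf {v}\Vert _2^2=\Vert \text {Proj}(\mathbf {v},{\mathcal {C}})\Vert _2^2+\Vert \text {Proj}(\mathbf {v},{\mathcal {C}}^*)\Vert _2^2=\text {dist}(\mathbf {v},{\mathcal {C}}^*)^2+\text {dist}(\mathbf {v},{\mathcal {C}})^2.$$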

Letting \(\mathbf {v}\sim {\mathcal {N}}(0,{\mathbf{I}})\) and taking the expectations, we can conclude. \(\square \)
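Lemma 10.2 can be sanity-checked on the second-order (Lorentz) cone \(K=\{(\mathbf {x},t):\Vert \mathbf {x}\Vert _2\le t\}\), picked here for illustration because its projection has a closed form and its polar cone is \(-K\). The identity \(\text {dist}(\mathbf {v},K)^2+\text {dist}(\mathbf {v},-K)^2=\Vert \mathbf {v}\Vert _2^2\) holds sample by sample; and since \(-\mathbf {g}\) has the same law as \(\mathbf {g}\), \({\mathbf{D}}(K)={\mathbf{D}}(-K)\), so Lemma 10.2 forces \({\mathbf{D}}(K)=n/2\). A sketch, assuming \({\mathbf{D}}({\mathcal {C}})\) denotes \({\mathbb {E}}[\text {dist}(\mathbf {g},{\mathcal {C}})^2]\) as in the main text:

```python
import math
import random


def proj_soc(v):
    """Projection onto K = {(x, t) : ||x||_2 <= t}; the last entry of v plays the role of t."""
    x, t = v[:-1], v[-1]
    nx = math.sqrt(sum(xi * xi for xi in x))
    if nx <= t:          # v already lies in K
        return list(v)
    if nx <= -t:         # v lies in the polar cone -K, so it projects to the apex
        return [0.0] * len(v)
    c = 0.5 * (nx + t)   # otherwise v lands on the boundary ray through (x / ||x||, 1)
    return [c * xi / nx for xi in x] + [c]


def sq_dist(v, p):
    return sum((vi - pi) ** 2 for vi, pi in zip(v, p))


def check_lemma_10_2(n=10, trials=10_000, seed=4):
    """Monte Carlo D(K), D(-K) and the largest per-sample error in the Moreau identity."""
    rng = random.Random(seed)
    d_k = d_polar = worst = 0.0
    for _ in range(trials):
        v = [rng.gauss(0.0, 1.0) for _ in range(n)]
        a = sq_dist(v, proj_soc(v))                                # dist(v, K)^2
        b = sq_dist(v, [-p for p in proj_soc([-vi for vi in v])])  # dist(v, -K)^2
        worst = max(worst, abs(a + b - sum(vi * vi for vi in v)))
        d_k += a
        d_polar += b
    return d_k / trials, d_polar / trials, worst


print(check_lemma_10_2())
```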

Subdifferential of the Approximation

Proof (Proof of Lemma 3.3)

Recall that \(\hat{f}_{\mathbf {x}_0}(\mathbf {x}_0+\mathbf {v})-f(\mathbf {x}_0)\) is equal to the directional derivative \(f'({\mathbf {x}_0},\mathbf {v})=\sup _{\mathbf {s}\in \partial f(\mathbf {x}_0)} \left<\mathbf {s},\mathbf {v}\right>\). Also recall the “set of maximizing subgradients” from (34). Clearly, \(\partial f'({\mathbf {x}_0},\mathbf {v})=\partial \hat{f}_{\mathbf {x}_0}(\mathbf {x}_0+\mathbf {v})\). We will let \(\mathbf {x}={\mathbf {w}}+\mathbf {x}_0\) and investigate \(\partial f'({\mathbf {x}_0},{\mathbf {w}})\) as a function of \({\mathbf {w}}\).

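If \({\mathbf {w}}=0\): For any \(\mathbf {s}\in \partial f(\mathbf {x}_0)\) and any \(\mathbf {v}\), by definition we have:
$$f'({\mathbf {x}_0},\mathbf {v})=\sup _{\mathbf {s}'\in \partial f(\mathbf {x}_0)}\left<\mathbf {s}',\mathbf {v}\right>\ge \left<\mathbf {s},\mathbf {v}\right>=f'({\mathbf {x}_0},0)+\left<\mathbf {s},\mathbf {v}-0\right>,$$

hence \(\mathbf {s}\in \partial f'({\mathbf {x}_0},0)\). Conversely, assume \(\mathbf {s}\not \in \partial f(\mathbf {x}_0)\). Then there exists \(\mathbf {v}\) such that:
$$f(\mathbf {x}_0+\mathbf {v})<f(\mathbf {x}_0)+\left<\mathbf {s},\mathbf {v}\right>.$$

By convexity, for any \(0<\epsilon \le 1\):
$$\frac{f(\mathbf {x}_0+\epsilon \mathbf {v})-f(\mathbf {x}_0)}{\epsilon }\le f(\mathbf {x}_0+\mathbf {v})-f(\mathbf {x}_0)<\left<\mathbf {s},\mathbf {v}\right>.$$

Taking \(\epsilon \rightarrow 0\) on the left-hand side, we find:
$$f'({\mathbf {x}_0},\mathbf {v})\le f(\mathbf {x}_0+\mathbf {v})-f(\mathbf {x}_0)<\left<\mathbf {s},\mathbf {v}\right>=f'({\mathbf {x}_0},0)+\left<\mathbf {s},\mathbf {v}-0\right>,$$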

which implies \(\mathbf {s}\not \in \partial f'({\mathbf {x}_0},0)\).

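If \({\mathbf {w}}\ne 0\): Assume \(\mathbf {s}\in \partial f(\mathbf {x}_0,{\mathbf {w}})\), so that \(\left<\mathbf {s},{\mathbf {w}}\right>=f'({\mathbf {x}_0},{\mathbf {w}})\). Then, for any \(\mathbf {v}\), we have:
$$f'({\mathbf {x}_0},{\mathbf {w}}+\mathbf {v})\ge \left<\mathbf {s},{\mathbf {w}}+\mathbf {v}\right>=f'({\mathbf {x}_0},{\mathbf {w}})+\left<\mathbf {s},\mathbf {v}\right>.$$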

Hence, \(\mathbf {s}\in \partial f'({\mathbf {x}_0},{\mathbf {w}})\). Conversely, assume \(\mathbf {s}\not \in \partial f(\mathbf {x}_0,{\mathbf {w}})\). Then, we will argue that \(\mathbf {s}\not \in \partial f'({\mathbf {x}_0},{\mathbf {w}})\).

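Suppose, to the contrary, that \(\mathbf {s}\in \partial f'({\mathbf {x}_0},{\mathbf {w}})\). Write \(f'({\mathbf {x}_0},{\mathbf {w}})=c\Vert {\mathbf {w}}\Vert _2^2\) for the scalar \(c=c({\mathbf {w}})\). We can decompose \(\mathbf {s}=a{\mathbf {w}}+\mathbf {u}\) where \(\mathbf {u}^T{\mathbf {w}}=0\). Choose \(\mathbf {v}=\epsilon {\mathbf {w}}\) with \(|\epsilon |<1\). Using the subgradient inequality and the positive homogeneity of \(f'({\mathbf {x}_0},\cdot )\), we end up with:
$$(1+\epsilon )c\Vert {\mathbf {w}}\Vert _2^2=f'({\mathbf {x}_0},(1+\epsilon ){\mathbf {w}})\ge f'({\mathbf {x}_0},{\mathbf {w}})+\left<\mathbf {s},\epsilon {\mathbf {w}}\right>=c\Vert {\mathbf {w}}\Vert _2^2+a\epsilon \Vert {\mathbf {w}}\Vert _2^2.$$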

Consequently, \(c\epsilon \ge a\epsilon \) for all \(|\epsilon |<1\), which implies \(a=c\). Hence, \(\mathbf {s}\) can be written as \(c{\mathbf {w}}+\mathbf {u}\). Now, if \(\mathbf {s}\in \partial f(\mathbf {x}_0)\), then \(\mathbf {s}\in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)\), since \(\left<\mathbf {s},{\mathbf {w}}\right>=c\Vert {\mathbf {w}}\Vert _2^2=f'({\mathbf {x}_0},{\mathbf {w}})\) means that \(\mathbf {s}\) maximizes \(\left<\mathbf {s}',{\mathbf {w}}\right>\) over \(\mathbf {s}'\in \partial f(\mathbf {x}_0)\). However, we assumed \(\mathbf {s}\not \in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)\). Observe that \(\mathbf {u}=\mathbf {s}-c{\mathbf {w}}\) and the set \(\partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)-c{\mathbf {w}}\) both lie in the \((n-1)\)-dimensional subspace H orthogonal to \({\mathbf {w}}\). By assumption, \(\mathbf {u}\not \in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)-c{\mathbf {w}}\); we will argue that this leads to a contradiction. Since \(\partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)-c{\mathbf {w}}\) is convex and compact, the hyperplane separation theorem (Fact 10.1) yields a direction \(\mathbf {h}\in H\) such that:
$$\left<\mathbf {h},\mathbf {u}\right>>\sup _{\mathbf {s}'\in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)-c{\mathbf {w}}}\left<\mathbf {s}',\mathbf {h}\right>.$$

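Next, consider the perturbation \(\epsilon \mathbf {h}\) with \(\epsilon >0\). We have:
$$f'({\mathbf {x}_0},{\mathbf {w}}+\epsilon \mathbf {h})=\sup _{\mathbf {s}_1\in \partial f(\mathbf {x}_0)}\left<\mathbf {s}_1,{\mathbf {w}}+\epsilon \mathbf {h}\right>.$$

Since \(\partial f(\mathbf {x}_0)\) is compact, the supremum is attained; denote a maximizer, i.e., an \(\mathbf {s}_1\) that achieves equality, by \(\mathbf {s}_1^*\).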

Claim 1. As \(\epsilon \rightarrow 0\), \(\left<\mathbf {s}_1^*,{\mathbf {w}}\right>\rightarrow c\Vert {\mathbf {w}}\Vert _2^2\).

Proof

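Recall that \(\partial f(\mathbf {x}_0)\) is bounded. Let \(R=\sup _{\mathbf {s}'\in \partial f(\mathbf {x}_0)}\Vert \mathbf {s}'\Vert _2\). For any \(\mathbf {s}_1\in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)\), we always have:
$$\left<\mathbf {s}_1^*,{\mathbf {w}}+\epsilon \mathbf {h}\right>\ge \left<\mathbf {s}_1,{\mathbf {w}}+\epsilon \mathbf {h}\right>=c\Vert {\mathbf {w}}\Vert _2^2+\epsilon \left<\mathbf {s}_1,\mathbf {h}\right>\ge c\Vert {\mathbf {w}}\Vert _2^2-\epsilon R\Vert \mathbf {h}\Vert _2.$$

On the other hand, for any \(\mathbf {s}_1\in \partial f(\mathbf {x}_0)\) we may write:
$$\left<\mathbf {s}_1,{\mathbf {w}}+\epsilon \mathbf {h}\right>\le \left<\mathbf {s}_1,{\mathbf {w}}\right>+\epsilon R\Vert \mathbf {h}\Vert _2.$$

Hence, for \(\mathbf {s}_1^*\), we obtain:
$$c\Vert {\mathbf {w}}\Vert _2^2\ge \left<\mathbf {s}_1^*,{\mathbf {w}}\right>\ge c\Vert {\mathbf {w}}\Vert _2^2-2\epsilon R\Vert \mathbf {h}\Vert _2.$$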

Letting \(\epsilon \rightarrow 0\), we obtain the desired result. \(\square \)

Claim 2. Given \(\partial f(\mathbf {x}_0)\), for any \(\epsilon '>0\) there exists a \(\delta >0\) such that for all \(\mathbf {s}_1\in \partial f(\mathbf {x}_0)\) satisfying \(\left<\mathbf {s}_1,{\mathbf {w}}\right>>c\Vert {\mathbf {w}}\Vert _2^2-\delta \), we have \(\text {dist}(\mathbf {s}_1,\partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0))<\epsilon '\).

Proof

Suppose the claim fails for some \(\epsilon '>0\). Then we can construct a sequence \(\mathbf {s}(i)\in \partial f(\mathbf {x}_0)\) such that \(\text {dist}(\mathbf {s}(i),\partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0))\ge \epsilon '\) but \(\left<\mathbf {s}(i),{\mathbf {w}}\right>\rightarrow c\Vert {\mathbf {w}}\Vert _2^2\). By the Bolzano–Weierstrass theorem and the compactness of \(\partial f(\mathbf {x}_0)\subseteq {\mathbb {R}}^n\), \(\mathbf {s}(i)\) has a convergent subsequence whose limit \(\mathbf {s}(\infty )\) lies in \(\partial f(\mathbf {x}_0)\) and satisfies \(\left<\mathbf {s}(\infty ),{\mathbf {w}}\right>=c\Vert {\mathbf {w}}\Vert _2^2=f'({\mathbf {x}_0},{\mathbf {w}})\), so that \(\mathbf {s}(\infty )\in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)\). On the other hand, by continuity of the distance function, \(\text {dist}(\mathbf {s}(\infty ),\partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0))\ge \epsilon '\implies \mathbf {s}(\infty )\not \in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)\), which is a contradiction.

Returning to the main argument: by the first claim, as \(\epsilon \mathbf {h}\rightarrow 0\), \(\left<\mathbf {s}_1^*,{\mathbf {w}}\right>\rightarrow c\Vert {\mathbf {w}}\Vert _2^2\). By the second claim, this implies that for some \(\delta \) which approaches 0 as \(\epsilon \rightarrow 0\), we have:

Finally, based on (88), whenever \(\epsilon \) is chosen to ensure \(\delta \Vert \mathbf {h}\Vert _2<\left<\mathbf {h},\mathbf {u}\right>-\sup _{\mathbf {s}'\in \partial f(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)-c{\mathbf {w}}}\left<\mathbf {s}',\mathbf {h}\right>\) we have,

which contradicts the initial assumption that \(\mathbf {s}\) is a subgradient of \(f'({\mathbf {x}_0},\cdot )\) at \({\mathbf {w}}\), since,

\(\square \)
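For \(f=\ell _1\) (an illustrative choice), the objects in Lemma 3.3 are explicit: \(f'(\mathbf {x}_0,{\mathbf {w}})=\sum _{i:x_{0,i}\ne 0}\text {sign}(x_{0,i})w_i+\sum _{i:x_{0,i}=0}|w_i|\), and a maximizing subgradient takes the value \(\text {sign}(x_{0,i})\) on the support and \(\text {sign}(w_i)\) off it. The sketch below checks that this subgradient attains the supremum and that the difference quotient \((f(\mathbf {x}_0+\epsilon {\mathbf {w}})-f(\mathbf {x}_0))/\epsilon \) agrees with \(f'(\mathbf {x}_0,{\mathbf {w}})\) for small \(\epsilon \). Helper names and the test vectors are ours.

```python
import math


def l1(x):
    return sum(abs(xi) for xi in x)


def dir_deriv_l1(x0, w):
    """f'(x0, w) = sup over s in subdiff||x0||_1 of <s, w>."""
    return sum(math.copysign(1.0, a) * b if a != 0 else abs(b) for a, b in zip(x0, w))


def maximizing_subgradient(x0, w):
    """One element of the set of maximizing subgradients for f = l1 in direction w."""
    s = []
    for a, b in zip(x0, w):
        if a != 0:
            s.append(math.copysign(1.0, a))  # forced: sign(x0_i) on the support
        elif b != 0:
            s.append(math.copysign(1.0, b))  # off the support, must match sign(w_i)
        else:
            s.append(0.0)                    # any value in [-1, 1] attains the sup here
    return s


x0 = [2.0, -1.0, 0.0, 0.0]
w = [0.3, 0.5, -0.7, 0.0]
s = maximizing_subgradient(x0, w)
eps = 1e-6
print(dir_deriv_l1(x0, w), sum(si * wi for si, wi in zip(s, w)))
print((l1([a + eps * b for a, b in zip(x0, w)]) - l1(x0)) / eps)  # difference quotient
```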

Lemma 11.1

\(\hat{f}_{\mathbf {x}_0}(\mathbf {x})\) is a convex function of \(\mathbf {x}\).

Proof

To show convexity, we need to argue that the function \(f'({\mathbf {x}_0},{\mathbf {w}})\) is a convex function of \({\mathbf {w}}=\mathbf {x}-\mathbf {x}_0\).

Observe that \(g({\mathbf {w}})=f(\mathbf {x}_0+{\mathbf {w}})-f(\mathbf {x}_0)\) is a convex function of \({\mathbf {w}}\) and behaves the same as the directional derivative \(f'({\mathbf {x}_0},{\mathbf {w}})\) for sufficiently small \({\mathbf {w}}\). More rigorously, from (32), for any \({\mathbf {w}}_1,{\mathbf {w}}_2\in {\mathbb {R}}^n\) and \( \delta >0\) there exists \(\epsilon >0\) such that:

Hence, for any \(0\le c\le 1\):

Making use of the fact that \(f'({\mathbf {x}_0},\epsilon \mathbf {s})=\epsilon f'({\mathbf {x}_0},\mathbf {s})\) for any direction \(\mathbf {s}\), we obtain:

Letting \(\delta \rightarrow 0\), we conclude that \(f'({\mathbf {x}_0},\cdot )\) is convex, and hence so are \(\hat{f}_{\mathbf {x}_0}(\cdot )\) and problem (37). \(\square \)
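A numerical illustration of Lemma 11.1 for \(f=\ell _1\) (our choice of f and of \(\mathbf {x}_0\)): \(\hat{f}_{\mathbf {x}_0}(\mathbf {x})=f(\mathbf {x}_0)+f'(\mathbf {x}_0,\mathbf {x}-\mathbf {x}_0)\) should satisfy the convexity inequality on random pairs, and, since \(f(\mathbf {x}_0+{\mathbf {w}})-f(\mathbf {x}_0)\ge f'(\mathbf {x}_0,{\mathbf {w}})\) for convex f, it should never exceed f. A sketch:

```python
import math
import random


def l1(x):
    return sum(abs(xi) for xi in x)


def f_hat(x0, x):
    """f(x0) + f'(x0, x - x0) for f = l1: linear on the support of x0, |.| off it."""
    return l1(x0) + sum(
        math.copysign(1.0, a) * (xi - a) if a != 0 else abs(xi)
        for a, xi in zip(x0, x)
    )


def check(n=6, trials=5000, seed=5):
    """Largest observed convexity gap and largest observed f_hat - f (both should be <= 0)."""
    rng = random.Random(seed)
    x0 = [1.5, -0.5] + [0.0] * (n - 2)
    worst_convexity = worst_minorant = -float("inf")
    for _ in range(trials):
        x1 = [rng.gauss(0.0, 2.0) for _ in range(n)]
        x2 = [rng.gauss(0.0, 2.0) for _ in range(n)]
        c = rng.random()
        mid = [c * a + (1.0 - c) * b for a, b in zip(x1, x2)]
        gap = f_hat(x0, mid) - (c * f_hat(x0, x1) + (1.0 - c) * f_hat(x0, x2))
        worst_convexity = max(worst_convexity, gap)
        worst_minorant = max(worst_minorant, f_hat(x0, x1) - l1(x1))
    return worst_convexity, worst_minorant


print(check())
```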

Swapping the Minimization over \(\tau \) and the Expectation

Lemma 12.1

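([13, 53]) Assume \(\mathbf {g}\sim {\mathcal {N}}(0,{\mathbf{I}}_n)\) and let \(h(\cdot ):{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be an L-Lipschitz function. Then, we have:
$$\text {Var}(h(\mathbf {g}))={\mathbb {E}}[h(\mathbf {g})^2]-({\mathbb {E}}[h(\mathbf {g})])^2\le L^2.$$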

We next show a closely related result.

Lemma 12.2

Assume \(\mathbf {g}\sim {\mathcal {N}}(0,{\mathbf{I}}_n)\) and let \(h(\cdot ):{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be an L-Lipschitz function. Then, we have:

Proof

Since \(h(\cdot )\) is L-Lipschitz, letting \(\mathbf {a}=h(\mathbf {g})-{\mathbb {E}}[h(\mathbf {g})]\) and invoking Fact 10.4, for all \(t\ge 0\) we have:
$${\mathbb {P}}(|\mathbf {a}|\ge t)\le 2\exp \left( -\frac{t^2}{2L^2}\right) .$$

Denote the probability density function of \(|\mathbf {a}|\) by \(p(\cdot )\) and let \(Q(u)={\mathbb {P}}(|\mathbf {a}|\ge u)\). We may write:

Using \(Q(u)\le 2\exp \left( -\frac{u^2}{2L^2}\right) \) for \(u\ge 0\), we have:

Next,

\(\square \)
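Lemma 12.1 is used in the proof of Lemma 12.4 to get \({\mathbb {E}}[d]^2\ge {\mathbb {E}}[d^2]-1\) for a 1-Lipschitz d, i.e. a variance bound \(\text {Var}(h(\mathbf {g}))\le L^2\) (the Gaussian Poincaré inequality). The sketch below estimates the variance of two 1-Lipschitz functions, \(\Vert \mathbf {g}\Vert _2\) and \(\max _i g_i\), which should both fall below 1. Dimensions and sample sizes are arbitrary.

```python
import math
import random


def variance_of(h, n, trials=5000, seed=6):
    """Monte Carlo Var(h(g)) for g ~ N(0, I_n)."""
    rng = random.Random(seed)
    vals = [h([rng.gauss(0.0, 1.0) for _ in range(n)]) for _ in range(trials)]
    mean = sum(vals) / trials
    return sum((x - mean) ** 2 for x in vals) / (trials - 1)


def norm2(g):
    """1-Lipschitz by the triangle inequality."""
    return math.sqrt(sum(gi * gi for gi in g))


def max_coord(g):
    """1-Lipschitz: |max g - max h| <= max |g_i - h_i| <= ||g - h||_2."""
    return max(g)


for name, h in (("||g||_2", norm2), ("max_i g_i", max_coord)):
    print(name, variance_of(h, n=30))  # both should sit below L^2 = 1
```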

Lemma 12.3

Suppose Assumption 6.1 holds. Recall that \(\tau (\mathbf {v})=\arg \min _{\tau \ge 0}\text {dist}(\mathbf {v},\tau \partial f(\mathbf {x}_0))\). Then, for all \(\mathbf {v}_1,\mathbf {v}_2\),

Hence, \(\tau (\mathbf {v})\) is a \(\Vert \mathbf {e}\Vert _2^{-1}\)-Lipschitz function of \(\mathbf {v}\).

Proof

Let \(\mathbf {a}_i=\text {Proj}(\mathbf {v}_i,\text {cone}(\partial f(\mathbf {x}_0)))\) for \(1\le i\le 2\). Since \(\partial f(\mathbf {x}_0)\), and hence \(\text {cone}(\partial f(\mathbf {x}_0))\), is convex, projection onto this cone is non-expansive (Fact 10.2), so \(\Vert \mathbf {a}_1-\mathbf {a}_2\Vert _2\le \Vert \mathbf {v}_1-\mathbf {v}_2\Vert _2\). We now further lower bound \(\Vert \mathbf {v}_1-\mathbf {v}_2\Vert _2\) as follows:

Now, observe that \(\Vert \text {Proj}(\mathbf {a}_1-\mathbf {a}_2,T)\Vert _2=\Vert \tau (\mathbf {v}_1)\mathbf {e}-\tau (\mathbf {v}_2)\mathbf {e}\Vert _2\). Hence, (89) follows. \(\square \)
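For \(f=\ell _1\) with a k-sparse \(\mathbf {x}_0\) (our illustrative choice), \(\text {dist}(\mathbf {v},\tau \partial f(\mathbf {x}_0))^2\) is explicit and strictly convex in \(\tau \), so \(\tau (\mathbf {v})\) can be found by a one-dimensional golden-section search. Assuming that \(\mathbf {e}\) in Lemma 12.3 is the fixed component \(\text {sign}(\mathbf {x}_0)\) on the support, so \(\Vert \mathbf {e}\Vert _2=\sqrt{k}\) (our reading; Assumption 6.1 is not reproduced here), the empirical ratio \(|\tau (\mathbf {v}_1)-\tau (\mathbf {v}_2)|/\Vert \mathbf {v}_1-\mathbf {v}_2\Vert _2\) should not exceed \(1/\sqrt{k}\).

```python
import math
import random


def dist_sq(v, signs, tau):
    """dist(v, tau * subdiff||x0||_1)^2, where signs[i] = sign(x0_i) and 0 off the support."""
    total = 0.0
    for vi, si in zip(v, signs):
        if si != 0:
            total += (vi - tau * si) ** 2          # support: entry fixed at tau * sign(x0_i)
        else:
            total += max(abs(vi) - tau, 0.0) ** 2  # off support: entry free in [-tau, tau]
    return total


def tau_of(v, signs, iters=80):
    """argmin over tau >= 0 of dist_sq, by golden-section search (the objective is convex)."""
    lo, hi = 0.0, max(abs(vi) for vi in v) + 1.0  # the minimizer is at most max_i |v_i|
    r = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(iters):
        c, d = hi - r * (hi - lo), lo + r * (hi - lo)
        if dist_sq(v, signs, c) < dist_sq(v, signs, d):
            hi = d
        else:
            lo = c
    return 0.5 * (lo + hi)


def worst_lipschitz_ratio(n=40, k=5, pairs=60, seed=7):
    """Largest observed |tau(v1) - tau(v2)| / ||v1 - v2||_2 over random Gaussian pairs."""
    rng = random.Random(seed)
    signs = [1.0] * k + [0.0] * (n - k)
    worst = 0.0
    for _ in range(pairs):
        v1 = [rng.gauss(0.0, 1.0) for _ in range(n)]
        v2 = [rng.gauss(0.0, 1.0) for _ in range(n)]
        gap = math.sqrt(sum((a - b) ** 2 for a, b in zip(v1, v2)))
        worst = max(worst, abs(tau_of(v1, signs) - tau_of(v2, signs)) / gap)
    return worst


print("worst ratio:", worst_lipschitz_ratio(), "bound 1/sqrt(k):", 1.0 / math.sqrt(5))
```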

Lemma 12.4

Let \({\mathcal {C}}\) be a convex and closed set. Define the set of \(\tau \) that minimize \(\text {dist}(\mathbf {v},\tau {\mathcal {C}})\),

and let \(\tau (\mathbf {v})=\inf _{\tau \in {\mathbf{T}}(\mathbf {v})}\tau \). Given \({\mathcal {C}}\) and \(\mathbf {v}\), \(\tau (\mathbf {v})\) is uniquely determined. Further, assume \(\tau (\mathbf {v})\) is an L-Lipschitz function of \(\mathbf {v}\), and let \(R:=R({\mathcal {C}})=\max _{\mathbf {u}\in {\mathcal {C}}}\Vert \mathbf {u}\Vert _2\). Then,

Proof

Let \(\mathbf {g}\sim {\mathcal {N}}(0,{\mathbf{I}})\) and let \(\tau ^*={\mathbb {E}}[\tau (\mathbf {g})]\). Now, from the triangle inequality:

Consequently,

This gives:

Observing \({\mathbb {E}}[\text {dist}(\mathbf {g},\tau (\mathbf {g}){\mathcal {C}})]={\mathbb {E}}[\text {dist}(\mathbf {g},\text {cone}({\mathcal {C}}))]\le \sqrt{{\mathbf {D}}(\text {cone}({\mathcal {C}}))}\), and using Lemma 12.2 we find:

This yields:

Using Lemma 12.1 we have \({\mathbb {E}}[\text {dist}(\mathbf {g},\tau (\mathbf {g}){\mathcal {C}})]^2\ge {\mathbb {E}}[\text {dist}(\mathbf {g},\tau (\mathbf {g}){\mathcal {C}})^2]-1\), which gives:

\(\square \)

Intersection of a Cone and a Subspace

Intersections of Randomly Oriented Cones

Based on the kinematic formula (Theorem 7.1), one obtains the following result on the intersection of two cones. We first consider the scenario in which one of the cones is a subspace.

Proposition 13.1

(Intersection with a subspace) Let A be a closed and convex cone and let B be a linear subspace. Denote \(\delta (A)+\delta (B)-n\) by \(\delta (A,B)\). Assume the unitary matrix \({\mathbf {U}}\) is drawn uniformly at random. Given \(\epsilon >0\), we have the following:

-

If \(\delta (A)+\delta (B)+\epsilon \sqrt{n}>n\),

$$\begin{aligned} {\mathbb {P}}(\delta (A\cap {\mathbf {U}}B)\ge \delta (A,B)+\epsilon \sqrt{n})\le 8\exp \left( -\frac{\epsilon ^2}{64}\right) . \end{aligned}$$

-

\({\mathbb {P}}(\delta (A\cap {\mathbf {U}}B)\le \delta (A,B)-\epsilon \sqrt{n})\le 8\exp \left( -\frac{\epsilon ^2}{64}\right) \).
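When A is itself a subspace, \(\delta (A)=\dim A\) and, almost surely, \(\delta (A\cap {\mathbf {U}}B)=\max (\delta (A,B),0)\), so Proposition 13.1 holds with no deviation at all. A sketch that checks this special case with random Gaussian bases (which span uniformly distributed subspaces) and a hand-rolled rank computation; dimensions are illustrative:

```python
import random


def rank(rows, tol=1e-9):
    """Numerical rank by Gaussian elimination with partial pivoting."""
    m = [list(r) for r in rows]
    ncols, r = len(m[0]), 0
    for col in range(ncols):
        if r == len(m):
            break
        pivot = max(range(r, len(m)), key=lambda i: abs(m[i][col]))
        if abs(m[pivot][col]) < tol:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            for j in range(col, ncols):
                m[i][j] -= factor * m[r][j]
        r += 1
    return r


def intersection_dim(n, a, b, seed=0):
    """dim(A intersect UB) for a random a-dim subspace A and a random b-dim subspace UB."""
    rng = random.Random(seed)
    basis_a = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(a)]
    basis_b = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(b)]
    # dim(A intersect B') = dim A + dim B' - dim(A + B')
    return a + b - rank(basis_a + basis_b)


for n, a, b in ((12, 7, 8), (12, 4, 5), (12, 6, 6)):
    print((n, a, b), intersection_dim(n, a, b), "vs max(a + b - n, 0) =", max(a + b - n, 0))
```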

Proof

Denote \(A\cap {\mathbf {U}}B\) by C. Let H be a subspace of dimension \(n-d\) chosen uniformly at random, independently of \({\mathbf {U}}\). Observe that \({\mathbf {U}}B\cap H\) is a \((\delta (B)-d)\)-dimensional random subspace for \(d<\delta (B)\). Hence, using Theorem 7.1 with A and \({\mathbf {U}}B\cap H\) yields:

Observe that (90) is true even when \(d\ge \delta (B)\) since if \(d\ge \delta (B)\), \({\mathbf {U}}B\cap H=\{0\}\) with probability 1.

Proving the first statement: Let \(\gamma =\delta (A)+\delta (B)-n\), \(\gamma _{\epsilon }=\gamma +\epsilon \sqrt{n}\) and \(\gamma _{\epsilon /2}=\gamma +\frac{\epsilon }{2}\sqrt{n}\). We assume \(\gamma _{\epsilon }>0\). Observing \(A\cap {\mathbf {U}}B\cap H=C\cap H\), we may write:

If \(\gamma _{\epsilon }>n\), \({\mathbb {P}}(\delta (C)\le \gamma _{\epsilon })=1\). Otherwise, choose \(d=\max \{\gamma _{\epsilon /2},0\}\).

Case 1: If \(d=0\), then \(\gamma _{\epsilon /2}\le 0\) and \(H={\mathbb {R}}^n\). This gives,

Also, choosing \(t=\frac{\epsilon }{2}\sqrt{n}\) in (90) and using \(\gamma \le -\frac{\epsilon }{2}\sqrt{n}\), we obtain:

Case 2: Otherwise, \(d=\gamma _{\epsilon /2}>0\). Applying Theorem 7.1, we find:

Next, choosing \(t=\frac{\epsilon }{2}\sqrt{n}\) in (90), we obtain:

Overall, combining (92), (93), (94), (95) and (96), we obtain:

Proving the second statement: In the exact same manner, this time, let \(\gamma _{-\epsilon }=\gamma -\epsilon \sqrt{n}\), \(\gamma _{-\epsilon /2}=\gamma -\frac{\epsilon }{2}\sqrt{n}\). If \(\gamma _{-\epsilon }<0\),

Otherwise, let \(d=\gamma _{-\epsilon /2}\). We may write,

in an identical way to (92). Repeating the previous argument and using (91), we may first obtain,

and using Theorem 7.1,

Combining these gives the desired result.

\(\square \)

Proof of Theorem 2.1: Lower Bound

Theorem 14.1

Let \({\mathcal {C}}\) be a closed and convex set, \(\mathbf {v}\sim {\mathcal {N}}(0,{\mathbf{I}})\) and let \(\mathbf {x}^*(\sigma \mathbf {v})=\arg \min _{\mathbf {x}\in {\mathcal {C}}} \Vert \mathbf {x}_0+\sigma \mathbf {v}-\mathbf {x}\Vert _2\). Then, we have,

Proof

Let \(1\ge \alpha ,\epsilon > 0\) be numbers to be determined. Denote the probability density function of a \({\mathcal {N}}(0,c{\mathbf{I}})\) distributed vector by \(p_c(\cdot )\). From Lemma 15.1, the expected error \({\mathbb {E}}[\Vert \mathbf {x}^*-\mathbf {x}_0\Vert _2^2]\) is simply,

Let \(S_{\alpha }\) be the set satisfying:

Let \(\bar{S}_\alpha ={\mathbb {R}}^n-S_\alpha \). Using Proposition 15.1, given \(\epsilon >0\), choose \(\epsilon _0>0\) such that for all \(\Vert \mathbf {u}\Vert _2\le \epsilon _0\) and \(\mathbf {u}\in S_{\alpha }\), we have,

Now, let \(\mathbf {z}=\sigma \mathbf {v}\). Split the error into three groups, namely

-

\(F_1=\int _{\Vert \mathbf {z}\Vert _2\le \epsilon _0,\mathbf {z}\in S_{\alpha }}\Vert \text {Proj}(\mathbf {z},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2^2p_\sigma (\mathbf {z})d\mathbf {z}\), \(T_1=\int _{\Vert \mathbf {v}\Vert _2\le \frac{\epsilon _0}{\sigma },\mathbf {v}\in S_{\alpha }}\Vert \text {Proj}(\mathbf {v},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2^2p_1(\mathbf {v})d\mathbf {v}\).

-

\(F_2=\int _{\Vert \mathbf {z}\Vert _2\ge \epsilon _0,\mathbf {z}\in S_{\alpha }}\Vert \text {Proj}(\mathbf {z},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2^2p_\sigma (\mathbf {z})d\mathbf {z}\), \(T_2=\int _{\Vert \mathbf {v}\Vert _2\ge \frac{\epsilon _0}{\sigma },\mathbf {v}\in S_{\alpha }}\Vert \text {Proj}(\mathbf {v},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2^2p_1(\mathbf {v})d\mathbf {v}\).

-

\(F_3=\int _{\mathbf {z}\in \bar{S}_{\alpha }}\Vert \text {Proj}(\mathbf {z},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2^2p_\sigma (\mathbf {z})d\mathbf {z}\), \(T_3=\int _{\mathbf {v}\in \bar{S}_{\alpha }}\Vert \text {Proj}(\mathbf {v},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2^2p_1(\mathbf {v})d\mathbf {v}\).

The rest of the argument will be very similar to the proof of Proposition 4.2. We know the following from Proposition 3.1:

To proceed, we will argue that the contributions of the second and third terms are small for sufficiently small \(\sigma ,\alpha ,\epsilon >0\). Observe that:

For \(T_2\), we have:

Since \(\Vert \mathbf {v}\Vert _2\) has a finite second moment, fixing \(\epsilon _0>0\) and letting \(\sigma \rightarrow 0\), we have \(C\left( \frac{\epsilon _0}{\sigma }\right) \rightarrow 0\). For \(T_1\), from (98), we have:
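Because the tangent cone is a closed convex cone, \(\Vert \text {Proj}(\mathbf {v},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2\le \Vert \mathbf {v}\Vert _2\), so \(T_2\) is at most the tail second moment \({\mathbb {E}}[\Vert \mathbf {v}\Vert _2^2\,1\{\Vert \mathbf {v}\Vert _2\ge \epsilon _0/\sigma \}]\). We assume \(C(\cdot )\) plays the role of this tail; its exact form is not restated in this section. The Python sketch below (function names hypothetical) evaluates the tail in closed form for even n, using the chi-square density identity \(x f_n(x)=n f_{n+2}(x)\), to show how quickly it vanishes as \(\sigma \rightarrow 0\) with \(\epsilon _0\) fixed.

```python
import math

def chi2_sf_even(x, k):
    """P(chi^2_k >= x) for even k > 0, via exp(-x/2) * sum_{j < k/2} (x/2)^j / j!."""
    if k <= 0 or k % 2:
        raise ValueError("k must be a positive even integer")
    half = x / 2.0
    term = total = 1.0                     # j = 0 term of the Poisson sum
    for j in range(1, k // 2):
        term *= half / j                   # now equals (x/2)^j / j!
        total += term
    return math.exp(-half) * total

def tail_second_moment(n, r):
    """E[||v||^2 1{||v|| >= r}] for v ~ N(0, I_n), n even; equals n * P(chi^2_{n+2} >= r^2)."""
    return n * chi2_sf_even(r * r, n + 2)

n, eps0 = 10, 0.1
for sigma in [1e-1, 5e-2, 2e-2, 1e-2]:
    print(f"sigma={sigma:g}  tail={tail_second_moment(n, eps0 / sigma):.3e}")
```

At r = 0 the tail is the full second moment n, and it decays like a Gaussian tail in r = \(\epsilon _0/\sigma \).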

Overall, we found:

Writing \(T_1={\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0)^*)-T_2-T_3\ge {\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0)^*)-\alpha ^2n-C\left( \frac{\epsilon _0}{\sigma }\right) \), we have:

Letting \(\sigma \rightarrow 0\) for fixed \(\alpha ,\epsilon _0,\epsilon \), we obtain

Since \(\alpha ,\epsilon \) can be made arbitrarily small, we obtain \(\lim _{\sigma \rightarrow 0}\frac{{\mathbb {E}}[\Vert \mathbf {x}^*-\mathbf {x}_0\Vert _2^2]}{\sigma ^2{\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0)^*)}=1\). \(\square \)
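As a sanity check of Theorem 14.1, take the hypothetical example \({\mathcal {C}}={\mathbb {R}}^n_+\), where projection is coordinatewise clipping at 0. If \(\mathbf {x}_0\) has k zero entries, the tangent cone constrains only those k coordinates to be nonnegative, so, by the Moreau decomposition, \({\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0)^*)={\mathbb {E}}\Vert \text {Proj}(\mathbf {v},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2^2=(n-k)+k/2\). The Monte Carlo sketch below (assuming NumPy; names are illustrative) estimates the normalized error for a small \(\sigma \) and compares it with this value.

```python
import numpy as np

def nmse_orthant(x0, sigma, num_trials, rng):
    """Monte Carlo estimate of E||x* - x0||^2 / sigma^2 for C = nonnegative orthant."""
    v = rng.standard_normal((num_trials, x0.size))
    x_star = np.maximum(x0 + sigma * v, 0.0)          # Euclidean projection onto R^n_+
    return np.mean(np.sum((x_star - x0) ** 2, axis=1)) / sigma**2

def predicted_D(x0):
    """E||Proj(v, T_C(x0))||^2: each free coordinate contributes 1, each zero coordinate 1/2."""
    k = np.count_nonzero(x0 == 0)
    return (x0.size - k) + 0.5 * k

x0 = np.array([1.0] * 5 + [0.0] * 5)                  # n = 10, k = 5 zero entries
rng = np.random.default_rng(0)
print(predicted_D(x0), nmse_orthant(x0, 1e-3, 100_000, rng))
```

For this orthant example the feasible set agrees with the tangent cone near \(\mathbf {x}_0\), so the estimate should match \((n-k)+k/2\) up to Monte Carlo error once \(\sigma \) is small relative to the nonzero entries of \(\mathbf {x}_0\).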

The next result shows that, as \(\sigma \rightarrow 0\), we can exactly predict the cost of the constrained problem.

Proposition 14.1

Consider the setup in Theorem 14.1. Let \({\mathbf {w}}^*(\sigma \mathbf {v})=\mathbf {x}^*(\sigma \mathbf {v})-\mathbf {x}_0\). Then,

Proof

Let \({\mathbf {w}}^*={\mathbf {w}}^*(\sigma \mathbf {v})\) and \(\mathbf {z}=\sigma \mathbf {v}\). The vector \(\mathbf {z}-{\mathbf {w}}^*\) satisfies two conditions:

-

From Lemma 15.1, \(\Vert \mathbf {z}-{\mathbf {w}}^*\Vert _2=\text {dist}(\mathbf {z},F_{\mathcal {C}}(\mathbf {x}_0))\ge \text {dist}(\mathbf {z},T_{\mathcal {C}}(\mathbf {x}_0))\).

-

Using Lemma 15.4, \(\Vert \mathbf {z}-{\mathbf {w}}^*\Vert _2^2+\Vert {\mathbf {w}}^*\Vert _2^2\le \Vert \mathbf {z}\Vert _2^2\).

Consequently, when \(\mathbf {v}\sim {\mathcal {N}}(0,{\mathbf{I}})\), we find:

Normalizing both sides by \(\sigma ^2\) and subtracting \({\mathbf{D}}(T_{\mathcal {C}}(\mathbf {x}_0))={\mathbb {E}}[\Vert \text {Proj}(\mathbf {v},T_{\mathcal {C}}(\mathbf {x}_0)^*)\Vert _2^2]\) and \({\mathbb {E}}[\Vert {\mathbf {w}}^*\Vert _2^2]\), we find:

where we used Lemma 10.2. Now, letting \(\sigma \rightarrow 0\) and using the fact that \(\lim _{\sigma \rightarrow 0}\frac{{\mathbb {E}}[\Vert {\mathbf {w}}^*\Vert _2^2]}{\sigma ^2}={\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0)^*)\), we find the desired result. \(\square \)
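Continuing the hypothetical orthant example \({\mathcal {C}}={\mathbb {R}}^n_+\), the sketch below checks the two pointwise conditions used in the proof, \(\text {dist}(\mathbf {z},F_{\mathcal {C}}(\mathbf {x}_0))\ge \text {dist}(\mathbf {z},T_{\mathcal {C}}(\mathbf {x}_0))\) and \(\Vert \mathbf {z}-{\mathbf {w}}^*\Vert _2^2+\Vert {\mathbf {w}}^*\Vert _2^2\le \Vert \mathbf {z}\Vert _2^2\), and estimates the normalized cost. Since \({\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0))+{\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0)^*)=n\), these two bounds suggest that \({\mathbb {E}}\Vert \mathbf {z}-{\mathbf {w}}^*\Vert _2^2/\sigma ^2\) approaches \({\mathbf {D}}(T_{\mathcal {C}}(\mathbf {x}_0))\), which equals k/2 for k zero entries of \(\mathbf {x}_0\) here. This is an illustration under these assumptions (NumPy, illustrative names), not a restatement of the proposition.

```python
import numpy as np

def constrained_cost_check(x0, sigma, trials, rng):
    """Orthant example: returns (cond1, cond2, normalized cost, D(T))."""
    free = x0 > 0                                    # coordinates left unconstrained by T_C(x0)
    z = sigma * rng.standard_normal((trials, x0.size))
    w_star = np.maximum(x0 + z, 0.0) - x0            # w* = Proj(z, F_C(x0)) for C = R^n_+
    proj_T = np.where(free, z, np.maximum(z, 0.0))   # Proj(z, T_C(x0))

    cost = np.sum((z - w_star) ** 2, axis=1)         # dist(z, F_C(x0))^2
    dist_T = np.sum((z - proj_T) ** 2, axis=1)       # dist(z, T_C(x0))^2
    norm_w = np.sum(w_star ** 2, axis=1)
    norm_z = np.sum(z ** 2, axis=1)

    tol = 1e-15                                      # absolute slack for floating-point rounding
    cond1 = bool(np.all(cost >= dist_T - tol))
    cond2 = bool(np.all(cost + norm_w <= norm_z + tol))
    d_T = 0.5 * np.count_nonzero(~free)              # D(T_C(x0)) = E dist(v, T)^2 = k / 2
    return cond1, cond2, float(np.mean(cost) / sigma**2), d_T

x0 = np.array([1.0] * 5 + [0.0] * 5)
print(constrained_cost_check(x0, 1e-3, 100_000, np.random.default_rng(1)))
```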

Approximation Results on Convex Cones

Remark

Throughout the section, \({\mathcal {C}}\) is assumed to be a nonempty, closed and convex set in \({\mathbb {R}}^n\).

1.1 Standard Observations

Property 15.1

Let \(\mathbf {x}_0\in {\mathcal {C}}\) and \(\mathbf {y}=\mathbf {x}_0+\mathbf {z}\in {\mathbb {R}}^n\). From Lemma 86, recall that \(\text {Proj}(\mathbf {y},{\mathcal {C}})=\arg \min _{\mathbf {u}\in {\mathcal {C}}}\Vert \mathbf {y}-\mathbf {u}\Vert _2\) is the unique minimizer. By definition of the feasible set \(F_{\mathcal {C}}(\mathbf {x}_0)\), we also have \(\text {Proj}(\mathbf {y},{\mathcal {C}})-\mathbf {x}_0=\text {Proj}(\mathbf {z},F_{\mathcal {C}}(\mathbf {x}_0))\).
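Assuming the feasible set is the translate \(F_{\mathcal {C}}(\mathbf {x}_0)=\{\mathbf {u}\,|\,\mathbf {x}_0+\mathbf {u}\in {\mathcal {C}}\}={\mathcal {C}}-\mathbf {x}_0\), projecting onto \({\mathcal {C}}\) and onto \(F_{\mathcal {C}}(\mathbf {x}_0)\) differ only by the shift \(\mathbf {x}_0\), so the estimation error \(\mathbf {x}^*-\mathbf {x}_0\) is exactly \(\text {Proj}(\mathbf {z},F_{\mathcal {C}}(\mathbf {x}_0))\). The sketch below checks this numerically for a hypothetical Euclidean ball (assuming NumPy).

```python
import numpy as np

def proj_ball(y, center, radius):
    """Euclidean projection of y onto the closed ball B(center, radius)."""
    d = y - center
    dist = np.linalg.norm(d)
    return y.copy() if dist <= radius else center + radius * d / dist

def proj_feasible(z, x0, center, radius):
    """Proj(z, F_C(x0)) for C = B(center, radius), using F_C(x0) = C - x0 = B(center - x0, radius)."""
    return proj_ball(z, center - x0, radius)

center, radius = np.zeros(3), 1.0
x0 = np.array([1.0, 0.0, 0.0])                  # a boundary point of C
rng = np.random.default_rng(2)
z = rng.standard_normal(3)
lhs = proj_ball(x0 + z, center, radius)         # x* = Proj(y, C) with y = x0 + z
rhs = x0 + proj_feasible(z, x0, center, radius)
print(np.allclose(lhs, rhs))
```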

Lemma 15.1

For all \(\mathbf {z}\in {\mathbb {R}}^n\) and \(\mathbf {x}_0\in {\mathcal {C}}\), we have

Proof

Setting \(f(\cdot )=0\) and \(\mathbf {y}=\mathbf {x}_0+\mathbf {z}\) in Lemma 3.2, we have

\(\square \)

The following lemma shows that the projection onto the feasible set is arbitrarily close to the projection onto the tangent cone as the vector is scaled down. This is due to Proposition 5.3.5 of Chapter III of [48].

Lemma 15.2

Assume \(\mathbf {x}_0\in {\mathcal {C}}\). Then, for any \({\mathbf {w}}\in {\mathbb {R}}^n\),

Hence,

-

If \(\text {Proj}({\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))=0\), then, using Lemma 15.1, \(\text {Proj}(\epsilon {\mathbf {w}},F_{\mathcal {C}}(\mathbf {x}_0))=0\) for all \(\epsilon >0\).

-

If \(\text {Proj}({\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\ne 0\),

$$\begin{aligned} \lim _{\epsilon \rightarrow 0}\frac{\Vert \text {Proj}(\epsilon {\mathbf {w}},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(\epsilon {\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}=1. \end{aligned}$$
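A concrete instance of Lemma 15.2, using the hypothetical example where \({\mathcal {C}}\) is the closed unit disk in \({\mathbb {R}}^2\) and \(\mathbf {x}_0=(1,0)\): the tangent cone is the half-plane \(\{\mathbf {u}: u_1\le 0\}\), while the feasible set is the disk translated by \(-\mathbf {x}_0\). The sketch below (assuming NumPy) shows the ratio of the two projection norms approaching 1 as \(\epsilon \rightarrow 0\).

```python
import numpy as np

x0 = np.array([1.0, 0.0])                 # boundary point of C = closed unit disk in R^2

def proj_F(u):
    """Proj(u, F_C(x0)) with F_C(x0) = C - x0."""
    y = x0 + u
    r = np.linalg.norm(y)
    return (y if r <= 1.0 else y / r) - x0

def proj_T(u):
    """Proj(u, T_C(x0)); for the unit disk at x0 = (1, 0), T_C(x0) = {u : u[0] <= 0}."""
    return np.array([min(u[0], 0.0), u[1]])

def ratio(eps, w):
    """||Proj(eps w, F_C(x0))|| / ||Proj(eps w, T_C(x0))||, assuming the denominator is nonzero."""
    return np.linalg.norm(proj_F(eps * w)) / np.linalg.norm(proj_T(eps * w))

w = np.array([0.6, 0.8])                  # unit vector with Proj(w, T_C(x0)) = (0, 0.8) != 0
for eps in [1.0, 1e-1, 1e-2, 1e-3]:
    print(f"eps={eps:g}  ratio={ratio(eps, w):.6f}")
```

The ratio stays at most 1 and increases toward 1 as \(\epsilon \) shrinks, as the lemma predicts.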

1.2 Uniform Approximation to the Tangent Cone

Proposition 15.1

Let \({\mathcal {C}}\) be a closed and convex set including \(\mathbf {x}_0\). Denote the unit \(\ell _2\)-sphere in \({\mathbb {R}}^n\) by \({\mathcal {S}}^{n-1}\), and let \(1\ge \alpha >0\) and \(\epsilon >0\) be arbitrary. Then there exists an \(\epsilon _0>0\) such that, for all \({\mathbf {w}}\in {\mathcal {S}}^{n-1}\) with \(\Vert \text {Proj}({\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2\ge \alpha \) and all \(0<t\le \epsilon _0\), we have:

In particular, setting \(\alpha =1\): given \(\epsilon >0\), there exists \(\epsilon _0>0\) such that, for all \(0<t\le \epsilon _0\) and all \({\mathbf {w}}\in T_{\mathcal {C}}(\mathbf {x}_0)\cap {\mathcal {S}}^{n-1}\), \(\Vert \text {Proj}(t{\mathbf {w}},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2\ge (1-\epsilon )t\).
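To make the uniform constant \(\epsilon _0\) tangible, the sketch below estimates it by grid search for the same hypothetical unit-disk example (\(\mathbf {x}_0=(1,0)\)), over unit directions in the tangent cone. It is a numerical approximation on a finite grid of directions and radii, not a certificate, and all names are illustrative (assuming NumPy).

```python
import numpy as np

x0 = np.array([1.0, 0.0])                 # boundary point of C = closed unit disk in R^2

def proj_F(u):
    """Proj(u, F_C(x0)) with F_C(x0) = C - x0."""
    y = x0 + u
    r = np.linalg.norm(y)
    return (y if r <= 1.0 else y / r) - x0

def worst_ratio(t, num_dirs=721):
    """Min over a grid of unit w in T_C(x0) of ||Proj(t w, F_C(x0))|| / t."""
    phis = np.linspace(np.pi / 2, 3 * np.pi / 2, num_dirs)   # cos(phi) <= 0  <=>  w in T_C(x0)
    return min(np.linalg.norm(proj_F(t * np.array([np.cos(p), np.sin(p)]))) / t for p in phis)

def find_eps0(eps, t_grid):
    """Largest grid value eps0 with worst_ratio(t) >= 1 - eps for every grid t <= eps0."""
    eps0 = None
    for t in sorted(t_grid):
        if worst_ratio(t) < 1 - eps:
            break
        eps0 = t
    return eps0

print(find_eps0(0.05, np.geomspace(1e-4, 1.0, 60)))
```

Shrinking \(\epsilon \) shrinks the returned \(\epsilon _0\), in line with the proposition.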

Remark

Note that the statements of Propositions 15.1 and 4.1 are quite similar.

Proof

Given \(\alpha >0\), consider the following set:

This set is closed and bounded and hence compact. Define the following function on this set:

The quantity \(c({\mathbf {w}})\) is strictly positive due to Lemma 15.2 and may be infinite. Furthermore, from Lemma 15.3, we know that whenever \(c<c({\mathbf {w}})\)

as well. Let \(s({\mathbf {w}})=\min \{1,c({\mathbf {w}})\}\). If \(s({\mathbf {w}})\) is continuous, then, since this set is compact, \(s({\mathbf {w}})\) attains its minimum, which implies \(c({\mathbf {w}})\ge s({\mathbf {w}})\ge \epsilon _0>0\) for some \(\epsilon _0\). In turn, this implies that, for all \({\mathbf {w}}\in {\mathcal {S}}^{n-1}\) in this set and all \(0<t\le \epsilon _0\),

To end the proof, we will show continuity of \(s({\mathbf {w}})\).

Claim \(s({\mathbf {w}})\) is continuous. \(\square \)

Proof

We will show that \(\lim _{{\mathbf {w}}_2\rightarrow {\mathbf {w}}_1}s({\mathbf {w}}_2)=s({\mathbf {w}}_1)\). To do this, we will use the continuity in \({\mathbf {w}}\) of the functions \(\Vert \text {Proj}(c_1{\mathbf {w}},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2\), \(\Vert \text {Proj}(c_1{\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2\) and \(\frac{\Vert \text {Proj}(c_1{\mathbf {w}},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c_1{\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}\), the last one whenever its denominator is nonzero. Given \({\mathbf {w}}_1\), let \(c_1=\min \{2,c({\mathbf {w}}_1)\}\).

Case 1 If \(\frac{\Vert \text {Proj}(c_1{\mathbf {w}}_1,F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c_1{\mathbf {w}}_1,T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}>1-\epsilon \), then \(c({\mathbf {w}}_1)>2\), and for all \({\mathbf {w}}_2\) sufficiently close to \({\mathbf {w}}_1\), \(\frac{\Vert \text {Proj}(c_1{\mathbf {w}}_2,F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c_1{\mathbf {w}}_2,T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}\) also exceeds \(1-\epsilon \); hence \(c({\mathbf {w}}_2)\ge 2>1\). Therefore, \(s({\mathbf {w}}_1)=s({\mathbf {w}}_2)=1\).

Case 2 Now, assume \(\frac{\Vert \text {Proj}(c_1{\mathbf {w}}_1,F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c_1{\mathbf {w}}_1,T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}=1-\epsilon \), which implies \(c_1=c({\mathbf {w}}_1)\). Using the “strict decrease” part of Lemma 15.3, for any \(\epsilon '>0\) and \(c'=c_1-\epsilon '\), \(\frac{\Vert \text {Proj}(c'{\mathbf {w}}_1,F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c'{\mathbf {w}}_1,T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}>1-\epsilon \). Then, for \({\mathbf {w}}_2\) sufficiently close to \({\mathbf {w}}_1\), \(\frac{\Vert \text {Proj}(c'{\mathbf {w}}_2,F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c'{\mathbf {w}}_2,T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}>1-\epsilon \), which implies \(c({\mathbf {w}}_2)\ge c'\). Hence, \(c({\mathbf {w}}_2)\ge c_1-\epsilon '\) for arbitrarily small \(\epsilon '>0\). Conversely, for any \(\epsilon '>0\) and \(c'=c_1+\epsilon '\), \(\frac{\Vert \text {Proj}(c'{\mathbf {w}}_1,F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c'{\mathbf {w}}_1,T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}<1-\epsilon \). Then, for \({\mathbf {w}}_2\) sufficiently close to \({\mathbf {w}}_1\), \(\frac{\Vert \text {Proj}(c'{\mathbf {w}}_2,F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{\Vert \text {Proj}(c'{\mathbf {w}}_2,T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}<1-\epsilon \), which implies \(c({\mathbf {w}}_2)\le c'\). Hence, \(c({\mathbf {w}}_2)\le c_1+\epsilon '\) for arbitrarily small \(\epsilon '>0\). Combining these, we obtain \(c({\mathbf {w}}_2)\rightarrow c({\mathbf {w}}_1)\) as \({\mathbf {w}}_2\rightarrow {\mathbf {w}}_1\). This also implies \(s({\mathbf {w}}_2)\rightarrow s({\mathbf {w}}_1)\). \(\square \)

This finishes the proof of the main statement (99). For the \(\alpha =1\) case, observe that \(\Vert {\mathbf {w}}\Vert _2=1\) and \(\Vert \text {Proj}({\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2=1\) together imply \({\mathbf {w}}\in T_{\mathcal {C}}(\mathbf {x}_0)\).

Lemma 15.3

Let \(\mathbf {x}_0\in {\mathcal {C}}\), let \({\mathbf {w}}\) have unit \(\ell _2\)-norm, and set \(l_T=\Vert \text {Proj}({\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\Vert _2\). Define the function,

Then, \(g(\cdot )\) is continuous and nonincreasing on \([0,\infty )\). Furthermore, it is strictly decreasing on the interval \([t_0,\infty )\) where \(t_0=\sup _{t} \{t>0\big |g(t)=l_T\}\).

Proof

Due to Lemma 15.1, \(g(t)\le l_T\) and from Lemma 15.2, the function is continuous at 0. Continuity at \(t\ne 0\) follows from the continuity of the projection (see Fact 10.2). Next, if \(g(t)=l_T\), using the fact that \(F_{\mathcal {C}}(\mathbf {x}_0)\) contains 0, the second statement of Lemma 15.4 gives,

From convexity, \(\text {Proj}(t'{\mathbf {w}},T_{\mathcal {C}}(\mathbf {x}_0))\in F_{\mathcal {C}}(\mathbf {x}_0)\) for all \(0\le t'\le t\). Hence, \(g(t')=l_T\). This implies \(g(t)=l_T\) for \(t\le t_0\).

Now, let \(t_1>t_0\) and \(0<t_2<t_1\). Then \(g(t_1)<l_T\), and hence, the third statement of Lemma 15.4 applies. Setting \(\alpha =\frac{t_2}{t_1}\) in Lemma 15.4, we find,

which implies the strict decrease of \(\frac{\Vert \text {Proj}(t{\mathbf {w}},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2}{t}\) over \(t\ge t_0\). \(\square \)
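The two regimes of Lemma 15.3 are easy to see in the hypothetical unit-disk example with \(\mathbf {x}_0=(1,0)\) and the inward direction \({\mathbf {w}}=(-1,0)\): here \(l_T=1\), the segment \(t{\mathbf {w}}\) stays feasible up to \(t=2\), so \(g(t)=1\) for \(t\le 2\) and \(g(t)=2/t\) afterwards, giving \(t_0=2\). The sketch below (assuming NumPy) tabulates \(g(t)=\Vert \text {Proj}(t{\mathbf {w}},F_{\mathcal {C}}(\mathbf {x}_0))\Vert _2/t\).

```python
import numpy as np

x0 = np.array([1.0, 0.0])          # boundary point of C = closed unit disk in R^2

def proj_F(u):
    """Proj(u, F_C(x0)) where F_C(x0) = C - x0."""
    y = x0 + u
    r = np.linalg.norm(y)
    return (y if r <= 1.0 else y / r) - x0

def g(t, w):
    """g(t) = ||Proj(t w, F_C(x0))||_2 / t for t > 0."""
    return np.linalg.norm(proj_F(t * w)) / t

w = np.array([-1.0, 0.0])          # inward normal: l_T = ||Proj(w, T_C(x0))||_2 = 1
ts = np.linspace(0.25, 4.0, 16)    # 0.25, 0.5, ..., 4.0
for t in ts:
    print(f"t={t:.2f}  g(t)={g(t, w):.4f}")
```

The output is flat at \(l_T\) up to \(t_0\) and strictly decreasing beyond it.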

For the rest of the discussion, given three points A, B, C in \({\mathbb {R}}^n\), the angle induced by the lines AB and BC will be denoted by \(A\hat{B}C\).

Lemma 15.4

Let \({\mathcal {K}}\) be a closed and convex set in \({\mathbb {R}}^n\) that includes 0. Let \(\mathbf {z}\in {\mathbb {R}}^n\) and \(0<\alpha <1\) be arbitrary, and let \(\mathbf {p}_1=\text {Proj}(\mathbf {z},{\mathcal {K}})\) and \(\mathbf {p}_2=\text {Proj}(\alpha \mathbf {z},{\mathcal {K}})\). Denote the points with coordinates \(0,\mathbf {p}_1,\mathbf {p}_2,\mathbf {z}\) by \(O, P_1,P_2\) and Z, respectively. Then,

-

\(Z\hat{P_1}O\) is either a right angle or obtuse.

-

If \(Z\hat{P_1}O\) is a right angle, then \(\mathbf {p}_1=\frac{\mathbf {p}_2}{\alpha }=\text {Proj}(\mathbf {z},T_{\mathcal {K}}(0))\).

-

If \(Z\hat{P_1}O\) is obtuse, then \(\Vert \mathbf {p}_1\Vert _2<\frac{\Vert \mathbf {p}_2\Vert _2}{\alpha }\le \Vert \text {Proj}(\mathbf {z},T_{\mathcal {K}}(0))\Vert _2\).
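A quick randomized check of the first statement and of the (non-strict) norm chain in the last statement, for the hypothetical choice \({\mathcal {K}}=[0,1]^n\): here 0 is a vertex of \({\mathcal {K}}\), \(T_{\mathcal {K}}(0)={\mathbb {R}}^n_+\), and all projections are coordinatewise clipping. The names below are illustrative (assuming NumPy); the check tests the inequalities, not the strictness in the obtuse case.

```python
import numpy as np

def proj_K(u):
    """Projection onto the box K = [0, 1]^n, which contains 0 as a vertex."""
    return np.clip(u, 0.0, 1.0)

def proj_T(u):
    """Projection onto T_K(0) = R^n_+."""
    return np.maximum(u, 0.0)

def check_lemma(trials, seed, n=6, tol=1e-12):
    """Count random (z, alpha) pairs that satisfy both checks of Lemma 15.4."""
    rng = np.random.default_rng(seed)
    passed = 0
    for _ in range(trials):
        z = 3.0 * rng.standard_normal(n)
        alpha = rng.uniform(0.05, 0.95)
        p1, p2 = proj_K(z), proj_K(alpha * z)
        not_acute = np.dot(z - p1, 0.0 - p1) <= tol        # angle Z P1 O is right or obtuse
        chain = (np.linalg.norm(p1) <= np.linalg.norm(p2) / alpha + tol
                 and np.linalg.norm(p2) / alpha <= np.linalg.norm(proj_T(z)) + tol)
        passed += bool(not_acute and chain)
    return passed

print(check_lemma(1000, seed=0))   # number of trials (out of 1000) passing both checks
```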

Proof

Acute angle: Assume \(Z\hat{P_1}O\) is acute. If \(Z\hat{O}P_1\) is right or obtuse, then 0 is closer to \(\mathbf {z}\) than \(\mathbf {p}_1\), which is a contradiction. If \(Z\hat{O}P_1\) is acute, then draw the perpendicular from Z to the line \(OP_1\). Since the angles at O and \(P_1\) are both acute, its foot lies on the segment \(OP_1\), hence in \({\mathcal {K}}\) by convexity, and it is closer to \(\mathbf {z}\) than \(\mathbf {p}_1\), which again is a contradiction.
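The same conclusion can also be read off from the standard variational characterization of the Euclidean projection onto a closed convex set; it is included here only as an alternative one-line check, not as the argument used above:

```latex
\mathbf{p}_1=\text{Proj}(\mathbf{z},\mathcal{K})
\iff \langle \mathbf{z}-\mathbf{p}_1,\ \mathbf{k}-\mathbf{p}_1\rangle \le 0
\quad \text{for all } \mathbf{k}\in\mathcal{K},
\qquad \text{so, with } \mathbf{k}=0\in\mathcal{K}:\quad
\langle \mathbf{z}-\mathbf{p}_1,\ \mathbf{0}-\mathbf{p}_1\rangle \le 0 .
```

A nonpositive inner product between the vectors from \(P_1\) to Z and from \(P_1\) to O means exactly that \(Z\hat{P_1}O\) is not acute (whenever \(\mathbf {p}_1\ne 0\) and \(\mathbf {p}_1\ne \mathbf {z}\), so that the angle is defined).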

Right angle: Now, assume \(Z\hat{P_1}O\) is a right angle. Using Fact 10.2, there exists a hyperplane H through \(P_1\), perpendicular to \(\mathbf {z}-\mathbf {p}_1\), that separates \(\mathbf {z}\) and \({\mathcal {K}}\). Since the angle at \(P_1\) is right, the line \(P_1O\) lies in H. Consequently, for any \(\alpha \in [0,1]\), the closest point to \(\alpha \mathbf {z}\) in \({\mathcal {K}}\) is simply \(\alpha \mathbf {p}_1\); hence, \(\mathbf {p}_2=\alpha \mathbf {p}_1\). Now, let \(\mathbf {q}_1:=\text {Proj}(\mathbf {z},T_{\mathcal {K}}(0))\). Then, \(\text {Proj}(\alpha \mathbf {z},T_{\mathcal {K}}(0))=\alpha \mathbf {q}_1\). If \(\mathbf {q}_1\ne \mathbf {p}_1\), then \(\Vert \mathbf {q}_1\Vert _2>\Vert \mathbf {p}_1\Vert _2\) since \(\Vert \mathbf {z}-\mathbf {q}_1\Vert _2<\Vert \mathbf {z}-\mathbf {p}_1\Vert _2\) and:

where the last inequality follows from the fact that \(Z\hat{P_1}O\) is not acute. Then,

which contradicts Lemma 15.2.
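If \({\mathcal {K}}\) is itself a closed convex cone, then \(T_{\mathcal {K}}(0)={\mathcal {K}}\) and, by the Moreau decomposition, \(\mathbf {z}-\mathbf {p}_1\perp \mathbf {p}_1\); so \(Z\hat{P_1}O\) is a right angle for every \(\mathbf {z}\) (whenever the angle is defined) and the right-angle statement applies. The sketch below checks this for the hypothetical choice \({\mathcal {K}}={\mathbb {R}}^n_+\) (assuming NumPy; names are illustrative).

```python
import numpy as np

def proj_orthant(u):
    """Projection onto the closed convex cone K = R^n_+; for a cone, T_K(0) = K."""
    return np.maximum(u, 0.0)

def right_angle_case_holds(z, alpha):
    """Check <z - p1, O - p1> = 0 and p1 = p2 / alpha for K = R^n_+."""
    p1 = proj_orthant(z)
    p2 = proj_orthant(alpha * z)
    right_angle = np.dot(z - p1, -p1) == 0.0     # exact here: every coordinate term is zero
    scaled = np.allclose(p2 / alpha, p1)         # p2 = alpha * p1, and p1 = Proj(z, T_K(0))
    return bool(right_angle and scaled)

rng = np.random.default_rng(4)
print(all(right_angle_case_holds(rng.standard_normal(5), rng.uniform(0.1, 0.9)) for _ in range(100)))
```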

Obtuse angle: Finally, assume \(Z\hat{P_1}O\) is obtuse. We start by reducing the problem to a two-dimensional one. Obtain \({\mathcal {K}}'\) by projecting the set \({\mathcal {K}}\) onto the 2D plane through the points \(Z,P_1\) and O. Now, let \(\mathbf {p}_2'=\text {Proj}(\alpha \mathbf {z},{\mathcal {K}}')\). Due to the projection, we still have

and \(\Vert \mathbf {p}_2'\Vert _2\le \Vert \mathbf {p}_2\Vert _2\). Next, we will prove that \(\Vert \mathbf {p}_2'\Vert _2>\Vert \alpha \mathbf {p}_1\Vert _2\) to conclude. Figure 7 illustrates the approach. Let the line \(UP_1\) be perpendicular to \(ZP_1\), and assume it crosses ZO at S. Let \(Z'\) be the point corresponding to \(\alpha \mathbf {z}\), and let \(P'Z'\) be parallel to \(P_1Z\); observe that \(P'\) corresponds to \(\alpha \mathbf {p}_1\). Let H be the intersection of \(P'Z'\) and \(P_1U\), and denote the point corresponding to \(\mathbf {p}_2'\) by \(P_2'\). Observe that \(P_2'\) satisfies the following:

-

\(P_1\) is the closest point to Z in \({\mathcal {K}}\); hence, \(P_2'\) lies to the left of \(P_1U\) (on the same side as O).

-

\(P_2\) is the closest point to \(Z'\) in \({\mathcal {K}}\). Hence, \(Z'\hat{P_2}P_1\) is not acute; otherwise, we could draw a perpendicular from \(Z'\) to \(P_2P_1\) and obtain a shorter distance. This also implies that \(Z'\hat{P_2'}P_1\) is not acute. The reason is that, due to the projection, \(|Z'P_2'|\le |Z'P_2|\) and \(|P_2'P_1|\le |P_2P_1|\), and hence,

$$\begin{aligned} |Z'P_1|^2\ge |Z'P_2|^2+|P_2P_1|^2\ge |Z'P_2'|^2+|P_2'P_1|^2. \end{aligned}\tag{100}$$

-

\(P_2'\) has to lie below or on the line \(OP_1\); otherwise, the perpendicular from \(Z'\) to \(OP_1\) would yield a shorter distance than \(|P_2'Z'|\).

-

\(\mathbf {p}_2\ne \alpha \mathbf {p}_1\). To see this, note that \(Z'\hat{P'}O\) is obtuse. Let \(\mathbf {q}\in {\mathbb {R}}^n\) be the projection of \(\alpha \mathbf {z}\) onto the line \(\{c\mathbf {p}_1\big |c\in {\mathbb {R}}\}\), and let the point Q correspond to \(\mathbf {q}\). If Q lies between O and \(P_1\), then \(\mathbf {q}\in {\mathcal {K}}\) and \(|QZ'|<|P'Z'|\). Otherwise, \(P_1\) lies between Q and \(P'\); hence, \(|P_1Z'|<|P'Z'|\) with \(\mathbf {p}_1\in {\mathcal {K}}\). This implies \(P_2,P_2'\ne P'\).

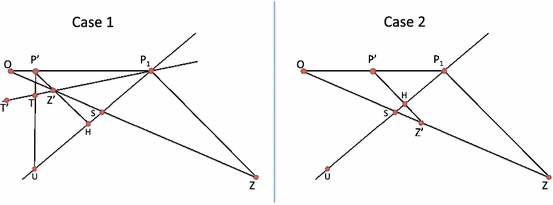

Fig. 7 Possible configurations of the points in Lemma 15.4

Based on these observations, we investigate the problem in the two cases illustrated in Fig. 7.

Case 1 (S lies on \(Z'Z\)): Consider the left-hand side of Fig. 7. If \(P_2'\) lies to the right of \(P'U\), then \(|P_2'O|> |P'O|\), which is what we wanted.

If \(P_2'\) lies in the region \(OP'TT'\), then \(P_1\hat{P}_2'Z'\) is acute, as \(P_1\hat{Z}'P_2'>P_1\hat{Z}'P'\) is obtuse; this contradicts (100).

If \(P_2'\) lies in the remaining region \(T'TU\), then \(Z'\hat{P}_2'P_1\) is again acute. The reason is that \(P'_2\hat{Z}'P_1\) is obtuse, as follows:

Case 2 (S lies on \(OZ'\)): Consider the right-hand side of Fig. 7. Due to the location restrictions above, \(P_2'\) lies either in the triangle \(P_1P'H\) or in the region \(OP'HU\). If it lies in \(P_1P'H\), then \(O\hat{P'}P_2'\ge O\hat{P'}H\), so \(O\hat{P'}P_2'\) is obtuse; since \(P'\ne P_2'\), this implies \(|OP_2'|>|OP'|\).

If \(P_2'\) lies in \(OP'HU\), then \(P_1\hat{P}_2'Z'<P_1\hat{H}Z'=\frac{\pi }{2}\); hence, \(P_1\hat{P}'_2Z'\) is acute, which contradicts (100).

In all cases, we end up with \(|OP_2'|>|OP'|\) which implies \(\Vert \mathbf {p}_2\Vert _2\ge \Vert \mathbf {p}_2'\Vert _2>\alpha \Vert \mathbf {p}_1\Vert _2\) as desired.

Finally, apply Lemma 15.1 on \(\alpha \mathbf {z}\) to upper bound \(\Vert \mathbf {p}_2\Vert _2\) by \(\alpha \Vert \text {Proj}(\mathbf {z},T_{\mathcal {K}}(0))\Vert _2\). \(\square \)


Cite this article

Oymak, S., Hassibi, B. Sharp MSE Bounds for Proximal Denoising. Found Comput Math 16, 965–1029 (2016). https://doi.org/10.1007/s10208-015-9278-4


DOI: https://doi.org/10.1007/s10208-015-9278-4

Keywords

- Convex optimization

- Proximity operator

- Structured sparsity

- Statistical estimation

- Model fitting

- Stochastic noise

- Linear inverse

- Generalized LASSO