Abstract

In this paper, a novel neighborhood based document smoothing model for information retrieval is proposed. Lexical association between terms is used to assign a context sensitive indexing weight to the document terms, i.e. the term weights are redistributed according to their lexical association with the context words. A generalized retrieval framework is presented, and it is shown that the vector space model (VSM), divergence from randomness (DFR), Okapi Best Matching 25 (BM25) and language model (LM) based retrieval frameworks are special cases of this generalized framework. Because it is formulated in the generalized retrieval framework, the neighborhood based document smoothing model is applicable to all indexing models that use the term-document frequency scheme. At runtime, the proposed smoothing model is as efficient as the baseline retrieval frameworks. Experiments over the TREC datasets show that the neighborhood based document smoothing model consistently improves the retrieval performance of VSM, DFR, BM25 and LM, and that the improvements are statistically significant.

1 Introduction

Information retrieval is broadly concerned with the problem of organizing collections of documents such that a manageable number can be selected to support various information requests by users. To accomplish this task, some kind of representation is needed for each document in the collection. Most traditional information retrieval systems, including the Vector Space Model (VSM) (Salton et al. 1975), the Robertson-Sparck-Jones probabilistic model (Robertson et al. 1981; Robertson and Sparck Jones 1988; Jones et al. 2000), inference networks (Turtle and Croft 1990), the multinomial model (Kalt 1998) and the language model (Ponte and Croft 1998), are keyword based. Among these models, the multinomial model assumes independence even between multiple occurrences of a word in a document, while the other models assume that the occurrences of different words in a document are independent. Though these approaches are reasonably simple and easy to use, their inherent independence assumption prevents them from representing the semantics of the content.

Currently, most conventional models use very elementary document features to assign an indexing weight to the document terms. These features include the term frequency, the document length and the occurrence of a term in a background corpus. However, the indexing weight remains independent of the other terms appearing in the document, so the context in which the term occurs is ignored when its indexing weight is assigned. The result is context independent document indexing.

This paper addresses this issue by developing an effective neighborhood based document smoothing scheme, which gives a context sensitive indexing weight to the terms in a document. A document contains both content-carrying (topical) terms and background (non-topical) terms. The content-carrying words in a document will be highly associated with each other, while the background terms will have a very low association with the other terms in the document. In this paper, the association between terms is captured by corpus analysis. The proposed smoothing modifies the indexing weight of a term in a document by using its combined association with all the context words. Using a theoretical analysis, it is established that the proposed neighborhood based smoothing decreases the retrieval risk of a non-topical term with respect to a topical term. Since the proposed smoothing model does not use document expansion, it is as efficient as the baseline models in terms of storage and runtime computational complexity, and it consistently improves retrieval precision, with statistically significant improvements.

Additionally, most existing semantic smoothing methods are specific to a certain retrieval framework (language modeling in particular). In this paper, a generalized retrieval framework is presented, and it is shown that the VSM, DFR, BM25 and LM retrieval frameworks can be recovered as special cases of this model. Because it is built on the generalized retrieval framework, the proposed smoothing model is applicable to all term-frequency indexing schemes. Experiments over the TREC datasets show that it consistently improves the performance of all these retrieval frameworks across various datasets and query lengths.

The paper is organized as follows. Section 2 discusses related work in information retrieval and positions the proposed model with respect to recently proposed smoothing models for language model based information retrieval. A generalized retrieval framework for the vector space model, DFR, Okapi BM25 and the language model is presented in Sect. 3. The proposed document smoothing model is described in Sect. 4, where it is shown that the model decreases the risk of retrieving topically unrelated words. Evaluation results on the TREC datasets are reported and discussed in Sect. 5. Conclusions and suggestions for future work are provided in Sect. 6.

2 Related work

Measuring the relevance between a document and a query has long been a fundamental problem in the field of information retrieval. The information retrieval task requires representations of the document and of the user query such that:

- The representation allows real-time search to be computationally efficient.
- The representation minimizes information loss.

Several models have been proposed for indexing a document. For s indexing terms, the vector space model (Salton et al. 1975; Wong et al. 1985) represents both a document and a query as s-dimensional vectors, and the cosine measure is used to measure the similarity between the document and query vectors. A probabilistic indexing framework based on a 2-Poisson model was proposed by Bookstein and Swanson (1974), where the clustering property of words was used to assign an indexing weight to the terms. Robertson et al. (1981) and Robertson and Sparck Jones (1988) developed this model into Okapi best matching 25 (Okapi BM25). The 2-Poisson model makes the parametric assumption that term frequencies follow a mixture of two Poisson distributions. This assumption was relaxed by Ponte and Croft (1998) to develop the language model. The language model approach estimates a language model for each document, and the likelihood of the query under the estimated language model is used to rank the documents. Language modeling has recently become a popular IR model because of its sound theoretical basis and good empirical success (Berger and Lafferty 1999; Zhao and Yun 2009; Zhou et al. 2007). Inference networks (Turtle and Croft 1990), the multinomial model (Kalt 1998) and multivariate Bernoulli models (Losada and Azzopardi 2008) are other examples of using probabilistic distributions for document indexing. Spectral document ranking methods such as the discrete cosine transform (Park et al. 2005a) and the wavelet transform (Park et al. 2005b) have also been applied to document indexing and ranking.

A limitation of the above approaches is that the representation of a word in a document is independent of the other terms appearing in the document (context terms). Although parameters such as the document length, the document frequency and the frequency of a word in a large corpus do reflect the topicality of a term to some degree, the context terms do not affect the indexing weight of a term, and the representation of a term therefore remains independent of its context. Hence a term appearing in two different documents of the same length, with the same term frequency in both, will receive the same indexing weight, even though it might be topically related to one document and not to the other.

Some of the early attempts in the IR community to capture the dependency between words include the n-gram (or n-term) models (Song and Croft 1999; Srikanth and Srihari 2002), models based on grammar structures (Gao et al. 2004; Maisonnasse et al. 2007; Nallapati and Allan 2002) and models based on phrase-based indexing (Strzalkowski and Vauthey 1992). These models work with much more complex data structures and therefore have additional storage and computational requirements. Moreover, the improvements reported for these models are not significant. Approaches such as pseudo relevance feedback (Lavrenko and Croft 2001; Xu and Croft 1996; Xu and Croft 2000) and global query expansion (Bai and Nie 2008; Vechtomova and Karamuftuoglu 2007; Qiu and Frei 1993; Collins-Thompson and Callan 2005; Park and Ramamohanarao 2007) also address the problem of term dependencies. Query expansion techniques exploit global term co-occurrence to address the ‘term mismatch’ problem (Furnas et al. 1987): for a user query, additional terms that tend to co-occur with the query terms are added.

Latent structure models exploit the corpus structure to capture term dependencies. The latent semantic analysis (LSA) approach (Deerwester et al. 1990) uses the semantic structure of the term-document association matrix. LSA maps the high dimensional term frequency vectors into a low dimensional ‘latent semantic space’, which addresses the ‘synonymy’ and ‘polysemy’ problems of the vector space model. A statistical view of LSA led to the development of Probabilistic Latent Semantic Analysis (PLSA) (Hofmann 2001). Unfortunately, the benefit of LSA and PLSA for information retrieval has generally been demonstrated on very small document sets of 1000 to 3000 documents, and Keller and Bengio (2005) showed that PLSA produced inferior results on large document sets. However, recent experiments using LSA term correlations (LSA-TSC; Park and Ramamohanarao 2009) have shown improved performance over BM25.

PLSA does not define a generative model at the level of documents, which led to the Latent Dirichlet allocation (LDA) approach (Blei et al. 2003). The Correlated Topic Model (CTM; Blei and Lafferty 2007) was proposed to model correlations between topics. A model based on the nested Chinese restaurant process (nCRP) was recently proposed by Blei et al. (2010) to cluster documents according to the topics they share at multiple levels of abstraction. Soft clustering strategies such as LDA-based document models (Wei and Croft 2006) and cluster language models (Kurland and Lee 2009) have shown good empirical success in information retrieval experiments.

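Most of the existing literature on document smoothing has focused on the language modeling framework, where the term ‘smoothing’ refers to estimating the probability of a term \(t_j\) in a document \(D_i\). The maximum likelihood estimate (MLE) of the document model is given by

$$ p_{ml}(t_j|D_i)=\frac{f_{ij}}{|D_i|} $$(1)

where \(f_{ij}\) is the number of times \(t_j\) occurs in \(D_i\) and \(|D_i|\) is the length of \(D_i\).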

The MLE gives a zero probability to the words not appearing in the document \(D_i\); smoothing techniques are therefore used to assign a nonzero probability to unseen words and to improve the accuracy of word probability estimation. Zhai and Lafferty (2004) addressed ‘smoothing’ as adjusting the maximum likelihood estimator to compensate for data sparseness, but did not take context information into account. Zhou et al. (2007) proposed a context sensitive semantic smoothing approach that decomposes a document into topic signatures and uses these topic signatures for term smoothing. Multiword phrases and ontology concepts were used as topic signatures, and the Expectation Maximization (EM) algorithm was used to estimate the topic model for each term \(t_j\).

Hiemstra (2002) and Hiemstra et al. (2004) proposed parsimonious models for term specific smoothing, where the EM algorithm is applied to maximize the document likelihood under a two-component mixture of a background model and a topic model. The content-carrying words are expected to receive high probabilities after EM. Na et al. (2007) used this approach to develop the Parsimonious Translation Model (PTM), where only highly topical terms are selected and common terms are discarded from the estimated document model. Though parsimonious models have been shown to reduce the index size, they have not shown significant improvements in retrieval.

Cluster based smoothing models partition the dataset into clusters of similar documents and smooth the document model using the cluster that contains the document. Liu and Croft (2004) proposed the cluster based document model for information retrieval. They used k-means clustering, and a document model is smoothed by interpolating it both with the language model of the single cluster to which the document belongs and with the language model of the whole collection. The documents in the same cluster are combined and modeled as one big document. The probability of term \(t_j\) appearing in cluster \(z_k\) was calculated as \(p(t_j|z_k)=\frac{tf(t_j,z_k)}{\sum_j tf(t_j,z_k)}\), where \(tf(t_j, z_k)\) is the number of times term \(t_j\) appears in cluster \(z_k\). The original document model \(p(t_j|D_i)\) was interpolated with the cluster language model \(p(t_j|z_k)\). A novel algorithmic framework for cluster based information retrieval was proposed by Kurland and Lee (2009), in which the information provided by the document models is enhanced with information drawn from neighboring documents. For each document \(D_i\), they build a \(\bar{K}\)-element set consisting of \(D_i\) and its \(\bar{K}-1\) nearest neighbors. At retrieval time, a query dependent subset clus(D, q) of all clusters is selected. The similarity of a query q with respect to document \(D_i\) is given by

where cl denotes any cluster in the subset clus(D, q) and λ is a mixing parameter. Shakery and Zhai (2008) used a graph of documents to propagate term counts. The document graph was constructed on the top retrieved documents, so the approach can be regarded as a smoothing model based on pseudo relevance. Query specific clusters (Kurland 2009; Kurland and Domshlak 2008) are another smoothing approach, used for automatic re-ranking of top-retrieved documents.
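The cluster based smoothing of Liu and Croft (2004) described above can be illustrated with a small sketch. It assumes a simplified linear interpolation of the document, cluster and collection maximum likelihood models with hypothetical weights `lam` and `beta`; Liu and Croft's exact interpolation scheme may differ, and the toy documents and cluster assignment are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def mle(counts: Counter, term: str) -> float:
    """Maximum likelihood estimate count(term) / total count (0 for an empty model)."""
    total = sum(counts.values())
    return counts[term] / total if total else 0.0


def cluster_smoothed_prob(term: str, doc: Sequence[str], cluster: List[Sequence[str]],
                          collection: List[Sequence[str]], lam: float = 0.7,
                          beta: float = 0.5) -> float:
    """Simplified cluster-based document model: interpolate document, cluster and collection MLEs."""
    doc_model = Counter(doc)
    cluster_model = Counter(t for d in cluster for t in d)  # cluster treated as one big document
    coll_model = Counter(t for d in collection for t in d)
    return (lam * mle(doc_model, term)
            + (1.0 - lam) * (beta * mle(cluster_model, term)
                             + (1.0 - beta) * mle(coll_model, term)))


d1 = "robot soccer robot healthcare".split()
d2 = "robot mobile research autonomous".split()
d3 = "fifa soccer field germany".split()
collection = [d1, d2, d3]
cluster_of_d1 = [d1, d2]  # hypothetical cluster assignment for d1
```

A term such as ‘mobile’, which is absent from `d1` but present in its cluster, receives a higher probability than ‘fifa’, which occurs only elsewhere in the collection; both receive a nonzero probability, unlike under the MLE of Eq. 1.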

The idea of document expansion was proposed by Singhal and Pereira (1999) for speech retrieval. Cluster based smoothing models (Tao et al. 2006; Liu and Croft 2004; Kurland and Lee 2009) also expand the document model with words found in neighboring documents. The statistical translation model was proposed by Berger and Lafferty (1999), where terms in a document are mapped to the query terms. They used ‘synthetic queries’ to train the translation model. The model was computationally expensive, and its training procedure required a large number of query-document pairs with no guarantee that every query word would be covered. Karimzadehgan and Zhai (2010) proposed a mutual information based approach as a more efficient way to estimate the translation model.

In this paper, we use the term ‘lexical association’ to mean the global co-occurrence measure between two terms (co-occurrence in a large dataset), and, for a document term, ‘term context’ refers to the terms co-occurring in that document only. We use the term ‘smoothing’ in the sense of redistributing the original indexing weights of the document terms, as obtained from any of the traditional retrieval frameworks, without document expansion, such that the weights of the topical terms in a document are increased and the weights of the non-topical terms are decreased. The neighborhood based document smoothing (NBDS) model presented in this paper differs from earlier models in that it reallocates the indexing weights of the terms in a document according to the cumulative effect of their lexical association with all the context words, as opposed to only the context insensitive topicality of the term (Hiemstra 2002; Hiemstra et al. 2004; Na et al. 2007) or the neighboring documents (Tao et al. 2006; Kurland and Lee 2009; Shakery and Zhai 2008). Note that, unlike the translation model (Berger and Lafferty 1999; Karimzadehgan and Zhai 2010), the proposed NBDS model does not use document expansion, and it is one of the very few smoothing models applicable to all retrieval frameworks based on term-document frequency schemes. Since the NBDS model does not use document expansion, it is as efficient as the baseline models (LM and Okapi BM25, for instance) in terms of run-time storage and computational requirements, and it significantly enhances retrieval performance without using any top retrieved documents, soft/hard clustering, query expansion or document model expansion.

3 A generalized retrieval framework

As discussed in the introduction, one of the motivations of this article is to propose a document smoothing model that is applicable to all term-document frequency schemes. Existing approaches to document smoothing have focused on the language model based retrieval framework and cannot, in theory, be applied to other retrieval frameworks such as BM25 or VSM. A generalized framework makes it possible to develop a framework-independent document smoothing scheme.

We consider a set of N documents. Let these documents contain s unique words, which are used to index the documents and are therefore called ‘index terms’. Let \(T=\{t_1,t_2,\ldots,t_s\}\) be the set of these index terms and let \(D=\{D_1,D_2,\ldots,D_N\}\) be the set of N documents. A document \(D_i\) is represented in the generalized retrieval framework as:

$$ D_i=(t_{i1},t_{i2},\ldots,t_{is}) $$

Each \(t_{ij}\) is a real number characterizing the weight of term \(t_j\) in \(D_i\). These weights are defined separately for each retrieval framework. We also assume a general query representation for this framework: a query q is represented by the term frequencies of all s index terms in the query, i.e.

$$ q=(t_{q1},t_{q2},\ldots,t_{qs}) $$

where \(t_{qi}\) is the term frequency of index term \(t_i\) in the query q.

In the generalized retrieval framework, the following lemma can be stated:

Lemma 1

For a suitably defined document representation \(D_i\) for any of the considered retrieval frameworks (VSM, DFR, BM25 or LM), the similarity of a document \(D_i\) to a query q can be given by:

$$ sim(D_i,q)=D_i\,q'=\sum_{j=1}^{s}t_{ij}\,t_{qj} $$

where \(t_{ij}\) and \(t_{qj}\) represent the weights of term \(t_j\) in the document \(D_i\) and in the query q respectively, in the generalized retrieval framework, and q′ represents the transpose of the query vector. The indexing weight \(t_{ij}\) can be defined separately for each term-document frequency scheme.
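The scoring rule of Lemma 1 is a plain inner product, so every framework-specific detail lives in how \(t_{ij}\) is computed. The sketch below shows that rule on a toy weight matrix; the function names and the weights are hypothetical and serve only to illustrate the ranking step.

```python
from typing import List, Sequence


def generalized_similarity(doc_weights: Sequence[float], query_tf: Sequence[float]) -> float:
    """sim(D_i, q) = D_i q' = sum_j t_ij * t_qj over the s index terms."""
    if len(doc_weights) != len(query_tf):
        raise ValueError("document and query vectors must both have s components")
    return sum(t_ij * t_qj for t_ij, t_qj in zip(doc_weights, query_tf))


def rank_documents(doc_matrix: Sequence[Sequence[float]],
                   query_tf: Sequence[float]) -> List[int]:
    """Return document indices ordered by decreasing generalized similarity."""
    scores = [generalized_similarity(d, query_tf) for d in doc_matrix]
    return sorted(range(len(doc_matrix)), key=lambda i: scores[i], reverse=True)


# Hypothetical weights t_ij for N = 2 documents over s = 3 index terms.
docs = [[0.5, 0.0, 1.2],
        [0.9, 0.4, 0.0]]
query = [1, 0, 1]  # term frequencies t_qj
ranking = rank_documents(docs, query)  # document 0 scores 1.7, document 1 scores 0.9
```

Swapping in VSM, PNM, PL2, BM25 or LM weights for `docs` changes only how the matrix is filled, not the ranking code.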

As a first step, conventional term-frequency based indexing schemes in information retrieval are considered. These are enumerated below:

3.1 Vector space model (VSM)

In VSM, the degree of match between a query and a document is obtained by comparing the query and document vectors. Given a document \(D_i\) and a query q, the cosine similarity measure \(SIM(D_i, q)_{VSM}\) is defined as:

where \(f_{ij}\) is the frequency with which term \(t_j\) occurs in document \(D_i\), N is the number of documents in the collection and \(N_j\) is the number of documents in which the term \(t_j\) occurs at least once. \(N_j\) is also called the document frequency of term \(t_j\).

In the generalized retrieval framework, let us denote the document indexing and the indexing weight corresponding to VSM by \(D^V_i\) and \(t_{ij}=w^V_{ij}\) respectively, with \(w^V_{ij}\) given by:

Using Eq. 6 for VSM, the similarity of \(D_i\) to query q is:

Equation 10 represents the same ranking as Eq. 9, since it is obtained by dividing Eq. 11 by a term \(\sqrt{\sum_{j=1}^s {t^2}_{qj}}, \) which is constant for a given query and will not affect the ranking.
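The division argument can be checked numerically. The sketch below assumes the common tf-idf weighting \(f_{ij}\log(N/N_j)\) inside the cosine measure and raw query term frequencies \(t_{qj}\); the paper's exact VSM weighting is not reproduced here and may differ. It shows that the generalized score with document-normalized weights, divided by the constant query norm, equals the cosine score.

```python
import math
from typing import List, Sequence


def tfidf(freqs: Sequence[int], doc_freqs: Sequence[int], n_docs: int) -> List[float]:
    # Assumed weighting f_ij * log(N / N_j); zero for terms absent from the document.
    return [f * math.log(n_docs / df) if f > 0 else 0.0 for f, df in zip(freqs, doc_freqs)]


def norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in v))


def vsm_weights(freqs: Sequence[int], doc_freqs: Sequence[int], n_docs: int) -> List[float]:
    """w^V_ij: tf-idf weights divided by the Euclidean norm of the document vector."""
    v = tfidf(freqs, doc_freqs, n_docs)
    n = norm(v)
    return [x / n for x in v] if n > 0 else v


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))


doc_freqs, n_docs = [1, 3, 2], 4   # hypothetical N_j values and N
doc = [2, 0, 1]                    # hypothetical f_ij
query = [1, 1, 0]                  # t_qj
generalized = sum(w * q for w, q in zip(vsm_weights(doc, doc_freqs, n_docs), query))
# Dividing by the query norm (constant for a given query) recovers the cosine score.
recovered = generalized / norm(query)
```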

The Pivoted Normalization Method (PNM; Singhal et al. 1996) has been shown to be one of the best performing vector space models. The PNM formula for measuring the similarity between a document \(D_i\) and a user query q is:

In the generalized retrieval framework, let \(D^P_i\) and \(t_{ij}=w^P_{ij}\) denote the document indexing and indexing weights corresponding to PNM, with \(w^P_{ij}\) given by:

It is straightforward to show that, in the generalized retrieval framework, the similarity of \(D_i\) to query q given by \(sim(D^P_i, q)\) (Eq. 6) yields the same ranking as Eq. 12.
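A minimal sketch of a PNM document weight follows, assuming the form usually cited for pivoted normalization: dampened term frequency \(1+\ln(1+\ln f_{ij})\), pivoted length normalization and \(\ln((N+1)/N_j)\) as the inverse document frequency. The exact Eq. 13 is not reproduced here. The pivot slope is named `slope` (hypothetical name) because s already denotes the number of index terms.

```python
import math


def pnm_weight(f: int, doc_len: float, avg_doc_len: float, n_docs: int, df: int,
               slope: float = 0.2) -> float:
    """Assumed w^P_ij for the pivoted normalization method (zero if f_ij = 0)."""
    if f == 0:
        return 0.0
    tf_part = 1.0 + math.log(1.0 + math.log(f))                 # dampened term frequency
    length_norm = (1.0 - slope) + slope * doc_len / avg_doc_len  # pivoted length normalization
    idf = math.log((n_docs + 1) / df)
    return tf_part / length_norm * idf


# Same term frequency, different document lengths (hypothetical values).
short_doc = pnm_weight(3, doc_len=50, avg_doc_len=100, n_docs=1000, df=10)
long_doc = pnm_weight(3, doc_len=200, avg_doc_len=100, n_docs=1000, df=10)
```

Because the query side of PNM only multiplies by the query term frequency, summing `pnm_weight(...) * t_qj` over the query terms is exactly the generalized score of Eq. 6.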

3.2 Divergence from randomness (DFR) framework

Amati and Van Rijsbergen (2002) proposed probabilistic models of IR based on a divergence from randomness (DFR) framework. The PL2 method (Poisson model with Laplace after-effect and normalization 2) is a representative retrieval function of the DFR model. The PL2 model measures the informative content of a term by computing the divergence of its term frequency distribution from a distribution obtained through a random process. The similarity between a document and a query, as given by the PL2 method, is:

where \(fn_{ij}=f_{ij}\times \log(1+g\frac{avgDl}{|D_i|})\), \(\upsilon_j=\frac{N}{\sum_i f_{ij}}\), and g is a retrieval parameter.

In the generalized retrieval framework, let \(D^D_i\) and \(t_{ij}=w^D_{ij}\) denote the document indexing and indexing weights corresponding to the DFR framework (PL2 specifically), with \(w^D_{ij}\) given by:

Using Eq. 6 for the PL2 method, the similarity of \(D_i\) to query q is:

where Eq. 17 follows from Eq. 15.
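The following sketch computes a PL2 document weight from the quantities defined above (\(fn_{ij}\), \(\upsilon_j\), g). It assumes the form of PL2 commonly given in the literature, with base-2 logarithms, a Stirling approximation of the Poisson informative content and the Laplace after-effect \(1/(fn_{ij}+1)\); the exact expressions of Eqs. 14 and 15 are not reproduced here and may differ in detail.

```python
import math

LOG2_E = math.log2(math.e)


def pl2_weight(f: int, doc_len: float, avg_doc_len: float, n_docs: int,
               coll_freq: int, g: float = 1.0) -> float:
    """Assumed w^D_ij for PL2; coll_freq is sum_i f_ij, the collection frequency of t_j."""
    if f == 0:
        return 0.0
    tfn = f * math.log2(1.0 + g * avg_doc_len / doc_len)  # normalization 2: fn_ij
    upsilon = n_docs / coll_freq                           # upsilon_j = N / sum_i f_ij
    info = (tfn * math.log2(tfn * upsilon)                 # Poisson informative content
            + LOG2_E * (1.0 / upsilon - tfn)
            + 0.5 * math.log2(2.0 * math.pi * tfn))
    return info / (tfn + 1.0)                              # Laplace after-effect
```

With a document of average length and g = 1, \(fn_{ij}=f_{ij}\), so for a rare term the weight grows with the term frequency while the after-effect keeps the growth sublinear.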

3.3 Okapi BM25

The Okapi best matching 25 (BM25) (Jones et al. 2000) approach is based on the probabilistic retrieval framework developed in the 1970s and 1980s by Robertson et al. (1981). The BM25 formula used for measuring the similarity between a user query q and a document D i is:

where N is the number of documents in the collection, \(N_j\) is the number of documents containing term \(t_j\), \(f_{ij}\) is the frequency with which term \(t_j\) occurs in document \(D_i\), avgDl is the average document length, and \(k_1\) and b are scalar parameters that are constant for a given dataset. \(t_{qj}\) is the frequency of term \(t_j\) in the query q, |q| is the number of terms in the query and \(|D_i|\) denotes the length of the document \(D_i\). R is the number of relevant documents for which relevance judgments are available, and \(r_j\) is the number of documents in the set R that contain the term \(t_j\).

If no relevance information is available, R and \(r_j\) are set to 0 and Eq. 19 reduces to:

Let us denote the generalized retrieval framework based document indexing and the indexing weight corresponding to Okapi BM25 by \(D^B_i\) and \(t_{ij}=w^B_{ij}\) respectively, with \(w^B_{ij}\) given by:

Using Eq. 6 for BM25, the similarity of \(D_i\) to query q is:

where Eq. 23 follows from Eq. 21. Equation 24 represents the same ranking as that of Eq. 23, since it is obtained by dividing Eq. 23 by a term |q|, which is constant for a given query and does not affect the ranking.
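A sketch of the BM25 document weight follows, assuming the standard Robertson-Sparck Jones term weight with relevance counts R and \(r_j\), and the usual saturating, length-normalized term frequency component. With the defaults R = r = 0 the term weight reduces to \(\log\frac{N-N_j+0.5}{N_j+0.5}\), mirroring the reduction from Eq. 19 to Eq. 20. The parameter defaults are illustrative, not values taken from this paper.

```python
import math


def rsj_weight(n_docs: int, df: int, R: int = 0, r: int = 0) -> float:
    """Robertson-Sparck Jones term weight; with R = r = 0 it is log((N - N_j + 0.5)/(N_j + 0.5))."""
    return math.log(((r + 0.5) / (R - r + 0.5)) /
                    ((df - r + 0.5) / (n_docs - df - R + r + 0.5)))


def bm25_weight(f: int, doc_len: float, avg_doc_len: float, n_docs: int, df: int,
                k1: float = 1.2, b: float = 0.75, R: int = 0, r: int = 0) -> float:
    """Assumed w^B_ij: RSJ weight times the saturated, length-normalized term frequency."""
    if f == 0:
        return 0.0
    K = k1 * ((1.0 - b) + b * doc_len / avg_doc_len)
    return rsj_weight(n_docs, df, R, r) * (k1 + 1.0) * f / (K + f)
```

The term frequency component approaches \(k_1+1\) as \(f_{ij}\) grows, so repeated occurrences have diminishing returns; note that the weight becomes negative for terms occurring in more than half of the documents.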

3.4 Language modeling (LM)

In the language modeling framework, a query is considered to be “generated” by a probabilistic model estimated from a document. Many different language models have been proposed to smooth the probabilities of the words in a document. For instance, the Dirichlet method (Mackay and Peto 1994) uses Bayesian smoothing with Dirichlet priors, and the smoothing function is given by:

$$ p_{\mu}(t_j|D_i)=\frac{|D_i|\,p_{ml}(t_j|D_i)+\mu\,p(t_j|C)}{|D_i|+\mu}=\frac{f_{ij}+\mu\,p(t_j|C)}{|D_i|+\mu} $$(26)

where Eq. 26 follows from Eq. 1, \(p(\cdot|C)\) is the collection language model and μ is the Dirichlet prior parameter. The Jelinek-Mercer method (Jelinek and Mercer 1980) uses a linear interpolation of the maximum likelihood model with the background collection model, with the smoothing function given by:

$$ p_{\lambda}(t_j|D_i)=(1-\lambda)\,p_{ml}(t_j|D_i)+\lambda\,p(t_j|C) $$(27)

where λ is the Jelinek-Mercer smoothing parameter. In the two-stage language model (TSLM; Zhai and Lafferty 2004), a document language model is smoothed using a Dirichlet prior in the first stage and further smoothed using Jelinek-Mercer in the second stage. The combined smoothing function is given by

$$ p_{\mu,\lambda}(t_j|D_i)=(1-\lambda)\frac{f_{ij}+\mu\,p(t_j|C)}{|D_i|+\mu}+\lambda\,p(t_j|C) $$(28)

In the language modeling framework, the query likelihood is generalized to the negative KL divergence between the query model and the document language model:

$$ -KL(q\,\|\,D_i)\stackrel{rank}{=}\sum_{j=1}^{s}p(t_j|q)\log p(t_j|D_i) $$(29)

\(p(t_j|q)\) is the query model for user query q and \(p(t_j|D_i)\) is the document language model for the document \(D_i\). The document language model is estimated by any of the smoothing models (Eqs. 26, 27 or 28). The ranking given by Eq. 29 is exactly the same as that of the query likelihood \(p(q|D_i)\) when the maximum likelihood estimate (MLE) is used for the query model.

Let us denote the generalized retrieval framework based document indexing and the indexing weight corresponding to the two-stage language model by \(D^L_i\) and \(t_{ij}=w^L_{ij}\) respectively, with \(w^L_{ij}\) given by:

Using Eq. 6 for LM, the similarity of \(D_i\) to query q is:

Equation 32 is obtained using Eq. 30. Equation 33 is obtained by adding the term \(\sum_j t_{qj}\log(\lambda\,p(t_j|C))\) to Eq. 32; this term is constant for a given query and does not affect the ranking.

Similarly, it can be shown that using \(t_{ij}=w^{Dir}_{ij}\) and \(t_{ij}=w^{JM}_{ij}\) in the generalized retrieval framework gives the same rankings as the Dirichlet and Jelinek-Mercer smoothing based language models respectively, where \(w^{Dir}_{ij}\) and \(w^{JM}_{ij}\) are given by:

From Eqs. 11, 18, 25 and 34, the proof of Lemma 1 is complete for the VSM, DFR, BM25 and LM based retrieval frameworks respectively.
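The language model case can be checked with a short sketch. Eq. 30 itself is not reproduced in the text, so the weight used below, \(w^L_{ij}=\log p_{\mu,\lambda}(t_j|D_i)-\log(\lambda\,p(t_j|C))\), is an assumption chosen to agree with the remark that adding \(\sum_j t_{qj}\log(\lambda\,p(t_j|C))\) recovers the query log-likelihood. The collection model and toy documents are hypothetical.

```python
import math
from typing import Sequence


def p_two_stage(f: float, doc_len: float, p_coll: float,
                mu: float = 2000.0, lam: float = 0.1) -> float:
    """Eq. 28: Dirichlet smoothing (mu) followed by Jelinek-Mercer interpolation (lam)."""
    return (1.0 - lam) * (f + mu * p_coll) / (doc_len + mu) + lam * p_coll


def w_lm(f: float, doc_len: float, p_coll: float, mu: float = 2000.0, lam: float = 0.1) -> float:
    """Assumed w^L_ij = log p(t_j|D_i) - log(lam * p(t_j|C)); requires lam > 0."""
    return math.log(p_two_stage(f, doc_len, p_coll, mu, lam)) - math.log(lam * p_coll)


def generalized_score(tf: Sequence[int], doc_len: float, p_coll: Sequence[float],
                      query_tf: Sequence[int]) -> float:
    return sum(q * w_lm(f, doc_len, pc) for f, pc, q in zip(tf, p_coll, query_tf))


def log_query_likelihood(tf: Sequence[int], doc_len: float, p_coll: Sequence[float],
                         query_tf: Sequence[int]) -> float:
    return sum(q * math.log(p_two_stage(f, doc_len, pc))
               for f, pc, q in zip(tf, p_coll, query_tf))


p_coll = [0.01, 0.002, 0.05]           # hypothetical collection model p(t_j|C)
doc_a, doc_b = ([3, 0, 1], 100), ([0, 2, 5], 80)
query = [1, 1, 0]
```

The two scores differ only by a document-independent constant, so they rank documents identically. Setting `lam=0` in `p_two_stage` gives Dirichlet smoothing (Eq. 26) and `mu=0` gives Jelinek-Mercer smoothing (Eq. 27); for those cases the log-probability itself can serve as the weight.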

4 Neighborhood based document smoothing (NBDS) model

4.1 Notations and definitions

Before going into the mathematical development, we define the notation. The generalized indexing scheme is used, and \(D_i\) (an s-dimensional vector) corresponds to the original indexing weights of the document. The retrieval influence of term \(t_j\) on the document \(D_i\) is given by \(t_{ij}\), which is consistent with the generalized retrieval framework when a binary weight is assigned to each query term. The lexical association matrix is denoted by A (an s × s matrix), such that \(A_{jk}\) represents the association strength between the terms \(t_j\) and \(t_k\). The document representation obtained after NBDS is denoted by \(D^N_i\), with \(t^N_{ij}\) representing the weight of term \(t_j\) in \(D_i\) after smoothing. α and β denote the smoothing parameters.

4.2 Motivation

A text document contains both topically related (content-carrying) terms and background terms, which do not convey semantic information about the document. We refer to these as ‘topical’ and ‘non-topical’ terms respectively. To motivate the discussion, let us consider two arbitrary documents \(D_1\) and \(D_2\), containing 6 terms each, as described below:

The first document is about research on ‘robotics’ and its applications, such as ‘healthcare’ and ‘soccer’. The second document is related to the FIFA World Cup, and the word ‘robot’ might have appeared in the sense of ‘played like a robot’. Let us concentrate on the word ‘robot’ in both documents. With any of the traditional indexing schemes, ‘robot’ will receive approximately the same indexing weight in both documents. However, it is topically related to the first document and is a background term in the second. Therefore, by our definitions, it is a ‘topical’ term in \(D_1\) and a ‘non-topical’ term in \(D_2\). The term ‘soccer’, on the other hand, is topically related to both documents and is a ‘topical’ term in both \(D_1\) and \(D_2\).

Before developing an algorithm for identifying the ‘topical’ and ‘non-topical’ terms, it is important to reflect on how we decide that the term ‘robot’ is ‘topical’ in \(D_1\) and ‘non-topical’ in \(D_2\). In \(D_1\), the term ‘robot’ appears with many other terms, such as ‘healthcare, mobile, research, autonomous’, which are associated with it. In \(D_2\), however, the term ‘robot’ seems to be related only to the term ‘soccer’ and not to other terms such as ‘field, fifa, germany’. Our knowledge of ‘word association’ is based on the knowledge we have acquired about the world. For computational purposes, this knowledge can be discovered by corpus analysis. Lexical association measures based on corpus analysis have served this purpose in many natural language understanding applications, such as word classification (Morita et al. 2004), knowledge acquisition (Yoshinari et al. 2008), word sense disambiguation (Andreopoulos et al. 2008) and word clustering (Li 2002).

Now consider an arbitrary user query q = {robot, soccer, application}. The user wants documents about robots being used in soccer applications. Under the traditional indexing schemes, the relevance of \(D_1\) and \(D_2\) to q will be nearly the same, and \(D_2\) might appear before \(D_1\) in the results shown to the user.

The example above illustrates the problem under consideration. Given a document, is it possible to identify its ‘topical’ and ‘non-topical’ terms? Once these terms have been identified, indexing weight can be transferred from the ‘non-topical’ terms to the ‘topical’ terms. In the example, if the indexing weight of the term ‘robot’ can be decreased in \(D_2\) and increased in \(D_1\), the document \(D_1\) will appear before \(D_2\) in the search results for the considered query.

4.3 Neighborhood based document smoothing (NBDS)

As discussed in Sect. 4.2, lexical association can be used to identify ‘topical’ and ‘non-topical’ terms. Let us consider the term ‘robot’ again. Once the lexical association has been calculated, ‘robot’ will have a higher association with terms such as ‘healthcare, mobile, research, autonomous’ than with terms such as ‘field, fifa, germany’. The indexing weight of ‘robot’ can then be modified by using its combined association with all the terms in the document. For a general document \(D_i\), we propose that the context sensitive weight of a term be given by a linear interpolation of its original weight and its association with the other terms. The smoothed document weights are therefore given by:

$$ D^N_i=\alpha\,D_i+\beta\,D_i\,A' $$(37)

For a general term \(t_j\) in the document \(D_i\):

$$ t^N_{ij}=\alpha\,t_{ij}+\beta\sum_{k=1}^{s}A_{jk}\,t_{ik} $$(38)

where α is the weight given to the original indexing and β is the weight given to the neighborhood based smoothing. A closer look at the term \(\sum_k A_{jk}t_{ik}\) in Eq. 38 shows that it matches the association values against the document weights. In other words, the smoothing model uses the similarity between the global (\(A_{jk}\)) and local (\(t_{ik}\)) contexts of the term \(t_j\). We can use the simplifying assumption \(\sum_j t_{ij}=\sum_j t^N_{ij}\) to eliminate the parameter β. Because the total weight of the document is preserved, any weight gained by the ‘topical’ terms must be taken from the ‘non-topical’ terms.

Equation 40 follows from the assumption \(\sum_j t_{ij}=\sum_j t^N_{ij}\).
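A minimal sketch of the smoothing step follows. It applies Eq. 38 with β eliminated through the sum-preservation assumption, which gives \(\beta=(1-\alpha)\sum_j t_{ij}/\sum_j\sum_k A_{jk}t_{ik}\). Two further assumptions are made here and are not stated in the text: only the terms that occur in the document are smoothed (so no weight flows to unseen terms, in line with the statement that NBDS does not expand documents), and the diagonal of A (self-association) is set by the caller. The terms and association values below are hypothetical.

```python
from typing import List, Optional, Sequence


def nbds_smooth(weights: Sequence[float], assoc: Sequence[Sequence[float]],
                alpha: float = 0.5, present: Optional[Sequence[int]] = None) -> List[float]:
    """t^N_ij = alpha*t_ij + beta*sum_k A_jk t_ik, with beta chosen so the total weight is kept.

    `present` lists the indices of terms occurring in the document; by default the
    terms with nonzero weight. Terms outside `present` keep their original weight.
    """
    s = len(weights)
    if present is None:
        present = [j for j in range(s) if weights[j] != 0]
    context = {j: sum(assoc[j][k] * weights[k] for k in present) for j in present}
    total_weight = sum(weights[j] for j in present)
    total_context = sum(context.values())
    if total_context == 0:
        return list(weights)  # no association evidence: keep the original weights
    beta = (1.0 - alpha) * total_weight / total_context
    smoothed = list(weights)
    for j in present:
        smoothed[j] = alpha * weights[j] + beta * context[j]
    return smoothed


terms = ["robot", "soccer", "fifa", "field"]
# Hypothetical symmetric association values; the diagonal (self-association) is 0.
A = [[0.00, 0.30, 0.00, 0.05],
     [0.30, 0.00, 0.80, 0.60],
     [0.00, 0.80, 0.00, 0.50],
     [0.05, 0.60, 0.50, 0.00]]
smoothed = nbds_smooth([1.0, 1.0, 1.0, 1.0], A, alpha=0.5)
```

In this toy \(D_2\)-like document, ‘robot’ has the weakest association with its context, so it loses weight while ‘soccer’ gains it, and the document total stays at 4.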

We have now intuitively justified the document smoothing model given by Eq. 37. We now prove that, given a suitable association measure, the proposed smoothing model decreases the retrieval risk (Na et al. 2007) of a non-topical term with respect to a topical term. As discussed in the example of Sect. 4.2, this property ensures that the indexing weight of the term ‘robot’ decreases in \(D_2\) and increases in \(D_1\), so that \(D_1\) is presented to the user before \(D_2\). The definition below introduces the concept of retrieval risk.

Definition 1

Assume that the document \(D_i\) contains a non-topical term \(t_j\) and a topical term \(t_k\).

\(Risk(t_j, t_k)\): the retrieval risk of \(t_j\) for \(t_k\) is defined as the difference between the retrieval influences of the non-topical term \(t_j\) and the topical term \(t_k\).

For the generalized retrieval framework:

$$ Risk(t_j,t_k)=t_{ij}-t_{ik} $$

The following Lemma argues that a suitably chosen lexical association measure will reduce the retrieval risk of a non-topical term with respect to a topical term.

Lemma 2

For a suitable choice of association measure A, the neighborhood based document smoothing given by Eq. 37 decreases the retrieval risk of a non-topical term \(t_j\) with respect to a topical term \(t_k\) if the following condition holds:

Proof

The proposed indexing decreases the retrieval risk of a non-topical term \(t_j\) with respect to a topical term \(t_k\) if:

where

From Eqs. 45 and 46, it is equivalent to prove that:

Now, based on Lemma 2, what property should the association measure satisfy? We carry out this analysis under some simplifying assumptions. Let us consider that document \(D_i\) has 2M terms with positive term frequency, of which M are topical and M are non-topical. Also, let \(\phi_t\) and \(\phi_n\) be the average indexing weights of the topical and non-topical terms respectively. Considering the average case, let \(A_{tt}\) be the association between two topical terms, \(A_{tn}\) the association between a topical and a non-topical term, and \(A_{nn}\) the association between two non-topical terms. Equation 44 can then be written as:

which is equivalent to showing that

It can be shown that this is equivalent to proving that (Footnote 1):

Let us assume that \(\phi_t/\phi_n = \eta\). We need to consider the following cases separately:

- If \(\eta \leq 1\), the left-hand side of Eq. 52 is \(\leq 0\) and the right-hand side is positive, since any chosen association measure should satisfy \(A_{tt}/A_{tn} > 1\) and \(A_{nn}/A_{tn} \leq 1\), which corresponds to the following requirements:

  - The association between two topical terms should be higher than the association between a topical and a non-topical term.

  - The association between a topical and a non-topical term should be higher than or equal to the association between two non-topical terms.

- If \(\eta \geq 1\), inequality 52 is equivalent to

$$ \frac{A_{tt}}{A_{tn}} \geq \frac{M}{M-1}\left(\eta-\frac{1}{\eta}\right)+\frac{A_{nn}}{A_{tn}} \tag{53} $$

Let \(A_{tt}/A_{tn}=\zeta\), where \(\zeta>1\), and \(A_{nn}/A_{tn}=\kappa\), where \(\kappa \leq 1\); this is consistent with the assumptions made for the case \(\eta \leq 1\). Equation 53 can then be equivalently written as:
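Written out explicitly, substituting \(\zeta\) and \(\kappa\) into Eq. 53 and moving \(\kappa\) to the left-hand side gives the following restatement (obtained here by direct substitution; it is the form approximated in the next paragraph):

$$ \zeta-\kappa \geq \frac{M}{M-1}\left(\eta-\frac{1}{\eta}\right) $$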

For a sufficiently large M (approximately 90–150), inequality 54 can be approximated by \((\zeta-\kappa)>(\eta-\frac{1}{\eta})\), since \(M/(M-1)\) is then close to 1. Here, η is the average ratio of the indexing weights of topical and non-topical terms. If \(\eta \gg 1\), the topical and non-topical terms can easily be distinguished by their indexing weights. We assume, however, that the original indexing weights cannot discriminate between ‘topical’ and ‘non-topical’ terms. Therefore, we can expect η to be close to 1 and, given η > 1, we write \(\eta=1+\epsilon\), with \(\epsilon\) close to zero. \((\eta-\frac{1}{\eta})\) can then be approximated as:

Equation 57 follows from Eq. 56 because, with \(\epsilon\) close to zero, \(\epsilon^2\) can be neglected in comparison to 1. Therefore, we need to show that \((\zeta-\kappa)>2\epsilon\). Since \(\zeta = A_{tt}/A_{tn}\) is sufficiently larger than 1, \(\kappa = A_{nn}/A_{tn}\) is at most 1 and \(\epsilon\) is close to zero, this inequality follows directly.
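A quick numerical check of this approximation and of the condition in Eq. 53 written in terms of \(\zeta\) and \(\kappa\). The helper names and the values of \(\epsilon\), \(\zeta\), \(\kappa\) and M below are illustrative assumptions, not values from the experiments.

```python
def eta_minus_inverse(eps: float) -> float:
    """Exact value of eta - 1/eta for eta = 1 + eps."""
    eta = 1.0 + eps
    return eta - 1.0 / eta


def condition_holds(zeta: float, kappa: float, eta: float, M: int) -> bool:
    """Eq. 53 rewritten as (zeta - kappa) >= M/(M-1) * (eta - 1/eta)."""
    return (zeta - kappa) >= M / (M - 1) * (eta - 1.0 / eta)


# For small eps the exact gap stays close to the first-order approximation 2 * eps.
for eps in (0.01, 0.05, 0.1):
    print(f"eps={eps:.2f}  exact={eta_minus_inverse(eps):.5f}  2*eps={2 * eps:.5f}")

# Illustrative values: zeta well above 1, kappa <= 1, eta slightly above 1.
print(condition_holds(zeta=1.5, kappa=0.9, eta=1.05, M=100))
```

For \(\eta \leq 1\) the right-hand side is non-positive, so any \(\zeta > \kappa\) satisfies the condition, which matches the first case above.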

The next task is to choose an association measure that satisfies the constraints under which Lemma 2 was proved. Let us return to the assumptions made during the analysis:

1. \(A_{tt}/A_{tn}\) is sufficiently larger than 1: \(A_{tt}\) refers to the association between two topical terms, while \(A_{tn}\) refers to the association between a topical and a non-topical term. For \(A_{tt}\) to be sufficiently larger than \(A_{tn}\), the association measure should satisfy the following constraints:

(a) If a term \(t_k\) appears very frequently in the documents containing \(t_j\), then \(t_k\) should be highly associated with \(t_j\); that is, the association should be high when the probability of \(t_k\) given \(t_j\) is high. This constraint makes \(A_{tn}\) much smaller than \(A_{tt}\).

(b) If a term \(t_k\) appears very frequently in the corpus, it is likely to co-occur with \(t_j\) as well. Therefore, the association of term \(t_k\) with term \(t_j\) (\(A_{jk}\)) should decrease as the probability of \(t_k\) in the corpus increases. This accounts for very general non-topical terms, which appear throughout the corpus with high probability and may therefore also have a high probability of co-occurring with the topical terms.

From the above constraints, for some monotonically increasing function f,

$$ A_{jk}=f\left(\frac{\Pr(t_j,t_k)}{\Pr(t_j)\Pr(t_k)}\right) \tag{58} $$

Using a logarithm for f makes the association measure equivalent to the pointwise mutual information (PMI). Therefore, the chosen association measure can be written as:

$$ A_{jk} = \log\left(\frac{\Pr(t_j,t_k)}{\Pr(t_j)\Pr(t_k)}\right) \tag{59} $$

$$ =\log\left(\frac{\sum_i\sum_l t_{il}\cdot\sum_i(\delta_{ij}\, t_{ik})}{\sum_i t_{ij}\cdot\sum_i t_{ik}}\right) \tag{60} $$

where the summation index l runs over all terms in the vocabulary.

2. \(A_{nn}/A_{tn} \leq 1\): Let us first analyze whether the association measure chosen above satisfies this constraint. Note that we are considering the average case. Two non-topical terms can be expected to have a very low probability of co-occurrence, the only exception being when both are very common terms with a high probability of appearing in the corpus. This case is handled by the product \(\Pr(t_j)\Pr(t_k)\) in the denominator. Therefore, we can expect the measure in Eq. 60 to satisfy \(A_{nn}/A_{tn} \leq 1\).
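A minimal NumPy sketch of Eq. 60, computing the full association matrix from a documents × terms array of indexing weights. The function name is hypothetical, and it is assumed that \(\delta_{ij} = 1\) exactly when \(t_{ij} > 0\).

```python
import numpy as np


def pmi_matrix(W):
    """Association matrix A[j, k] following Eq. 60.

    W is an (N documents x s terms) array whose entry W[i, j] is the indexing
    weight t_ij. Assumption: delta_ij = 1 if t_ij > 0, else 0.
    """
    W = np.asarray(W, dtype=float)
    delta = (W > 0).astype(float)
    total = W.sum()                    # sum_i sum_l t_il
    col = W.sum(axis=0)                # sum_i t_ij for every term j
    joint = delta.T @ W                # joint[j, k] = sum_i delta_ij * t_ik
    denom = np.outer(col, col)         # (sum_i t_ij) * (sum_i t_ik)
    with np.errstate(divide="ignore", invalid="ignore"):
        A = np.log(total * joint / denom)
    # Pairs that never co-occur (joint == 0) have no association: log(0) -> -inf.
    A[~np.isfinite(A)] = -np.inf
    return A


W = np.array([[1, 2, 0],
              [0, 1, 3],
              [2, 0, 1]])
print(np.round(pmi_matrix(W), 3))
```

Because \(\delta_{ij}\) marks presence while \(t_{ik}\) is a weight, this estimate is not symmetric in j and k; in the toy matrix above, \(A_{01} \neq A_{10}\).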

Lemma 2 therefore allows us to conclude that when the association measure given by Eq. 60 is used for the document smoothing given by Eq. 38, it decreases the retrieval risk of a non-topical term \(t_j\) with respect to a topical term \(t_k\). Hence, the PMI measure is expected to work well for document smoothing from a retrieval perspective. Note that the proposed document smoothing only redistributes the weights among the document terms and does not expand the document term vector.

Fang and Zhai (2006) analyzed the problem of semantic term matching in an axiomatic framework for information retrieval. Using constraint analysis, they showed which association measure would work better from a retrieval perspective and arrived at the mutual information (MI) measure to bridge the vocabulary gap. However, their study focused on term semantic similarity for expanding the user query, in contrast to the theoretical analysis presented in this paper, which focuses on document smoothing.

The theoretical analysis in this section does not specifically consider the case of ‘homonyms’, that is, a term \(t_j\) may have multiple senses, and it may be topical with respect to \(t_k\) in some documents and non-topical in others. Assume that the association between terms \(t_j\) and \(t_k\) (\(A_{jk}\)) is high according to the predominant sense of \(t_j\). Consider a document \(D_i\) in which a different sense of \(t_j\) is used (so \(t_j\) is non-topical) and \(t_k\) also occurs. Although \(A_{jk}\) is high, \(t_j\) is non-topical in \(D_i\), so the other terms appearing in \(D_i\) can be expected not to be lexically associated with \(t_j\); therefore, the weight of \(t_j\) will not increase merely because of \(t_k\). In other words, using the cumulative effect of all the document terms helps to suppress the noise introduced by a few terms, especially in the case of ‘homonyms’.

Having established the PMI as the chosen association measure, let us assess its use for the task of document smoothing. Traditional indexing schemes assign a context-insensitive indexing weight to the document terms, without distinguishing between content-carrying (topical) and non-topical (background) terms. In a document, the content-carrying words will be highly associated with each other, while the background terms will have very low association with the other terms in the document. This association can be captured by exploiting the corpus statistics, and the PMI measure serves this purpose. The proposed smoothing model redistributes the indexing weights of the document terms, assigning higher weights to the content-bearing terms and lower weights to the background terms.

The proposed smoothing model requires the computation of the matrix A, an s × s matrix that requires the storage of \(s^2\) elements. However, not all of the \(s^2\) elements are needed. The storage requirement can be reduced by using the following assumptions:

- A set of \(T_U\) unique terms with the highest document frequency can be used to compute the association values.
- For each of these \(T_U\) terms, a set of \(T_N\) terms (neighbors) with the highest association can be calculated and stored.

With these assumptions, the storage requirement reduces to \(T_U \times T_N\). This also reduces the offline computational time required for smoothing the document terms. Note also that this storage is required only at indexing time; the matrix A is not needed at retrieval time.
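The two reductions above can be sketched as follows: select the \(T_U\) terms with the highest document frequency, compute Eq. 60 only among them (keeping the corpus-wide total in the numerator), and keep the \(T_N\) strongest neighbors per term. The function name, the array layout (ids and values stored as two \(T_U \times T_N\) arrays) and the choice to exclude a term from its own neighbor list are assumptions of this sketch.

```python
import numpy as np


def build_neighbor_table(W, T_U, T_N):
    """Return (vocab, nbr_ids, nbr_vals) for the T_U most frequent terms.

    W: (N documents x s terms) indexing weights t_ij.
    vocab: the T_U term ids with the highest document frequency.
    nbr_ids / nbr_vals: (T_U x T_N) arrays with the global ids and PMI values
    (Eq. 60) of the T_N most associated neighbors of each selected term.
    """
    assert T_N < T_U, "each term needs T_N other candidates among the T_U terms"
    W = np.asarray(W, dtype=float)
    df = (W > 0).sum(axis=0)
    vocab = np.argsort(-df, kind="stable")[:T_U]      # top-T_U terms by DF
    Wv = W[:, vocab]
    delta = (Wv > 0).astype(float)
    total = W.sum()                                   # corpus-wide total weight
    col = Wv.sum(axis=0)
    with np.errstate(divide="ignore", invalid="ignore"):
        A = np.log(total * (delta.T @ Wv) / np.outer(col, col))
    A[~np.isfinite(A)] = -np.inf                      # pairs that never co-occur
    np.fill_diagonal(A, np.nan)                       # assumption: not its own neighbor
    # argsort places NaN last, so the diagonal is never among the first T_N picks.
    order = np.argsort(-A, axis=1, kind="stable")[:, :T_N]
    nbr_ids = vocab[order]                            # map back to global term ids
    nbr_vals = np.take_along_axis(A, order, axis=1)
    return vocab, nbr_ids, nbr_vals
```

Only the two \(T_U \times T_N\) arrays need to be kept, and only until the documents have been smoothed.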

4.4 Algorithm for neighborhood based smoothing

The algorithm for the neighborhood based smoothing can be described as below:

- The documents are indexed according to the indexing given by the generalized retrieval framework.
- A set of \(T_U\) terms with the highest document frequency is selected.
- For each of these \(T_U\) terms, the lexical association with these \(T_U\) terms is calculated using Eq. 60.
- For each of these \(T_U\) terms, the \(T_N\) terms with the highest association values are obtained and stored along with those values.
- For each document, the neighborhood based smoothing is performed using Eq. 37, where \(A_{jk}\) is non-zero only if the terms \(t_j\) and \(t_k\) both appear in the document \(D_i\) and in the set \(T_U\), and \(t_k\) is one of the \(T_N\) neighbors of \(t_j\).

Having discussed the theoretical framework of the proposed NBDS model, let us discuss its connections with previous work on document smoothing. The cluster based smoothing algorithms (Tao and Zhai 2006; Liu and Croft 2004) use the neighboring documents to expand the document vector; since the topical terms are likely to appear in the neighboring documents, these algorithms can be expected to distinguish between topical and non-topical terms to some extent, though this has not been established theoretically. The proposed NBDS model uses neighboring terms (terms with high lexical association with a document term), rather than neighboring documents, for document smoothing.

The translation model (Berger and Lafferty 1999) uses a globally trained translation probability matrix to smooth the weights of the terms in a document using the other terms present in the document, and is therefore related to the NBDS model. Since the translation model was trained on ‘synthetic queries’, the approach was computationally inefficient. It also used document expansion, which made the runtime computational complexity higher. Karimzadehgan and Zhai (2010) used the normalized mutual information (MI) measure to compute the translation probabilities; however, the use of the normalized MI measure was not theoretically justified. The PMI measure (which is mathematically different from the MI measure) used in the NBDS model is calculated from the indexing weights of the generalized retrieval framework, instead of from document frequencies only. While the translation model smooths the maximum likelihood estimate of a document term, the NBDS model modifies the indexing weights, which can be used directly to compute the similarity of a document to a user query. Moreover, only a few neighboring words of a term are used for the smoothing.

Additionally, the NBDS model only redistributes the indexing weights of the document terms, as opposed to document expansion, which is common to all the smoothing approaches discussed above. While the above algorithms were developed in the language modeling framework, the proposed NBDS model is applicable to many retrieval frameworks.

5 Experiments

Experiments were performed to empirically assess and validate the proposed neighborhood based document smoothing (NBDS) model as a smoothing model for the considered retrieval frameworks. Experiments are reported on the TREC (Footnote 2) 2, 3 and 7 datasets. The query topics are composed of three fields (title, description and narrative). While special emphasis was given to the title field (‘Title queries’), experiments over the description field (‘Description queries’) are also reported. No stemming was performed, except in the experiment of Sect. 5.3.3. Table 1 gives some statistics about the datasets and queries used for the experiments. ‘Av. Title Query’ and ‘Av. Desc. Query’ denote the average length of the ‘Title queries’ and ‘Description queries’, respectively, for the corresponding query topics.

5.1 Evaluation criteria

The evaluation follows the TREC protocol (Voorhees and Harman 1998). The system returns a ranked list of documents in response to a query. Let rel(.) be a binary relevance function on ranks, i.e. rel(r) = 1 if the document at rank r is relevant to the query according to the relevance judgments, and rel(r) = 0 otherwise. For each query, the precision P(r) is computed at various ranks. Since the emphasis is on the Title queries, comparisons are reported for P10 (i.e. P(10)) and Mean Average Precision (MAP) only. P10 is calculated as follows:

$$ P(10) = \frac{1}{10}\sum_{r=1}^{10} rel(r) $$

The average precision of a query is the average of the precision at all recall points, where a rank x is a recall point if the xth document returned by the system is relevant; MAP is the mean of the average precision over all queries.
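A minimal sketch of these measures for a single ranked list of binary relevance labels. The function names are hypothetical; the optional `num_relevant` argument reflects the trec_eval convention of dividing by the total number of relevant documents for the query, which the text above does not spell out.

```python
def precision_at_k(rels, k=10):
    """P(k) = (1/k) * sum_{r=1..k} rel(r); ranks beyond the list count as non-relevant."""
    return sum(rels[:k]) / k


def average_precision(rels, num_relevant=None):
    """Average of P(r) over the recall points r (ranks holding a relevant document).

    If num_relevant (total relevant documents for the query) is given, the sum is
    divided by it instead, as trec_eval does, so unretrieved relevant documents count as 0.
    """
    hits, precisions = 0, []
    for r, rel in enumerate(rels, start=1):
        if rel:
            hits += 1
            precisions.append(hits / r)
    denom = num_relevant if num_relevant is not None else len(precisions)
    return sum(precisions) / denom if denom else 0.0


def mean_average_precision(runs):
    """runs: list of (rels, num_relevant) pairs, one per query."""
    return sum(average_precision(rels, n) for rels, n in runs) / len(runs)


rels = [1, 0, 1, 0, 0, 0, 0, 0, 0, 1]
print(precision_at_k(rels), average_precision(rels, num_relevant=4))
```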

To evaluate whether the obtained results are statistically significant, we performed Student's paired t test. This test assigns a confidence value (p value) to the null hypothesis, which is typically that there is no difference between the compared systems. When the p value is low, the null hypothesis is rejected. In the comparison tables, the signs * and ** indicate that the improvement is statistically significant according to the paired-sample t test at the levels p < 0.05 and p < 0.01, respectively.
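A minimal sketch of how the * and ** markers can be assigned, assuming SciPy is available and that the per-query scores (e.g. average precision) of the two systems are aligned by query. `scipy.stats.ttest_rel` performs the paired test; its default two-sided alternative is an assumption here, since the text does not state which variant was used.

```python
from scipy.stats import ttest_rel


def significance_marker(system_scores, baseline_scores):
    """Paired t test on per-query scores; returns (p_value, marker)."""
    result = ttest_rel(system_scores, baseline_scores)   # two-sided by default
    p = float(result.pvalue)
    marker = "**" if p < 0.01 else "*" if p < 0.05 else ""
    return p, marker


baseline = [0.10, 0.20, 0.30, 0.40, 0.50]   # illustrative per-query AP values
system = [0.15, 0.26, 0.34, 0.45, 0.55]
print(significance_marker(system, baseline))
```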

We set the following goals for the experiments:

- To find out the general behavior of the proposed model, we perform a sensitivity analysis with respect to the parameters of interest. The sensitivity analysis helps us fix the parameter values for the comparisons (Sect. 5.2).
- To show how our model behaves in comparison to Okapi BM25 and the language model, which are the most consistent and best performing retrieval frameworks across various TREC datasets (Sect. 5.3).
- To analyze the robustness of the proposed approach, additional experiments are performed to validate that it is applicable to many retrieval frameworks (the VSM and DFR frameworks, for instance; Sect. 5.3.1) and to various query lengths (Description queries, Sect. 5.3.2), and that it is independent of stemming at indexing time (Sect. 5.3.3). Additionally, the proposed model is compared to other methods that capture term dependence (LSA-TSC, Park and Ramamohanarao 2009; TM-MI, Karimzadehgan and Zhai 2010; Sect. 5.3.4).
- To compare the proposed approach with a state-of-the-art document expansion model (DELM; Tao and Zhai 2006), and to verify that the NBDS model can be used together with other smoothing models for additional improvements (Sect. 5.3.5).

5.2 Sensitivity analysis

The following parameters need to be set for the proposed NBDS model:

- The smoothing parameter (α)
- The number of terms with the highest document frequencies (\(T_U\))
- The number of neighboring terms (\(T_N\))

First, we analyze the effect of \(T_U\) and \(T_N\) for a fixed α. Table 2 shows the variation of MAP with respect to \(T_U\) and \(T_N\) for α = 0.8 over TREC-2 with BM25 as the baseline retrieval model. The baseline MAP for BM25 was 0.181.

From Table 2, the results were relatively insensitive to the parameters \(T_N\) and \(T_U\), and consistent improvements were obtained. However, \(T_U\) = 40,000 performed better than the other two values of \(T_U\) for various numbers of neighbors. For computational efficiency at indexing time, a low value of \(T_N\) is preferable. We therefore opted for \(T_U\) = 40,000 and \(T_N\) = 200. These observations were consistent for TREC-3 as well, and \(T_U\) = 40,000, \(T_N\) = 200 were used.

Experiments were also performed to fix the parameters \(T_U\) and \(T_N\) for TREC-7. While \(T_U\) = 40,000 and \(T_N\) = 200 performed better than the baseline LM/BM25, the improvements were statistically significant only for \(T_U \geq\) 70,000 and \(T_N \geq\) 500. Further analysis showed that the appropriate value of \(T_U\) depends on the type of query words under consideration. Figure 1 plots the histogram of the document frequencies of the query terms for TREC-2 and TREC-7. Clearly, the query terms in TREC-2 are more general (have higher document frequency) than those in TREC-7. \(T_U\) = 40,000 for TREC-2 corresponds to the terms with document frequency (DF) > 80, and \(T_U\) = 70,000 for TREC-7 corresponds to the terms with DF > 25.

Regarding the parameter \(T_N\), as can also be seen from Table 2, with a higher value of \(T_U\) (\(T_U\) = 50,000), \(T_N\) = 400 and \(T_N\) = 600 performed slightly better than \(T_N\) = 200. Therefore, with \(T_U\) = 70,000, a higher value of \(T_N\) was required, and \(T_U\) = 70,000, \(T_N\) = 500 were used for all the experiments with TREC-7.

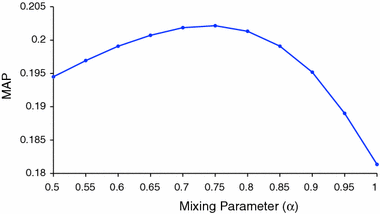

Figures 2, 3 and 4 show the sensitivity of MAP with respect to the smoothing parameter α for TREC-2, TREC-3 and TREC-7, respectively, with Okapi BM25 as the baseline retrieval model. α was varied over \(\{0.5,0.55,\ldots,0.95,1\}\); α = 1 is equivalent to the baseline retrieval model (Okapi BM25 in this case).

From Figs. 2, 3 and 4, any value of \(\alpha \in (0.65,0.95)\) performed better than the baseline model. We chose α = 0.8 for all our experiments with TREC-2 and TREC-3 and α = 0.9 for the experiments with TREC-7.

5.3 Comparison with Okapi BM25 and language model

For Okapi BM25, the parameters k1 and b had to be optimized. The optimization was performed to obtain the best MAP by varying \(k1\in (0.3,1.5)\) and \(b\in (0.05,0.95)\); the optimal values for TREC-2 and TREC-3 were k1 = 1.1, b = 0.3. For TREC-7, k1 = 0.4 and b = 0.1 gave the optimal results for BM25.
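For reference, a textbook form of the Okapi BM25 term weight, showing where k1 and b enter (defaults set to the TREC-2/3 values above). The Robertson-Sparck Jones IDF and the omission of the query-term (k3) factor are assumptions of this sketch; the exact BM25 instance used in the generalized framework may differ.

```python
import math


def bm25_weight(tf, df, doc_len, avg_doc_len, num_docs, k1=1.1, b=0.3):
    """Okapi BM25 weight of one term in one document (textbook form, no k3 factor)."""
    if tf == 0:
        return 0.0
    idf = math.log((num_docs - df + 0.5) / (df + 0.5))
    # b controls length normalization: b = 0 ignores document length entirely.
    norm = k1 * ((1.0 - b) + b * doc_len / avg_doc_len)
    return idf * tf * (k1 + 1.0) / (tf + norm)


print(bm25_weight(tf=2, df=10, doc_len=100, avg_doc_len=100, num_docs=1000))
```

With the small b chosen for TREC-7 (b = 0.1), the weight depends only weakly on document length.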

Regarding the baseline language model, experiments were performed to choose among the Dirichlet smoothing language model (Dirichlet), Jelinek-Mercer smoothing (JM) and the two-stage language model (TSLM). The JM model was found to perform worse than the other two. Table 3 compares the Dirichlet smoothing based language model to the two-stage language model over TREC-2 and TREC-3 for two different query lengths. For TSLM, the parameters of concern were the smoothing parameters λ and μ; \(\lambda\in (0.2,0.9)\) and \(\mu\in (200,2000)\) were varied to obtain the best MAP, and the optimal values for both TREC-2 and TREC-3 were λ = 0.5, μ = 700 for the Title queries and λ = 0.9, μ = 200 for the Description queries. For Dirichlet smoothing, only the parameter μ had to be optimized. For TREC-2, μ was chosen to be 1,200 and 400 for the Title and Description queries, respectively. For TREC-3, μ was chosen to be 400 and 200 for the Title and Description queries, respectively.

From Table 3, the Dirichlet smoothing based language model performs slightly better than TSLM for Title queries, whereas TSLM performs much better for Description queries. Since the results for Title queries were comparable, TSLM was chosen as the baseline language model for the rest of the experiments. For TREC-7, the parameters λ = 0.5 and μ = 700 were chosen for TSLM.
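A sketch of two-stage smoothing as used for the TSLM baseline: a Dirichlet prior with parameter μ, followed by Jelinek-Mercer interpolation with weight λ (the two-stage form of Zhai and Lafferty). Approximating the query background model by the collection model p(w|C) is an assumption, as are the function names; setting λ = 0 recovers plain Dirichlet smoothing.

```python
import math
from collections import Counter


def two_stage_prob(word, doc_counts, doc_len, coll_prob, lam=0.5, mu=700.0):
    """Two-stage smoothed p(w|d): Dirichlet prior mu, then mixing with weight lam.

    Assumption: the query background model p(w|U) is approximated by p(w|C).
    """
    p_c = coll_prob.get(word, 0.0)
    dirichlet = (doc_counts.get(word, 0) + mu * p_c) / (doc_len + mu)
    return (1.0 - lam) * dirichlet + lam * p_c


def query_log_likelihood(query, doc_tokens, coll_prob, lam=0.5, mu=700.0):
    """log p(q|d) = sum over query terms; every query term must have p(w|C) > 0."""
    counts = Counter(doc_tokens)
    return sum(math.log(two_stage_prob(q, counts, len(doc_tokens), coll_prob, lam, mu))
               for q in query)


coll = {"a": 0.5, "b": 0.3, "c": 0.2}
print(query_log_likelihood(["a", "c"], ["a", "a", "b"], coll))
```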

Tables 4 and 5 compare the proposed NBDS model, applied over the baseline language model (LM) and Okapi BM25 (BM25) respectively, in terms of MAP and P10. The improvements obtained by NBDS over the baseline (LM/BM25) are shown in the last column. Improvements that are significant at p < 0.05 and p < 0.01 according to Student's paired t test are marked by * and **, respectively.

As is evident from Tables 4 and 5, NBDS provides statistically significant improvements that are consistent for both the language model and BM25 over TREC-2, 3 and 7. The improvements in MAP were significant at p < 0.01 for all the experiments over TREC-2 and TREC-3 and at p < 0.05 for TREC-7. Improvements in P10 were also significant for two experiments.

5.3.1 Experiments with VSM and DFR frameworks

Experiments discussed in Sect. 5.3 demonstrate that the proposed NBDS model significantly improves the performance of both the Okapi BM25 and the language modeling frameworks across various TREC datasets. To further analyze the robustness of the proposed approach, additional experiments were conducted over other retrieval frameworks. The PNM model (as a representative of the VSM framework) and the PL2 method (as a representative of the DFR framework) were used as the original indexing models, over which the NBDS model was applied. Experiments were conducted over all the datasets with Title queries. The retrieval parameters for PNM and PL2, ρ and g respectively, were optimized for the best MAP; ρ = 0.05 and g = 7.0 were fixed for both TREC-2 and TREC-3, and ρ = 0.02 and g = 15.0 for TREC-7. For the NBDS model, the same parameters were used as for the BM25 and LM frameworks, that is, (\(T_U\), \(T_N\), α) = (40,000, 200, 0.8) for TREC-2 and TREC-3 and (\(T_U\), \(T_N\), α) = (70,000, 500, 0.9) for TREC-7. Experimental results are shown in Tables 6 and 7 for the PNM and PL2 methods, respectively.

Tables 6 and 7 establish the generality of the proposed approach with respect to various retrieval frameworks. Statistically significant improvements were obtained over the MAP values for all the datasets. Improvements over the P10 values were also achieved, with improvements being statistically significant for TREC-2.

5.3.2 Effect of longer queries

The experiments reported in Tables 4 and 5 were conducted with Title queries only. For Title queries, the query terms themselves do not provide much context, and therefore incorporating context knowledge through the NBDS model improved the performance significantly. For longer queries, the query terms may be disambiguated by other terms to some extent. To explore the effect of the proposed NBDS model for longer queries, experiments were conducted with the ‘Description queries’ over TREC-2, TREC-3 and TREC-7 with LM and BM25 as the baseline retrieval frameworks. The retrieval parameters of LM and BM25 were optimized: (λ, μ) = (0.9, 200) and (k1, b) = (1.3, 0.5) were used for TREC-2 and TREC-3, and (λ, μ) = (0.8, 500) and (k1, b) = (1.1, 0.25) were used for TREC-7. The parameters of the NBDS model were the same as those used for the Title queries. Tables 8 and 9 compare the NBDS model for the Description queries in the language model and Okapi BM25 frameworks, respectively.

As is evident from Tables 8 and 9, statistically significant improvements were obtained using the Description queries as well for all the datasets. For TREC-2, improvements over the P10 values were also significant to a large degree for both the LM and BM25 frameworks.

5.3.3 Effect of stemming at indexing time

No stemming was applied at indexing time in the other experiments reported in this paper. The hypothesis was that the proposed approach is independent of stemming, and therefore applying stemming should not affect its relative performance. To validate this hypothesis, an experiment was conducted on TREC-2 with Okapi BM25 as the baseline model. (k1, b) = (1.2, 0.5) were chosen for the BM25 model, and the same smoothing parameters as before were used for the NBDS model.

As is evident from Table 10, stemming does not affect the relative performance of the proposed NBDS model and statistically significant improvement over MAP was achieved.

5.3.4 Comparisons with other models for term co-occurrence

In this section, the NBDS model is compared with some other approaches that capture term-dependence. Latent Semantic Analysis with Term Self Correlation (LSA-TSC; Park and Ramamohanarao 2009) and Translation model with mutual information (TM-MI; Karimzadehgan and Zhai 2010) are considered as the baseline approaches. Since TM-MI is only applicable for the Language Modeling framework, LM has been taken as the retrieval framework under consideration. These approaches are described in brief below.

LSA-TSC: LSA uses the singular value decomposition (SVD) to express the document matrix D as:

For a rank-|S| version of D, the similarity scores for a query q are:

\(A_{LSA}\) is the matrix of term correlations in the LSA framework. The advantage of LSA is that the term correlations are obtained through the SVD as \(A_{LSA} = V' I_{|S|} V\).

By analyzing the matrix \(A_{LSA} = V' I_{|S|} V\), Park and Ramamohanarao (2009) showed that altering the LSA term self-correlations can substantially increase precision. They used the following form of the association matrix, denoted here by \(A^P_{LSA}\):

where I is the identity matrix and α is a mixing parameter.
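A NumPy sketch of the rank-|S| LSA term correlation matrix and the corresponding document scores, with D stored as documents × terms. In NumPy's convention, the rows of the `Vt` array returned by `np.linalg.svd` are the right singular vectors, so the first |S| rows give an s × s projection. The function names are hypothetical, and Park's self-correlation adjustment (Eq. 64) is not reproduced here.

```python
import numpy as np


def lsa_term_correlations(D, rank):
    """Rank-|S| term correlation matrix V_r V_r^T (s x s) for D of shape (N docs x s terms)."""
    _, _, Vt = np.linalg.svd(np.asarray(D, dtype=float), full_matrices=False)
    Vr = Vt[:rank]                 # top-|S| right singular vectors, one per row
    return Vr.T @ Vr


def lsa_scores(D, q, rank):
    """Scores of all documents for query vector q: D V_r V_r^T q, i.e. the rank-|S| D applied to q."""
    D = np.asarray(D, dtype=float)
    return D @ lsa_term_correlations(D, rank) @ np.asarray(q, dtype=float)


rng = np.random.default_rng(0)
D = rng.random((6, 4))             # toy 6-document, 4-term matrix
print(np.round(lsa_scores(D, [1.0, 0.0, 1.0, 0.0], rank=2), 3))
```

Using the identity \(D V_r V_r^T = U_r \Sigma_r V_r^T\), the scores equal those of the rank-|S| approximation of D without forming that approximation explicitly.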

TM-MI: Karimzadehgan and Zhai (2010) used the mutual information measure to compute the lexical association of two terms. The mutual information between terms \(t_j\) and \(t_k\) is calculated as:

where the binary variables \(\delta_j\) and \(\delta_k\) indicate the presence/absence of terms \(t_j\) and \(t_k\).

The mutual information score is normalized to obtain the translation probability as:

The translation probability is used to replace the maximum likelihood term (\(p_{ml}(t_j|D_i)=\frac{f_{ij}}{|D_i|}\)) with the translation document model, given by:

A parameter γ was introduced to control the effect of self-translation such that
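A sketch of the TM-MI pipeline described above: mutual information between binary presence vectors, normalization into translation probabilities, and the translation document model. The exact form of the γ adjustment is an assumption (self-translation set to γ plus (1 − γ) times its MI-based value, all other entries scaled by 1 − γ), as are the function names; the quadratic loop over term pairs is only suitable for toy vocabularies.

```python
import numpy as np


def mutual_information(x, y):
    """MI (natural log) between two binary presence vectors, one entry per document."""
    x = np.asarray(x, dtype=bool)
    y = np.asarray(y, dtype=bool)
    mi = 0.0
    for a in (False, True):
        for b in (False, True):
            p_ab = np.mean((x == a) & (y == b))
            p_a, p_b = np.mean(x == a), np.mean(y == b)
            if p_ab > 0:                       # 0 * log 0 is taken as 0
                mi += p_ab * np.log(p_ab / (p_a * p_b))
    return float(mi)


def translation_probs(presence, gamma=0.6):
    """T[w, u] = p_t(w | u) from an (N docs x s terms) boolean presence matrix.

    Assumed form: MI normalised over w for each u, then
    p_t(w|u) = gamma * [w == u] + (1 - gamma) * p_mi(w|u).
    """
    P = np.asarray(presence, dtype=bool)
    s = P.shape[1]
    mi = np.array([[mutual_information(P[:, w], P[:, u]) for u in range(s)] for w in range(s)])
    col_sums = mi.sum(axis=0)
    p_mi = np.divide(mi, col_sums, out=np.zeros_like(mi), where=col_sums > 0)
    return (1.0 - gamma) * p_mi + gamma * np.eye(s)


def translation_doc_model(T, counts):
    """p(w|d) = sum_u p_t(w|u) * p_ml(u|d), with p_ml(u|d) = f_iu / |D_i|."""
    counts = np.asarray(counts, dtype=float)
    return T @ (counts / counts.sum())
```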

Parameter Tuning: For LSA-TSC, |S| = 300 dimensions were used for computing the \(A_{LSA}\) matrix, and the parameter α in Eq. 64 was set to 0.95 for all the experiments. For TM-MI, γ = 0.6 was used for all the experiments. Both α and γ were optimized to obtain the best MAP.

Table 11 compares the NBDS model with the LSA-TSC and TM-MI for TREC 2, 3 and 7 for the Language modeling framework. Improvements obtained by NBDS over the LM, LSA-TSC and TM-MI are shown in the brackets in the last column along with the significance tests.

As is clear from Table 11, the NBDS model performed significantly better than the LSA-TSC for all the three datasets. NBDS performed better than the TM-MI for all the datasets and improvements over the P10 values for TREC-3 were statistically significant. It is to be noted that the TM-MI model uses document expansion for smoothing, while the NBDS model does not expand the document vector.

5.3.5 Comparison with a clustering-based document expansion model

In this section, we compare the NBDS model with the document expansion language model (DELM; Tao and Zhai 2006), which uses soft clustering to modify the term counts in a document. For each document \(D_i\), a document set \(X_i\) containing the K most similar documents according to the cosine similarity measure is obtained, and the similarity values are normalized. The modified term count of a term \(t_j\) in \(D_i\), \(f^T_{ij}\), is obtained as:

where \(\gamma_{ik}\) denotes the cosine similarity between documents \(D_i\) and \(D_k\), normalized with respect to the set \(X_i\) such that \(\sum_k \gamma_{ik} = 1\). These modified term counts are used in the language model retrieval framework.
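A sketch of DELM-style modified counts and of the restricted variant introduced below. The combination \(f^T_{ij} = \alpha f_{ij} + (1-\alpha)\sum_{k \in X_i} \gamma_{ik} f_{kj}\) is an assumed form (the equation itself is not reproduced in this section), as is excluding \(D_i\) from its own set \(X_i\); the function name is hypothetical. The defaults follow the {α, K} = {0.5, 100} setting quoted below.

```python
import numpy as np


def delm_counts(F, K=100, alpha=0.5, restricted=False):
    """Sketch of DELM-style modified term counts f^T_ij.

    F: (N documents x s terms) raw term counts f_ij, with N >= 2.
    Assumed form: f^T_ij = alpha * f_ij + (1 - alpha) * sum_{k in X_i} gamma_ik * f_kj.
    restricted=True mimics DELM-R: terms absent from D_i keep a count of 0.
    """
    F = np.asarray(F, dtype=float)
    n_docs = F.shape[0]
    assert n_docs >= 2, "need at least one other document to expand with"
    norms = np.linalg.norm(F, axis=1, keepdims=True)
    U = np.divide(F, norms, out=np.zeros_like(F), where=norms > 0)
    S = U @ U.T                                  # cosine similarities between documents
    np.fill_diagonal(S, -np.inf)                 # assumption: D_i is not in its own X_i
    K = min(K, n_docs - 1)
    out = np.empty_like(F)
    for i in range(n_docs):
        X_i = np.argsort(-S[i], kind="stable")[:K]          # K most similar documents
        gamma = S[i, X_i]
        gamma = gamma / gamma.sum() if gamma.sum() > 0 else np.full(K, 1.0 / K)
        out[i] = alpha * F[i] + (1.0 - alpha) * (gamma @ F[X_i])
    if restricted:
        out[F == 0] = 0.0                        # no new terms are added
    return out
```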

For a fair comparison, we also use a variation of DELM in which the document expansion is restricted so that only the weights of the existing terms are adjusted and no new terms are added. We denote this variation as DELM-restricted (DELM-R). The same parameter values as provided in Tao and Zhai (2006) are used, i.e. {α, K} = {0.5, 100}. As discussed in their paper, the same λ and μ values of TSLM are used for the document expansion runs, without further tuning.

Table 12 compares the NBDS model with DELM-R and DELM for TREC-2, 3 and 7 in the language modeling framework. The improvements obtained by NBDS over DELM-R and DELM are shown in brackets in the last column, along with the significance tests.

Clearly, the results obtained by the NBDS model are comparable to those of DELM. From Table 12, NBDS always outperforms DELM-R, with the improvements in TREC-7 MAP and TREC-3 P10 being statistically significant. The full expansion model performed better than NBDS in MAP for TREC-2 and TREC-3; however, the difference was not statistically significant. NBDS, on the other hand, outperformed DELM in P10 for TREC-2 and TREC-3 and in MAP for TREC-7, with the improvement in TREC-3 P10 being highly statistically significant. The findings from Table 12 can be summarized as follows:

- It clearly establishes that the NBDS model is a better approach than the DELM-R method, i.e. DELM used without document expansion.
- The document expansion model (DELM) expands the original document with words that do not occur in it but are semantically related. As the superior performance of DELM over DELM-R shows, this does improve retrieval performance. The improvement, however, comes with an extra computational burden, which is worth accepting only if it yields statistically significant improvements.
- The experiments are inconclusive as to which of the two methods, DELM or NBDS, is superior. DELM uses full document expansion and is therefore much more computationally expensive than the NBDS model at runtime.

We performed further experiments to explore whether the term weights obtained by the DELM-R model can be adjusted using the proposed NBDS approach. The idea was that if applying NBDS over DELM-R could outperform DELM, a cascade of DELM-R and NBDS would be preferable to DELM, since it reduces the run-time computational cost. We also report experiments to validate that the proposed NBDS model is very general and can be used with any other document smoothing technique for additional improvements. The results of these experiments are presented in Table 13. DELM-R+NBDS and DELM+NBDS denote the application of the NBDS model over the DELM-R and DELM models, respectively. For DELM-R+NBDS, improvements with respect to both DELM and DELM-R are shown, while for DELM+NBDS, only the improvements over DELM are shown.

From Table 13, we can conclude the following:

- The NBDS model, when applied to DELM-R and DELM separately, gives statistically significant improvements in MAP over all the datasets. Improvements in P10 for TREC-3 were also statistically significant. This validates the hypothesis that the NBDS model may be used with other document smoothing models for additional improvements.
- Applying NBDS over DELM-R (DELM-R+NBDS) always performs at least as well as DELM. Hence, similar or better performance can be achieved by adjusting the weights of the existing terms with the NBDS model rather than by document expansion, which causes a computational burden at run-time.

5.4 Discussions with some specific examples

We took some of the TREC queries as samples and analyzed the effect of neighborhood based smoothing on the retrieval performance. Two of these queries are discussed here.

5.4.1 TREC topic 112: funding biotechnology

Table 14 shows the top five documents obtained by Okapi BM25 for the above query, before and after applying NBDS. The document identifier (DocId), similarity value (Sim) and relevance judgment by experts (Rel) are provided (R: Relevant, NR: Non-Relevant).

We concentrate on the documents marked in bold. Table 15 shows the indexing weights of the query terms in these documents under BM25 (\(D_{ij}\)) and BM25+NBDS (\(D^N_{ij}\)), and the modification in indexing weight \(\delta D_{ij} = D^N_{ij} - D_{ij}\).

From Table 15, the term ‘biotechnology’ contributes nearly 3–4 times more to the relevance score than the word ‘funding’. Document ‘FR891128-0097’ was ranked 5th by the Okapi BM25 model. Document smoothing, however, decreases the weights of both ‘funding’ and ‘biotechnology’ in this document; this is consistent with the document being non-relevant, since these terms are not topically related to it. The other 3 documents shown in Table 15 were not among the top 5 documents returned by BM25. In these documents, document smoothing increased the weight of the term ‘biotechnology’ by a significant amount (Footnote 3), while the weight of ‘funding’ decreased by a small margin. The overall effect was an increase in the P(5) precision for query 112.

5.4.2 TREC Topic 144: management problems at the united nations

Table 16 lists the top 5 documents obtained through BM25 and BM25+NBDS.

Table 17 focuses on the 5 documents marked in bold in Table 16. The documents ‘FR88817-0089’, ‘AP880406-0124’, ‘FR88922-0050’ and ‘FR89918-0057’ were among the top 5 documents returned by BM25. However, these documents are non-relevant, and the query terms are not topically related to them. Neighborhood based smoothing moderately decreases the indexing weights of the query terms in these documents (there is a slight increase in the weight of the term ‘management’ in documents ‘FR88817-0089’ and ‘FR89918-0057’, but it is insignificant compared with the decrease in the weights of the other terms). As a result, documents ‘FR88817-0089’, ‘AP880406-0124’ and ‘FR88922-0050’ drop out of the top 5, while document ‘FR89918-0057’ moves from rank 1 to rank 4. In contrast, NBDS increases the indexing weights of the query terms in the relevant document ‘AP891026-0155’, resulting in an increase in the P(5) precision for this query.

5.5 Computational complexity and storage requirements

At retrieval time, the proposed NBDS model imposes no additional computational or storage burden and is as efficient as the original baseline retrieval frameworks. At indexing time, the following steps require additional computation and storage:

1. Computation of the lexical association matrix A: The matrix A is an s × s matrix. For instance, for the TREC 2 dataset, it would require storage of 400,000 × 400,000 elements. However, only the top \(T_U = 40{,}000\) terms are used in this case. For each term, \(T_N = 200\) neighbors are stored along with their association values. This leads to the storage of 2 matrices with dimensions 40,000 × 200 each. Each of these matrices is 20,000 times smaller than the full matrix, so together they reduce the storage requirement by a factor of 10,000. For TREC-7, the storage requirement was reduced by a factor of approximately 4000. The matrix A need not be stored at retrieval time.

2. Neighborhood based smoothing: For each term in a document, its association with all other terms in the document is calculated. Therefore, if \(T_{av}\) is the average number of unique terms in a document, this step has a computational complexity of order \(O(N\cdot {T_{av}}^2)\), where N is the number of documents.
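The storage figures in item 1 can be checked with a few lines of Python. The sketch below makes these assumptions:

- It counts stored elements only, not bytes.
- As our reading of item 1, the two 40,000 × 200 matrices hold the neighbor identifiers and the association values respectively.
- The \(T_N\) neighbors are the ones with the largest association values. This is an assumption about how neighbors are chosen.
- `storage_ratio` and `top_neighbors` are hypothetical helper names.

```python
import heapq
from typing import Dict, List, Tuple


def storage_ratio(vocab_size: int, top_terms: int, n_neighbors: int, n_tables: int = 2) -> float:
    """Elements in the full vocab_size x vocab_size matrix divided by the elements
    in n_tables pruned tables of shape top_terms x n_neighbors."""
    full = vocab_size * vocab_size
    pruned = n_tables * top_terms * n_neighbors
    return full / pruned


def top_neighbors(row: Dict[str, float], t_n: int) -> Tuple[List[str], List[float]]:
    """Keep the t_n largest association values of one term's row.

    Returns two parallel lists (neighbor ids, association values), i.e. one
    row of each of the two pruned tables.
    """
    best = heapq.nlargest(t_n, row.items(), key=lambda item: item[1])
    return [term for term, _ in best], [value for _, value in best]


print(storage_ratio(400_000, 40_000, 200))              # 10000.0
print(storage_ratio(400_000, 40_000, 200, n_tables=1))  # 20000.0

ids, values = top_neighbors({"gene": 2.0, "grant": 0.5, "lab": 1.0, "the": -0.3}, 2)
print(ids, values)  # ['gene', 'lab'] [2.0, 1.0]
```

Counting both stored matrices, the element count drops by a factor of 10,000. Each matrix on its own is 20,000 times smaller than the full 400,000 × 400,000 matrix.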

The complexity of the smoothing depends on the square of the average number of unique terms per document that fall within the top \(T_U\) terms (selected by highest frequency). This criterion reduces this number significantly. Additionally, the number of unique terms in a document does not grow linearly with the total number of terms in the document. In an experiment over TREC-7, we found the following values of Pearson’s correlation coefficient (Benesty et al. 2009):

- 0.75 between the total number of terms and the number of unique terms.
- 0.96 between the square root of the total number of terms and the number of unique terms.

Thus, the square root of the total number of terms in a document is a good approximation of the number of unique terms. Since the smoothing cost grows with the square of the number of unique terms, it can be expected to grow linearly with the total number of terms in a document. More importantly, only the terms that fall within the top \(T_U\) terms are counted. Finally, this cost is incurred only at preprocessing time; the system adds no computational overhead at runtime.
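The argument above has two parts:

- The smoothing cost of a document is quadratic in its number of unique in-vocabulary terms.
- That number is roughly proportional to the square root of the document length.

Together, these make the quadratic cost linear in length: if \(u \approx c\sqrt{L}\), then \(u^2 \approx c^2 L\). The Python sketch below illustrates both parts under these assumptions:

- It uses a hand-written Pearson coefficient.
- The document statistics are synthetic and follow the square-root relation exactly. They are not TREC-7 measurements.
- `association_lookups` is a hypothetical helper that only counts term pairs. It does not implement the NBDS weighting itself.

```python
import math
from typing import Iterable, Sequence, Set


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's correlation coefficient of two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def association_lookups(doc_terms: Iterable[str], top_vocab: Set[str]) -> int:
    """Ordered term pairs examined when smoothing one document.

    Only unique terms that belong to the top-T_U vocabulary take part, so
    T such terms give T * (T - 1) lookups: quadratic in T.
    """
    t = len(set(doc_terms) & top_vocab)
    return t * (t - 1)


# Synthetic documents: unique-term counts grow with the square root of length.
lengths = [100, 400, 900, 1600]
unique = [10, 20, 30, 40]

print(round(pearson(lengths, unique), 3))                         # 0.984
print(round(pearson([math.sqrt(n) for n in lengths], unique), 3))  # 1.0

doc = ["gene", "lab", "gene", "grant", "zzz"]
vocab = {"gene", "lab", "grant"}
print(association_lookups(doc, vocab))  # 6
```

Real collections are noisier than this idealized example; the TREC-7 coefficients reported above are 0.75 and 0.96.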

6 Conclusions and future work

This paper presents a novel neighborhood based document smoothing model (NBDS). NBDS uses the pointwise mutual information computed from the underlying corpus of documents to give a context sensitive weight to each term in a document. A generalized retrieval framework has been presented, which makes the model equally applicable to all term-document frequency based retrieval models. Through an analytical derivation, it has been shown that the proposed model reduces the retrieval risk of a ‘non-topical’ term with respect to a ‘topical’ term. The association measure for redistributing the term weights has been chosen from a retrieval perspective. The consistent and statistically significant improvements across various datasets under diverse settings show the potential effectiveness of the proposed approach. The proposed algorithm for document smoothing is easy to use and enhances the retrieval performance across all the considered retrieval frameworks. It does so without using any top retrieved documents, soft/hard clustering, query expansion or document model expansion. The proposed approach incurs a slight computational overhead at the preprocessing stage; however, it is as efficient as the baseline models at runtime.

NBDS is one of the very few smoothing models that are applicable to all models based on the term-document frequency scheme. The authors have developed the NBDS model in a well-argued theoretical framework, with the lexical association measure analytically validated from a retrieval perspective. Some of the smoothing models developed in the language modeling framework work on a similar principle of using term co-occurrence. However, the NBDS model differs notably from them, from both a theoretical and an implementation point of view. Since the NBDS model does not expand a document vector, the size of the document vector remains the same. The resulting document vector can be stored in exactly the same manner as in any of the baseline retrieval frameworks, and the runtime computational complexity remains unaffected.

This paper opens up numerous options for future work. As demonstrated in Sect. 5.3.5, the NBDS model can be combined with a document expansion model for significant additional improvements. Further work needs to explore whether the proposed smoothing can be combined with pseudo relevance feedback/query expansion for additional improvements. The proposed neighborhood based smoothing is not specific to information retrieval, since it gives a context sensitive smoothed representation of the document terms. It would be interesting to apply NBDS to automatic text categorization (ATC). In addition, the smoothing parameter α is currently fixed across all documents in a corpus. It would be worth exploring whether α can be automatically selected and optimized based on the properties of each document separately.

Notes

1. Since we are considering the average case, we do not consider the boundary cases where \(A_{tn} \le 0\) or \(A_{nn} \le 0\).

2. These collections are provided by TREC-NIST. Each collection is composed of a set of documents, a set of topics (queries) and a set of relevance judgments identifying the relevant documents for each query.

3. This is consistent with the fact that, these being relevant documents, this term is topically related to them.

References

Amati, G., & Van Rijsbergen, C. J. (2002). Probabilistic models of information retrieval based on measuring the divergence from randomness. ACM Transactions on Information Systems, 20, 357–389. doi:10.1145/582415.582416.

Andreopoulos, B., Alexopoulou, D., & Schroeder, M. (2008). Word sense disambiguation in biomedical ontologies with term co-occurrence analysis and document clustering. International Journal of Data Mining and Bioinformatics, 2, 193–215. doi:10.1504/IJDMB.2008.020522.

Bai, J., & Nie, J. Y. (2008). Adapting information retrieval to query contexts. Information Processing & Management, 44(6), 1901–1922. doi:10.1016/j.ipm.2008.07.006.

Benesty, J., Chen, J., Huang, Y., & Cohen, I. (2009). Pearson correlation coefficient. In Noise reduction in speech processing (pp. 1–4).

Berger, A., & Lafferty, J. (1999). Information retrieval as statistical translation. In Proceedings of the 1999 ACM SIGIR conference on research and development in information retrieval (pp. 222–229).

Blei, D. M., & Lafferty, J. D. (2007). A correlated topic model of science. The Annals of Applied Statistics, 1(1), 17–35.

Blei, D. M., Ng, A. Y., & Jordan, M. I. (2003). Latent Dirichlet allocation. Journal of Machine Learning Research, 3, 993–1022.

Blei, D. M., Griffiths, T. L., & Jordan, M. I. (2010). The nested Chinese restaurant process and Bayesian nonparametric inference of topic hierarchies. Journal of the ACM, 57, 7:1–7:30. doi:10.1145/1667053.1667056.

Bookstein, A., & Swanson, D. R. (1974). Probabilistic models for automatic indexing. Journal of the American Society for Information Science, 25(5), 312–318.

Collins-Thompson, K., & Callan, J. (2005). Query expansion using random walk models. In Proceedings of the 14th ACM international conference on information and knowledge management, CIKM ’05 (pp. 704–711). New York, NY: ACM. doi:10.1145/1099554.1099727.

Deerwester, S., Dumais, S. T., Furnas, G. W., Landauer, T. K., & Harshman, R. (1990). Indexing by latent semantic analysis. Journal of the American Society for Information Science, 41, 391–407.

Fang, H., & Zhai, C. (2006). Semantic term matching in axiomatic approaches to information retrieval. In Proceedings of the 29th annual international ACM SIGIR conference on research and development in information retrieval, SIGIR ’06 (pp. 115–122). New York, NY: ACM. doi:10.1145/1148170.1148193.

Furnas, G. W., Landauer, T. K., Gomez, L. M., & Dumais, S. T. (1987). The vocabulary problem in human-system communication. Communications of the ACM, 30(11), 964–971. doi:10.1145/32206.32212.

Gao, J., Nie, J. Y., Wu, G., & Cao, G. (2004). Dependence language model for information retrieval. In SIGIR ’04: Proceedings of the 27th annual international ACM SIGIR conference on research and development in information retrieval (pp. 170–177). New York, NY: ACM. doi:10.1145/1008992.1009024.

Hiemstra, D. (2002). Term-specific smoothing for the language modeling approach to information retrieval: the importance of a query term. In SIGIR ’02: Proceedings of the 25th annual international ACM SIGIR conference on research and development in information retrieval (pp. 35–41). New York, NY: ACM. doi:10.1145/564376.564385.

Hiemstra, D., Robertson, S., & Zaragoza, H. (2004). Parsimonious language models for information retrieval. In SIGIR ’04: Proceedings of the 27th annual international ACM SIGIR conference on research and development in information retrieval (pp. 178–185). New York, NY: ACM. doi:10.1145/1008992.1009025.

Hofmann, T. (2001). Unsupervised learning by probabilistic latent semantic analysis. Machine Learning, 42(1–2), 177–196. doi:10.1023/A:1007617005950.

Jelinek, F., & Mercer, R. (1980). Interpolated estimation of Markov source parameters from sparse data. In Pattern recognition in practice (pp. 381–402). URL http://ci.nii.ac.jp/naid/10020648370/en/.

Jones, K. S., Walker, S., & Robertson, S. E. (2000). A probabilistic model of information retrieval: development and comparative experiments. Information Processing and Management, 36(6), 779–808. doi:10.1016/S0306-4573(00)00015-7.

Kalt, T. (1998). A new probabilistic model of text classification and retrieval. Tech. rep., University of Massachusetts, Amherst, MA, USA.

Karimzadehgan, M., & Zhai, C. (2010). Estimation of statistical translation models based on mutual information for ad hoc information retrieval. In Proceedings of the 33rd international ACM SIGIR conference on research and development in information retrieval, SIGIR ’10 (pp. 323–330). New York, NY: ACM. doi:10.1145/1835449.1835505.

Keller, M., & Bengio, S. (2005). A neural network for text representation. In Artificial neural networks: Biological inspirations ICANN 2005: 15th International conference (pp. 11–15). Springer-Verlag GmbH.

Kurland, O. (2009). Re-ranking search results using language models of query-specific clusters. Information Retrieval, 12, 437–460. doi:10.1007/s10791-008-9065-9.

Kurland, O., & Domshlak, C. (2008). A rank-aggregation approach to searching for optimal query-specific clusters. In Proceedings of the 31st annual international ACM SIGIR conference on research and development in information retrieval, SIGIR ’08 (pp. 547–554). New York, NY: ACM. doi:10.1145/1390334.1390428.

Kurland, O., & Lee, L. (2009). Clusters, language models, and ad hoc information retrieval. ACM Transactions on Information Systems, 27(3), 1–39. doi:10.1145/1508850.1508851.

Lavrenko, V., & Croft, W. B. (2001). Relevance based language models. In SIGIR ’01: Proceedings of the 24th annual international ACM SIGIR conference on research and development in information retrieval (pp. 120–127). New York, NY: ACM. doi:10.1145/383952.383972.

Li, H. (2002). Word clustering and disambiguation based on co-occurrence data. Natural Language Engineering, 8, 25–42. doi:10.1017/S1351324902002838.

Liu, X., & Croft, W. B. (2004). Cluster-based retrieval using language models. In SIGIR ’04: Proceedings of the 27th annual international ACM SIGIR conference on research and development in information retrieval (pp. 186–193). New York, NY: ACM. doi:10.1145/1008992.1009026.

Losada, D. E., & Azzopardi, L. (2008). Assessing multivariate Bernoulli models for information retrieval. ACM Transactions on Information Systems, 26, 17:1–17:46. doi:10.1145/1361684.1361690.

MacKay, D. J. C., & Peto, L. (1994). A hierarchical Dirichlet language model. Natural Language Engineering, 1(3), 1–19.