Abstract

Lifting a 2D human pose to a 3D pose is an important yet challenging task. Existing 3D human pose estimation suffers from (1) the inherent ambiguity between 2D and 3D data, and (2) the lack of well-labeled 2D–3D pose pairs in the wild. Human beings can imagine the 3D human pose from a 2D image or a set of 2D body key-points with little ambiguity, which can be attributed to the prior knowledge of the human body that we have acquired. Inspired by this, we propose a new framework that leverages labeled 3D human poses to learn a 3D concept of the human body and thereby reduce the ambiguity. To reach a consensus on the body concept from the 2D pose, our key insight is to treat the 2D human pose and the 3D human pose as two different domains. By adapting the two domains, the body knowledge learned from 3D poses is applied to 2D poses and guides the 2D pose encoder to generate an informative 3D “imagination” as an embedding for pose lifting. Benefiting from the domain adaptation perspective, the proposed framework unifies supervised and semi-supervised 3D pose estimation in a principled way. Extensive experiments demonstrate that the proposed approach achieves state-of-the-art performance on standard benchmarks. More importantly, the experiments validate that the explicitly learned 3D body concept effectively alleviates the 2D–3D ambiguity, improves generalization, and enables the network to leverage abundant unlabeled 2D data.

References

Cao, Z., Simon, T., Wei, S.E., & Sheikh, Y. (2017). Realtime multi-person 2d pose estimation using part affinity fields. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 7291–7299).

Chen, C.H., Tyagi, A., Agrawal, A., Drover, D., Mv, R., Stojanov, S., & Rehg, J. M. (2019). Unsupervised 3d pose estimation with geometric self-supervision. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 5714–5724).

Csurka, G. (2017). Domain adaptation for visual applications: A comprehensive survey. arXiv preprint arXiv:1702.05374.

Drover, D., Chen, C.H., Agrawal, A., Tyagi, A., & Phuoc Huynh, C. (2018). Can 3d pose be learned from 2d projections alone? In: Proceedings of the European Conference on Computer Vision (ECCV) Workshops.

Fang, H.S., Xu, Y., Wang, W., Liu, X., & Zhu, S. C. (2018). Learning pose grammar for monocular 3d pose estimation. In: Proceedings of the AAAI Conference on Artificial Intelligence.

Gong, K., Zhang, J., & Feng, J. (2021). Poseaug: A differentiable pose augmentation framework for 3d human pose estimation.

Guan, S., Xu, J., Wang, Y., Ni, B., & Yang, X. (2021). Bilevel online adaptation for out-of-domain human mesh reconstruction. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 10472–10481).

Habibie, I., Xu, W., Mehta, D., Pons-Moll, G., & Theobalt, C. (2019). In the wild human pose estimation using explicit 2d features and intermediate 3d representations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 10905–10914).

Hoffman, J., Tzeng, E., Park, T., Zhu, J. Y., Isola, P., Saenko, K., & Darrell, T. (2018). Cycada: Cycle-consistent adversarial domain adaptation. In: International Conference on Machine Learning, (pp 1989–1998). PMLR.

Hossain, M.R.I., & Little, J.J. (2018). Exploiting temporal information for 3d human pose estimation. In: Proceedings of the European Conference on Computer Vision (ECCV), (pp 68–84).

Ionescu, C., Papava, D., Olaru, V., & Sminchisescu, C. (2014). Human36m: Large scale datasets and predictive methods for 3d human sensing in natural environments. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(7), 1325–1339.

Iqbal, U., Molchanov, P., & Kautz, J. (2020). Weakly-supervised 3d human pose learning via multi-view images in the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 5243–5252).

Kanazawa, A., Black, M.J., Jacobs, D.W., & Malik, J. (2018). End-to-end recovery of human shape and pose. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 7122–7131).

Kocabas, M., Karagoz, S., & Akbas, E. (2019). Self-supervised learning of 3d human pose using multi-view geometry. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 1077–1086).

Kostopoulos, G., Karlos, S., Kotsiantis, S., et al. (2018). Semi-supervised regression: A recent review. Journal of Intelligent and Fuzzy Systems, 35(2), 1483–1500.

Kundu, J.N., Seth, S., Jampani, V., Rakesh, M., Babu, R. V., & Chakraborty, A. (2020). Self-supervised 3d human pose estimation via part guided novel image synthesis. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 6152–6162).

Lee, K., Lee, I., & Lee, S. (2018). Propagating LSTM: 3d pose estimation based on joint interdependency. In: Proceedings of the European Conference on Computer Vision (ECCV), (pp 119–135).

Li, C., & Lee, G.H. (2019). Generating multiple hypotheses for 3d human pose estimation with mixture density network. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 9887–9895).

Li, S., & Chan, A.B. (2014). 3d human pose estimation from monocular images with deep convolutional neural network. In: Asian Conference on Computer Vision, (pp 332–347). Springer.

Li, S., Ke, L., Pratama, K., Tai, Y. W., Tang, C. K., & Cheng, K.T. (2020). Cascaded deep monocular 3d human pose estimation with evolutionary training data. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 6173–6183).

Li, Y., Yuan, L., & Vasconcelos, N. (2019a). Bidirectional learning for domain adaptation of semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 6936–6945).

Li, Y. F., & Zhou, Z. H. (2014). Towards making unlabeled data never hurt. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(1), 175–188.

Li, Z., Wang, X., Wang, F., & Jiang, P. (2019b). On boosting single-frame 3d human pose estimation via monocular videos. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, (pp 2192–2201).

Liu, Z., Miao, Z., Pan, X., Zhan, X., Lin, D., Yu, S. X., & Gong, B. (2020). Open compound domain adaptation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 12406–12415).

Luo, C., Chu, X., & Yuille, A. (2018). Orinet: A fully convolutional network for 3d human pose estimation.

Martinez, J., Hossain, R., Romero, J., & Little, J. J. (2017). A simple yet effective baseline for 3d human pose estimation. In: Proceedings of the IEEE International Conference on Computer Vision, (pp 2640–2649).

Mehta, D., Rhodin, H., Casas, D., Fua, P., Sotnychenko, O., Xu, W., & Theobalt, C. (2017). Monocular 3d human pose estimation in the wild using improved CNN supervision. In: 2017 International Conference on 3D Vision (3DV), (pp 506–516). IEEE.

Mehta, D., Sotnychenko, O., Mueller, F., Xu, W., Sridhar, S., Pons-Moll, G., & Theobalt, C. (2018). Single-shot multi-person 3d pose estimation from monocular RGB. In: 2018 International Conference on 3D Vision (3DV), (pp 120–130). IEEE.

Mitra, R., Gundavarapu, N. B., Sharma, A., & Jain, A. (2020). Multiview-consistent semi-supervised learning for 3d human pose estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 6907–6916).

Moon, G., Chang, J. Y., & Lee, K. M. (2019). Camera distance-aware top-down approach for 3d multi-person pose estimation from a single RGB image. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, (pp 10133–10142).

Moreno-Noguer, F. (2017). 3d human pose estimation from a single image via distance matrix regression. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 2823–2832).

Newell, A., Yang, K., & Deng, J. (2016). Stacked hourglass networks for human pose estimation. In: European Conference on Computer Vision, (pp 483–499). Springer.

Nie, Q., Liu, Z., & Liu, Y. (2020). Unsupervised 3d human pose representation with viewpoint and pose disentanglement. In: European Conference on Computer Vision, (pp 102–118). Springer.

Oliver, A., Odena, A., Raffel, C. A., Cubuk, E. D., & Goodfellow, I. (2018). Realistic evaluation of deep semi-supervised learning algorithms. Advances in Neural Information Processing Systems, 31, 3897.

Pavlakos, G., Zhou, X., Derpanis, K. G., & Daniilidis, K. (2017). Coarse-to-fine volumetric prediction for single-image 3D human pose. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 7025–7034).

Pavllo, D., Feichtenhofer, C., Grangier, D., & Auli, M. (2019). 3d human pose estimation in video with temporal convolutions and semi-supervised training. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 7753–7762).

Rhodin, H., Sporri, J., Katircioglu, I., Constantin, V., Meyer, F., Muller, E., & Fua, P. (2018). Learning monocular 3d human pose estimation from multi-view images. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 8437–8446).

Sharma, S., Varigonda, P. T., Bindal, P., Sharma, A., & Jain, A. (2019). Monocular 3d human pose estimation by generation and ordinal ranking. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, (pp 2325–2334).

Sigal, L., Balan, A. O., & Black, M. J. (2010). Humaneva: Synchronized video and motion capture dataset and baseline algorithm for evaluation of articulated human motion. International Journal of Computer Vision, 87(1), 4–27.

Sun, K., Xiao, B., Liu, D., & Wang, J. (2019). Deep high-resolution representation learning for human pose estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 5693–5703).

Tekin, B., Katircioglu, I., Salzmann, M., Lepetit, V., & Fua, P. (2016). Structured prediction of 3d human pose with deep neural networks. In: British Machine Vision Conference, (BMVC).

Tripathi, S., Ranade, S., Tyagi, A., & Agrawal, A. (2020). Posenet3d: Learning temporally consistent 3d human pose via knowledge distillation. In: 2020 International Conference on 3D Vision (3DV), (pp 311–321). IEEE.

Tzeng, E., Hoffman, J., Saenko, K., & Darrell, T. (2017). Adversarial discriminative domain adaptation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 7167–7176).

Wandt, B., & Rosenhahn, B. (2019). Repnet: Weakly supervised training of an adversarial reprojection network for 3d human pose estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 7782–7791).

Wang, J., Huang, S., Wang, X., & Tao, D. (2019). Not all parts are created equal: 3d pose estimation by modeling bi-directional dependencies of body parts. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, (pp 7771–7780).

Wei, S. E., Ramakrishna, V., Kanade, T., & Sheikh, Y. (2016). Convolutional pose machines. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 4724–4732).

Xu, J., Yu, Z., Ni, B., Yang, J., Yang, X., & Zhang, W. (2020). 3d human pose estimation in the wild by adversarial learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (pp 5255–5264).

Xu, J., Yu, Z., Ni, B., Yang, J., Yang, X., & Zhang, W. (2020). Deep kinematics analysis for monocular 3d human pose estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 899–908).

Zhang, J., Nie, X., & Feng, J. (2020). Inference stage optimization for cross-scenario 3d human pose estimation. Advances in Neural Information Processing Systems, 33, 2408–2419.

Zhao, L., Peng, X., Tian, Y., Kapadia, M., & Metaxas, D. N. (2019). Semantic graph convolutional networks for 3d human pose regression. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (pp 3425–3435).

Zhou, K., Han, X., Jiang, N., Jia, K., & Lu, J. (2019). Hemlets pose: Learning part-centric heatmap triplets for accurate 3d human pose estimation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, (pp 2344–2353).

Zhou, X., Huang, Q., Sun, X., Xue, X., & Wei, Y. (2017). Towards 3d human pose estimation in the wild: a weakly-supervised approach. In: Proceedings of the IEEE International Conference on Computer Vision, (pp 398–407).

Acknowledgements

This work is supported in part by the Hong Kong Centre for Logistics Robotics, in part by the CUHK T Stone Robotics Institute, in part by Tencent Youtu Lab, in part by NTU NAP, MOE AcRF Tier 2 (T2EP20221-0033), and under the RIE2020 Industry Alignment Fund - Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as in-kind contribution from the industry partner(s). In particular, we want to thank Shang Xu, Yong Liu and Chengjie Wang, who are the first author’s colleagues in Tencent Youtu Lab, for their valuable suggestions and support on this work.

Additional information

Communicated by Wenjun Kevin Zeng.

Appendix

1.1 Implementation Details

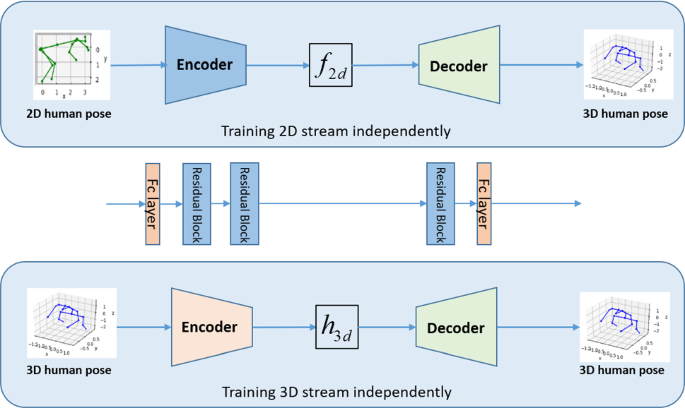

Details of evaluating the effects of the domain adaptation module. To reveal the effects of the domain adaptation module in our learning architecture, we compared the features learned by training the 2D and 3D streams independently with the features learned by the proposed method. The network details for training the two streams independently are shown in Fig. 11. When the 2D stream is trained independently with labeled data, the input 2D pose passes through the encoder and decoder to estimate the corresponding 3D pose. Because we use the same structure of residual blocks and FC layers as Martinez et al. (2017), training the 2D stream alone is identical to Martinez et al. (2017) in both network structure and supervised training strategy. Training the 3D stream independently turns the network into an autoencoder, in which 3D poses are reconstructed through an encoder-decoder structure. Comparing the intermediate features of the separately trained 2D and 3D streams reveals that the path from 3D to 3D differs from the path from 2D to 3D. This difference indicates that the 3D concept of the human body is not well learned or used when 2D-to-3D lifting is trained on its own. That is, 2D pose lifting with 3D supervision only at the output end does not truly capture the intrinsic features of the 3D human pose, which affects the efficiency and generalization of the trained model. With domain adaptation applied to the intermediate features, a common region is found between the path from 2D to 3D and the path from 3D to 3D. In this manner, the 3D concept of the human body is transferred to the 2D stream and guides the 2D encoder to generate an informative 3D “imagination” in the hidden space.
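To make the two paths concrete, the following is a minimal NumPy sketch of a single forward pass through a 2D stream, a 3D autoencoder stream, and a discriminator on the intermediate features. It is an illustration under stated assumptions rather than the authors' implementation: the joint count `J`, the feature width `D`, the single-layer encoders in place of residual blocks, the decoder shared between the two streams, and the cross-entropy form of the adversarial terms are all assumptions introduced here.

```python
import numpy as np

rng = np.random.default_rng(0)
J, D = 17, 64  # assumed joint count and feature width (not from the paper)


def init(n_in, n_out):
    """Small random weights and zero bias for one fully connected layer."""
    return rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out)


def fc(x, params, relu=True):
    """Fully connected layer, optionally followed by ReLU."""
    w, b = params
    y = x @ w + b
    return np.maximum(y, 0.0) if relu else y


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


enc2d = init(2 * J, D)  # 2D stream encoder
enc3d = init(3 * J, D)  # 3D stream encoder
dec = init(D, 3 * J)    # decoder, shared by both streams here (assumption)
disc = init(D, 1)       # discriminator on the intermediate features


def forward(pose2d, pose3d, eps=1e-8):
    """One forward pass; returns lifted poses, reconstructions and losses."""
    f2d, f3d = fc(pose2d, enc2d), fc(pose3d, enc3d)
    lifted = fc(f2d, dec, relu=False)  # 2D -> 3D path
    recon = fc(f3d, dec, relu=False)   # 3D -> 3D autoencoder path
    # Probability that a feature was produced by the 3D stream.
    p2d = np.clip(sigmoid(fc(f2d, disc, relu=False)), eps, 1.0 - eps)
    p3d = np.clip(sigmoid(fc(f3d, disc, relu=False)), eps, 1.0 - eps)
    losses = {
        "lift": np.mean((lifted - pose3d) ** 2),
        "recon": np.mean((recon - pose3d) ** 2),
        # Discriminator: tell 3D-stream features (1) from 2D-stream ones (0).
        "disc": -np.mean(np.log(p3d)) - np.mean(np.log(1.0 - p2d)),
        # 2D encoder: make its features look like 3D-stream features.
        "adapt": -np.mean(np.log(p2d)),
    }
    return lifted, recon, losses


# Random stand-in data: a batch of 4 flattened poses.
pose3d = rng.normal(size=(4, 3 * J))
pose2d = rng.normal(size=(4, 2 * J))
lifted, recon, losses = forward(pose2d, pose3d)
```

Training the 2D stream alone would minimise only `lift`, and training the 3D stream alone only `recon`. The `disc` and `adapt` terms are what pull the two sets of intermediate features toward a common region in this sketch.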

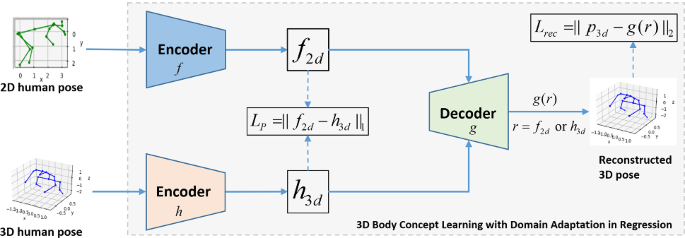

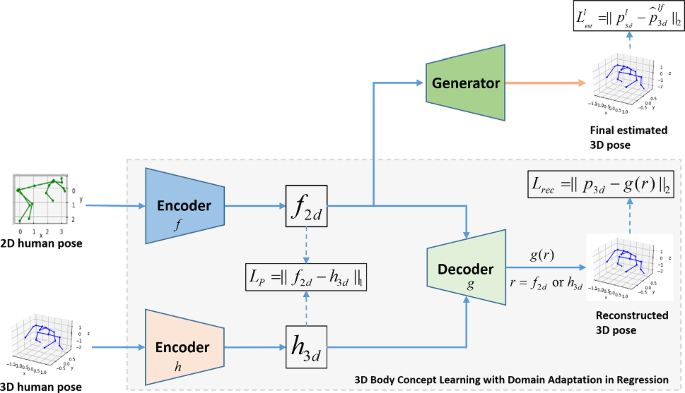

Network structures in the ablation study on components. The network structures for the “DA” and “DA+G” modules in Table 2 are shown in Fig. 12 and Fig. 13. “DA” denotes using only the domain adaptation module for pose lifting. “DA+G” denotes predicting the 3D pose with the final generator from the 3D body concept only, i.e., the learned 3D body concept is not concatenated with the original 2D pose.
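The difference between the variants comes down to what the generator receives. The sketch below illustrates this under assumptions: the function name `generator_input`, the variant label `"full"` for the complete model, and the 64-dimensional concept and 34-dimensional 2D pose are hypothetical and chosen only for illustration.

```python
import numpy as np


def generator_input(concept, pose2d, variant):
    """Assemble the generator input for one ablation variant (hypothetical)."""
    if variant == "DA+G":
        # Generator sees the learned 3D body concept only.
        return concept
    if variant == "full":
        # Concept concatenated with the original 2D pose.
        return np.concatenate([concept, pose2d], axis=-1)
    # "DA" uses only the domain adaptation module, so no generator input.
    raise ValueError(f"variant {variant!r} does not use a generator input")


concept = np.zeros((4, 64))  # hypothetical 64-d body-concept embedding
pose2d = np.zeros((4, 34))   # hypothetical 17 joints x 2 coordinates
```

With these stand-in shapes, the “DA+G” input has 64 features per sample and the concatenated input has 98.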

1.2 Discussion on adding a 3D space discriminator at the output end

Recently, many methods (Kanazawa et al., 2018; Chen et al., 2019; Tripathi et al., 2020) have used discriminators to match the predicted distribution with the real one in the 3D space or the 2D space. In contrast, the proposed method uses a discriminator in the semantic space to learn the inherent 3D body concept. For a more comprehensive study, we discuss the case of adding a discriminator at the output end to regularize the predicted 3D poses. As shown in Table 10, the experiments are conducted both on the subset “S1” of the training dataset and on the whole training set. Without 3D body concept learning, our method is equivalent to that of Martinez et al. (2017). Therefore, the results of Martinez et al. (2017) serve as the baseline and represent the case without 3D body concept learning. Table 10 shows that adding the discriminator to Martinez et al. (2017) improves accuracy on both the detected 2D poses and the ground-truth poses. For our method, however, the benefit of the additional discriminator is negligible, and when labeled data is abundant the performance is even worse than without it. In fact, the real distribution of 3D poses has already been captured by the learned 3D body concept, which plays the same role as the added discriminator. Moreover, with the 3D body concept, the proposed method performs much better than the method of Martinez et al. (2017) with a discriminator. The results indicate that learning the 3D body concept with a discriminator in the semantic space benefits the 2D pose lifting process more than a discriminator in the output 3D space.
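For reference, an output-end discriminator of the kind discussed here is typically trained with a pair of adversarial losses on 3D poses. The sketch below assumes the standard non-saturating GAN loss computed from discriminator logits; the text does not state which adversarial loss was used in the experiments, and the function name `output_gan_losses` is hypothetical.

```python
import numpy as np


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return np.logaddexp(0.0, z)


def output_gan_losses(logits_real, logits_pred):
    """Losses for a discriminator placed on output 3D poses.

    logits_real: discriminator logits for ground-truth 3D poses.
    logits_pred: discriminator logits for predicted 3D poses.
    """
    # Discriminator: label real poses 1 and predicted poses 0.
    d_loss = np.mean(softplus(-logits_real)) + np.mean(softplus(logits_pred))
    # Lifting network: regulariser pushing predictions toward "real".
    g_loss = np.mean(softplus(-logits_pred))
    return d_loss, g_loss


# An undecided discriminator (all logits zero) gives 2*ln(2) and ln(2).
d_loss, g_loss = output_gan_losses(np.zeros(8), np.zeros(8))
```

In such a setup, `g_loss` would be added to the supervised lifting loss with a weighting factor, whereas the semantic-space discriminator of the proposed method acts on intermediate features instead of on the predicted poses.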

About this article

Cite this article

Nie, Q., Liu, Z. & Liu, Y. Lifting 2D Human Pose to 3D with Domain Adapted 3D Body Concept. Int J Comput Vis 131, 1250–1268 (2023). https://doi.org/10.1007/s11263-023-01749-2

DOI: https://doi.org/10.1007/s11263-023-01749-2