Abstract

Web image datasets curated online inherently contain ambiguous in-distribution instances and out-of-distribution instances, which we collectively call non-conforming (NC) instances. In many recent approaches for mitigating the negative effects of NC instances, the core implicit assumption is that the NC instances can be found via entropy maximization. For “entropy” to be well-defined, we are interpreting the output prediction vector of an instance as the parameter vector of a multinomial random variable, with respect to some trained model with a softmax output layer. Hence, entropy maximization is based on the idealized assumption that NC instances have predictions that are “almost” uniformly distributed. However, in real-world web image datasets, there are numerous NC instances whose predictions are far from being uniformly distributed. To tackle the limitation of entropy maximization, we propose \((\alpha , \beta )\)-generalized KL divergence, \({\mathcal {D}}_{\text {KL}}^{\alpha , \beta }(p\Vert q)\), which can be used to identify significantly more NC instances. Theoretical properties of \({\mathcal {D}}_{\text {KL}}^{\alpha , \beta }(p\Vert q)\) are proven, and we also show empirically that a simple use of \({\mathcal {D}}_{\text {KL}}^{\alpha , \beta }(p\Vert q)\) outperforms all baselines on the NC instance identification task. Building upon \((\alpha ,\beta )\)-generalized KL divergence, we also introduce a new iterative training framework, GenKL, that identifies and relabels NC instances. When evaluated on three web image datasets, Clothing1M, Food101/Food101N, and mini WebVision 1.0, we achieved new state-of-the-art classification accuracies: \(81.34\%\), \(85.73\%\) and \(78.99\%\)/\(92.54\%\) (top-1/top-5), respectively.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

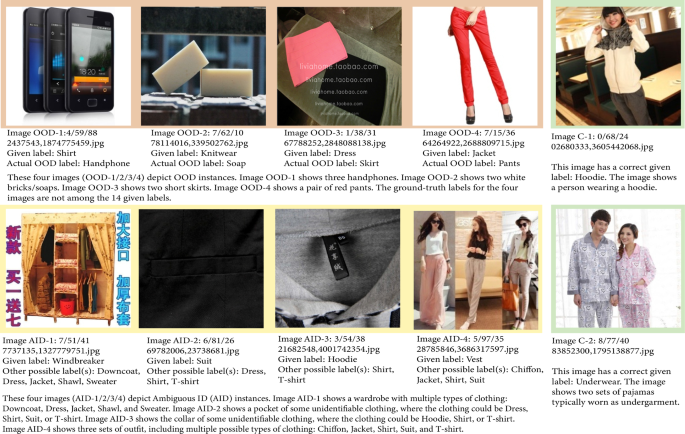

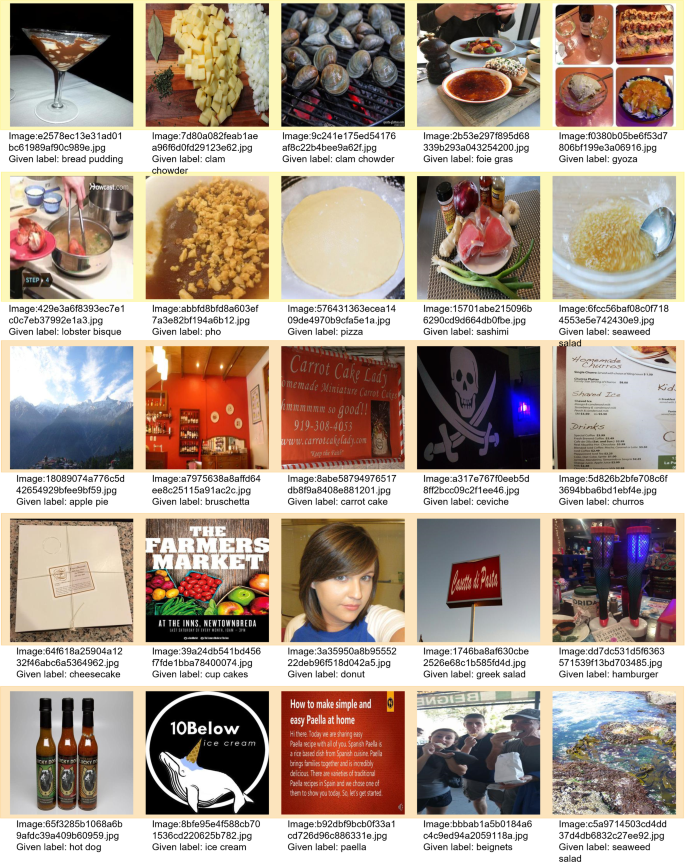

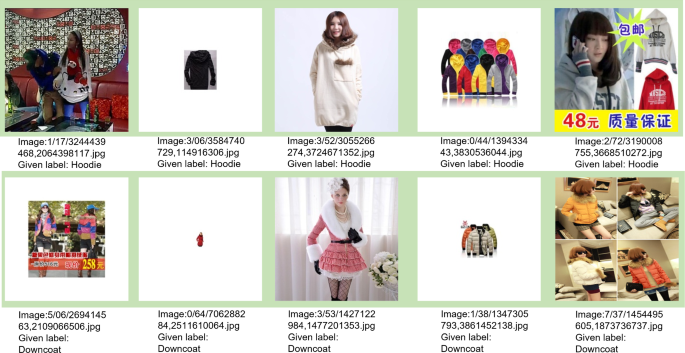

A depiction of NC instances versus clean instances in the Clothing1M dataset. This figure is divided into three colored sections: orange, yellow and green. The images in the orange section depict OOD instances. The images in the yellow section depict ambiguous ID (AID) instances. The images in the green section depict clean instances, i.e. images with correct labels. The 14 given labels in Clothing1M are: T-Shirt, Shirt, Knitwear, Chiffon, Sweater, Hoodie, Windbreaker, Jacket, Downcoat, Suit, Shawl, Dress, Vest, Underwear

Web data is an abundant source for curating image datasets (Bossard et al., 2014; Kaur et al., 2017; Lee et al., 2018; Liang et al., 2020; Shang et al., 2018; Xiao et al., 2015). Raw web images collected online are typically annotated with weak-supervision methods (Xiao et al. 2015; Varma & Ré 2018; Tekumalla & Banda 2021; Zhang et al. 2021; Helmstetter & Paulheim 2021; Yang et al. 2022). Although much more efficient in comparison to manual annotation, such automated annotation methods inevitably introduce non-conforming (NC) instances, which comprise both ambiguous in-distribution (ID) instances and out-of-distribution (OOD) instances. For example, Clothing1M (Xiao et al., 2015), a large-scale web image dataset that is well-known for containing real-world label noise, also contains NC instances; see Fig. 1 for explicit examples. Whether ambiguous ID or OOD, these NC instances may lead to significant performance degradation during training, which is not surprising since neural networks are capable of achieving zero training error even in the extreme case of completely noisy data (Arpit et al., 2017; Yao et al., 2021; Zhang et al., 2021). How then do we deal with such NC instances in web image datasets?

Numerous works (Goldberger & Ben-Reuven, 2016; Hendrycks et al., 2018; Ma et al., 2020; Patrini et al., 2017; Peng et al., 2020; Sharma et al., 2020; Xia et al., 2019; Yao et al., 2020) have tackled this problem by viewing NC instances from the lens of label noise. The underlying assumption is that NC instances hurt performance because they (may) have incorrect labels. From this viewpoint, the problem is then reduced to a simpler one: How do we alleviate the effect of label noise? However, such a simplification ignores the role of image content in these NC instances. Ambiguous ID instances, especially those that do not fit neatly into a single label class (e.g. due to vague or incomplete object presentation, occlusion, etc.), could still hurt performance even if they are correctly labeled. Although incorrectly labeled by definition, OOD instances could still have similar visual features that are present in images of certain label classes, which may distort the learned feature space (and so hurt performance), especially if they could be confused even by humans for some of the given label classes.

More recent methods avoid this over-simplification by incorporating various data sampling techniques based on image content (Albert et al., 2022; Guo et al., 2018; Han et al., 2019; Lee et al., 2018; Tu et al., 2020; Xu et al., 2021; Yao et al., 2021), many of which use a common underlying idea that NC instances can be identified via entropy maximization (Albert et al., 2022; Chan et al., 2021; Kirsch et al., 2021; Macêdo & Ludermir, 2021a; Macêdo et al., 2021b, c; Yao et al., 2021; Yu & Aizawa, 2019). Informally, the most easily identifiable NC instances are precisely those with near-maximum entropy. To make sense of this information-theoretic notion of (Shannon) entropy, we implicitly assumeFootnote 1 that, with respect to a trained classifier, instances have prediction vectors that are valid as parameter vectors of multinomial distributions. Moreover, we interpret the prediction of an instance probabilistically to be a random variable with this multinomial distribution. The entropy of an instance is then simply the entropy of its prediction. Since the discrete uniform distribution has maximum entropy by definition, it follows that the most easily identifiable NC instances are precisely those instances whose predictions are “almost” uniformly distributed. Thus, entropy maximization is the idea of categorizing an instance as an NC instance if its entropy exceeds a certain threshold.

However, there is a fundamental limitation of using entropy maximization: Not all NC instances have predictions that are “almost” uniformly distributed. Consider the hypothetical example of a web image dataset with 10 classes, consisting of 5 animal classes and 5 plant classes. Let x be an instance whose prediction score for each animal class is 0.20, and whose prediction scores for all plant classes are 0.00. Clearly, x could be interpreted as an NC instance that is predicted to be related to the 5 animal classes with equal uncertainty, but predicted to be unrelated to any of the plant classes. Next, consider another instance \(x'\), whose prediction score for the first animal class is 0.55, and whose prediction scores for all remaining 9 classes are 0.05 each. In contrast, \(x'\) is naturally not an NC instance, since it fits well into exactly one class. However, the normalizedFootnote 2 entropy of x is \(\frac{\log 5}{\log 10} \approx 0.699\), while the normalized entropy of \(x'\) is \(\approx 0.728\). If the threshold is less than 0.728, then \(x'\) would be misclassified as an NC instance. If the threshold is greater than 0.699, then x would be misclassified as a non-NC instance. Hence, this implies that all possible thresholds when using normalized entropy to identify NC instances would miscategorize at least one of \(x, x'\). For more concrete examples, see Table 1 for NC instances and non-NC instances (in Clothing1M) that cannot be distinguished using thresholds on their normalized entropies. As these examples reveal, entropy maximization is inherently inadequate for identifying NC instances.

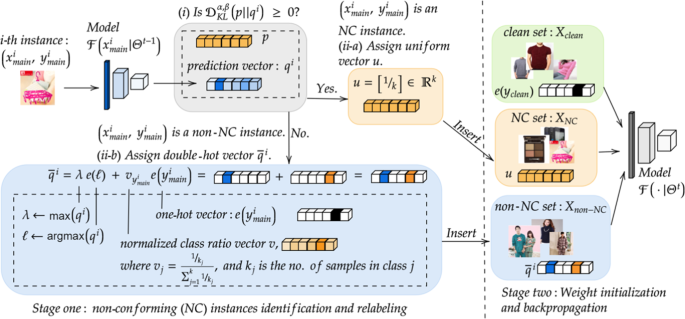

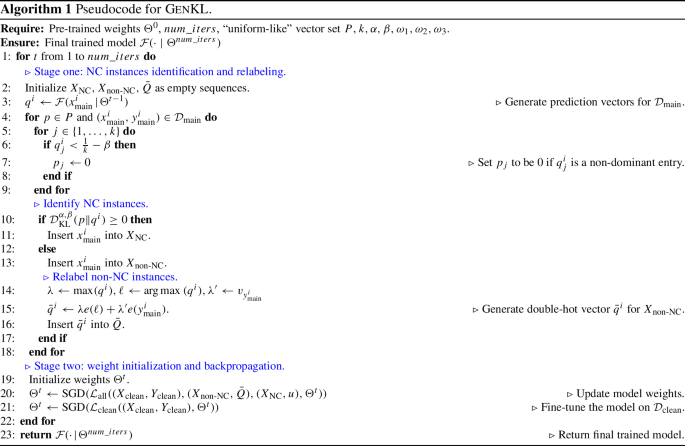

An overview of our GenKL framework in iteration t. GenKL has two stages; we iterate between the two stages until the model converges. Stage one includes two critical components: (i) identification of NC instances using \((\alpha ,\beta )\)-generalized KL divergence; and (ii) relabeling of NC and non-NC instances using soft labels. In component (i), given the i-th instance \((x^{i}_{{{\,\textrm{main}\,}}}, y^{i}_{{{\,\textrm{main}\,}}})\), we obtain its prediction vector \(q^i\) from the model \({\mathcal {F}}(x^{i}_{{{\,\textrm{main}\,}}}\!\mid \!\Theta ^{t-1})\). Next, we compute the \((\alpha ,\beta )\)-generalized KL divergence \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha ,\beta }(p\Vert q^i)\), where p is a uniform-like vector (see Sect. 3.4 for its definition), then identify our i-th instance as an NC instance if \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha ,\beta }(p\Vert q^i) \ge 0\), and as a non-NC instance otherwise. In component (ii), there are two scenarios: (ii-a) If \((x^{i}_{{{\,\textrm{main}\,}}}, y^{i}_{{{\,\textrm{main}\,}}})\) is an NC instance, then assign to it a uniform vector \(\big [\tfrac{1}{k}, \dots , \tfrac{1}{k}\big ]\) as its soft label; and (ii-b) If \((x^{i}_{{{\,\textrm{main}\,}}}, y^{i}_{{{\,\textrm{main}\,}}})\) is a non-NC instance, then assign to it a double-hot vector \({\bar{q}}^i\) as its soft label. In stage two, an initialized model is trained on \(X_{{{\,\mathrm{non-NC}\,}}}\), \(X_{{{\,\textrm{NC}\,}}}\) with their respective soft labels, and \(X_{{{\,\textrm{clean}\,}}}\) with its given labels. Full details can be found in Sect. 3.5

Beyond entropy maximization. By definition, entropy maximization is equivalent to the minimization of the Kullback–Leibler (KL) divergence. Building upon this observation, we introduce (\(\alpha , \beta \))-generalized KL divergence, a new generalization of KL divergence that is well-suited for identifying NC instances. In essence, we are extending this KL divergence minimization idea to the case of minimizing the “divergence” from p to q, relative to those dominant entries in the parameter vector of q. Here, the precise meaning of “dominant” depends on our hyperparameters \(\alpha \) and \(\beta \), whose values we can adjust. (Intuitively, an entry is dominant if it is not small.) By using (\(\alpha , \beta \))-generalized KL divergence, we are able to not only identify NC instances whose prediction vectors have entries that are all dominant (i.e. instances with “almost” uniformly distributed predictions), but also identify additional NC instances whose prediction vectors have less dominant entries. Thus, (\(\alpha , \beta \))-generalized KL divergence directly addresses the fundamental limitation of using entropy maximization.

Robust training with generalized KL divergence. To improve the robustness of training classifiers on web image datasets with both NC instances and non-NC instances with label noise, we propose GenKL, a general training framework based on our \((\alpha ,\beta )\)-generalized KL divergence. There are two stages in GenKL. For stage one, there are two key components: (i) identification of NC instances using \((\alpha ,\beta )\)-generalized KL divergence; and (ii) relabeling of NC and non-NC instances using soft labels. For stage two, we perform weight initialization, then carry out the usual training on the relabeled instances and clean instances. By iteratively alternating between the two stages, GenKL is robust to both NC instances, and label noise in the input data. See Fig. 2 for an overview of the GenKL framework. Our experiments on web image datasets show that GenKL is able to achieve state-of-the-art (SOTA) accuracies.

Our main contributions are given as follows:

-

We propose a new generalized KL divergence, \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha ,\beta }(p\Vert q)\), which is well-suited for identifying NC instances.

-

We prove theoretical properties of \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha ,\beta }(p\Vert q)\), whose proofs are provided in the Appendix.

-

We propose GenKL, a training framework that is robust to NC instances in training data.

-

In our experiments on web image datasets, Clothing1M (Xiao et al., 2015), Food101/ Food101N (Bossard et al., 2014; Lee et al., 2018) and mini WebVision 1.0 (Li et al., 2017), we achieved new SOTA accuracies \(81.34\%\), \(85.73\%\), and \(78.99\%\)/\(92.54\%\) (top-1/top-5), respectively.

2 Related Work

2.1 Dealing with NC Instances

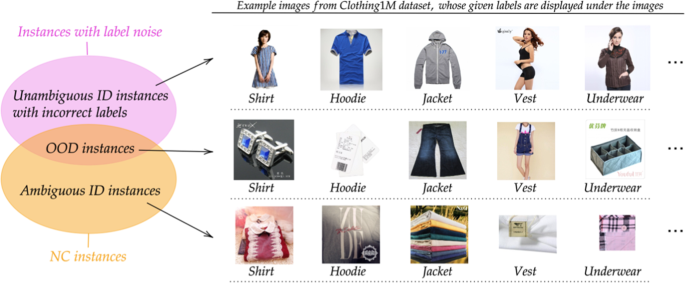

Existing approaches for dealing with NC instances can be broadly categorized into two types: (i) treating NC instances more generally as instances with label noise; and (ii) identifying and alleviating the effects of NC instances. Intuitively, although instances with label noise include unambiguous ID instances with incorrect labels, which are not NC instances, any method that tackles the problem of label noise would naturally tackle the sub-problem of OOD instances, which are NC instances with incorrect labels.Footnote 3 See Fig. 3 for a Venn diagram that illustrates the relationship between NC instances and instances with label noise. (See also Sect. 3.1 for a rigorous treatment of NC instances, including how they relate to label assignment.)

For the first type, existing works can be categorized based on their use of (i) estimators for noise transition matrices, (ii) robust loss functions, (iii) regularization techniques, and (iv) other specialized model architectures. An accurate estimate of the noise transition matrix is vital to many applications, because the matrix can not only model the label corruption process for the dataset, but also can be used to infer the clean class posterior probabilities of the dataset (Han et al., 2020). There are numerous methods that estimate the noise transition matrix, which is then used either to alleviate the effect of label noise (Sukhbaatar & Fergus, 2014; Xiao et al., 2015; Han et al., 2018), or to perform label correction (Hendrycks et al., 2018; Patrini et al., 2017; Xia et al., 2020, 2019). Various loss functions have been proposed (Ghosh et al., 2017; Lyu & Tsang, 2019; Ma et al., 2020; Song et al., 2019; Wang et al., 2019; Hendrycks et al., 2018; Patrini et al., 2017; Xia et al., 2019; Yao et al., 2020), which are specifically designed to be robust to noisy data. Also, regularization techniques (Ioffe & Szegedy, 2015; Jenni & Favaro, 2018; Krogh & Hertz, 1991; Pereyra et al., 2017; Shorten & Khoshgoftaar, 2019; Srivastava et al., 2014; Zhang et al., 2017) are used to increase the generalization capability of a trained model. Such techniques are frequently combined with other methods. Finally, some methods do not fit neatly into the first three sub-categories, and instead use newly proposed specialized model architectures (Wang et al., 2021; Peng et al., 2020; Yang et al., 2021; Tu et al., 2020; Yao et al., 2018). CAN (Yao et al., 2018) is a contrastive-additive noise network that has a contrastive layer to estimate a quality embedding space, and an additive layer for estimation aggregation. AFM (Peng et al., 2020) introduces a training block that suppresses mislabeled data via grouping and self-attention; see also (Xu et al., 2022). SOMNet (Tu et al., 2020) groups images and their proposed regions-of-interest (ROIs) from the same category into bags, and thereafter, weights are assigned to the bags based on their discriminative scores with the nearest clusters. These methods of the first type are designed to deal with label noise, and do not specifically target the challenge of NC instances. Hence, these methods have limited impact on web image datasets that have a non-negligible number of NC instances.

For the second type, numerous works identify NC instances using entropy-based methods. Many of them use entropy maximization to identify OOD instances (Chan et al., 2021; Kirsch et al., 2021; Macêdo & Ludermir, 2021a; Macêdo et al., 2021b, c). Other works use divergences closely related to KL divergence to identify NC instances. For example, Jo-SRC (Yao et al., 2021) uses Jensen–Shannon divergence to separate clean instances from OOD and noisy ID instances. It then compares the consistency of the predictions of each non-clean instance from multiple views (via data augmentation) to determine whether the instance is OOD or noisy ID. Another example is DSOS (Albert et al., 2022), which first separates non-clean instances from clean instances via collision entropy, then uses beta mixture models to identify noisy ID and OOD instances, and applies label correction to improve classification accuracy. It should be noted that in Albert et al. (2022), the authors hypothesized that DSOS might not perform as well if most of the non-clean instances are OOD rather than noisy ID, which may limit the effectiveness of DSOS on some web image datasets, e.g. Clothing1M.

2.2 Generalizations of KL Divergence and other divergences

KL divergence (also called relative entropy) is a non-negative, unbounded divergence between two stochastic vectors p and q, defined as follows:

Note that KL divergence is asymmetric, i.e. in general, \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}(p\Vert q) \ne {\mathcal {D}}_{{{\,\textrm{KL}\,}}}(q\Vert p)\). There are multiple variants of KL divergence and other divergences:

-

Jeffreys divergence (Jeffreys, 1998) is defined by

$$\begin{aligned} \qquad \quad {\mathcal {D}}_{\text {Jeff}}(p\Vert q):= \frac{1}{2}{\mathcal {D}}_{{{\,\textrm{KL}\,}}}(p\Vert q) + \frac{1}{2}{\mathcal {D}}_{{{\,\textrm{KL}\,}}}(q\Vert p),\qquad \quad \end{aligned}$$which is a symmetric analog of KL divergence.

-

Jensen–Shannon (JS) divergence is defined by

$$\begin{aligned} \qquad {\mathcal {D}}_{\text {JS}}(p\Vert q):= \frac{1}{2}{\mathcal {D}}_{{{\,\textrm{KL}\,}}}(p\Vert \tfrac{p+q}{2}) + \frac{1}{2}{\mathcal {D}}_{{{\,\textrm{KL}\,}}}(q\Vert \tfrac{p+q}{2}), \qquad \end{aligned}$$which is another symmetric analog of KL divergence. This divergence is bounded within [0, 1] if logarithms are taken over base 2 (Lin, 1991).

-

Decision Cognizant (DC) KL divergence was first introduced in Ponti et al. (2017). Suppose \({{\,\mathrm{arg\,max}\,}}({p})=s, {{\,\mathrm{arg\,max}\,}}({q})=t\), and define the set \({\Lambda }:= \{1, \dots , k\} \backslash \{ s, t\}\). Then this divergence is defined by

\({\mathcal {D}}_{\text {DC}}(p\Vert q):= \!\!\!\!\displaystyle \sum _{j \in \{s, t\}} \!\!\! q_{j} \log {\frac{q_j}{p_j}} + \big (\displaystyle \sum _{j \in \Lambda } q_{j}\big ) \log {\frac{\sum _{j \in \Lambda } q_{j}}{\sum _{j \in \Lambda } p_{j}}}.\) Similar to KL divergence, this DC KL divergence is non-negative, unbounded and asymmetric. The main difference for this divergence is that the contributions from minority classes are reduced, which is what (Ponti et al., 2017) refers to as being “decision cognizant”.

-

Delta divergence (Kittler & Zor, 2018): Let \({{\,\mathrm{arg\,max}\,}}({p})=s\), let \({{\,\mathrm{arg\,max}\,}}({q})=t\), and define the index set \(\Lambda := \{1, \dots , k\}\backslash \{ s, t\}\). Then Delta divergence is defined by

$$\begin{aligned} \qquad {\mathcal {D}}_{\Delta }(p\Vert q):= \frac{1}{2} \Big [ \!\!\sum _{j \in \{s, t\}} \!\!\mid \! p_{j} - q_{j}\!\!\mid + \mid \! p_{\Lambda } - q_{\Lambda }\!\!\mid \Big ],\qquad \end{aligned}$$where \(p_{\Lambda } = \sum _{j \in \Lambda } p_j\) and \(q_{\Lambda } = \sum _{j \in \Lambda } q_j\). This divergence is non-negative, bounded, symmetric and effectively groups non-dominant entries into a single class.

Rényi entropy generalizes the usual notion of (Shannon) entropy, and it is defined by

where \(\alpha \ge 0, \alpha \ne 1\). Note that Rényi entropy becomes Shannon entropy in the limit as \(\alpha \rightarrow 1\).

Collision entropy (Albert et al., 2022) is a popular special case (\(\alpha = 2\)) of Rényi entropy, given by

3 Proposed Method

This section introduces GenKL, an iterative training framework robust to both NC instances and instances with label noise. We first begin in Sect. 3.1 with a rigorous definition of NC instances. Next, we cover the preliminaries in Sect. 3.2, followed by a formal introduction to our \((\alpha ,\beta )\)-generalized KL divergence \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p\Vert q)\) in Sect. 3.3. Section 3.4 describes the usage of \((\alpha ,\beta )\)-generalized KL divergence. We then build upon this \((\alpha ,\beta )\)-generalized KL divergence, and give full algorithmic details of our proposed GenKL framework in Sect. 3.5. Finally in Sect. 3.6, we give further details on “double-hot vectors”, an important ingredient of our GenKL framework.

3.1 What Exactly are NC Instances?

To rigorously define what NC instances are, it is helpful to think of the dataset annotation process. In particular, the annotation process of “assigning a single class label to an input image” can be interpreted in terms of solving an object detection task.

Imagine a two-step object detection process:

-

Step 1: We locate the objects in the input image, which correspond to regions of the image (specified by bounding boxes) whose content is distinguishable from the background; this is commonly known as generic object detection (Liu et al., 2020); cf. Maaz et al. (2022). For convenience, let \({\mathcal {O}}\) denote the set of located objects.

-

Step 2: Given a fixed set \({\mathcal {L}}\) of object class labels, we shall try to assign each object in \({\mathcal {O}}\) to a label in \({\mathcal {L}}\). We shall assume that an object is assigned a label \(y \in {\mathcal {L}}\), only if all salient features of the object class associated to y are present/detected in the bounding box of the object. It is possible that some objects in \({\mathcal {O}}\) cannot be assigned any label in \({\mathcal {L}}\), in which case, we consider such objects to be out-of-distribution (OOD). Objects that are assigned labels in \({\mathcal {L}}\) are called in-distribution (ID). If an ID object in \({\mathcal {O}}\) could be assigned multiple labels in \({\mathcal {L}}\), then we say it is an ambiguous ID object.

Based on the located objects in \({\mathcal {O}}\), together with the labels assigned to these objects wherever possible, our goal is to assign a single label from \({\mathcal {L}}\) to represent the entire image. Although our goal is seemingly simple, there are subtle issues we have to address. Crucially, is there an obvious main object of interest? Are there multiple main objects of interest? How do we characterize objects in \({\mathcal {O}}\) to be main objects of interest?

Among the bounding boxes of all objects in \({\mathcal {O}}\), let A denote the maximum possible area among these bounding boxes. Given a threshold \(\eta \) (e.g., \(\eta = 0.5\)), we shall define an object \(obj \in {\mathcal {O}}\) to be a main object of interest, if the bounding box for obj has an area at least \(\eta A\). Let \({\mathcal {O}}_{\text {interest}}\) denote the subset of \({\mathcal {O}}\) consisting of main objects of interest.Footnote 4

For a single label \(y \in {\mathcal {L}}\) to accurately represent a given input image, we require every object in \({\mathcal {O}}_{\text {interest}}\) to be assigned a single label y. This brings us to our formal definition of NC instances:

Definition 1

Fix a set \({\mathcal {L}}\) of object class labels. For a given instance (x, y) (where x represents an image, and y is the corresponding given label in \({\mathcal {L}}\), which may possibly be incorrect), define \({\mathcal {O}}_{\text {interest}}\) for image x as above. Then we call (x, y) an NC instance, if there is no possible single label \(y' \in {\mathcal {L}}\) such that every object in \({\mathcal {O}}_{\text {interest}}\) is assigned the same label \(y'\).Footnote 5

Examples. In Fig. 1, images AID-1/2/3/4 and OOD-1/2/3/4 depict NC instances. The main object(s) of interest in images AID-1/2/3/4 are ambiguous ID objects, and the main object(s) of interest in images OOD-1/2/3/4 are OOD objects.

3.2 Preliminaries

Throughout, given any vector v, we shall let its j-th entry be denoted by \(v_{j}\). (We shall always reserve subscripts on vectors to refer to their entries.) For any dataset \({\mathcal {D}} = \{(x^i, y^i)\}^{N}_{i=1}\) with N instances, we use the convention that the i-th instance is the pair \((x^i, y^i)\), where \(x^i\) is the i-th feature vector/image. The corresponding label \(y^i\) is an integer \(j\in \{1, \dots , k\}\). Let \(e(y^i) = e(j)\) be the one-hot vector whose j-th entry is 1, and whose remaining entries are 0. For convenience, let \(X_{{\mathcal {D}}}:= (x^i)_{i=1}^N\) (resp. \(Y_{{\mathcal {D}}}:= (y^i)_{i=1}^N\)) be the sequence of feature vectors/images (resp. sequence of labels) for \({\mathcal {D}}\). A neural network model in iteration t is denoted by \({\mathcal {F}}(\cdot \!\!\mid \!\! \Theta ^{t})\), where \(\Theta ^{t}\) represents the model weights in iteration t. Assume that for every input \(x^i\), the output \(q^i = {\mathcal {F}}(x^i \!\!\mid \!\! \Theta ^{t})\) is a stochastic vector. For convenience, we define the entropy of a stochastic vector p to be \(H(p):= -\sum _{j =1}^{k} p_j \log (p_j)\). Analogously, for stochastic vectors p and q, we define the cross-entropy from p to q to be \(H(p,q):= -\sum _{j =1}^{k} p_j \log (q_j)\). In both H(p) and H(p, q), we use the usual convention that \(0\log 0:= 0\). Later, we shall abuse notation and use H(p, q) for the case when p is a non-stochastic vector. In this case, H(p, q) is defined using the same formula.

3.3 \((\alpha ,\beta )\)-generalized KL Divergence

Given any two stochastic vectors p and q of length k, and given real values \(\alpha , \beta \) satisfying \(\alpha > 0\) and \(0 \le \beta \le \frac{1}{k}\), we shall define the \((\alpha ,\beta )\)-generalized KL divergence from p to q as follows:

Here, \(\mathbbm {1}_{q_j \ge \frac{1}{k} - \beta }\) is the indicator function that returns 1 if \(q_j \ge \frac{1}{k} - \beta \), and returns 0 otherwise. Succinctly, we can write (1) as:

where \(^{\beta }\!{p}{}:= [p_1 \mathbbm {1}_{q_1 \ge \frac{1}{k} - \beta }, \dots , p_k \mathbbm {1}_{q_k \ge \frac{1}{k} - \beta }]\). Note that we can easily compute \(^{\beta }\!{p}{}\) by replacing those entries in p that are less than \(\frac{1}{k} - \beta \) with the value 0. In both (1) and (2), we use the usual convention that \(0\log 0:= 0\).

There are two hyperparameters in \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p\Vert q)\): \(\alpha \) and \(\beta \). Note that when \(\alpha =1\) and \(\beta =\frac{1}{k}\), our \((\alpha ,\beta )\)-generalized KL divergence coincides exactly with the usual KL divergence. Informally, the first term \(-\alpha H(p)\) is negative, where \(\alpha >0\) is a hyperparameter that controls “how negative” this weighted “entropy” term is. The second hyperparameter \(\beta \) appears in the second term \(H(^{\beta }{p}{}, q)\), which is a positive “cross-entropy” term. Intuitively, \(\beta \) controls the threshold of what it means to be a non-dominant entry. Given a stochastic vector q of length k, we say that its i-th entry \(q_i\) is dominant if \(q_i \ge \frac{1}{k} - \beta \), and non-dominant otherwise. Thus, we are effectively computing the cross-entropy only for the dominant entries of q, where the contributions of the non-dominant entries of q are ignored.

Theorem 2

Let p and q be two stochastic vectors of length \(k \ge 2\). Let \(\alpha >0\) and \(\beta \in [0, \frac{1}{k}]\). Then \((\alpha ,\beta )\)-generalized KL divergence \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) is bounded as follows:

-

(i)

$$\begin{aligned} {\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q) \ge {\left\{ \begin{array}{ll} 0, &{} \text {if }\beta = \frac{1}{k}, 0<\alpha \le 1; \\ (1-\alpha ) \log k, &{} \text {if }\beta = \frac{1}{k}, \alpha > 1;\\ -\alpha \log k, &{} \text {if }0 \le \beta < \frac{1}{k}. \end{array}\right. } \end{aligned}$$(3)

-

If \(\beta = \frac{1}{k}\) and \(0 < \alpha \le 1\), then equality holds if and only if \(q = p\) and p is a one-hot vector.

-

If \(\beta = \frac{1}{k}\) and \(\alpha > 1\), then equality holds if and only if \(p=q\) and p is a uniform vector.

-

If \(0 \le \beta < \frac{1}{k}\), then equality holds if and only if q is a one-hot vector and p is a uniform vector.

-

-

(ii)

If \(\beta = \frac{1}{k}\), then \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) is unbounded. If instead \(0 \le \beta < \frac{1}{k}\), then \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q) \le \log \frac{1}{\frac{1}{k}-\beta }\).

A detailed proof is provided in Appendix A.

Informally, Theorem 2 gives the full range of values that \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) can attain, over all possible pairs of values for the hyperparameters \(\alpha \) and \(\beta \). In the special case when \(\alpha =1\) and \(\beta =\frac{1}{k}\), \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) is the usual KL divergence from p to q, and Theorem 2 becomes the well-known “information” from Information Theory; see (Thomas & Joy 2006, Thm. 2.6.3).

Theorem 3

For any 0/1-vector \(u = [u_1, \dots , u_k]\), \(\alpha \ge 1\), and \(\beta \in [0, \frac{1}{k}]\), we define

Then for all \(\alpha \ge 1\), \(\beta \in [0, \frac{1}{k}]\), we have that (\(\alpha , \beta \))-generalized KL divergence is piecewise convex: This means that for all 0/1-vectors u, all pairs (p, q), \((p', q') \in {\mathbb {R}}^{\beta }_{u}\), and all \(\lambda \in [0, 1]\),

A detailed proof is provided in Appendix B.

It is well-known that KL divergence \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}( p \Vert q)\) is convex in the pair (p, q); see (Thomas & Joy 2006, Thm. 2.7.2). Theorem 3 extends this result to the general case of \((\alpha , \beta )\)-generalized KL divergence, where instead of convexity, we have piecewise convexity for \({\mathcal {D}}^{\alpha , \beta }_{{{\,\textrm{KL}\,}}}( p \Vert q)\).

3.4 Identification of NC Instances

Later, when we introduce GenKL in Sect. 3.5, we shall be considering two disjoint datasets \({\mathcal {D}}_{{{\,\textrm{main}\,}}}\) and \({\mathcal {D}}_{{{\,\textrm{clean}\,}}}\) with the same set of label classes, where \({\mathcal {D}}_{{{\,\textrm{main}\,}}}\) has both NC instances and instances with label noise, while \({\mathcal {D}}_{{{\,\textrm{clean}\,}}}\) is assumed to contain only non-NC instances that are clean (i.e., all labels are correct). We do not assume that the feature vectors/images of \({\mathcal {D}}_{{{\,\textrm{main}\,}}}\) and \({\mathcal {D}}_{{{\,\textrm{clean}\,}}}\) are sampled from the same distribution. We shall use labels “main” and “clean” to indicate membership in the respective datasets; for example, \(X_{{{\,\textrm{main}\,}}}\) refers to the sequence \(X_{{\mathcal {D}}_{{{\,\textrm{main}\,}}}}\), while \((x_{{{\,\textrm{clean}\,}}}^{i}, y_{{{\,\textrm{clean}\,}}}^{i})\) refers to the i-th instance of \({\mathcal {D}}_{{{\,\textrm{clean}\,}}}\).

When using \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) to identify NC instances, the vector q we used is a prediction vector from a trained neural network. To capture the variance of the predictions, we use multiple “uniform-like” vectors for p, which is defined as follows: First, we sample a vector \({\hat{p}}\), where each entry \({\hat{p}}_j\) is sampled from the normal distribution \({\mathcal {N}}(\frac{1}{k},\,\sigma ^{2})\). (When \(\sigma =0\), \({\hat{p}}\) is a uniform vector.) If the entries of \({\hat{p}}\) are all non-negative, then we normalize the vector \({\hat{p}}\) to generate the “uniform-like” vector p, where \(p_j = \frac{{\hat{p}}_j}{\sum _{i=1}^{k} {\hat{p}}_j}\). Let P be the set of uniform-like vectors p obtained via this sampling process. Given an instance with prediction vector q, we say that this instance is an NC instance if \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q) \ge 0\) for any p in P.

3.5 GenKL Framework

Our framework GenKL comprises two stages. In stage one, we identify NC and non-NC instances in \({\mathcal {D}}_{{{\,\textrm{main}\,}}}\), and generate respective soft labels for both types of instances. In stage two, we perform training on \({\mathcal {D}}_{{{\,\textrm{main}\,}}}\) with its newly assigned labels and \({\mathcal {D}}_{{{\,\textrm{clean}\,}}}\) with its given labels (usual training). We iterate between stage one and stage two, until the model converges.

Stage one. There are two critical components in stage one: (i) identification of NC instances using \((\alpha ,\beta )\)-generalized KL divergence; and (ii) relabeling of NC and non-NC instances using soft labels.

For component (i), we obtain the set Q of prediction vectors for all instances in \({\mathcal {D}}_{{{\,\textrm{main}\,}}}\) from our model \({\mathcal {F}}(\cdot \!\!\mid \!\! \Theta ^{t-1})\). Using our \((\alpha ,\beta )\)-generalized KL divergence, we then partition \(X_{{{\,\textrm{main}\,}}}\) into two sub-sequences: \(X_{{{\,\textrm{NC}\,}}}\) and \(X_{{{\,\mathrm{non-NC}\,}}}\), comprising the images of NC instances and non-NC instances, respectively. In particular, our \((\alpha ,\beta )\)-generalized KL divergence allows the identification of NC instances whose predictions are not “almost” uniformly distributed.

For component (ii), the uniform vector \(u:= [\frac{1}{k}, \dots , \frac{1}{k}]\) is assigned to every image in \(X_{{{\,\textrm{NC}\,}}}\) as its soft label. This makes the model more likely to have uniform-like prediction vectors for NC instances. In contrast, we assign what we call a double-hot vector, \({\bar{q}}^i\), as the soft label to an image in \(X_{{{\,\mathrm{non-NC}\,}}}\). The double-hot vector \({\bar{q}}^{i}\) of a non-NC instance \((x^{i}_{{{\,\textrm{main}\,}}}, y^{i}_{{{\,\textrm{main}\,}}})\) is defined as follows: First, let the vector of normalized class ratios of \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\)Footnote 6 be denoted by v, and let the size of class j in \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\) be \(k_{j}\). This means that the j-th entry of v is:

(This vector v of normalized class ratios was previously used in Li et al. (2019) to define a weighted cross-entropy loss for imbalanced datasets.) Let \(\lambda ' = v_{y^{i}_{{{\,\textrm{main}\,}}}}\), \(\lambda = \text {max}(q^{i})\), and \(\ell = {{\,\mathrm{arg\,max}\,}}{q^i}\). In words, \(\lambda '\) is the class ratio for the class label of \(x^{i}_{{{\,\textrm{main}\,}}}\), while \(\lambda \) is the maximum value of the entries in the prediction vector \(q^i\), corresponding to an entry with index \(\ell \). Then \({\bar{q}}^{i}\) is defined by:

Note that \({\bar{q}}^i\) is a weighted sum of two one-hot vectors, where the two weights \(\lambda \) and \(\lambda '\) do not necessarily sum to 1. Let \({\bar{Q}}\) denote the sequence of double-hot vectors corresponding to \(X_{{{\,\mathrm{non-NC}\,}}}\). More details on double-hot vectors, including its motivation and interpretation, can be found later in Sect. 3.6.

Stage two. In iteration t, a model is initialized and trained on \(X_{{{\,\textrm{clean}\,}}}\) with given labels \(Y_{{{\,\textrm{clean}\,}}}\), \(X_{{{\,\textrm{NC}\,}}}\) with the uniform vector u as the common soft label, and \(X_{{{\,\mathrm{non-NC}\,}}}\) with double-hot vectors \({\bar{Q}}\) as soft labels, respectively. Their respective loss functions are defined by (5), (6), and (7):

The overall loss function is defined by:

where \(\omega _1, \omega _2\) and \(\omega _3\) are hyperparameters that represent the weightage of the contributions of \(X_{{{\,\textrm{clean}\,}}}, X_{{{\,\textrm{NC}\,}}}\) and \(X_{{{\,\mathrm{non-NC}\,}}}\) to the overall loss.

The model weights update process is given by SGD ( ,

\(( \!X_{{{\,\mathrm{non-NC}\,}}}, \! {\bar{Q}})\),

\((\!X_{{{\,\textrm{NC}\,}}},\! u), \! \Theta ^{t})\)) in iteration t. A trained model is returned at the end of stage two, which would produce the prediction vectors for

\({\mathcal {D}}_{{{\,\textrm{main}\,}}}\) in the next iteration. See Algo. 1 for full algorithmic details.

,

\(( \!X_{{{\,\mathrm{non-NC}\,}}}, \! {\bar{Q}})\),

\((\!X_{{{\,\textrm{NC}\,}}},\! u), \! \Theta ^{t})\)) in iteration t. A trained model is returned at the end of stage two, which would produce the prediction vectors for

\({\mathcal {D}}_{{{\,\textrm{main}\,}}}\) in the next iteration. See Algo. 1 for full algorithmic details.

3.6 More on Double-Hot Vectors

The role of double-hot vectors in loss optimization during training can naturally be viewed from the lens of information theory. The key idea we use is that information content can be assigned to events of random processes. Recall that for any event A with corresponding probability \(\Pr (A) = p\), the information content of A is defined to be \(-\log p\), which intuitively quantifies how “surprising” the occurrence of that event would be.

In our paper, the prediction of an instance is defined to be a random variable, where the set of possible outcomes is \(\{1, \dots , k\}\), i.e. the set of all possible labels. For convenience, an outcome of the prediction shall be called a predicted label. This means if q is the prediction vector of an instance, then each entry \(q_j\) is the corresponding probability that the predicted label is j. Thus, the usual cross entropy loss of an instance can be interpreted as the information content of the event that the predicted label is the given label. For a clean instance, this cross entropy loss (see (5)) becomes the information content of the event that the predicted label is the correct label.

For a given non-NC instance, in addition to the usual prediction vector q, we also have a double-hot vector \({\overline{q}}\). Let A be the event that the predicted label is the correct label. Naturally, we are interested in event A, but we also have to deal with the uncertainty that the given label may be incorrect. Informally, we can think of both vectors q and \({\overline{q}}\) as measurements of two independent random processes that each yields information about the correct label of the given non-NC instance, and we would like to quantify the “combined” information we get from the measurements.

For an instance (x, y) with given prediction vector q, the double-hot vector \({\overline{q}}\) associated to this instance has at most two non-zero entries, at indices y and \(\ell := {{\,\mathrm{arg\,max}\,}}q\). (Note that \({\overline{q}}\) has only one non-zero entry if \(y=\ell \).) Recall that the value of \(\lambda '\) in (4) depends only on the class distribution of the entire dataset \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\), where \(\lambda '\) is larger if the normalized class ratio for object class y is larger (i.e. if class y is rarer, in the case that \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\) is an imbalanced dataset). Intuitively, a given label is “more surprising” if it corresponds to a rarer class, and in this “more surprising” case, we would like to assign a larger prior belief probability that the given label is correct. Hence, \(\lambda '\) can be interpreted as a measure of our prior belief that the given label is correct. The value \(\lambda := q_{\ell }\) in (4) is by definition the probability of the most probable predicted label \(\ell \). Hence, \(\lambda \) can be interpreted as a measure of the model’s belief that the most probable predicted label is correct. Consequently, the double-hot vector \({\overline{q}}\) can be interpreted as the overall relative measure of our prior belief (based on normalized class ratios) in comparison to the model’s belief (based on training on the given labels), for the correctness of the given label versus the most probable predicted label.

If \({\overline{q}}\) is a stochastic vector, then we can define a random variable V for the same set \(\{1, \dots , k\}\) of possible outcomes, such that \(\Pr (V = j) = {\overline{q}}_j\). For convenience, an outcome of V shall be called a belief label. Hence, each entry \({\overline{q}}_j\) is the corresponding probability that the belief label is j. Thus, we can interpret the loss value in (6) as the information content of \(A\cap B\), where A is the event defined as above, and B is the event that the belief label is the correct label. Under the assumption that A and B are independent, it then follows from the law of total probability that \(\Pr (A\cap B) = \sum _{j=1}^k q_j{\overline{q}}_j\), so the information content of event \(A\cap B\) is \(-\log \big (\sum _{j=1}^k q_j{\overline{q}}_j\big )\).

Intuitively, as we minimize the value of the loss function \({\mathcal {L}}_{{{\,\mathrm{non-NC}\,}}}\) (see (6)), we are maximizing the probability that the correct label for the given non-NC instance is either the given label or the most probable prediction label, i.e. exactly one of the indices of the non-zero entries of the double-hot vector. This intuition still holds when we drop the requirement that \({\overline{q}}\) must be a stochastic vector, and later in our ablation study (see Table 6), we show that allowing \({\overline{q}}\) to be a non-stochastic vector yields better performance in our experiments.

4 Discussion

Not all NC instances have “almost” uniformly distributed predictions. This was the fundamental limitation of entropy maximization methods that we highlighted in Sect. 1. Through the use of (\(\alpha , \beta \))-generalized KL divergence, \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p\Vert q)\), we are able to overcome this limitation and identify more NC instances. (Later in Sect. 5.2, we report our experiment results on the NC instance identification task.)

Intuitively, the additional NC instances we identified would have prediction vectors whose entries are not all dominant, such that the values of those dominant entries are near-uniform. Since the prediction vector represents the multinomial distribution of the prediction (treated as a random variable), it then follows from our definition of NC instances (in Sect. 3.1) that the object classes corresponding to those dominant entries would have salient features that are detected in the images of these NC instances.

Consequently, for our proposed \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p\Vert q)\) to be effective in identifying more NC instances, we require the implicit assumption that the prediction model can detect the salient features of all object classes that are present in any input image. Specifically, the j-th entry of the prediction vector should be a good measure of the presence of the salient features of the j-th object class, where the more confident the prediction model is in detecting the salient features, the larger this j-th entry should be.

For example, consider Image AID-2 in Fig. 1. This is an ambiguous ID image where the feature “wrinkle-resistant fabric” is present. Interestingly, this is a salient feature of several object classes: Shirt, Windbreaker, Suit, Shawl (e.g. satin shawl), and Underwear (e.g. wrinkle-resistant pyjamas), and we noticed that the corresponding prediction vector obtained for this image has high scores for these respective entries.

In our GenKL framework, after NC instances have been identified, we then relabel non-NC instances with double-hot vectors. As elaborated in Sect. 3.6, double-hot vectors represent the overall relative measure of the beliefs for the correctness of the given label versus the most probable predicted label. Note that the effectiveness of the double-hot vectors in capturing this overall relative measure would depend on the effectiveness of the prediction model in detecting salient features. Hence, our implicit assumption is not only important to the NC instance identification task, but also to the iterative training process in our GenKL framework.

To obtain a prediction model that is able to adequately detect the salient features of all object classes, there are two general approaches. The first approach is rather natural: Maximize the overall classification accuracy of the prediction model. This is based on the intuition that a well-trained model with high classification accuracy, especially on non-NC instances, would be able to detect the salient features of the object classes with high confidence. The second approach is to train a model on a dataset consisting of sufficiently many unambiguous ID instances. This would naturally be satisfied if NC instances are relatively rare, while for a dataset where NC instances are more common, it could be better to pre-train on a clean subset of the dataset. Here we are implicitly assuming that the model is able to detect salient features of object classes with higher confidence, when trained on more unambiguous ID instances.

Finally, note that our GenKL framework has multiple hyperparameters. For a comprehensive sensitivity analysis of the effects of different choices of hyperparameter values, see Sect. 5.5 in the next section.

5 Experiments

We first describe in Sect. 5.1 the datasets we used in our experiments. Next, we report our experiments for the identification of NC instances in Sect. 5.2, and our experiments for the classification of web images in Sect. 5.3. In Sect. 5.4, we analyze the effectiveness of each component of GenKL via an ablation study. Finally in Sect. 5.5, we provide a sensitivity analysis of the hyperparameters of our GenKL framework.

5.1 Datasets

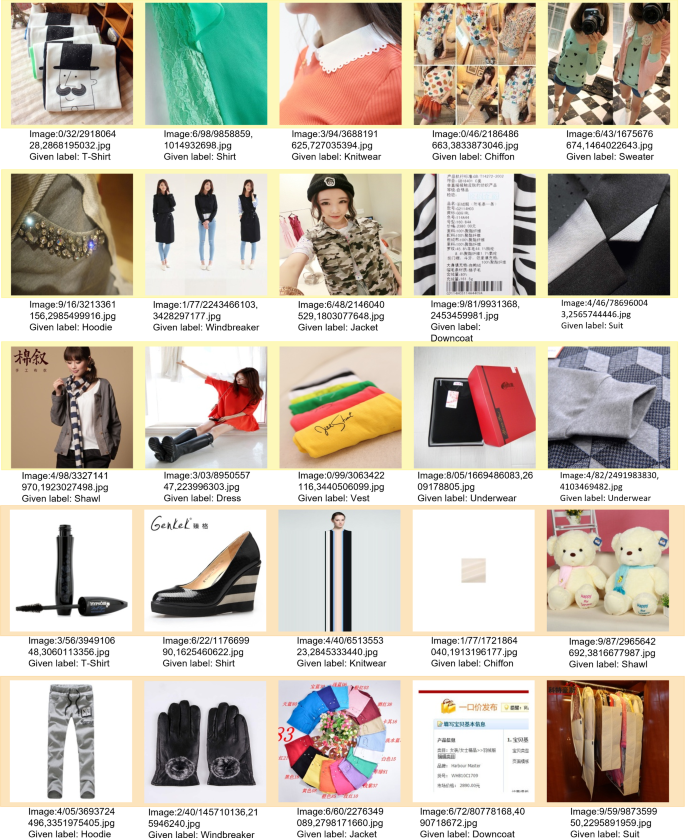

Clothing1M: Clothing1M (Xiao et al., 2015) has over 1 million images collected from online shopping websites. There are a total of 14 clothing classes. During data curation, labels are automatically assigned based on the keywords in the text surrounding the collected images, which may be incorrect. The authors also provide an additional clean training set with 50k images, a clean validation set with 14k images, and a clean test set with 10k images.

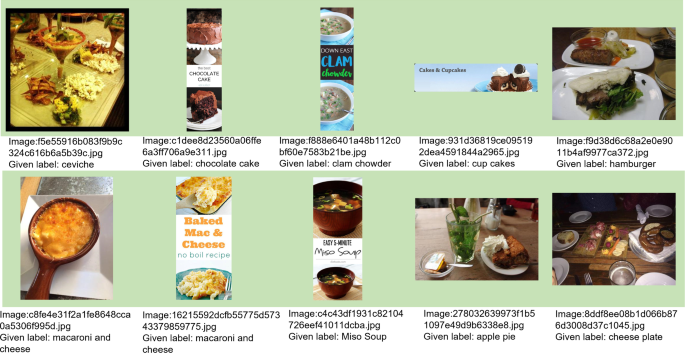

Food101/Food101N: Food101 (Bossard et al., 2014) contains 101k food images collected from foodspotting.com, while Food101N (Lee et al., 2018) contains 310k images collected from Google, Bing, Yelp and TripAdvisor. Both datasets use a common taxonomy of 101 food classes. For Food101N, there are 305k images in the training set, of which 53k images have verified labels. It also has a validation set containing 5k images with verified labels.

Mini WebVision 1.0: The mini WebVision 1.0 (Jiang et al., 2018) dataset is a subset of the WebVision 1.0 dataset (Li et al., 2017). The training set of mini WebVision 1.0 has 66k images collected from Flickr and Google, which collectively form the first 50 classes of the larger WebVision 1.0 dataset. The test set of mini WebVision 1.0 consists of 2.5k images with verified labels.

5.2 Experiments on Identification of NC Instances

This section compares the effectiveness of several divergences and methods to identify NC instances.

Baselines. We used 7 baselines in total, where 5 of these (Jo-SRC (Yao et al., 2021), DSOS (Albert et al., 2022), Delta divergence (Kittler & Zor, 2018), DC KL divergence (Ponti et al., 2017), and KL divergence) were introduced in Sect. 2. Our remaining two baselines are normalized entropy, which was described in the introduction, and the classic mean-squared error. Throughout, logarithms are taken over base 2, q is the prediction vector of an instance \((x^i, y^i)\), and p is a uniform vector, with the exception that in our method, p is instead a uniform-like vector. Each method determines whether \((x^i, y^i)\) is an NC instance, as described below; see also Appendix C for more details.

-

Jo-SRC (Yao et al., 2021) has two hyperparameters \(\tau _{{{\,\textrm{clean}\,}}}\) and \(\tau _{\text {OOD}}\). Let q and \(q'\) be two different prediction vectors of the same instance \((x^i, y^i)\) obtained under two data augmentations. If \(1 - {\mathcal {D}}_{\text {JS}}(q\Vert e(y^i)) > \tau _{{{\,\textrm{clean}\,}}}\), and if

$$\begin{aligned} \qquad \qquad \min \{1, \mid \!{{\,\mathrm{arg\,max}\,}}{q} - {{\,\mathrm{arg\,max}\,}}{q'}\!\mid \} > \tau _{\text {OOD}}, \end{aligned}$$then this instance is an NC instance.

-

Delta divergence (Kittler & Zor, 2018) has a hyperparameter \(\tau _{\Delta }\). If \({\mathcal {D}}_{\Delta }(p \Vert q) \le \tau _{\Delta }\), then \((x^i, y^i)\) is an NC instance.

-

DSOS (Albert et al., 2022) has two hyperparameters \(\gamma \) and \(\delta \). Let the output value of a beta mixture model with two components using input q be z. If collision entropy \(H_{2}(\frac{q+e(y^{i})}{2}) \ge -\log (\gamma )\) and \(z \ge \delta \), then \((x^i, y^i)\) is an NC instance.

-

DC KL divergence (Ponti et al., 2017) has a hyperparameter \(\tau _{\text {DC}}\). If \({\mathcal {D}}_{\text {DC}}(p \Vert q) \le \tau _{\text {DC}}\), then \((x^i, y^i)\) is an NC instance.

-

Mean Squared Error (MSE), which is defined by

$$\begin{aligned} \qquad \qquad {{\,\textrm{MSE}\,}}(p,q):= \frac{1}{k}{\sum _{i=1}^{k} ( p_i-q_i )^2 }, \end{aligned}$$has a hyperparameter \(\tau _{\text {MSE}}\). If \({{\,\textrm{MSE}\,}}(p,q) \le \tau _{\text {MSE}}\), then \((x^i, y^i)\) is an NC instance.

-

Normalized entropy has a hyperparameter \(\tau _{\text {Nor}}\). If normalized entropy is at least \(\tau _{\text {Nor}}\), then \((x^i, y^i)\) is an NC instance.

-

KL divergence has a hyperparameter \(\tau _{{{\,\textrm{KL}\,}}}\). If \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}(p \Vert q) \le \tau _{{{\,\textrm{KL}\,}}}\), then \((x^i, y^i)\) is an NC instance.

Experimental set-up. For Clothing1M, we used the 50k clean set as our clean instances. We also manually verified 200 NC instances out of approximately 2500 instances randomly selected from the 1 million noisy dataset. We used ResNet-50 (He et al., 2016) pretrained on ImageNet for all experiments in this section. To keep the class ratios invariant, we first used stratified sampling to randomly select \(10\%\) of the 50k clean set as test data. For the remaining \(90\%\) of the instances, we used stratified 5-fold cross-validation to split the data. The validation set is randomly shuffled and then selected with the same size as the test set. We randomly split the 200 NC instances into 2 folds of equal sizes: One fold is used to augment the validation set, while the other fold is used to augment the test set. A model is trained to generate prediction vectors for the respective (augmented) validation set, which has 100 NC instances; see Appendix C for further experiment details.

We obtained the test accuracies for all methods using the hyperparameters tuned on the validation set. Recall that P is our set of uniform-like vectors. For our experiments on the identification of NC instances, we used a set P with two vectors, one of which is the uniform vector, and we used \(\alpha =1.0247, \beta =0.0665\), and \(\sigma =0.06\).

Evaluation metrics. The main metrics used are F1 score and Cohen’s kappa score (Feuerman & Miller, 2005). Let the number of true positives, true negatives, false positives, and false negatives be denoted by TP, TN, FP and FN, respectively. Note that the number of predicted positives (resp. predicted negatives) is given by TP\(+\)FP (resp. TN\(+\)FN).

-

Precision is the ratio of true positives to predicted positives, i.e. given by \(\frac{\text {TP}}{\text {TP} + \text {FP}}\).

-

Recall, also known as sensitivity, is the true positive rate, i.e. given by \(\frac{\text {TP}}{\text {TP} + \text {FN}}\).

-

Specificity is the true negative rate, i.e. given by \(\frac{\text {TN}}{\text {TN} + \text {FP}}\).

In general, there is a trade-off between precision and recall. By adjusting probability thresholds, we can increase precision at the cost of decreasing recall, and vice versa. F1 score is a popular metric used to balance this trade-off between precision and recall, given by \(\frac{\text {TP}}{\text {TP} + \frac{1}{2}(\text {FP}+\text {FN})}\).

Similarly, there is a trade-off between sensitivity and specificity. Again, by adjusting probability thresholds, we can increase sensitivity at the cost of decreasing specificity, and vice versa. Cohen’s kappa score (Feuerman & Miller, 2005) is a popular metric used to balance this trade-off between sensitivity and specificity. This score is given by the formula \(\frac{2(\text {TP} \times \text {TN} - \text {FN} \times \text {FP})}{(\text {TP} + \text {FP}) \times (\text {FP} + \text {TN}) + (\text {TP}+ \text {FN}) \times (\text {FN} + \text {TN})}.\)

Experiment results. Our experiment results are reported in Table 2. Among all evaluated methods, we achieved the highest F1 score of 0.463, and the highest kappa score of 0.448. Note that our method achieves the highest recall/sensitivity of 0.508, which is a significant margin above the second highest value 0.488. For precision, our method has the value of 0.434, which is only marginally second to that of normalized entropy, 0.438. For specificity, all methods perform well, with specificity values at least 0.969. Our method has specificity 0.985, which is only marginally second to that of normalized entropy, 0.991.

Note that although normalized entropy (used in entropy maximization methods) has the highest precision and specificity (with our method coming a close second), its true positive rate (i.e. recall or sensitivity) is only 0.306, which is significantly lower than 0.508 achieved by our method. This means that our method is able to identify \(20.2\%\) more NC instances than normalized entropy.

5.3 Experiments on Web Image Classification

Baselines. We used 9 baselines in total, where 2 of the baselines, DSOS (Albert et al., 2022) and Jo-SRC (Yao et al., 2021), were already introduced in Sect. 2.1. The rest of the baselines are described as follows:

-

AFM (Peng et al., 2020) introduces a training block that suppresses mislabeled data via grouping and self-attention.

-

CleanNet (Lee et al., 2018) detects instances with label noise and assigns weights accordingly.

-

DivideMix (Li et al., 2020) uses sample loss to partition the training data into a clean set and a noisy set. Then, two networks are trained jointly based on each network’s data partition.

-

Joint optimization (Tanaka et al., 2018) tackles label noise by alternately updating network parameters and labels during training.

-

MetaCleaner (Zhang et al., 2019) uses a noisy weighting module to estimate weights for each instance and uses a clean hallucinating module to learn from weighted representations.

-

MoPro (Li et al., 2021) identifies clean, noisy and OOD instances and assigns pseudo-labels accordingly. “Momentum prototypes” are then computed, after which both cross-entropy loss and contrastive loss are jointly used to train the model with the newly assigned pseudo-labels and momentum prototypes.

-

SMP (Han et al., 2019) is an iterative self-training framework that measures data complexity and classifies data into several class prototypes. Models are trained on prototypes with the least complexity, which are assumed less likely to be noisy.

Experiment Set-up. Across all experiments on the Clothing1M, Food101/Food101N and mini WebVision 1.0 datasets, we used the same ResNet-50 architecture (He et al., 2016). For Clothing1M and Food101/Food101N, we initialize the ResNet-50 using weights pretrained on ImageNet. For mini WebVision 1.0, we follow the same set-up in our baselines (Li et al., 2020, 2021; Albert et al., 2022), and used the default random weight initialization.Footnote 7

For the Clothing1M dataset, we first trained on the combined set of 1 million noisy instances and 50k clean instances, then fine-tuned on the 50k clean set.

For the Food101/Food101N datasets, we followed the popular experiment set-up, where the models are first trained on the combined set of 306k noisy instances and 53k clean instances of Food101N, then fine-tuned on the 53k clean instances of Food101N. Evaluation is then done on the Food101 test set.

For the mini WebVision 1.0 dataset, there is no clean set provided. To identify clean instances, we followed the common set-up in MoPro (Li et al., 2021) and DivideMix (Li et al., 2020), and let \(X_{{{\,\textrm{clean}\,}}}\) vary over the epochs, where at the beginning of each epoch, we initialized \(X_{{{\,\textrm{clean}\,}}}\) as the empty sequence, then computed \(X_{{{\,\textrm{clean}\,}}}\) as follows: Using the weights from the previous epoch, an image is inserted into \(X_{{{\,\textrm{clean}\,}}}\) if and only if the prediction value corresponding to its given label exceeds 0.5. For the first epoch, since there is no previous epoch, we instead trained a model with cross-entropy loss over 10 epochs, and used the trained model (trained over these 10 epochs) to identify \(X_{{{\,\textrm{clean}\,}}}\).

Note that full experimental set-up details (for all methods, across all datasets) are provided in Appendix C.2. In particular, we trained all models until convergence.

In stage two of our GenKL framework, across all datasets, the set Q of prediction vectors is obtained by averaging over multiple models trained on \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\).Footnote 8 For subsequent iterations, the set Q of prediction vectors is obtained by averaging from the models in previous iterations. Throughout, for the Clothing1M dataset and the Food101/Food101N datasets, we used SGD with initial learning rate 0.001, and Nesterov momentum 0.9, while for the mini WebVision 1.0 dataset, we used SGD with initial learning rate 0.01. For Clothing1M, we used \(\omega _1=1\), \(\omega _2=32\), \(\omega _3=1\). For Food101/Food101N, we used \(\omega _1=20\), \(\omega _2=100\), \(\omega _3=1\). For mini WebVision 1.0, we used \(\omega _1=10\), \(\omega _2=32\), \(\omega _3=4\). For more training details (e.g. learning schedule), see Appendix C.

Experiment results. In comparison to all baselines, our proposed GenKL consistently achieved the highest test accuracies: \(81.34\%\) for Clothing1M, \(85.73\%\) for Food101/Food101N and top-1/top-5 accuracies \(78.99\%\)/\(92.54\%\) for mini WebVision 1.0. See Tables 3, 4 and 5 for full experiment results.

For Clothing1M, the next best replicable baseline (i.e. with publicly available code) after GenKL is DivideMix, which is on average \(1.86\%\) lower (\(79.48\%\) versus our \(81.34\%\)). For Food101/Food101N, although Jo-SRC (Yao et al., 2021) and AFM (Peng et al., 2020) have test accuracies closest to ours, it should be noted that these two methods are the two lowest-performing baselines when evaluated on Clothing1M. As for mini WebVision 1.0, we outperformed the second best method (DivideMix) by a significant margin of \(0.71\%\) for top-1 accuracy (\(78.99\%\) versus \(78.28\%\)), and a margin of \(0.16\%\) for top-5 accuracy (\(92.54\%\) versus \(92.38\%\)).

5.4 Ablation Study

In Table 6, we evaluate the performances of GenKL on the Clothing1M dataset when individual components are removed.

Averaging prediction vectors. When the set Q of prediction vectors was obtained from one single model (instead of being obtained by averaging from multiple models), we had a test accuracy of \(81.31\%\), which is a minor drop of \(0.03\%\) from the complete GenKL framework.

Stratified sampling. Our test accuracy dropped by \(0.09\%\) when vanilla random sampling was used in place of stratified sampling. This shows that our framework is not too affected by the imbalance between clean and non-clean (noisy and NC) instances.

Double-hot vector normalization. Recall that we used double-hot vectors for our iterative relabeling process in GenKL. By definition, these double-hot vectors are not stochastic vectors. We chose not to normalize these double-hot vectors because we could achieve higher test accuracies; cf. Section 3.6. For this part of our ablation study, we evaluated the effect of normalizing the double-hot vectors \({\bar{q}}^i\). By (4), the new j-th entry after normalization is \(\frac{{\bar{q}}^{i}_{j}}{\lambda + \lambda '}\). With this normalization, the resulting average test accuracy is \(81.18\%\), which is a slight drop of \(0.16\%\) when compared to the original GenKL framework without normalization. In particular, this shows that the updated label vectors (via our relabeling process) need not be stochastic to achieve better performance.

Iterative training. Recall that GenKL is an iterative framework.Footnote 9 To understand the effect of iterations, we report in Table 6 the test accuracy when the training stops after the first iteration. Among all the components evaluated, the removal of this component has the most significant impact, yielding a \(0.40\%\) drop in test accuracy.

5.5 Sensitivity Analysis

Recall from Sect. 3.5 that our GenKL framework has seven hyperparameters \(\alpha , \beta , \vert P \vert \), \(\sigma \), \(\omega _1\), \(\omega _2\) and \(\omega _3\). The first two hyperparameters (\(\alpha \) and \(\beta \)) come from our \((\alpha , \beta )\)-generalized KL divergence, \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\). The next two hyperparameters (\(\vert P \vert \) and \(\sigma \)) are used in stage one (identification of NC instances) of our GenKL framework to generate a set P of uniform-like vectors with associated standard deviation \(\sigma \). The last three hyperparameters (\(\omega _1\), \(\omega _2\) and \(\omega _3\)) are the weight factors for the respective three loss terms, (5), (6) and (7), in our loss function (8).

In Tables 7, 8, 9, 10, 11 and 12, we analyze the sensitivity of the performance of GenKL on the Clothing1M dataset, with respect to the values of the hyperparameters \(\alpha , \beta , \vert P \vert , \sigma \), \(\omega _2\), as well as with respect to the choice of the loss function for training. In particular, for our GenKL experiments on Clothing1M, we fixed \(\omega _1 = 1\) and \(\omega _3 = 1\) for simplicity. Hence, for our sensitivity analysis, we focused on \(\omega _2\), which is the weight factor of the loss term (6) computed on non-NC instances.

Sensitivity of hyperparameter \(\alpha \). Recall that \(\alpha \) is the weight for the negative entropy term \(- \alpha H(p)\) in our \((\alpha , \beta )\)-generalized KL divergence \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\); see (2). An instance is identified as an NC instance if \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q) \ge 0\). Hence, as the value of \(\alpha \) increases (note that \(\alpha > 0\)), the number of identified NC instances would decrease. We used \(\alpha =1.05\) in our GenKL experiments on the Clothing1M dataset. To see the effect of the value of \(\alpha \), we also report the performance of GenKL for \(\alpha = 0.90\) and \(\alpha =1.20\), while keeping other hyperparameter values the same; see Table 7. Our analysis demonstrates that the performance of GenKL is sensitive to the value of \(\alpha \) (and hence the number of identified NC instances), where a deviation of \(\pm 0.15\) in the value of \(\alpha \) (from the optimal value \(\alpha = 1.05\)) resulted in an approximate \(0.15\%\) drop in accuracy.

Sensitivity of hyperparameter \(\beta \). Recall that \(\beta \) appears in the positive term \(H(^{\beta }{p}{},q)\) in our \((\alpha , \beta )\)-generalized KL divergence \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\). The larger the value of \(\beta \), the larger this positive term \(H(^{\beta }{p}{},q)\) will be, and hence, more NC instances would be identified. Intuitively, \(\beta \) controls the threshold for when an entry of the prediction vector is considered dominant, where if \(\beta \) is too large, then almost all entries are considered dominant. We used \(\beta =0.03\) in our GenKL experiments on the Clothing1M dataset. Since Clothing1M has \(k=14\) classes, it means that our value \(\beta =0.03\) is slightly less than half of \(\frac{1}{k} \approx 0.07143\). To see the effect of the value of \(\beta \), we also report the performance of GenKL for \(\beta = 0.02\) and \(\beta =0.04\), while keeping other hyperparameter values the same; see Table 8. Our analysis demonstrates that the performance of GenKL is sensitive to the value of \(\beta \) (and hence the number of identified NC instances), where a deviation of \(\pm 0.01\) in the value of \(\beta \) (from the optimal value \(\beta = 0.03\)) resulted in an approximate \(0.2\%\) drop in accuracy.

Sensitivity of hyperparameter \(\vert P \vert \). Recall that P is the set of uniform-like vectors, such that each \(p \in P\) is used to compute \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) for the identification of NC instances. As we vary p over all vectors in P, we take the union of all identified NC instances. Hence, as the value of \(\vert P \vert \) increases (note that \(\vert P \vert \ge 1\)), the number of identified NC instances is expected to increase. We used \(\vert P \vert =20\) in our GenKL experiments on the Clothing1M dataset. To see the effect of the value of \(\vert P \vert \), we also report the performance of GenKL for \(\vert P \vert = 1\) and \(\vert P \vert =10\), while keeping other hyperparameter values the same; see Table 10.

Sensitivity of hyperparameter \(\sigma \). Recall that \(\sigma \) is the standard deviation of the normal distribution \({\mathcal {N}}(\frac{1}{k},\,\sigma ^{2})\), used for sampling the value of each entry in a uniform-like vector \({p} \in P\) (before normalization). Hence, for a sufficiently large set P, as the value of \(\sigma \) increases (note that \(\sigma > 0\)), the number of identified NC instances would tend to increase. We used \(\sigma =0.05\) in our GenKL experiments on the Clothing1M dataset. To see the effect of the value of \(\sigma \), we also report the performance of GenKL for \(\sigma = 0.01\) and \(\sigma =0.1\), while keeping other hyperparameter values the same; see Table 11. Our analysis demonstrates that the performance of GenKL is sensitive to the value of \(\sigma \) (and hence the number of identified NC instances), where the value \(\sigma =0.1\) may be a little too large, resulting in a slight \(0.07\%\) drop in accuracy (as compared to \(\sigma =0.05\)).

Sensitivity of hyperparameter \(\omega _2\). Recall that \(\omega _2\) is the weight for the loss term \({\mathcal {L}}_{{{\,\mathrm{non-NC}\,}}}\) in (8). As the value of \(\omega _2\) increases, the contribution of the non-NC instances to the overall loss would increase. We used \(\omega _2 =32\) in our GenKL experiments on the Clothing1M dataset. To see the effect of the value of \(\omega _2\), we also report the performance of GenKL for \(\omega _2 = 1\) and \(\omega _2=64\), while keeping other hyperparameter values the same; see Table 12. Intuitively, when \(\omega _2\) is sufficiently large (e.g. in the range of \(\omega _2=32\) to \(\omega _2=64\)), the contribution of \({\mathcal {L}}_{{{\,\mathrm{non-NC}\,}}}\) to the overall loss has a regularization effect, thereby improving overall accuracy.

Sensitivity of the choice of loss function. Recall that we used cross-entropy loss as our loss function; see (5), (6), (7) and (8); cf. Section 3.6. To see the effect of the choice of loss function, we also report the performance of GenKL when the loss function is replaced by MSE, mean absolute error (MAE) and KL loss, respectively, while keeping all hyperparameter values the same; see Table 9. Our analysis demonstrates that our choice of cross-entropy loss is crucial for the outperformance of GenKL over the baselines.

6 Conclusion

We introduced the notion of non-conforming (NC) instances, which encompasses both ambiguous ID and OOD instances. Although there are numerous methods that tackle the problem of OOD instances, we are not aware of any method that explicitly tackles the problem of ambiguous ID instances, which are prevalent in web image datasets curated online. To tackle NC instances in a unified manner, we proposed a new generalized KL divergence, \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\), and an iterative training framework GenKL built upon this new generalized KL divergence. Moreover, we proved theoretical properties of \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\). The key advantage of using \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) is that we can effectively identify more NC instances, including those whose predictions are not “almost” uniformly distributed, for which the usual approaches of entropy maximization and KL divergence minimization are unable to identify. We showed empirically that using \({\mathcal {D}}_{{{\,\textrm{KL}\,}}}^{\alpha , \beta }(p \Vert q)\) yields the best performance for NC instance identification. For our GenKL framework, we outperformed SOTA methods on real-world web image datasets: Clothing1M, Food101/Food101N and mini WebVision 1.0.

NC instances are unavoidable in web image datasets. Since the identification of NC instances is clearly a prerequisite step for tackling NC instances, we expect future work to further build upon the effectiveness of our new generalized KL divergence for NC instance identification.

Data Availability

In the interest of reproducibility, we have made our code available at https://github.com/codetopaper/GenKL. All experiments are conducted on publicly available datasets; see the references cited. For our experiments on the identification of NC instances, the list of the (manually verified) 200 NC instances in the Clothing1M dataset is also available at the same link.

Notes

This is a mild assumption, since softmax output layers are frequently used.

Normalized entropy refers to entropy divided by the maximum possible entropy (\(\log k\)). Here, \(\log 5\) is the entropy of x, and \(\log 10\) is its maximum possible entropy.

In particular, methods that deal with label noise typically have the implicit assumption that the given label for any instance must, with full certainty, be either correct or incorrect. Such an assumption omits the possibility that an instance could be ambiguous, whereby it is not clear what a single “correct” label would be, especially when there are multiple possible labels that could potentially be deemed “correct”. (See Sect. 3.1 for a rigorous definition of what “ambiguous instance” means precisely.) Consequently, under such an assumption, where ambiguous ID instances are assumed to not exist, the remaining NC instances (i.e. OOD instances), together with the unambiguous ID instances with incorrect labels, are then treated by these methods in a general manner as instances with label noise.

A more sophisticated definition for “main object of interest” is certainly possible. However, when defining NC instances, we only need the notion of “main object of interest” to be well-defined. Whichever semantics we choose to impose on this notion, our definition of NC instances would still make sense. Hence, we shall use our simplified definition for “main object of interest”.

Note that \(y'\) can be different from y. In particular, an instance with an incorrect given label may not be an NC instance; see Fig. 3 for a visualization.

We define \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\) to be the dataset that the initial model \({\mathcal {F}}(\cdot \mid \Theta ^{0})\) in the first iteration is pretrained on. Note that \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\) could be \({\mathcal {D}}_{{{\,\textrm{clean}\,}}}\), or \({\mathcal {D}}_{{{\,\textrm{main}\,}}} \cup {\mathcal {D}}_{{{\,\textrm{clean}\,}}}\). See Sect. 5.3 for more implementation details.

We used the PyTorch implementation of ResNet-50 with no pre-trained weights; see https://pytorch.org/vision/0.8/_modules/torchvision/models/resnet.html for default settings.

For Clothing1M, \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\) is the 50k clean training set. For Food101/Food101N, we used the entire training set (with both noisy and clean instances) as our \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\). For mini WebVision 1.0, we similarly used the entire training set as our \({\mathcal {D}}_{{{\,\textrm{pre}\,}}}\).

References

Albert, P., Ortego, D., Arazo, E., O’Connor, N.E., & McGuinness, K. (2022) Addressing out-of-distribution label noise in Webly–Labelled data. In Proceedings of the IEEE/CVF winter conference on applications of computer vision (pp. 392–401).

Arpit, D., Jastrzębski, S., Ballas, N., Krueger, D., Bengio, E., Kanwal, M.S., Maharaj, T., Fischer, A., Courville, A., & Bengio, Y. (2017). A closer look at memorization in deep networks. In International conference on machine learning (pp. 233–242). PMLR.

Bossard, L., Guillaumin, M., & Gool, L.V. (2014). Food-101–mining discriminative components with random forests. In European conference on computer vision (pp. 446–461). Springer.

Bossard, L., Guillaumin, M., & Van Gool, L. (2014) Food-101—mining discriminative components with random forests. In European conference on computer vision

Chan, R., Rottmann, M., & Gottschalk, H. (2021). Entropy maximization and meta classification for out-of-distribution detection in semantic segmentation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 5128–5137).

Feuerman, M., & Miller, A. (2005). The kappa statistic as a function of sensitivity and specificity. International Journal of Mathematical Education in Science and Technology, 36(5), 517–527.

Ghosh, A., Kumar, H., & Sastry, P. (2017) Robust loss functions under label noise for deep neural networks. In Proceedings of the AAAI conference on artificial intelligence (vol. 31).

Goldberger, J., & Ben-Reuven, E. (2016) Training deep neural-networks using a noise adaptation layer

Guo, S., Huang, W., Zhang, H., Zhuang, C., Dong, D., Scott, M.R., & Huang, D. (2018) Curriculumnet: Weakly supervised learning from large-scale web images. In Proceedings of the European conference on computer vision (ECCV) (pp. 135–150).

Han, J., Luo, P., & Wang, X. (2019) Deep self-learning from noisy labels. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 5138–5147).

Han, B., Yao, Q., Liu, T., Niu, G., Tsang, I.W., Kwok, J.T., & Sugiyama, M. (2020) A survey of label-noise representation learning: Past, present and future. arXiv preprint arXiv:2011.04406

Han, B., Yao, J., Niu, G., Zhou, M., Tsang, I., Zhang, Y., & Sugiyama, M. (2018) Masking: A new perspective of noisy supervision. Advances in Neural Information Processing Systems 31

He, K., Zhang, X., Ren, S., & Sun, J. (2016) Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778).

Helmstetter, S., & Paulheim, H. (2021). Collecting a large scale dataset for classifying fake news tweets using weak supervision. Future Internet, 13(5), 114.

Hendrycks, D., Mazeika, M., Wilson, D., & Gimpel, K. (2018) Using trusted data to train deep networks on labels corrupted by severe noise. Advances in Neural Information Processing Systems 31

Ioffe, S., & Szegedy, C. (2015) Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning (pp. 448–456). PMLR.

Jeffreys, H. (1998). The theory of probability. Oxford: OUP Oxford.

Jenni, S., & Favaro, P. (2018) Deep bilevel learning. In Proceedings of the European conference on computer vision (ECCV), pp. 618–633

Jiang, L., Zhou, Z., Leung, T., Li, L.-J., & Fei-Fei, L. (2018) Mentornet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In International conference on machine learning (pp. 2304–2313). PMLR

Kaur, P., Sikka, K., & Divakaran, A. (2017). Combining weakly and webly supervised learning for classifying food images. arXiv preprint arXiv:1712.08730

Kirsch, A., Mukhoti, J., van Amersfoort, J., Torr, P. H. S., & Gal, Y. (2021). On Pitfalls in OoD Detection: Entropy Considered Harmful. ICML: Uncertainty Robustness in Deep Learning Workshop.

Kittler, J., & Zor, C. (2018). Delta divergence: A novel decision cognizant measure of classifier incongruence. IEEE Transactions on Cybernetics, 49(6), 2331–2343.

Krogh, A., & Hertz, J. (1991) A simple weight decay can improve generalization. Advances in Neural Information Processing Systems 4

Lee, K.-H., He, X., Zhang, L., & Yang, L. (2018). Cleannet: Transfer learning for scalable image classifier training with label noise. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5447–5456).

Li, J., Socher, R., & Hoi, S.C.(2020). Dividemix: Learning with noisy labels as semi-supervised learning. arXiv preprint arXiv:2002.07394.

Li, W., Wang, L., Li, W., Agustsson, E., & Gool, L.V. (2017) Webvision database: Visual learning and understanding from web data. CoRR

Li, J., Xiong, C., & Hoi, S.C.H. (2021) Mopro: Webly supervised learning with momentum prototypes. ICLR

Liang, C., Yu, Y., Jiang, H., Er, S., Wang, R., Zhao, T., & Zhang, C. (2020) Bond: Bert-assisted open-domain named entity recognition with distant supervision. In Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery & data mining (pp. 1054–1064).

Lin, J. (1991). Divergence measures based on the Shannon entropy. IEEE Transactions on Information Theory, 37(1), 145–151.

Li, J., Song, Y., Zhu, J., Cheng, L., Su, Y., Ye, L., Yuan, P., & Han, S. (2019). Learning from large-scale noisy web data with ubiquitous reweighting for image classification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(5), 1808–1814.

Liu, L., Ouyang, W., Wang, X., Fieguth, P., Chen, J., Liu, X., & Pietikäinen, M. (2020). Deep learning for generic object detection: A survey. International Journal of Computer Vision, 128(2), 261–318.

Lyu, Y., & Tsang, I.W. (2019) Curriculum loss: Robust learning and generalization against label corruption. arXiv preprint arXiv:1905.10045

Ma, X., Huang, H., Wang, Y., Romano, S., Erfani, S., Bailey, J. (2020) Normalized loss functions for deep learning with noisy labels. In International conference on machine learning (pp. 6543–6553). PMLR

Maaz, M., Rasheed, H., Khan, S., Khan, F.S., Anwer, R.M., & Yang, M.-H. (2022). Class-agnostic object detection with multi-modal transformer. In European conference on computer vision (pp. 512–531). Springer.

Macêdo, D., & Ludermir, T. (2021) Enhanced isotropy maximization loss: Seamless and high-performance out-of-distribution detection simply replacing the softmax loss. arXiv preprint arXiv:2105.14399

Macêdo, D., Ren, T.I., Zanchettin, C., Oliveira, A.L.I., & Ludermir, T. (2021) Entropic out-of-distribution detection. In 2021 International Joint Conference on Neural Networks (IJCNN) (pp. 1–8). https://doi.org/10.1109/IJCNN52387.2021.9533899.