Abstract

Online reviews of products have a significant impact on consumers' purchasing decisions, making it important for both platform retailers and consumers to rank products, and eventually purchase products. With respect to the problem of product ranking that consists of the information contained in online reviews; by considering the sentiment intensity distribution of online reviews, we establish a fine-grained sentiment intensity analysis and then exploit grey incidence analysis and TOPSIS to establish a multi-attribute approach for product ranking. Finally, a case study of laptop purchases verifies the applicability and effectiveness of the proposed approach.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The rapid development of the Internet has fundamentally reshaped various industries, with e-commerce emerging as one of the most transformative sectors. E-commerce, often referred to as electronic commerce, involves the buying and selling of goods and services over digital platforms. It has provided both consumers and businesses with unprecedented levels of convenience and accessibility. Among the most prominent e-commerce models is the business-to-consumer (B2C) model, which facilitates direct transactions between businesses and individual consumers. This model has become integral to the daily lives of millions worldwide, particularly in China, where platforms such as Taobao, Jingdong, and Jindo dominate the online retail landscape. These platforms not only offer vast marketplaces for product purchases but also serve as spaces for consumers to share feedback through online reviews.

With the surge in online shopping, an increasing number of consumers base their purchasing decisions on the information available on these platforms. Reviews have become a critical part of this decision-making process, as consumers often rely on the experiences and opinions of others to assess product quality, performance, and value [7]. These online reviews, which range from brief comments to detailed evaluations, now play a crucial role in shaping consumer behavior and preferences. Additionally, they provide retailers and manufacturers with invaluable feedback, offering insights into customer satisfaction, product shortcomings, and areas for improvement [21]. Businesses that can harness these insights are better positioned to refine their offerings, ultimately enhancing customer satisfaction and driving higher sales [3, 10].

However, the exponential growth of e-commerce platforms has led to an overwhelming volume of customer feedback. Consumers are now confronted with vast amounts of reviews that they must sift through to extract relevant and useful information. This deluge of data poses significant challenges, as discerning valuable insights from the sheer volume of content can be both difficult and time-consuming [11]. Traditional methods, which often rely on predefined weights to evaluate products based on specific attributes, are increasingly inadequate. These methods fail to account for the diversity of consumer preferences, which vary significantly across different demographic groups. As a result, both consumers and retailers require more sophisticated tools to effectively navigate and analyze review data.

In response to these challenges, text mining techniques have emerged as a powerful solution for processing and analyzing large-scale review data. These techniques enable the extraction of meaningful patterns and insights from unstructured textual information, offering a more nuanced understanding of customer needs and preferences. By leveraging these methods, businesses can streamline decision-making processes and enhance their ability to deliver personalized, data-driven solutions that cater to a broad range of consumer demands.

Given this context, this paper proposes an innovative method that utilizes text mining techniques to incorporate the distribution of sentiment intensity in online reviews for product ranking. The proposed framework integrates sentiment analysis, feature extraction, grey relational analysis, and the TOPSIS method to facilitate multi-attribute product rankings, which is validated through a case study. The findings demonstrate that this approach efficiently processes and analyzes large volumes of review data, producing scientifically robust and credible product rankings. Unlike traditional methodologies, this approach does not require consumers to predefine attribute weights, reducing the complexity of user involvement while maintaining objectivity and accuracy in the rankings. This paper's approach shows broad applicability and practical significance, offering potential for widespread implementation across diverse product domains.

The rest of this paper is organized as follows. Section 2 provides a review of the relevant literature on methods of product ranking through online reviews. Section 3 constructs our approach to product ranking based on the sentiment intensity distribution of online reviews. Section 4 gives a realistic application of the proposed method and generates some discussion. Finally, the conclusion and some future research directions are given in Sect. 5.

2 Related Literature

Being important user-produced content, online reviews are of great significance to potential consumers, relevant enterprises, and platform retailers. To obtain valuable product information from online reviews for ranking products, an increasing number of scholars have devoted their time and energy to theoretical and practical discussions and analyses. According to the existing literature on product ranking based on online reviews, we divide the literature into two aspects: processing review information and the construction of product ranking methods.

2.1 Processing Review Information Methods

Processing online review data can help acquire information about products or services. The existing literature mainly study the product features and the consumer sentiment intensity by using different methods of data processing. Liu et al. [13] constructed a method for automatically extracting product features from user online reviews to improve the accuracy of product feature representation. Ji et al. [8] developed a method for review mining and demand acquisition based on the hierarchical nature of product attributes. Zheng et al. [28] produced a method for calculating sentiment intensity based on fuzzy statistics of sentiment words, and the results showed that both negation words and degree adverbs affect sentiment intensity. Li et al. [11] proposed an IWOM sentiment intensity measurement model based on the PAD three-dimensional sentiment model by using the ACSI model to divide the IWOM sentiment measurement model into four dimensions, and through mapping the sentiment in review feature words to the PAD sentiment space and calculate sentiment intensity to achieve consumer sentiment refinement. Meanwhile, in terms of the sentiment analysis of product features, the consumers’ sentiment orientation towards products is limited to "positive", "medium" and "negative”, which cannot represent consumers’ real sentiment [14, 15].

These studies provide a good exploration and mining of the emotional information contained in online reviews. However, the suggested processing of data inevitably leads to other problems, such as loss of original information. In most of the previous studies, the online reviews with neutral sentiment orientations are ignored. It is worth noting that these neutral emotional messages that are ignored often contain consumer hesitations about evaluating the product. In addition, all reviews have their specific published contexts, and the method for analyzing product features should be adapted to the specific evaluation subjects to improve the accuracy of the sentiment analysis. For example, in the sentiment analysis of the review statement, ''It's hot to use'', this is a negative review when the evaluation subject of the review is a mobile phone, and it is a positive review when the evaluation subject is a heater.

2.2 Construction of Product Ranking Methods

To empower consumers with convenient access to high-quality products and services, this study integrates state-of-the-art sentiment analysis techniques from the field of natural language processing with established multi-attribute decision-making (MADM) theories. By combining different methods, such as TOPSIS [14, 25], VIKOR [9], PROMETHEE II [14, 18], scholars constructed many novel and valuable methods for product ranking. By considering that the product feature values take on different expressional forms and consist of some incomplete and inaccurate information, some scholars have converted the information from online reviews into grey numbers [16], fuzzy numbers [18], probabilistic linguistic term sets [Liu and Teng 2019, 12] and intuitive fuzzy numbers [14, 15]. They consequently proposed various methods for ranking products. However, these methods inevitably result in a loss of sentiment information during the process of data conversion.

Among such studies, Chen et al. [2] established a grey 2-tuple linguistic model that combines online reviews with ratings for potential travel consumers to compare different travel services. However, the greyness for each online review was given by experts which limits the further development of this method. By considering that reviews collectively show a form of probability distribution, You et al. [26] used the criterion of foreground random dominance to construct a relationship matrix of foreground random dominance for the comparison of two alternative goods based on the information of online reviews and consumer expectations. They used the PROMETHEE-II method to rank alternative goods. By considering the temporal dynamics of online reviews, Wang et al. [22] designed a method for effective time-aware review ranking, providing consumers with a compact review from a combined perspective of consistency and time-awareness in light of product features and sentiment orientations. To well represent consumers’ sentimental tendencies contained in online reviews, Bi et al. [1] proposed a multi-granularity algorithm for sentiment intensity analysis to determine the sentiment intensity values of product attributes,they then used the method of random approximation ideal point ranking to determine the ranking of alternative products. Further, Shan et al. [19] used sentiment analysis of features to supplement users' preference opinions on products, and a collaborative filtering algorithm as the framework to build a hybrid recommendation algorithm based on user preferences and product features. Zhang and You [27] transformed the information from online reviews on each attribute of a product into a probability distribution, constructed a weighted distribution function for each attribute of the commodity, and combined it with TOPSIS to calculate the closeness of the integrated positive and negative ideal goods to rank alternative products. Wu et al. [23] proposed a novel group consensus-based travel destination evaluation method with online reviews to deal with the increasing tourism products and group tourists. Dash et al. [4] introduced a new Product feature-based Personalized Review Ranking, which aims to predict the usefulness of individual consumers' online reviews based on their product feature preferences through a potential category regression model, and to derive consumer categories using similarities among consumers. Derakhshan et al. [6] developed a two-stage sequential search model in which, in the first stage, the consumer sequentially filters the positions to observe the preference weights of the products placed in them and forms a consideration set. In the second stage, consumers look at the additional idiosyncratic utility available from each product and choose the product with the highest utility in their consideration set. Wu and Liao [24] proposed a product ranking method based on probabilistic language preference relations to solve the management problem of consumer preference uncertainty in e-commerce platforms. Through the interactive process to capture consumer preferences on product attributes, and designed based on language terminology and probability interface to express preferences, in order to be more close to consumers' expression habits. In order to solve the possible deceptive problems of rankings in online review platforms, such as fake reviews or ratings that are inconsistent with reality, Novas et al. [17] proposed a ranking model based on user-generated content and fuzzy logic. The proposed ranking model extracts topics from qualitative data (review text) and converts them into quantitative values through fuzzy logic. At the same time, the items are pairwise compared to extract a ranking ladder.

According to the above discussion and analysis, the existing research cannot represent consumers’ real sentiments by portraying the granularity of sentiment analysis and accurately extracting consumers’ emotional orientation and product features. And they cannot reduce sentiment information loss during the data conversion process. In the process of quantifying review information, online reviews that contain neutral sentiment are often ignored, resulting in the loss of sentiment information. To deal with these shortcomings, by considering the sentiment intensity distribution of online reviews, this paper proposes a method for fine-grained sentiment intensity analysis and establishes an approach for product ranking based on sentiment intensity distribution.

3 An Approach for Product Ranking Based on the Sentiment Intensity Distribution of Online Reviews

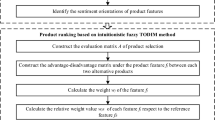

In an online platform, there exists a large amount of homogeneous product information and online reviews. Therefore, consumers must spend a lot of time and energy to conduct evaluations, comparisons, and selections of the most suitable products. In the process of shopping, consumers often look at their favorite products and relevant online reviews before making purchases [7]. However, these reviews are often voluminous and varied, making it difficult for individual consumers to go through and then select the right products [20], [21]. To describe and solve the problem of product ranking, in this section, we will construct a multi-attribute approach for product ranking based on the extraction of product features and sentiment analysis. The underlying decision process is shown in Fig. 1, where there are mainly four parts: online comment data acquisition and preprocessing, product feature extraction, sentiment analysis of the intensity distribution for online reviews, and product ranking based on grey incidence analysis and TOPSIS. The process is detailed below.

3.1 Description of the Problem of Product Ranking Based on Online Reviews

Online shopping includes a set of decision makers or buyers, alternative products and online reviews. These online reviews generally cover information about the products’ attribute, features and consumer's preferences. In fact, the problem of product ranking invade the alternative products and the number of online reviews with multi-attribute information. According to the above analysis and the elements of multi-attribute decision-making, to describe this product ranking decision problem with online reviews, some symbols and variables are given in this section shown in Table 1.

In a ranking decision problem with online reviews, assume that there exists a set of alternative products, a number of online reviews on the alternative products, and a collection of comments on some of the alternative products, which are denoted as \(A = \left\{ {A_{1} ,...,A_{i} ,...,A_{n} } \right\},B = \left\{ {b_{1} ,...,b_{i} ,....,b_{n} } \right\},R_{i} = \left\{ {R_{i1} ,....,R_{ik} ,...,R_{{ib_{i} }} } \right\}\), respectively, where,\(A_{i} ,b_{i} ,R_{ik}\) indicate the \(i{\text{th}}\) alternative product that consumers are interested in, number of comments on the alternative product \(A_{i}\), \(k{\text{th}}\) comment on the alternative product \(A_{i}\), \(i = 1,2, \cdots ,n,k = 1,2, \cdots ,b_{i}\). To extract emotional information about the attributes of the alternative products from comments, it is necessary to preprocess feature extraction. Assume that we can obtain preprocessed comment words, feature words, and feature word classes (which can be considered as the attributes of a product). Assume that \({\text{WD}}_{ik} = \left\{ {{\text{WD}}_{ik}^{1} ,...,{\text{WD}}_{ik}^{t} ,...,{\text{WD}}_{ik}^{{q_{ik} }} } \right\},W = \left\{ {W_{1} ,...,W_{l} ,...,W_{q} } \right\},\overline{W} = \left\{ {\overline{{W_{1} }} ,...,\overline{{W_{j} }} ,...,\overline{{W_{s} }} } \right\}\) express the set of the preprocessed comment words, feature words extracted from all alternative product reviews, and feature word classes formed by classifying the feature words, respectively. Here, \({\text{WD}}_{ik}^{t} ,W_{l} ,\overline{{W_{j} }} t = 1,2, \cdots ,q_{ik}\) denote \(t{\text{th}}\) word in preprocessed comment word set \(\text{WD}_{ik}\) of comment \(R_{ik}\),\(l{\text{th}}\) feature words extracted from all alternative product reviews, \(j{\text{th}}\) class of feature words, and the number of preprocessed comment word contained in the comment \(R_{ik}\), respectively. Assume that \(n_{j}\) stands for the total number of feature words contained in \(\overline{{W_{j} }}\), satisfying \(\sum\nolimits_{j = 1}^{s} {n_{j} } = n,j = 1,2 \cdots s\).

3.2 Acquisition and Preprocessing of Online-Review Data

To solve the problem of product ranking, it is necessary to first obtain and preprocess online-review information related to the alternative products. In this section, we will use Octopus software to crawl available comment information and preprocess the underlying online review information.

1. Selection of alternative products

Product selection on any platform is based on the platform's internal data, as the products you choose still selling on the platform. For example, the internal ranking list provided by Amazon platform is the most informative for product selection. That is what sellers often refer to as the Best Seller New Release, Movers & Shakers, Most Wished For, Gift Ideas. The data from the New Release and Movers & Shakers sectors are more informative.

2. Data crawling

Crawling the online reviews of the alternative products of a chosen class is the basis of obtaining information on product features and consumers’ preferences. In this paper, we use crawler software (bazhuayu.com) to crawl review information from shopping websites and obtain alternative products’ online-review data. We crawled over 10,000 comments related to alternative products, each containing multiple key fields including: comment link, page number, comment nickname, comment ID, comment homepage link, comment time, comment IP location, comment likes, comment level, and comment content. Among them, the comment levels include: root comment, second level comment, and second level expanded comment.

3. Data preprocessing

After having acquired many reviews, we preprocess the obtained comments. This step consists of four main parts is shown in the following.

①Noise reduction: In this process, some data, such as advertising information, copyright information, duplicate data, etc., are not relevant to the subject of study and need to be dealt with. Reducing noise out of the acquired online data, i.e. the removal of some useless and duplicated data is conducive to improving the efficiency and accuracy of the following data analysis.

②Word separation and part-of-speech tagging: Online reviews that are concerned with alternative products are fed into the ICTCLAS (a Chinese word separation system), and are separated into words and part-of-speech tagging.

③Stop word removal: Stop words are those words that appear frequently in Chinese sentences but have no real meaning. In order to improve the efficiency of sentiment analysis and reduce the dimensionality of the consequently developed feature vector, these stop words must be removed from the online-review information. These stop words are compared to those contained within a list of commonly used stop words to improve analysis efficiency and then removed from online comments after word separation and part-of-speech tagging.

④Feature word processing: After having removed stop words, all the remaining words in the acquired online reviews are sorted by word frequency counts; and low-frequency words and non-featured high-frequency words are eliminated from the rest of the analysis. Dictionary-based approaches deal with low-frequency words by constructing a special dictionary detailing each word in the language and its frequency of occurrence. High-frequency extraction is actually the term frequency (TF) strategy in natural language processing, which has the following two main interference items: (1) label symbols, which generally have no practical value (2) deactivated words: such as “is”, 'ha ' and other meaningless words.

After these four steps, as outlined above, we can obtain the set of preprocessed comment words \({\text{WD}}_{ik}\) for \(R_{ik}\). Further, we can obtain the set of comment words for all reviews.

3.3 Extraction of Product Features

After preprocessing all the acquired online reviews, the features or attributes of the alternative products will be extracted. There exist two main types of methods to extract feature words, which are algorithms developed to extract unsupervised and supervised feature words. The methods developed for unsupervised feature word extraction are mainly divided into three techniques based on statistical features (TF, TF-IDF), word graph models (PageRank, TextRank), and topic models (LDA), respectively. They help reduce a lot of time cost and enhance the accuracy and recall of the extraction by continuously improving the employed unsupervised algorithm. However, there exist some shortcomings. For example, these algorithms based on a lone keyword cannot achieve the desired effect of feature word extraction. To overcome such problems while still using the algorithms, in this section, we will propose an improved TF-IDF algorithm to extract product features. The improved algorithm includes two steps, feature word extraction, and feature word screening.

1. Feature word extraction

According to the information obtained from the preprocessed online reviews, we will analyze the contained words in these reviews. Relevant terms related to feature word extraction are explained as follows.

Word frequency: It expresses the number of times a word appears in a comment. The higher frequency a word appears, the more often the word is mentioned. The more reviews contain the word, the more attention people pay to the feature. For example, if a product is overpriced, the phrase "price is too high" will appear frequently in reviews, and the price will be mentioned frequently. Assume that \(A*\) represents one word. The occurrence of the word \(A*\) is represented by \(N_{A}\), where \(\left\{ {N_{{A{*}}} = \sum\nolimits_{r = 1}^{N} {A_{r} } ,A_{r} \in A{*}} \right\}\), \(A_{r}\) is the \(r{\text{th}}\) occurrence of the word \(A*,r = 1,2,...N\).

Term frequency: It represents the rate of one particular feature term among all feature terms, denoted as \({\text{TF}}_{A*}\). The higher the rate of a word’s frequency, the more important the feature word is among all identified feature terms, and the more likely it is to become the word that describes a product’s feature. Assume that a review is divided into a set of words \(\left\{ {A_{1} ,A_{2} , \cdots ,A_{N} } \right\}\). If there are \(M\) comments, then the set of these words can form an \(N \times M\) matrix. Let \(V\) stand for the set of all identified feature words, where \(V = \sum\nolimits_{r = 1}^{N} {\sum\nolimits_{p = 1}^{M} {B_{rp} } }\), and \(B_{rp}\) the word in the \(i{\text{th}}\) row and \(j{\text{th}}\) column. The total word frequency of \(A{*}\) can be represented by \(N^{\prime}_{A*}\), where \(\left\{ {N^{\prime}_{A*} = \sum\nolimits_{r = 1}^{N} {\sum\nolimits_{p = 1}^{M} {A_{rp} ,A_{rp} \in A{*}} } } \right\}\). Then, the formula for computing the rate of word frequency can be written as follows:

Inverse document frequency (IDF): It expresses the frequency of a document in which a word or phrase is found in the corpus as a whole and it is denoted as \({\text{IDF}}_{{A{*}}}\). The basic idea is that if the number of comments containing the word \(A{*}\) is smaller, i.e. \(N_{{A{*}}}\) is smaller, then \({\text{IDF}}_{{A{*}}}\) is larger. Let \(N{*}\) represent the total number of online reviews, and then, the expression of IDF can be written as follows:

Term frequency-inverse document frequency (TF-IDF): Word frequency-inverse document frequency is an index that measures feature words by combining word frequency and inverse document frequency. It is denoted as \({\text{TF - IDF}}\) and represents one of the most effective and commonly used feature extraction algorithms [9]. The formula of TF-IDF is given as follows:

2. Feature word screening

In the feature word extraction session, TF-IDF is calculated and sorted in order from largest to smallest to obtain the feature words that are related to the features of a product. As the extracted feature words vary greatly in semantic granularity and have a high feature word dimension, they must be filtered, otherwise, they will cause great computational trouble and increase the computational difficulty for the subsequent selection and analysis of product features.

In our paper, the product feature words are categorized with the help of the HIT synonym word forest (HIT IR-Lab Tongyici Cilin), which is a “Harbin Institute of Technology University Information Retrieval Research Center Synonym Thesaurus Expansion” with a large Chinese word list, which is formed by the Harbin Institute of Technology Information Retrieval Research Laboratory based on the frequency of the occurrence of words in the People's Daily Corpus by expanding the words and improving the structure and coding of the thesaurus. We first determine the standard term \(W_{j}^{*}\) for product attribute \(j\) by the product description displayed on the webpage. Afterward, the similarity between the top 100 nouns in TF-IDF value and the standard term \(W_{j}^{*}\) was calculated respectively according to the method proposed by Bi et al. (2016). If the similarity is greater or equal to 0.5, the feature word \(W_{j}\) and standard term \(W_{j}^{*}\) are classified into the same category. If the similarity is less than 0.5, the feature word \(W_{j}\) and standard term \(W_{j}^{*}\) are not the same class. After this process, we can obtain \(\overline{{W_{j} }}\), the set of feature word classes after classifying the feature words.

3.4 Sentimental Analysis of the Intensity Distribution of Online Reviews

Sentimental analysis is a good way to studying sentiments information in the online reviews. For different sentiment words on different features of a product, we build a respective sentiment dictionary of alternative product. And it improve the analysis accuracy of the sentiment intensity of the product’s features.

Let \({\text{WS}}^{i} = \left\{ {{\text{WS}}^{i}_{1} ,{\text{WS}}^{i}_{2} ,...{\text{WS}}^{i}_{{q_{i} }} } \right\}\) denote the set of sentiment words extracted from online reviews on the alternative product \(A_{i}\). Let \(WS\) denote the set of sentiment words extracted from online reviews on all alternative products. Then, \({\text{WS}}\) can be written as follows:

In addition, we deeply analyze the emotional information reflected in online comments and introduce the concepts of emotional intensity and emotional level. Assume that \({\text{WS}}_{j} = \left\{ {{\text{WS}}_{j}^{1} ,{\text{WS}}_{j}^{2} ,{\text{WS}}_{j}^{3} ,{\text{WS}}_{j}^{4} ,{\text{WS}}_{j}^{5} } \right\}\) stands for the reference word set of sentiment intensity level for \(j{\text{th}}\) class of feature words, and \({\text{WS}}_{j}^{ - } = \left\{ {{\text{WS}}_{j}^{1} ,{\text{WS}}_{j}^{2} } \right\},{\text{WS}}_{j}^{o} = \left\{ {{\text{WS}}_{j}^{3} } \right\},{\text{WS}}_{j}^{ + } = \left\{ {{\text{WS}}_{j}^{4} ,{\text{WS}}_{j}^{5} } \right\}\) the reference word set of negative, neutral, and positive sentiment intensity levels for the \(j{\text{th}}\) class of feature words, respectively.

Assume that \(\overline{{{\text{WS}}_{j} }} = \left\{ {\overline{{{\text{WS}}_{j} }}^{1} ,\overline{{{\text{WS}}_{j} }}^{2} ,\overline{{{\text{WS}}_{j} }}^{3} ,\overline{{{\text{WS}}_{j} }}^{4} ,\overline{{{\text{WS}}_{j} }}^{5} } \right\}\) stands for the dictionary of sentiment intensity levels for the \(j{\text{th}}\) class of feature words, and \(\overline{{{\text{WS}}_{j} }}^{ - } = \left\{ {\overline{{{\text{WS}}_{j} }}^{1} ,\overline{{{\text{WS}}_{j} }}^{2} } \right\},\overline{{{\text{WS}}_{j} }}^{\Delta } = \left\{ {\overline{{{\text{WS}}_{j} }}^{3} } \right\},\overline{{{\text{WS}}_{j} }}^{ + } = \left\{ {\overline{{{\text{WS}}_{j} }}^{4} ,\overline{{{\text{WS}}_{j} }}^{5} } \right\}\) the word set of negative, neutral, and positive sentiment intensity levels for the \(j{\text{th}}\) class of feature words, respectively. This paper provides indicators of sentiment intensity and intuitively expresses consumers' emotional information.

Firstly, we established a reference word set for emotional intensity. Then, we grouped synonyms and classified the sentiment words extracted from online comments based on the pre-established sentiment strength reference set. Finally, this article obtained a dictionary of sentiment intensity levels for the \(j{\text{th}}\) class of features. Through these steps, this paper has improved the quality of emotional intensity analysis.

Let \(\text{WS}_{j} = \left\{ {\text{WS}_{j}^{1} ,\text{WS}_{j}^{2} ,\text{WS}_{j}^{3} ,\text{WS}_{j}^{4} ,\text{WS}_{j}^{5} } \right\}\) stand for the set of pre-established, ordered reference words of sentiment intensity levels for \(\overline{{W_{j} }}\), and \(\text{WS}_{j}^{ - } = \left\{ {\text{WS}_{j}^{1} ,\text{WS}_{j}^{2} } \right\},\text{WS}_{j}^{o} { = }\left\{ {\text{WS}_{j}^{3} } \right\},\text{WS}_{j}^{ + } = \left\{ {\text{WS}_{j}^{4} ,\text{WS}_{j}^{5} } \right\}\) the reference word sets of negative, neutral and positive sentiment intensity levels for \(\overline{{W_{j} }}\), respectively. After using the synonym merging rule, \(\overline{{\text{WS}_{j} }}\), the dictionary of sentiment intensity levels for the \(j{\text{th}}\) class of feature words \(\overline{{W_{j} }}\) is obtained, where \(\overline{{\text{WS}_{j} }} = \left\{ {\overline{{\text{WS}_{j} }}^{1} ,\overline{{\text{WS}_{j} }}^{2} ,\overline{{\text{WS}_{j} }}^{3} ,\overline{{\text{WS}_{j} }}^{4} ,\overline{{\text{WS}_{j} }}^{5} } \right\}\). Let \(\overline{{\text{WS}_{j} }}^{ - } = \left\{ {\overline{{\text{WS}_{j} }}^{1} ,\overline{{\text{WS}_{j} }}^{2} } \right\},\overline{{\text{WS}_{j} }}^{\Delta } = \left\{ {\overline{{\text{WS}_{j} }}^{3} } \right\},\overline{{\text{WS}_{j} }}^{ + } = \left\{ {\overline{{\text{WS}_{j} }}^{4} ,\overline{{\text{WS}_{j} }}^{5} } \right\}\) represent the word sets of negative, neutral and positive sentiment intensity levels for the \(j{\text{th}}\) class of feature words, respectively.

In this paper, we divide the sentiment intensity into 5 levels. For example, if the sentiment intensity set is \(\left\{ { - 2, - 1,0,1,2} \right\}\), then "1" and "2" represents "good" and "very good" in the positive sentiment intensity scale, respectively, while "− 1" and "− 2" represents "poor" and "very poor" in the negative sentiment intensity scale, respectively, where "0" represents the neutral sentiment intensity scale. For convenience, we can use the set \(\left\{ {\alpha ,\beta ,\gamma ,\mu ,\eta } \right\}\) to represent the sentiment intensity set \(\left\{ { - 2, - 1,0,1,2} \right\}\). The sentiment intensity level from \(\alpha\) to \(\eta\) is –2–2.

Often, a product review may contain information about more than one feature word. So, it is necessary to identify reviews on different attributes of a product. Let \(\text{WD}_{ik}^{j}\) denote the review information about the attribute \(j\). as determined as follows. After using the synonym substitution rules, compare the feature words contained in \(\text{WD}_{ik}\) with the standard feature words in the set \(\overline{{W_{j} }}\). If \(\overline{{W_{j} }} \in \text{WD}_{ik}\), then extract all the adjectives, verbs, and adverbs between the two adjacent punctuation marks of the word. That produces \(\text{WD}_{ik}^{j}\), where \(\text{WD}_{ik}^{j} { = }\left\{ {\text{WD}_{ik}^{j1} ,\text{WD}_{ik}^{j2} \cdots \text{WD}_{ik}^{{jq_{j} }} } \right\}\) and \(q_{j}\) represents the total number of words in \(\text{WD}_{ik}^{j}\).

Considering that an online review may contain multi-sentiment intensity words for a certain feature, we design sentiment intensity recognition rules. The rules are given as follows: If a review for one product feature contains three kinds of sentiment word (neutral, positive, negative), then we assume the sentiment intensity level of the reviews is neutral. If a review for a product feature contains more than one positive word and does not contain a negative word, then we assume the most positive word indicates the sentiment intensity level of the feature. If a review for a product feature contains more than one negative word and does not contain a positive word, then the most negative word indicates the sentiment intensity level of the feature. And we use an indicator vector of sentiment intensity to indicate the sentiment intensity level.

Let \(s_{ik}^{j} = (\alpha_{ik}^{j} ,\beta_{ik}^{j} ,\gamma_{ik}^{j} ,\mu_{ik}^{j} ,\eta_{ik}^{j} )\) denote the indicator vector of sentiment intensity for the \(k{\text{th}}\) review of product \(A_{i}\) about attribute \(j\), where \(\alpha_{ik}^{j} ,\beta_{ik}^{j} ,\gamma_{ik}^{j} ,\mu_{ik}^{j}\), and \(\eta_{ik}^{j}\) are indicator variables for very poor, poor, neutral, good, and very good sentiment orientations, respectively, satisfying \(\alpha_{ik}^{j} ,\beta_{ik}^{j} ,\gamma_{ik}^{j} ,\mu_{ik}^{j} ,\eta_{ik}^{j} \in \left\{ {0,1} \right\}\),\(\alpha_{ik}^{j} + \beta_{ik}^{j} + \gamma_{ik}^{j} + \mu_{ik}^{j} + \eta_{ik}^{j} = \, 0{\text{ or }}1\), \(i = 1,2, \cdots ,n\), \(j = 1,2, \cdots ,s\), \(k = 1,2, \cdots ,b_{i} ,\) Here, \(s_{ik}^{j}\) is determined by going through the following algorithm:

Step 1 Determine whether the set \(\text{WD}_{ik}^{j}\) is empty. If \(\text{WD}_{ik}^{j} = \emptyset\), then \(s_{ik}^{j} = (0,0,0,0,0)\), otherwise skip to step 2.

Step 2 Judge whether the intersection of \(\text{WD}_{ik}^{j}\) and \(\overline{{\text{WS}_{j} }}^{ - }\) is an empty set. If \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{ - } = \emptyset\), then \(\alpha_{ik}^{j} = \beta_{ik}^{j} = 0\); if \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{ - } \ne \emptyset\) continue to judge whether or not \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{1} \ne \emptyset\). If true, then \(\alpha_{ik}^{j} = 1\); otherwise \(\alpha_{ik}^{j} = 0\). If \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{2} \ne \emptyset\), then \(\beta_{ik}^{j} = 1\); otherwise \(\beta_{ik}^{j} = 0\).

Step 3 Determine whether the intersection of \(\text{WD}_{ik}^{j}\) and \(\overline{{\text{WS}_{j} }}^{ + }\) is an empty set. If \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{ + } = \emptyset\), then \(\mu_{ik}^{j} = \eta_{ik}^{j} { = 0}\); if \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{ + } \ne \emptyset\), continue to check whether or not \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{4} \ne \emptyset\). If yes, then \(\mu_{ik}^{j} = 1\); otherwise \(\mu_{ik}^{j} { = 0}\). If \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{5} \ne \emptyset\), then \(\eta_{ik}^{j} { = }1\); otherwise \(\eta_{ik}^{j} = 0\).

Step 4 Determine whether the intersection of \(\text{WD}_{ik}^{j}\) and \(\overline{{\text{WS}_{j} }}^{\Delta }\) is an empty set. If \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{\Delta } = \emptyset\), then \(\gamma_{ik}^{j} = 0\); otherwise \(\gamma_{ik}^{j} = 1\).

Step 5 Determine whether \(\text{WD}_{ik}^{j}\) has a non-empty intersection with both \(\overline{{\text{WS}_{j} }}^{ + }\) and \(\overline{{\text{WS}_{j} }}^{ - }\). If \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{ + } \ne \emptyset\) and \(\text{WD}_{ik}^{j} \cap \overline{{\text{WS}_{j} }}^{ - } \ne \emptyset\), then \(s_{ik}^{j} = {(}0,0,0,0,0)\).

Step 6 If \(\alpha_{ik}^{j} { = }\beta_{ik}^{j} { = }1\), then \(\alpha_{ik}^{j} = 0,\beta_{ik}^{j} = 1\). If \(\mu_{ik}^{j} = \eta_{ik}^{j} = 1\), then \(\mu_{ik}^{j} = 0\), \(\eta_{ik}^{j} { = }1\).

Step 7 Output \(s_{ik}^{j} = (\alpha_{ik}^{j} ,\beta_{ik}^{j} ,\gamma_{ik}^{j} ,\mu_{ik}^{j} ,\eta_{ik}^{j} )\).

3.5 A Method for Product Ranking Based on Grey Incidence Analysis and TOPSIS

The product ranking problem is a typical multi-attribute decision-making problem. TOPSIS and grey relational analysis methods are beneficial for solving decision-making problems with uncertain information. We have developed a multi-attribute decision-making method by combining the advantages of these two methods. This method analyzes the emotional intensity level of the characteristics and attributes of alternative products. In this section, we will exploit grey incidence analysis and TOPSIS to construct a multi-attribute approach for product ranking based on the sentiment intensity distribution of online reviews.

For the product ranking based on online reviews, the paper calculates the sentiment intensity distribution of the alternative products over each attribute or feature based on the indicator vector of sentiment intensity. Assume that \(F_{i}^{j} = (\alpha_{i}^{j} ,\beta_{i}^{j} ,\gamma_{i}^{j} ,\mu_{i}^{j} ,\eta_{i}^{j} )\) denotes the sentiment intensity distribution of the product \(A_{i}\) for the attribute \(j\). Where \(\alpha_{i}^{j} ,\beta_{i}^{j} ,\gamma_{i}^{j} ,\mu_{i}^{j} {\text{ and }}\eta_{i}^{j}\) denotes the cumulative indicator variables for very poor, poor, neutral, good, and very good sentiment orientation, respectively. It is calculated as follows.

And then, let \(F_{i}\) stand for the sentiment intensity distribution of the alternative product \(A_{i}\) with respect to each attribute or feature over the products, and we can determine it. Where \(F_{i} = (F_{i}^{1} ,...,F_{i}^{j} ,...,F_{i}^{s} )\), \(i = 1,2, \cdots ,n, \quad j = 1,2, \cdots ,s\).

For consumers, there exist their most and least favorite products, which could be called the ideal and anti-ideal products and denoted as \(A^{ + } ,A^{ - }\), respectively. Assume that \(F^{j + } { = }(\alpha^{{j{ + }}} ,\beta^{{j{ + }}} ,\gamma^{{j{ + }}} ,\mu^{{j{ + }}} ,\eta^{{j{ + }}} ),F^{j - } { = }(\alpha^{j - } ,\beta^{j - } ,\gamma^{j - } ,\mu^{j - } ,\eta^{j - } )\) represent the ideal and anti-ideal sentiment intensity distributions over attribute \(j\), where \(\alpha^{{j{ + }}} ,\beta^{{j{ + }}} ,\gamma^{{j{ + }}} ,\mu^{{j{ + }}}\) and \(\eta^{{j{ + }}}\) denote the ideal cumulative indicator variables for very poor, poor, neutral, good, and very good sentiment orientations, respectively. And \(\alpha^{j - } ,\beta^{j - } ,\gamma^{j - } ,\mu^{j - }\) \(\eta^{j - }\) denote the anti-ideal cumulative indicator variables for very poor, poor, neutral, good, and very good sentiment orientations, respectively. For an ideal product, most people usually hold a good or very good sentiment orientation for it, so the values of \(\mu^{j + }\) and \(\eta^{j + }\) are the bigger the better. Besides, most people tend to hold less poor or very poor sentiment orientations for an ideal product. Therefore, the values of \(\alpha^{j + }\) and \(\beta^{j + }\) are the small the better. As for neutral sentiment orientation, it is difficult to determine whether the value of \(\gamma^{j + }\) is the largest or smallest among all \(\gamma_{i}^{j}\). Besides, in this part, we use the largest value of \(\gamma_{i}^{j}\) to represent the ideal product’s neutral sentiment orientation and the smallest value of \(\gamma_{i}^{j}\) to represent the anti-ideal product’s neutral sentiment orientation. Further analysis of the ideal value of neutral sentiment orientation is shown in Sect. 4.3.

According to the above analysis, the following hold trues:

Assume that \(F^{ + } ,F^{ - }\) stand for the ideal and anti-ideal sentiment intensity distributions over the alternative products, respectively, such that \(F^{ - } = (F^{1 + } ,...,F^{j + } ,...,F^{s + } )\), \(F^{ - } = (F^{1 - } ,...,F^{j - } ,...,F^{s - } )\). According to the above equations, we can determine these distributions.

Grey incidence analysis is an important part of the grey system theory [5]. In this article, we use the grey incidence analysis method to calculate the similarity between the product \(A_{i}\) and the ideal product. Next, we will briefly introduce it. Let \(X_{o} = (x_{o} (1),...x_{o} (c),\ldots,x_{o} (g))\) be the reference sequence and \(X_{f} = (x_{f} (1),...x_{f} (c)\ldots,x_{f} (g))\) be the behavior sequence. The grey incidence degree between them is calculated below.

\(r(x_{o} (c),x_{f} (c))\) is the grey incidence coefficient between \(x_{o} (c)\) and \(x_{f} (c)\),\(r(X_{o} ,X_{f} )\) is the grey incidence degree between \(X_{o}\) and \(X_{f}\),\(\xi\) is the discrimination factor, which is generally taken as \(0.5\).

Then, let \(r_{ij}^{\theta + }\) denote the grey incidence coefficient between the product \(A_{i}\) and the ideal product with respect to sentiment intensity level \(\theta\) over attribute (or feature) class \(j\). Then based on the idea and principle of grey incidence analysis, the following holds:

where

The symbol \(\xi\) stands for the discrimination factor, which is generally taken as \(0.5\), and for \(i = 1,2, \cdots ,n\),\(\theta \in \left\{ {\alpha ,\beta ,\gamma ,\mu ,\eta } \right\}\),\(m = \mathop {\min }\limits_{i} \mathop {\min }\limits_{\theta } \Delta_{i} (\theta )\),\(M = \mathop {\max }\limits_{i} \mathop {\max }\limits_{\theta } \Delta_{i} (\theta )\).

Let \(r_{ij}^{ + } ,r_{ij}^{ - }\) be the grey incidence degrees between the product \(A_{i}\) and the ideal and anti-ideal product concerning attribute \(j\), respectively. Then, according to the above calculations, it can be verified that the following hold trues:

From the perspective of consumers, they cannot obtain the subjective weight information of the underlying attributes. Therefore, it is necessary to determine the weight of each attribute in combination with the objective online review information and the product features. Let \(\omega_{j}\) denote the weight of the attribute \(j\). Considering that the alternative products are homogeneous, based on the principle of minimum deviation of grey incidence degrees, we can establish an optimization model to find all relevant weights as follows

By constructing a Lagrangian function, we can obtain the following equation.

According to the condition, for extreme points to existing, the following must be satisfied:

The solution is:

Assume that \(R_{i}^{ + } ,R_{i}^{ - }\) stand for the grey comprehensive incidence degrees between \(A_{i}\) and \(A^{ + }\),\(A^{ - }\), respectively. According to the weights obtained above, we can have

Let \(C_{i}\) represent the closeness degree of alternative product \(A_{i}\). According to the above grey comprehensive incidence degree \(C_{i}\) can be obtained by the following formula.

Obviously, the closer \(A_{i}\) is to the ideal product and farther from the anti-ideal product, the better \(A_{i}\) is. Namely, the greater \(C_{i}\) is, the better \(A_{i}\) is.

4 Case Study

Assume that a consumer wants to select and buy a brand-name laptop from the Jingdong shopping website (https://www.jd.com/), and he/she pays attention to laptops of four homogeneous brands of Dell, Honor, Xiaomi, and Lenovo. We use \(A_{1} ,A_{2} ,A_{3} ,A_{4}\) to respect Dell, Honor, Lenovo, and Xiaomi respectively. Let the collection of laptops be \(A = \left\{ {A_{1} ,A_{2} ,A_{3} A_{4} } \right\}\). On the Jingdong shopping website, some information about the four name laptops is shown in Fig. 2. According to this figure and online reviews over these brand-named laptops, the homogeneous laptops are very similar in terms of price and product attributes, and most consumers' reviews on the four laptops are relatively similar, and the overall product ratings are basically the same. Therefore, it is difficult for the consumer to choose from these four brand-named choices. To deal with this difficulty, we will exploit the proposed approach in this paper to rank the laptops based on online reviews.

The information of the four alternative laptops. On the Jingdong shopping website (https://www.jd.com/), some laptops' information about four homogeneous brands is shown in Fig. 2

4.1 Data Collection

According to the determined objective and data source of online reviews, we use Octopus collector (bazhuayu.com) to gather information from the online reviews of the alternative laptops from the Jingdong shopping website (https://www.jd.com/), which can ensure that these reviews contained as many attributes related to the laptops as possible. Some information about the online reviews is shown in Table 2.

Because Chinese uses characters as the basic unit of writing and cannot automatically identify and segment words. At the same time, even segmented Chinese text contains many special symbols and stop words, we preprocess the data. Let \(R_{i} = \left\{ {R_{i1} ,R_{i2} ,...R_{{ib_{i} }} } \right\}\) denote the set of laptop reviews and \(\text{WD}_{ik} { = }\left\{ {\text{WD}_{ik}^{1} ,\text{WD}_{ik}^{2} ,...\text{WD}_{ik}^{{q_{ik} }} } \right\}\) the set of review words for each review, where \(i{ = }1,2,3,4\) \(k = 1,2, \cdots ,b_{i}\) \(b_{1} = b_{2} = b_{3} = b_{4} = 1000\).

Then, we can use the TD-IDF algorithm to extract product feature words, calculate the word frequency-inverse document frequency of words, and sort in order from the largest to smallest to obtain feature words related to the product’s features. By utilizing product description information and HIT synonym word forest, we can categorize the product feature words, from which we can obtain classes of attributes or features. In particular, the classes are Performance, Cooling, Price, Screen, Battery endurance, and Appearance, respectively. Some processing results are shown in Table 3.

According to the extracted feature words over the attributes of the alternative laptops, we can construct a collection of sentiment words for the feature domain of the alternative laptops. So, we establish a positive sentiment dictionary \({\text{OCR}}_{j}^{ + }\), negative sentiment dictionary \({\text{OCR}}_{j}^{ - }\), sentiment enhancement level word set \({\text{HOWNet}}_{{{\text{adv}}}}^{ + }\), sentiment weakening level word set \({\text{HOWNet}}_{{{\text{adv}}}}^{ - }\), and negative word set \({\text{OCR}}_{{{\text{neg}}}}\) for the candidate product attribute domain. Some display words are shown in Table 4.

By using our proposed algorithm for recognizing sentiment intensity in this paper, we can determine \(s_{ik}^{j} = (\alpha_{ik}^{j} ,\beta_{ik}^{j} ,\gamma_{ik}^{j} ,\mu_{ik}^{j} ,\eta_{ik}^{j} )\), \(i = 1,2,3,4\), \(k = 1,2,...,q_{i}\), \(j = 1,2,3,4,5,6\) and obtain the sentiment intensity distribution of alternative the laptop \(A_{i}\) over attribute \(j\). For convenience, we only present partial sentiment intensity indicator vectors in this paper. The indicator vectors of partial sentiment intensity are shown in Table 5.

And then, according to Eqs. (5)–(9), we can acquire the sentiment intensity distribution of the product \(A_{i}\) over each attribute. The sentiment intensity distributions are shown in Table 4. We can note that there are some zero in Table 6. For example, the sentiment intensity value of \(\alpha\) respect to \(A_{1} ^{\prime}s\) attribute ‘performance’ is zero which means that no reviews contain the opinion that the performance of product A is very poor. That is, the performance evaluation of the product \(A_{1}\) by all buyers is positive.

4.2 Ranking Alternative Laptops

According to Eqs. (10)–(19), we can determine the sentiment intensity distributions of the ideal laptop \(A^{ + }\) and the anti-ideal laptop \(A^{ - }\). By using Eqs. (22)–(29), we can obtain the grey incidence degrees between each alternative laptop and the ideal laptop (and the anti-ideal laptop). The sentiment intensity distributions of the ideal laptop \(A^{ + }\) and the anti-ideal laptop \(A^{ - }\) and the grey incidence degrees between each alternative laptop and the ideal laptop (and the anti-ideal laptop) are shown in Tables 7 and 8, respectively.

According to Eqs. (30)–(33), we can determine the weights of the attributes and obtain \(\omega = (0.1631,0.15163,0.16599,0.14485,0.20965,0.16477)\). According to Eqs. (34) and (35), we can compute the comprehensive grey incidence degrees between the alternative product \(A_{i}\) and the ideal laptop, and the anti-ideal laptop as follows:\(R_{1}^{ + } = 0.7417,R_{1}^{ - } { = }0.66843\), \(R_{2}^{ + } { = } 0.669264,R_{2}^{ - } { = }0.823302\), \(R_{3}^{ + } { = } 0.746219,R_{3}^{ - } { = }0.707611\), \(R_{4}^{ + } { = } 0.806888,R_{4}^{ - } { = }0.674648\).

Then, according to Eqs. (36), we can calculate the relative closeness degrees of all the individual alternative laptops and obtain the following: \(C_{1} = 0.47852,C_{2} = 0.55294,C_{3} = 0.48468,C_{4} = 0.45262\). Therefore, according to these closeness degrees, we can produce the following ranking of the available laptops:\({\text{Honor}} \succ {\text{Xiaomi}} \succ {\text{Dell}} \succ {\text{Lenovo}}\).

According to the above discussion and analysis, our proposed approach can provide consumers with a decision support in order to understand the competitive situation of similar products in the market and identify directions for product improvement.

4.3 Scenarios Under Different Sentiment Intensity Distributions

In our proposed process of product ranking, the structure of the sentiment intensity distribution influences the decision results. Generally speaking, for an ideal product, there exist many more positive and neutral reviews along with a few negative ones. For an anti-ideal product, there are many more bad reviews along with a few positive and neutral ones. However, there is another possible case, where the ideal product, the sentiment intensity distribution takes on many more positive reviews and a few negative and neutral ones. And for the anti-ideal product, the sentiment intensity distribution takes on many more negative and neutral reviews along with few positive ones. To analyze the impact of a sentiment intensity distribution on ranking results, we discuss the effects of the ideal and anti-ideal sentiment distributions in this section.

Let \({\text{FF}}^{ + } = ({\text{FF}}^{1 + } ,...,{\text{FF}}^{j + } ,...,{\text{FF}}^{s + } )\) denote another sentiment intensity distribution of the ideal product, where \(i = 1,2,3,4\),\(j = 1,2,3,4,5,6,7\),\(b_{1} = b_{2} = b_{3} = b_{4} = 1000\), where

Let \({\text{FF}}^{ - } = ({\text{FF}}^{{{1} - }} ,...,{\text{FF}}^{j - } ,...,{\text{FF}}^{{{\text{s}} - }} )\) denote another sentiment intensity distribution of the anti-ideal product, where

The ranking results under different sentiment intensity distributions are shown in Table 9.

According to Table 5, the final ranking is influenced by the maximum or minimum values of the neutral sentiment intensity under the positive and negative ideal sentiment distributions. When the ideal sentiment distribution is \(F^{ + }\) and the anti-ideal sentiment distribution is \({\text{FF}}^{ - }\), the ranking result will change. \({\text{Xiaomi}}\) will become better than \({\text{Dell}}\). Therefore, the ideal value of the neutral sentiment intensity has an impact on product ranking. Based on these results, we can confirm the well-known belief that the neutral sentiment words do not necessarily indicate general attitude of consumers over the products of concern. Similarly, positive sentiment words do not necessarily mean the attitude of consumer satisfaction over the products. However, consumer ratings can reflect the overall attitude of consumers, and hence, the ideal value of the neutral sentiment intensity should be determined by combining the overall consumer ratings. When the overall consumer rating is high, the neutral sentiment of an ideal sentiment intensity distribution should take the smallest value, and the neutral sentiment of an anti-ideal sentiment intensity distribution should take the largest value; and vice versa. According to the above discussion and analysis, our proposed approach is more flexible in determining the ideal point; and it can deal with a variety of problems each of which involves multi scenarios.

4.4 Results Comparison Based on Different Methods

In previous studies, several methods for ranking products through online reviews have been proposed. However, in most of them, the product attributes and attribute weights are given in advance or determined by experts. To avoid attribute weights affecting the final ranking results, we assume that the weights of all attributes are the same, that is, \( {\omega _1} = {\omega _2} = {\omega _3} = {\omega _4} = {\omega _5} = {\omega _6} = = \frac{1}{6} \)\(\omega_{1} = \omega_{2} = \omega_{3} = \omega_{4} = \omega_{5} = \omega_{6} = = \frac{1}{6}\). Then, the method in our paper are compared with the methods proposed by Bi et al. [1], which are donated as the literature 1, 12 and 22, respectively. The ranking results under different methods are shown in Table 10.

As shown in Table 10, when traditional methods assume equal weights for all attributes—representing the most generalized case—our method produces similar or identical ranking results compared to the methods it is benchmarked against. However, other methods typically require manually assigned or predefined attribute weights during practical application. If these weight settings are inaccurate, they may lead to differing results. While these methods can generate comparable rankings, there are notable variations among them. In contrast, the attribute weights in our method are derived from an objective analysis of online review data, whereas the other methods rely on weights predetermined by buyers, which reflect individual consumer preferences. The distinct advantages of our method are twofold:

-

1.

Broader applicability: In most cases, it is difficult to accurately determine buyers' preference information, making it challenging to design precise attribute weights. Our method, free from such requirements, can be applied across a wide range of scenarios.

-

2.

Greater objectivity: The analysis of sentiment intensity and the final product ranking in our approach are based on a large volume of review data, without subjective input. This ensures a higher level of objectivity, providing a more reliable decision-making reference for both consumers and manufacturers.

The product ranking method proposed in this paper has significant practical implications for e-commerce platforms. By integrating advanced text analytics technology, the platform is able to more accurately capture and parse consumers' true emotions and preferences, enabling personalized product recommendations and dynamic ranking presentations. This approach enhances customer satisfaction, increases purchase conversion rates, optimizes the user experience, and increases user engagement. However, implementation has also faced challenges, including ensuring privacy compliance in data processing, technical challenges in processing large data sets, and adapting to rapidly changing consumer behaviors and market trends. In addition, platforms need to balance algorithm updates with user acceptance, ensure the privacy of consumer data, put in place appropriate data security measures to prevent information leakage, and be alert to possible bias and discrimination during the analysis process, without alienating existing users. Despite these challenges, the study's methodology provides a powerful tool for e-commerce platforms, and researchers and platform developers should establish strict ethical guidelines to ensure fairness and transparency in the application of technology, ranking products in a more refined and transparent way, and helping platforms gain an edge in the fierce market competition.

5 Conclusions

To deal with the problem of product ranking based on online reviews, this paper constructs a decision approach by using the sentiment intensity distribution of these reviews. According to the analysis of the approach and the case study, what is proposed here can avoid the loss of sentiment information that often occurs during the conversion process of data and accurately extract consumers’ emotional orientation and product features based on the sentiment intensity distribution of online reviews. Therefore, the proposed methodology presents an effective approach for extracting authentic consumer sentiment from online reviews, thereby providing a more scientifically robust foundation for product ranking. In contrast to alternative methods, it does not depend on prior knowledge of consumer preferences or predefined attribute weights, which enhances its practicality and objectivity. Furthermore, its broad applicability enables utilization across a diverse array of product categories without being restricted to specific domains, thus expanding its potential application in various contexts. A further significant advantage lies in its capacity to perform multi-granularity sentiment intensity analysis, facilitating rapid and precise evaluations and recommendations regarding product sentiment. This capability aids both consumers and online platforms in making more efficient and informed decisions, ultimately improving the decision-making process for all stakeholders involved.

Although this study proposes a novel product ranking method based on the sentiment-intensity distribution of online reviews and verifies its effectiveness through case studies, it also encounters some challenges and potential limitations during the research process. First, the methodology of this study does not take into account the individual risk appetite of consumers, which may affect the interpretation of the sentiment-intensity distribution and the final product ranking results. Second, the study mainly focused on the distribution of sentiment-intensity, but did not delve into the impact of consumer preferences on the distribution of sentiment-intensity and other potential information in the reviews, such as the specific needs and expectations of consumers. In order to overcome these limitations, we can proceed from the following aspects in future research. First of all, further consider the individual risk preference and personalized needs of consumers, and improve the personalization and accuracy of product ranking by integrating consumer behavior analysis and personalized recommendation algorithms. Secondly, in addition to sentiment-intensity, we can also consider building a comprehensive evaluation system including price, quality, service and other dimensions to evaluate the market performance of the product more comprehensively. Finally, considering the dynamic changes in the market and consumer preferences, a dynamic ranking model that can adapt to these changes is developed to achieve real-time or near-real-time product ranking updates.

Data availability

The data used to support the findings of this study will be considered by the corresponding author.

References

Bi, J.W., Liu, Y., Fan, Z.P.: Sorting method based on online reviews. J. Syst. Eng. 33(03), 422–432 (2018)

Chen, Y., Mi, C., Shan, X., et al.: A new method for evaluating tour online review based on grey 2-tuple linguistic. Kybernetes 43, 601–602 (2014)

Chen, Y., Xie, J.: Online consumer review: Word-of-mouth as a new element of marketing communication mix. Manag. Sci. 54(3), 477–491 (2008)

Dash, A., Zhang, D.S., Zhou, L.N.: Personalized ranking of online reviews based on consumer preferences in product features. Int. J. Electron. Commerce 25(1), 29–50 (2021)

Deng, J.L.: Introduction to grey system. J. Grey Syst. (UK). 1(1), 1–24 (1989)

Derakhshan, M., Golrezaei, N., Manshadi, V., et al.: Product ranking on online platforms. Manag. Sci. 68(6), 4024 (2022)

Elwalda, A., Lu, K.: The impact of online customer reviews (OCRs) on customers’ purchase decisions: an exploration of the main dimensions of OCRs. J. Custom. Behav. 15(2), 123–152 (2016)

Ji, X., Gao, Q., Li, X.F., et al.: Comment mining and demand acquisition methods considering the hierarchy of product attributes. Comput. Integr. Manuf. Syst. 26(03), 747–759 (2019)

Kang, D., Park, Y.: Review-based measurement of customer satisfaction in mobile service: sentiment analysis and VIKOR approach. Expert Syst. Appl. 41(4), 1041–1050 (2014)

Kostyra, D.S., Reiner, J., Natter, M., et al.: Decomposing the effects of online customer reviews on brand, price, and product attributes. Int. J. Res. Mark. 33(1), 11–26 (2016)

Li, J., Huang, W., Guo, S.L., et al.: Research on the emotion intensity measurement model of internet WOM public opinion–based on the PAD three-dimensional emotion Model. J. Inf. 38(03), 277–285 (2019)

Li, Y., Zhang, Y., Xu, Z.: A decision-making model under probabilistic linguistic circumstances with unknown criteria weights for online customer reviews. Int. J. Fuzzy Syst. 22(3), 777–789 (2020)

Liu, T., Zhang, C., Wu, M.Y.: Product feature extraction algorithm based on boundary average information entropy in online reviews. Syst. Eng. Theory Pract. 36(09), 2416–2423 (2016)

Liu, Y., Bi, J.W., Fan, Z.P.: A method for ranking products through online reviews based on sentiment classification and interval-valued intuitionistic fuzzy TOPSIS. Int. J. Inf. Technol. Decis. Mak. 16(06), 1497–1522 (2017)

Liu, Y., Bi, J.W., Fan, Z.P.: Ranking products through online reviews: a method based on sentiment analysis technique and intuitionistic fuzzy set theory. Inf. Fus. 36, 149–161 (2017)

Mi, C., Shan, X., Qiang, Y., et al.: A new method for evaluating tour online review based on grey 2-tuple linguistic. Kybernetes 43(3–4), 601–613 (2014)

Novas, D., Papakyriakopoulos, D., Kartaloglou, E.P.: A ranking model based on user generated content and fuzzy logic. Int. J. Hosp. Manag. 114, 103561 (2023)

Peng, Y., Kou, G., Li, J.: A fuzzy PROMETHEE approach for mining customer reviews in Chinese. Arab. J. Sci. Eng. 39(6), 5245–5252 (2014)

Shan, X.H., Wang, C.W., Liu, X.Y., et al.: Hybrid recommendation algorithm based on online reviews. System Engineering 37(06), 130–138 (2019)

Susan, M.M., David, S.: What makes a helpful online review? A study of customer reviews on amazon.com. MIS Q. 34(1), 185–200 (2010)

Thakur, R.: Customer engagement and online reviews. J. Retail. Consum. Serv. 41, 48–59 (2018)

Wang, C., Chen, G., Wei, Q.: A temporal consistency method for online review ranking. Knowl. Based Syst. 143, 259–270 (2018)

Wu, J., Hong, Q., Cao, M., Liu, Y., Fujita, H.: A group consensus-based travel destination evaluation method with online reviews. Appl. Intell. 52(2), 1306–1324 (2022)

Wu, X.L., Liao, H.C.: Managing uncertain preferences of consumers in product ranking by probabilistic linguistic preference relations. Knowl. Based Syst. 262, 110240 (2023)

Xi, Y., Fan, Z.P.: Attribute weight determination and scheme ranking method using online evaluation information. Control Decis. 31(11), 1998–2004 (2016)

You, T.H., Zhang, J., Fan, Z.P.: Commodity selection method based on online evaluation information and consumer expectations. China Manag. Sci. 25(11), 94–102 (2017)

Zhang, J., You, T.H.: Multi-attribute online review product selection method considering consumer expectations. J. Eng. Manag. 34(05), 24–31 (2020)

Zheng, L.J., Wang, H.W., Zheng, K.Q.: Research on the emotion strength of internet comments based on fuzzy statistics of emotion words. J. Syst. Manag. 23(03), 324–330 (2014)

Funding

This work is partially funded by the National Natural Science Foundation of China (71503103; 72372059); National Social Science Foundation of China (19FGLB031; 22AJL002); National Statistical Science Research Program of China (2024LZ015); Educational Planning Project of Jiangsu Province (ZYJN/2024/01); Outstanding Youth in Social Sciences of Jiangsu Province; Qinglan Project of Jiangsu Province, and Engineering Research Center of Integration and Application of Digital Learning Technology, Ministry of Education(1321005) and Special project of Jiangsu province Social Science support for Qinghai and Social Science Fund of Qinghai Province(23YQA-003), and the Fundamental Research Funds for the Central Universities (JUSRP622047;JUSRP321016) and Soft Science Foundation of Wuxi city (KX-24-A15). Even so, this work does not involve any conflict of interest.

Author information

Authors and Affiliations

Contributions

Sheng-qiang Gu, Shi-tong Liu and Yong Liu wrote the main manuscript text and Jia-ming Ding made analysis of case. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

We declare that we have no financial and personal relationships with other people or organizations that can inappropriately influence our work, and there is no professional or other personal interest of any nature or kind in any product, service, or company that could be construed as influencing the position presented in, or the review of, the manuscript entitled.

Ethical approval

In this paper, there are no ethical issues involved and not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Gu, Sq., Liu, St., Liu, Y. et al. A Novel Product Ranking Approach Considering Sentiment Intensity Distribution of Online Reviews. Int J Comput Intell Syst 17, 277 (2024). https://doi.org/10.1007/s44196-024-00688-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44196-024-00688-1