Abstract

Social relationships, such as parent-offspring and friends, are crucial and stable connections between individuals, especially at the person level, and are essential for accurately describing the semantics of videos. In this paper, we formulate this task by analogy with scene graph generation and call it video social relationship graph generation (VSRGG). It involves generating a social relationship graph for each video based on person-level relationships. We propose a context-aware graph neural network (CAGNet) for VSRGG, which effectively generates social relationship graphs through message passing, capturing the context of the video. Specifically, CAGNet detects persons in the video, generates an initial graph via relationship proposal, and extracts facial and body features to describe the detected individuals, as well as temporal features to describe their interactions. Then, CAGNet predicts pairwise relationships between individuals using graph message passing. Additionally, we construct a new dataset, VidSoR, to evaluate VSRGG, which contains 72 h of video with 6276 person instances and 5313 relationship instances of eight relationship types. Extensive experiments show that CAGNet, using only visual features, makes accurate predictions with a comparatively high mean recall (mRecall).


1 Introduction

Recent advances in research on the synergy between vision and language have advanced the understanding of video content, and the analysis of relationships between two persons or objects has received much attention [1–3]. Existing research has mostly focused on detecting visual relationships between objects [1, 2], i.e., the interactions and spatial relationships visible in one or more video frames, while less attention has been paid to more stable and essential relationships, such as social relationships [3–5]. As an indispensable part of people’s daily lives, a social relationship is the association between different people, such as colleagues, couples and friends [6].

Social relationship analysis lays the groundwork for high-level visual reasoning tasks. It enables more descriptive visual captions [7, 8], such as “the father gives an apple to his son” instead of “a man gives an apple to a child”. It also improves the accuracy of visual question answering [9, 10]: for instance, a model can answer “his father” to the question “who is giving the child an apple?” instead of just “a man”. In addition, social relationship analysis enables the extraction of individuals’ attributes in social media networks and facilitates personalized recommendations and services [11].

Traditional research on social relationship analysis focuses primarily on recognizing social relationships in images [12–15]. However, image-based methods are not suitable for video analysis because they cannot aggregate temporal information or recognize related pairs of people who do not appear in the same frame. Previous video-based methods only assign one or more social relationships to a whole video [3, 4], without person-level social relationship analysis. This limits the understanding of video content: especially when a video contains multiple people, these methods cannot determine which pair of people a relationship refers to.

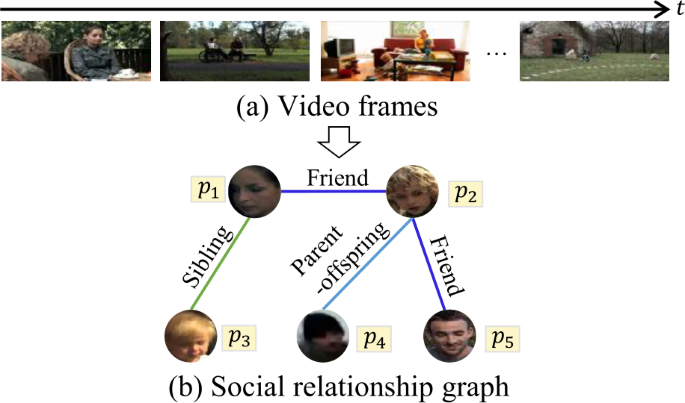

Person-level social understanding [16–18] requires predicting the social relationship between two persons. We draw an analogy between this task and scene graph generation [19–21], where the goal is to construct a graph of social relationships between the individuals in a video. We refer to this task as video social relationship graph generation (VSRGG). Given a video V (Fig. 1 (a)), VSRGG requires detecting the set of people \(P=\{p_{k}\}\) appearing in V and determining, for each pair of people \(\langle p_{i},p_{j}\rangle\), either their social relationship or that no social relationship exists between them. VSRGG presents the social relationship analysis result of a video in the form of a graph, named the social relationship graph (SRG), where each vertex denotes a detected person in P and the edge between two vertices denotes the social relationship of the corresponding pair of people (Fig. 1 (b)).

An example of the generation of a social relationship graph on a given video, where p represents person and t represents time (sourced from the DVU challenge [22])

Compared with existing tasks for analyzing social relationships in images and videos, VSRGG faces several technical challenges. Videos contain more pairs of people with social relationships than images do, and in a video two individuals can have a social relationship without ever appearing in the same frame. This increases the computational complexity and cost of VSRGG compared with image-based social relationship tasks. Furthermore, detecting social relationships at the person-pair level is more challenging than detecting them at the video level. Because the performance of VSRGG methods relies heavily on the accuracy of person detection, we introduce another task called weak video social relationship graph generation (VSRGG∗). VSRGG∗ takes a video, along with a collection of character faces and bodies, as input to generate the SRG. In other words, VSRGG∗ uses annotated faces and bodies instead of detected ones. Compared with VSRGG, VSRGG∗ focuses specifically on a method's classification ability.

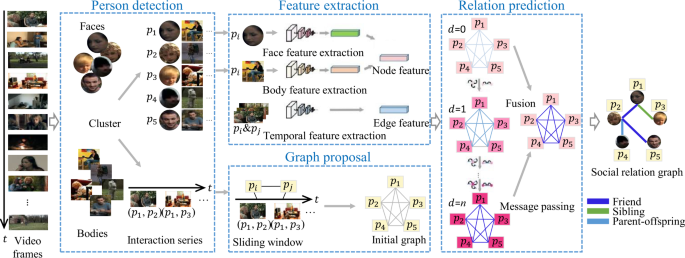

Most methods for VSRGG use multimodal features extracted from video, audio, and transcripts [5, 16–18, 23–25]. However, visual features are not fully exploited, especially in the fusion of character features and temporal context features. The methods in Refs. [5, 16, 18, 23, 25] encode visual features sequentially, while the methods in Refs. [17, 24] encode graphs to update visual features. Sequential encoding methods often combine temporal information with character features by simple concatenation. Wu et al. [24] proposed combining a frame-level graph convolutional network (GCN) and a clip-level GCN to generate the final social graph. The frame-level GCN updates character representations and character-pair representations with multi-modal and multi-view information on each frame. Long short-term memory (LSTM) is used to temporally accumulate these representations and generate the input nodes of the clip-level graph. Hu et al. [17] used an overall-level GCN and a distinctive-level GCN. The overall-level GCN, with intra-edges and inter-edges, propagates the representation of each character, while the distinctive-level GCN focuses on the interaction between two characters. LSTM is also used to encode visual features temporally. Both methods encode temporal context with LSTM, which is still a sequential encoder with limited ability to encode the whole time span at once. In this paper, we introduce a novel VSRGG method, named the context-aware graph neural network (CAGNet), which focuses on encoding visual information for social relationship analysis in video. Figure 2 shows an overview of CAGNet. CAGNet exploits the message passing mechanism to capture visual context while taking temporal information into account. Because social relationships are sparse in video, we propose a graph proposal module to construct a sparse SRG, in which each edge represents a potential social relationship between two individuals.
Then, facial, body and interaction features are extracted. Next, we propose a cross-vertex message passing mechanism that incorporates temporal context information into each edge representation, which encodes visual features from a global view. During prediction, we employ both the temporal context edge representation and the discriminative vertex representation to identify the social relationship of each edge and remove edges without social relationships.

An overview of the proposed context-aware graph neural network (CAGNet) method (sourced from the DVU challenge [22]). d denotes the d-th iteration in message passing and n represents the number of iterations

Early video social relationship analysis datasets [3, 4] are annotated with video-level labels. Currently, MovieGraphs [26] is the most frequently used dataset for VSRGG; it was collected from 51 movies and focuses on human-centered situations such as people’s interactions, relationships, emotions, and motivations. BiliBili [16] videos have been collected for VSRGG, but the dataset has not yet been published. Hence, we construct another video social relationship dataset, named VidSoR, for VSRGG. The dataset consists of 1798 video clips with 8 relationship types. In comparison with MovieGraphs, VidSoR has more valid video clips, a longer average clip duration, and more relationship instances per clip on average. Thus, our dataset poses significant challenges for social relationship detection. We evaluate the proposed CAGNet method on this dataset, and the results demonstrate that CAGNet outperforms state-of-the-art baselines.

In summary, the contributions of this study are as follows.

1) We create VidSoR, a more challenging dataset for evaluating VSRGG. This dataset was collected from a larger number of data sources and consists of more valid clips with a greater number of relationship instances and a longer average duration.

2) We propose a novel method called CAGNet for VSRGG. This method incorporates a temporal message passing mechanism to effectively utilize visual information from a global view. In addition, it outperforms methods that rely on multi-modal features.

2 Related work

2.1 Social relationship recognition

Social relationship recognition in images has received significant attention as an important component of social network analysis. Initially, researchers focused primarily on kinship recognition or verification [27–31]. Inspired by Ref. [32], Zhang et al. [6] used relationship traits to represent diverse relationships and employed facial expressions to explore relationship traits between individuals. Based on domain theory [33], Sun et al. [12] introduced a dataset named “people in photo album”, which includes pair-wise relationships such as father-son, friends, and colleagues, and used a double-stream CaffeNet to perform classification. Li et al. [13] defined social relationships according to prototype theory [34] and proposed a dataset named “people in social context”. They introduced a dual-glance net that captures individual visual information and extracts information from background objects. However, since static images cannot capture the temporal dynamics between characters, this task may differ considerably from ours. Wang et al. [14] linked background objects with relationships between people and constructed a knowledge graph to assist social relationship classification and reasoning. Zhang et al. [35] extracted global knowledge and intermediate-level information from the relative positions of the image scene and objects to infer social relationships between people, and used fine-grained information on human body key points to establish a person-pose graph. Goel et al. [15] presented the first social relationship graph generation task. Unlike our social relationship graph, theirs uses age and gender rather than faces to distinguish person instances. They employed a message passing mechanism to merge vertex features with edge features and used the merged feature for pair-wise relationship prediction.
In contrast, we pass messages bidirectionally between vertices and edges, which enables each edge representation to capture the whole context of the social relationship graph.

2.2 Video social relationship analysis

In recent years, researchers have begun to pay attention to video social relationship recognition. Lv et al. [3] defined video social relationship recognition as a multi-label video classification task and proposed the first video social relationship recognition dataset, social relationship in videos (SRIV), which contains approximately 3000 video clips with multi-label annotations. They designed a multi-stream model to extract features from RGB images, audio and optical flow, and used a late fusion strategy to merge these features for final classification. Liu et al. [4] introduced the first single-label video social relationship dataset, the video social relationship (ViSR) dataset. They constructed three types of graphs to capture the behavior of each person, the interactions between different people, and person-object relationships, respectively. Moreover, they designed a pyramid graph convolutional network to extract features from the three graphs, which were subsequently merged with the global feature for final classification. MovieGraphs [26] and HLVU [36] were constructed for person-level social relationship analysis in videos. Kukleva et al. [5] presented a joint framework to predict both interactions and relationships between characters using visual and textual features. Cao et al. [23] proposed fusing spatio-temporal and multi-modal semantic knowledge in videos. Xu et al. [16] designed a multi-stream architecture for jointly embedding visual and textual information after character pair searching. Wu et al. [24] integrated multi-modal cues in a hierarchical-cumulative GCN structure to generate social graphs for characters. Teng et al. [25] proposed a self-supervised multi-modal feature learning framework based on the Transformer model. Hu et al. [17] introduced a hierarchical-cumulative graph convolutional network that integrates short-term multi-modal cues to generate frame-level graphs and aggregates all frame-level subgraphs along the temporal trajectory to construct a global video-level social graph with various social relationships among multiple characters. Hu et al. [18] integrated automatic speech recognition, natural language understanding, face recognition and face clustering to extract multi-modal video relationships.

2.3 Scene graph generation

Another related topic is scene graph generation, which is widely studied in computer vision to describe the spatial and structural relationships between objects. The idea of using graph-based context to improve scene understanding has been explored by many studies in recent decades [37, 38]. For example, Johnson et al. [39] were the first to introduce the problem of modeling objects and their relationships using scene graphs, aiming to simultaneously detect objects and their pairwise relationships. Zellers et al. [40] proposed capturing repeated higher-order structures in scene graphs for better performance. Similarly, Yang et al. [41] developed an attention-aware GCN framework that updates node and relationship representations by propagating context between nodes in candidate scene graphs, and Xu et al. [42] used RNNs to iteratively and jointly refine object and relationship features to construct scene graphs. Wu et al. [24] introduced a GCN to generate the social relationship graph for multiple characters in videos.

Inspired by the structural representation of scene graphs, we approach the video social relationship recognition task by generating a social graph.

3 Method

To investigate visual information for analyzing social relationships in videos, we propose a context-aware graph neural network composed of four modules: a person detection module, a graph proposal module, a feature extraction module and a relationship prediction module. The person detection module identifies faces and bodies in a given video and groups them together as characters. The graph proposal module builds a character graph that includes features of characters and their interactions. The feature extraction module produces features for faces, bodies and frames that capture person interactions. The relationship prediction module utilizes message passing to combine entity features and context features, thereby enhancing the representation capability of graph features for predicting social relationships.

3.1 Person detection

To detect relationships between people, we first need to find the main characters in the videos. Inspired by Ref. [12], we detect both faces and bodies for further feature extraction. One keyframe per second is selected for each clip, and faces in keyframes are detected using RetinaFace [43]. The faces are clustered via consensus-driven propagation [44], and each cluster is treated as a detected person. To filter out unimportant people, only clusters containing a minimum number of faces (at least six in our experiments) are retained. Moreover, similar to Ref. [13], bodies appearing in keyframes are detected by Faster-RCNN [45] pre-trained on the MSCOCO dataset [46]. Although many effective pedestrian detection models have been proposed, their pre-trained versions perform worse on our data than Faster-RCNN pre-trained on MSCOCO. For example, the state-of-the-art Cascade Mask-RCNN [47] pre-trained on the CrowdHuman [48] dataset achieves a coverage rate of only 58.01% of the pre-annotated faces, while Faster-RCNN pre-trained on MSCOCO achieves 99.24%. A possible explanation is that pedestrian detection models are usually pre-trained on datasets of small, entire bodies, whereas people in the VidSoR dataset are mostly truncated and prominent in the frame, which is closer to the images in MSCOCO. Since the pre-trained Faster-RCNN performs sufficiently well, we do not re-train pedestrian detection models but use Faster-RCNN for body detection.

Detected bodies are filtered by cross-validating them against detected faces. If the bounding box of a detected body covers more than 95% of a detected face, the body is retained and assigned to that face. If none of a person's faces has an assigned body, that person is omitted. If more than one body is assigned to the same face, we randomly select one of them. Based on face clustering and face-body cross validation, the person set in a video is extracted as \(P = \{p_{i}|i=1, 2, 3, \ldots \}\). Each person is represented by a series of faces and bodies, and each person \(p_{i}\) is treated as a vertex in the SRG. Moreover, the interaction series \(C = \{c_{t}|t=1,2,3, \ldots \}\) is constructed, where each \(c_{t}\) represents the set of people that appear at time t.
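The filtering rules above can be sketched in plain Python. This is a minimal illustration rather than the authors' code: it assumes boxes are (x1, y1, x2, y2) tuples and that each person cluster is a list of (keyframe index, face box, assigned body box or None) records; all function names are hypothetical.

```python
import random
from collections import defaultdict


def face_coverage(body, face):
    """Fraction of the face box area that lies inside the body box."""
    ix1, iy1 = max(body[0], face[0]), max(body[1], face[1])
    ix2, iy2 = min(body[2], face[2]), min(body[3], face[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    face_area = (face[2] - face[0]) * (face[3] - face[1])
    return inter / face_area if face_area > 0 else 0.0


def assign_bodies(faces, bodies, threshold=0.95, rng=None):
    """Map each face index in one keyframe to a body covering > threshold of it.

    If several bodies qualify, one is chosen at random; if none does, None.
    """
    rng = rng or random.Random(0)
    assignment = {}
    for idx, face in enumerate(faces):
        candidates = [b for b in bodies if face_coverage(b, face) > threshold]
        assignment[idx] = rng.choice(candidates) if candidates else None
    return assignment


def filter_persons(clusters, min_faces=6):
    """Keep clusters with at least `min_faces` faces and at least one assigned body.

    clusters: person id -> list of (keyframe, face_box, body_box_or_None).
    """
    return {
        pid: records
        for pid, records in clusters.items()
        if len(records) >= min_faces and any(body is not None for _, _, body in records)
    }


def interaction_series(persons):
    """Build c_t: keyframe index -> set of person ids visible in that keyframe."""
    series = defaultdict(set)
    for pid, records in persons.items():
        for t, _, _ in records:
            series[t].add(pid)
    return dict(series)
```

The random tie-break mirrors the text; a seeded `random.Random` keeps the sketch reproducible.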

3.2 Graph proposal

Each SRG of a video consists of vertices representing the people appearing in the video and edges representing their social relationships. Because an SRG is sparser than a complete graph, we propose only potential edges between detected people to reduce the computational cost of relationship prediction.

Potential edges between vertices in the SRG are proposed based on whether the corresponding two people may have a social relationship. We assume that two people with a social relationship interact at some point in the video. Interactions are determined by the co-occurrence of the two people within a sliding window of several adjacent keyframes. In our experiments, the sliding window size is set to 2, i.e., two people are considered to be interacting if they appear in the same keyframe or in two adjacent keyframes. As illustrated in Fig. 2, the graph proposal module adds an edge between the corresponding two vertices for each pair of people appearing in the same sliding window.
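The co-occurrence rule can be sketched as follows. This is a minimal illustration, assuming the interaction series is stored as a dictionary from keyframe index to the set of person ids in that keyframe (keyframes with nobody may be omitted); `propose_edges` is a hypothetical name.

```python
from itertools import combinations


def propose_edges(series, num_keyframes, window=2):
    """Return undirected edges (a, b), a < b, for every pair of people who appear
    together within `window` consecutive keyframes.

    series: keyframe index -> set of person ids (c_t); missing keys mean no one.
    """
    edges = set()
    for t in range(num_keyframes):
        present = set()
        for u in range(t, min(t + window, num_keyframes)):
            present |= series.get(u, set())
        for a, b in combinations(sorted(present), 2):
            edges.add((a, b))
    return edges
```

For example, with people {1, 2} in keyframe 0 and person 3 in keyframe 1, a window of 2 proposes edges (1, 2), (1, 3) and (2, 3); two people separated by more than one keyframe get no edge.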

Following the procedures mentioned above, an initial graph \(G_{0} = \{\{v_{i}\}, \{e_{mn}\}\}\) is generated, where \(v_{i}\) denotes the vertex representing person \(p_{i}\) and \(e_{mn}\) denotes an edge between vertex \(v_{m}\) and \(v_{n}\) in \(G_{0}\).

3.3 Feature extraction

Social relationship detection is related to both the personal attributes of people, such as age, gender and clothing, and the interactions between people. Thus, we extract face features and body features for VSRGG. Each person \(p_{i}\) has many face and body instances across the keyframes of the video. Extracting features for all of these instances would produce large-scale, redundant features, so only the most representative instances of each person are selected for feature extraction. Since clear, frontal faces provide more effective features, the face instance \(\hat{f_{i}}\) with the largest area is selected. Bodies in videos, especially movies and dramas, are often incomplete due to occlusions or close-ups; thus, the body instance \(\hat{b_{i}}\) with the maximum height-width ratio is selected to capture as much of each person's body as possible.
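The two selection rules reduce to simple arg-max operations over a person's boxes. A minimal sketch, assuming (x1, y1, x2, y2) boxes and treating "height-width ratio" as height divided by width; the function names are hypothetical.

```python
def box_area(box):
    """Area of an (x1, y1, x2, y2) box; zero for degenerate boxes."""
    return max(0, box[2] - box[0]) * max(0, box[3] - box[1])


def height_width_ratio(box):
    """Height divided by width; tall, uncropped bodies score higher."""
    width, height = box[2] - box[0], box[3] - box[1]
    return height / width if width > 0 else 0.0


def select_representatives(face_boxes, body_boxes):
    """Pick the largest face and the body with the largest height/width ratio."""
    face = max(face_boxes, key=box_area)
    body = max(body_boxes, key=height_width_ratio) if body_boxes else None
    return face, body
```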

Features are extracted from \(\hat{f_{i}}\) and \(\hat{b_{i}}\) using a VGG16 model [49] pre-trained on the UTKFace dataset [50] and a ResNet50 model [51] pre-trained on the Market1501 dataset [52, 53], respectively. We represent the vertex \(v_{i}\) by fusing the features of \(\hat{f_{i}}\) and \(\hat{b_{i}}\) with a two-layer perceptron ϕ:

\[ \boldsymbol{v}_{i}^{0} = \phi\left(\left[\boldsymbol{f}_{i}^{\text{f}}, \boldsymbol{f}_{i}^{\text{b}}\right]\right) \quad (1) \]

where \(\boldsymbol{v}_{i}^{0}\) denotes the fused feature of vertex \(v_{i}\) in \(G_{0}\), \(\boldsymbol{f}_{i}^{\text{f}}\) and \(\boldsymbol{f}_{i}^{\text{b}}\) denote the features of \(\hat{f_{i}}\) and \(\hat{b_{i}}\), respectively, \([\cdot ]\) denotes vector concatenation, and ϕ is implemented with a \(4096 \times 2048\) fully connected layer followed by a ReLU layer.
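The fusion step, concatenation followed by one fully connected layer and a ReLU, can be sketched in NumPy. The backbone output sizes are not stated alongside the \(4096 \times 2048\) layer, so this sketch uses toy dimensions; `fuse_vertex` and the random weights are hypothetical stand-ins for trained parameters.

```python
import numpy as np


def fuse_vertex(face_feat, body_feat, weight, bias):
    """v_i^0 = ReLU(W [f^f; f^b] + b): concatenate, then one linear layer + ReLU."""
    x = np.concatenate([face_feat, body_feat])
    return np.maximum(weight @ x + bias, 0.0)


# Toy sizes only; weight has shape (out_dim, face_dim + body_dim).
rng = np.random.default_rng(0)
face_dim, body_dim, out_dim = 8, 4, 6
v0 = fuse_vertex(
    rng.normal(size=face_dim),
    rng.normal(size=body_dim),
    rng.normal(size=(out_dim, face_dim + body_dim)),
    np.zeros(out_dim),
)
```

Concatenating before the linear layer lets the layer learn cross-terms between face and body cues, which a per-modality projection followed by summation would not.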

Features that describe the interactions between people are also extracted. When two people are represented by adjacent vertices in \(G_{0}\), they co-occur in one or more sliding windows of adjacent keyframes. The keyframes within these sliding windows are collected as

\[ \Theta_{ij} = \bigcup_{t \,:\, p_{i}, p_{j} \in c_{t} \cup \cdots \cup c_{t+\Delta t}} \left\{K_{t}, \ldots, K_{t+\Delta t}\right\} \quad (2) \]

where \(\Theta _{ij}\) denotes the set of keyframes of the sliding windows containing the person pair \(p_{i}\) and \(p_{j}\), \(K_{t}\) denotes the keyframe at time t, Δt denotes the offset of the sliding window (1 in our experiments, consistent with a window size of 2), and \(p_{i}, p_{j} \in c_{t} \cup \cdots \cup c_{t+{{\Delta}} t}\) denotes that \(p_{i}\) and \(p_{j}\) appear in the sliding window beginning at t. The number of keyframes in \(\Theta _{ij}\) is adjusted to a constant (16 in our experiments) by uniformly sampling or oversampling existing frames. Based on the adjusted \(\Theta _{ij}\), a 3D-ResNet50 [54] pre-trained on ActivityNet [55] is applied to extract the feature \(\boldsymbol{\varepsilon}^{0}_{ij}\) representing the edge \(e_{ij}\) in \(G_{0}\).
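Collecting \(\Theta_{ij}\) and fixing its length can be sketched as follows. This is an illustration under assumptions: keyframes are referred to by integer index, the interaction series is a dictionary from keyframe index to person ids, and the resampling uses evenly spaced indices (which repeat frames when fewer than 16 are available); the function names are hypothetical.

```python
def collect_keyframes(series, i, j, num_keyframes, delta_t=1):
    """Indices of keyframes in every window [t, t + delta_t] whose people
    (union of c_t ... c_{t+delta_t}) include both person i and person j."""
    frames = set()
    for t in range(num_keyframes):
        window = range(t, min(t + delta_t + 1, num_keyframes))
        present = set().union(*(series.get(u, set()) for u in window))
        if i in present and j in present:
            frames.update(window)
    return sorted(frames)


def resample(frames, k=16):
    """Uniformly subsample (len >= k) or oversample by repetition (len < k) to k frames."""
    if not frames:
        raise ValueError("no keyframes to resample")
    n = len(frames)
    return [frames[(m * n) // k] for m in range(k)]
```

The resampled index list would then select the frames fed to the clip-level 3D CNN.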

3.4 Relationship prediction

Context information is leveraged for relationship prediction. Inspired by iterative message passing for scene graph generation [42], a complete graph message passing mechanism is introduced, in which the vertex feature and edge feature are iteratively updated with messages from adjacent vertices and edges.

Let \(v_{i}\) denote vertex i with feature \(\boldsymbol{v}_{i}\), and let \(e_{ij}\) denote the edge between \(v_{i}\) and \(v_{j}\) with feature \(\boldsymbol{\varepsilon}_{ij}\). \(E_{i} = \{e_{ik_{1}},\ldots , e_{ik_{n}}\}\) is the set of all edges connected to \(v_{i}\), and \(V_{ij} = \{v_{i},v_{j}\}\) is the set of the two vertices of edge \(e_{ij}\). We calculate the vertex message \(\boldsymbol{\nu}^{d}_{i}\) and edge message \(\boldsymbol{\xi}^{d}_{ij}\) after the d-th iteration as follows:

where \(\boldsymbol{\nu}^{d}_{i}\) and \(\boldsymbol{\xi}^{d}_{ij}\) denote the messages for vertex \(v_{i}\) and edge \(e_{ij}\) after the d-th iteration, respectively; \(e_{mn}\) denotes an edge in \(E_{i}\); \(v_{k}\) denotes a vertex in \(V_{ij}\); \(\boldsymbol{\omega}_{e}\) and \(\boldsymbol{\omega}_{v}\) are two weight vectors used to calculate the attention weights of the collected vertices and edges, respectively; \(\boldsymbol{v}_{i}^{d}\) and \(\boldsymbol{v}_{k}^{d}\) denote the features of vertices \(v_{i}\) and \(v_{k}\) after the d-th iteration, respectively; \(\boldsymbol{\varepsilon}_{mn}^{d}\) and \(\boldsymbol{\varepsilon}_{ij}^{d}\) denote the features of edges \(e_{mn}\) and \(e_{ij}\) after the d-th iteration, respectively; \(\sigma (\cdot )\) denotes the sigmoid function, which maps the attention weights to the range (0, 1); and d denotes the iteration index in message passing, starting from 0.
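The exact message equations are not shown here, so the following NumPy sketch assumes a form modeled on the iterative message passing of Xu et al. [42]: each collected feature is gated by a sigmoid of a weight vector applied to the concatenation of the receiver's and the sender's features, and the gated features are summed. It also assumes vertex and edge features share one dimension D and follows the text's mapping (ω_v weighs collected edges, ω_e weighs collected vertices). Function names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def vertex_message(i, V, E, w_v):
    """nu_i: sigmoid-gated sum of the features of edges incident to vertex i.

    V: (N, D) vertex features; E: dict (m, n) -> (D,) edge features;
    w_v: (2D,) attention weight vector over collected edges (assumed form).
    """
    msg = np.zeros(V.shape[1])
    for (m, n), eps in E.items():
        if i in (m, n):
            gate = sigmoid(w_v @ np.concatenate([V[i], eps]))
            msg += gate * eps
    return msg


def edge_message(ij, V, E, w_e):
    """xi_ij: sigmoid-gated sum of the features of the two endpoints of edge ij."""
    eps = E[ij]
    msg = np.zeros_like(eps)
    for k in ij:
        gate = sigmoid(w_e @ np.concatenate([eps, V[k]]))
        msg += gate * V[k]
    return msg


V = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
E = {(0, 1): np.array([2.0, 0.0]), (1, 2): np.array([0.0, 2.0])}
nu_1 = vertex_message(1, V, E, np.zeros(4))  # zero weights -> every gate is 0.5
xi_01 = edge_message((0, 1), V, E, np.zeros(4))
```

With zero weights every gate is 0.5, so `nu_1` averages the two incident edge features to [1, 1] and `xi_01` averages the two endpoint features to [0.5, 0.5].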

We refine \(G_{0}\) into the final SRG by iteratively updating the representations of its vertices and edges with two gated recurrent units (GRUs) [56], which take the messages as inputs and the representations as hidden states. The representations of the graph's vertices and edges are updated by Eqs. (5) and (6):

\[ \boldsymbol{v}_{i}^{d+1} = \Psi_{v}\left(\boldsymbol{\nu}_{i}^{d}, \boldsymbol{v}_{i}^{d}\right) \quad (5) \]

\[ \boldsymbol{\varepsilon}_{ij}^{d+1} = \Psi_{e}\left(\boldsymbol{\xi}_{ij}^{d}, \boldsymbol{\varepsilon}_{ij}^{d}\right) \quad (6) \]

where \(\Psi _{v}\) and \(\Psi _{e}\) are the two GRUs for updating the vertex and edge representations, respectively.
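The GRU-based update can be sketched in NumPy with a minimal GRU cell whose input is the message and whose hidden state is the current representation. This is an illustration, not the authors' implementation: it assumes vertex and edge features share one dimension, uses one common GRU gate formulation, and the class and function names are hypothetical; a framework cell such as PyTorch's `torch.nn.GRUCell` would normally be used instead.

```python
import numpy as np


def _sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


class GRUCell:
    """Minimal GRU cell: input x is the message, hidden state h is the representation."""

    def __init__(self, dim, rng):
        scale = 1.0 / np.sqrt(dim)
        # Index 0: update gate z, 1: reset gate r, 2: candidate state.
        self.W = rng.uniform(-scale, scale, size=(3, dim, dim))
        self.U = rng.uniform(-scale, scale, size=(3, dim, dim))
        self.b = np.zeros((3, dim))

    def __call__(self, x, h):
        z = _sigmoid(self.W[0] @ x + self.U[0] @ h + self.b[0])
        r = _sigmoid(self.W[1] @ x + self.U[1] @ h + self.b[1])
        h_tilde = np.tanh(self.W[2] @ x + self.U[2] @ (r * h) + self.b[2])
        return (1.0 - z) * h + z * h_tilde


def update_graph(V, E, vertex_msgs, edge_msgs, psi_v, psi_e):
    """One iteration: v^{d+1} = psi_v(nu^d, v^d) and eps^{d+1} = psi_e(xi^d, eps^d)."""
    V_new = np.stack([psi_v(vertex_msgs[i], V[i]) for i in range(len(V))])
    E_new = {ij: psi_e(edge_msgs[ij], eps) for ij, eps in E.items()}
    return V_new, E_new


rng = np.random.default_rng(0)
psi_v, psi_e = GRUCell(2, rng), GRUCell(2, rng)
V = np.array([[1.0, 0.0], [0.0, 1.0]])
E = {(0, 1): np.array([0.5, 0.5])}
V1, E1 = update_graph(V, E, {0: np.ones(2), 1: np.ones(2)}, {(0, 1): np.ones(2)}, psi_v, psi_e)

# Sanity check: with all parameters zero, z = 0.5 and h_tilde = 0, so h is halved.
zero_cell = GRUCell(2, rng)
zero_cell.W[:] = 0.0
zero_cell.U[:] = 0.0
halved = zero_cell(np.ones(2), np.array([2.0, 4.0]))  # -> [1.0, 2.0]
```

Running `update_graph` n times, with fresh messages computed between iterations, yields the final edge representations used for prediction.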

Unlike in scene graph generation [42], the category label of each vertex cannot be used because all vertices in an SRG represent people. This leads to vertex representation confusion, i.e., all vertex representations in the graph are similar, and the predictions easily overfit to the most common undirected relationships, such as friends and colleagues. One straightforward solution is to treat each person as an independent category; however, the number of people varies across videos, and different videos contain different people. Another solution, proposed in Ref. [4], is to aggregate messages only from vertices to edges and use edge representations for social relationship prediction. However, such a solution hampers the propagation of context information. Therefore, instead of constraining the vertex representations, we fuse the final representation of each edge \(e_{ij}\) with the initial representations of its two vertices \(v_{i}\) and \(v_{j}\), and conduct context-aware social relationship prediction with a fully connected layer using Eq. (7):

where \(\boldsymbol{r}_{ij}\) denotes the predicted social relationship on edge \(e_{ij}\), i.e., the social relationship between persons \(p_{i}\) and \(p_{j}\); \(\boldsymbol{v}_{i}^{0}\) and \(\boldsymbol{v}_{j}^{0}\) denote the initial representations of \(v_{i}\) and \(v_{j}\), respectively; \(\boldsymbol{\varepsilon}_{ij}^{n}\) denotes the final representation of \(e_{ij}\) after n iterations; and ϕ is implemented with a fully connected layer.
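The prediction step can be sketched as a linear classifier over the fused edge feature. Several details here are assumptions rather than statements from the text: "fuse" is taken to mean concatenation in the order \([\boldsymbol{v}_{i}^{0}; \boldsymbol{\varepsilon}_{ij}^{n}; \boldsymbol{v}_{j}^{0}]\), a softmax is applied over classes, and a hypothetical class index `NO_RELATION` is used to remove edges without a social relationship.

```python
import numpy as np

NO_RELATION = 0  # hypothetical label index for "no social relationship"


def softmax(logits):
    shifted = np.exp(logits - logits.max())
    return shifted / shifted.sum()


def predict_edges(V0, E_final, weight, bias):
    """Classify each edge from [v_i^0; eps_ij^n; v_j^0] with one linear layer,
    and drop edges whose top class is NO_RELATION."""
    kept = {}
    for (i, j), eps in E_final.items():
        x = np.concatenate([V0[i], eps, V0[j]])
        probs = softmax(weight @ x + bias)
        label = int(np.argmax(probs))
        if label != NO_RELATION:
            kept[(i, j)] = (label, float(probs[label]))
    return kept


# Toy example: 1-D features, 3 classes (0 = no relation).
V0 = np.array([[1.0], [0.0], [0.0]])
E_final = {(0, 1): np.array([1.0]), (1, 2): np.array([-1.0])}
W = np.array([[0.0, -5.0, 0.0], [0.0, 5.0, 0.0], [0.0, 0.0, 0.0]])
result = predict_edges(V0, E_final, W, np.zeros(3))
```

Reusing the initial vertex features \(\boldsymbol{v}^{0}\) rather than the message-passed ones keeps each person's own appearance cues distinct, which is the motivation the text gives for avoiding vertex representation confusion.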

4 Dataset

Early video social relationship analysis datasets such as SRIV [3] and ViSR [4] only provide social relationship tags for video clips and lack person-level social relationship annotations. MovieGraphs [26] and the extension of HLVU [36] in the deep video understanding challenge are datasets depicting human-centered situations, containing interactions between characters, their relationships, and various visible and inferred properties such as the reasons behind certain interactions. The annotations in the MovieGraphs and HLVU datasets can support VSRGG evaluation, but they are noisy and sparse. The scale of HLVU is much smaller than that of MovieGraphs. Xu et al. [16] constructed a BiliBili dataset specifically for VSRGG, but this dataset has not yet been published.

We construct a new VidSoR dataset for the VSRGG task. The dataset consists of videos collected from over 750 episodes of more than 300 different TV dramas, encompassing four categories: situation comedy, legal/medical, modern life and romance. We exclude categories such as cartoon and fiction, which are less relevant to people’s daily lives. We identify and define eight relationship types based on domain theory [33]. Table 1 presents a detailed description of the relationship types.

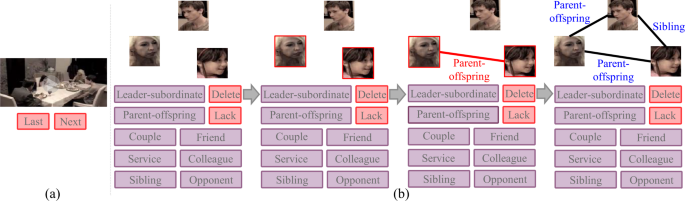

Constructing the VidSoR dataset involves four main steps. Figure 3 displays the key steps of annotating the relationships:

1) Each episode is cut into multiple clips ranging from 1 min to 4 min 51 s.

2) A filtering process ensures that each video clip includes at least two individuals, and clips containing opening or ending sequences are discarded.

3) Faces are then detected by RetinaFace [43] at a sampling rate of one frame per second, and two annotators manually group recognizable faces into individuals to form the pivot face set of each character. Unimportant characters in the video clips, such as pedestrians in the background, are discarded.

4) Two annotators annotate the relationships separately and compare their results. A third annotator votes in case of conflicting opinions; if the third annotator is unsure of the annotation, the disputed video clip is discarded.

An example of the annotation process (sourced from the DVU challenge [22]). (a) Watch the video clip to be annotated. (b) Confirm characters, choose a pair of characters, choose the relationship label of the pair and finally annotate all relationships

The VidSoR dataset comprises 1798 valid video clips totaling 72 h in duration, with an average duration of 144 s. Additionally, there is an average of 3.50 person instances and 2.95 relationship instances in each video. Some annotation examples are shown in Fig. 4.

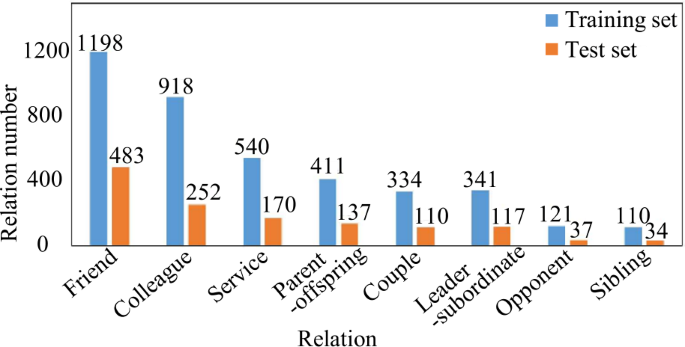

There are a total of 5313 relationship instances in the VidSoR dataset, and their distribution between the training and test sets is depicted in Fig. 5. The dataset has a long-tailed distribution, with certain relationship types such as “friends” and “colleagues” appearing more frequently than others, which poses a challenge for our task. Two constraints are enforced for the dataset split. First, video clips from different TV drama categories are similarly distributed between the training and test sets. Second, video clips from the same TV drama appear in only one split, which prevents a method from improving its performance by memorizing recurring characters within the same TV drama. We initially split the dataset into training and test sets at a ratio of 3:1, following the split ratio of ViSR. To meet these constraints, we then make minor adjustments, ending up with 1347 clips in the training set and 451 clips in the test set.
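A split that satisfies both constraints can be sketched as a grouped, per-category assignment. This is a minimal sketch, not the authors' procedure: it assumes each clip record carries hypothetical `clip_id`, `drama` and `category` fields, that each drama belongs to a single category, and it greedily fills a 25% test quota with whole dramas, which is only one simple way to approximate a 3:1 ratio.

```python
import random
from collections import defaultdict


def split_by_drama(clips, test_ratio=0.25, seed=0):
    """Assign whole dramas to train or test, category by category.

    Keeping all clips of a drama in one split prevents memorizing recurring
    characters; splitting per category keeps category proportions similar.
    """
    rng = random.Random(seed)
    by_cat = defaultdict(lambda: defaultdict(list))
    for clip in clips:
        by_cat[clip["category"]][clip["drama"]].append(clip["clip_id"])
    train, test = [], []
    for cat in sorted(by_cat):
        dramas = sorted(by_cat[cat])
        rng.shuffle(dramas)
        total = sum(len(by_cat[cat][d]) for d in dramas)
        n_test = 0
        for drama in dramas:
            ids = by_cat[cat][drama]
            if n_test + len(ids) <= test_ratio * total:  # greedy fill of the test quota
                test.extend(ids)
                n_test += len(ids)
            else:
                train.extend(ids)
    return train, test


demo_clips = [
    {"clip_id": f"{d}-{c}", "drama": d, "category": "sitcom" if d < 4 else "romance"}
    for d in range(8)
    for c in range(4)
]
train_ids, test_ids = split_by_drama(demo_clips)
```

In practice, the small mismatch between the greedy quota and the exact 3:1 target corresponds to the "minor adjustments" mentioned above.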

Table 2 compares the VidSoR and MovieGraphs datasets. VidSoR is derived from over 750 episodes of more than 300 diverse TV dramas, while MovieGraphs is derived from 51 films. Although MovieGraphs includes more video clips overall, VidSoR contains more valid clips with social relationship annotations. Additionally, the average number of relationship instances per clip is higher in VidSoR. In terms of clip duration, the average clip length is 44 s in MovieGraphs and 144 s in VidSoR. As a result, VidSoR is more complex and challenging than MovieGraphs. The social relationship annotation of VidSoR follows domain theory [33] and categorizes all descriptions into 8 classes. Although MovieGraphs encompasses 107 types of social relationships, current methods reduce them to 5 [16], 8 [23, 25, 57] or 15 [5, 23, 57] types.

5 Experiments

5.1 Evaluation metrics

Following Refs. [2, 12, 13], we employ the mean average precision (mAP) and the mean recall over all classes (mRecall) to evaluate different methods. The mAP is particularly important for social relationship graph generation, where false predictions can severely affect scene understanding.
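Both metrics average a per-class quantity over relationship classes. A plain-Python sketch, assuming the common non-interpolated AP definition and that classes with no test positives are skipped; the authors' evaluation code may differ in these details, and the function names are hypothetical.

```python
def average_precision(scores, labels):
    """Non-interpolated AP for one class: mean of precision@rank at each positive."""
    order = sorted(range(len(scores)), key=lambda k: -scores[k])
    num_pos = sum(labels)
    hits, total = 0, 0.0
    for rank, k in enumerate(order, start=1):
        if labels[k]:
            hits += 1
            total += hits / rank
    return total / num_pos if num_pos else 0.0


def mean_average_precision(score_rows, gt, num_classes):
    """score_rows[k][c]: score of class c for edge k; gt[k]: true class of edge k.
    Averages AP over classes that have at least one positive."""
    aps = []
    for c in range(num_classes):
        labels = [int(g == c) for g in gt]
        if any(labels):
            aps.append(average_precision([row[c] for row in score_rows], labels))
    return sum(aps) / len(aps)


def mean_recall(pred, gt, num_classes):
    """Per-class recall TP / (TP + FN), averaged over classes present in gt."""
    recalls = []
    for c in range(num_classes):
        positives = [k for k, g in enumerate(gt) if g == c]
        if positives:
            recalls.append(sum(pred[k] == c for k in positives) / len(positives))
    return sum(recalls) / len(recalls)
```

Because every class contributes equally, mRecall rewards correct predictions on rare relationship types as much as on "friends" or "colleagues", which matters for the long-tailed VidSoR distribution.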

During testing, for each detected person, we select the face image whose feature is closest to the center of that person's face collection. If the selected face has a mean intersection-over-union (mIoU) greater than 0.5 with one of an annotated person’s pivot faces, or its cosine similarity to the pivot face of its nearest annotated person exceeds 0.6, we match the detected person to that annotated person. If several detected persons match the same ground-truth person, we keep the face collection that contains more faces of this person and evaluate its relationships.
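A minimal Python sketch of this matching rule follows. It assumes that each face is a hypothetical `(frame, box, feature)` triple, treats the overlap test as box IoU between the selected face and a pivot face in the same frame, and takes the "nearest" annotated person to be the one with the highest cosine similarity. The helper names are illustrative, not the authors' code, and duplicate resolution is omitted for brevity.

```python
import math


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm > 0 else 0.0


def representative_face(faces):
    """faces: list of (frame, box, feature). Return the face whose feature is closest to the centroid."""
    dim = len(faces[0][2])
    center = [sum(f[2][i] for f in faces) / len(faces) for i in range(dim)]
    return min(faces, key=lambda f: math.dist(f[2], center))


def match_person(faces, annotated, iou_thr=0.5, cos_thr=0.6):
    """annotated: {person_id: [(frame, box, feature), ...]} pivot faces. Returns a person_id or None."""
    frame, box, feat = representative_face(faces)
    # Rule 1: spatial overlap with a pivot face in the same frame.
    for pid, pivots in annotated.items():
        if any(pf == frame and iou(box, pb) > iou_thr for pf, pb, _ in pivots):
            return pid
    # Rule 2: appearance similarity to the nearest annotated person's pivot face.
    best_pid, best_sim = None, -1.0
    for pid, pivots in annotated.items():
        for _, _, pfeat in pivots:
            sim = cosine(feat, pfeat)
            if sim > best_sim:
                best_pid, best_sim = pid, sim
    return best_pid if best_sim > cos_thr else None
```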

5.2 Implementation details

In the VSRGG task, the faces and bodies of persons are detected by RetinaFace and Faster R-CNN, respectively. Because of incorrect face clusters or unfiltered pedestrians, the proposals generated by the graph proposal module contain a large number of negative relationship samples. To address this issue, we sample negative and positive samples at a ratio of 1:1, during training only.
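The 1:1 sampling can be sketched as below, assuming that all positive proposals are kept and negatives are randomly subsampled to match them; the text does not state which side is subsampled or how often the sample is redrawn. `balance_pairs` and the `(pair, label)` format, with `None` marking a negative, are hypothetical.

```python
import random


def balance_pairs(proposals, seed=0):
    """Keep every positive proposal and randomly subsample negatives to a 1:1 ratio.

    proposals: list of (pair, label); label None marks a negative (no relationship).
    If there are fewer negatives than positives, all negatives are kept.
    """
    rng = random.Random(seed)
    positives = [p for p in proposals if p[1] is not None]
    negatives = [p for p in proposals if p[1] is None]
    sampled = positives + rng.sample(negatives, min(len(negatives), len(positives)))
    rng.shuffle(sampled)  # avoid all positives preceding all negatives
    return sampled
```

At test time no sampling is applied, so the classifier still sees the full, negative-heavy proposal set.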

In the VSRGG∗ task, the input faces and bodies are the manually annotated ones. Although the original faces are also detected by RetinaFace during dataset construction, annotators filter and categorize them; consequently, the bodies aligned to these annotated faces are effectively filtered manually as well. No sampling strategy is used for VSRGG∗.

In CAGNet, all modules except the person detection module are trained, and the feature extraction submodels, which are initialized with pre-trained parameters, are fine-tuned as well. In both tasks, the model is trained with the stochastic gradient descent optimizer, an initial learning rate of \(1.0 \times 10^{-5}\) and a batch size of 8. All experiments are conducted on an RTX 3090 GPU with 24 GB of memory.

5.3 Comparison with state-of-the-art methods

We compare CAGNet with methods related to VSRGG, including image-based social relationship recognition methods: UnionCNN [1], PairCNN [13], First-glance [13], Dual-glance [13], the graph reasoning model (GRM) [14] and the social relationship graph generation network (SRG-GN) [15]; a video-level social relationship recognition method: multi-scale spatial-temporal reasoning (MSTR) [4]; a person-level video social relationship detection method: learning interactions and relationships between characters (LIREC) [5]; and video scene graph generation methods: the gated spatio-temporal fully-connected energy graph (GSTEG) [19], the spatial-temporal transformer (STTran) [20] and the target adaptive context aggregation network (TRACE) [21].

UnionCNN [1] is an image-based visual relationship detection method that combines visual cues from a single CNN with language priors from a word embedding model. PairCNN [13] contains two CNNs with shared weights that encode the two persons separately. First-glance [13] looks only at the pair of people and makes a rough prediction directly. Dual-glance [13] takes a second glance at region proposals and aggregates the region-level predictions to refine the results. GRM [14] constructs a graph of persons and objects and propagates node messages through the graph to explore the interactions between the persons and contextual objects. SRG-GN [15] builds a social relationship graph and uses memory cells to update social relationship states with scene and attribute contexts. MSTR [4] is a multi-scale spatial-temporal reasoning framework with triple graphs that predicts video-level social relationships. LIREC [5] jointly learns interactions and relationships between movie characters from fused visual, audio and text features. GSTEG [19] constructs a fully-connected spatio-temporal graph to jointly learn spatial and temporal information about visual relationships in videos. STTran [20] is a spatial-temporal transformer framework that encodes the spatial context within single frames and decodes visual relationship representations with temporal dependencies across frames. TRACE [21] is a target adaptive context aggregation network that follows the detect-to-track paradigm with a hierarchical relationship tree.

Although several new methods related to VSRGG have been proposed recently [16–18, 23–25, 57], their code has not been released, and they are evaluated on pre-processed versions of MovieGraphs or on self-constructed datasets, so they are not suitable for comparison.

We adapt all of these baselines to VSRGG as follows. 1) Image-based methods: Because image social relationship recognition methods require related people to appear in the same image, all key frames containing more than one person are selected, and each person pair appearing in the same key frame is treated as an input. During training, each person pair is taken as a sample whose ground truth is the annotation of that pair. For the VSRGG task, a sampling strategy similar to ours is used to handle the large number of negative samples. During testing, pairwise relationship prediction is performed and the predictions for the same person pair are collected; a voting mechanism then aggregates the predictions from different key frames for each person pair. 2) Video scene graph generation methods: We convert social relationship annotations into scene graph annotations and train these methods with person-level social relationships as the ground truth for supervised learning. 3) Video-level social relationship analysis methods: We convert person-level social relationship annotations into video-level annotations as the ground truth for supervised learning by cutting each video clip into shorter clips according to person co-occurrence, so that each shorter clip contains one person pair with one social relationship. 4) Person-level social relationship analysis methods: Since LIREC predicts interactions and social relationships jointly and VidSoR does not provide interaction annotations, we adapt its interaction prediction branch to relationship prediction under social relationship supervision.
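The test-time voting used for the image-based baselines can be sketched as majority voting over key frames. The sketch assumes one predicted label per key frame and person pair, and it keys pairs as unordered sets, which suits symmetric relationships such as “friends” but would need ordered pairs for directional types such as parent-offspring. `vote_pair_relationships` is a hypothetical name.

```python
from collections import Counter, defaultdict


def vote_pair_relationships(frame_predictions):
    """frame_predictions: iterable of (frame_id, person_a, person_b, label).

    Returns {frozenset({a, b}): majority label} aggregated over key frames.
    """
    votes = defaultdict(Counter)
    for _, a, b, label in frame_predictions:
        votes[frozenset((a, b))][label] += 1  # (a, b) and (b, a) share one vote box
    # most_common orders equal counts by first occurrence, so ties go to the earlier label.
    return {pair: counter.most_common(1)[0][0] for pair, counter in votes.items()}
```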

As summarized in Table 3, CAGNet achieves balanced performance on both mAP and mRecall in both tasks. Despite extensive exploration of person representation, context encoding and feature fusion, image-based methods do not excel at video social relationship analysis. Image-based social relationship detection methods can only detect relationships when people are visible in the same frame, whereas video-based detection methods can identify relationships even when people appear in different video frames. Consequently, image-based methods perform slightly worse than ours.

Scene graph generation methods primarily focus on detecting visual relationships between common objects in videos, and they are limited in analyzing social relationships, which require reasoning about implicit information. Consequently, most of these methods obtain relatively low metric values, with the exception of the mAP of STTran.

The MSTR method, designed for video-level social relationship analysis, performs poorly in person-level social relationship analysis. The LIREC method, which leverages multi-modal features for person-level video social relationship detection, obtains the highest mRecall in both VSRGG and VSRGG∗. However, our method, using only visual features, reaches 88.35% (7.05 vs. 7.98) and 93.61% (12.74 vs. 13.61) of LIREC’s mRecall in VSRGG and VSRGG∗, respectively, while yielding substantially higher mAP (10.04 vs. 2.07 and 17.79 vs. 10.49).
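The percentages above are plain ratios of the reported mRecall values, as this short Python check shows.

```python
# mRecall values reported above for CAGNet and LIREC.
cagnet = {"VSRGG": 7.05, "VSRGG*": 12.74}
lirec = {"VSRGG": 7.98, "VSRGG*": 13.61}

# Share of LIREC's mRecall reached by CAGNet, in percent.
ratios = {task: round(100 * cagnet[task] / lirec[task], 2) for task in cagnet}
print(ratios)  # {'VSRGG': 88.35, 'VSRGG*': 93.61}
```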

In summary, our method makes accurate predictions with a comparatively high mRecall when using only visual features. The graph proposal module helps detect more complete relationship graphs and thus improves mRecall; the feature extraction module incorporates both static personal information and information about interactions; and the message passing module aggregates context information.

5.4 Ablation study

Backbone. VGG16 [49], ResNet50 [51] and 3D-ResNet50 [54] are used as the feature extraction submodels in our method. We also evaluate a variant of CAGNet, denoted “CAGNet-ViT”, which uses ViT-B [58] for face and body feature extraction and ViViT-B [59] for temporal feature extraction. As shown in Table 4, CAGNet-ViT performs worse than CAGNet. We attribute this to the dataset not being large enough to train a method with a transformer backbone. In addition, training the variant sometimes exceeds the available GPU memory. Thus, CAGNet based on VGG and ResNet achieves better performance.

Body and facial features. Our method extracts both body and facial features. To validate their influence on CAGNet, we report the performance of CAGNet trained with only facial features (Face), only body features (Body) and both (Body + Face). As shown in Table 5, CAGNet with both facial and body features performs best on all metrics, which shows that both types of features are important. The results also show that facial features are more effective than body features. On the one hand, the face conveys rich information, such as the gender, age and emotion of a character, whereas the body does not capture such fine-grained information; on the other hand, bodies in videos such as movies and dramas are often incomplete because of occlusion or close-ups, which weakens the expressive power of body features.

Sliding window width. The sliding window width is a key factor in identifying potential SRGs. Increasing the width can improve the completeness of the relationship graph, but it also produces more false positive relationship proposals. Hence, we calculate the F-score to evaluate the performance:
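\[F = \frac{(1+\beta^{2}) \times \text{mAP} \times \text{mRecall}}{\beta^{2} \times \text{mAP} + \text{mRecall}},\]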
where \(\beta^{2}\) is set to 0.3 to increase the weight of mAP. Note that the gain in completeness is limited and does not keep growing as the sliding window widens. We evaluate our method on VSRGG∗ with widths ranging from 1 to 5; the results are shown in Table 6. With a sliding window width of 2, both mAP and the F-score reach their maximum values.
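Assuming the F-score follows the standard \(F_{\beta}\) form, with mAP in the role of precision and mRecall in the role of recall, it can be computed as below. With \(\beta^{2}=0.3<1\), the score favors mAP: a high mAP with a low mRecall scores better than the reverse. The function name is illustrative.

```python
def f_score(map_value, mrecall, beta_sq=0.3):
    """F-beta combining mAP (precision-like) and mRecall; beta_sq < 1 weights mAP more."""
    denom = beta_sq * map_value + mrecall
    if denom == 0:
        return 0.0  # both metrics are zero
    return (1 + beta_sq) * map_value * mrecall / denom
```

When mAP and mRecall are equal, the score equals their common value, so the weighting only matters when the two metrics disagree.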

Combinations of representations. In our method, the edge representations after message passing and the vertex representations before message passing are used together, to capture context information and to incorporate distinguishable personal features, respectively. To study the influence of different combinations of vertex and edge representations, we conduct experiments with \(E_{0}\), \(E_{n}\), \(V_{0}+E_{0}\), \(V_{n}+E_{n}\), \(V_{n}+E_{0}\) and \(V_{0}+E_{n}\), where \(E_{0}\) and \(E_{n}\) denote the edge representations before and after message passing, and \(V_{0}\) and \(V_{n}\) denote the vertex representations before and after message passing. We also calculate the F-score to evaluate the performance.
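The ablation variants can be illustrated by how the classifier input for a person pair is assembled. The sketch reads “+” as concatenation of both persons' vertex vectors with the pair's edge vector; that reading, the dictionary layout and the name `relation_input` are assumptions for illustration. The proposed configuration corresponds to `"V0+En"`.

```python
def relation_input(v0, vn, e0, en, pair, variant="V0+En"):
    """Assemble the classifier input for one person pair under an ablation variant.

    v0 / vn: {person: vertex vector} before / after message passing (hypothetical layout).
    e0 / en: {pair: edge vector} before / after message passing.
    Each '+'-separated term is appended in order; a vertex term contributes
    both persons' vectors, an edge term contributes the pair's vector.
    """
    a, b = pair
    vertex_sets = {"V0": v0, "Vn": vn}
    edge_sets = {"E0": e0, "En": en}
    features = []
    for term in variant.split("+"):
        if term in vertex_sets:
            features += vertex_sets[term][a] + vertex_sets[term][b]
        elif term in edge_sets:
            features += edge_sets[term][pair]
        else:
            raise ValueError(f"unknown term: {term}")
    return features
```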

As displayed in Table 7, the proposed method outperforms \(V_{0}+E_{0}\) on mAP and F-score, demonstrating the effectiveness of message passing for incorporating context information. It also outperforms \(V_{n}+E_{n}\) on mAP and F-score, which shows that distinguishable vertex representations help the model make balanced predictions across all relationship categories.

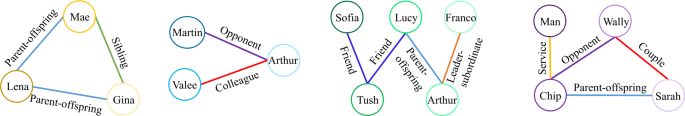

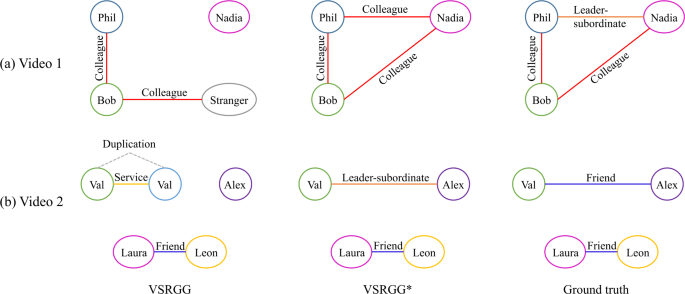

5.5 Qualitative results

Qualitative results are shown in Fig. 6. Our method performs worse on VSRGG than on VSRGG∗; in particular, its mRecall on VSRGG is little more than half of that on VSRGG∗ (7.05 vs. 12.74). We use qualitative examples to illustrate two possible reasons for this gap. The first reason is person detection errors in the VSRGG task: as presented in Fig. 6, the same person may be detected more than once, and some people are missed. The second reason is that, even for correctly detected persons, the face cluster of each person contains some incorrect faces, which leads to false predictions between correct person pairs. As shown in Fig. 6, for correctly detected person pairs, the predictions in VSRGG∗ are better than those in VSRGG.

6 Conclusions

In this paper, we propose CAGNet, a novel method for the person-level video social relationship analysis task VSRGG, which requires constructing a social relationship graph that contains the social relationships between people appearing in a given video. CAGNet detects relationships in videos with four modules: person detection, graph proposal, feature extraction and relationship prediction. Furthermore, we construct VidSoR, a more complex and challenging dataset for VSRGG evaluation, which consists of 6276 person instances and 5313 relationship instances. Compared with several state-of-the-art baselines on the VidSoR dataset, CAGNet achieves comparatively satisfactory performance.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Abbreviations

- CAGNet: context-aware graph neural network
- SRG: social relationship graph
- SRIV: social relationship in video
- ViSR: video social relationship
- VSRGG: video social relationship graph generation

References

Lu, C., Krishna, R., Bernstein, M., & Li, F. (2016). Visual relationship detection with language priors. In B. Leibe, J. Matas, N. Sebe, et al. (Eds.), Proceedings of the 14th European conference on computer vision (pp. 852–869). Cham: Springer.

Tang, K., Niu, Y., Huang, J., Shi, J., & Zhang, H. (2020). Unbiased scene graph generation from biased training. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3716–3725). Piscataway: IEEE.

Lv, J., Liu, W., Zhou, L., Wu, B., & Ma, H. (2018). Multi-stream fusion model for social relation recognition from videos. In K. Schoeffmann, T. H. Chalidabhongse, C.-W. Ngo, et al. (Eds.), Proceedings of the 24th international conference on multimedia modeling (pp. 355–368). Cham: Springer.

Liu, X., Liu, W., Zhang, M., Chen, J., Gao, L., Yan, C., et al. (2019). Social relation recognition from videos via multi-scale spatial-temporal reasoning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3566–3574). Piscataway: IEEE.

Kukleva, A., Tapaswi, M., & Laptev, I. (2020). Learning interactions and relationships between movie characters. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 9846–9855). Piscataway: IEEE.

Zhang, Z., Luo, P., Loy, C.-C., & Tang, X. (2015). Learning social relation traits from face images. In Proceedings of the IEEE international conference on computer vision (pp. 3631–3639). Piscataway: IEEE.

Zhao, W., Wu, X., & Zhang, X. (2020). MemCap: memorizing style knowledge for image captioning. In Proceedings of the 34th AAAI conference on artificial intelligence (pp. 12984–12992). Palo Alto: AAAI Press.

Chen, S., & Jiang, Y.-G. (2019). Motion guided spatial attention for video captioning. In Proceedings of the 33rd AAAI conference on artificial intelligence (pp. 8191–8198). Palo Alto: AAAI Press.

Shah, S., Mishra, A., Yadati, N., & Talukdar, P.P. (2019). KVQA: knowledge-aware visual question answering. In Proceedings of the 33rd AAAI conference on artificial intelligence (pp. 8876–8884). Palo Alto: AAAI Press.

Guo, W., Zhang, Y., Yang, J., & Yuan, X. (2021). Re-attention for visual question answering. 30, 6730–6743.

Wu, L., Sun, P., Fu, Y., Hong, R., Wang, X., & Wang, M. (2019). A neural influence diffusion model for social recommendation. In Proceedings of the international ACM SIGIR conference on research and development in information retrieval (pp. 235–244). New York: ACM.

Sun, Q., Schiele, B., & Fritz, M. (2017). A domain based approach to social relation recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3481–3490). Piscataway: IEEE.

Li, J., Wong, Y., Zhao, Q., & Kankanhalli, M.S. (2017). Dual-glance model for deciphering social relationships. In Proceedings of the IEEE international conference on computer vision (pp. 2650–2659). Piscataway: IEEE.

Wang, Z., Chen, T., Ren, J., Yu, W., Cheng, H., & Lin, L. (2018). Deep reasoning with knowledge graph for social relationship understanding. In J. Lang (Ed.), Proceedings of the 27th international joint conference on artificial intelligence (pp. 1021–1028). Palo Alto: AAAI Press.

Goel, A., Ma, K.T., & Tan, C. (2019). An end-to-end network for generating social relationship graphs. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 11186–11195). Piscataway: IEEE.

Xu, T., Zhou, P., Hu, L., He, X., Hu, Y., & Chen, E. (2021). Socializing the videos: a multimodal approach for social relation recognition. ACM Transactions on Multimedia Computing Communications and Applications, 17(1), 1–23.

Hu, Y., Cao, C., Li, F., Yan, C., Qi, J., & Wu, B. (2023). Overall-distinctive GCN for social relation recognition on videos. In D.T. Dang-Nguyen, C. Gurrin, M.A. Larson, et al. (Eds.), Proceedings of the 29th international conference on multimedia modeling (pp. 57–68). Cham: Springer.

Hu, Y., Yan, C., Cao, C., Wang, H., & Wu, B. (2023). Social relation graph generation on untrimmed video. In D.T. Dang-Nguyen, C. Gurrin, M.A. Larson, et al. (Eds.), Proceedings of the 29th international conference on multimedia modeling (pp. 739–744). Cham: Springer.

Tsai, Y.-H.H., Divvala, S., Morency, L.-P., Salakhutdinov, R., & Farhadi, A. (2019). Video relationship reasoning using gated spatio-temporal energy graph. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10424–10433). Piscataway: IEEE.

Cong, Y., Liao, W., Ackermann, H., Rosenhahn, B., & Yang, M.Y. (2021). Spatial-temporal transformer for dynamic scene graph generation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 16372–16382). Piscataway: IEEE.

Teng, Y., Wang, L., Li, Z., & Wu, G. (2021). Target adaptive context aggregation for video scene graph generation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 13688–13697). Piscataway: IEEE.

Curtis, K., Awad, G., Rajput, S., & Soboroff, I. HLVU: a new challenge to test deep understanding of movies the way humans do. Retrieved June 19, 2024, from https://www-nlpir.nist.gov/projects/trecvid/dvu/dvu.development.dataset/.

Cao, C., Yan, C., Li, F., Liu, Z., Wang, Z., & Wu, B. (2021). Recognizing characters and relationships from videos via spatial-temporal and multimodal cues. In Proceedings of the IEEE international conference on big knowledge (pp. 174–181). Piscataway: IEEE.

Wu, S., Chen, J., Xu, T., Chen, L., Wu, L., Hu, Y., et al. (2021). Linking the characters: video-oriented social graph generation via hierarchical-cumulative GCN. In Proceedings of the ACM international conference on multimedia (pp. 4716–4724). New York: ACM.

Teng, Y., Song, C., & Wu, B. (2022). Learning social relationship from videos via pre-trained multimodal transformer. IEEE Signal Processing Letters, 29, 1377–1381.

Vicol, P., Tapaswi, M., Castrejon, L., & Fidler, S. (2018). MovieGraphs: towards understanding human-centric situations from videos. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8581–8590). Piscataway: IEEE.

Wang, G., Gallagher, A., Luo, J., & Forsyth, D. (2010). Seeing people in social context: recognizing people and social relationships. In K. Daniilidis, P. Maragos, & N. Paragios (Eds.), Proceedings of the 11th European conference on computer vision (pp. 169–182). Berlin: Springer.

Dibeklioglu, H., Salah, A.A., & Gevers, T. (2013). Like father, like son: facial expression dynamics for kinship verification. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1497–1504). Piscataway: IEEE.

Fang, R., Tang, K.D., Snavely, N., & Chen, T. (2010). Towards computational models of kinship verification. In Proceedings of the IEEE international conference on image processing (pp. 1577–1580). Piscataway: IEEE.

Xia, S., Shao, M., Luo, J., & Fu, Y. (2012). Understanding kin relationships in a photo. IEEE Transactions on Multimedia, 14(4), 1046–1056.

Guo, Y., Dibeklioglu, H., & van der Maaten, L. (2014). Graph-based kinship recognition. In Proceedings of the international conference on pattern recognition (pp. 4287–4292). Piscataway: IEEE.

Kiesler, D.J. (1983). The 1982 interpersonal circle: a taxonomy for complementarity in human transactions. Psychological Review, 90(3), 185.

Bugental, D.B. (2000). Acquisition of the algorithms of social life: a domain-based approach. Psychological Bulletin, 126(2), 187.

Fiske, A.P. (1992). The four elementary forms of sociality: framework for a unified theory of social relations. Psychological Review, 99(4), 689.

Zhang, M., Liu, X., Liu, W., Zhou, A., Ma, H., & Mei, T. (2019). Multi-granularity reasoning for social relation recognition from images. In IEEE international conference on multimedia and expo (pp. 1618–1623). Piscataway: IEEE.

Curtis, K., Awad, G., Rajput, S., & Soboroff, I. (2020). HLVU: a new challenge to test deep understanding of movies the way humans do. In Proceedings of the international conference on multimedia retrieval (pp. 355–361). New York: ACM.

Chen, L., Zhang, H., Xiao, J., He, X., Pu, S., & Chang, S.-F. (2019). Counterfactual critic multi-agent training for scene graph generation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 4613–4623). Piscataway: IEEE.

Liu, A.-A., Su, Y.-T., Nie, W.-Z., & Kankanhalli, M. (2016). Hierarchical clustering multi-task learning for joint human action grouping and recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(1), 102–114.

Johnson, J., Krishna, R., Stark, M., Li, L.-J., Shamma, D., Bernstein, M., et al. (2015). Image retrieval using scene graphs. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3668–3678). Piscataway: IEEE.

Zellers, R., Yatskar, M., Thomson, S., & Choi, Y. (2018). Neural motifs: scene graph parsing with global context. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5831–5840). Piscataway: IEEE.

Yang, J., Lu, J., Lee, S., Batra, D., & Parikh, D. (2018). Graph R-CNN for scene graph generation. In V. Ferrari, M. Hebert, C. Sminchisescu, et al. (Eds.), Proceedings of the 15th European conference on computer vision (pp. 670–685). Cham: Springer.

Xu, D., Zhu, Y., Choy, C. B., & Li, F. (2017). Scene graph generation by iterative message passing. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5410–5419). Piscataway: IEEE.

Deng, J., Guo, J., Ververas, E., Kotsia, I., & Zafeiriou, S. (2020). RetinaFace: single-shot multi-level face localisation in the wild. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5203–5212). Piscataway: IEEE.

Zhan, X., Liu, Z., Yan, J., Lin, D., & Loy, C.C. (2018). Consensus-driven propagation in massive unlabeled data for face recognition. In V. Ferrari, M. Hebert, C. Sminchisescu, et al. (Eds.), Proceedings of the 15th European conference on computer vision (pp. 568–583). Cham: Springer.

Ren, S., He, K., Girshick, R., & Sun, J. (2015). Faster R-CNN: towards real-time object detection with region proposal networks. In Proceedings of the 29th international conference on neural information processing systems (pp. 91–99). Red Hook: Curran Associates.

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., et al. (2014). Microsoft COCO: common objects in context. In D. Fleet, T. Pajdla, B. Schiele, et al. (Eds.), Proceedings of the 13th European conference on computer vision (pp. 740–755). Cham: Springer.

Hasan, I., Liao, S., Li, J., Akram, S.U., & Shao, L. (2021). Generalizable pedestrian detection: the elephant in the room. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 11328–11337). Piscataway: IEEE.

Shao, S., Zhao, Z., Li, B., Xiao, T., Yu, G., Zhang, X., et al. (2018). CrowdHuman: a benchmark for detecting human in a crowd. arXiv preprint. arXiv:1805.00123.

Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint. arXiv:1409.1556.

Zhang, Z., Song, Y., & Qi, H. (2017). Age progression/regression by conditional adversarial autoencoder. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5810–5818). Piscataway: IEEE.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778). Piscataway: IEEE.

Zheng, L., Shen, L., Tian, L., Wang, S., Wang, J., & Tian, Q. (2015). Scalable person re-identification: a benchmark. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1116–1124). Piscataway: IEEE.

Luo, H., Gu, Y., Liao, X., Lai, S., & Jiang, W. (2019). Bag of tricks and a strong baseline for deep person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops (pp. 1487–1495). Piscataway: IEEE.

Hara, K., Kataoka, H., & Satoh, Y. (2018). Can spatiotemporal 3D CNNs retrace the history of 2D CNNs and ImageNet? In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6546–6555). Piscataway: IEEE.

Heilbron, F.C., Escorcia, V., Ghanem, B., & Niebles, J.C. (2015). ActivityNet: a large-scale video benchmark for human activity understanding. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 961–970). Piscataway: IEEE.

Cho, K., van Merriënboer, B., Bahdanau, D., & Bengio, Y. (2014). On the properties of neural machine translation: encoder-decoder approaches. In Proceedings of the eighth workshop on syntax, semantics and structure in statistical translation (pp. 103–111). Stroudsburg: ACL.

Yan, C., Liu, Z., Li, F., Cao, C., Wang, Z., & Wu, B. (2021). Social relation analysis from videos via multi-entity reasoning. In Proceedings of the international conference on multimedia retrieval (pp. 358–366). New York: ACM.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., et al. (2020). An image is worth 16x16 words: transformers for image recognition at scale. arXiv preprint. arXiv:2010.11929.

Arnab, A., Dehghani, M., Heigold, G., Sun, C., Lučić, M., & Schmid, C. (2021). ViViT: a video vision transformer. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 6836–6846). Piscataway: IEEE.

Acknowledgements

Ao Zhang and Lusha Chen provided preliminary work and technical support for this project.

Funding

This work was supported by the National Natural Science Foundation of China (No. 62072232), the Fundamental Research Funds for the Central Universities (No. 021714380026) and the Collaborative Innovation Center of Novel Software Technology and Industrialization.

Author information

Contributions

JB is the leader of the project and has set up the entire framework for the review. All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by FY, YF, ZZ and JB. The first draft of the manuscript was written by FY, YF and JB. ZZ, TR and GW commented on previous versions of the manuscript. All the authors have read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yu, F., Fang, Y., Zhao, Z. et al. CAGNet: a context-aware graph neural network for detecting social relationships in videos. Vis. Intell. 2, 22 (2024). https://doi.org/10.1007/s44267-024-00056-9

DOI: https://doi.org/10.1007/s44267-024-00056-9