Abstract

As machine learning (ML) continues to advance in the field of materials science, the variation in strategies for the same steps of the ML workflow becomes increasingly significant. These details can have a substantial impact on results, yet they have not received the deserved attention. This review aims to explore the various strategies available for each detail within the general workflow of materials ML. Firstly, the general workflow of materials ML will be introduced to help readers gain an understanding of potential details. Subsequently, different strategies of details within each step of the workflow will be presented through state-of-the-art case studies. The potential outcomes associated with choosing different strategies to details will be explored. Following this, suitable strategies for details will be recommended based on distinct application scenarios. Finally, directions for the future development of materials ML concerning details will be proposed. Through these discussions, we aspire to offer a comprehensive understanding of the nuances in details within materials ML. This will serve as valuable reference and guidance for researchers in both materials science and ML.

Similar content being viewed by others

Introduction

Advanced materials serve as the cornerstone of a nationâs industrial prowess. To reduce the time and cost of materials development, the United States launched the Materials Genome Initiative (MGI)1 in 2011, aiming at enhancing global competitiveness. Subsequently, the European Union, Japan, India, and China swiftly embarked on similar research programs. These research initiatives all underscore the drive to promote the application of artificial intelligence (AI) in advanced materials research and development2. Machine learning (ML), as a vital tool for realizing AI, is widely applied in materials design. The âdata-driven scientific paradigmâ is referred to as the fourth paradigm of scientific development. ML, developed upon data, has the capacity to extract valuable insights from existing data, including even unsuccessful experimental samples3. It establishes implicit mapping relationships between features and target variables, allowing the guidance of material design through predictive models to predict the properties of unknown samples4. Over the past decades, ML has assisted researchers in achieving significant breakthroughs in various material design domains, including alloy materials5,6, photovoltaic materials7, perovskites8, superconductors9, etc. Compared to traditional empirical and computational methods, ML offers the advantage of rapidly and accurately constructing predictive models based on existing data, even in cases where the underlying physical mechanisms are not well-understood, thus guiding material development10. When there is a certain level of theoretical understanding, the incorporation of features combined with domain knowledge can further enhance the precision of model predictions4. Furthermore, ML can uncover potential patterns within extensive datasets, promoting the refinement of domain knowledge. For instance, Priyanga et al.11 utilized features including elemental composition, ionic radii, electron properties, and electronegativity to construct a random forest (RF) model for classifying the direct and indirect band gaps of perovskite oxides. Through the feature importance estimation method embedded in the RF model, the average ionic character was identified as the most crucial determinant. Correlation analysis further revealed that higher values of the average ionic character were conducive to obtaining a direct band gap.

The general workflow of ML comprises four steps: dataset construction, feature selection, model evaluation and selection, and model application. In each step, various details need to be taken into consideration. For instance, in the context of dataset construction, considerations include how to obtain features, handling missing values, the necessity of data transformation, the treatment of duplicate samples with the same features but different target values, and the approach to handling outliers. Furthermore, decisions must be made regarding the strategy and ratio for partitioning the dataset into training and test sets. When it comes to feature selection, there are considerations such as whether to perform feature selection for each model; how to choose an appropriate feature selection method from the various available options; and how to strike a balance between model accuracy, complexity, and interpretability during feature selection. Regarding model evaluation and selection, some of the details include what criteria to use for model selection; whether only one model can be chosen as the optimal model; and how to assess the generalization ability, extrapolation capability, and stability of the model. The application of models can be divided into two main aspects: one involves designing candidates that meet specific performance objectives, and the other entails exploring relevant physical and chemical principles via feature interpretation methods and statistical techniques. When designing candidates with single-target property, the strategies could involve generating a lot of virtual samples and filtering them through predictive models, or it could incorporate optimization algorithms or other reverse design methods to identify candidates. For candidates aiming to satisfy multiple performance objectives, potential strategies include ε-constrained method, converting the multi-objective problem into a single-objective one using weighted methods, or employing multi-objective optimization algorithms. In the pursuit of uncovering physical and chemical principles, researchers may utilize various methods such as SHapley Additive exPlanations (SHAP)12, partial dependence plots (PDP)13, sensitivity analysis (SA) techniques, or employ visualization-based statistical methods. These unresolved questions provoke profound contemplation in the field.

As illustrated above, there are numerous details to consider at each step of materials ML. These details are not merely binary choices of yes or no; many of them offer a plethora of available strategies. The varied strategies employed can yield distinct effects, making it a subject worthy of exploration. This review aims to integrate cutting-edge research findings, summarize the various strategies for details, and analyze the potential differences in results. The discussion can assist readers in selecting the most suitable strategies for their specific materials science problems when using ML methods. Additionally, future directions for the details in materials ML are also proposed.

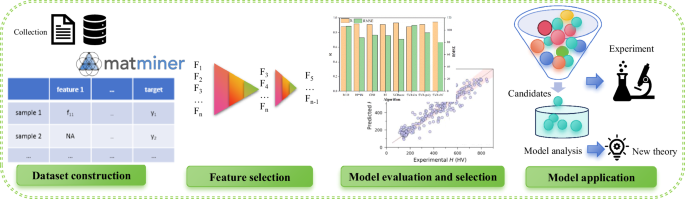

The workflow of materials machine learning

The general workflow of ML-assisted materials design, as depicted in Fig. 1, begins with the collection of data, including values of target properties and relevant features such as materials composition, process parameters, and features generated from the composition or structure. Subsequently, the data undergoes preprocessing.

Following data preprocessing, feature selection is performed to establish a predictive model for the target variable using the optimal subset of features obtained. The primary function of this predictive model is to predict unknown samples, thus designing candidates meeting the desired performance criteria. Another aspect of model application involves analyzing the mechanism of the constructed model. Employing interpretable ML methods and statistical techniques, researchers can dissect how certain crucial features influence the target variable, thereby advancing the development of pertinent theories.

Dataset construction

The dataset forms the foundation of ML modeling, encompassing a comprehensive set of features along with their corresponding target variable values. The quality of the dataset dictates the upper limits of ML. Foundational data such as target variables and material compositions can be sourced from relevant literature and databases. It is worth noting that experimental data holds more persuasive power compared to computational data because it is obtained through actual observations and measurements of real-world phenomena14. On the other hand, computational data is generated through mathematical models and simulations, which may not always accurately reflect the complexities of the real world. Therefore, experimental data is often used as a benchmark to validate the accuracy of theoretical models or simulations15,16. However, the value of experimental data also depends on the quality of the experiments, the accuracy of measurements, and other factors. In some cases, computational data from the well-designed and validated computational models can provide valuable insights when experimental data is limited or challenging to obtain17,18,19.

Features are also referred to as descriptors. Apart from the elemental composition of materials and process parameters during production, for inorganic materials, Ward et al.20 have developed a series of universal descriptors. These descriptors are based on elemental basic properties, and mathematical operators (such as maximum value, minimum value, average, and standard deviation, among others) are used to construct descriptors from the physical properties of elements. These descriptors can be obtained through Python packages such as the Mendeleev Python package21 and the Matminer Python package22. For organic materials, which are primarily composed of carbon and hydrogen atoms, their molecular structures are often more complex compared to inorganic materials. This complexity arises from the presence of carbon-carbon bonds and various functional groups, making their structures more intricate and diverse. Relying solely on features based on elemental properties may not be sufficient to construct an accurate mapping relationship between features and target variables for organic materials. In such cases, molecular descriptors and molecular fingerprints can be employed as additional features. These can be obtained through software tools such as CDK23, Pybel24, RDKit25, and PaDEL26. In addition, there are features constructed by incorporating domain knowledge, such as features for the phase parameters or mechanical performance of HEAs4, and features associated with perovskite stability: tolerance factor (t)27, improved tolerance factor (Ï)28, and octahedral factor (Of)27.

After obtaining these fundamental features, more complex features can be reconstructed from them. For example, the sure independence screening sparsifying operator (SISSO)29,30 method can be employed to generate such features. SISSO is a feature engineering technique based on compressive sensing. It combines simple descriptors using mathematical operators to create a multitude of more intricate features, and the least absolute shrinkage and selection operator (LASSO) model is utilized to identify the optimal low-dimensional feature subset.

The raw dataset often presents several issues, including variations in the values of target variables for the same composition and experimental conditions across different literature sources, as well as missing values and outliers. Consequently, it may not be directly suitable for ML modeling. Therefore, data preprocessing is necessary, which commonly involves handling missing values, addressing outliers, and performing data transformations, such as taking logarithms and data standardization, etc. After data preprocessing, selecting an appropriate partitioning method to divide the dataset into training and test sets becomes essential. The strategy for dividing the dataset can impact the modelâs performance and the evaluation of its predictive ability.

Feature selection

The candidate feature pool may contain a wealth of information, but not every feature is necessarily correlated with the target variable. Modeling with all features may not guarantee the highest model accuracy, and it can significantly increase model complexity, thereby elevating the risk of overfitting31. Before determining the feature selection method, it is necessary to define the objective function during the feature selection process, which serves as a metric for assessing the quality of a feature subset. Typically, model accuracy is chosen as the objective function for feature selection. However, considerations can also include model complexity and interpretability. By placing emphasis on different aspects, the optimal feature subset may vary accordingly.

Common feature selection methods can be categorized into filter, wrapper, and embedded approaches32,33. Filter methods are model-agnostic and include techniques such as variance threshold filtering, Pearson correlation coefficient (PCC) method, maximum information coefficient (MIC) method, and the maximum relative minimum redundancy (mRMR)34,35,36. Wrapper methods customize feature subsets for specific algorithms by evaluating their performance. Common wrapper methods include sequential forward/backward selection (SFS/SBS)37, recursive feature addition/elimination (RFA/RFE)38, and genetic algorithm (GA)39,40 method. Embedded methods incorporate feature selection into the model training process and are often utilized with tree models and models with regularization terms, such as LASSO and ridge regression (RR). The detailed descriptions of these feature selection methods can be found in the Lu et al.âs41 review. It is worth noting that there are numerous feature selection methods available, and researchers are not limited to choosing only one. In practice, a combination of multiple feature selection methods is often employed to determine the optimal feature subset.

Model evaluation and selection

The results of model evaluation typically serve as the basis for model selection, where different evaluation methods may identify different optimal models. Aspects to be evaluated include model fitting ability, generalization capability, stability, and extrapolation ability. Commonly used evaluation methods include independent test sets, k-fold cross-validation (CV), leave-out-group (LOG) CV, etc. Utilizing various evaluation methods simultaneously can elucidate both the strengths and limitations of the model across different aspects. However, due to computational resource constraints, some evaluation methods may be used exclusively to assess the optimal model selected by a single evaluation method.

At present, there is no universally applicable ML algorithm suitable for all scientific problems42. Hence, selecting the most suitable algorithm based on the specific problem is of paramount importance37. Commonly used ML algorithms mainly include linear algorithms, decision tree-based algorithms, artificial neural networks, support vector machines (SVM), and Bayesian-based algorithms, et al.43. Linear algorithms are relatively simple and offer strong interpretability but may sacrifice some model accuracy. Decision tree-based algorithms, such as random forest (RF) and extreme gradient boosting (XGBoost)44, exhibit good fitting performance for many nonlinear problems, but their poor extrapolation capability has been criticized. Artificial neural networks theoretically can fit various functional relationships but require a substantial amount of training data, making them a cautious choice for small datasets45. SVM often perform well on small datasets and are known for their kernel functions against linear or nonlinear data distribution. Bayesian-based algorithms, such as Gaussian process regression (GPR), describe problems probabilistically and can provide prediction intervals to address uncertainty quantification46. Based on the characteristics of the dataset and the modeling objectives, the most suitable algorithm can be selected after comparing several algorithms. Algorithm selection criteria can be based on results from CV or independent test sets. It is worth noting that when multiple models perform similarly well and are closely matched, ensemble methods can be employed to obtain an optimal model. After model selection, it is usually necessary to optimize the internal hyperparameters of the model to further enhance its performance.

Model application

The application of the model involves two main aspects: one is to predict unknown samples to design materials with desired performance, the other is the exploration of physical and chemical principles through model analysis.

Candidates with optimal performance can be obtained through forward prediction and inverse design. The forward prediction means predicting the target variable by inputting features into the model available. In contrast, inverse design starts from desired properties as âinputâ and ends in chemical space as âoutputâ47. Typical forward prediction methods include high-throughput virtual screening (HTVS), where candidates with desirable performance are selected from a large pool of virtual samples based on predictions. In inverse design, the problem is often transformed into an optimization problem, which is solved using optimization algorithms. In the field of engineering applications, the design of candidates satisfying multiple objectives is receiving growing attention48. Solutions to such problems typically come in three forms. The first approach involves converting multiple objectives into a single objective through multi-objective weighting, which is then addressed using single-objective design methods. The second method is ε-constrained method, where candidates within the range of secondary objective variables are selected to form a subset, and then the optimal candidate are chosen from this subset based on the primary objective variable. The third approach employs multi-objective optimization algorithms to obtain candidates, typically seeking optimality in Pareto sense. The solutions obtained are not single points but rather a set, allowing decision-makers to choose based on different application scenarios.

Model analysis enhances the interpretability of the modelâs predictions by uncovering the influence mechanisms between critical features and the target variable. This process facilitates the advancement of domain knowledge and guides materials design. Since model analysis is based on a well-established ML model, it is a crucial component of model application. Statistical methods employed in model analysis include feature importance ranking methods, SHAP, SA, and PDP et al.

Details

In this section, more comprehensive discussions of the details within each step of ML for materials design will be processed. The insights obtained from various strategies of detail can assist researchers in selecting the most suitable strategies for their own studies.

Details in dataset construction

Source of data

Different candidate feature pools can ultimately impact the upper limit of the accuracy of the model. Lian et al.49 devised domain knowledge features based on empirical formulas for ML modeling to predict the fatigue life of seven different series of aluminum alloys. The results revealed that the designed features exhibited the highest PCC with the target variable. Additionally, the inclusion of these designed features significantly improved the accuracy of the model. Wen et al.4 used the experimental samples to construct ML model for predicting the hardness of HEAs. Their results showed that better model was obtained by using compositional features combined with domain knowledge descriptors rather than using composition alone as features. Chen et al.50, in order to predict the Charpy impact toughness of low-alloy steels, proposed three feature construction strategies: the first adopting alloy composition solely, the second incorporating both alloy composition and heat treatment parameters, and the third considering alloy composition, heat treatment parameters, and physical features. As the feature pool expanded, the accuracy of the model significantly improved. The PCC between predicted values and actual values increased from 0.79 to a remarkable 0.96. Zhao et al.51 examined the impact of different descriptors on the power conversion efficiency (PCE) for donor/acceptor pairs. The results revealed that ML predictions were more accurate when both structural and physical descriptors were used concurrently, as opposed to using either one exclusively.

Highlights

When the physical mechanisms of the target variable are not fully understood, it is advisable to enrich the information in the candidate feature pool as much as possible. In addition to basic features such as composition and process parameters, incorporating domain knowledge-based features derived from empirical and physical formulas, structural features, and other types of features can enhance the accuracy of the model.

Missing values

In practice, datasets often contain missing values, and training models on such datasets can significantly impact prediction quality. The simplest approach is to remove the missing values directly, which is suitable for large datasets with a small proportion of missing values (<5%)52. In materials science, each experimental data point is valuable. When datasets are limited and contain missing values, to address data scarcity and avoid bias from direct deletion, imputation methods can be employed. Replacing missing values with the mean or median of the non-missing values is an easy-to-implement method suitable for small numerical datasets. However, this approach does not consider feature correlations or imputation uncertainty53. The k-nearest neighbors (KNN) imputation method uses feature similarity to predict missing values by taking the weighted average of the corresponding feature values from the k most similar samples in the training set. Its drawback is sensitivity to outliers. The multiple imputation by chained equations (MICE) method uses a series of estimates obtained through an iterative process to perform multiple imputations. Initially, all missing values are imputed with the mean. The variable with the fewest missing values is selected as the dependent variable, and the dataset is split into training and test sets based on the presence of missing values in this variable. A regression model is then used with other variables as predictors to estimate the missing values of the dependent variable, replacing the initial mean imputations. This process is repeated for the next variable with the fewest missing values. Lyngdoh et al.54 aimed to establish the link between concrete composition and strength. They compared mean and median imputation, KNN imputation (kâ=â5 and kâ=â10), and MICE imputation methods. XGBoost demonstrated the best predictive performance when missing data were imputed using KNN with kâ=â10. Deng et al.55 proposed a method for missing data imputation based on a clustering-based collaborative filtering (ClubCF) algorithm to address missing values in squeeze casting process parameters. ClubCF, which utilizes K-means clustering, outperformed mean imputation and regression imputation methods on their dataset. Additionally, there are imputation methods based on principal component analysis56,57,58 and neural networks59.

Highlights

Directly deleting data with missing values can bias the dataset and reduce its representativeness of the real-world data distribution. To avoid this and maximize data utilization, various imputation methods have been developed, including mean and median imputation, MICE, KNN-based imputation, and PCA-based imputation. In the materials field, imputation methods are still rarely used. Different datasets have varying missing data mechanisms and coupling characteristics, making no single method universally applicable. Therefore, the application of imputation methods in materials science deserves further research.

Transformation of data

Normalization is a data preprocessing technique to scale or transform data into a standardized range, typically within the range of 0 to 1 or â1 to 1. Common normalization methods include Z-score normalization and Min-Max scaling, and its primary objective is to eliminate scale differences among different variables. Wu et al.60 found that the performance of SVR and kernel ridge regression (KRR) models was highly sensitive to feature normalization (Z-score standardization). After normalization, SVR and KRR models exhibited significant improvement in performance, with coefficient of determination (R2) values increasing from 0.826 to 0.936 and from 0.819 to 0.932, respectively. However, normalization had only a slight impact on the gradient boosting regression (GBR) model, with R2 changing from 0.943 to 0.936. For SVR and KRR algorithms, the hyperparameters of the kernel function are heavily influenced by unnormalized features. Some truly important features may receive smaller hyperparameters without normalization, leading to significant errors in ML models and ultimately providing unreliable prediction results60. However, unlike SVR and KRR algorithms, the GBR algorithm relies on regression trees, where the decision process of tree models does not involve distance metrics. Instead, it partitions data features continuously for classification or regression based on feature thresholds. Consequently, the scale of the data is typically not a critical factor for tree models.

Large fluctuations in the distribution of the target variable or the presence of outliers can lead to instability in the predictions of the model. To mitigate this effect, one approach is to take the logarithm of the target variable. In a study by Hu et al.61, to address the issue of uneven data distribution, a natural logarithm transformation was applied to the target variable. As shown in Fig. 2, this transformation resulted in a more uniform distribution of the target variable, approaching a normal distribution. This transformation can enhance the performance of models like linear regression and Bayesian models, as they often assume the normality of data distribution. However, it should be noted that taking the logarithm is only suitable for target variables with strictly positive values and is not applicable to cases that include zeros or negative numbers. Moreover, when using the logarithmic transformation, the error of the model is scaled. Therefore, to accurately assess the modelâs performance and facilitate comparisons with similar models, inverse transformation of predicted results should be applied when calculating evaluation metrics.

Highlights

When using ML models that involve distance metrics, such as SVM, KRR, and KNN, normalization for features should be performed. However, for tree models, feature normalization may be optional. To make the distribution of variable values more uniform and conform to a normal distribution, a logarithmic transformation can be applied, but the conditions for its use must be noted. Additionally, for ease of comparison and analysis, the data should be back-transformed when calculating errors or conducting analyses.

Duplicate samples

Here, duplicate samples refer to samples with identical features but different experimental values for the target variable collected in different studies. The generation of duplicate samples can be attributed to two main factors. Firstly, it may stem from incomplete data collection, such as the oversight of varying experimental conditions and process parameters. When these parameters are used as features, some experimental samples may not be considered duplicates. Secondly, experimental errors are inevitable. Apart from measurement errors, unrecorded factors overlooked by experimenters can also lead to variations in experimental results. This can reduce the reproducibility of experiments and potentially conceal important patterns within the data. Therefore, it is encouraged that experimenters maintain comprehensive records during experiments to mitigate these issues. Similarly, researchers should exercise caution when dealing with duplicate samples during the ML process. Zhao et al.51 took the average of duplicate samples and removed other duplicate samples from the database. Yang et al.5 and Wen et al.4 discarded duplicate samples with significant differences in experimental values, while retaining them with averaging if the differences were not significant. Chen et al.62 removed extreme values from duplicate samples and used the average of the remaining duplicate sample experimental values as the target value. Meanwhile, Xu et al.63 included duplicate samples in the test set, trained the model using other samples, and then predicted the target values of the duplicate samples with the trained model. The experimental value closest to the predicted value was selected as the âtrue valueâ. These duplicate samples were considered the result of experimental errors, and high-performing ML models have the ability to correct inappropriate experimental values for duplicate samples. Some researchers do not process duplicate values and treat them as distinct samples.

Highlights

Using duplicate samples with a relatively large relative error directly to train the model can increase the error of the model. The same ML model will inevitably yield the same output for the same input values. If these duplicate samples are included in the training set, the model may predict an intermediate value among multiple target values for duplicate samples during training to reduce the fitting error. However, for duplicate samples with relatively large relative errors, this could be due to significant experimental errors for some duplicate samples, leading to a larger deviation of the predicted intermediate value from the true value, affecting the fitting performance of the model. Therefore, directly including all duplicate samples in the training set is not appropriate. Instead, duplicate samples with negligible relative errors can be preserved by computing the average of their values. The threshold for relative error can be determined based on acceptable measurement inaccuracies. Duplicate samples with large relative errors can either be discarded or included in the test set, where a trained ML model can be used to correct their experimental values.

Poorly performing samples (negative samples)

Zhang et al.64 employed ML methods to balance the saturation magnetization (Bs), glass-forming ability (measured by the critical casting size, Dmax), and ductility of iron-based metallic glasses (MG). During data preprocessing, samples with Fe content less than 0.3 were removed because of their low Bs. Additionally, samples with Dmaxâ<â1âmm were discarded due to their poor glass-forming ability. Therefore, poorly performing samples were removed effectively from the dataset. Consequently, the Dmax might be overestimated since the training set lacks samples that cannot transform into MG. Conversely, Wu et al.60 took a reverse approach when searching for HOIPs with bandgaps ranging from 0.9 to 1.6âeV. Negative samples were included in the training set, comprising 132 metal candidates (with a bandgap of 0) that had been incorrectly filtered out by a prior ML model65. This strategy resulted in more reliable candidate selections, and subsequent DFT validation revealed only one incorrectly filtered metal candidate, greatly improving the accuracy.

Highlights

These cases illustrate the importance of including both negative and positive samples in the dataset. Poorly performing samples, also referred to as failed samples, may contain distinct information from successful ones, thereby complementing each other to enhance the overall performance of the ML model. However, the challenge lies in the limited reporting of failed samples. It encourages researchers to report failed samples, thus further promoting data-driven research in related fields.

Outliers

The approaches of dealing with outliers that deviate significantly from the mean level will be discussed. When Zhang et al.66 modeled the hardness of HEAs, they compared the models with and without outliers and found a minor but statistically insignificant improvement in R2 and root mean square error (RMSE) after removing the outliers. The authors believed that since their dataset originated from the real world and scientific measurements, the probability of outliers being caused by measurement errors was low. In the real world, the presence of outliers is objective, and one must consider scenarios involving outliers to establish a connection between the model and the real world. Removing outliers can result in better fitting for the corresponding samples, but if an unknown sample happens to resemble a previously removed outlier when making predictions, the prediction bias may dramatically increase at that point.

Highlights

The presence of outliers in the real world is objective, and samples corresponding to outliers may contain crucial information, especially regarding groundbreaking insights into target properties. In the case of interpolation predictions, the removal of outliers can be considered if it significantly enhances the performance of the model. However, if the model is intended for groundbreaking predictions, outliers should not be removed.

Dataset splitting methods

The most commonly used data splitting method is random partitioning, where the training set percentage is generally set at 75%, 80%, or 90%. Mannodi-Kanakkithodi et al.67 collected 284 polymer data points to predict their dielectric performance using ML methods. The optimal training set size was investigated by progressively splitting the dataset from 50 (approximately 20% of the dataset) to 250 (approximately 90%), with the remaining data utilized as the test set. This process was repeated 50 times for each proportion, and the average and standard deviation of prediction errors for both the training and test sets were computed. The results showed a general trend of decreasing average test sets error with increasing training set size. However, it was also observed that for larger training set sizes, in many cases, while the average error for the training set was smaller, the standard deviation for the test sets appeared to be higher. This was attributed to some unreasonable data partitioning in 50 random splits, resulting in significant distribution differences between the training and test sets. Therefore, when using a single random split, careful selection of the training and test set distributions is necessary; otherwise, the model evaluation results from random splitting may be subject to randomness. To obtain more robust models and evaluation results, multiple random splits can be employed, and their averages can represent the predictive performance of the model. However, there is no consensus on the exact number of repetitions required. In order to reasonably partition the collected 437 samples into training and test sets, Lu et al.68 optimized two parameters, namely the random seed (representing the sample distribution) and the partition ratio. The optimal training and test sets ratio was determined to be 81.95% and 18.05%, respectively, along with the optimal parameter for the random seed, which was set to 1959.

In addition to random splitting, Liang et al.69 applied a stratified splitting method in their study on the thermodynamic stability classification of halide double perovskites. This approach ensures that the proportions of samples in each category are roughly balanced between the training and test sets, alleviating the issue of class imbalance70. While stratified splitting is commonly used in classification problems, it can also be employed in regression tasks when the distribution of the target variable varies unevenly across different ranges71. Barnard et al.72 constructed regression models to predict the stability of diamond nanoparticles, the ionization potential, and the electronic band gap. Due to the imbalanced distribution of the target variables, stratified splitting was used to split the dataset. Stratified splitting is a data splitting strategy based on the perspective of the target variable, and alternatively, datasets can also be partitioned based on the features. Xu et al.73 used the sphere exclusion algorithm74 to partition the dataset sensibly. This algorithm aims to diversify the chemical substances in the training set structurally, covering the entire descriptor space of the dataset while keeping the samples in the training and test sets close.

Highlights

A reasonable partitioning should aim to make the distributions of the training and test sets as similar as possible to that of the entire dataset, ensuring that the model receives adequate training on the training set and that the performance evaluation on the test set is meaningful75. If there is a substantial disparity between the distributions of the training set and the dataset, the model may not be exposed to enough information during training, potentially resulting in lower-than-expected performance in practical applications. Similarly, if there is a significant difference in distribution between the test set and the dataset, the performance evaluation on the test set may not be sufficiently objective and effective. For datasets with relatively uniform distributions, random partitioning can be employed, while for datasets with imbalanced sample distributions, more effective partitioning methods such as stratified splitting or the sphere exclusion algorithm should be considered. Additionally, the consistency of the distributions between the dataset and the training set and test set can be assessed through visualization or other comparative analytical methods.

Details in feature selection

The sequence of model selection and feature selection

Wrapper methods are commonly employed in feature selection, requiring a careful choice of the ML model to be integrated. This raises the question of whether to first select the optimal model and perform wrapper methods exclusively on the optimal model or to apply wrapper methods to all models. Wen et al.76 attempted all combinations of 8 features separately for all six models. Chen et al.62 conducted feature selection for RR, XGBoost, and SVR models separately. Meanwhile, Yang et al.5 initially used the composition of HEAs as features and established hardness prediction models based on several widely used ML algorithms, including multiple linear regression (MLR), backpropagation neural network, GBR, RF, XGBoost, and SVM. The optimal model was identified, and feature selection was subsequently performed based solely on the optimal model.

Highlights

Performing feature selection for each algorithm can improve the accuracy of each model. The best algorithm may differ before and after feature selection. Subsequently, model selection is carried out, which aligns more with the conventions of ML but comes with significantly increased computational complexity. Conversely, selecting the optimal algorithm first and then performing feature selection often involves using the same algorithm before and after feature selection. This strategy offers efficiency, particularly with high-dimensional features, saving substantial computational resources. However, the drawback is that it may not lead to each model exhibiting its optimal performance. Researchers can make a comprehensive consideration, taking computational costs and model accuracy into account, to choose which strategy to adopt.

Selection of feature selection methods

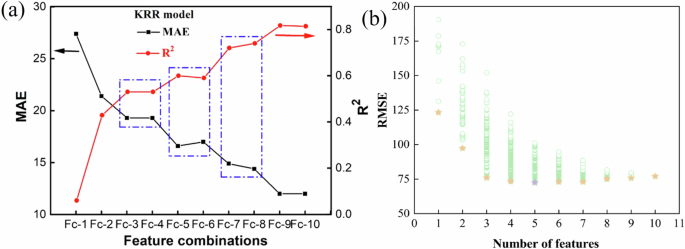

Feature selection methods are generally categorized into three types: filter methods, wrapper methods, and embedded methods. However, in many research studies, these three methods are not exclusively used; instead, they are often combined. Jiang et al.77 employed ML methods to select compounds with the desired wavelength and excellent stability for garnet structure. To obtain the optimal feature subset for wavelength prediction, 19 features with zero variance were first removed. Subsequently, Pearson correlation analysis was performed on the remaining 66 features. The feature pairs with PCC exceeding 0.9 were identified, and among them, those with lower correlations with wavelength were removed. Then, SFS was employed with different models to reduce the 47-dimensional feature space to a 10-dimensional feature subspace. During this process, instances were observed where the R2 and mean absolute error (MAE) in 10-fold CV remained unchanged or even deteriorated as the number of features increased from 1 to 10, as shown in Fig. 3a. It is attributed to the greedy nature of the SFS algorithm, leading to the inclusion of redundant features. Consequently, features that did not significantly impact model performance were removed, and ultimately, 7 features were selected.

Due to the drawbacks of the SFS method, Yang et al.5 introduced an additional step in their four-step feature selection process. Instead of directly using the 10 feature combinations with the lowest errors obtained in the third step of SFS, the âbest-subset selectionâ78 was added as the fourth step. In this step, the optimal combination was selected from all possible subsets of the 10 features, as illustrated in Fig. 3b, resulting in the final choice of 5 features. In addition to SFS, Li et al.79 employed SBS, while Zhang et al.80 utilized RFA based on feature importance ranking. The disadvantage of these algorithms is that once a feature is added (or removed), it cannot be further adjusted, and the resulting feature subset may not necessarily be globally optimal81. Xu et al.63 employed GA as their feature selection method, which is one of the most efficient feature selection methods. GA, inspired by the principles of biological evolution, iterate and update individuals within a population to find the global optimum without exhaustively testing all feature combinations82. Multiple wrapper methods can also be combined. Zhang et al.66 used a combination of RF-based feature selection, GA, and RFE methods to select features, retaining those features selected by at least three of these methods.

In addition to the application of wrapper methods, embedded methods are frequently used. Embedded methods integrate feature selection with model training, offering higher efficiency compared to wrapper methods. Lyu et al.83 employed a logistic regression (LR) model with an L1 penalty, removing unimportant features with coefficient values of 0. Similarly, Ghiringhelli et al.84 utilized the LASSO to select the best feature subset from a pool of 10,000 material descriptors for predicting the crystal structures of semiconductors. However, the models used in embedded methods are typically linear models with L1 regularization, which may not perform well in capturing complex mapping relationships.

Highlights

When dealing with a lot of features, stepwise feature selection methods are more preferable. Begin with a filter method, such as removing features with zero variance or highly linearly correlated redundant features. Following this, employ wrapper methods. There are several feature selection algorithms available within the wrapper methods framework, and the most suitable one can be chosen through comparative analysis. Additionally, the best feature subset can also be a combination of multiple wrapper methods. Finally, when dealing with a reduced number of features, the âbest-subset selectionâ can be used. In cases where a linear model can be used to fit the mapping relationship between features and target variables effectively, consider using a linear regression model with an L1 regularization term as an embedded method to simultaneously handle feature selection and model training.

Emphasis in feature selection: model accuracy vs. model complexity vs. model interpretability

Feature selection is guided by three distinct objectives: model accuracy, model complexity, and model interpretability. Different objectives lead to variations in the optimal feature subset. Most commonly, the primary goal of feature selection is to enhance model accuracy. Wu et al.60 selected the top 16 most important features out of 32 based on the feature importance obtained from the GBR model and used them for modeling. The results showed that the reduced feature set performed slightly worse than using the complete feature set, indicating that the remaining non-important features also contribute to improving the performance of the ML model. Therefore, the complete feature set was reintroduced in subsequent computations. This case study prioritized model accuracy, but it is essential to note that the dataset was relatively large, comprising 906 samples with 90% of the data used for training, which mitigated the risk of overfitting given the number of features.

Typically, many researchers simultaneously consider both model accuracy and model complexity. Yang et al.5 adhering to the âOccamâs Razorâ principle, struck a balance between model simplicity and accuracy by employing the minimum number of features necessary for a sufficiently accurate model. An R-value of 0.94 for the test set predictions was achieved using only five features in the modeling process. Similarly, Zhang et al.66 explored all possible combinations of seven features. It was observed that increasing the number of features beyond five did not significantly improve the accuracy of the model but substantially increased model complexity. Consequently, a set of five features was opted. In addition to manually observing changes in model evaluation metrics corresponding to different feature subsets for the selection of the optimal feature subset, Chen et al.62 employed the Akaike Information Criterion (AIC) that considers both fitting performance and model complexity as the selection criterion. The feature subset with the lowest AIC was opted.

Increasing the interpretability of the model is also crucial. Selecting features that incorporate domain knowledge and giving their physical and chemical significance, can reduce the risk of model overfitting. Lu et al.68 employed ML to predict the experimental bandgaps of HOIPs. From the features generated by the Villars and Mendeleev Python package, only simple descriptors with clear meanings were selected, while another 66 descriptors containing more complex or meaningless information were discarded, with the aim of achieving a model that is as interpretable as possible. Zhao et al.51 argued that using solely chemical fingerprint descriptors can lead to strong fitting capabilities of ML models but may hinder the discovery of molecules with entirely different chemical characteristics. Therefore, retaining well-performing descriptors with chemical and physical significance is essential not only for enhancing model interpretability but also for maintaining accurate predictions when the model encounters entirely different chemical compounds. It is important to note that increasing model interpretability and improving model accuracy are not necessarily contradictory goals. Integrating domain knowledge into ML often leads to better performance compared to purely data-driven approaches85. Furthermore, it can enhance the reliability and robustness of ML models.

Highlights

Three aspects need to be considered when constructing a model: accuracy, complexity, and interpretability. Accuracy is a fundamental requirement, and model complexity and interpretability can be further considered based on an acceptable accuracy. The accuracy and complexity of a model are often considered simultaneously, aiming to achieve as high accuracy with as few features as possible. This becomes particularly critical when handling limited data, as an excessive number of features increases the risk of overfitting. Selecting features based on domain knowledge is advantageous as they possess chemical and physical significance, guiding the model. This not only enhances the interpretability and generalization capability of the model but also improves its accuracy.

Details in model evaluation and selection

Details of model evaluation methods

Model evaluation and model selection are not separate processes. Model selection relies on the results of model evaluation, and after model selection, the optimal model can undergo more comprehensive evaluation. However, if sufficient time and computational resources are available, evaluating each candidate model comprehensively before model selection may lead to selecting a model with superior overall performance.

In most of the ML works, the trainâtest splitting scores or CV results are usually used as the basis for model evaluation86. The train-test splitting scores depend largely on the allocation of data points to the training and test sets, and the evaluation results can vary significantly based on this allocation. To avoid the randomness of train-test splitting scores, it is advisable to repeat the dataset splitting process multiple times and use the average results from the test sets as the basis for model selection60,76. Repeated train-test splitting ensures extensive coverage of the target variable within the test sets, thus effectively assessing the performance of the model. Similarly, the test sets generated by k-fold CV techniques can uniformly cover the entire range of the target variable. In the k-fold CV, the dataset is initially randomly divided into k equally-sized, mutually exclusive subsets. Each time, (k - 1) of these subsets are combined to form the training set, and the remaining subset is used as the test set. This process results in k sets of training/test data, where training and test are performed iteratively, and the final evaluation is based on the average of the k test results. In k-fold CV, data resampling is conducted without replacement, ensuring that each data point appears once in the test sets. This evenly covers the entire range of target variables, effectively testing the performance of ML algorithms. Typical choices for the value of k are 5 or 1087. For larger datasets, smaller values of k are typically sufficient, whereas for smaller datasets, larger values of k are needed88. Additionally, when k is equal to the size of the sample set, it represents a special case of k-fold CV known as leave-one-out cross-validation (LOOCV). LOOCV provides nearly unbiased estimates of accuracy89, but it incurs a high computational cost. Therefore, it is often employed for evaluating model performance on small datasets.

Although k-fold cross-validation is widely used for model evaluation, the assessment of ML model predictive performance using an independent test set should not be overlooked. Furthermore, as more samples may be reported over time, these data can serve as an extended test set to further validate the predictive capabilities of the model83. Many researchers employ multiple evaluation methods. Yang et al.5 and Zhang et al.66 used independent test set, 10-fold CV, and LOOCV to assess model performance. Mai et al.90 assessed the predictive capabilities of models using independent test set, extended test set, 5-fold CV, 10-fold CV, and LOOCV. The average results from repeated CV are commonly used to evaluate the stability of the model5,14,90, but there is no consensus on the number of repetitions required to obtain reliable results.

A challenge in materials science is the discovery of materials with higher or lower physical/chemical properties among all known materials91. Currently, the performance of ML algorithms used for materials property prediction is often evaluated through k-fold CV or train-test splitting, which tends to overestimate their exploratory predictive performance in discovering unknown materials. Xiong et al.91 proposed the k-fold-m-step forward cross-validation (kmFCV) method to assess the extrapolation capability of materials property prediction models. Unlike traditional k-fold CV methods that randomly partition data into k subsets, kmFCV first sorts all samples based on material property values and then evenly divides them into k subsets. As shown in Fig. 4, in each iteration, the subset with the lowest property values is used as the training set, and the next subset is used as the validation set, continuously advancing until the subset with the highest property values serves as the validation set. The average of validation set metrics is used as the final evaluation result. The evaluation results revealed that tree models and KNN exhibited poor extrapolation capabilities.

kmFCV partitions the dataset based on the target property values to evaluate the modelâs ability to explore materials with groundbreaking performance. Furthermore, as another scenario of extrapolation, we may be interested in designing materials with structures, compositions, or other features that are radically different from those reflected in the training data, i.e., exploring unknown chemical spaces. Random CV often overestimates the modelâs ability to predict unknown chemical spaces because during random partitioning, the training and test sets may typically have similar constituent elements, with only slight variations in composition. This allows the model to effectively act as a âlook-upâ table to predict nearby materials rather than learning deeper physical principles92. Leave-out-group (LOG) CV can be used to assess the machine learning modelâs ability of exploring unknown chemical spaces. It partitions the dataset into different groups based on features for CV, with the test data comprising an entire distinct group of data.

Groups can be determined based on the results of clustering algorithms or manually defined criteria, such as grouping data with similar chemical compositions. LOG CV imposes higher requirements than k-fold CV, aiming to simulate the modelâs performance when presented with test data significantly different from the training data. Jacobs et al.93 grouped the data from different databases and found that the modelâs RMSE in LOG CV was significantly higher than that in 5-fold CV. Wu et al.94 and Afflerbach et al.92 grouped the data based on chemical composition and used LOG CV to assess the modelâs predictive ability for target properties in unknown chemical spaces. Furthermore, Afflerbach et al.92 indicate that when the number of samples with similar compositions reaches a critical threshold in the training set, the modelâs performance improves to some extent, resulting in more stable predictions. However, the average error does not exhibit a significant, sustained decrease. Meredig et al.95 partitioned the dataset into clusters using the k-means clustering method and trained machine learning models on the remaining clusters to predict each test cluster, demonstrating the effectiveness of LOG CV in evaluating machine learning models for high-temperature superconductors. Lu et al.96 evaluated the performance of Gaussian kernel ridge regression and GPR in modeling the activation energy of impurities for 408 host-solute systems based on elemental properties. They demonstrated the advantages of using the RMSE in LOG CV for feature selection, because it has similar errors in 5-fold CV but much lower errors in LOG CV compared to using the RMSE in 5-fold CV for feature selection. The development and application of similar evaluation methods contribute to accelerating breakthroughs in material performance and exploration of unknown chemical spaces.

CV evaluation methods typically reflect the average performance of overall prediction accuracy and may not be sensitive enough to samples with large deviations in certain regions. Wu et al.97 aimed to evaluate the generalization ability of regression models across different target variable ranges. The samples in the test set were divided into three groups based on the target variable PCE values: high-level (A, PCEâ>â11%), medium-level (B, 7% ⤠PCEâ<â11%), and low-level (C, 0ââ¤âPCEâ<â7%). By comparing predicted values with experimental values, significant differences in prediction accuracy across different PCE levels were observed. Similar methods enable us to gain a more comprehensive understanding of the modelâs predictive capabilities across different ranges of the target variable.

Highlights

The most commonly used model evaluation methods are k-fold CV and independent test set evaluation. Due to the random nature of their partitioning, the distribution of the test set and training set may be quite similar, allowing them to be used to assess the modelâs predictive ability on test sets with distributions similar to that of the training set. However, even if a model performs well in k-fold CV, it does not necessarily indicate strong extrapolation capability. To assess a modelâs extrapolation ability regarding the target variable, one should consider employing the kmFCV method. To evaluate a modelâs predictive ability in unknown chemical spaces, LOG CV should be used instead. To assess the stability of a model, the dataset can be repeatedly partitioned, and the average evaluation metric can be calculated to represent the modelâs performance. Additionally, employing partitioning strategies can evaluate the predictive accuracy of the model within different regions of the target variable. We encourage the use of diverse model evaluation methods. By considering different evaluation results, a more comprehensive understanding of the model can be obtained, thereby identifying its strengths and limitations.

Details in model selection

Many studies choose a model by comparing different models using evaluation metrics computed by (repeated) train-test splitting or k-fold CV5,77,98,99. However, Chen et al.62 independently constructed RR, XGBoost, and SVR models to predict alloy melting points. As all three models exhibited strong performance and showed similar results, a straightforward ensemble model was created by averaging their predictions, referred to as the R-X-S model. The ensemble model was observed to potentially exhibit lower performance than individual models on specific samples; however, when considering overall evaluation metrics, it outperformed any individual model. In essence, it traded off some predictive accuracy on certain samples to mitigate the predictive risk associated with individual models, resulting in a more consistent model performance. Similarly, Zhang et al.66 employed a stacking ensemble algorithm to integrate the high-performing light gradient boosting machine (LightGBM) and categorical boosting (CatBoost) models. Compared to individual models, the 10-fold CV R2 improved, and RMSE decreased. Therefore, in addition to individual models, ensemble strategies can also be employed to enhance model stability and accuracy.

In addition to relying solely on model accuracy as the criterion for model selection, some studies also consider factors such as model interpretability, the ability to quantify uncertainty, and extrapolation capability. Iwasaki et al.100 did not select more accurate models like neural network (NN) and SVM to predict the thermopower (SSTE) of spin-driven thermoelectric (STE) materials. Instead, they adopted an interpretable ML approach, the factorized asymptotic Bayesian inference hierarchical mixture of experts (FAB/HMEs). FAB/HMEs constructed piecewise sparse linear models, outperforming RF, LASSO and MLR when predicting SSTE. The model revealed distinct behaviors between magnetic and non-magnetic materials in the context of STE. Consequently, separate models were constructed for non-magnetic and magnetic STE, as illustrated in Fig. 5. A STE material with the highest SSTE at the time was designed using the insights provided by the FAB/HMEs model. On the other hand, other models such as NN, SVM, RF, and MLR attempted to represent the STE phenomenon using a single model for both magnetic and non-magnetic material data. This approach could result in averaging the different dependencies found in each magnetic and non-magnetic STE model, potentially obscuring important patterns. Hierarchical data structures are common in materials data, and FAB/HMEs, capable of discovering and representing this data hierarchy, can assist scientists in more effectively modeling and comprehending physical phenomena, thus accelerating material discovery.

FAB/HMEs creates piecewise sparse linear model, which is visualized with (a) tree structure and (b) regression models. Scientists can interpret tree structure and regression models from viewpoint of materials science and physics100.

Pan et al.101 included the Kriging algorithm as one of the candidate methods to build a model for predicting the photocurrent of halide perovskite materials. Kriging is suitable for exploring various chemical systems due to its capability to generate predictive values along with confidence intervals, allowing for the quantification of uncertainty102. This feature has proven attractive for design space exploration. Lyu et al.83 used ML algorithms such as logistic regression (LR), SVM, KNN, Decision Trees, etc., to predict the dimensions of lead iodide-based perovskite materials. Both LR and SVM models achieved the highest accuracy, but considering the simplicity and interpretability of constructing mathematical expressions, this study ultimately chose the LR model with an L1 penalty.

Decision trees and tree-based models, including RF and Gradient Boosting Trees (GBT), typically exhibit strong fitting capabilities within the training data domain and possess high interpretability. However, they encounter challenges when it comes to extrapolation, which entails predicting values for data points lying beyond the bounds of the training data103,104. These models employ feature values from the training set as thresholds for splitting tree nodes. Consequently, all samples outside the training domain are assigned to the same leaf nodes as those situated at the boundaries of the training data. This limitation can result in prediction inaccuracies, especially when the training dataset contains trends, such as those related to time-dependent features103. Additionally, the predicted values are essentially the averages of observed values within the corresponding regions of the training set partitions, constraining the predicted values to fall within the range of the training setâs target variable. Therefore, if the purpose of building a predictive model is to screen for breakthrough performance in virtual samples, tree-based models should not be used, and instead, linear models, neural networks, support vector machines, and similar models should be considered.

Highlights

With numerous machine learning algorithms available, there is no universal algorithm. To select the most suitable algorithm for a given dataset, it is often necessary to model with multiple algorithms and choose the best-performing one based on evaluation metrics. Additionally, ensemble strategies can be employed when several algorithms perform well. When specific requirements arise, such as assessing prediction uncertainty, the Kriging algorithm can be employed. For models requiring interpretability, linear models and decision tree models are prioritized. However, linear models, due to their simplicity, may struggle to fit complex functional relationships. They can be substituted with piecewise linear models, such as FAB/HMEs, which can uncover hierarchical structures within the data, establishing interpretable and highly accurate predictive models. Furthermore, it is essential to recognize the limitations of each model. For instance, tree models may have poor extrapolation capabilities, while NN models require a large sample size. Therefore, it is crucial to combine the characteristics of the specific dataset with the modeling objectives to select the appropriate algorithm.

Details in model application

Details of design candidates

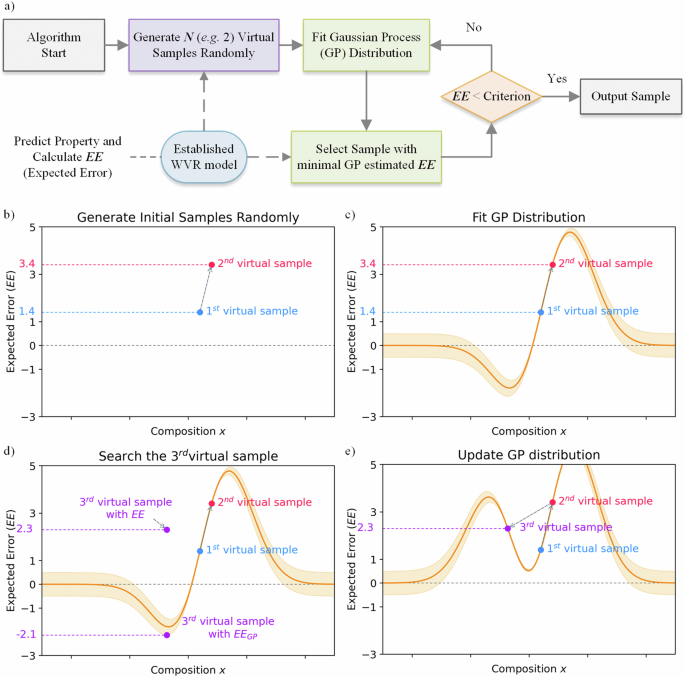

Various strategies in both forward prediction and reverse design can be employed to design candidates that meet a single objective. Yang et al.5 used two methods to optimize the hardness of HEAs, namely the inverse projection (IP)105 based on the Fisher projection and HTVS, respectively. IP method designed a HEA with hardness 24.8% higher than the highest one in the original dataset. IP is an inverse design method that involves projecting the feature space onto a two-dimensional plane using either principal component projection or Fisher projection. Samples are categorized into superior and inferior classes based on the values of the target variable. Designed points are selected within the regions where superior class samples cluster, and the prediction model is used to predict the target variable for these points. Inverse design of IP is realized by solving a system of equations formed by two projection formulas and boundary conditions. The IP method combines qualitative and quantitative design approaches, enhancing the reliability of candidate designs. The inverse design is usually processed by solving an optimization problem, therefore combining ML models with metaheuristic methods is a promising strategy. Metaheuristic methods, including GA, ant colony optimization (ACO), particle swarm optimization (PSO), and simulated annealing, can find near-optimal solutions in complex optimization problems with a lot of variables106. She et al.99 used a two-step ML approach to design perovskite solar cells (PSCs) with high PCE for doped electron transport layers (ETLs). As shown in Fig. 6, the first step of ML used an RF regression model combined with GA to predict a series of undoped ETLs for PSCs that could potentially exhibit high PCEs. The second step of ML, also using RF combined with GA, predicted doped ETLs that could lead to high efficiency improvement rates (EIRs). Finally, by multiplying the PCEs of undoped ETLs predicted by the first step of ML with the EIRs of doped ETLs predicted by the second step of ML, the PCEs of doped ETLs for PSCs were obtained. This resulted in PCE predictions of 30.47% for Cs-doped TiO2 ETL (24.53% Cs doping) and 28.54% for S-doped SnO2 ETL (32.85% S doping). In addition to commonly used metaheuristic optimization algorithms, Lu et al.68 developed the Proactive Searching Progress (PSP) method to realize inverse design. Illustrated in Fig. 7, this method initiates by generating N random samples and employing a Weighted Voting Regressor (WVR) model to predict outcomes for these N samples, while simultaneously calculating the error between the predicted values and the expected values (denoted as EE). The distribution of these N points is fitted using a Gaussian process (GP), with the corresponding EE serving as the response. It identifies the point with the smallest EE estimated by the current GP distribution, obtains the true EE for this point using the WVR model, and integrates this point into the GP model to update the current GP distribution. The process continues the search for potential candidates until the EE value from the WVR model falls below a predefined threshold, at which point it is output as a candidate.

First-step ML (left side), second-step ML (right side)99.

a Workflow details of PSP method. b First and second generated virtual samples. c Simulated GP distribution based on the first and second generated virtual samples. d Third virtual samples searched by GP distribution. e Updated GP distribution adjusted by the third virtual samples68.

Designing candidates with multiple desired performance remains a significant challenge. Wu et al.60 developed a multi-stage material screening approach to search for stable HOIPs with suitable bandgaps for photovoltaic power generation. The selection process involves several key screening stages: charge neutrality screening, stability screening (requiring appropriate tolerance factor (Tf) and octahedral factor (Of) ranges to maintain stable perovskite structures), and ML model screening. In the ML model screening stage, the dataset is randomly split 100 times, and 100 models are constructed. Candidates among the HOIPs are only chosen if the predictions from all 100 models fall within the range of 0.9 ⤠EgMLââ¤â1.6âeV. Similarly, to select candidates for garnet phosphors with the desired wavelength and excellent thermal stability, Jiang et al.77 first used a trained wavelength model to filter phosphors with wavelengths in the range of 480â510ânm. Then, a thermal stability prediction model was combined with an efficient global optimization (EGO) strategy to balance exploitation (aims at finding the best prediction) and exploration (aims at improving the predictive model), and candidates were selected based on expected improvement (EI) ranking. Essentially, both of these cases involve solving multi-objective optimization problems using the ε-constrained method, which means selecting a primary optimization objective and treating the others as constraints. These constraints are typically defined by setting ranges for secondary objective variables. By optimizing the primary objective within the bounds of these secondary objectives, the multi-objective optimization problem is transformed into a constrained single-objective optimization problem. The advantage of this strategy is that it ensures that the generated solutions satisfy the constraints, meaning the solutions are feasible.

However, selecting appropriate ranges for the secondary objectives relies on empirical knowledge, and the generated solutions may only approximate the Pareto front. Similar traditional solving methods include the weighted-sum method, but determining the weights for each objective also relies on empirical knowledge. These methods are suitable for researchers experienced in the problem domain who can set appropriate parameters to obtain more accurate solutions.

In addition to traditional multi-objective optimization methods, Pareto-based multi-objective optimization algorithms have been successfully applied in materials design. Compared to ε-constrained and weighted-sum methods with only an optimal solution, Pareto-based multi-objective optimization algorithms provide a set of Pareto-optimal solutions adaptable to diverse application scenarios and requirements. Recently, Li et al.98 employed the ε-constrained method, weighted-sum method, and non-dominated sorting genetic algorithm II (NSGA-II) to design HEAs with a favorable combination of saturated magnetization and hardness. The experimental results underscored the advantage of NSGA-II in directly identifying a set of optimal compositions with the desired multiple properties. Zhang et al.64 proposed a multi-objective optimization strategy to find the optimal combination of critical casting dimensions (Dmax), saturation magnetization (Bs), and plasticity for iron-based metallic glasses (MGs). The objective functions were constructed by NN models for predicting Dmax and Bs, along with a plasticity empirical formula. Genetic algorithm-based multi-objective optimization was performed to obtain Pareto-optimal solutions, which represent the best trade-offs among the objectives.

Highlights

When designing unknown samples to meet a single target performance, the method of obtaining the best candidates through HTVS is straightforward. However, generated virtual samples require comprehensive coverage of the virtual space. Otherwise, the obtained optimal solution may not necessarily be the best. To a vast virtual space, the computational cost can significantly increase. In such cases, inverse design methods like IP, optimization algorithms or PSP, can result in a more efficient search for the global optimum. When designing samples to meet multiple target performance criteria, traditional methods such as the ε-constrained method and weighted-sum method can be employed. These methods transform the multi-objective problem into a single-objective problem for resolution. However, their drawback lies in the reliance on empirical knowledge for parameter setting. Utilizing multi-objective optimization algorithms eliminates the need for mandatory parameter settings in traditional solving methods. Furthermore, the solutions obtained exhibit diversity, allowing for the selection of solutions from the Pareto-optimal set based on varying requirements for the objective variables. The application of multi-objective optimization algorithms in materials science accelerates the design of materials with desired multiple properties. Additionally, candidate selection can account for prediction uncertainty through multiple predictions and by calculating EI.

Details of model analysis

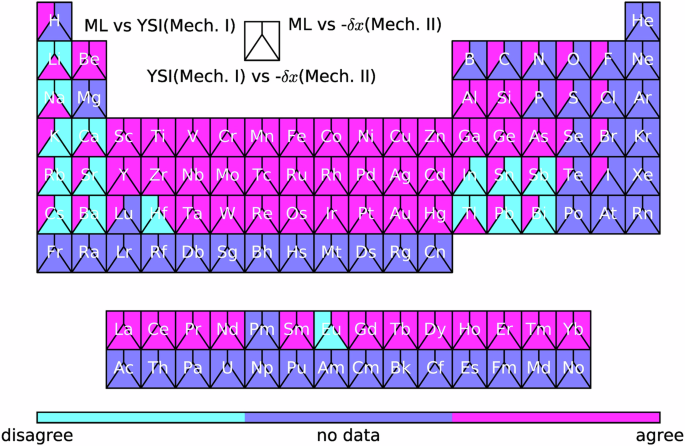

ML model can be used not only to explore uncharted chemical spaces but also to extract physical and chemical insights from model analysis. Wen et al.76 derived three critical features from eight dimensions related to solid solution strengthening (SSS) in HEAs through feature selection. These features were employed to construct an empirical model for SSS, surpassing existing physics-based models for single-phase HEAs hardness prediction. This advancement has contributed to a better understanding of SSS. Similarly, Pei et al.107 employed GP classification algorithms to reliably and effectively predict promising solutes for toughening magnesium alloys. Based on the ML results, an assessment of the consistency between two existing ductility descriptors, namely the yttrium similarity index (YSI) and the size-misfit parameter (δX), revealed that 92% and 78% of the results aligned with ML predictions, respectively. These descriptors correspond to two distinct deformation mechanisms, Mechanism I and Mechanism II. As depicted in Fig. 8, they exhibited a strong correlation, providing insight into a significant debate regarding the dominant ductility mechanisms in these lightweight alloys. This discovery represents a crucial step toward unraveling the mysteries of the primary ductility mechanisms in such materials, showcasing the potential of ML in contributing insights into physicochemical mechanisms from data. These two cases, employing ML methods, have contributed to the advancement of domain-specific knowledge, one through the construction of empirical formulas and the other through the evaluation of various physicochemical mechanisms associated with a specific phenomenon. The application of ML in aiding the exploration of undiscovered patterns still offers significant potential.

The cyan blocks represent where the two predictions disagree, the blue ones represent no data available, and the pink ones represent where the two predictions agree107.

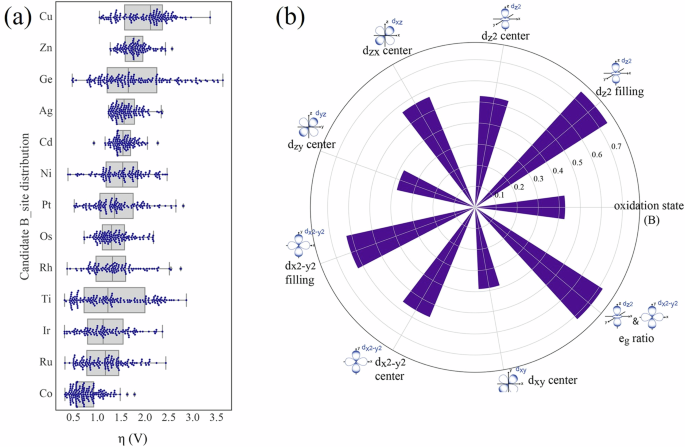

Feature analysis can further aid in uncovering physical and chemical patterns. To design high-performance ABO3 perovskite catalysts for the oxygen evolution reaction (OER), Li et al.79 compared candidates with different B-site elements by analyzing the overpotential distribution, as depicted in Fig. 9a. The type of B-site element significantly influenced the OER catalytic activity, with perovskites containing elements like Co, Ru, Ir, and Ti at the B-site exhibiting lower overpotentials, indicating higher catalytic activity compared to other series.

a Box and swarm plots display the overpotential distributions for the candidate data set with different B-site metals. b Polar distribution plots of the most informatic descriptors (KL entropy index >0.4)79.

In addition to simple statistical analysis, hidden physical and chemical patterns can be discovered using various feature analysis methods. The Kullback-Leibler (KL) divergence108 measures the asymmetric loss of information when an empirical probability distribution is approximated by a model distribution. A higher KL divergence indicates a greater information content for the corresponding descriptor, playing a crucial role in distinguishing between samples from two classification categories. Li et al.79 categorized candidates into two groups based on their OER activity compared to LaCoO3 and computed the KL divergence values for important features. In Fig. 9b, the descriptors with the highest information content (KL divergence > 0.4) were emphasized. The results showed that descriptors related to the electronic structure of the metal B-site are closely associated with the OER activity of perovskites, providing physical insights from data-driven models. Similarly, methods like SHAP and feature importance assessment methods inherent to tree-based models (such as RF) can be employed for feature ranking based on their importance.

SHAP is a method for model interpretation, and its advantage over traditional feature interpretation methods lies in its ability to not only rank the importance of features but also to reflect the positive and negative effects of each feature on each sample, as well as the important patterns of how features contribute to the predictions. This effectively unifies the global and local interpretability attributes of a model. Mai et al.90, in predicting the maximum absorption wavelength (λmax) of azo dyes, found a strong positive correlation between the sulfur atom count in the R2 group and λmax according to SHAP analysis. Furthermore, as the number of C-N pairs with a topological distance of 4 increased in the R1 group, the likelihood of a redshift in the molecular λmax also increased. SHAP analysis can determine the range within which a feature positively or negatively contributes to the target variable but does not provide the range of values of the target variable corresponding to the feature within that range. SA can be used to explore how the target variable changes when a selected feature varies while keeping other variables fixed. In the study of polymer bandgaps, Xu et al.73 conducted SA to understand how the target variable varies with key features. The patterns revealed by SA exhibit significant consistency with correlation analysis, and they can highlight inflection points that correlation analysis has not captured.