- Regular article

- Open access


The shocklet transform: a decomposition method for the identification of local, mechanism-driven dynamics in sociotechnical time series

EPJ Data Science volume 9, Article number: 3 (2020)

Abstract

We introduce a qualitative, shape-based, timescale-independent time-domain transform used to extract local dynamics from sociotechnical time series—termed the Discrete Shocklet Transform (DST)—and an associated similarity search routine, the Shocklet Transform And Ranking (STAR) algorithm, that indicates time windows during which panels of time series display qualitatively-similar anomalous behavior. After distinguishing our algorithms from other methods used in anomaly detection and time series similarity search, such as the matrix profile, seasonal-hybrid ESD, and discrete wavelet transform-based procedures, we demonstrate the DST’s ability to identify mechanism-driven dynamics at a wide range of timescales and its relative insensitivity to functional parameterization. As an application, we analyze a sociotechnical data source (usage frequencies for a subset of words on Twitter) and highlight our algorithms’ utility by using them to extract both a typology of mechanistic local dynamics and a data-driven narrative of socially-important events as perceived by English-language Twitter.

1 Introduction

The tasks of peak detection, similarity search, and anomaly detection in time series are often accomplished using the discrete wavelet transform (DWT) [1] or matrix-based methods [2, 3]. For example, wavelet-based methods have been used for outlier detection in financial time series [4], similarity search and compression of various correlated time series [5], signal detection in meteorological data [6], and homogeneity-of-variance testing in time series with long memory [7]. Wavelet transforms offer far better localization in the time domain than do Fourier-based methods such as the short-time Fourier transform [8]. Similarly, the chirplet transform is used in the analysis of phenomena displaying periodicity-in-perspective (linearly or quadratically varying frequency), such as images and radar signals [9–12]. In each case, the transform kernel is matched to the phenomenon of interest. Thus, when analyzing time series that are partially composed of exogenous shocks and endogenous shock-like local dynamics, we should use as a kernel a small piece of a shock-like function (a “shock”), examples of which are depicted in Fig. 1, together with functions generated by concatenating these building blocks, such as the one shown in Fig. 2.

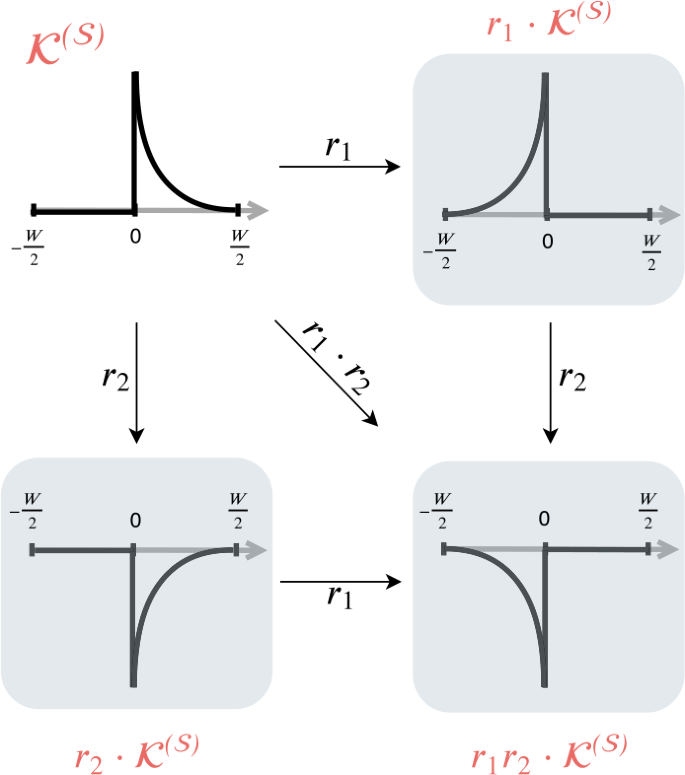

The discrete shocklet transform is generated through cross-correlation of pieces of shocks. This figure displays the effects of the action of group elements \(r_{i} \in R_{4}\) on a base “shock-like” kernel \(\mathcal{K}\). The kernel \(\mathcal{K}\) captures the dynamics of a constant lower level of intensity before an abrupt increase to a relatively high intensity, which decays over a duration of \(W/2\) units of time. By applying elements of \(R_{4}\), we can effect a time reversal (\(r_{1}\)) and an abrupt cessation of intensity followed by asymptotic convergence to the prior level of intensity (\(r_{2}\)), as well as the combination of these effects (\(r _{3} = r_{1} \cdot r_{2}\))

Shock dynamics. This figure provides a schematic for the construction of more complicated shock dynamics from a simple initial shape (\(\mathcal{K}^{(S)}\)). By acting on a kernel with elements \(r_{i}\) of the reflection group \(R_{4}\) and function concatenation, we create shock-like dynamics, as exemplified by the symmetric shocklet kernel \(\mathcal{K}^{(C)} = \mathcal{K}^{(S)} \oplus [r_{1} \cdot \mathcal{K}^{(S)}]\) in this figure. In Sect. 2.3 we illuminate a typology of shock dynamics derived from combinations of these basic shapes

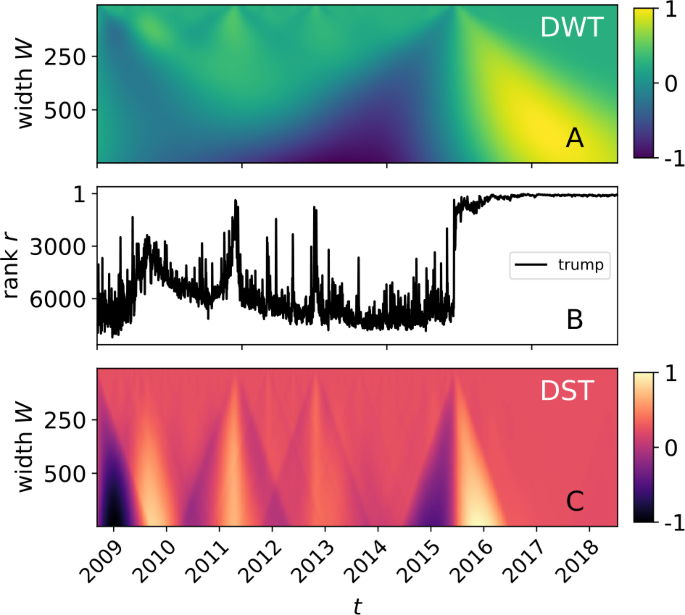

In this work, we introduce the Discrete Shocklet Transform (DST), generated by cross-correlating a time series with a shocklet. As an immediate example (before any definitions or technical discussion), in Fig. 3 we contrast the DWT with the DST of a sociotechnical time series, the popularity of the word “trump” on the social media website Twitter; the figure visually illustrates what we claim is the DST’s suitability for detecting local mechanism-driven dynamics in time series.

A comparison between the standard discrete wavelet transform (DWT) and our discrete shocklet transform (DST) of a sociotechnical time series. Panel (B) displays the daily time series of the rank \(r_{t}\) of the word “trump” on Twitter. As a comparison with the DST, we computed the DWT of \(r_{t}\) using the Ricker wavelet and display it in panel (A). Panel (C) shows the DST of the time series using a symmetric power shock, \(\mathcal{K}^{(S)}(\tau |W,\theta ) \sim \mathrm{rect}(\tau ) \tau ^{\theta }\), with exponent \(\theta = 3\). We chose to compare the DST with the DWT because the DWT is similar in mathematical construction (see Appendix 1 for a more extensive discussion of this assertion), but differs in the choice of convolution kernel (a wavelet, in the case of the DWT, and a piece of a shock, in the case of the DST) and the method by which the transform accounts for signal at multiple timescales

We will show that the DST can be used to extract shock and shock-like dynamics of particular interest from time series by constructing an indicator function that compresses timescale-dependent information into a single dimension using prior information on timescale and parameter importance. Using this indicator, we highlight windows in which underlying mechanistic dynamics are hypothesized to contribute a stronger component of the signal than purely stochastic dynamics, and we present an algorithm, the Shocklet Transform And Ranking (STAR) algorithm, that automates post facto detection of endogenous, mechanism-driven dynamics. As a complement to changepoint analysis, which detects changes in the level of a time series [13, 14], the DST and STAR algorithm detect changes in the underlying mechanistic local dynamics of the time series. Finally, we demonstrate a potential use of the shocklet transform by applying it to the LabMT Twitter dataset [15] to extract word-usage time series matching the qualitative form of a shock-like kernel at multiple timescales.

2 Data and theory

2.1 Data

Twitter is a popular micro-blogging service that allows users to share thoughts and news with a global community via short messages (up to 140 characters in length or, from around November 2017 onward, 280 characters). We purchased access to Twitter’s “decahose” streaming API and used it to collect a random 10% sample of all public tweets authored between September 9, 2008 and April 4, 2018 [16]. We then parsed these tweets to count appearances of words included in the LabMT dataset, a set of roughly 10,000 of the most commonly used words in English [15]. The dataset has been used to construct nonparametric sentiment analysis models [17] and forecast mental illness [18], among other applications [19–21]. From these counts, we analyze the time series of word popularity as measured by rank of word usage: on day t, the most-used word is assigned rank 1, the second-most-used word rank 2, and so on, yielding a time series of word rank \(r_{t}\) for each word.
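As a minimal sketch of the rank construction just described, assume the daily counts for the LabMT words are already available as a days × words array. The function name `daily_ranks` and the stable tie-breaking rule are our own illustrative choices; the text does not specify how ties are handled.

```python
import numpy as np


def daily_ranks(counts):
    """Convert a (days x words) array of daily word counts into daily ranks.

    On each day the most-used word gets rank 1, the second-most-used rank 2,
    and so on. Ties are broken by column order (a stable sort), which is an
    assumption of this sketch.
    """
    counts = np.asarray(counts, dtype=float)
    # Sort words by descending count within each day (row).
    order = np.argsort(-counts, axis=1, kind="stable")
    ranks = np.empty_like(order)
    rows = np.arange(counts.shape[0])[:, None]
    # Scatter ranks 1..n_words back to each word's original column.
    ranks[rows, order] = np.arange(1, counts.shape[1] + 1)
    return ranks


# Toy example: 2 days, 3 words; column j of the result is the series r_t for word j.
counts = np.array([[5, 10, 1],
                   [3, 3, 7]])
print(daily_ranks(counts))
# [[2 1 3]
#  [2 3 1]]
```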

2.2 Theory

2.2.1 Algorithmic details: description of the method

There are multiple fundamentally deterministic mechanistic models for local dynamics of sociotechnical time series. Nonstationary local dynamics are generally well described by exponential, biexponential, or power-law decay functions; mechanistic models thus usually generate one of these few functional forms. For example, Wu and Huberman described a stretched-exponential model for collective human attention [22], and Candia et al. derived a biexponential function for collective human memory on longer timescales [23]. Crane and Sornette assembled a Hawkes process for video views that produces power-law behavior by using power-law excitation kernels [24], and Lorenz-Spreen et al. demonstrated a speeding-up dynamic in collective social attention mechanisms [25], while De Domenico and Altmann put forward a stochastic model incorporating social heterogeneity and influence [26], and Ierly and Kostinsky introduced a rank-based signal-extraction method with applications to meteorology data [27]. In Sect. 2.2.2 we conduct a literature review, contrasting our methods with existing anomaly detection and similarity search time series data mining algorithms and demonstrating that the DST and associated STAR algorithm differ substantially from these existing algorithms. We have open-sourced implementations of the DST and STAR algorithm; code for these implementations is available in a publicly accessible repository (see Footnote 1).

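We do not assume any specific model in our work. Instead, by default we define a kernel \(\mathcal{K}^{(\cdot )}\) as one of a few basic functional forms: exponential growth,

$$ \mathcal{K}^{(S)}(\tau |W,\theta ) = \mathrm{rect}(\tau )\, e^{\theta \tau }, \tag{1} $$

monomial growth,

$$ \mathcal{K}^{(S)}(\tau |W,\theta ) = \mathrm{rect}(\tau )\, \tau ^{\theta }, \tag{2} $$

power-law decay,

$$ \mathcal{K}^{(S)}(\tau |W,\theta ) = \mathrm{rect}(\tau )\, (\tau + \varepsilon )^{-\theta }, \tag{3} $$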

or sudden level change (corresponding with a changepoint detection problem),

where \(\varTheta (\cdot )\) is the Heaviside step function. The function rect is the rectangular function (\(\mathrm{rect}(x)=1\) for \(0< x< W/2\) and \(\mathrm{rect}(x) = 0\) otherwise), while in the case of the power-law kernel we add a constant ε to ensure nonsingularity. The parameter W controls the support of \(\mathcal{K}^{(\cdot )}(\tau |W,\theta )\); the kernel is identically zero outside of the interval \([\tau - W/2, \tau + W/2]\). We define the window parameter W as follows: moving from a window size of W to a window size of \(W + \Delta W\) is equivalent to upsampling the kernel signal by the factor \(W + \Delta W\), applying an ideal lowpass filter, and downsampling by the factor W. In other words, if the kernel function \(\mathcal{K}^{(\cdot )}\) is defined for each of W linearly spaced points between \(-N/2\) and \(N/2\), moving from a window size of W to \(W + \Delta W\) is equivalent to computing \(\mathcal{K} ^{(\cdot )}\) for each of \(W + \Delta W\) linearly spaced points between \(-N/2\) and \(N/2\). This holds the dynamic range of the kernel constant while accounting for the dynamics described by the kernel at all timescales of interest. We enforce the condition that \(\sum_{t=- \infty }^{\infty } \mathcal{K}^{(\cdot )}(t| W,\theta ) = 0\) for any window size W.
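The kernel construction can be sketched as follows. This is a hypothetical helper, not the authors' released implementation: it evaluates each basic shape on W linearly spaced points over a fixed interval (we assume [0, 1]), so that changing W resamples the same shape without changing its dynamic range, and then subtracts the mean to enforce the zero-sum condition. The step location for the sudden-level-change kernel and the default ε are likewise assumptions.

```python
import numpy as np


def shock_kernel(W, theta, form="monomial", eps=1e-2):
    """Sketch of a zero-sum shock kernel K^(S)(tau | W, theta) on W samples.

    Assumption: the shape is evaluated on W linearly spaced points over the
    fixed interval [0, 1], so changing W resamples the same shape and keeps
    its dynamic range fixed.
    """
    tau = np.linspace(0.0, 1.0, W)
    if form == "exponential":     # exponential growth, exp(theta * tau)
        k = np.exp(theta * tau)
    elif form == "monomial":      # monomial growth, tau ** theta
        k = tau ** theta
    elif form == "power_law":     # power-law decay, (tau + eps) ** (-theta)
        k = (tau + eps) ** (-theta)
    elif form == "step":          # sudden level change; step placed mid-window (assumption)
        k = (tau >= 0.5).astype(float)
    else:
        raise ValueError(f"unknown kernel form: {form!r}")
    # Enforce sum_t K(t | W, theta) = 0 for every window size W.
    return k - k.mean()


k_small = shock_kernel(20, theta=3.0)    # same shape, coarse sampling
k_large = shock_kernel(200, theta=3.0)   # same shape, fine sampling
```

Because every window size samples the same interval, `k_small` and `k_large` share the same dynamic range and differ only in resolution, which mirrors the resampling definition of W given above.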

It is decidedly not our intent to delve into the question of how and why deterministic underlying dynamics in sociotechnical systems arise. However, we will provide a brief justification for the functional forms of the kernels presented in the last paragraph as scaling solutions to a variety of parsimonious models of local deterministic dynamics:

If the time series \(x(t)\) exhibits exponential growth with a state-dependent growth damper \(D(x)\), the dynamics can be described by

$$ \frac{{d}x(t)}{{d}t} = \frac{\lambda }{D(x(t))}x(t),\qquad x(0) = x _{0}. \tag{5} $$

If \(D(x) = x^{1/n}\), the solution to this initial value problem (IVP) scales as \(x(t) \sim t ^{n}\), which is the functional form given in Eq. (2). When \(D(x) \propto 1\) (i.e., there is no damper on growth), the solution is an exponential function, the functional form of Eq. (1).
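For the damped-growth case, the scaling claim can be verified directly. This is a worked step we add, assuming \(\lambda > 0\), \(x_{0} > 0\), and \(n > 0\): with \(D(x) = x^{1/n}\), Eq. (5) becomes \(dx/dt = \lambda x^{1-1/n}\), so \(x^{1/n}\) grows linearly in time.

$$ \frac{d}{dt}\,x^{1/n} = \frac{1}{n}\,x^{1/n - 1}\,\frac{dx}{dt} = \frac{1}{n}\,x^{1/n - 1}\,\lambda \,x^{1 - 1/n} = \frac{\lambda }{n} \quad \Longrightarrow \quad x(t) = \Bigl( x_{0}^{1/n} + \frac{\lambda }{n}\,t \Bigr)^{n} \sim \Bigl( \frac{\lambda }{n} \Bigr)^{n} t^{n} \quad (t \to \infty ). $$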

If instead the underlying dynamics correspond to exponential decay with a time- and state-dependent time constant \(\mathcal{T}\) (an e-folding time, proportional to the half-life), we can model the dynamics by the system

$$ \frac{{d}x(t)}{{d}t} = -\frac{x(t)}{\mathcal{T}(t)},\qquad x(0) = x_{0}, \tag{6} $$

$$ \frac{{d}\mathcal{T}(t)}{{d}t} = f\bigl(\mathcal{T}(t), x(t)\bigr),\qquad \mathcal{T}(0) = \mathcal{T}_{0}. \tag{7} $$

If f is particularly simple, \(f(\mathcal{T}, x) = c\) with \(c > 0\), then the solution to Eq. (6) scales as \(x(t) \sim t^{-1/c}\), the functional form of Eq. (3). The limit \(c \rightarrow 0^{+}\) is singular and results in exponential decay, given by reversing time in Eq. (1) (about which we expound later in this section).
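Likewise, for \(f(\mathcal{T}, x) = c\) the system can be integrated in closed form. This is again a worked step we add, assuming \(x_{0} > 0\) and \(\mathcal{T}_{0} > 0\); the second line shows the exponential limit as \(c \rightarrow 0^{+}\).

$$ \mathcal{T}(t) = \mathcal{T}_{0} + c\,t \;\Longrightarrow \; \frac{d \ln x(t)}{dt} = -\frac{1}{\mathcal{T}_{0} + c\,t} \;\Longrightarrow \; x(t) = x_{0} \Bigl( 1 + \frac{c\,t}{\mathcal{T}_{0}} \Bigr)^{-1/c} \sim t^{-1/c}, $$

$$ \lim_{c \to 0^{+}} x_{0} \Bigl( 1 + \frac{c\,t}{\mathcal{T}_{0}} \Bigr)^{-1/c} = x_{0}\, e^{-t/\mathcal{T}_{0}}. $$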

As another example, the dynamics could be essentially static except when a latent variable φ changes state or moves past a threshold of some sort:

$$ \frac{{d}x(t)}{{d}t} = \delta \bigl( \varphi (t) - \varphi ^{*} \bigr),\qquad x(0) = x_{0}, \tag{8} $$

$$ \frac{{d}\varphi (t)}{{d}t} = g\bigl(\varphi (t), x(t)\bigr),\qquad \varphi (0) = \varphi _{0}. \tag{9} $$

In this case the dynamics are given by a step function: \(x(t)\) remains at \(x_{0}\) until the first time \(\varphi (t)\) crosses \(\varphi ^{*}\), at which point it jumps by \(1/\lvert \dot{\varphi } \rvert \) evaluated at the crossing (a unit step, from \(x_{0}\) to \(x_{0} + 1\), when the threshold is crossed at unit speed), and so on at each subsequent crossing; these are the dynamics we present in Eq. (4).
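The size of each jump follows from the standard composition rule for the Dirac delta, \(\delta (h(s)) = \sum_{i} \delta (s - t_{i}) / \lvert h'(t_{i}) \rvert \) over the simple roots \(t_{i}\) of h. Applied to Eq. (8), and assuming each crossing of \(\varphi ^{*}\) is transversal (\(\dot{\varphi }(t_{i}) = g(\varphi ^{*}, x(t_{i})) \neq 0\)), it gives the following, which reduces to a unit step when the threshold is crossed at unit speed:

$$ x(t) = x_{0} + \int_{0}^{t} \delta \bigl( \varphi (s) - \varphi ^{*} \bigr)\, ds = x_{0} + \sum_{i:\, t_{i} < t} \frac{1}{\lvert \dot{\varphi }(t_{i}) \rvert }, \qquad \varphi (t_{i}) = \varphi ^{*}. $$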

This list is obviously not exhaustive and we do not intend it to be so.

We can use kernel functions \(\mathcal{K}^{(\cdot )}\) as basic building blocks of richer local mechanistic dynamics through function concatenation and the action of the two-dimensional reflection group \(R_{4}\). Elements of this group correspond to \(r_{0} = \mathrm{id}\), \(r_{1} = \) reflection across the vertical axis (time reversal), \(r_{2} = \) negation (e.g., from an increase in usage frequency to a decrease in usage frequency), and \(r_{3} = r_{1} \cdot r_{2} = r_{2} \cdot r_{1}\). We can also model new dynamics by concatenating kernels, i.e., “gluing” kernels back-to-back. For example, we can generate “cusplets” with both anticipatory and relaxation dynamics by concatenating a shocklet \(\mathcal{K}^{(S)}\) with a time-reversed copy of itself:

$$ \mathcal{K}^{(C)}(\tau |W,\theta ) = \mathcal{K}^{(S)}(\tau |W,\theta ) \oplus \bigl[ r_{1} \cdot \mathcal{K}^{(S)}(\tau |W,\theta ) \bigr]. \tag{10} $$

We display an example of this concatenation operation in Fig. 2. For much of the remainder of the work, we conduct analysis using this symmetric kernel.
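The group action and the concatenation operation are straightforward to express on sampled kernels. The following sketch is our own illustration (the helper names `act` and `cusplet` are hypothetical): it represents a kernel as a NumPy array, implements the four elements of \(R_{4}\), and builds a symmetric cusplet from a zero-sum \(\tau ^{3}\) shock.

```python
import numpy as np


def act(r, k):
    """Apply element r_j (j = 0..3) of the reflection group R_4 to kernel k."""
    k = np.asarray(k, dtype=float)
    if r == 0:                 # r_0: identity
        return k.copy()
    if r == 1:                 # r_1: time reversal (reflection across the vertical axis)
        return k[::-1].copy()
    if r == 2:                 # r_2: negation
        return -k
    if r == 3:                 # r_3 = r_1 . r_2: time reversal and negation
        return -k[::-1]
    raise ValueError("r must be 0, 1, 2, or 3")


def cusplet(k_shock):
    """K^(C) = K^(S) concatenated with r_1 . K^(S): a shock followed by its mirror image."""
    return np.concatenate([k_shock, act(1, k_shock)])


# Base shock: zero-mean tau**3 on 50 points (an illustrative choice).
tau = np.linspace(0.0, 1.0, 50)
k_s = tau ** 3 - np.mean(tau ** 3)
k_c = cusplet(k_s)   # length 100, rises to a peak in the middle, then falls
```

Since every element of \(R_{4}\) is its own inverse and the base shock sums to zero, the resulting cusplet is symmetric in time and also sums to zero.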

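The discrete shocklet transform (DST) of the time series \(x(t)\) is defined by

$$ \mathrm{C}_{\mathcal{K}^{(S)}}(t, W|\theta ) = \sum_{\tau =-\infty }^{\infty } x(\tau + t)\, \mathcal{K}^{(S)}(\tau |W,\theta ), \tag{11} $$

which is the cross-correlation of the sequence and the kernel. This defines a \(T \times N_{W}\) matrix containing an entry for each point in time t and window width W considered.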
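A minimal sketch of the DST as a \(T \times N_{W}\) matrix follows. It assumes the zero-sum monomial kernel \(\tau ^{\theta }\) sampled on W points of [0, 1] (a hypothetical stand-in for the authors' kernels, via the helper `power_kernel`) and uses `np.correlate` with `mode="same"` so that each column has length T. The centring convention of `mode="same"` is an alignment choice of this sketch, not something specified in the text.

```python
import numpy as np


def power_kernel(W, theta=3.0):
    """Hypothetical helper: zero-sum tau**theta sampled on W points in [0, 1]."""
    tau = np.linspace(0.0, 1.0, W)
    k = tau ** theta
    return k - k.mean()


def dst(x, widths, theta=3.0, kernel=power_kernel):
    """Sketch of the DST: a T x N_W matrix whose column j is the
    cross-correlation of x with the kernel at window size widths[j]."""
    x = np.asarray(x, dtype=float)
    out = np.empty((x.size, len(widths)))
    for j, W in enumerate(widths):
        if W > x.size:
            raise ValueError("window size W must not exceed the series length T")
        # np.correlate computes sum_n x[n + m] * k[n]; mode="same" keeps T
        # outputs, aligned with the centre of the kernel.
        out[:, j] = np.correlate(x, kernel(W, theta), mode="same")
    return out


rng = np.random.default_rng(0)
x = np.cumsum(rng.normal(size=500))       # a random walk, as in the Fig. 4 example
D = dst(x, widths=range(10, 251, 10))      # window sizes W in [10, 250]
print(D.shape)  # (500, 25)
```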

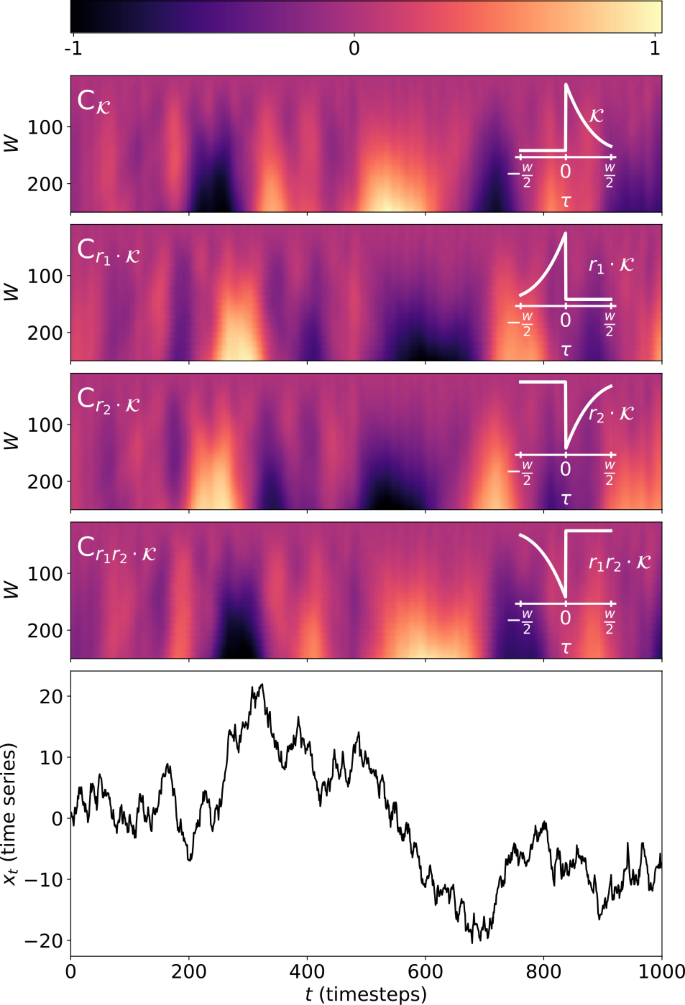

To convey a visual sense of what the DST looks like when using a shock-like, asymmetric kernel, we compute the DST of a random walk \(x_{t} - x_{t-1} = z_{t}\) (we define \(z_{t} \sim \mathcal{N}(0,1)\)) using a kernel function \(\mathcal{K}^{(S)}(\tau |W, \theta ) \sim \mathrm{rect}(\tau )\tau ^{\theta }\) with \(\theta = 3\) and display the resulting matrix for window sizes \(W \in [10, 250]\) in Fig. 4.

Effects of the reflection group \(R_{4}\) on the shocklet transform. The top four panels display the results of the shocklet transform of a random walk \(x_{t} = x_{t-1} + z_{t}\) with \(z_{t} \sim \mathcal{N}(0,1)\), displayed in the bottom panel, using the kernels \(r_{j} \cdot \mathcal{K}^{(S)}\), where \(r_{j} \in R_{4}\)

The effects of time reversal by action of \(r_{1}\) are visible when comparing the first and third panels with the second and fourth panels, and the result of negating the kernel by acting on it with \(r_{2}\) is apparent in the negation of the matrix values when comparing the first and second panels with the third and fourth. We used a random walk as the example time series for this figure because there is, by definition, no underlying generative mechanism causing any shock-like dynamics; such dynamics appear only as a result of integrated noise. Consequently, large upward-pointing shocks are as likely as large downward-pointing ones, which allows us to see the activation of both upward-pointing and downward-pointing kernel functions.
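Because cross-correlation is linear in the kernel, acting on the kernel with \(r_{2}\) must negate every entry of the transform, which is the sign flip visible between panels. A quick numerical check on a simulated random walk (the seed, series length, and window size are arbitrary choices of ours):

```python
import numpy as np

rng = np.random.default_rng(1)
x = np.cumsum(rng.normal(size=1000))     # random walk: x_t = x_{t-1} + z_t, z_t ~ N(0, 1)

tau = np.linspace(0.0, 1.0, 100)
k = tau ** 3 - np.mean(tau ** 3)         # zero-sum shock kernel with theta = 3, W = 100

c_id = np.correlate(x, k, mode="same")     # transform using r_0 . K (identity)
c_neg = np.correlate(x, -k, mode="same")   # transform using r_2 . K (negation)

# Negating the kernel negates the transform exactly.
print(np.allclose(c_neg, -c_id))  # True
```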

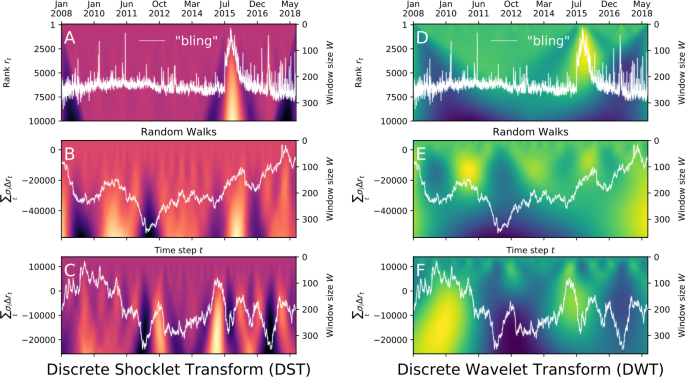

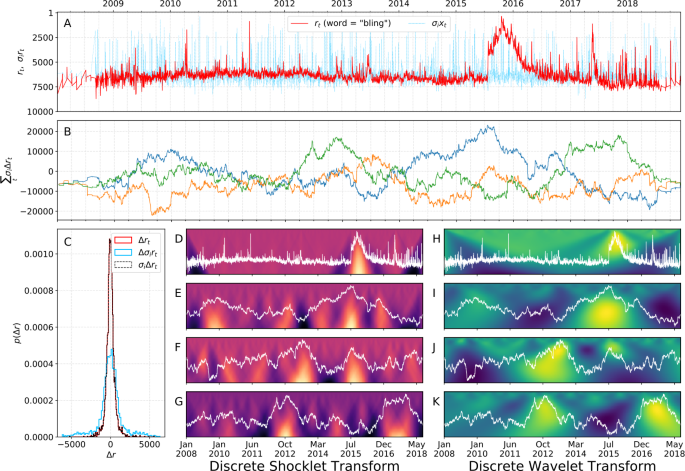

As a comparison with this null example, we computed the DST of a sociotechnical time series, the rank of the word “bling” among the LabMT words on Twitter, and of two draws from a null random walk model, and displayed the results in Fig. 5. Here, we calculated the DST using the symmetric kernel given in Eq. (10). (For more statistical details of the null model, see Appendix 1.) We also computed the DWT of each of these time series and display the resulting wavelet transform matrices next to the shocklet transform matrices in Fig. 5. Direct comparison of the sociotechnical time series (\(r_{t}\)) with the draws from the null models reveals \(r_{t}\)’s moderate autocovariance as well as the large, shock-like fluctuation that occurs in late July of 2015. (The underlying driver of this fluctuation was the release of a popular song entitled “Hotline Bling” on July 31st, 2015.) In comparison, the draws from the null model have a covariance with much more prominent time scaling and do not exhibit dramatic shock-like fluctuations as \(r_{t}\) does. Comparing the DWT of these time series with the respective DST provides further evidence that the DST exhibits superior space-time localization of shock-like dynamics compared with the DWT.

Intricate dynamics of sociotechnical time series. Panels (A) and (D) show the time series of the rank of the word “bling” on Twitter. Until mid-summer 2015, the time series presents as random fluctuation about a steady, relatively constant level. However, the series then displays a large fluctuation, increasing rapidly and then decaying slowly after a sharp peak. The underlying mechanism for these dynamics was the release of a popular song titled “Hotline Bling”. To demonstrate the qualitative difference between the “bling” time series and a null model, we also show draws from a null random walk model, the details of which are given in Appendix 1. Panels (A), (B), and (C) show the discrete shocklet transform of the original series for “bling” and of the random walks \(\sum_{t'\leq t} \Delta r_{\sigma _{i} t'}\), showing the responsiveness of the DST to nonstationary local dynamics and its insensitivity to dynamic range. Panels (D), (E), and (F), on the other hand, display the discrete wavelet transform of the original series and of the random walks, demonstrating the DWT’s comparatively lower sensitivity to local shock-like dynamics

To aggregate deterministic behavior across all timescales of interest, we define the shock indicator function as

$$ \mathrm{C}_{\mathcal{K}^{(S)}}(t|\theta ) = \sum_{W} \mathrm{C}_{\mathcal{K}^{(S)}}(t, W|\theta )\, p(W|\theta ), \tag{12} $$

where the sum runs over all windows W considered. The function \(p(W|\theta )\) is a probability mass function that encodes prior beliefs about the importance of particular values of W. For example, if we are interested primarily in time series that display shock- or shock-like behavior that usually lasts for approximately one month, we might specify \(p(W|\theta )\) to be sharply peaked about \(W = 28\) days. Throughout this work we take an agnostic view on all possible window widths and so set \(p(W|\theta ) \propto 1\), reducing our analysis to a strictly maximum-likelihood based approach. Marginalizing over the shocklet parameter θ defines the shock indicator function,

$$ \mathrm{C}_{\mathcal{K}^{(S)}}(t) = \int \mathrm{C}_{\mathcal{K}^{(S)}}(t|\theta )\, p(\theta )\, d\theta . \tag{13} $$
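On a discrete grid of θ values (an assumption; the text writes the θ step against a density), the two aggregation steps reduce to weighted averages over the last two axes of a stack of DST matrices. A sketch with the hypothetical function name `shock_indicator`, in which omitted priors default to uniform weights, as in the agnostic choice \(p(W|\theta ) \propto 1\):

```python
import numpy as np


def shock_indicator(dst_by_theta, p_theta=None, p_w=None):
    """Collapse DST matrices into a single shock indicator (sketch).

    dst_by_theta: shape (N_theta, T, N_W), one DST matrix per theta on a grid.
    p_w:          prior weights over the N_W window sizes (uniform if None).
    p_theta:      prior weights over the theta grid (uniform if None).
    """
    d = np.asarray(dst_by_theta, dtype=float)
    n_theta, _, n_w = d.shape
    p_w = np.full(n_w, 1.0 / n_w) if p_w is None else np.asarray(p_w) / np.sum(p_w)
    p_theta = (np.full(n_theta, 1.0 / n_theta) if p_theta is None
               else np.asarray(p_theta) / np.sum(p_theta))
    c_given_theta = d @ p_w           # weighted sum over W: shape (N_theta, T)
    return p_theta @ c_given_theta    # weighted sum over theta: shape (T,)
```

With uniform priors the result is simply the mean of the DST entries over θ and W at each time t; a prior concentrated on one window size picks out that column of the DST.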

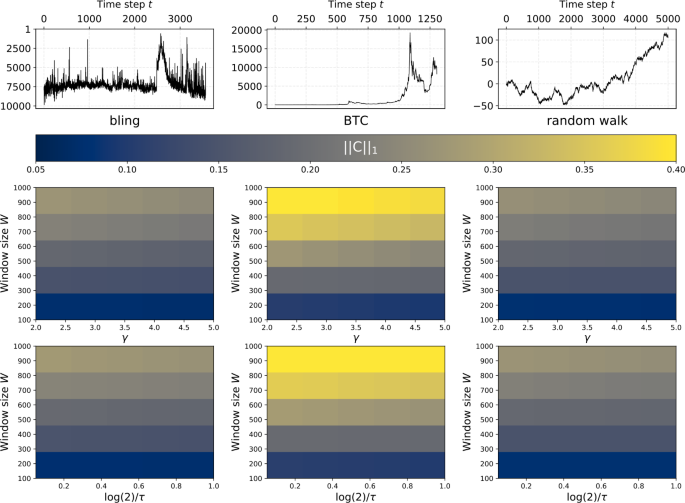

In analogy with \(p(W|\theta )\), the function \(p(\theta )\) is a probability density function describing our prior beliefs about the importance of various values of θ. As we will show later in this section, and graphically in Fig. 6, the shock indicator function is relatively insensitive to the choice of θ: its \(\ell _{1}\) norm is nearly identical across wide ranges of θ and across different functional forms of \(\mathcal{K}^{(S)}\).

Parameter sweep. The shock indicator function is relatively insensitive to the functional form of \(\mathcal{K}^{(\cdot )}\) and to values of the kernel’s parameter vector θ so long as the kernel functions are qualitatively similar (e.g., for cusp-like dynamics, as considered in this figure and in Eq. (10), \(\mathcal{K}^{(C)}\) displays increasing rates of increase followed by decreasing rates of decrease). Here we have computed the shock indicator function \(\mathrm{C}_{\mathcal{K}^{(S)}}(\tau |\theta )\) (Eq. (12)) for three different time series: two sociotechnical and one null example. From left to right, the top row of figures displays the rank time series of the word “bling” on Twitter, the price of the cryptocurrency Bitcoin, and a simple Gaussian random walk. Below each time series we display parameter sweeps over combinations of \((\theta , W_{\max })\) for two kernel functions: one given by the function of Eq. (10) and another of identical form but constructed by setting \(\mathcal{K}^{(S)}(\tau |W, \theta )\) to the function given in Eq. (1). The \(\ell _{1}\) norms of the shock indicator function are nearly invariant across the values of θ for which we evaluated the kernels. However, the shock indicator function does depend on the maximum window size \(W_{\max }\), with larger \(W_{\max }\) associated with a larger \(\ell _{1}\) norm. This is because a larger window size allows the DST to detect shock-like behavior over longer periods of time

After calculation, we normalize \(\mathrm{C}_{\mathcal{K}^{(S)}}(t)\) so that it again integrates to zero and has \(\max_{t} \mathrm{C}_{ \mathcal{K}^{(S)}}(t) - \min_{t} \mathrm{C}_{\mathcal{K}^{(S)}}(t) = 2\). The shock indicator function is used to find windows in which the time series displays anomalous shock- or shock-like behavior. These windows are defined as

$$ \mathcal{W}_{\mathcal{K}^{(S)}} = \bigl\{ t : \mathrm{C}_{\mathcal{K}^{(S)}}(t) > s \bigr\}, $$

where the parameter \(s > 0\) sets the sensitivity of the detection.
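The normalization and window extraction can be sketched as below. We read the window definition as thresholding the normalized indicator at s and grouping consecutive above-threshold times into intervals \([t_{b}, t_{e}]\); this reading, and the helper names `normalize_indicator` and `shock_windows`, are our assumptions rather than the authors' code.

```python
import numpy as np


def normalize_indicator(c):
    """Shift the indicator to zero sum, then scale it so that max - min = 2."""
    c = np.asarray(c, dtype=float)
    c = c - c.mean()
    span = c.max() - c.min()
    return 2.0 * c / span if span > 0 else c


def shock_windows(c_norm, s):
    """Return (t_b, t_e) index pairs of maximal runs where c_norm(t) > s.

    Both endpoints are inclusive.
    """
    above = np.asarray(c_norm) > s
    # Pad with False so every run has a rising edge (+1) and a falling edge (-1).
    edges = np.diff(np.concatenate([[False], above, [False]]).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1) - 1
    return list(zip(starts.tolist(), ends.tolist()))


print(shock_windows([0.1, 0.6, 0.9, 0.2, 0.7], s=0.5))  # [(1, 2), (4, 4)]
```

Raising s shortens or removes windows, which is the sense in which s controls the sensitivity of the detection.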

The DST is relatively insensitive to quantitative changes to its functional parameterization; it is a qualitative tool to highlight time periods of unusual events in a time series. In other words, it does not detect statistical anomalies but rather time periods during which the time series appears to take on certain qualitative characteristics, without being too sensitive to a particular functional form. We analyzed two example sociotechnical time series, the rank of the word “bling” on Twitter (for reasons we will discuss presently) and the price time series of Bitcoin (symbol BTC) [28], the most actively used cryptocurrency [29], as well as one null model, a pure random walk. For each time series, we computed the shock indicator function using two kernels with different functional forms (one given by the function of Eq. (10), and one of identical form but constructed by setting \(\mathcal{K}^{(S)}(\tau |W,\theta )\) to the function given in Eq. (1)), and evaluated each kernel over a wide range of its parameter θ. We also varied the maximum window size \(W_{\max }\) from 100 to 1000 to explore the sensitivity of the shock indicator function to this parameter. We display the results of this comparative analysis in Fig. 6. For each time series, we plot the \(\ell _{1}\) norm of the shock indicator function for each \((\theta , W_{\max })\) combination. We find that, as stated earlier in this section, the shock indicator function is relatively insensitive to both functional parameterization and the value of θ; for any fixed \(W_{\max }\), the \(\ell _{1}\) norm of the shock indicator function barely changes regardless of the value of θ or the choice of \(\mathcal{K}^{(\cdot )}\). However, the maximum window size does have a notable effect on the magnitude of the shock indicator function; higher values of \(W_{\max }\) are associated with larger magnitudes.
This is a reasonable finding, since a higher maximum W means that the DST is able to capture shock-like behavior that occurs over longer timespans and hence may have values of higher magnitude over longer periods than for a comparatively lower maximum W.

That the shock indicator function is a relative quantity is both beneficial and problematic. The utility of this feature is that the dynamic behavior of time series derived from systems of widely varying time and length scales can be directly compared; while the rank of a word on Twitter and, for example, the volume of trades in an equity security are entirely different phenomena measured in different units, their shock indicator functions are unitless and share similar properties. On the other hand, the shock indicator function carries with it no notion of dynamic range. Two time series \(x_{t}\) and \(y_{t}\) could have identical shock indicator functions but spans differing by many orders of magnitude, i.e., \(\operatorname{diam} x_{t} \equiv \max_{t} x _{t} - \min_{t} x_{t} \gg \operatorname{diam} y_{t}\). (The diameter of a time series over an interval I is simply the dynamic range of the time series over that interval.) We can directly compare time series inclusive of their dynamic range by computing a weighted version of the shock indicator function, \(\mathrm{C}_{\mathcal{K}}(t) \Delta x(t)\), which we term the weighted shock indicator function (WSIF). A simple choice of weight is

$$ \Delta x(t) = \operatorname{diam}_{[t_{b}, t_{e}]} x = \max_{t_{b} \leq t' \leq t_{e}} x_{t'} - \min_{t_{b} \leq t' \leq t_{e}} x_{t'}, \qquad t \in [t_{b}, t_{e}], $$

where \(t_{b}\) and \(t_{e}\) are the beginning and end times of a particular window. We use this definition for the remainder of our paper, but one could easily imagine using other weighting functions, e.g., maximum percent change (perhaps applicable for time series hypothesized to increment geometrically instead of arithmetically).
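Given the windows, the weighted shock indicator function follows directly. In this sketch (hypothetical helper `weighted_indicator`), each time in a window \([t_{b}, t_{e}]\) receives that window's diameter as its weight, and times outside every window receive weight zero; the zero weight outside windows is our assumption.

```python
import numpy as np


def weighted_indicator(x, c_norm, windows):
    """Weighted shock indicator C(t) * dx(t) using the dynamic-range weight.

    Inside each window [t_b, t_e] (inclusive), dx(t) is the diameter
    max x - min x over that window; outside all windows dx(t) = 0.
    """
    x = np.asarray(x, dtype=float)
    dx = np.zeros_like(x)
    for t_b, t_e in windows:
        seg = x[t_b:t_e + 1]
        dx[t_b:t_e + 1] = seg.max() - seg.min()
    return np.asarray(c_norm, dtype=float) * dx


x = np.array([0.0, 1.0, 5.0, 2.0, 2.0, 3.0])
c = np.array([0.0, 0.5, 1.0, 0.5, 0.0, 0.2])
print(weighted_indicator(x, c, [(1, 3)]))  # window diameter is 5 - 1 = 4
```

Two series with identical normalized indicators but different dynamic ranges now receive different weights, which is the purpose of the WSIF.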

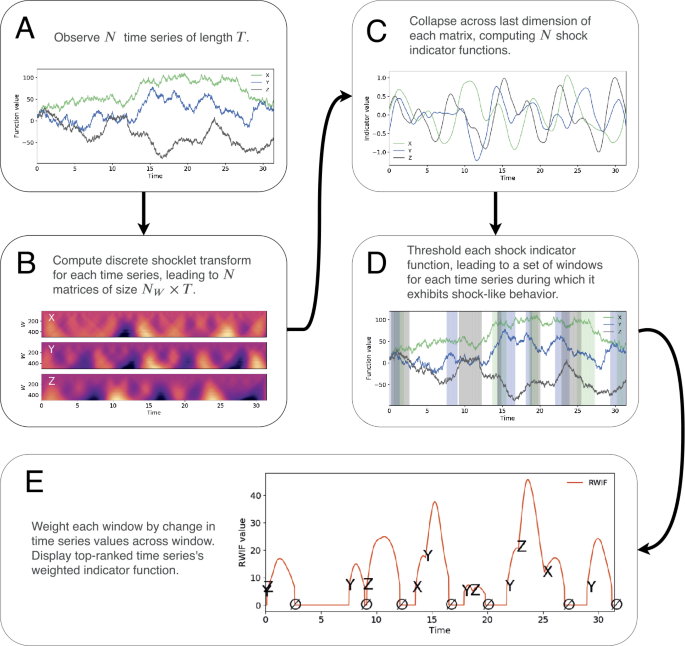

These final weighted shock indicator functions are the ultimate output of the Shocklet Transform And Ranking (STAR) algorithm. The weighting corresponds to the actual magnitude of the dynamics and constitutes the “ranking” portion of the algorithm, while the weighted indicator will be substantially larger than zero only if there existed intervals of time during which the time series exhibited shock-like behavior as indicated in Eq. (15). We present a conceptual overview of the STAR algorithm (of which the DST is a core component) in Fig. 7. Though this diagram lacks technical detail, we include it to give a bird’s-eye view of the entire algorithm and to help orient the reader on the conceptual process underpinning it.

STAR. The Shocklet Transform And Ranking (STAR) algorithm combines the discrete shocklet transform (DST) with a series of transformations that yield intermediate results, such as the cusp indicator function (panel (C) in the figure) and windows during which each univariate time series displays shock-like behavior (panel (D) in the figure). Each of these intermediate results is useful in its own right, as we show in Sect. 2. We display the final output of the STAR algorithm, a univariate indicator that condenses information about which of the time series exhibits the strongest shock-like behavior at each point in time
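For a panel of N series, the final ranking step can be illustrated as ordering the series by their weighted shock indicator values at each time. This is a simplified, hypothetical reading of the “ranking” portion of STAR (the function name `star_rank` is ours), not a transcription of the released implementation.

```python
import numpy as np


def star_rank(wsif):
    """Order a panel of weighted shock indicator functions at each time.

    wsif: array of shape (N_series, T). Returns an (N_series, T) array whose
    column t lists series indices from strongest to weakest shock-like
    behavior at time t; ties keep the original series order.
    """
    wsif = np.asarray(wsif, dtype=float)
    return np.argsort(-wsif, axis=0, kind="stable")


w = np.array([[0.0, 3.0, 1.0],    # series 0
              [2.0, 1.0, 1.0]])   # series 1
print(star_rank(w)[0])  # top-ranked series at each time: [1 0 0]
```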

2.2.2 Algorithmic details: comparison with existing methods

On a coarse scale, there are five nonexclusive categories of time series data mining tasks [30]: similarity search (also termed indexing), clustering, classification, summarization, and anomaly detection. The STAR algorithm is a qualitative, shape-based, timescale-independent similarity search algorithm. As we have shown in the previous section, the discrete shocklet transform (a core part of the overarching STAR algorithm) is qualitative, meaning that it does not depend too strongly on the values of functional parameters or even on the functions used in the cross-correlation operation themselves, as long as the functions share the same qualitative dynamics (e.g., increasing rates of increase followed by decreasing rates of decrease for cusp-like dynamics); hence, it is primarily shape-based rather than reliant on the quantitative definition of a particular functional form. STAR is timescale-independent because it detects shock-like dynamics over a wide range of timescales, limited only by the maximum window size for which it is computed. Finally, of the five categories listed at the beginning of this section, similarity search is the best-fitting label for STAR: it is designed to search sociotechnical time series for dynamics that are similar to the shock kernel, albeit similar in a qualitative sense and over any arbitrary timescale rather than similar in numerical value and characteristic timescale.
However, it could also be considered a type of qualitative, shape-based anomaly detection algorithm, because we are searching for behavior that is, in some sense, anomalous compared to the usual baseline behavior of many time series (though see the discussion at the beginning of the anomaly detection subsection near the end of this section: STAR is an algorithm that can detect defined anomalous behavior, not one that detects arbitrary statistical anomalies).

We are unaware of any existing algorithm that satisfies all four of these criteria, and we believe that STAR represents an entirely new class of algorithms for sociotechnical time series analysis. Nonetheless, we now provide a detailed comparison of the DST with other algorithms that solve related problems, and in Sect. 2.1 provide an in-depth quantitative comparison with another nonparametric algorithm (Twitter’s anomaly detection algorithm) that one could attempt to use to extract shock-like dynamics from sociotechnical time series.

Similarity search—here the objective is to find time series that minimize some distance (dissimilarity) criterion between candidate time series and a given reference time series. Algorithms to solve this problem include nearest-neighbor methods (e.g., k-nearest neighbors [31] or locality-sensitive hashing-based methods [32, 33]); the discrete Fourier and wavelet transforms [5, 34–36]; and bit-, string-, and matrix-based representations [30, 37–39]. With suitable modification, these algorithms can also be used to solve time series clustering problems. Generic dimensionality-reduction techniques, such as singular value decomposition/principal components analysis [40–42], can also be used for similarity search by searching through a dataset of lower dimension. Each of these classes of algorithms differs substantially in scope from the discrete shocklet transform. Chief among the differences is their focus on the entire time series. While the discrete shocklet transform implicitly searches the time series for similarity with the kernel function at all (user-defined) relevant timescales and returns qualitatively matching behavior at the corresponding timescale, most of the algorithms considered above do no such thing: the user must break the time series into sliding windows of length τ and execute the algorithm on each window, and, if timescale independence is desired, must then vary τ over a desired range. An exception is Mueen’s subsequence similarity search algorithm (MSS) [43], which computes sliding dot products (cross-correlations) between a long time series of length T and a shorter kernel of length M before defining a Euclidean distance objective for the similarity search task. When this sliding dot product is computed using the fast Fourier transform, the computational complexity of this task is \(\mathcal{O}(T \log T)\).
This computational step is also at the core of the discrete shocklet transform, but there it is performed for multiple kernel function arrays (more precisely, for the kernel function resampled at multiple user-defined timescales). Unlike the discrete shocklet transform, MSS does not subsequently compute an indicator function and does not have the self-normalizing property. The matrix profile algorithm [39] computes an indicator function of sorts (the “matrix profile”) but is not timescale-independent and is quantitative in nature; it does not search for a qualitative shape match as the discrete shocklet transform does. We are unaware of a similarity search algorithm aside from STAR that is both qualitative in nature and timescale-independent.
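The shared computational core, sliding dot products computed via the FFT in \(\mathcal{O}(T \log T)\) time, can be sketched in a few lines of NumPy. This shows only the sliding-dot-product step; MSS then converts these products into Euclidean distances, which we omit. The function name `sliding_dot_product` is ours.

```python
import numpy as np


def sliding_dot_product(x, q):
    """Dot products of query q (length M) with every length-M window of x.

    Entry i equals np.dot(x[i:i + M], q). Using an FFT of length at least
    T + M - 1 makes the cost O(T log T) instead of O(T M).
    """
    x = np.asarray(x, dtype=float)
    q = np.asarray(q, dtype=float)
    T, M = x.size, q.size
    n = 1 << (T + M - 1).bit_length()     # power-of-two FFT length >= T + M - 1
    # Linear convolution of x with the reversed query gives the cross-correlation.
    prod = np.fft.irfft(np.fft.rfft(x, n) * np.fft.rfft(q[::-1], n), n)
    return prod[M - 1:T]                  # the T - M + 1 fully overlapping lags


rng = np.random.default_rng(0)
x = rng.normal(size=300)
q = rng.normal(size=20)
fast = sliding_dot_product(x, q)          # 281 values, one per window start
```

The DST repeats this step once per resampled kernel, i.e., once per window size W.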

Clustering—given a set of time series, the objective is to partition them into groups, or clusters, that are more homogeneous within each cluster than between clusters. Viewing a collection of N time series of length T as a set of vectors in \(\mathbb{R}^{T}\), any clustering method that can be effectively used on high-dimensional data has potential applicability to clustering time series. Some of these general clustering methods include k-means and k-medians algorithms [44–46], hierarchical methods [47–49], and density-based methods [47, 50–52]. There are also methods designed specifically for clustering time series data, such as error-in-measurement models [53], hidden Markov models [54], simulated annealing-based methods [55], and methods designed for time series that are well fit by particular classes of parametric models [56–59]. Although the discrete shocklet transform component of the STAR algorithm could be coerced into performing a clustering task by using different kernel functions and elements of the reflection group, clustering is not the intended purpose of the discrete shocklet transform or of STAR more generally. In addition, none of the clustering methods mentioned replicate the results of the STAR algorithm. These clustering methods uncover groups of time series that exhibit similar behavior over their entire domain; application of clustering methods to time series subsequences leads to meaningless results [60]. Clustering algorithms are also shape-independent in the sense that they cluster data into groups that share similar features, but do not search for specific known features or shapes in the data. In contrast, when using the STAR algorithm we have already specified a shape (for example, the shock shape demonstrated above) and are searching the data across timescales for occurrences of that shape.
The STAR algorithm also does not require multiple time series in order to function effectively, differing from any clustering algorithm in this respect; a clustering algorithm applied to a single data point (\(N=1\)) trivially returns a single cluster containing that point. The STAR algorithm operates identically on one or many time series, as it treats each time series independently.

Classification—classification is the canonical supervised statistical learning problem in which data \(x_{i}\) is observed along with a discrete label \(y_{i}\) that is taken to be a function of the data, \(y_{i} = f(x_{i}) + \varepsilon \); the goal is to recover an approximation to f that precisely and accurately reproduces the labels for new data [61]. This is the category of time series data mining algorithms that least corresponds with the STAR algorithm. The STAR algorithm is unsupervised—it does not require training examples (“correct labels”) in order to find subsequences that qualitatively match the desired shape. As above, the STAR algorithm also does not require multiple time series to function well, while (non-Bayesian) classification algorithms rely on multiple data points in order to learn an approximation to f.Footnote 2

Summarization—since time series can be arbitrarily large and composed of many intricately-related features, it may be desirable to have a summary of their behavior that encompasses the time series’s “most interesting” features. These summaries can be numerical, graphical, or linguistic in nature. Underlying methodologies for time series summary tasks include wavelet-based approaches [62, 63], genetic algorithms [64, 65], fuzzy logic and systems [66–68], and statistical methods [69]. Intermediate steps of the STAR algorithm can certainly be seen as a time series summarization mechanism (for example, the matrix computed by the DShT or the weighted shock indicator functions used in determining the rank relevance of individual time series at different points in time). However, the STAR algorithm was not designed for time series summarization and should not be used for this task, as it will be outperformed by essentially any algorithm that was actually designed for summarization. Any “summary” derived from the STAR algorithm will be useful only for summarizing segments of the time series whose behavior matches the kernel shape, or for distinguishing segments of the time series that have a shape similar to the kernel from those that do not.

Anomaly detection—if a “usual” model can be defined for the system under study, an anomaly detection algorithm is a method that finds deviations from this usual behavior. Before we briefly review time series anomaly detection algorithms and compare them with the STAR algorithm, we distinguish between two subtly different concepts: this data mining notion of anomaly detection, and the physical or social scientific notion of anomalous behavior. In the first sense, any deviation from the “ordinary” model is termed an anomaly and marked as such. The ordinary model need not be a parametric model to which the data is compared; for example, it may be implicitly defined as the behavior that the data exhibits most of the time [70]. In the physical and social sciences, on the other hand, it may be observed that, under a particular set of laboratory or observational conditions, a material, state vector, or collection of agents exhibits phenomena that are anomalous when compared to a specific reference situation, even if this behavior is “ordinary” for the conditions under which the phenomena are observed.
Examples of such anomalous behavior in physics and economics include the following. First, polychromatic waves can exhibit spectral behavior that is very unusual compared to the spectrum of monochromatic waves, even though this behavior is typical for polychromatic waves near points where the wave’s phase is singular [71]. Second, there is the entire concept of anomalous diffusion: diffusive processes whose mean square displacement scales as \(\langle r^{2}(t)\rangle \sim t^{\alpha }\) are said to diffuse anomalously if \(\alpha \not \approx 1\), since \(\alpha = 1\) is the scaling of the Wiener process’s mean square displacement [72, 73]. Yet anomalous diffusion is the rule rather than the exception in intracellular and climate dynamics, as well as in financial market fluctuations. Third, there is behavior that deviates substantially from the “rational expectations” of non-cooperative game theory, even though such deviations are regularly observed among human game players [74, 75]. The distinction between algorithms designed for anomaly detection and algorithms or statistical procedures that test for the existence of anomalous behavior, as defined here, is thus subtle but significant. The DST and STAR algorithm fall into the latter category. We designed the STAR algorithm to extract windows of anomalous behavior, defined by comparison with a particular null qualitative time series model (absence of clear shock-like behavior). We did not design it to perform anomaly detection writ large, that is, to flag arbitrary samples or dynamics in a time series that do not in some way comport with the statistics of the entire time series.
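As a worked illustration of the scaling criterion above, the following sketch estimates the mean square displacement exponent α of a one-dimensional trajectory. It uses a log-log least-squares fit of the time-averaged mean square displacement. The estimator, the lag range, and the helper name `msd_exponent` are assumptions made for illustration; the works cited in [72, 73] may use other estimators.

```python
import numpy as np


def msd_exponent(path, max_lag=50):
    """Estimate alpha in <r^2(t)> ~ t^alpha for a 1-D trajectory (hypothetical helper).

    Fits a straight line to log(time-averaged MSD) versus log(lag) over lags 1..max_lag.
    """
    x = np.asarray(path, dtype=float)
    lags = np.arange(1, max_lag + 1)
    msd = np.array([np.mean((x[lag:] - x[:-lag]) ** 2) for lag in lags])
    alpha, _intercept = np.polyfit(np.log(lags), np.log(msd), 1)
    return float(alpha)


rng = np.random.default_rng(0)
random_walk = np.cumsum(rng.standard_normal(100_000))  # Brownian-like: alpha near 1
ballistic = np.arange(1_000, dtype=float)              # constant velocity: alpha = 2
print(msd_exponent(random_walk), msd_exponent(ballistic))
```

An estimate well away from 1, such as the ballistic value of 2, is what "anomalous" means in this physical sense.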

With these caveats stated, there is nonetheless some overlap between anomaly detection algorithms and algorithms that search for physically defined anomalous behavior in time series. In fact, as we show in Sect. 3.1, there is significant convergence between windows of shock-like behavior indicated by STAR and windows of anomalous behavior indicated by Twitter’s anomaly detection algorithm when the underlying time series exhibits relatively low variance. Statistical anomaly detection algorithms typically propose a semi-parametric model or nonparametric test and confront data with the model or test. If certain datapoints are very unlikely under the model, or exceed theoretical boundaries derived in constructing the test, then these datapoints are said to be anomalous. Examples of algorithms that operate in this way include: Twitter’s anomaly detection algorithm (ADV), which relies on the seasonal-hybrid ESD test, an extension of the generalized ESD test [76, 77]; the EGADS algorithm, which relies on explicit time series models and outlier tests [78]; time-series model and graph methodologies [79, 80]; and probabilistic methods [81, 82]. Each of these methods is strictly focused on solving the first problem that we outlined at the beginning of this subsection: finding points in one or more time series at which the series exhibits behavior that deviates substantially from the “usual” or assumed behavior for time series of a certain class. As we outlined, this goal differs substantially from the one for which we designed STAR. STAR searches for segments of time series, which may vary widely in length, during which the time series exhibits behavior that is qualitatively similar to underlying deterministic dynamics (shock-like behavior) that we believe are anomalous when compared to non-sociotechnical time series.
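ADV builds on the generalized extreme Studentized deviate (ESD) test, so a minimal sketch of Rosner's generalized ESD test may help show how such tests flag individual points. This is an illustration only. ADV's seasonal-hybrid variant additionally removes a seasonal component and uses robust statistics (the median and median absolute deviation), none of which is reproduced here. The function name `generalized_esd` is hypothetical.

```python
import numpy as np
from scipy import stats


def generalized_esd(x, max_outliers, alpha=0.05):
    """Rosner's generalized ESD test; returns sorted indices of detected outliers.

    Assumes approximately normal data apart from the outliers and max_outliers < len(x) - 2.
    """
    values = np.asarray(x, dtype=float)
    positions = np.arange(values.size)
    n = values.size
    candidates = []
    n_outliers = 0
    for i in range(1, max_outliers + 1):
        deviations = np.abs(values - values.mean())
        j = int(np.argmax(deviations))
        r_i = deviations[j] / values.std(ddof=1)                 # test statistic R_i
        p = 1.0 - alpha / (2.0 * (n - i + 1))
        t = stats.t.ppf(p, n - i - 1)
        lambda_i = (n - i) * t / np.sqrt((n - i - 1 + t**2) * (n - i + 1))  # critical value
        candidates.append(int(positions[j]))
        if r_i > lambda_i:
            n_outliers = i    # largest i with R_i > lambda_i sets the outlier count
        values = np.delete(values, j)
        positions = np.delete(positions, j)
    return sorted(candidates[:n_outliers])


series = np.sin(np.linspace(0.0, 10.0, 200))
series[50] = 10.0                                 # inject a single spike
print(generalized_esd(series, max_outliers=5))    # [50]
```

The test flags individual points relative to the whole sample. It has no notion of a multi-sample shape, which is the distinction drawn in the paragraph above.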

3 Empirical results

3.1 Comparison with Twitter’s anomaly detection algorithm

Through the literature review in Sect. 1.2 we have demonstrated that, to our knowledge, there exists no algorithm that solves the same problem for which STAR was designed—to provide a qualitative, shape-based, timescale-independent measure of similarity between multivariate time series and a hypothesized shape generated by mechanistic dynamics. However, there are existing algorithms designed for nonparametric anomaly detection that could be used to alert to the presence of shock-like behavior in sociotechnical time series, which is the application for which we originally designed STAR. One leading example of such an algorithm is Twitter’s Anomaly Detection Vector (ADV) algorithm.Footnote 3 This algorithm uses an underlying statistical test, seasonal-hybrid ESD, to test for the presence of outliers in periodic and nonstationary time series [76, 77]. We perform a quantitative and qualitative comparison between STAR and ADV for two purposes. The first is to compare their effectiveness at the task for which we designed STAR, namely determining qualitative similarity between shock-like shapes over a wide range of timescales. The second is to contrast the signals picked up by each algorithm, which, as we show, differ substantially. Before presenting results of this analysis, we note that this comparison is not entirely fair. Though ADV is a state-of-the-art anomaly detection algorithm, it was not designed for the task for which we designed STAR, so it is not reasonable to expect ADV to perform as well as STAR on this task. In an attempt to ameliorate this problem, we have chosen a quantitative benchmark for which our a priori beliefs did not favor the efficacy of either algorithm.

As both STAR and ADV are unsupervised algorithms, we compare their quantitative performance by assessing their utility in generating features for a supervised learning problem. Since the macro-economy is a canonical example of a sociotechnical system, we consider the problem of predicting the probability of a U.S. economic recession using only a minimal set of indicators from financial market data. Models for predicting economic recessions variously use only real economic indicators [83–85], only financial market indicators [86, 87], or a combination of real and financial economic indicators [88, 89]. We take an approach that is both simple and relatively granular, focusing on the ability of statistics of individual equity securities to jointly model U.S. economic recession probability. Our sample consists of the equities that were in the Dow Jones Industrial Average between 1999-07-01 and 2017-12-31 (a total of \(K=32\) securities). For each, we used the equity’s volume traded time series (number of shares transacted), sampled at daily resolution for a total of \(T = 6759\) observations per security. On each of these series we computed the DST (outputting the shock indicator function), the STAR algorithm (outputting windows of shock-like behavior), and the ADV routine. We then fit linear models of the form
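
\[ \log \frac{p_{t}}{1 - p_{t}} = \sum_{k=0}^{K} X_{tk}\,\beta_{k} + \varepsilon_{t}, \qquad t = 1, \ldots, T, \qquad (17) \]
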
where \(p_{t}\) is the recession probability on day t as given by the U.S. Federal Reserve (hence p is the length-T vector of recession probabilities).Footnote 4 When we fit the model of Eq. (17) using ADV or STAR to generate features, the design matrix X is a binary matrix of shape \(T \times (K + 1)\). Its entry \(X_{tk}\) equals one if the algorithm indicated an anomaly (ADV) or shock-like behavior (STAR) in security k at time t, and zero otherwise. The +1 in the dimensionality of the matrix corresponds to the prepended column of ones needed to fit an intercept in the regression. When we fit the model using the shock indicator function generated by the DST, X is instead the matrix whose column k equals the shock indicator function of security k.
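A minimal sketch of this regression follows, with the log odds of the recession probability as the response. It assumes the probabilities lie strictly between 0 and 1 and that the features are already arranged as a \(T \times K\) array: binary ADV or STAR indicators, or DST shock indicator values. The helper name and toy data are hypothetical. \(R^{2}\) is the usual proportion of variance explained. The log-likelihood is the Gaussian one evaluated at the maximum likelihood residual variance RSS/T, which may differ in detail from the computation behind the reported values.

```python
import numpy as np


def fit_log_odds_ols(p, features):
    """OLS regression of logit(p_t) on a (T, K) feature matrix plus an intercept.

    Returns (beta, r_squared, log_likelihood). The log-likelihood assumes i.i.d.
    Gaussian residuals with the maximum likelihood variance RSS / T.
    """
    p = np.asarray(p, dtype=float)
    y = np.log(p / (1.0 - p))                           # log odds; requires 0 < p < 1
    X = np.column_stack([np.ones(y.size), features])    # prepend the column of ones
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    rss = float(resid @ resid)
    tss = float(((y - y.mean()) ** 2).sum())
    n_obs = y.size
    sigma2 = rss / n_obs
    log_lik = -0.5 * n_obs * (np.log(2.0 * np.pi * sigma2) + 1.0)
    return beta, 1.0 - rss / tss, log_lik


# Toy data: one binary feature, log odds = -1 + 2 * feature + small perturbation.
feature = np.array([[0], [1], [0], [1], [1]], dtype=float)
log_odds = -1.0 + 2.0 * feature[:, 0] + np.array([0.1, -0.1, -0.1, 0.1, 0.0])
beta, r2, ll = fit_log_odds_ols(1.0 / (1.0 + np.exp(-log_odds)), feature)
print(np.round(beta, 6), round(r2, 4))
```

With a single binary regressor, the OLS coefficients reduce to group means: the intercept is the mean log odds when the feature is 0, and the slope is the difference between group means.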

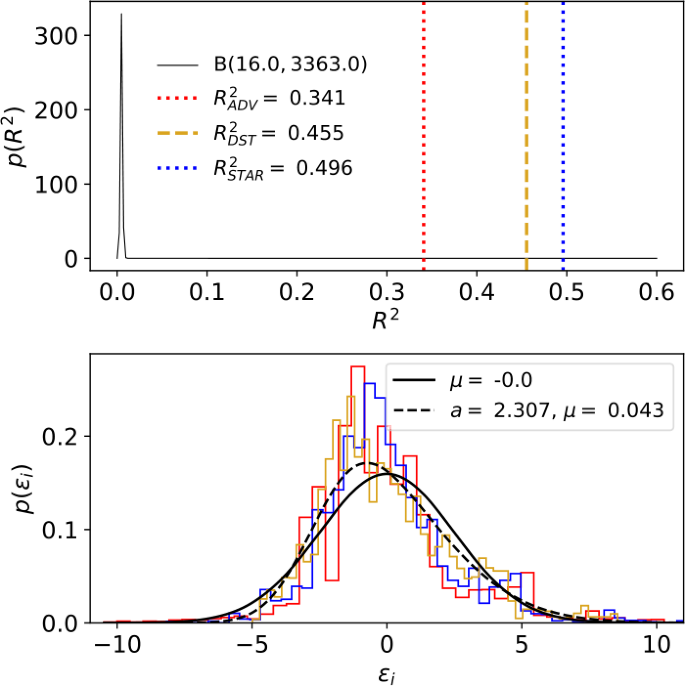

We evaluate the goodness of fit of these linear models using the proportion of variance explained (\(R^{2}\)); these results are summarized graphically in Fig. 8. The linear model using ADV-indicated anomalies as features had \(R^{2}_{ \mathrm{ADV}} = 0.341\), while the model using the shock indicator function as columns of the design matrix had \(R^{2}_{\mathrm{DST}} = 0.455\) and the model using STAR-indicated shocks as features had \(R^{2}_{\mathrm{STAR}}= 0.496\). This ranking was unchanged when we used model log-likelihood ℓ as the performance metric instead of \(R^{2}\), with ADV, DST, and STAR respectively exhibiting \(\ell _{\mathrm{ADV}} = -16{,}278\), \(\ell _{\mathrm{DST}} = -15{,}633\), and \(\ell _{\mathrm{STAR}} = -15{,}372\). Each linear model exhibited a distribution of residuals \(\varepsilon _{t}\) that did not drastically violate the zero-mean and distributional-shape assumptions of least-squares regression. A maximum likelihood fit of a normal probability density to the empirical error probability distribution \(p( \varepsilon _{t})\) gave mean and variance as \(\mu = 0\) to within numerical precision and \(\sigma ^{2} \approx 6.248\). A maximum likelihood fit of a skew-normal probability density [90] to the same distribution gave mean, variance, and skew as \(\mu \approx 0.043\), \(\sigma ^{2} \approx 6.025\), and \(a \approx 2.307\). Taken in the aggregate, these results suggest that features generated by the DST and STAR algorithms are more useful than features derived from the ADV method for modeling whether time periods belong to U.S. recessions.
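The residual diagnostics above compare a normal and a skew-normal maximum likelihood fit. A sketch with SciPy follows. The helper name and the synthetic residuals are hypothetical. The `loc` and `scale` values returned by `scipy.stats.skewnorm.fit` are the distribution's location and scale parameters, not its mean and standard deviation.

```python
import numpy as np
from scipy import stats


def compare_residual_fits(residuals):
    """Maximum likelihood normal and skew-normal fits to residuals, with log-likelihoods."""
    r = np.asarray(residuals, dtype=float)
    mu, sigma = stats.norm.fit(r)              # MLE: sample mean and (ddof=0) std
    a, loc, scale = stats.skewnorm.fit(r)      # shape, location, scale
    return {
        "normal": {"mean": mu, "variance": sigma**2,
                   "loglik": float(stats.norm.logpdf(r, mu, sigma).sum())},
        "skewnorm": {"shape": a, "loc": loc, "scale_sq": scale**2,
                     "loglik": float(stats.skewnorm.logpdf(r, a, loc, scale).sum())},
    }


rng = np.random.default_rng(0)
fake_residuals = stats.skewnorm.rvs(4.0, size=5000, random_state=rng)  # synthetic, skewed
fits = compare_residual_fits(fake_residuals)
print(fits["normal"]["loglik"], fits["skewnorm"]["loglik"])
```

The normal family is the skew-normal family with shape zero. On clearly skewed residuals, the skew-normal fit should therefore attain a higher log-likelihood.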

Analytical comparison of U.S. economic recession models. We modeled the log odds ratio of a U.S. economic recession using three ordinary least squares regression models. Each model used one of the following as elements of the design matrix: the ADV method’s anomaly indicators, the shock indicator function resulting from the discrete shocklet transform, or the windows of shock-like behavior output by the STAR algorithm. The models that used features constructed by the DST or STAR outperformed the model that used features constructed by ADV, as measured by both \(R^{2}\) (displayed in the top panel) and model log-likelihood. The black curve in the top panel displays the null distribution of \(R^{2}\) under the assumption that no regressor (column of the design matrix) actually belongs to the true linear model of the data [91, 92]. The lower panel displays the empirical probability distributions of the model residuals \(\varepsilon _{t}\).
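One standard result gives such a null curve. Assume i.i.d. Gaussian errors and all slope coefficients equal to zero. Then the \(R^{2}\) of an OLS fit with an intercept and K regressors on T observations follows a \(\mathrm{Beta}(K/2, (T-K-1)/2)\) distribution. Whether the figure uses exactly this form is an assumption. The sketch below evaluates it for \(K = 32\) and \(T = 6759\); the helper name is hypothetical.

```python
from scipy import stats


def null_r2_distribution(n_obs, n_regressors):
    """Null distribution of R^2 for OLS with an intercept plus n_regressors slopes,
    assuming i.i.d. Gaussian errors and all slope coefficients equal to zero."""
    return stats.beta(n_regressors / 2.0, (n_obs - n_regressors - 1) / 2.0)


null = null_r2_distribution(n_obs=6759, n_regressors=32)
print(null.mean())       # K / (T - 1), roughly 0.0047
print(null.ppf(0.999))   # 99.9th percentile of R^2 under the null
print(null.sf(0.341))    # probability of R^2 >= 0.341 under the null
```

With this many observations, the null mass is concentrated very close to zero. The reported \(R^{2}\) values lie far in its upper tail.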

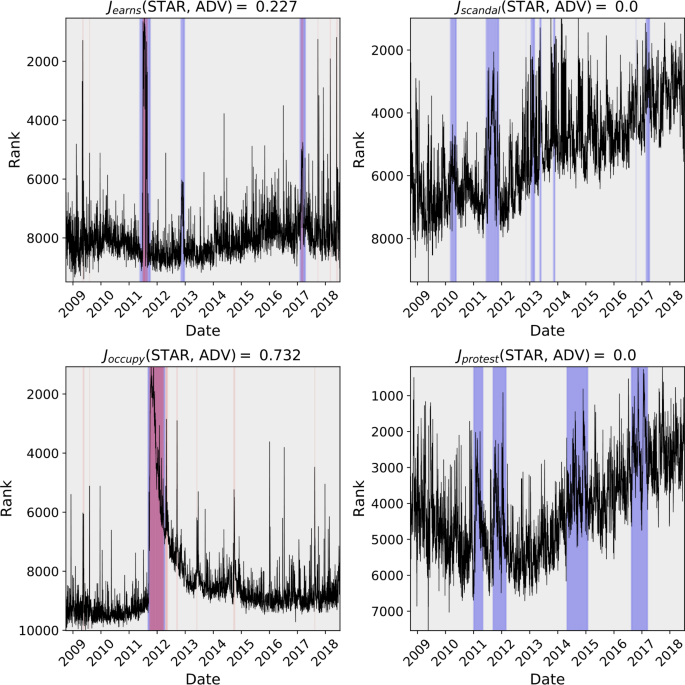

As a further comparison of the STAR algorithm and ADV, we generated anomaly windows (in the case of ADV) and windows of shock-like behavior (in the case of STAR) for the usage rank time series of each of the 10,222 words in the LabMT dataset. For each word w, we computed the Jaccard similarity index (also known as the intersection over union) between the set of STAR windows \(\{I_{i}^{\mathrm{STAR}}(w)\}_{i}\) and the set of ADV windows \(\{I_{i}^{\mathrm{ADV}}(w)\}_{i}\),
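
\[ J_{w} = \frac{\bigl| \bigl(\bigcup_{i} I_{i}^{\mathrm{STAR}}(w)\bigr) \cap \bigl(\bigcup_{i} I_{i}^{\mathrm{ADV}}(w)\bigr) \bigr|}{\bigl| \bigl(\bigcup_{i} I_{i}^{\mathrm{STAR}}(w)\bigr) \cup \bigl(\bigcup_{i} I_{i}^{\mathrm{ADV}}(w)\bigr) \bigr|}. \]
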
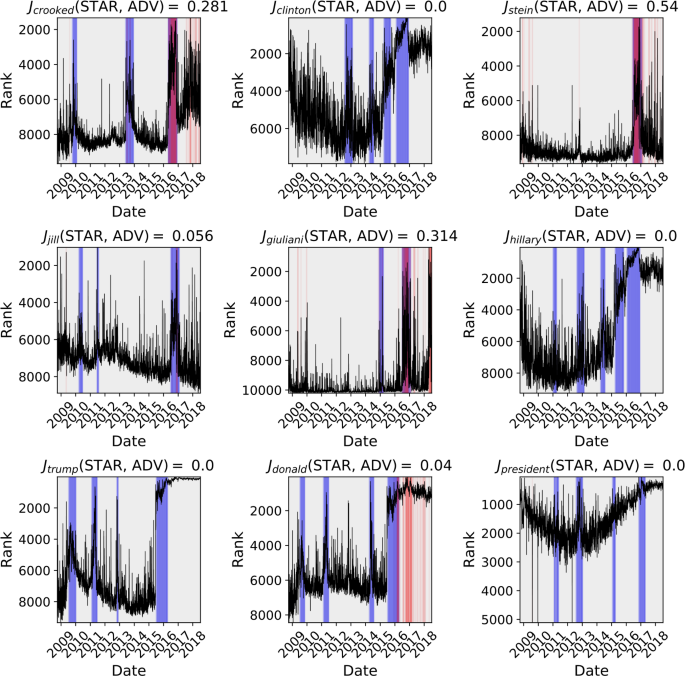

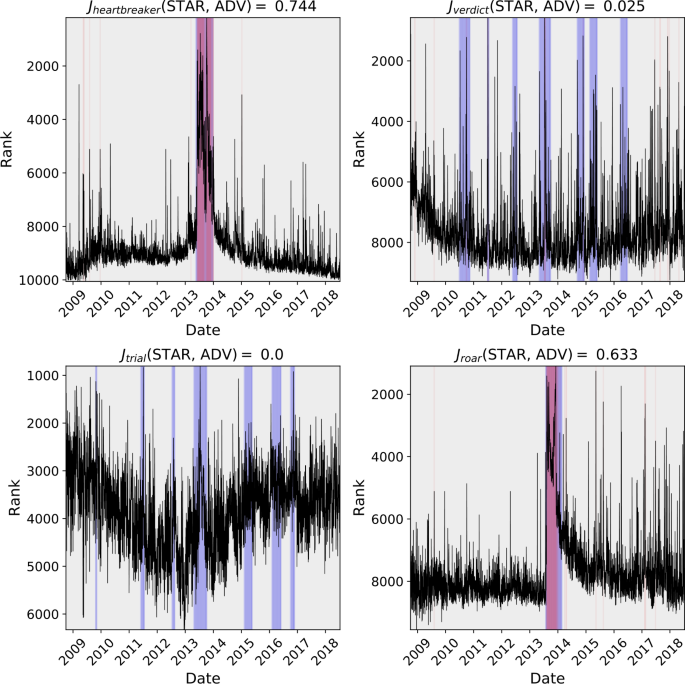

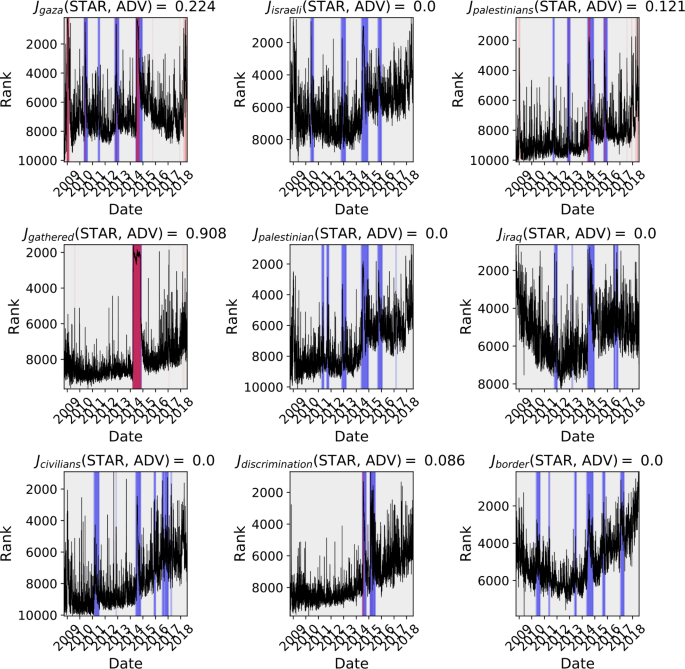

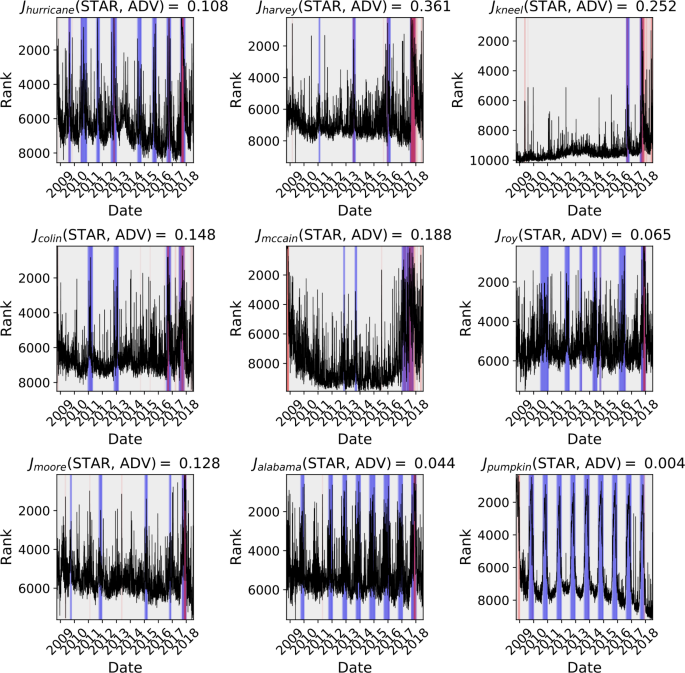

We display the word time series and ADV and STAR windows for a selection of words pertaining to the 2016 U.S. presidential election in Fig. 9. (These words display shock-like behavior in a time interval surrounding the election, as we demonstrate in the next section, hence our selection of them as examples here.)

Comparison of STAR and Twitter’s Anomaly Detection Vector (ADV) algorithm used for detecting phenomena in Twitter 1-gram time series. The Jaccard similarity coefficient is presented for each 1-gram, and the regions where events are detected are shaded for the respective algorithm. Blue-shaded windows correspond with STAR windows of shock-like behavior, while red-shaded windows correspond with ADV windows of anomalous behavior (and hence purple windows correspond to overlap between the two). In general, ADV is most effective at detecting brief spikes or strong shock-like signals, whereas STAR is more sensitive to longer-term shocks and shocks that occur in the presence of surrounding noisy or nonstationary dynamics. ADV does not treat strong periodic fluctuations as anomalous by design; though this may or may not be a desirable feature of a similarity search or anomaly detection algorithm, it is not a flaw in ADV but simply another differentiator between ADV and STAR.

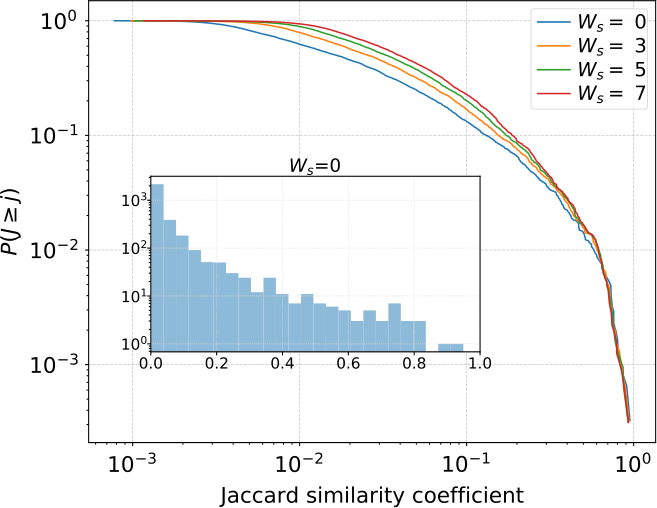

We display the distribution of all Jaccard similarity coefficients in Fig. 10. Most words have relatively little overlap between anomaly windows returned by ADV and windows of shock-like dynamics returned by STAR, but there are notable exceptions. In particular, a review of the figures contained in the online index suggests that ADV’s and STAR’s windows overlap most when the shock-like dynamics are particularly strong and surrounded by a time series with relatively low variance; they agree the most when hypothesized underlying deterministic mechanics are strongest and the effects of noise are lowest. The pronounced spikes in the words “crooked” and “stein” in Fig. 9 are an example of this phenomenon. However, when the time series has high variance or exhibits strong nonstationarity, ADV often does not indicate that there are windows of anomalous behavior while STAR does indicate the presence of shock-like dynamics; the panels of the words “trump”, “jill”, and “hillary” in Fig. 9 demonstrate these behaviors.
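The per-word overlap measure can be sketched as follows. The sketch assumes each algorithm's output is a list of inclusive (start, end) index windows. The helper names are hypothetical. The tolerance \(W_{s}\) used in Fig. 10 is not implemented, so this corresponds to \(W_{s}=0\). Returning 0 when neither algorithm reports a window is a convention chosen here.

```python
def windows_to_times(windows):
    """Expand inclusive (start, end) index windows into a set of time indices."""
    times = set()
    for start, end in windows:
        times.update(range(start, end + 1))
    return times


def jaccard_windows(windows_star, windows_adv):
    """Jaccard index (intersection over union) of the time points covered by two window lists."""
    a = windows_to_times(windows_star)
    b = windows_to_times(windows_adv)
    union = a | b
    if not union:
        return 0.0  # convention: no detections by either algorithm counts as zero overlap
    return len(a & b) / len(union)


print(jaccard_windows([(10, 19)], [(15, 24)]))  # 5 shared points / 15 covered points
```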

Jaccard similarity coefficients. Complementary cumulative distribution function (CCDF) of Jaccard similarity coefficients for regions in which Twitter’s ADV and our STAR algorithm detect patterns or anomalies (see Fig. 9). Window sizes are varied to include \(W_{s} \in \{0,3,5,7\}\) (i.e. detections within \(t_{i}\pm W_{s}\) are counted as part of the intersection). Time series with \(J_{\mathrm{word}_{i}}=0\) are omitted from the CCDF. The inset histogram shows the distribution of Jaccard similarity coefficients for \(W_{s}=0\) (i.e. exact matches); \(J=0\) time series are included.

Taken in the aggregate, these results suggest that a state-of-the-art anomaly detection algorithm, such as Twitter’s ADV, and a qualitative, shape-based, timescale-independent similarity search algorithm, such as STAR, do have some overlapping properties but are largely mutually-complementary approaches to identifying and analyzing the behavior of sociotechnical time series. While ADV and STAR both identify strongly shock-like dynamics that occur when the surrounding time series has relatively low variance, their behavior diverges when the time series is strongly nonstationary or has high variance. In this case, ADV is an excellent tool for indicating the presence of strong outliers in the data, while STAR continues to indicate the presence of shock-like dynamics in a manner that is less sensitive to the time series’s stationarity or variance.

3.2 Social narrative extraction

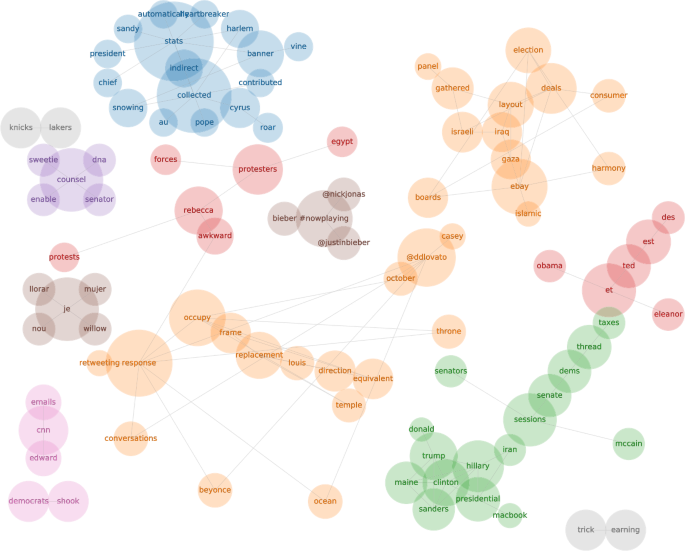

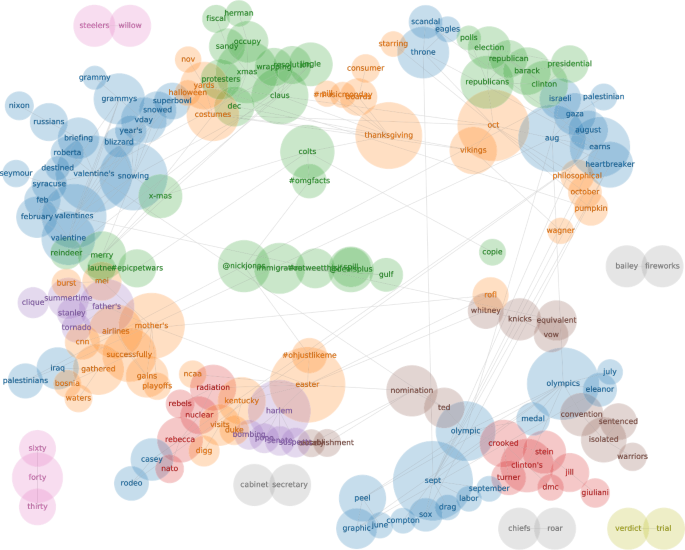

We seek both an understanding of the intertemporal semantic meaning imparted by windows of shock-like behavior indicated by the STAR algorithm and a characterization of the dynamics of the shocks themselves. We first compute the shock indicator and weighted shock indicator functions (WSIFs) for each of the 10,222 labMT words filtered from the gardenhose dataset, described in Sect. 1.1, using a power kernel with \(\theta =3\). At each point in time, words are sorted by the value of their WSIF. The jth highest valued WSIF at each temporal slice, when concatenated across time, defines a new time series. We perform this computation for the top ranked \(k = 20\) words for the entire time under study. We also perform this process using the “spike” kernel of Eq. (4) and display each resulting time series in Fig. 11 (shock kernel) and Fig. 12 (spike kernel). (We term the spike kernel as such because we have \(\frac{{d} \mathcal {K}^{(Sp)}(\tau )}{{d}\tau } = \delta (\tau )\) on the domain \([-W/2, W/2]\), the Dirac delta function; its underlying mechanistic dynamics are completely static except for one point in time during which the system is driven by an ideal impulse function.)
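The leaderboard construction above amounts to sorting a words-by-time matrix within each time column. The sketch below assumes the WSIFs have already been computed and stacked into a NumPy array of shape (number of words, T). The function name `rank_indicator_series` and the toy values are hypothetical.

```python
import numpy as np


def rank_indicator_series(wsif, words, k):
    """Return the top-k ranked series and the word holding rank 1 at each time.

    wsif  : array of shape (n_words, T), one weighted indicator series per word
    words : sequence of n_words labels aligned with the rows of wsif
    k     : number of ranks to keep
    """
    wsif = np.asarray(wsif, dtype=float)
    order = np.argsort(-wsif, axis=0, kind="stable")[:k]  # (k, T) row indices, largest first
    ranked = np.take_along_axis(wsif, order, axis=0)      # (k, T); row j is the (j+1)th-ranked series
    leaders = [words[i] for i in order[0]]                # word at rank 1 for each time step
    return ranked, leaders


toy = np.array([[1.0, 5.0, 2.0],
                [3.0, 1.0, 2.5],
                [2.0, 2.0, 0.0]])
ranked, leaders = rank_indicator_series(toy, ["a", "b", "c"], k=2)
print(ranked)
print(leaders)  # ['b', 'a', 'b']
```

Each row of `ranked` is a rank-ordered series rather than a word's series, so the word behind a given row can change from one time step to the next. That is what the vertical ticks in the leaderboard figures mark.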

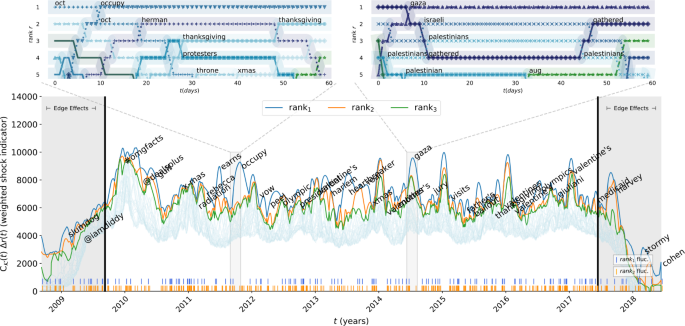

Shock leaderboard. Time series of the ranked and weighted shock indicator function. At each time step t, the weighted shock indicator functions (WSIF) are sorted so that the word with the highest WSIF corresponds to the top time series, the word with the second-highest WSIF corresponds to the second time series, and so on. Vertical ticks along the bottom mark changes in the words occupying ranks 1 and 2 of WSIF values. Top panels present the ranks of WSIF values for words in the top 5 WSIF values in a given time step for the sub-sampled period of 60 days. An interactive version of this graphic is available at the authors’ webpage: http://compstorylab.org/shocklets/ranked_shock_weighted_interactive.html

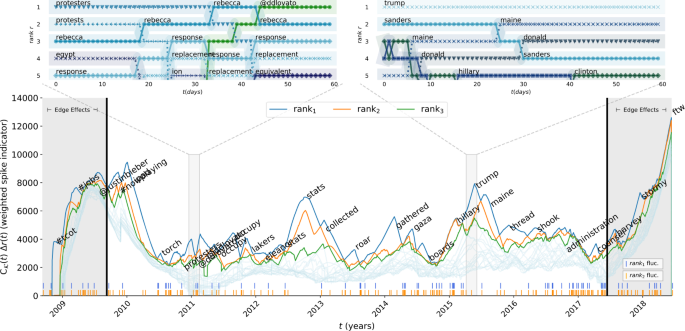

Spike leaderboard. Time series of the ranked and weighted spike indicator function. At each time step t, the weighted spike indicator functions (WSpIF) are sorted so that the word with the highest WSpIF corresponds to the top time series, the word with the second-highest WSpIF corresponds to the second time series, and so on. Vertical ticks along the bottom mark changes in the words occupying ranks 1 and 2 of WSpIF values. Top panels present the ranks of WSpIF values for words in the top 5 WSpIF values in a given time step for the sub-sampled period of 60 days. The top left panel demonstrates the competition for social attention between geopolitical concerns (street protests in Egypt) and popular artists and popular culture influence (Rebecca Black and Demi Lovato). The top right panel displays the language surrounding the 2016 U.S. presidential election immediately after Donald Trump announced his candidacy. An interactive version of this graphic is available at the authors’ webpage: http://compstorylab.org/shocklets/ranked_spike_weighted_interactive.html

The \(j=1\) word time series is annotated with the corresponding word at relative maxima of order 40. (A relative maximum \(x_{s}\) of order k in a time series is a point that satisfies \(x_{s} > x_{t}\) for all \(t \neq s\) such that \(|t - s| \leq k\).) This annotation reveals a dynamic social narrative concerning popular events, social movements, and geopolitical fluctuation over the past near-decade. Interactive versions of these visualizations are available on the authors’ website.Footnote 5 To further illuminate the often-turbulent dynamics of the top j ranked weighted shock indicator functions, we focus on two particular 60-day windows of interest, denoted by shading in the main panels of Figs. 11 and 12. In Fig. 11, we outline a period in late 2011 during which multiple events competed for collective attention:

the 2012 U.S. presidential election (the word “herman”, referring to Herman Cain, a presidential election contender);

Occupy Wall Street protests (“occupy” and “protestors”);

and the U.S. holiday of Thanksgiving (“thanksgiving”).

Each of these competing narratives is reflected in the top-left inset. In the top right inset, we focus on a time period during which the most distinct anomalous dynamics corresponded to the 2014 Gaza conflict with Israel (“gaza”, “israeli”, “palestinian”, “palestinians”, “gathered”). In Fig. 12, we also outline two periods of time: one, in the top left panel, demonstrates the competition for social attention between geopolitical concerns:

street protests in Egypt (“protests”, “protesters”, “egypt”, “response”);

and popular artists and popular culture (“rebecca”, referring to Rebecca Black, a musician, and “@ddlovato”, referring to another musician, Demi Lovato).

In the top right panel we demonstrate that the most prominent dynamics during late 2015 are those of the language surrounding the 2016 U.S. presidential election immediately after Donald Trump announced his candidacy (“trump”, “sanders”, “donald”, “hillary”, “clinton”, “maine”).
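The order-k relative-maximum rule used to annotate the \(j=1\) series can be written directly from its definition. The sketch below is an O(Tk) illustration with a hypothetical function name. Treating the truncated neighbourhood at either end of the series as valid is an assumption. `scipy.signal.argrelmax(x, order=k)` implements a closely related rule with different handling of the series endpoints.

```python
import numpy as np


def relative_maxima(x, order):
    """Indices s with x[s] > x[t] for every t != s such that |t - s| <= order.

    Neighbourhoods are truncated at the ends of the series, so endpoints can qualify.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    peaks = []
    for s in range(n):
        lo, hi = max(0, s - order), min(n, s + order + 1)
        neighbours = np.delete(x[lo:hi], s - lo)  # window around s, excluding s itself
        if np.all(x[s] > neighbours):
            peaks.append(s)
    return peaks


print(relative_maxima([0, 3, 1, 0, 5, 0, 2, 1], order=2))  # [1, 4]
```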

We note that these social narratives uncovered by the STAR algorithm might not emerge if we used a different algorithm in an attempt to extract shock-like dynamics in sociotechnical time series. We have already shown (in the previous section) that at least one state-of-the-art anomaly detection algorithm is unlikely to detect abrupt, shock-like dynamics that occur in time series that are nonstationary or have high variance. We display side-by-side comparisons of the indicator windows generated by each algorithm for every word in the LabMT dataset in the online appendix (http://compstorylab.org/shocklets/all_word_plots/). A review of the figures in the online appendix that correspond to words annotated in Figs. 11 and 12 provides evidence that an anomaly detection algorithm, such as ADV, may not capture the same dynamics as STAR. We include selected panels of these figures in Appendix 3, displaying words corresponding with some peaks of the weighted shock and spike indicator functions. (We hasten to note that this of course does not preclude the possibility that anomaly detection algorithms might indicate dynamics that are not captured by STAR.)

3.3 Typology of local mechanistic dynamics

To further understand divergent dynamic behavior in word rank time series, we analyze regions of these time series for which Eq. (15) is satisfied—that is, where the value of the shock indicator function is greater than the sensitivity parameter. We focus on shock-like dynamics since these dynamics qualitatively describe aggregate social focusing and subsequent de-focusing of attention mediated by the algorithmic substrate of the Twitter platform.
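Extracting the regions where the shock indicator exceeds the sensitivity parameter is a run-length computation. The sketch below assumes the shock indicator function is available as a one-dimensional array and returns the inclusive index bounds of each maximal run above the threshold. The helper name is hypothetical, and the exact form of Eq. (15) is not reproduced here.

```python
import numpy as np


def regions_above(indicator, threshold):
    """Inclusive (start, end) index pairs of maximal runs where indicator > threshold."""
    above = np.asarray(indicator) > threshold
    padded = np.concatenate([[False], above, [False]])  # guard values close runs at the edges
    steps = np.diff(padded.astype(int))                  # +1 where a run starts, -1 after it ends
    starts = np.flatnonzero(steps == 1)
    ends = np.flatnonzero(steps == -1) - 1
    return list(zip(starts.tolist(), ends.tolist()))


print(regions_above([0.0, 0.5, 0.7, 0.1, 0.9, 0.9, 0.2], threshold=0.4))  # [(1, 2), (4, 5)]
```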

We extract shock segments from the time series of all words that made it into the top \(j = 20\) ranked shock indicator functions at least once. Since shocks exist on a wide variety of dynamic ranges and timescales, we normalize all extracted shock segments to lie on the time range \(t_{\mathrm{shock}} \in [0, 1]\) and to have (spatial) mean zero and unit variance. By definition, shocks have a focal point at their maxima. In the context of stochastic time series (as considered here), however, the observed maximum of the time series may not be the “true” maximum of the hypothesized underlying deterministic dynamics. We therefore determined shock points (hypothesized deterministic maxima) of the extracted shock segments by two methods: the maxima of the within-window time series,

and the maxima of the time series’s shock indicator function,

We then computed empirical probability density functions of \(t_{1}^{*}\) and \(t_{2}^{*}\) across all words in the LabMT dataset. While the empirical distribution of \(t^{*}_{1}\) is unimodal, the corresponding empirical distribution of \(t^{*}_{2}\) shows clear bimodality, with peaks in the first and last quartiles of normalized time. To better characterize these maximum a posteriori (MAP) estimates, we sample those shock segments \(x_{t}\) whose maxima are temporally close to the MAPs and calculate spatial means of these samples,

where

The number ε is a small value which we set here to \(\varepsilon = 10 / 503\).Footnote 6 We plot these curves in Fig. 13. Shock segments that are close in spatial norm to the \(\langle x_{t_{ \mathrm{shock}}} \rangle _{n}\)—that is, shock segments \(x_{t_{ \mathrm{shock}}}\) that satisfy

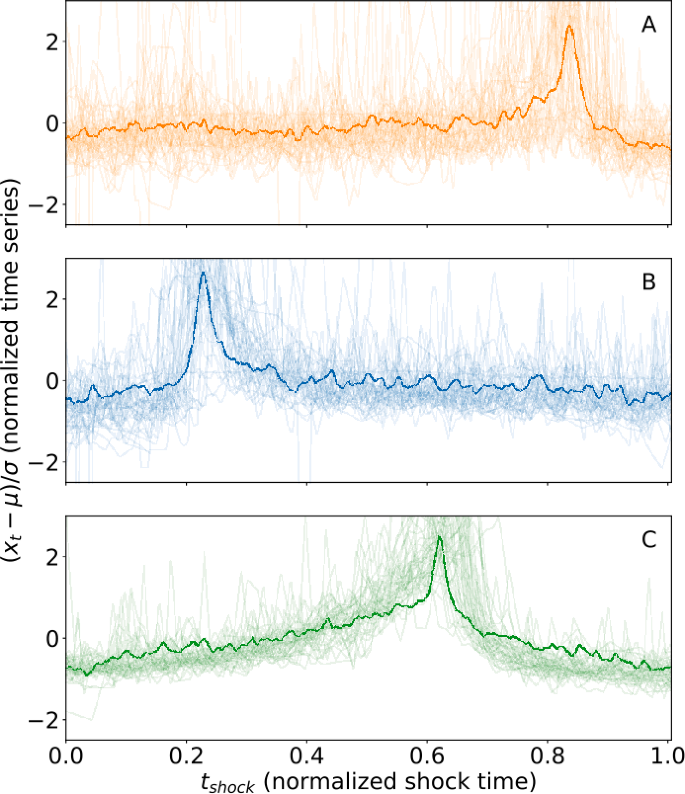

where \(F^{\leftarrow }_{Z}(q)\) is the quantile function of the random variable Z—are plotted in thinner curves. From this process, three distinct classes of shock segments emerge, corresponding with the three relative maxima of the shock point distributions outlined above:

Social dynamics in cusp segments. Extracted shock segments show diverse behavior corresponding to divergent social dynamics. We extract “important” shock segments (those that breach the top \(k=20\) ranked weighted shock indicator at least once during the decade under study) and normalize them as described in Sect. 2. We then find the densities of shock points \(t^{*}_{1}\), measured using the maxima of the within-window time series, and \(t^{*}_{2}\), measured using the maxima of the (relative) shock indicator function. We calculate relative maxima of these distributions and spatially average shock segments whose maxima were closest to these relative maxima. We display these mean shock segments along with sample shock segments that are close to them in norm. We introduce a classification scheme for shock dynamics: Type I (panel (A)) dynamics are those that display slow buildup and fast relaxation; Type II (panel (B)) dynamics, conversely, display fast (shock-like) buildup and slow relaxation; and Type III (panel (C)) dynamics are relatively symmetric. Overall, we find that Type III dynamics are most common (40.9%) among words that breach the top \(k=20\) ranked weighted shock indicator function, while Type II are second-most common (36.4%), followed by Type I (22.7%).

- Type I: exhibiting a slow buildup (anticipation) followed by a fast relaxation;
- Type II: with a correspondingly short buildup (shock) followed by a slow relaxation;
- Type III: exhibiting a relatively symmetric shape.
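The segment normalization and shock-point estimation described above can be sketched as follows. All of the following are assumptions of this sketch:

- segments are resampled by linear interpolation onto a fixed grid of `n_points` times on [0, 1], with an illustrative number of grid points;
- \(t_{1}^{*}\) is the grid time of the normalized segment's maximum;
- the mean segment near a mode averages the segments whose shock point lies strictly within ε of it.

\(t_{2}^{*}\) would be obtained the same way from the window's shock indicator values. The helper names are hypothetical.

```python
import numpy as np


def normalize_segment(segment, n_points=101):
    """Map a segment onto n_points times in [0, 1] with zero mean and unit variance.

    Assumes the segment is not constant (otherwise its variance is zero).
    """
    seg = np.asarray(segment, dtype=float)
    t_old = np.linspace(0.0, 1.0, seg.size)
    t_new = np.linspace(0.0, 1.0, n_points)
    resampled = np.interp(t_new, t_old, seg)          # linear resampling to a common grid
    z = (resampled - resampled.mean()) / resampled.std()
    return t_new, z


def shock_point(t, values):
    """Normalized time at which `values` attains its maximum."""
    return float(t[int(np.argmax(values))])


def mean_near_mode(shock_points, segments, mode, eps):
    """Average the normalized segments whose shock point lies within eps of `mode`.

    Assumes at least one segment qualifies.
    """
    mask = np.abs(np.asarray(shock_points) - mode) < eps
    return np.asarray(segments)[mask].mean(axis=0)


# A segment that rises for 30 steps and decays for 70 peaks at t = 0.3.
segment = np.concatenate([np.linspace(0.0, 1.0, 31), np.linspace(1.0, 0.0, 71)[1:]])
t, z = normalize_segment(segment)
print(round(shock_point(t, z), 3))  # 0.3
```

A segment like this one, with a quick rise and slow decay, has its shock point early in normalized time. That is the Type II signature described above.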

Words corresponding to these classes of shock segments differ in semantic context. Type I dynamics are related to known and anticipated societal and political events and subjects, such as:

“hampshire” and “republican”, concerning U.S. presidential primaries and general elections,

“labor”, “labour”, and “conservatives”, likely concerning U.K. general elections,

“voter”, “elected”, and “ballot”, concerning voting in general, and

“grammy”, the music awards show.

By contrast, Type II (shock-like) dynamics describe events that are partially or entirely unexpected, often in the context of national or international crises, such as:

“tsunami” and “radiation”, relating to the 2011 tsunami and the Fukushima Daiichi nuclear meltdown,

“bombing”, “gun”, “pulse”, “killings”, and “connecticut”, concerning acts of violence and mass shootings, in particular the Sandy Hook Elementary School shooting in the United States;

“jill” (Jill Stein, a 2016 U.S. presidential election competitor), “ethics”, and “fbi”, pertaining to surprising events surrounding the 2016 U.S. presidential election, and

“turkish”, “army”, “israeli”, “civilian”, and “holocaust”, concerning international protests, conflicts, and coups.

Type III dynamics are associated with anticipated events that typically recur and are discussed substantially after their passing, such as:

“sleigh”, “x-mas”, “wrapping”, “rudolph”, “memorial”, “costumes”, “costume”, “veterans”, and “bunny”, having to do with major holidays, and

“olympic” and “olympics”, relating to the Olympic games.

We give a full list of words satisfying the criteria given in Eqs. (22) and (23) in Table 1. We note that, though the above discussion defines and distinguishes three fundamental signatures of word rank shock segments, these classes are only the MAP estimates of the true distributions of shock segments, our empirical observations of which are displayed as histograms in Fig. 13; there is an effective continuum of dynamics that is richer, but more complicated, than our parsimonious description here.

4 Discussion

We have introduced a nonparametric pattern detection method, termed the discrete shocklet transform (DST) for its particular application in extracting shock and shock-like dynamics from noisy time series, and demonstrated its particular suitability for the analysis of sociotechnical data. Though extracted social dynamics display a continuum of behaviors, we have shown that maximum a posteriori (MAP) estimates of shock points reveal three distinct classes of dynamics:

- Type I: anticipatory dynamics with long buildups and quick relaxations, such as political contests;
- Type II: “surprising” events with fast (shock-like) buildups and long relaxation times, such as acts of violence, natural disasters, and mass shootings;
- Type III: quasi-symmetric dynamics, corresponding with anticipated and talked-about events such as holidays and major sporting events.

We analyzed the most “important” shock-like dynamics, that is, those of words that were among the 20 most significant at least once during the decade of study. Among these words, Type III dynamics were the most common (40.9%), followed by Type II (36.4%) and Type I (22.7%). We then showcased the discrete shocklet transform’s effectiveness in extracting coherent intertemporal narratives from word usage data on the social microblog Twitter, developing a graphical methodology for examining meaningful fluctuations in word—and hence topic—popularity. We used this methodology to create document-free nonparametric topic models. These are represented by pruned networks based on shock indicator similarity between pairs of words, with topics defined by the networks’ community structures. This construction retains artifacts of its origin in intrinsically temporal data, but it yields topics with qualitatively sensible semantic structure.

Future work could improve on and extend the work presented here in several areas. We have shown that the DST is a useful tool for understanding non-stationary local behavior in a variety of sociotechnical time series. There is reason to suspect that the method generalizes to essentially any noisy time series in which mechanistic local dynamics can be hypothesized to contribute a substantial component of the overall signal.

In addition, the DST suffers from noncausality, as do other convolution and frequency-space transforms that use two-sided windows. To compute an accurate transformed signal at time t, information about time \(t + \tau \) (for some \(\tau > 0\)) must be known to avoid edge effects or spectral effects such as ringing. In practice this may not impede the DST’s usage, for two reasons. First, the transform empirically still finds “important” local dynamics near the very beginning and the very end of the study period, as shown in Fig. 11. The words “occupy” and “slumdog” are annotated near the beginning, and “stormy” and “cohen” near the end. Second, with more frequently sampled data, the lag needed to avoid edge effects may become short relative to the timescale over which users interact with the data. To avoid edge effects entirely, it may be possible to train a supervised learning algorithm to reproduce the DST’s output at time t using only past (and possibly present) data.

The DST could also serve as a useful counterpart to phrase- and sentence-tracking algorithms such as MemeTracker [93, 94]. Instead of applying the DST to time series of single words, one could apply it to arbitrary n-grams, including whole sentences. It could also be applied to sentence-structure pattern matches, to uncover how often verb tenses, passive and active voice constructions, and other higher-order natural language constructs are used. Other work could apply the DST to further natural language data sources or to other sociotechnical time series, such as asset prices, economic indicators, and election polls.
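A few lines of NumPy show the noncausality of the DST discussed in this section. The kernel below is a hypothetical symmetric, decaying window, not the shocklet kernel itself. The point is only this: a centered convolution at time t changes when a future sample x[t + 3] is perturbed, while a one-sided (causal) convolution does not.

```python
import numpy as np

def centered_transform(x, kernel):
    """Two-sided convolution: for odd kernel length L, output at t mixes x[t - L//2] .. x[t + L//2]."""
    return np.convolve(x, kernel, mode="same")

def causal_transform(x, kernel):
    """One-sided convolution: output at t uses only x[t - L + 1] .. x[t]."""
    return np.convolve(x, kernel, mode="full")[: len(x)]

def depends_on_future(transform, x, kernel, t, lead=3, bump=10.0):
    """Return True if perturbing the future sample x[t + lead] changes the output at time t."""
    x = np.asarray(x, dtype=float)
    perturbed = x.copy()
    perturbed[t + lead] += bump
    return not np.isclose(transform(x, kernel)[t], transform(perturbed, kernel)[t])

# Hypothetical symmetric, decaying window of odd length 11 (not the shocklet kernel).
kernel = np.exp(-np.abs(np.arange(-5, 6)) / 2.0)
x = np.random.default_rng(0).normal(size=100)
print(depends_on_future(centered_transform, x, kernel, t=50))  # True
print(depends_on_future(causal_transform, x, kernel, t=50))    # False
```

For a symmetric kernel of odd length L, the causal output is the centered output delayed by (L - 1)/2 samples. Removing look-ahead this way therefore shifts detected features later in time.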

Notes

Python implementations of the DST and STAR algorithms are located at this git repository: https://gitlab.com/compstorylab/discrete-shocklet-transform.

Bayesian classification algorithms can perform classification based on prior information alone. This approach also differs from the STAR algorithm, which is a maximum-likelihood method and so by definition requires at least one time series to operate.

Data is available at https://fred.stlouisfed.org/series/RECPROUSM156N.

This value comes from the small, arbitrarily chosen number of indices (five) by which we allow a shock segment to vary (±) about the index of the MAP estimate of the distribution of shock points. Each such distribution can be considered a multinomial distribution over the 503 indices of a time-normalized shock segment. The number 503 is the dimension (length) of each shock segment after time normalization, since the longest original shock segment in the labMT dataset was 503 days.
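The ±5-index tolerance described in this note can be sketched as follows. As an assumption, the MAP index is taken to be the mode of the empirical histogram of shock-point indices on the 503-point normalized grid. This mode is also the most probable index under the MAP estimate of a multinomial with a flat Dirichlet prior. The function names are hypothetical.

```python
import numpy as np

N_NORM = 503  # length of a time-normalized shock segment (from the note above)
WINDOW = 5    # allowed deviation (±) about the MAP index (from the note above)

def map_index(shock_points, n=N_NORM):
    """Return the most frequent shock-point index on the normalized grid [0, n)."""
    points = np.asarray(shock_points, dtype=int)
    if points.size == 0 or points.min() < 0 or points.max() >= n:
        raise ValueError("shock points must be a non-empty set of indices in [0, n)")
    counts = np.bincount(points, minlength=n)
    return int(np.argmax(counts))  # ties resolve to the smallest index

def fraction_near_map(shock_points, n=N_NORM, window=WINDOW):
    """Fraction of shock points lying within ±window indices of the MAP index."""
    points = np.asarray(shock_points, dtype=int)
    center = map_index(points, n)
    return float(np.mean(np.abs(points - center) <= window))
```

For example, for shock points [100, 102, 100, 98, 300, 100, 104, 110], the MAP index is 100. Six of the eight points fall within ±5 of it.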

The dataset is available for purchase from Twitter at http://support.gnip.com/apis/firehose/overview.html. The on-disk size statistic is the output of du -h <dirname> | tail -n 1 on the authors’ computing cluster, so it may vary by machine or storage system.

Abbreviations

- ADV: anomaly detection vector, Twitter’s anomaly detection algorithm
- DST: Discrete Shocklet Transform, the analytical method at the core of this article
- STAR: Shocklet Transform and Ranking algorithm, a method that uses the DST to provide qualitative, shape-based similarity search in multivariate time series
- DWT: Discrete Wavelet Transform, a transform that performs a task similar to, yet fundamentally different from, that performed by the DST
- LabMT: Lab Mechanical Turk dataset, a set of over 10,000 words used here and in multiple studies of human language and behavior
- MAP: maximum a posteriori, the estimated maximum of a posterior distribution
- WSIF: weighted shock indicator function, one of the outputs of the STAR algorithm

References

Chaovalit P, Gangopadhyay A, Karabatis G, Chen Z (2011) Discrete wavelet transform-based time series analysis and mining. ACM Comput Surv (CSUR) 43(2):6

Yeh C-CM, Kavantzas N, Keogh E (2017) Matrix profile VI: meaningful multidimensional motif discovery. In: 2017 IEEE international conference on data mining (ICDM). IEEE Press, New York, pp 565–574

Zhu Y, Imamura M, Nikovski D, Keogh E (2018) Introducing time series chains: a new primitive for time series data mining. Knowl Inf Syst: 1–27

Struzik ZR, Siebes AP (2002) Wavelet transform based multifractal formalism in outlier detection and localisation for financial time series. Phys A, Stat Mech Appl 309(3–4):388–402

Popivanov I, Miller RJ (2002) Similarity search over time-series data using wavelets. In: Proceedings 18th international conference on data engineering. IEEE Press, New York, pp 212–221

Lau K-M, Weng H (1995) Climate signal detection using wavelet transform: how to make a time series sing. Bull Am Meteorol Soc 76(12):2391–2402

Whitcher B, Byers SD, Guttorp P, Percival DB (2002) Testing for homogeneity of variance in time series: long memory, wavelets, and the Nile river. Water Resour Res 38(5)

Benítez R, Bolós V, Ramírez M (2010) A wavelet-based tool for studying non-periodicity. Comput Math Appl 60(3):634–641

Mann S, Haykin S (1991) The chirplet transform: a generalization of Gabor’s logon transform. In: Vision interface, vol 91, pp 205–212

Wang G, Xia X-G, Root BT, Chen VC (2002) Moving target detection in over-the-horizon radar using adaptive chirplet transform. In: Proceedings of the 2002 IEEE radar conference (IEEE cat. no. 02CH37322). IEEE Press, New York, pp 77–84

Spanos P, Giaralis A, Politis N (2007) Time–frequency representation of earthquake accelerograms and inelastic structural response records using the adaptive chirplet decomposition and empirical mode decomposition. Soil Dyn Earthq Eng 27(7):675–689

Taebi A, Mansy H (2016) Effect of noise on time-frequency analysis of vibrocardiographic signals. J Bioeng & Biomed Sci 6(4)

Page E (1955) A test for a change in a parameter occurring at an unknown point. Biometrika 42(3/4):523–527

Mallat S, Hwang WL (1992) Singularity detection and processing with wavelets. IEEE Trans Inf Theory 38(2):617–643

Dodds PS, Harris KD, Kloumann IM, Bliss CA, Danforth CM (2011) Temporal patterns of happiness and information in a global social network: hedonometrics and Twitter. PLoS ONE 6(12):26752

Li Q, Shah S, Thomas M, Anderson K, Liu X, Nourbakhsh A, Fang R (2017) How much data do you need? Twitter decahose data analysis

Reagan AJ, Danforth CM, Tivnan B, Williams JR, Dodds PS (2017) Sentiment analysis methods for understanding large-scale texts: a case for using continuum-scored words and word shift graphs. EPJ Data Sci 6(1):28

Reece AG, Reagan AJ, Lix KL, Dodds PS, Danforth CM, Langer EJ (2017) Forecasting the onset and course of mental illness with Twitter data. Sci Rep 7(1):13006

Frank MR, Mitchell L, Dodds PS, Danforth CM (2013) Happiness and the patterns of life: a study of geolocated tweets. Sci Rep 3:2625

Mitchell L, Frank MR, Harris KD, Dodds PS, Danforth CM (2013) The geography of happiness: connecting Twitter sentiment and expression, demographics, and objective characteristics of place. PLoS ONE 8(5):64417

Lemahieu R, Van Canneyt S, De Boom C, Dhoedt B (2015) Optimizing the popularity of Twitter messages through user categories. In: 2015 IEEE international conference on data mining workshop (ICDMW). IEEE Press, New York, pp 1396–1401

Wu F, Huberman BA (2007) Novelty and collective attention. Proc Natl Acad Sci 104(45):17599–17601

Candia C, Jara-Figueroa C, Rodriguez-Sickert C, Barabási A-L, Hidalgo CA (2019) The universal decay of collective memory and attention. Nat Hum Behav 3(1):82

Crane R, Sornette D (2008) Robust dynamic classes revealed by measuring the response function of a social system. Proc Natl Acad Sci 105(41):15649–15653

Lorenz-Spreen P, Mønsted BM, Hövel P, Lehmann S (2019) Accelerating dynamics of collective attention. Nat Commun 10(1):1759

De Domenico M, Altmann EG (2019) Unraveling the origin of social bursts in collective attention. arXiv preprint. arXiv:1903.06588

Ierley G, Kostinski A (2019) A universal rank-order transform to extract signals from noisy data. arXiv preprint. arXiv:1906.08729

Nakamoto S (2008) Bitcoin: a peer-to-peer electronic cash system

Al Shehhi A, Oudah M, Aung Z (2014) Investigating factors behind choosing a cryptocurrency. In: 2014 IEEE international conference on industrial engineering and engineering management. IEEE Press, New York, pp 1443–1447

Lin J, Keogh E, Wei L, Lonardi S (2007) Experiencing SAX: a novel symbolic representation of time series. Data Min Knowl Discov 15(2):107–144

Yang K, Shahabi C (2007) An efficient k nearest neighbor search for multivariate time series. Inf Comput 205(1):65–98

Kale DC, Gong D, Che Z, Liu Y, Medioni G, Wetzel R, Ross P (2014) An examination of multivariate time series hashing with applications to health care. In: 2014 IEEE international conference on data mining. IEEE Press, New York, pp 260–269

Driemel A, Silvestri F (2017) Locality-sensitive hashing of curves. arXiv preprint. arXiv:1703.04040

Keogh EJ, Pazzani MJ (2000) A simple dimensionality reduction technique for fast similarity search in large time series databases. In: Pacific-Asia conference on knowledge discovery and data mining. Springer, Berlin, pp 122–133

Wu Y-L, Agrawal D, El Abbadi A (2000) A comparison of DFT and DWT based similarity search in time-series databases. In: Proceedings of the ninth international conference on information and knowledge management. ACM, New York, pp 488–495

Chan F-P, Fu A-C, Yu C (2003) Haar wavelets for efficient similarity search of time-series: with and without time warping. IEEE Trans Knowl Data Eng 15(3):686–705

Ratanamahatana C, Keogh E, Bagnall AJ, Lonardi S (2005) A novel bit level time series representation with implication of similarity search and clustering. In: Pacific-Asia conference on knowledge discovery and data mining. Springer, Berlin, pp 771–777

Keogh E, Lin J, Fu A (2005) HOT SAX: efficiently finding the most unusual time series subsequence. In: Fifth IEEE international conference on data mining (ICDM’05). IEEE Press, New York, p 8

Yeh C-CM, Zhu Y, Ulanova L, Begum N, Ding Y, Dau HA, Silva DF, Mueen A, Keogh E (2016) Matrix profile I: all pairs similarity joins for time series: a unifying view that includes motifs, discords and shapelets. In: 2016 IEEE 16th international conference on data mining (ICDM). IEEE Press, New York, pp 1317–1322

Eastman JR, Fulk M (1993) Long sequence time series evaluation using standardized principal components. Photogramm Eng Remote Sens 59(6)

Harris D (1997) Principal components analysis of cointegrated time series. Econom Theory 13(4):529–557

Lansangan JRG, Barrios EB (2009) Principal components analysis of nonstationary time series data. Stat Comput 19(2):173

Mueen A, Viswanathan K, Gupta C, Keogh E (2017) The fastest similarity search algorithm for time series subsequences under Euclidean distance

Seref O, Fan Y-J, Chaovalitwongse WA (2013) Mathematical programming formulations and algorithms for discrete k-median clustering of time-series data. INFORMS J Comput 26(1):160–172

Vlachos M, Lin J, Keogh E, Gunopulos D (2003) A wavelet-based anytime algorithm for k-means clustering of time series. In: Proc. Workshop on clustering high dimensionality data and its applications. Citeseer

Goutte C, Toft P, Rostrup E, Nielsen F, Hansen LK (1999) On clustering fMRI time series. NeuroImage 9(3):298–310

Jiang D, Pei J, Zhang A (2003) DHC: a density-based hierarchical clustering method for time series gene expression data. In: Third IEEE symposium on bioinformatics and bioengineering, 2003. Proceedings. IEEE Press, New York, pp 393–400

Rodrigues PP, Gama J, Pedroso JP (2006) ODAC: hierarchical clustering of time series data streams. In: Proceedings of the 2006 SIAM international conference on data mining. SIAM, Philadelphia, pp 499–503

Rodrigues PP, Gama J, Pedroso J (2008) Hierarchical clustering of time-series data streams. IEEE Trans Knowl Data Eng 20(5):615–627

Denton A (2005) Kernel-density-based clustering of time series subsequences using a continuous random-walk noise model. In: Fifth IEEE international conference on data mining (ICDM’05). IEEE Press, New York, p 8

Birant D, Kut A (2007) ST-DBSCAN: an algorithm for clustering spatial–temporal data. Data Knowl Eng 60(1):208–221

Çelik M, Dadaşer-Çelik F, Dokuz AŞ (2011) Anomaly detection in temperature data using DBSCAN algorithm. In: 2011 international symposium on innovations in intelligent systems and applications. IEEE Press, New York, pp 91–95

Kumar M, Patel NR, Woo J (2002) Clustering seasonality patterns in the presence of errors. In: Proceedings of the eighth ACM SIGKDD international conference on knowledge discovery and data mining. ACM, New York, pp 557–563

Oates T, Firoiu L, Cohen PR (1999) Clustering time series with hidden Markov models and dynamic time warping. In: Proceedings of the IJCAI-99 workshop on neural, symbolic and reinforcement learning methods for sequence learning, pp 17–21. Citeseer

Schreiber T, Schmitz A (1997) Classification of time series data with nonlinear similarity measures. Phys Rev Lett 79(8):1475

Kalpakis K, Gada D, Puttagunta V (2001) Distance measures for effective clustering of ARIMA time-series. In: Proceedings 2001 IEEE international conference on data mining. IEEE Press, New York, pp 273–280

Bagnall AJ, Janacek GJ (2004) Clustering time series from ARMA models with clipped data. In: Proceedings of the tenth ACM SIGKDD international conference on knowledge discovery and data mining. ACM, New York, pp 49–58