Scalability 101: How to Build, Measure, and Improve It

Scalability is probably one of the most crucial non-business features of every modern-day system and this post is a perfect intro to it.

Join the DZone community and get the full member experience.

Join For FreeIn this post, I'd like to talk a little about scalability from a system design perspective. In the following paragraphs, I'll cover multiple concepts related to scalability—from defining what it is, to the tools and approaches that help make the system more scalable, and finally, to the signs that show whether a system is scaling well or not.

What Is Scalability?

First things first: I’m pretty sure you know what scalability is, but let’s have a brief look at the definition just to be safe.

Scalability is the ability—surprise—of a system or application to scale. It is probably one of the most crucial non-business features of every modern-day piece of code. After all, we all want our systems to be able to handle increasing traffic and not just crash the first chance they can.

Besides just making our system work properly under increased load, good scalability offers other benefits:

- Stable User Experience - We can ensure the same level of experience for an increased number of users trying to use our system.

- Future Proof - The more we think about the scalability and extensibility of our system during the design phase, the smaller the chance that we will need an architecture rework in the near future.

- Competitive Advantage - Stable user experience under an extensive increase in traffic can shortly evolve into a business advantage, especially when some competitors’ sites are down while our site is up and running.

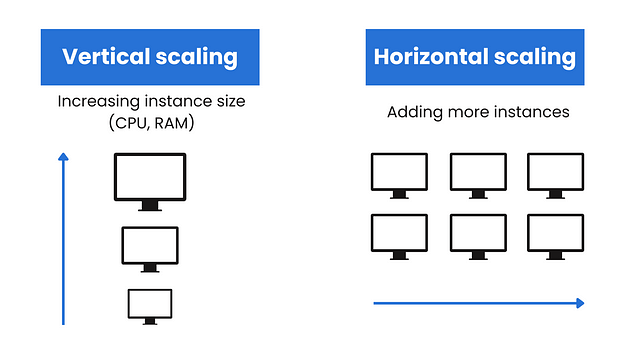

In the most simple way, when we’re looking at scaling, there are two ways to handle that task—Horizontal and Vertical. Horizontal scaling is focused on adding more nodes to the system, while Vertical scaling is about adding more resources to a single machine that is responsible for hosting our application.

However, this is only the beginning, or the end, depending on the perspective. Adding more resources to the physical machines has its physical limits. On the other hand, spawning multiple instances also has its limitations, not to mention significant complexity penalties with the possible architecture redesigns.

That is why today we will dive deeper into tools and concepts that will help us make our system more scalable. Before that, let’s take a look at how we can measure the scalability of our system.

How To Measure Scalability

There are a couple of methods to do that. We can go from an experimental method and set up some stress tests, for example using Gatling, that will show us pretty visible results on how far our systems can scale. On the other hand, you can go for a more quantitative approach and try to calculate the limits of your system. You can read more about the quantitative approach here. As for the experimental methods, I recommend you taking a look at the Gatling Academy. It looks like a nice introduction into writing performance tests.

Signs Your System Is Not Scaling

Well, there are a couple of metrics and behaviors we can notice, which indicate possible scalability problems within our system:

- Steadily increasing response times - The response time starts to increase steadily as new users join, after a certain threshold.

- Higher error rate - The number of erroneous responses, timeouts, dropped messages or connections starts to increase.

- High cost to low performance - Gaining actual performance benefits (for example by adding more instances) gives little to no results despite investing a relatively high amount of money.

- Backlog growth - Processing queues, task managers, thread pools, schedulers start failing to keep up with incoming load, extending processing times, possibly later ending up as timeouts.

- Direct feedback - An important client is calling CTO/CIO or whoever else and complaining that everything is down, alerts start spinning, everything explodes, and other funny events.

The Scalability Game Changer

There is one key concept that can impact the scalability of our system quite significantly. The concept in question is statelessness, or stateless services/processing. The more stateless services or steps in our workflow we have, the easier it is to scale up the system. We can basically keep spawning new and new instances infinitely—there are some theoretical and of course practical limits, but they are highly dependent on your architecture. You can read more about them here.

Of course, this approach has some potential drawbacks. For example, we have to store the state somewhere, since most of the systems are, by definition, stateful. We can offload it to some external dependencies like caches or databases, but this also has its drawbacks. While usually quite scalable, such tools can impose some further penalties on our systems and, what is more, impose some architectural requirements on our systems. That is why we should do in-depth research before actually picking the third-party solutions we want to offload our state to.

Nevertheless, in my opinion, the potential benefits of having stateless services overcome their drawbacks, or at least make it an approach worth considering.

With this somewhat long dive into the basics of scalability done, we can move to the main course of today's article: The tools and concepts that will make our application scale.

Tools For Scalability

We can skip captain obvious and move right away past the things like adding more resources to the server or spawning more instances of our service.

Caching

Creating a cache layer in front of our application is probably the simplest method to increase the scalability of our application. We can relatively easily offload part of the incoming traffic to a cache service that can store frequently queried data. Then, our service can focus either on handling incoming write requests while only handling read requests in case of cache misses and invalidations.

Unfortunately, caching also has a couple of drawbacks and considerations that we have to think of before going head-on into adding it to our service. Firstly, cache invalidation is probably one of the biggest problems in the software world. Picking and tuning it correctly may require substantial time and effort. Next, we can take a look at cache inconsistencies and all the possible problems related to handling them correctly.

There are a couple more problems related to caching, but these should be enough for a start. I promise to take a more detailed look at caching and all its quirks in a separate blog.

Database Optimizations

It is not a tool per se. However, it is probably one of the most important things we can do to make our system more scalable. What I exactly mean by this is: Well, sometimes the database can be the limiting factor. It is not a common case; nevertheless, after reaching a certain processing threshold, the chances for it to happen increase exponentially. On the other hand, if you do a lot of database queries (like 10–100 thousands) or your queries are suboptimal, then the chances also increase quite significantly.

In such cases, performance tuning of your database can be a good idea. In every database engine there are switches and knobs that we can move up or down to change its behavior. There are also concepts like sharding and partitioning that may come in handy with processing more work. What is more, there is a concept called replication that is particularly useful for read-heavy workflows based on plain SQL databases. We can spawn multiple replicas of our database and forward all the incoming read traffic to them, while our primary node will take care of writes.

As a last resort, you can try to exchange your database for some other solution. Just please do proper research beforehand, as this change is neither easy nor simple, and may result in degradation, not the up-scaling, of your database performance. Not to mention all the time and money spent along the way.

Usually, even plain old databases like Postgres and MySQL (Uber) can handle quite a load, and with some tuning and good design, should be able to meet your requirements.

Content Delivery Network (CDN)

Setting up a CDN is somewhat similar to setting up a cache layer despite having quite a different purpose and setup process. While the caching layer can be used to store more or less anything we want, in the case of a CDN it is not that simple. They are designed to store and then serve mostly static content, like images, video, audio, and CSS files. Additionally, we can store different types of web pages.

Usually, the CDN is a paid service managed and run by third-party companies like Cloudflare or Akamai. Nevertheless, if you are big enough, you can set up your own CDN, but the threshold is pretty high for this to make sense. For example, Netflix has its own CDN, while Etsy uses Akamai.

The CDN consists of multiple servers spread across the globe. They utilize location-based routing to direct the end users to the nearest possible edge node that contains the requested data. Such an approach greatly reduces wait time for users and moves a lot of the traffic off the shoulders of our services.

Load Balancing

Load balancing is more of a tool for helping with availability than with the scalability of the system. Nonetheless, it can also help with the scaling part. As the name suggests, it is about balancing the incoming load equally across available services. There are different types of load balancers (4th and 7th level) that can support various load balancing algorithms.

The point for load balancers and scalability is that we can route requests based on request processing time, routing new requests to less busy services. We can also use location-based routing similar to the case of a CDN. What is more, Load Balancers can cache some responses, integrate with CDNs, and manage the on-demand scaling of our system.

On the other hand, using Load Balancers requires at least some degree of statelessness in the connected service. Otherwise, we have to use sticky sessions, which is not desired behavior nor recommended practice.

Another potential drawback of using load balancers is that they may introduce a single point of failure into our systems. We all know what the result of such an action may be. The desired approach would be to use some kind of distributed load balancers, where some nodes may actually fail without compromising the whole application. Yet doing so adds additional complexity to our design.

As you see, it is all about the trade-offs.

Async Communication

Here, the matter is pretty straightforward: Switching to async communication frees up processing power in our services. Services do not have to wait for responses and may switch to other tasks while the targets process the requests we already sent. While the exact numbers or performance increase may vary significantly from use case to use case, the principle remains.

Besides the additional cognitive load put upon the maintainers and some debugging complexity, there are no significant drawbacks related to switching to an async communication model. This will get more interesting in the next topic.

Just to clarify, in this point I thought mostly of approaches like async HTTP calls, WebSockets, async gRPC, and SSE. We will move into the messaging space in the next paragraph.

Messaging

It is a type of asynchronous communication that uses message queues, message brokers to exchange data between services. The most important change that it brings is the decoupling of sender and consumer. We can just put the message on the queue and everyone interested can read it and act as they want.

Message brokers are usually highly scalable and resilient. They will probably handle your workload and not even break a sweat while doing so.

Unfortunately, they introduce a few drawbacks:

- Additional complexity - Messaging is totally different on a conceptual level than the plain request-response model, even if done in an async manner. There may be problems with logging, tracing, debugging, message ordering, messages being lost, and possible duplication.

- Infrastructure overhead - Setting up and running a platform for exchanging messages can be a challenging undertaking, especially for newcomers.

- Messages backlog - If the producers send messages faster than the consumer is able to process them, then the backlog grows, and if we do not handle this matter, it may lead to system-wide failures. There is a concept of Backpressure that aims to address this issue.

Service Oriented Architecture (SOA)

Last but not least, I wanted to spend some time describing any type of service-oriented architecture and its potential impact on how our application scales.

While this type of architecture is inherently complex and hard to implement correctly, it can bring our system’s scalability to a totally different level:

- Decoupling the components enables scaling components in isolation. If one service becomes a bottleneck, we can focus optimization efforts solely on that service.

- We can find the root cause of potential performance bottlenecks in a more efficient and timely manner, as we can gather more insights into the behavior of different services.

- Our autoscaling becomes more granular, allowing us to apply tailored scaling strategies on a per-service basis.

All of these features improve our efficiency, which can result in reduced costs. We should be able to act faster and more adequately. We also avoid potential over-provisioning of resources across the entire application.

However, the sheer amount of potential problems related to this architecture can easily make it a no-go.

Why We Fail To Scale

After what, how, and why, it is time for why we fail. In my opinion and experience, there are a few factors that lead to our ultimate failure in making an application scalable.

- Poor understanding of tools we use - We can have state-of-the-art tools; but we have to use them correctly. Otherwise, we should better drop using them—at least we will not increase complexity.

- Poor choice of tools - While the previous point addresses the improper use of state-of-the-art tools, this one addresses the issue of choosing incorrect tools and approaches for a job, either on a conceptual or implementation level. I kind of tackled the problem with the quantity of different tools for a single task here.

- Ignoring the trade-offs - Every decision we make has short- and long-lasting consequences we have to be aware of. Of course, we can ignore them; still, we have to know them first and be conscious as to why we are ignoring some potential drawbacks.

- Bad architecture solutions - Kind of similar point as the one above. This is a point that addresses what happens if we ignore one too many trade-offs—the answer is that our architecture will be flawed in a fundamental way, and we will have to change it sooner or later.

- Under-provisioning / Over-provisioning - It is the result of loopholes in our data while we are researching what we would need to handle incoming traffic. While over-provisioning is not a scalability failure per se, as we were able to meet the demand, someone may ask, but at what cost?

Summary

We walked through a number of concepts and approaches to make our application more scalable.

Here they are:

| Concept | Description | Pros | Cons |

|---|---|---|---|

| Caching | Introduces a cache layer to store frequently accessed data and offload traffic from the application. | - Reduces load on the backend - Improves response time - Handles read-heavy workflows efficiently |

- Cache invalidation is complex - Risk of inconsistencies |

| Database Optimizations | Improves database performance through tuning, sharding, replication, and partitioning. | - Handles high read/write workloads - Scalability with replication for read-heavy workflows - Efficient query optimization |

- Complex setup (e.g., sharding) - High migration costs if switching databases - Risk of degraded performance |

| CDN | Distributes static content (e.g., images, CSS, videos) across globally distributed servers. | - Reduces latency - Offloads traffic from core services |

- Primarily for static content - Can be quite costly |

| Load Balancing | Distributes incoming traffic across multiple instances. | - Balances traffic efficiently - Supports failover and redundancy - Works well with auto-scaling |

- Requires stateless services - SPOF (if centralized) |

| Async Communication | Frees up resources by processing requests asynchronously, allowing services to perform other tasks in parallel. | - Increases throughput - Reduces idle waiting time |

- Adds cognitive load for maintainers - Debugging and tracing can be complex |

| Messaging | Uses message queues to decouple services. | - Decouples sender/consumer - Highly scalable and resilient - Handles distributed workloads effectively |

- Infrastructure complexity - Risks like message backlog, duplication, or loss - Conceptually harder to implement |

| Service-Oriented Architecture (SOA) | Breaks down the application into independent services that can scale and be optimized individually. | - Granular auto-scaling - Simplifies root-cause analysis - Enables tailored optimizations - Reduces resource wastage |

- High complexity - Inter-service communication adds overhead - Requires robust observability and monitoring |

Also, here is the table of concrete tools we can use to implement some of the concepts from above. The missing ones are either too abstract or require multiple tools and techniques to implement.

| Concept | Tool |

|---|---|

| Caching | Redis, Memcached |

| CDN | CloudFlare, Akamai |

| Load Balancing | HAProxy, NGINX |

| Async Communication | Async HTTP, WebSockets, async gRPC, SSE |

| Messaging | RabbitMQ, Kafka |

I think that both these tables are quite a nice summary of this article. I wish you luck on your struggle with scalability, and thank you for your time.

Published at DZone with permission of Bartłomiej Żyliński. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments