Abstract

We present new protocols for Asynchronous Verifiable Secret Sharing for Shamir (i.e., threshold \(t<n\)) sharing of secrets. Our protocols:

-

Use only “lightweight” cryptographic primitives, such as hash functions;

-

Can share secrets over rings such as \({\mathbb {Z}}/(p^k)\) as well as finite fields \(\mathbb {F}_q\);

-

Provide optimal resilience, in the sense that they tolerate up to \(t < n/3\) corruptions, where n is the total number of parties;

-

Are complete, in the sense that they guarantee that if any honest party receives their share then all honest parties receive their shares;

-

Employ batching techniques, whereby a dealer shares many secrets in parallel and achieves an amortized communication complexity that is linear in n, at least on the “happy path”, where no party provably misbehaves.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We present new protocols for asynchronous verifiable secret sharing (AVSS). An AVSS protocol allows one party, the dealer, to distribute shares of a secret to parties \(P_1, \ldots ,P_n\). Important properties of such a protocol are correctness, which means that even if the dealer is corrupt, the shares received by the honest parties are valid (i.e., they correspond to points that interpolate a polynomial of correct degree), and privacy, which means that if the dealer is honest, an adversary should only learn the shares held by the corrupt parties. A third property that is important in many applications is completeness, which means that if the dealer is honest, or if any honest party obtains a share, then eventually all honest parties obtain a share. In this paper, we will only be interested in AVSS protocols that satisfy the completeness property: some authors also call this asynchronous complete secret sharing (ACSS). Our protocols allow the dealer to share secrets that lie in a finite field \(\mathbb {F}_q\), or more generally a finite ring, such as \({\mathbb {Z}}/(p^k)\).

In the asynchronous setting, we assume secure (authenticated and private) point-to-point channels between parties, but we do not assume any bound on how quickly messages are transmitted between parties. In defining completeness, “eventually” means “if and when all messages sent between honest parties are delivered”. While there is a vast literature on secret sharing in the synchronous communication model, there has been considerably less research in the asynchronous model. We feel that this is unfortunate, as the asynchronous model is the only one that corresponds to the practical setting of a wide area network. For this reason, we focus exclusively on the asynchronous model.

It is well known that any AVSS protocol can withstand at most \(t < n/3\) corrupt parties. If an AVSS protocol can withstand this many corruptions, we say it provides optimal resilience. In this paper, we will focus exclusively on AVSS protocols that provide optimal resilience.

We are mainly focused here in designing AVSS protocols with good communication complexity. We define the communication complexity to be the sum of the length of all messages sent by honest parties (to either honest or corrupt parties) over the point-to-point channels. That said, we are interested protocols with good computational complexity as well.

In many applications, it is possible to run many AVSS protocols together as a “batch”. That is, a dealer has many secrets that he wants to share, and can share them all in parallel. Please note that such “batched” secret sharing operations are not to be confused with “packed” secret sharing operations: in a “batched” secret operation (a technique used, for example, in [22]), many secrets are shared in parallel, resulting in many ordinary sharings, while in a “packed” secret sharing (a technique introduced in [29]), many secrets are packed in a single sharing. With “packed” secret sharing, one must sacrifice optimal resilience, which we are not interested in here. Our focus will be exclusively on “batched” secret sharing. With “batching”, it is still possible to achieve optimal resilience, while obtaining very good communication and computational complexity in an amortized sense (i.e., per sharing).

We also make a distinction between the “happy path” and the “unhappy path”. To enter the “unhappy path”, a corrupt party must provably misbehave. If this happens, all honest parties will learn of this and can take action: in the short term, the honest parties can safely ignore this party, and in the longer term, the corrupt party can be removed from the network. Also, such provable misbehavior could lead to legal or financial jeopardy for the corrupt party, and this in itself may be enough to discourage such behavior. Note that the “happy path” includes corrupt behavior that cannot be used as reliable evidence to convince other honest parties or an external authority of corrupt behavior—this includes collusion among the corrupt parties, as well as behavior that may clearly be seen as corrupt by an individual honest party. For these reasons, we believe it makes sense to make a distinction between the complexity of the protocol on the “happy path” versus the “unhappy path”.

1.1 Information Theoretic Versus Computational Security

Up until now, most research in this area has been focused in two different settings: information theoretic and computational.

In the information-theoretic setting, security is unconditional, while in the computational setting, the protocol may use various cryptographic primitives and the security of the protocol is conditioned on specific cryptographic assumptions. In the information-theoretic setting, one can further make a distinction between statistically secure protocols, which may be broken with some negligible probability, and perfectly secure protocols, which cannot be broken at all. We shall not be particularly interested in the distinction between statistical and perfectly secure information-theoretic protocols in this paper.

In the cryptographic setting, the cryptography needed is often quite “heavyweight”, being based, for example, on the discrete logarithm problem or even pairings.

-

The state of the art in batched, complete AVSS protocols over finite fields with optimal resilience in the information-theoretic setting (with statistical security) is the protocol from [20], which achieves amortized communication complexity that is cubic in n.

-

In contrast, the state of the art in batched, complete AVSS protocols over finite fields with optimal resilience in the computational setting is the protocol from [3], which achieves amortized communication complexity that is linear in n. This protocol relies on discrete logarithms and pairings (although as noted in [30], pairings are not needed to achieve the same result if we amortize over larger batches).

For both of these protocols, the complexity bounds are worst-case bounds (making no distinction between a “happy path” and a “unhappy path”).

1.2 The Space in Between: “Lightweight” Cryptography

In this paper, we explore the space between the information theoretic and computational settings. Specifically, we consider the computational setting, but where we only allow “lightweight” cryptographic primitives, such as collision-resistant hash functions and pseudorandom functions. In one of our protocols, we need to make a somewhat nonstandard (but entirely reasonable) assumption about hash functions: a kind of related-key indistinguishability assumption for hash functions, which certainly holds in the random oracle model [10]. In another protocol, we fully embrace the random oracle model, which yields an even simpler and more efficient protocol. Both protocols are batched, complete AVSS protocols with optimal resilience that achieve communication complexity that is linear in n on the “happy path” and quadratic in n on the “unhappy path”.

We believe there are several reasons to explore this space of protocols that rely only on “lightweight” cryptography:

-

Such protocols are potentially harder to break than protocols that rely on such things as discrete logarithms and pairings. In particular, they provide post-quantum security.

-

Such protocols will typically exhibit much better computational complexity than those that rely on “heavyweight” cryptography. For example, the protocol in [3] requires that each receiving party perform a constant number of exponentiations and pairings per sharing (in an amortized sense). In contrast, in our protocol, each receiving party only performs a constant number of field operations and hashes per sharing (again, in an amortized sense, at least on the “happy path”).

Moreover, using any form of cryptography can allow improvements in both communication and computational complexity over protocols using only information-theoretic tools.

Our protocols do not require any public-key cryptography. However, as we shall point out, in a practical implementation, it might be advantageous to sparingly use some public-key cryptographic techniques in certain places.

1.3 Fields Versus Rings

To our knowledge there has been no work in the asynchronous setting for VSS protocols sharing secrets over rings such as \({\mathbb {Z}}/(p^k)\), with all prior work in the setting focused on sharing elements in finite fields \(\mathbb {F}_q\). In the synchronous setting there has recently been an interest in MPC over rings such as \({\mathbb {Z}}/(p^k)\), see, for example, [15, 16, 27, 36] in the dishonest majority (and computational) setting; [1, 2] in the honest majority setting (and information-theoretic) setting; and [34] in the honest majority setting (and computational) setting.Footnote 1 The heart of the protocol [1] is a synchronous VSS protocol for elements in \({\mathbb {Z}}/(p^k)\), which itself is a natural generalization of the method for fields from [12, 13]. The methods from these last two papers are perfectly information theoretically secure.

Another approach, related to [12, 13], is that of [22]. This is a statistically secure information-theoretic MPC protocol that works in the synchronous setting with honest majority, which at its heart performs a highly efficient batched VSS protocol over the finite field \(\mathbb {F}_q\). The batch is proved to be correct using a probabilistic checking procedure, which has a negligible probability of being bypassed by an adversary (a similar probabilistic check was used in a different context in [6]). While [22] provides only statistical security, its advantage over other techniques is the fact that the batch sizes are larger, resulting in a greater practical efficiency.

In the synchronous setting, generalizing these results from fields to Galois rings appears at first sight to be tricky. The field results are almost all defined for Shamir sharing, which in its standard presentation for n players over \(\mathbb {F}_q\), requires \(n>q\). When working with rings such as \({\mathbb {Z}}/(2^k)\) it is not clear, a priori, that the theory for fields will pass over to the ring case. However, by using so-called Galois rings and carefully defining the Shamir evaluation points and other data structures, the entire theory for fields can be carried over to the ring setting with very little change. The original work in this space for rings can be traced back, at least, to [28], with a more complete treatment being provided in [1]. The latter paper generalizes the synchronous protocol from [13] to the case of \({\mathbb {Z}}/(p^k)\) completely.

In this work we initiate the study of asynchronous VSS protocols for rings. As explained above we focus on a middle ground which utilizes lightweight cryptography. Our motivating starting point is the underlying batched synchronous VSS protocol contained in [22]. At a high level, this protocol works in the following steps:

-

1.

The sharing party shares a large number of values.

-

2.

After the shares are distributed a random beacon is called in order to generate a random value. In [22] this is instantiated with a “standard” traditional VSS protocol.

-

3.

Using the value from the random beacon random linear equations on the originally produced shares are computed and opened. The checking of these random linear combination for correctness implies the original shares are correct, with a negligible probability of success.

We follow the same strategy, but we need to modify this slightly, not only to deal with our asynchronous network situation, but also to deal with the potential uses of rings such as \({\mathbb {Z}}/(p^k)\). In [22], a single linear equation is checked over a field extension, in the case of small q, in order to obtain an appropriate soundness level. In our case we will check multiple such equations in parallel, relying on a generalization of the Schwarz–Zippel lemma to rings.

Another technique we employ, to move from the synchronous setting of [22] to our asynchronous setting, is the “encrypt then disperse” technique from [39]. However, as developed in [39], this technique relies on “heavyweight” cryptography, including discrete logarithms and pairings. We show how to replace all of this “heavyweight” cryptography by “lightweight” cryptography.

In [22] the shared secret is guaranteed to be an element in \(\mathbb {F}_q\) if q is large enough to support Shamir secret sharing over \(\mathbb {F}_q\), i.e., \(n < q\). When sharing secrets in \({\mathbb {Z}}/(p^k)\) (or a small finite fields \(\mathbb {F}_q\) with \(n \ge q\)), the shares themselves lie in a Galois ring (or field) extension. In fact, a corrupt dealer might share a secret that lies in the extension, rather than in the base ring (or field). In most applications of our AVSS protocol, this will not be an issue (for example, in producing multiplication triples for MPC protocols); however, in some applications, we really need to ensure that the shared elements are indeed in the base ring (or field) and not some extension. In this situation, we require further machinery which we introduce.

1.4 Application to AMPC

Of course, as has already been alluded to, one of the main applications of AVSS over fields is to asynchronous secure multiparty computation (AMPC), especially in the information theoretic setting. The state of the art for AMPC with optimal resilience for arithmetic circuits over finite fields in the information theoretic setting is the protocol from [20], whose communication complexity grows as \(n^4 \cdot c_M\), where \(c_M\) is the number of multiplication gates in the circuit to be evaluated.

We can use our new “lightweight” cryptographic AVSS protocols as a drop-in replacement for the information-theoretic AVSS protocol in [20], which yields an AMPC protocol whose communication complexity grows as \(n^2 \cdot c_M\) on the “happy path” and \(n^3 \cdot c_M\) on the “unhappy path”. One can easily improve the communication on the “happy path” to \(n \cdot c_M\) by assuming that \(t < (1/3-\epsilon ) \cdot n\) for some constant \(\epsilon \). Alternatively, one can achieve the same communication bound with \(t < n/3\) at least on a “very happy path” where at least \((2/3+\epsilon ) \cdot n\) parties are actually online and cooperative and network delay is bounded (which in practice is often reasonable to assume). Indeed, the technique in [20], which derives from [19], involves a step where we have to wait for \(n-t\) parties to each contribute sharings of validated Beaver triples. From this collection of sharings, some number of truly random shared triples may be extracted. Unfortunately, when \(n = 3t+1\), and we only collect \(n-t=2t+1\) triples, this extraction process yields only one truly random triple. However, in practice, it may make sense to just wait a little while to try to collect more triples. Indeed, if we can collect \((2/3+\epsilon ) \cdot n\) triples, we can extract \(\varOmega (n)\) truly random triples. Hence, on this “very happy path”, the communication complexity grows as \(n \cdot c_M\). Note that this pragmatic approach to reducing the communication complexity on this “very happy path” is still secure assuming \(t < n/3\)—so we still obtain optimal resilience, but we also obtain linear communication complexity per multiplication gate on this “very happy path”.

As is well known, one can realize AMPC in the computational setting without using AVSS. Indeed, the state of art for AMPC with optimal resilience in the computational setting is the protocol from [18], which has a communication complexity that is independent of the circuit size. This protocol relies on very “heavyweight” cryptography: threshold fully homomorphic encryption and threshold signatures. Using somewhat less “heavyweight” cryptography, namely, additively homomorphic threshold encryption, the protocol in [32] has communication complexity that grows as \(n^2 \cdot c_M\).

So we see that with our new AVSS protocols, one can achieve secure AMPC in the computational setting with very good communication complexity using only “lightweight” cryptography.

As has already been mentioned, our lightweight AVSS protocol works not only over fields but over rings such as \({\mathbb {Z}}/(p^k)\). These rings offer many advantages for various forms of MPC computation, especially when the ring is chosen to be \({\mathbb {Z}}/(2^k)\). It remains an open question as to how the above techniques for AMPC can be extended from fields to rings, given our AVSS protocol as a building block. In future work, we aim to investigate this in our context of utilizing lightweight cryptography.

1.5 The Rest of the Paper

In Sect. 2, we review basic concepts such as polynomial interpolation, Reed-Solomon codes, and secret sharing. In particular, in Sect. 2.3, we give the formal definition of AVSS that we will use throughout the paper. In Sect. 3, we review the subprotocols we will need to build our new AVSS protocols. Some of these subprotocols are standard, some are slight variations of standard protocols, and some are new. In particular, in Sect. 3.3, we define a new type of protocol, which we call a secure message distribution protocol. In this section, we just state the properties such a protocol should satisfy, and then in Sect. 4 we show how to build one. In Sect. 5, we present and analyze our new AVSS protocol. In Sect. 6, we extend our AVSS protocol to ensure that the secrets shared by a corrupt dealer lie in a restricted domain. The AVSS protocols in Sects. 5 and Sect. 6 rely in a random beacon. In Sect. 7, we show how to modify both of these protocols so that they do not rely on a random beacon, but instead rely on modeling a hash function as a random oracle. The resulting protocols also have the advantage of requiring fewer rounds of communication (and we speculate that they are resistant to adaptive corruptions, rather than just static corruptions).

2 Polynomial Interpolation, Reed–Solomon Codes, and Secret Sharing

We recall some basic facts about polynomial interpolation, Reed-Solomon codes, and secret sharing. As we want to work over both finite fields and Galois rings, we state these facts more generally, working over an arbitrary, finite, commutative ring with identity. For more details see [1, 28] or [37].

If \(\mathbb {A}\) is a commutative ring with identity, we let \(\mathbb {A}^*\) denote its group of units. Let \(\mathbb {A}[x]\) denote the ring of univariate polynomials over \(\mathbb {A}\) in the variable x. For positive integer d, let \(\mathbb {A}[x]_{< d}\) denote the \(\mathbb {A}\)-subalgebra of \(\mathbb {A}[x]\) consisting of all polynomials of degree less than d.

2.1 Polynomial Interpolation

The key to making polynomial interpolation work over an arbitrary ring \(\mathbb {A}\) is to restrict the choice of points at which we evaluate polynomials over \(\mathbb {A}\). To this end, we work with the notion of an exceptional sequence, which is a sequence \((s_1, \ldots , s_n)\) such that each \(s_i \in \mathbb {A}\), and for all \(i, j \in [n]\) with \(i \ne j\), we have \(s_i-s_j \in \mathbb {A}^*\).Footnote 2 When ordering does not matter, we use the (somewhat nonstandard but more natural) term exceptional set to denote a set \(\mathcal {E}\subseteq \mathbb {A}\) such that \(s - t \in \mathbb {A}^*\) for all \(s, t \in \mathcal {E}\) with \(s \ne t\). Clearly, if \(\mathcal {E}\) is an exceptional set, then so is any subset of \(\mathcal {E}\). The size of the largest exceptional set in a ring \(\mathbb {A}\) is called the Lenstra constant of the ring.

For example, if \(\mathbb {A}\) is a field, then \(\mathbb {A}\) is itself an exceptional set. As another example, suppose \(\mathbb {A}\) is a Galois ring \(\mathbb {Z}[y]/(p^k,F(y))\), where F(y) is a monic polynomial of degree \(\delta \) whose image in \(\mathbb {Z}/(p)[y]\) is irreducible. Then \(\mathbb {A}\) contains an exceptional set of size \(p^\delta \). Such a set \(\mathcal {E}\) may be formed by taking any set of polynomials in \(\mathbb {Z}[y]\) whose images in \(\mathbb {Z}[y]/(p,F(y))\) are distinct, and setting \(\mathcal {E}\) to be the images of these polynomials in \(\mathbb {Z}[y]/(p^k,F(y))\).

So now consider an exceptional sequence of evaluation coordinates \(\varvec{e} = (e_1, \ldots , e_n) \in \mathbb {A}^n\). Because \(\varvec{e}\) is an exceptional sequence, polynomial interpolation with respect to these evaluation coordinates works just as expected. That is, for every \(\varvec{a} = (a_1, \ldots , a_n) \in \mathbb {A}^n\), there exists a unique polynomial \(f \in \mathbb {A}[x]_{< n}\) such that \(f(e_j) = a_j\) for all \(j\in [n]\). Indeed, the coefficient vector of f is given by \(\varvec{a} \cdot V^{-1}\), where \(V \in \mathbb {A}^{n\times n}\) is the Vandermonde matrix determined by the vector of evaluation coordinates \(\varvec{e}\). Because \(\varvec{e}\) is an exception sequence, the determinant of V is a unit, and hence V is invertible.

2.2 Reed–Solomon Codes

Let \(\mathbb {A}\) be a ring and \(\varvec{e}\in \mathbb {A}^n\) be an exceptional sequence. For a positive integer d, we define the (n, d)-Reed-Solomon code over \(\mathbb {A}\) (with respect to \(\varvec{e})\) to be the \(\mathbb {A}\)-subalgebra of \(\mathbb {A}^n\) consisting of the vectors

Elements of this subalgebra are called codewords. Let \(C \in \mathbb {A}^{n \times (n-d)}\) be the matrix consisting of the rightmost \(n-t\) columns of \(V^{-1}\). Then for each \(\varvec{a} \in \mathbb {A}^n\), we see that \(\varvec{a}\) is a codeword if and only if \(\varvec{a} \cdot C = 0\) (this just expresses the condition that the unique polynomial obtained by interpolation has degree less than d). The matrix C is called as a check matrix for the code.

2.3 Asynchronous Verifiable Secret Sharing

We now turn to secret sharing, specifically, asynchronous verifiable secret sharing (AVSS). We have n parties \(P_1, \ldots , P_n\), of which at most \(t < n/3\) may be corrupt. We assume static corruptions (although we claim, without a full proof, that one of our AVSS protocols is secure against adaptive corruptions in the random oracle model). Let \(\mathcal {H}\) denote the indices of the honest parties, and let \(\mathcal {C}\) denote the indices of the corrupt parties.

We assume the parties are connected by secure point-to-point channels, which provide both privacy and authentication. As we are working exclusively in the asynchronous communication model, there is no bound on the time required to deliver messages between honest parties.

Let \(\mathbb {A}\) be a ring and \(\varvec{e}\in \mathbb {A}^n\) be an exceptional sequence. An (n, d, L)-AVSS protocol over \(\mathbb {A}\) (with respect to \(\varvec{e}\)) should allow a dealer \(D\in \{P_1, \ldots , P_n\}\) to input polynomials \(f_1, \ldots , f_L \in \mathbb {A}[x]_{< d}\) so that these polynomials are disseminated among \(P_1, \ldots , P_n\) in such a way that each party \(P_j\) outputs the corresponding the shares \(f_1(e_j), \ldots , f_L(e_j)\). Such a protocol should satisfy the following security properties (informally stated):

- Correctness:

If any honest parties produce an output, then there must exist polynomials \(f_1, \ldots , f_L \in \mathbb {A}[x]_{< d}\) such that each honest \(P_j\) outputs \(\{ f_\ell (e_j) \}_{\ell =1}^L\), if it outputs anything at all. Moreover, if the dealer D is honest, these must be the same polynomials input by D.

- Privacy:

If D is honest, the protocol should reveal no more to the adversary than the values

$$\begin{aligned} \Big \{ \ f_\ell (e_j) \ \Big \}_{\begin{array}{c} \ell \in [L] \\ j \in \mathcal {C} \end{array}} , \end{aligned}$$that is, the shares of the corrupt parties.

Note that in the correctness condition, it is essential that the protocol constrains a corrupt dealer D so ensure that the polynomials \(f_1, \ldots , f_L\) have degree less than d.

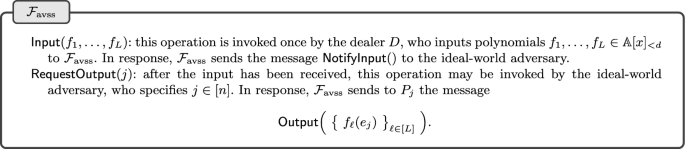

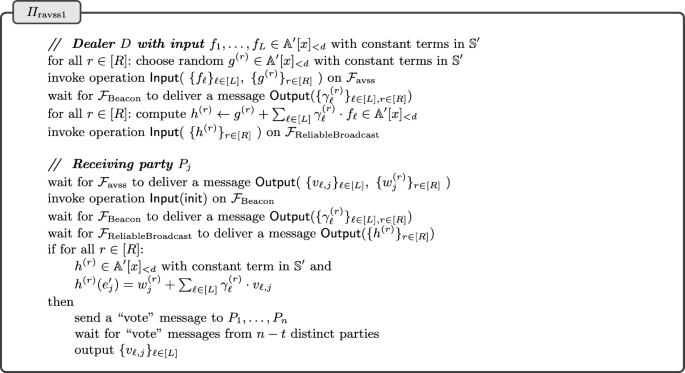

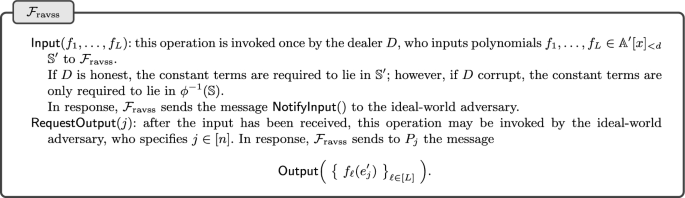

These security properties can be better captured by working in the universal composability (UC) framework [14] and defining an ideal functionality \(\mathcal {F}_{\textrm{avss}}\), see Fig. 1.

\(\mathcal {F}_{\textrm{avss}}\) captures the correctness property by the fact that a corrupt dealer D is constrained in the ideal world to input polynomials of the right degree. In a more detailed definition of \(\mathcal {F}_{\textrm{avss}}\), one might have the dealer D input a bit string to \(\mathcal {F}_{\textrm{avss}}\), and then \(\mathcal {F}_{\textrm{avss}}\) would parse this bit string (according to some standard convention) as a list of L polynomials over \(\mathbb {A}\) of degree less than d. If this failed for any reason, \(\mathcal {F}_{\textrm{avss}}\) would not accept this input from D. For the sake of clarity, we omit such details, and throughout this paper assume that ideal functionalities and other protocol machines make such “syntax checks” by default.

\(\mathcal {F}_{\textrm{avss}}\) in fact captures a stronger form of correctness, namely, input extractability. This property intuitively means that a corrupt dealer D must explicitly commit to all of it polynomials before any honest party outputs its own shares. This follows from the fact that in the UC framework, the ideal-world adversary (or simulator) must somehow extract, from the protocol messages it sees, the polynomials it needs to submit to \(\mathcal {F}_{\textrm{avss}}\) as an input on behalf of D before it can request that outputs are sent to any honest parties. This property is essential in some applications (including a protocol we present later in Sect. 6).

\(\mathcal {F}_{\textrm{avss}}\) captures the privacy property by the fact that when the dealer D is honest, the only information obtained by an adversary in the ideal world are the outputs sent from \(\mathcal {F}_{\textrm{avss}}\) to the corrupt parties, and these outputs consist of just the shares of these parties, as required.

2.3.1 Completeness

A protocol that securely realizes the ideal functionality \(\mathcal {F}_{\textrm{avss}}\) does not necessarily satisfy the completeness property mentioned in Sect. 1. Indeed, as stated, the ideal-world adversary may choose to have \(\mathcal {F}_{\textrm{avss}}\) deliver outputs to some honest parties but not others.

Intuitively speaking, the completeness property for an AVSS protocol says that if an honest dealer D inputs a value or if any honest party output a value, then eventually, all honest parties output a value. Here, “eventually” means if and when all messages sent between honest parties have been delivered. Thus, completeness is not an unconditional guarantee: in the asynchronous communication setting, we formally leave the scheduling of message delivery entirely to the adversary, who may decide to deliver messages sent between honest parties in an arbitrary order, or may choose not to deliver some of them at all.

Turning the above intuitive definition of completeness into a formal one is fairly straightforward. One simply defines an attack game in which the adversary (who controls the corrupt parties and the scheduling of message delivery) wins the game if he can drive the protocol to a state which violates the stated completeness condition, that is, a state such that:

-

(i)

an honest dealer D has input a value or some honest party has output a value,

-

(ii)

all messages sent between honest parties have been delivered, and

-

(iii)

some honest party has not output a value.

Completeness means that any efficient adversary wins this game with negligible probability.

This simple notion of completeness will be sufficient for our purposes. Note that one technical limitation of this definition is that it is only meaningful if the message complexity (that is, the total number of messages sent by any honest party to any other party) is uniformly bounded (that is, bounded by a polynomial that is independent of the adversary, at least with overwhelming probability). Indeed, the completeness property is vacuously satisfied by any protocol in which there are always more messages that need to be delivered (so condition (ii) in the previous paragraph can never be attained). Fortunately, all of the protocols we shall consider here have a uniformly bounded message complexity.

Our approach to modeling completeness closely adheres to the approach introduced in [17]. We note that the related work [20] studies AVSS and AMPC protocols in the UC framework, but makes use of a formal notion of time introduced in [35] to model completeness. Our view is that this extra (and somewhat complicated) machinery is unnecessary and, moreover (echoing the view of [33]), that notions such as liveness and related notions such as completeness are more simply and quite adequately modeled as properties of concrete protocols (as we have done so here) rather than as security properties captured by ideal functionalities.

2.4 Higher-Level Secret Sharing Interfaces

Our ideal functionality \(\mathcal {F}_{\textrm{avss}}\) essentially matches that in [20], and models a rather minimalistic, low-level interface. As given, the dealer inputs polynomials over \(\mathbb {A}\) and parties receives shares. However, there are no interfaces for encoding a secret value as a polynomial, or for performing various operations on shares, such as opening shares, combining shares to reconstruct a secret, or performing linear operations on sharings.

Our choice of this minimalistic interface is intentional, as it is simple and sufficient for our immediate needs. However, higher-level interfaces can easily be implemented on top of it using standard techniques. For example, the standard way to encode a secret \(s \in \mathbb {A}\) as a polynomial is to make s the constant term of the polynomial and choose the other coefficients at random. Doing this, the secret is essentially encoded as the value of the polynomial at the evaluation coordinate 0. For this to work, we require that \((0,e_1,\ldots ,e_n)\) is an exceptional sequence. If this requirement is satisfied, and if \(d > t\), then we know that the shares leaked to the adversary reveal no information about the secret s. Moreover, if \(n \ge d+2t\), we know we can reconstruct the polynomial, and hence the secret, using a protocol based on “online error correction” (originating in [5], but see [19] for a nice exposition of this and many other related protocols in the asynchronous setting). However, this is not the only mechanism that may be used to encode a secret. For example, one may in fact encode the secret as the leading coefficient, rather than the constant term—while this alleviates the requirement of extending the vector of evaluation coordinates to \(n+1\) elements, it may not be convenient in some applications. As another example, with “packed” secret sharing, several secrets may be encoded in a polynomial, by encoding these secrets at different evaluation coordinates [29]—while this can improve the performance of some higher-level protocols, it also reduces the resiliency of such protocols.

Also observe that our minimalistic interface also requires that the ring \(\mathbb {A}\) already has appropriate evaluation coordinates. In some applications, the secret may lie in some ring \(\mathbb {S}\) that does not contain a large enough exceptional sequence. For example, \(\mathbb {S}\) may be a finite field \(\mathbb {F}_q\) where q is very small, or a ring such as \({\mathbb {Z}}/(p^k)\), where p is very small. In this case, the standard technique is to secret share over a larger ring \(\mathbb {A}\supseteq \mathbb {S}\)—for example, a field extension in the case \(\mathbb {S}=\mathbb {F}_q\) or a Galois ring extension in the case \(\mathbb {S}={\mathbb {Z}}/(p^k)\). Note that a direct application of this technique allows a corrupt dealer to share a secret that lies in \(\mathbb {A}\setminus \mathbb {S}\). In some applications, this may be acceptable, while in others, it may not. In Sect. 6, we show how our basic AVSS protocol for secret sharing over \(\mathbb {A}\) can be extended to enforce the requirement that secrets do in fact lie in the subring \(\mathbb {S}\).

2.5 The Number of Roots of a Polynomial

The following result is standard. Since it is typically proved with respect to fields, for completeness, we give a proof here with respect to rings.

Lemma 2.1

(Schwartz–Zippel over rings) Let \(\mathbb {A}\) denote a commutative ring with identity and let \(P \in \mathbb {A}[x_1,x_2,\ldots ,x_n]\) be a nonzero polynomial of total degree \(\mathfrak {d} \ge 0\). Let \(\mathcal {E}\subseteq \mathbb {A}\) be an exceptional set, and let \(r_1,\ldots ,r_n\) be selected uniformly, and independently, from \(\mathcal {E}\). Then

Proof

We first consider the case of univariate polynomials. Let \(f \in \mathbb {A}[x]\) be of degree \(\mathfrak {d}\). We show that it can only have at most \(\mathfrak {d}\) roots in \(\mathcal {E}\). This is done by induction, with the base case of \(\mathfrak {d}=0\) being trivial. Now suppose f(x) is of degree \(\mathfrak {d}+1\), and the result is true for polynomials of degree \(\mathfrak {d}\). We work by contradiction and assume that f(x) has \(\mathfrak {d}+2\) distinct roots in \(\mathcal {E}\), which we label \(r_1,\ldots ,r_{\mathfrak {d}+2}\). We can write \(f(x) = (x-r_{\mathfrak {d}+2}) \cdot g(x)\) for some polynomial g(x) of degree \(\mathfrak {d}\). Since the roots come from an exceptional set we know that \(r_i -r_{\mathfrak {d}+2}\) is invertible for every \(i =1,\ldots ,\mathfrak {d}+1\). Hence \(r_1,\ldots ,r_{\mathfrak {d}+1}\) must be roots of g(x), and so g(x) has \(\mathfrak {d}+1\) distinct roots. This contradicts the inductive hypothesis.

We now prove the main result for multivariate polynomials by induction on n, where the base case of \(n=1\) is the univariate case we just considered. So we assume the statement holds for \(n \ge 1\), and consider the case of multivariate polynomial with \(n+1\) variables \(f(x_1,\ldots ,x_{n+1})\). We can write

where \(f_i\) is a multivariate polynomial in n variables. Since \(f(x_1,\ldots ,x_{n+1})\) is not identically zero there is at least one \(f_i(x_1,\ldots ,x_n)\) which is not identically zero. Let \(\mathfrak {d}'\) denote the largest such index i. We have \(\deg (f_{\mathfrak {d}'}) \le \mathfrak {d}-\mathfrak {d}'\) since f has degree at most \(\mathfrak {d}\).

We know, by the inductive hypothesis, that for randomly chosen \(r_1,\ldots ,r_n \in \mathcal {E}\).

Now if \(f_{\mathfrak {d}'}(r_1,\ldots ,r_n) \ne 0\) then \(f(r_1,\ldots ,r_n,x_{n+1})\) is a nonzero univariate polynomial of degree \(\mathfrak {d}'\). So by the base case we have, for randomly chosen \(r_{n+1} \in \mathcal {E}\),

By Bayes’ Theorem, and some manipulation, we therefore have

\(\square \)

3 Subprotocols

In this section, we review the subprotocols that our new AVSS protocol will need. Here and throughout the rest of this paper, we assume a network of n parties \(P_1, \ldots , P_n\), of which at most \(t < n/3\) of them may be statically corrupted, and which are connected by secure point-to-point channels (providing both privacy and authentication). We also assume network communication is asynchronous.

3.1 Random Beacon

A random bacon is a protocol in which each party initiates the protocol and outputs a common value \(\omega \) that is effectively chosen at random from an output space \(\varOmega \). Such a protocol should satisfy the following security properties (informally stated):

- Correctness:

All honest parties that output a value output the same value \(\omega \).

- Privacy:

The adversary learns nothing about \(\omega \) until at least one honest party initiates the protocol.

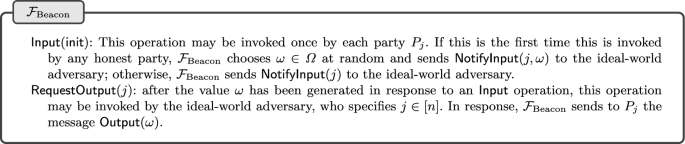

These security properties are best defined in terms of the ideal functionality \(\mathcal {F}_{\textrm{Beacon}}\), which is given in Fig. 2. Note that in \(\mathcal {F}_{\textrm{Beacon}}\), a party \(P_j\) initiates the protocol by explicitly supplying the input \(\textsf {init}\).

We also want such a protocol to satisfy the following completeness property: if all honest parties initiate the protocol, then eventually, all honest parties output a value. Just as in Sect. 2.3.1, “eventually” means if and when all messages sent between honest parties have been delivered.

While our main AVSS protocol relies on a random beacon, we will also give a simpler AVSS protocol (in Sect. 7) that does not need a random beacon at all, but instead is analyzed in the random oracle model. But even in our main AVSS protocol, we only need to run one instance of a random beacon protocol to distribute shares of a large batch of polynomials. As such, a random beacon can, in principle, be securely realized with any (not very efficient) protocol without impacting the overall amortized cost of the AVSS protocol (at least for a sufficiently large batch size).

3.1.1 Implementing a Random Beacon

One could implement a random beacon using a \((t+1)\)-out-of-n threshold BLS signature scheme [7, 9, 38]. Despite being based on “heavyweight” cryptography, this beacon may be efficient enough for use in our AVSS protocol as well as other applications. However, this beacon requires a hardness assumption that is not post-quantum secure, as well additional setup assumptions (including some kind of distributed key distribution step).

Very recently, [4] showed how to implement a random beacon using just “lightweight” cryptography and no setup assumptions (other than secure channels). Although the communication complexity of their HashRand protocol is super-linear, it is likely good enough for use in our AVSS protocol as well as other applications.

Consider a long-running system in which we will need an unlimited supply of random beacons. Suppose we prepare a sufficiently large initial batch I of beacons, using a protocol such as HashRand. Then we can in fact prepare an unlimited supply of beacons, with a linear amortized communication cost per beacon, as follows. We use the standard technique of having each party generate a batch of sharings of a random secret, agreeing on a set of such batches using a consensus protocol, and then adding up the batches in the set to obtain a batch of sharings of random secrets that are unknown to any party. In fact, we can obtain a linear number of such batches in one go by using well-known “batch randomness extraction” techniques (see [31]). The result of this step is one very large batch J of sharings of secrets that are unknown to any party. Each sharing in the batch J can now be used as a random beacon, by just opening the sharing when it is time to reveal the beacon. The construction of J requires an AVSS protocol and a consensus protocol. We could use our new AVSS protocol, which may or may not require a beacon (depending on the version used), and an efficient consensus protocol such as FIN [24], which definitely requires a beacon. With these protocols, so long as I is sufficiently large to run them, we can make J arbitrarily large in relation to I. So we can arrange that \(|J | \gg |I |\), and then partition J into two batches \(I'\) and \(J'\), where \(|I' | = |I |\). Then we can use the batch \(J'\) for applications and the batch \(I'\) to repeat the process again. The amortized communication complexity per sharing of our AVSS protocol is linear. Although we have to run it a linear number of times per beacon, by using the batch randomness extraction technique, the amortized communication complexity per beacon is still linear. Thus, except for an initial “bootstrapping phase”, we can prepare an unlimited supply of beacons with a linear amortized communication cost per beacon.

Note that while the technique in the previous paragraph allows to prepare batches of random beacons with a linear amortized communication cost per beacon, the communication cost to reveal one beacon is quadratic. There are batching techniques that allow one to reveal many such beacons at once at lower cost (see, for example, Section III of [19]), but it is not clear if this type of batching has many useful applications.

3.1.2 Extending the Output Space of a Random Beacon

Suppose we have a protocol \(\varPi \) that securely realizes a random beacon with output space \(\varOmega \). We can use \(\varPi \) to securely realize a random beacon with a larger output space. One way to do this is to run N instances of \(\varPi \) concurrently and concatenate the outputs. This immediately yields a protocol that securely realizes a random beacon with output space \(\varOmega ^N\).

Of course, the approach in the previous paragraph comes at a significant cost. A more practical approach is to use a cryptographic pseudorandom generator \(G: \varOmega \rightarrow \varOmega '\). If \(\varOmega \) is sufficiently large (so that \(1/|\varOmega |\) is negligible), and if we model G as a random oracle, then the protocol \(\varPi '\) that runs \(\varPi \) to obtain the output \(\omega \in \varOmega \) and then outputs \(\omega ' {:}{=}G(\omega ) \in \varOmega '\) securely realizes a random beacon with output space \(\varOmega '\). Indeed, in the random oracle model, a simulator that is given an output \(\omega '\in \varOmega '\) of the ideal functionality for the \(\varOmega '\)-beacon can generate \(\omega \in \varOmega \) at random and program the random oracle representing G so that \(G(\omega ) = \omega '\).

The above security proof relied heavily on the ability to program the random oracle representing G. If instead of modeling G as a random oracle, we just assume that G is a secure pseudorandom generator, then the above security proof falls apart, and in fact, protocol \(\varPi '\) will not securely realize a random beacon. However, the output of \(\varPi '\) will still have properties (such as unpredictability) that may be useful in certain applications. The typical setting where this works is one where the security analysis requires a certain failure event \(\mathcal {E}\) to occur with negligible probability for randomly chosen \(\omega '\in \varOmega '\), and the occurrence of \(\mathcal {E}\) can be detected efficiently as a function of \(\omega '\) and data that are available to the adversary prior to invoking protocol \(\varPi \).

3.2 Reliable Broadcast

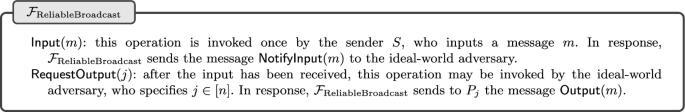

A reliable broadcast protocol allows a sender S to broadcast a single message m to \(P_1, \ldots , P_n\). Such a protocol should satisfy the following security property (informally stated):

- Correctness:

All honest parties that output a message must output the same message. Moreover, if the sender S is honest, that message is the one input by S.

This security property is best defined in terms of the ideal functionality \(\mathcal {F}_{\textrm{ReliableBroadcast}}\), which is given in Fig. 3.

We also want such a protocol to satisfy the following completeness property: if an honest sender S inputs a message or if any honest party outputs a message, then eventually, all honest parties output a message. As usual, “eventually” means if and when all messages sent between honest parties have been delivered.

3.2.1 Bracha Broadcast

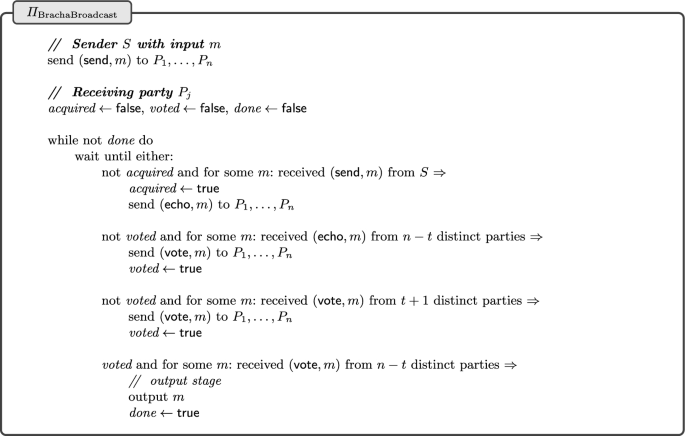

A simple reliable broadcast protocol is called Bracha Broadcast [11] and is given in Fig. 4. We express the logic for the sender as a separate process, even though the sending party is also one of the receiving parties. First note, that the communication complexity of Bracha broadcast is clearly \(O(n^2 \cdot |m |)\).

Since we will be using variants of Bracha broadcast later, we highlight some important properties of the echo/vote logic of this protocol. Suppose that there are \(t' \le t\) corrupt parties.

- Bracha Property B0:

-

If an honest party receives \(n-t\) votes for the same message, then all honest parties will eventually vote for some message.

Suppose an honest party receives \(n-t\) votes for the same message. Then at least \(n-2t \ge t+1\) honest parties must have voted for this message. Upon receipt of these \(t+1\) votes, each honest party will vote if they have not done so already.

- Bracha Property B1:

-

If an honest sender S inputs a message, then all honest parties will eventually vote for some message.

Eventually, all honest parties will echo the sender’s message, unless some honest party enters the output stage without doing so. In the former case, upon receipt of these echoes, each honest party will vote if they have not already done so. In the latter case, some honest party must have received \(n-t\) votes on the same message, and the property follows from Bracha Property B0.

- Bracha Property B2:

-

If an honest party votes for a message, then at least \(n-t-t'\) honest parties must have echoed the same message.

This is because the very first time any honest party votes for a given message it must be the case that this party received \(n-t\) echoes on that message from distinct parties, of which \(n-t-t'\) must be honest.

- Bracha Property B3:

-

If two honest parties vote for a message, they must vote for the same message.

This is because if two honest parties vote different messages, then by Bracha Property B2 we have one set of \(n-t-t'\) honest parties echoing one message, and a second, disjoint set of \(n-t-t'\) honest parties echoing a different message, which means \(2(n-t-t') \le n-t'\), which implies \(n \le 2t+t' \le 3t\).

The correctness property of Bracha broadcast easily follows from Bracha Properties B2 and B3 (B3 implies all honest parties must output the same message, and B2 implies that if the sender is honest, this message must be the one input by the sender). The completeness properties easily follows from Bracha Properties B0, B1, and B3. Since, B0 and B1 imply that if an honest sender inputs a message or an honest party outputs a message, then all honest parties eventually vote for a message, and B3 says they all vote for the same message, which implies all honest parties eventually output a message.

3.2.2 Compact Broadcast

The communication complexity of Bracha broadcast can be improved by the use of erasure codes. To this end, we need an \((n,n-2t)\) erasure code, which has the following properties: a message m can be efficiently encoded as a vector of n fragments \((f_1, \ldots , f_n)\) in such a way that m can be efficiently reconstructed (decoded) from any subset of \(n-2t\) fragments. An \((n, n-2t)\)-Reed-Solomon code can be used for this purpose, but other constructions are possible as well. In any reasonable construction, the size of each fragment will be about \(|m |/(n-2t)\).

We can use such an erasure code to build a reliable broadcast protocol with better communication complexity as follows. Given a long message m, the sender S encodes the message using an \((n, n-2t)\) erasure code, to obtain a vector of n fragments \((f_1, \ldots , f_n)\). Each fragment has size roughly \(|m |/(n-2t)\) — so assuming \(n > 3t\), the size of each fragment is at most roughly \(3 |m | / n\). The sender S then sends each fragment \(f_j\) to party \(P_j\), who then echoes that fragment to all other parties. Each party can then collect enough fragments to reconstruct m. To deal with dishonest parties, some extra steps must be taken.

This approach was initially considered in [21], who give a protocol with communication complexity \(O(n \cdot |m | + \lambda \cdot n^2 \cdot \log n )\). Here, \(\lambda \) is the output length of a collision-resistant hash function. The factor \(\log n\) arises from the use of Merkle trees. If \(|m | \gg \lambda \cdot n \cdot \log n\), this is essentially optimal—indeed, any reliable broadcast protocol must have communication complexity \(\varOmega (n \cdot |m |)\), since every party must receive m.

Recall that that a Merkle tree allows one party, say Charlie, to commit to a vector of values \((v_1, \ldots , v_k)\) using a collision resistant hash function by building a binary tree whose leaves are the hashes of \(v_1, \ldots , v_k\), and where each internal node of the tree is the hash of its (at most two) children. The root r of the tree is the commitment. Charlie may “open” the commitment at an index \(i \in [k]\) by revealing \(v_i\) along with a “validation path” \(\pi _i\), which consists of the siblings of all nodes along the path in the tree from the hash of \(v_i\) to the root r. We call \(\pi _i\) a validation path for \(v_i\) under r at i. Such a validation path is checked by recomputing the nodes along the corresponding path in the tree, and testing that the recomputed root is equal to the given commitment r. The collision resistance of the hash function ensures that Charlie cannot open the commitment to two different values at a given index.

We give here the details of a reliable broadcast protocol that is based on erasure codes and Merkle trees, and which achieves the same communication complexity as that in [21]. This protocol is similar to that presented in [21], but is a bit simpler and also bears some resemblance to a related protocol in the DispersedLedger system [40]. Our reasons for presenting this protocol are threefold: first, to make this paper more self contained; second, because this protocol has somewhat better communication complexity than the one in [21]; and third, because later in this paper, we will modify this protocol to achieve other goals. We call this reliable broadcast protocol \(\varPi _{\textrm{CompactBroadcast}}\) and it is given in Fig. 5.

The reader may observe that \(\varPi _{\textrm{CompactBroadcast}}\) has essentially the same structure as Bracha’s reliable broadcast protocol, where the message being broadcast is the root of a Merkle tree. The correctness property of \(\varPi _{\textrm{CompactBroadcast}}\) follows from Bracha Properties B2 and B3, and the collision resistance of the hash function used for building the Merkle trees. The completeness property of \(\varPi _{\textrm{CompactBroadcast}}\) follows from Bracha Properties B0, B1, B2 and B3. Specifically, Bracha Property B2 in this context ensures that in \(\varPi _{\textrm{CompactBroadcast}}\), if any honest party votes for a root r, then at least \(n-2t\) honest parties must have echoed r along with a corresponding validation path and fragment, so that all honest parties will eventually be able to reconstruct a message from these \(n-2t\) fragments in the output stage.

3.2.3 Other Reliable Broadcast Protocols

For somewhat shorter messages, a protocol such as that in [25] may be used, which achieves a communication complexity of \(O(n \cdot |m | + \lambda \cdot n^2 )\). The protocol \(\varPi _{\textrm{CompactBroadcast}}\) above uses only an erasure code, while the protocol in [25] requires an “online” error correcting code, which may be more computationally expensive than erasure codes. Another potential advantage of \(\varPi _{\textrm{CompactBroadcast}}\) over the protocol in [25] is that the former has a very balanced communication pattern, which can be important to prevent a communication bandwidth bottlenecks. The paper [26] improves on [25], obtaining the same communication complexity, but with a balanced communication pattern.

3.2.4 Relation to AVID

The design of \(\varPi _{\textrm{CompactBroadcast}}\) is based on the notion of Asynchronous Verifiable Information Dispersal, or AVID. In an AVID protocol, a sender S wants to send a message m to some or possibly all of the parties \(P_1, \ldots ,P_n\). There are two phases to such a protocol: the dispersal phase, where S disperses m (or fragments of m) among \(P_1, \ldots ,P_n\), and the retrieval phase, where individual \(P_j\)’s may retrieve m. The correctness property for such a protocol is essentially the same as that of a reliable broadcast protocol:

All honest parties that output a message in the retrieval phase output the same message. Moreover, if S is honest, that message is m.

The completeness property has two parts. First, in the dispersal phase:

If an honest sender S inputs a message or if one honest party completes the dispersal phase, then every honest party eventually completes the dispersal phase.

Second, in the retrieval phase:

If the dispersal phase has completed (for some honest parties), and the retrieval phase for an honest party \(P_j\) is initiated, then \(P_j\) eventually outputs a message.

Note that one can use any AVID protocol to implement reliable broadcast, by first dispersing the message and then having every party retrieve the message.

The point of using an AVID protocol is that in situations where only a small number of parties need to retrieve the message, communication complexity can be much lower than in a reliable broadcast protocol. For example, the dispersal phase of the AVID protocol in the DispersedLedger system [40] is very similar to our protocol \(\varPi _{\textrm{CompactBroadcast}}\), except that the echo messages in the former do not include the validation paths and fragments—rather, these data are only disseminated to those parties that actually need to retrieve the message. The resulting AVID protocol thus has a communication complexity of \(O(|m | + \lambda \cdot n^2)\) in the dispersal phase and \(O(|m | + \lambda \cdot n \cdot \log n)\) per retrieval.

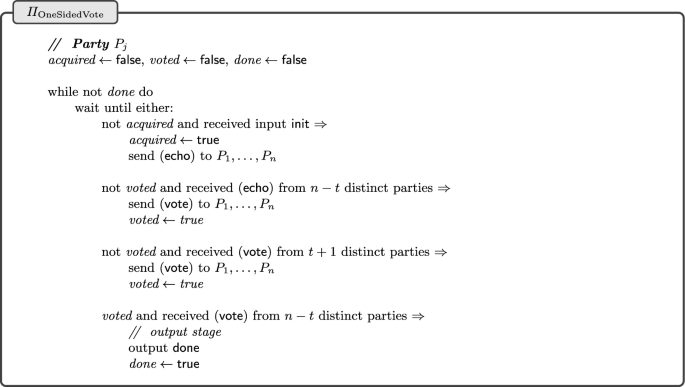

3.2.5 One-Sided Voting

A degenerate version of Bracha broadcast can be used as a simple one-sided voting protocol, see Fig. 6. In this protocol, each party may initiate the protocol and may output the value done. The key security property of this protocol (informally stated) is as follows:

- Correctness:

If any honest party outputs \(\textsf {done}\), then at least \(n-t-t'\) honest parties initiated the protocol, where \(t' \le t\) is the number of corrupt parties.

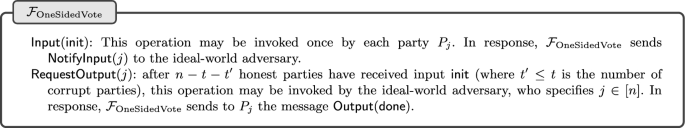

This security property is best defined in terms of the ideal functionality \(\mathcal {F}_{\textrm{OneSidedVote}}\), which is given in Fig. 7. Note that in \(\mathcal {F}_{\textrm{OneSidedVote}}\), a party \(P_j\) initiates the protocol by explicitly supplying the input \(\textsf {init}\).

This protocol also satisfies the following completeness property: if all honest parties initiate the protocol or some honest party outputs \(\textsf {done}\), then eventually, all honest parties output done. As usual, “eventually” means if and when all messages sent between honest parties have been delivered.

The correctness property follows from the analog of Bracha Property B2. The completeness property follows from the analogs of Bracha Properties B0 and B1.

3.3 Secure Message Distribution

We require a new type of protocol, which we call a secure message distribution protocol. Such a protocol enables a sender S to securely distribute a vector \(\textbf{m}= (m_1, \ldots , m_n)\) of messages, so that during an initial distribution phase, each party \(P_j\) outputs \(m_j\). After receiving its own message \(m_j\), party \(P_j\) may optionally forward this message to another party. Moreover, after receiving any message \(m_u\) (either its own or one forwarded to it), party \(P_j\) may optionally forward \(m_u\) to another party. This forwarding functionality will be needed to deal with the “unhappy” path of our AVSS protocol.

Such a protocol should satisfy the following security properties (informally stated):

- Correctness:

If any honest parties produce an output in the distribution or forwarding phases, those messages must be consistent with a message vector \(\textbf{m}=(m_1,\ldots ,m_n)\)—that is, the message output by honest \(P_j\) during the distribution phase must be \(m_j\), and any message output by some honest party during the forwarding phase as ostensibly belonging to to some party \(P_{u}\) must be \(m_{u}\). Moreover, if the sender S is honest, \(\textbf{m}\) must be the same message vector input by S.

- Privacy:

If the sender S and party \(P_{u}\) are honest, and no honest party forwards \(m_{u}\) to a corrupt party, then the adversary learns nothing about \(m_{u}\).

Note that whenever a party outputs a message (in either the distribution or forwarding phase), that message may be \(\bot \), which can only happen if the sender is corrupt.

It will also be convenient for us to allow a party to include an identifying tag along with the forwarded message.

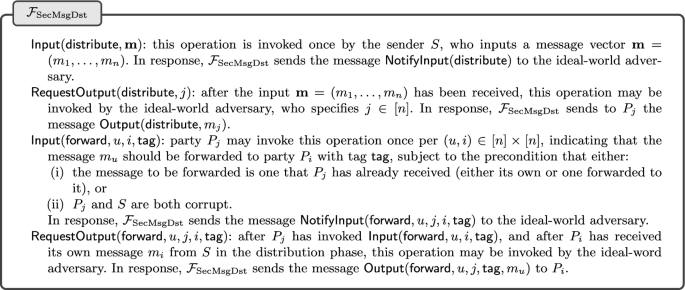

We may more precisely formulate the security properties for secure message distribution as the ideal functionality \(\mathcal {F}_{\textrm{SecMsgDst}}\), which is given in Fig. 8. We note that \(\mathcal {F}_{\textrm{SecMsgDst}}\) also captures an input extractability property that intuitively means that a corrupt sender S must explicitly commit to a vector of all input messages before any honest party outputs its own message in the distribution phase (or any forwarded message for that matter). This is a property that will be essential in the security analysis of our AVSS protocol.

We bring to the reader’s attention the logic in the ideal functionality \(\mathcal {F}_{\textrm{SecMsgDst}}\) for processing a request by a party \(P_j\) to forward a message \(m_u\) to another party. The logic enforces a precondition that requires that either (i) the message to be forwarded is one that \(P_j\) has already received (either its own or one forwarded to it), or (ii) \(P_j\) and S are both corrupt. In any implementation, an honest party will always simply ignore an input that requests it to forward a message it does not have. If we did not have such a precondition, an ideal-world adversary could trivially circumvent the privacy property by having a corrupt \(P_j\) simply ask the ideal functionality to forward a message \(m_u\) belonging to an honest party \(P_u\) to itself or to some other corrupt party.

In fact, \(\mathcal {F}_{\textrm{SecMsgDst}}\) is a bit stronger than we need, in the sense that we may assume that when the sender S is honest, no honest \(P_j\) will forward its message \(m_j\) to any other party. As we will see, this constraint will be satisfied by our AVSS protocol. In the UC framework, this can be captured by only considering restricted environments that satisfy this constraint. We will give an efficient protocol that securely realizes \(\mathcal {F}_{\textrm{SecMsgDst}}\) with respect to such constrained environments. As we will also see, in the random oracle model, essentially the same protocol is secure even without this constraint.

We also want a secure message distribution protocol to satisfy the following completeness property, which has two parts. First, in the distribution phase:

If an honest sender S inputs a vector of messages, or if one honest party outputs a message in the distribution phase, then eventually, all honest parties output a message in the distribution phase.

Second, in the forwarding phase:

If an honest party \(P_j\) forwards a message \(m_u\) to an honest party \(P_i\), then eventually, \(P_i\) receives \(m_u\).

As usual, “eventually” means if and when all messages sent between honest parties have been delivered.

In Sect. 4 we give a secure message distribution protocol that is built from “lightweight” cryptographic primitives, specifically, semantically secure symmetric key encryption and a hash function. The hash function needs to be collision resistant and to also satisfy a kind of related-key indistinguishability assumption (see Sect. 4.2 for more details). The communication complexity of the distribution phase of our protocol is \(O(|\textbf{m} | + \lambda \cdot n^2\cdot \log n )\). If an honest party forwards a message \(m_{u}\) to another party, this adds \(O(|m_{u} | + \lambda \cdot n\cdot \log n )\) to the communication complexity.

In our application to AVSS, we will only use the forwarding mechanism on the “unhappy path”, in which a corrupt sender provably misbehaves. In particular, unless we are on the “unhappy path”, the forwarding mechanism will not contribute to the communication complexity at all.

4 Building Secure Message Distribution

In this section we show how to implement the secure message distribution functionality from Sect. 3.3. Note that [39] and [30] show how to implement this type of functionality using “heavyweight” cryptographic primitives based on discrete logarithms. In particular, [30] rigorously defines a particular multi-encryption primitive with an appropriate notion of chosen ciphertext security and a verifiable decryption protocol and presents practical constructions that are provably secure in the random oracle model.

While such constructions may well yield acceptable performance in practice, we show here that one can implement this functionality using only “lightweight” cryptographic primitives. The resulting protocols are certainly more efficient than those based on discrete logarithms and also have the advantage of providing post-quantum security.

4.1 Reliable Message Distribution

We start out by considering the simpler notion of a reliable message distribution protocol, which satisfies all the properties of a secure message secure message distribution protocol except privacy.

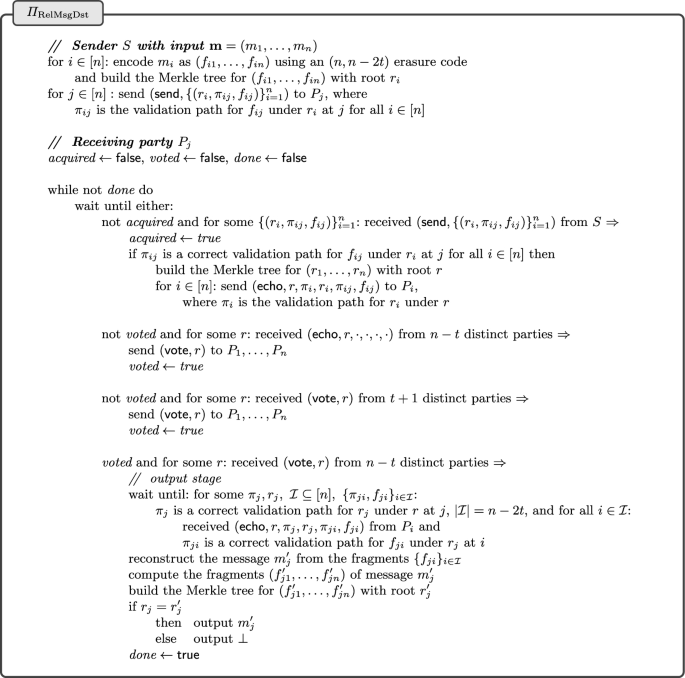

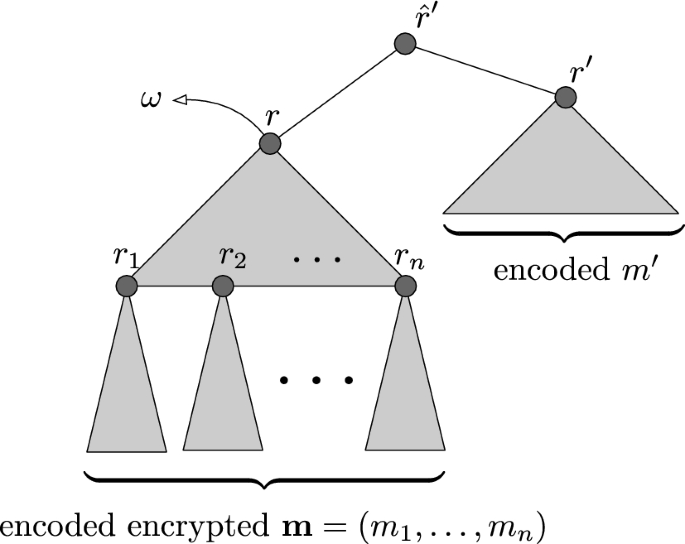

We implement this using a variant of the reliable broadcast protocol \(\varPi _{\textrm{CompactBroadcast}}\) in Sect. 3.2. In the distribution phase, the sender S starts with a vector of messages \(\textbf{m}=(m_1,\ldots ,m_n)\); it encodes each \(m_i\) as a vector of fragments \((f_{i1}, \ldots ,f_{i n})\) and then builds a Merkle tree for \((f_{i1}, \ldots ,f_{i n})\) with root \(r_i\); it then sends each \(P_j\) the collection of values \(\{ (r_i, \pi _{ij}, f_{ij}) \}_{i=1}^n\), where each \(\pi _{ij}\) is the validation path for \(f_{ij}\) under \(r_i\) at j. Thus, each \(P_j\) receives the jth fragment of all n messages. An echo message from \(P_j\) to \(P_i\) now includes \(r, \pi _i, r_i, \pi _{ij}, f_{ij}\), where r is the root of the Merkle tree built from \((r_1,\ldots ,r_n)\) and \(\pi _i\) is the validation path for \(r_i\) under r at i. The vote messages include just the root r. Once party \(P_j\) collects sufficiently many vote messages for a root r, it enters the output stage, and waits to collects sufficiently many echo messages that contain valid fragments of the jth message from which it can reconstruct that message. Details of the complete distribution phase are in Fig. 9.

When \(P_j\) completes the distribution phase of the protocol, it can optionally forward \(m_j\) to another party Q by sending to Q the values it obtained in the last step of the distribution phase, specifically, the values \(\pi _j, r_j\) along with the collection of \(n-2t\) values \(\{ \pi _{ji}, f_{ji} \}_i\). Party Q, who we assume has also completed the distribution phase, can validate this information and compute the message using the same logic used by \(P_j\). This validation consists of two parts:

-

In the first part, Q checks that the validation paths are correct with respect to the root r acquired when entering the output stage; if this check fails, the forwarding subprotocol fails and no output is delivered.

-

In the second part, Q reconstructs the fragments and their Merkle tree, and compares the Merkle tree roots. If this check fails, the forwarding subprotocol outputs \(\bot \); otherwise, it outputs the message.

The first part detects if the party forwarding the message is misbehaving, while the second detects if the sender S was misbehaving.

The same logic above can obviously be adapted to allow a party to forward any message that it has received, either its own or one that was forwarded to it.

4.1.1 Correctness and Completeness

The correctness and completeness properties for protocol \(\varPi _{\textrm{RelMsgDst}}\) follow from essentially the same argument used in Sect. 3.2.2 for the corresponding properties for protocol \(\varPi _{\textrm{CompactBroadcast}}\). Protocol \(\varPi _{\textrm{RelMsgDst}}\) also satisfies the input extractability property, which follows from the same argument used in Sect. 3.2.2 for the completeness property for protocol \(\varPi _{\textrm{CompactBroadcast}}\). Specifically, Bracha Property B2 ensures that when one honest party reaches the output stage in \(\varPi _{\textrm{RelMsgDst}}\), at least \(n-2t\) honest parties have echoed validation paths and fragments for all input messages \(m_1, \ldots , m_n\). Therefore, assuming collision resistance for the hash function used for building Merkle trees, all these messages are fully determined at this time.

4.1.2 Communication Complexity

The communication complexity of the distribution phase is \(O(|\textbf{m} | + \lambda \cdot n^2\cdot \log n )\). If an honest party forwards a message \(m_{u}\) to another party, this adds \(O(|m_{u} | + \lambda \cdot n\cdot \log n )\) to the communication complexity.

Note that when a corrupt party forwards a message to an honest party, this does not contribute anything to the communication complexity. This property will be important when we analyze the communication complexity of our AVSS protocol on the “happy path”. The reason this type of forwarding does not contribute anything is that in defining communication complexity, we count the number of bits sent by all honest parties to all parties. One might argue that this is a theoretical distinction, and that in practice, one should count all bits sent and received by honest parties. However, such a definition of communication complexity is unworkable, as it cannot be upper bounded at all—corrupt parties may “spam” their honest peers with an unbounded amount of data. In practice, honest parties would likely attempt to distribute their download bandwidth equitably among all of its peers and employ some kind of “spam prevention” strategy to protect itself against peers who try to monopolize its download bandwidth.

4.1.3 Relation to AVID

In Sect. 3.2.4 we briefly recalled the notion of an AVID protocol. In fact, the design of our protocol \(\varPi _{\textrm{RelMsgDst}}\) is inspired by the AVID protocol in the DispersedLedger system [40]. In principle, one could build a reliable message distribution protocol simply by running n instances of an AVID protocol concurrently, one for each input message \(m_j\): the retrieval mechanism of the AVID protocol could be used both to deliver \(m_j\) to \(P_j\) and to optionally forward \(m_j\) to other parties. So we could have simply implemented this generic strategy using the AVID protocol in [40]. There are several reasons we did not do this:

-

This generic strategy, instantiated with DispersedLedger’s AVID protocol, would result in a reliable message distribution protocol whose communication complexity in the distribution phase is \(O(|\textbf{m} | + \lambda \cdot n^3)\) rather than \(O(|\textbf{m} | + \lambda \cdot n^2\cdot \log n )\). Note that the same communication complexity would result if we instantiated with the AVID protocol in [26].

-

This generic strategy, instantiated with DispersedLedger’s AVID protocol, would result in a reliable message distribution protocol where the number of rounds of communication in the distribution phase was 4 rather than 3.

-

In this generic strategy, where we use the retrieval mechanism of AVID to implement the forwarding mechanism of reliable message distribution, we run into a subtle problem regarding communication complexity. As noted above, in our reliable message distribution protocol, when a corrupt party forwards its message to an honest party, this does not contribute anything to the communication complexity. However, if we use the retrieval mechanism of AVID for message forwarding, when a corrupt party attempts to forward its message to an honest party, all honest parties must participate in the protocol, which contributes to the communication complexity. So in this case, some additional mechanism would be required to prevent or at least detect misusing the forwarding mechanism.

In addition to the above, we wanted to present a concrete reliable message distribution protocol which we could then easily modify to add data privacy and so obtain a secure message distribution protocol.

4.2 Secure Key Distribution

The above reliable message distribution protocol does not provide any data privacy. This can be remedied by augmenting it with a protocol for secure key distribution and then using these secret keys to encrypt the messages and using \(\varPi _{\textrm{RelMsgDst}}\) to distribute the resulting ciphertexts.

We sketch briefly here the properties that a secure key distribution protocol should satisfy and how to build one, and then below in Sect. 4.3, we show in how to integrate this protocol into our protocol \(\varPi _{\textrm{SecMsgDst}}\) to obtain a secure message distribution protocol.

In a secure message distribution protocol, the goal is to have the sender S distribute a vector of keys \(\varvec{k} = (k_1, \ldots , k_n)\), so that each party \(P_j\) obtains \(k_j\). Here, if the sender S is honest, the keys \(k_1, \ldots , k_n\) are not input by the sender, but rather are generated by the protocol itself, and the sender obtains the vector \(\varvec{k}\) as an output of the protocol; however, a corrupt sender S may effectively choose and input an arbitrary vector \(\varvec{k}\). In addition, just like for reliable message distribution, the protocol should allow a party to forward a key that it has received to another party. Such a protocol should satisfy analogous correctness and completeness (as well as input extractability) properties. In addition, the following property should hold:

- Privacy:

If the sender S and party \(P_{u}\) are honest, and no honest party forwards \(k_{u}\) to a corrupt party, then the adversary learns nothing about \(k_{u}\).

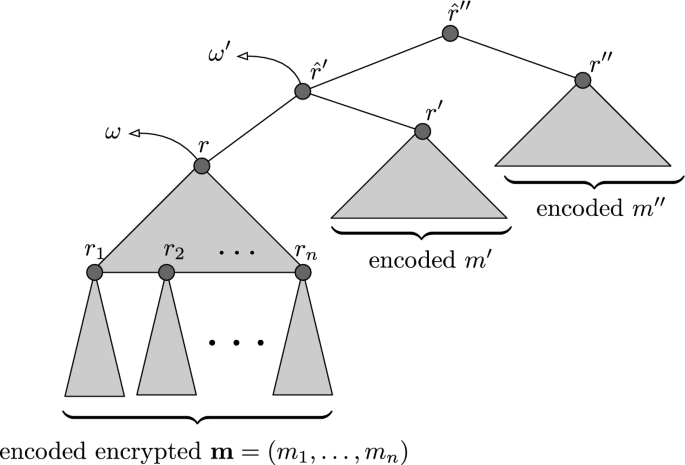

To implement such a scheme, which we call \(\varPi _{\textrm{SecKeyDst}}\), we modify the reliable message distribution protocol \(\varPi _{\textrm{RelMsgDst}}\) so that instead of encoding a key using an erasure code, we share it using Shamir secret sharing. In more detail, let \(H: [0..n] \times F \rightarrow \mathcal {K}\) be a cryptographic hash function, where F is a large finite field and \(\mathcal {K}\) is the key space. Let \((\eta _0, \eta _1, \ldots , \eta _n)\) be fixed sequence of distinct elements in F.Footnote 3

The sender proceeds as follows. For each \(i\in [n]\), the sender chooses a random polynomial \(\theta _i \in F_{< n-2t}\). Let \(s_{ij} {:}{=}\theta _i(\eta _j)\) for \(j\in [0..n]\). The key \(k_i\) is defined as \(k_i {:}{=}H(0,s_{i0})\). The sender builds a Merkle tree from \((\ (H(1,s_{i1}), \ldots , H(n,s_{in}) \ )\) with root \(r_i\). and sends to each \(P_j\) the collection of values \(\{ (r_i, \pi _{ij}, s_{ij}) \}_{i=1}^n\), where \(\pi _i\) is a validation path for \(H(j, s_{ij})\) under \(r_i\) at j.

Upon receiving such a message from S, and validating it is of the correct form, each party \(P_j\) then builds a Merkle tree for \((r_1, \ldots , r_n)\) with root r and echoes to each \(P_i\) the tuple \((r, \pi _i, r_i, \pi _{ij}, s_{ij})\), where \(\pi _i\) is a validation path for \(r_i\) under r. The voting logic works just as before. In the output stage, \(P_j\) waits for valid echo messages from a set of \(n-2t\) distinct parties \(P_i\), and then reconstructs a polynomial \(\theta '_j \in F[x]_{< n-2t}\) via polynomial interpolation from \(\{ s_{ij} \}_{i}\). It then builds a Merkle tree from \((\ H(1,\theta '_j(\eta _1)), \ldots , H(n,\theta _j(\eta _n)) \ )\) with root \(r'_j\). If \(r_j = r\), it outputs the key \(H(0, \theta '_j(0))\), and otherwise outputs \(\bot \).

When \(P_j\) completes the distribution phase of the protocol, it can optionally forward \(k_j\) to another party Q by sending to Q the values it obtained in the last step of the distribution phase, specifically, the values \(\pi _j, r_j\) along with the collection of \(n-2t\) values \(\{ \pi _{ji}, s_{ji} \}_i\). Party Q who can validate this information and compute \(k_j\) using the same logic used by \(P_j\). This strategy can obviously be adapted to allow a party to forward any key that it has received, either its own or one that was forwarded to it.

We note that \(\varPi _{\textrm{SecKeyDst}}\) has some rough similarities to the asynchronous weak VSS protocol in [23], but the goals and a number of details are quite different.

4.2.1 Correctness and Completeness

One can easily adapt the analysis of \(\varPi _{\textrm{RelMsgDst}}\) to show that \(\varPi _{\textrm{SecKeyDst}}\) satisfies the correctness and completeness (as well as input extractability) properties, assuming the hash function used to implement the Merkle trees, as well as H, are collision resistant.

4.2.2 Proving Privacy Under the Linear Hiding Assumption

To prove the privacy property for \(\varPi _{\textrm{SecKeyDst}}\), we make the following assumption on the hash function \(H: [0..n] \times F \rightarrow \mathcal {K}\), which we call the linear hiding assumption. This is a kind of indistinguishability assumption under a “related key attack”.

This assumption is defined by a game in which the adversary first chooses a collection of pairs \(\{ (a_i, b_i ) \}_{i \in \mathcal {I}}\), where \(\mathcal {I} \subseteq [0..n]\) and each \(a_i\) is nonzero. The task of the adversary is to distinguish the distribution

where \(s \in F\) is randomly chosen, from the uniform distribution on \(\mathcal {K}^\mathcal {I}\). The assumption states that no computationally bounded adversary can effectively distinguish these two distributions.

This assumption is certainly true in the random oracle model, assuming \(1/|F |\) is negligible. Indeed, if we model H as a random oracle, the best the adversary can do is evaluate H at many points \((i, s^*)\) for \(i \in \mathcal {I}\) and \(s^* \in F\), and hope that \(s^* = a_i \cdot s + b_i\). So it seems a reasonable assumption.

We can use this assumption to prove the privacy of \(\varPi _{\textrm{SecKeyDst}}\) as follows. Assume the sender S is honest and consider any one honest party. For this party, the sender chooses a random polynomial \(\theta \in F[x]_{< n-2t}\) and computes \(s_{j} {:}{=}\theta (\eta _j)\) for \(j\in [0..n]\). Let \(\mathcal {C}\) be the set of corrupt parties, which we are assuming is of cardinality \(\le t < n-2t\), and let \(\mathcal {H}{:}{=}[n] {\setminus } \mathcal {C}\) be the set of honest parties. During the execution of the protocol, the adversary learns \(s_{j}\) for \(j \in \mathcal {C}\). The only other information about the polynomial \(\theta \) that the adversary learns is derived as a function of \(H(i, s_{i})\) for \(i \in \mathcal {H}\). We want to argue that given this information, the adversary cannot distinguish the actual key \(H(0, s_{0})\) from a random key, under the linear hiding assumption.

Without loss of generality, we may give the adversary even more information, namely let \(\mathcal {C}' \subseteq [n]\) be an arbitrary set of size exactly \(n-2t-1\) containing \(\mathcal {C}\), and let us assume that the adversary is given \(s_{j}\) for \(j \in \mathcal {C}'\) and \(H(i, s_{i})\) for \(i \in \mathcal {H}' {:}{=}[n] {\setminus } \mathcal {C}'\). By Lagrange interpolation, for each \(i \in \mathcal {H}'\), there exist nonzero constants \(\{ \lambda _{ij} \}_{j \in \mathcal {C}' \cup \{0\}}\) in the field F such that

The indistinguishability of \(H(0, s_0)\) from random follows directly from the linear hiding assumption, where in the attack game for that assumption, we use the adversarially chosen pairs

where

That proves the privacy property of a single honest party’s key. The proof can easily be extended to cover all honest parties’ keys by a standard “hybrid” argument.

We note that the indistinguishability property for keys, and the fact that the key space itself must be large (as we are assuming the key space is the output space of a collision-resistant hash), implies that keys are unpredictable.

4.2.3 Domain Separation Strategies for H

Our construction uses the simple “domain separation” strategy for H, where we include both j and \(s_{ij}\) in the input to H. The inclusion of j is not strictly necessary, but it yields a simpler and quantitatively better security analysis in the random oracle model. In fact, if we include i as well in the input, we would obtain an even better concrete security bound (avoiding the “hybrid” argument mentioned above). Moreover, as a practical matter, one should include even more contextual information as an input to H that identifies the individual instance of the protocol, including the identity of the sender. This is not only good security practice, but will also yield better concrete security bounds for a system in which many instances of the protocol are run.

4.2.4 Communication Complexity

Assuming individual keys are of size \(O(\lambda )\), the communication complexity of the distribution phase is \(O(\lambda \cdot n^2\cdot \log n )\). If an honest party forwards a key to another party, this adds \(O(\lambda \cdot n\cdot \log n )\) to the communication complexity. Just as in Sect. 4.1.2, then a corrupt party forwards its key to an honest party, this does not contribute anything to the communication complexity.

4.3 A Secure Message Distribution Protocol