Abstract

In this paper, we have developed a novel approach for deriving shrinkage estimators of means without assuming normality. Our method is based on the equation of the first-order condition with a logarithmic penalty, and it introduces both one-step and two-step shrinkage estimators. The one-step estimator closely resembles the James–Stein estimator, while the differentiable two-step estimator exhibits similar performance to the positive-part Stein estimator. Although the latter does not satisfy Baranchik’s conditions, both estimators can be demonstrated to be minimax under normality assumptions. Furthermore, we have extended this method to handle cases involving an unknown scale. We have successfully applied this approach to the simultaneous estimation of Poisson means.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the simultaneous estimation of means of multivariate normal distributions, the so-called Stein problem has been studied in the literature since Stein (1956) established the inadmissibility of the maximum likelihood estimator when the dimension of means is larger than or equal to three. Especially, James and Stein (1961) provided a simple and concrete shrinkage estimator which improves on the maximum likelihood estimator, namely a minimax shrinkage estimator under the squared error loss. Then, a lot of minimax shrinkage estimators have been suggested for various models. For good account of this topic, see Stein (1981), Berger (1985), Brandwein and Strawderman (1990), Robert (2007) and Fourdrinier et al. (2018).

In particular, Efron and Morris (1972a, b) showed that the James–Stein estimator can be derived as an empirical Bayes estimator, and Strawderman (1971) discovered prior distributions which yield Bayes and minimax estimators. Strawderman’s estimator is an ultimate goal in the framework of decision theory, because it is admissible and minimax. Since then, Bayesian minimax estimation has been studied actively and extensively.

To explain the details, we consider random variable \({\varvec{X}}=(X_1, \ldots , X_p)^\top \) having a p-variate normal distribution \(\mathcal {N}_p({\varvec{\theta }}, {\varvec{I}})\) for \({\varvec{\theta }}=({\theta }_1, \ldots , {\theta }_p)^\top \), where estimator \(\widehat{{\varvec{\theta }}}=(\hat{{\theta }}_1, \ldots , \hat{{\theta }}_p)^\top \) of \({\varvec{\theta }}\) is evaluated by the risk relative to the squared error loss \(\Vert \widehat{{\varvec{\theta }}}-{\varvec{\theta }}\Vert ^2=\sum _{i=1}^p (\hat{{\theta }}_i-{\theta }_i)^2\). The James–Stein estimator is

which is improved on by the positive-part Stein estimator

Both estimators are minimax for \(p\ge 3\), but inadmissible. An admissible and minimax estimator is the generalized Bayes estimator against the shrinkage prior \(\Vert {\varvec{\theta }}\Vert ^{2-p}\), given by

for

Kubokawa (1991, 1994) showed that this Bayes estimator improves on the James–Stein estimator.

The joint density of \(({\varvec{X}}, {\varvec{\theta }})\) for the shrinkage prior \(\Vert {\varvec{\theta }}\Vert ^{2-p}\) is

Bayes estimators in the literature often arises as posterior means, but in this paper, we try to handle modes of posterior distributions. Since the log likelihood of the joint density is proportional to

This expression motivates us to consider the posterior mode, namely the minimization of

In linear regression models, ridge regression and lasso regression are regularized least squares methods with \(L_2\) and \(L_1\) penalties. These methods do not assume normality as the underlying distributions. Similarly, the minimization problem of (1.4) is set up without assuming normality. However, it may be hard to find the optimal solution of the minimization. Instead of that, in this paper, we utilize the equation of the first-order condition (FOC), and based on the FOC equation, we suggest shrinkage estimators.

The paper is organized as follows. In Sect. 2, we consider the estimation of means with known scale parameter, and set up the minimization problem with the logarithmic penalty. Using the optimal relation, we propose two kinds of shrinkage estimators, called the one-step shrinkage and the two-step shrinkage estimators. The one-step shrinkage estimator resembles the James–Stein estimator, and the two-step shrinkage estimator is differentiable and performs similarly to the positive-part Stein estimator. It is noted that the two-step shrinkage estimator does not belong to Baranchik’s class of minimax estimators, so that we need to evaluate the risk function directly. It can be shown that both estimators are minimax under normality. The estimators are numerically compared and it is revealed that the two-step shrinkage estimator has a good performance for non-normal distributions. The extension to the case of unknown scale is given in Sect. 3. In Sect. 4, we study the simultaneous estimation of means of discrete distributions. Two kinds of shrinkage estimators are derived and their minimaxity is established. In Sect. 5, we give remarks on extensions to estimation of matrix means and linear regression models. For numerical computations in this paper, we used the ox program by Doornik (2007).

2 Shrinkage estimators under the logarithmic penalties

Let \(X_1, \ldots , X_p\) be observable random variables generated from the model

where \({\varepsilon }_1, \ldots , {\varepsilon }_p\) are independently distributed with \(\textrm{E}[{\varepsilon }_i]=0\) and common finite variance. Let \({\varvec{X}}=(X_1, \ldots , X_p)^\top \) and \({\varvec{\theta }}=({\theta }_1, \ldots , {\theta }_p)^\top \). Estimator \(\widehat{{\varvec{\theta }}}\) of \({\varvec{\theta }}\) is evaluated in terms of the risk relative to the quadratic loss \(\Vert \widehat{{\varvec{\theta }}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2\). The estimator \({\varvec{X}}\) is the optimal solution of \(\Vert {\varvec{X}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2\), and the solutions of \(\Vert {\varvec{X}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2+{\lambda }\sum _{i=1}^p |{\theta }_i|\) and \(\Vert {\varvec{X}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2+{\lambda }\Vert {\varvec{\theta }}\Vert ^2\) with \(L_1\) and \(L_2\) penalties for positive \({\lambda }\) give shrinkage estimators. Those penalized procedures correspond to the ridge estimator and the lasso in the framework of linear regression models.

In this paper, we consider deriving estimators of \({\varvec{\theta }}\) through the minimization

for known positive constant c suitably chosen later. Since

the first-order condition (FOC) satisfies the equation

The optimal solution may be given by solving the FOC equation numerically. Instead of that, we want to provide estimators with closed forms by using the FOC equation. A simple method is to replace \(\Vert {\varvec{\theta }}\Vert ^2\) in RHS of (2.2) with \(\Vert {\varvec{X}}\Vert ^2\). Then we have

which we call the one-step shrinkage estimator. This type of shrinkage estimators were first treated by Stein (1956).

We next consider to use the FOC equation (2.2) twice. From (2.2), we have

Again substituting this quantity into (2.2) yields

Replacing \(\Vert {\varvec{\theta }}\Vert ^2\) with \(\Vert {\varvec{X}}\Vert ^2\), we have

which we call the two-step shrinkage estimator.

We here look into the two-step shrinkage estimator, which is rewritten as \(\widehat{{\varvec{\theta }}}^\textrm{TS}=\{1-\phi _\textrm{TS}(W)/W\}{\varvec{X}}\) for \(W=\Vert {\varvec{X}}\Vert ^2/{\sigma }^2\), where

The derivative of \(\phi _\textrm{TS}(w)\) is

Let \(w_0\) be the solution of \(\phi _\textrm{TS}'(w)=0\) or \((1+c/w)^3=2\). The solution \(w_0\) satisfies that \(c/w_0=2^{1/3}-1\) or \(w_0=c/(2^{1/3}-1)\), and the maximum value of \(\phi _\textrm{TS}(w)\) is given by

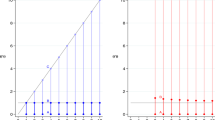

Thus, \(\phi _\textrm{TS}(w)\) is increasing for \(0\le w\le w_0\), and decreasing for \(w>w_0\), which implies that \(\phi _\textrm{TS}(w)\le \phi _\textrm{TS}(w_0)\) for any \(w\ge 0\). The function \(\phi _\textrm{TS}(w)\) is illustrated in Fig. 1.

An appropriate choice of c is \(c=p-2\), because both estimators \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) with \(c=p-2\) are minimax under the normal distribution as shown below. When \(c=p-2\), \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) resembles the James–Stein estimator \(\widehat{{\varvec{\theta }}}^\textrm{JS}\) in (1.1), while \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is close to the positive-part Stein estimator \(\widehat{{\varvec{\theta }}}^\textrm{PS}\) in (1.2). Although \(\widehat{{\varvec{\theta }}}^\textrm{PS}\) is not differentiable at some point, \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is differentiable. We compare the corresponding shrinkage coefficients of \({\varvec{X}}\) in \(\widehat{{\varvec{\theta }}}^\textrm{PS}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\). Let

for \(c=p-2\). These values for some small w’s are given in Table 2. For \(w\le 1.5\), \(\widehat{{\varvec{\theta }}}^\textrm{PS}\) is more shrunken than \(\widehat{{\varvec{\theta }}}^\textrm{TS}\), but less shrunken for \(w\ge 2\). Numerical investigations given below demonstrate that \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is more efficient than \(\widehat{{\varvec{\theta }}}^\textrm{PS}\) for small \(\Vert {\varvec{\theta }}\Vert ^2\).

It is noted that normal distributions are not used in the derivation of \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\). Under the normality, we can investigate minimaxity of these estimators. All the equivariant estimators under orthogonal transformations are of the form

for function \(\phi (\cdot )\). When estimator \(\widehat{{\varvec{\theta }}}\) is evaluated by the risk function \(R({\varvec{\theta }},\widehat{{\varvec{\theta }}})=\textrm{E}[\Vert \widehat{{\varvec{\theta }}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2]\), Stein (1973, 1981) showed that the unbiased estimator of the risk difference \({\Delta }_\phi =R({\varvec{\theta }},\widehat{{\varvec{\theta }}}_\phi )-R({\varvec{\theta }},{\varvec{X}})\) is

for \(W=\Vert {\varvec{X}}\Vert ^2\). Baranchik (1970) demonstrated that \(\widehat{{\varvec{\theta }}}_\phi \) is minimax if (a) \(\phi (w)\) is nondecreasing and (b) \(0\le \phi (w)\le 2(p-2)\). A class of estimators satisfying these conditions is called Baranchik’s class. It can be easily seen that \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) belongs to Baranchik’s class of minimaxity for \(0<c\le 2(p-2)\). Concerning \(\widehat{{\varvec{\theta }}}^\textrm{TS}\), however, it does not belong to Baranchik’s class, because monotonicity of \(\phi _\textrm{TS}(w)\) is not satisfied as shown above. Thus, we need to investigate the unbiased estimator of the risk directly.

Theorem 2.1

Under the normality with \({\varvec{X}}\sim \mathcal {N}_p({\varvec{\theta }}, {\sigma }^2{\varvec{I}})\), the two-step shrinkage estimator \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is minimax relative to the quadratic loss \(\Vert \widehat{{\varvec{\theta }}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2\) if \(0<c\le (3/2)(p-2)\) for \(p\ge 3\).

Proof

Without loss of generality, we set \({\sigma }^2=1\). We shall show that \(\widehat{{\Delta }}_{\phi _\textrm{TS}}(w)\le 0\) for any nonnegative w, where

Using the derivative given in (2.7), we can write \(\widehat{{\Delta }}_{\phi _\textrm{TS}}(w)\) as

which is further rewritten as

where

Then, g(w) is rewritten as

Note that \(1-{2(p-2)/ c}<0\), \(c-2p<0\) and \(c-6p<0\) for \(0<c<(3/2)(p-2)\). Thus, it is sufficient to show that the coefficient of \(w^2\) is not positive, namely, \(h(c)\le 0\) for

Let \(d=(3/2)(p-2)-c\). Substituting \(c=(3/2)(p-2)-d\) into h(c), we have

which is not positive for \(p\ge 3\) and \(d\ge 0\). Thus, one can see that \(\widehat{{\Delta }}_{\phi _\textrm{TS}}(w)\le 0\) for any \(w\ge 0\) when \(0<c\le (3/2)(p-2)\), and the minimaxity of \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is established. \(\square \)

We investigate the performance of the suggested shrinkage estimators and compare their risks with some existing shrinkage estimators in the model (2.1). The estimators we compare are the James–Stein estimator \(\widehat{{\varvec{\theta }}}^\textrm{JS}\) in (1.1), the positive-part Stein estimator \(\widehat{{\varvec{\theta }}}^\textrm{PS}\) in (1.2), the generalized Bayes estimator \(\widehat{{\varvec{\theta }}}^\textrm{GB}\) against the shrinkage prior \(\Vert {\varvec{\theta }}\Vert ^{2-p}\), given in (1.3), the one-step and two-step shrinkage estimators \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) with \(c=p-2\), which are denoted by JS, PS, GB, OS and TS, respectively. Since these estimators are orthogonally equivariant, their risks depend on \({\varvec{\theta }}\) only through \(\Vert {\varvec{\theta }}\Vert ^2\). Thus, the simulation experiments are conducted for \({\varvec{\theta }}=(k/3){\varvec{I}}\) for \(k=0, \ldots , 9\) and \({\sigma }=1\).

In the normal distribution \(\mathcal {N}({\varvec{\theta }}, {\varvec{I}})\), the exact values of the risk functions can be obtained by using numerical integration based on the noncentral chi-squared distribution. These values are illustrated in Fig. 2 and given in Table 2 for \(p=6\) and \({\lambda }=\Vert {\varvec{\theta }}\Vert ^2\).

We also investigate the performance when the underlying distributions of \({\varepsilon }_i\) are Student t-distribution with 5 degrees of freedom, logistic distribution and double exponential distribution, where these probability density functions are, respectively, given by

In these cases, the average losses based on 10,000 replications are calculated as approximation of risk functions and reported in Table 3.

From Fig. 2 and Tables 2 and 3, it is seen that the minimaxity of the five estimators is robust for the t-, logistic and double exponential distributions. It is also revealed that OS is close to, but worse than JS, and that TS is close to, but better than PS in most cases. As justified theoretically by Kubokawa (1991, 1994), GB is better than JS under the normality. However, it is confirmed numerically that this property does not hold for \(t_5\)- and logistic distributions with smaller \(\Vert {\varvec{\theta }}\Vert ^2\). Comparing TS and PS, we can see that TS is better than PS except for the cases of larger \(\Vert {\varvec{\theta }}\Vert ^2\) in the normal distribution. TS is the best of five in most cases. In particular, TS provides the smallest risk of five at \(\Vert {\varvec{\theta }}\Vert ^2=0\). TS is differentiable and the performance is recommendable in this setup of simulation. However, its admissibility is not guaranteed.

3 Extension to the case of unknown scale

We consider to extend the result of the previous section to the case of an unknown scale parameter. To this end, let us consider the random sampling model

where \({\varepsilon }_{ij}\)’s are independently and identically distributed as \(\textrm{E}[{\varepsilon }_{ij}]=0\) and \(\textrm{Var}({\varepsilon }_{ij})<\infty \), and \(\tau \) is an unknown scale parameter. Let \(X_i=m^{-1}\sum _{j=1}^mY_{ij}\), \(S=\sum _{i=1}^p\sum _{j=1}^m(Y_{ij}-X_i)^2/m\), \({\sigma }^2=\tau ^2/m\) and \(n=p(m-1)\). Then, \(X_1, \ldots , X_p\) are independent random variables with \(\textrm{E}[X_i]={\theta }_i\) and \(\textrm{Var}(X_i)={\sigma }^2\), \(i=1, \ldots , p\), and \(\textrm{E}[S]=p(m-1)\tau ^2/m=n{\sigma }^2\).

Consider the estimation of \({\varvec{\theta }}=({\theta }_1, \ldots , {\theta }_p)^\top \) based on \({\varvec{X}}=(X_1, \ldots , X_p)^\top \) and S. Let \(W=\Vert {\varvec{X}}\Vert ^2/S\). Replacing \(\Vert {\varvec{X}}\Vert ^2/{\sigma }^2\) in (2.3) and (2.5) with W, we have

Estimator \(\widehat{{\varvec{\theta }}}\) is evaluated in terms of the risk function given by \(R({\varvec{\theta }},\widehat{{\varvec{\theta }}})=\textrm{E}[\Vert \widehat{{\varvec{\theta }}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2]\). Under the normality, we can show minimaxity of the suggested estimators. When we consider estimators of the general form

for function \(\phi (w)\), Efron and Morris (1976a) showed that the unbiased estimator of the risk difference \({\Delta }=R({\varvec{\theta }},\widehat{{\varvec{\theta }}}_\phi )-R({\varvec{\theta }},{\varvec{X}})\) is

for \(a=(p-2)/(n+2)\). Baranchik’s class of minimax estimators is characterized as (a) \(\phi (w)\) is nondecreasing and (b) \(0\le \phi (w)\le a=(p-2)/(n+2)\). The one-step shrinkage estimator \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) belongs to Baranchik’s class for \(0<c\le 2a\). Concerning \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) however, it does not belong to Baranchik’s class similarly to the case of Sect. 2.

Theorem 3.1

Under the normality with \({\varvec{X}}\sim \mathcal {N}_p({\varvec{\theta }}, {\sigma }^2{\varvec{I}})\) and \(S/{\sigma }^2\sim \chi _n^2\), the two-step shrinkage estimator \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is minimax relative to the quadratic loss \(\Vert \widehat{{\varvec{\theta }}}-{\varvec{\theta }}\Vert ^2/{\sigma }^2\) if \(0<c\le (4/3)(p-2)/(n+2)\) for \(p\ge 3\).

Proof

It is noted that \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is written as \(\widehat{{\varvec{\theta }}}^\textrm{TS}=\{1-\phi _\textrm{TS}(W)/W\}{\varvec{X}}\) for \(\phi _\textrm{TS}(w)\) given in (2.6). From (3.4), the unbiased estimator of the risk difference \({\Delta }=R({\varvec{\theta }},\widehat{{\varvec{\theta }}}^\textrm{TS})-R({\varvec{\theta }},{\varvec{X}})\) is

for \(a=(p-2)/(n+2)\). We shall show that \(\widehat{{\Delta }}_{\phi _\textrm{TS}}(w)\le 0\) for any nonnegative w. The proof is harder than that of Theorem 2.1, because of the term \(\{1+\phi _\textrm{TS}(W)\}\phi _\textrm{TS}'(W)\).

From the arguments around (2.8), for \(c\le (4/3)a\), it is observed that \(\phi _\textrm{TS}(w_0) \le 1.13 c \le 1.13\times {4\over 3}a=1.507 a < 2 a\), which implies that \(\phi _\textrm{TS}(w)\le \phi _\textrm{TS}(w_0) < 2a\). Then,

which is used to rewrite \(\widehat{{\Delta }}(w)\) as

where

Let \(A=\{1+\phi _\textrm{TS}(w_0)\}/(n+2)\) for simplicity. Using the same arguments as in the proof of Theorem 2.1, we can see that

which is rewritten as

Thus, we have

where

Then, g(w) is rewritten as

Note that \(1-{2a/ c}<0\), \(c-2a-4A<0\) and \(c-6a-12A<0\) for \(0<c<(4/3)a\). Thus, it is sufficient to show that the coefficient of \(w^2\) is not positive, namely, \(h(c)\le 0\) for

Let \(d=(4/3)a-c\). Substituting \(c=(4/3)a-d\) into h(c), we have

We can verify that \((2/3)a^2 + 4aA - 2A^2\ge 0\) for \(p\ge 3\) and \(d\ge 0\). In fact, it is observed that

and from (2.8),

From this inequality, it follows that

Thus, one can see that \(\widetilde{{\Delta }}(w)\le 0\) or \(\widehat{{\Delta }}_{\phi _\textrm{TS}}(w)\le 0\) for any \(w\ge 0\) when \(0<c\le (4/3)(p-2)/(n+2)\), and the minimaxity of \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is established. \(\square \)

We investigate the performance of the suggested shrinkage estimators in the random sampling model (3.1). As a distribution of \({\varepsilon }_{ij}\), we handle standard normal distribution, t-distribution with 5 degrees of freedom, logistic distribution and double exponential distribution. Although \({\varvec{X}}\) and S are independent under the normality, they are not independent for the other distributions.

Let \(W=\Vert {\varvec{X}}\Vert ^2/S\) and \(c=(p-2)/(n+2)\). The estimators we compare are the James–Stein estimator \(\widehat{{\varvec{\theta }}}^\textrm{JS}=(1-c/W){\varvec{X}}\), the positive-part Stein estimator \(\widehat{{\varvec{\theta }}}^\textrm{PS}=\max (0, 1-c/W){\varvec{X}}\), the one-step and two-step shrinkage estimators \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) with \(c=(p-2)/(n+2)\), which are denoted by JS, PS, OS and TS, respectively. Based on simulation experiments with 50,000 replications for \({\varvec{\theta }}=(k/3){\varvec{I}}\) for \(k=0, \ldots , 9\) and \(\tau =1\), their average losses are calculated as approximation of risk functions and reported in Table 4.

Overall, Table 4 shows that the performances of JS, PS, OS and TS are similar to the case of known scale in Tables 2 and 3. Comparing OS and TS, we can see that TS is better for smaller \(\Vert {\varvec{\theta }}\Vert ^2\), but OS is slightly better for larger \(\Vert {\varvec{\theta }}\Vert ^2\). Comparing the values at \(\Vert {\varvec{\theta }}\Vert ^2=0\) in Tables 2, 3 and 4, we also see that the cases of known scale give smaller risks than the cases of unknown scale in the normal distribution, but the cases of unknown scale give smaller risks in the non-normal distributions.

4 Estimation of means of discrete distributions

We here consider the simultaneous estimation of means of discrete distributions. Let \(X_1, \ldots , X_p\) be independent nonnegative random variables with \(\textrm{E}[X_i]={\theta }_i\) and finite variances. In the estimation of \({\varvec{\theta }}=({\theta }_1, \ldots , {\theta }_p)^\top \) based on \({\varvec{X}}=(X_1, \ldots , X_p)^\top \), we consider the minimization of

for positive constant c. The first term of \(L({\varvec{\theta }})\) is a special case of the Bregman loss treated in Banerjee et al. (2005), and is often referred to as a generalized Kullback–Leibler loss. The partial derivative of \(L({\varvec{\theta }})\) with respect to \({\theta }_i\) is

and \(\partial L({\varvec{\theta }})/ \partial {\theta }_i=0\) gives the equation

Replacing \(\sum _{j=1}^p{\theta }_j\) with \(Z=\sum _{j=1}^pX_j\), we have the one-step shrinkage estimator

Similarly to (2.4), we have

Replacing \(\sum _{j=1}^p{\theta }_j\) with \(Z=\sum _{j=1}^pX_j\), we have the two-step shrinkage estimator

These estimators belong to the class of estimators

for positive function \(\phi (\cdot )\). It is noted that the one-step shrinkage estimator \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) with \(c=p-1\) is identical to the shrinkage estimator suggested by Clevenson and Zidek (1975), denoted by \(\widehat{{\varvec{\theta }}}^\textrm{CZ}\).

We demonstrate that \(\hat{{\theta }}^\textrm{OS}\) and \(\hat{{\theta }}^\textrm{TS}\) improve on \({\varvec{X}}\) relative to the loss \(\sum _{i=1}^p(\hat{{\theta }}_i-{\theta })^2/{\theta }_i\) when \(X_i\) has the Poisson distribution with mean \({\theta }_i\), \(Po({\theta }_i)\) for \(i=1, \ldots , p\). Clevenson and Zidek (1975) showed that \(\widehat{{\varvec{\theta }}}^\textrm{CZ}\) improves on \({\varvec{X}}\) and Tsui (1984) proved that this improvement still holds for negative binomial distributions. Using the same arguments as in Clevenson and Zidek (1975), we can show that the unbiased estimator of the risk difference \({\Delta }=R({\varvec{\theta }}, \widehat{{\varvec{\theta }}}_\phi )-R({\varvec{\theta }},{\varvec{X}})\) for \(X_i\sim Po({\theta }_i)\), \(i=1, \ldots , p\), is

Then, it can be seen that \(\widehat{{\Delta }}(z)\le 0\) if (a) \(\phi (z)\) is nondecreasing and (b) \(0\le \phi (z)\le 2c\) for \(0<c\le p-1\) and \(0\le \phi (z)\le 2(p-1)\) for \(c>p-1\). In fact, since \(\phi (z)\le \phi (z+1)\) from (a), we have

so that \(\widehat{{\Delta }}(z)\le 0\) if

This inequality is satisfied by condition (b).

Concerning the one-step shrinkage estimator, it corresponds to \(\phi (z)=c\), so that it is verified that \(\widehat{{\varvec{\theta }}}^\textrm{OS}\) improves on \({\varvec{X}}\) if \(0< c\le 2(p-1)\) for \(p\ge 2\). For the two-step shrinkage estimator, however, it is harder to show the improvement, because the corresponding function \(\phi (z)\) is not nondecreasing. To further investigate, we restrict ourselves to \(c=p-1\) for simplicity.

Theorem 4.1

When \(X_i \sim Po({\theta }_i)\) for \(i=1, \ldots , p\), the two-step shrinkage estimator \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) with \(c=p-1\) improves on \({\varvec{X}}\) relative to the quadratic loss \(\sum _{i=1}^p(\hat{{\theta }}_i-{\theta })^2/{\theta }_i\) for \(p\ge 2\).

Proof

Let \(c=p-1\) or \(p=c+1\). It is noted that \(c\ge 1\) for \(p\ge 2\). From (4.3),

where

Since the derivative of \(\phi (z)\) is

\(\phi (z)\) is increasing for \(z\le c\) and decreasing for \(z>c\). This means that

In the case of \(z\le c\), since \(\phi (z)\le \phi (z+1)\), we have

In the case of \(z>c\), note that \(\phi (z)> \phi (z+1)\). \(\widehat{{\Delta }}(z)\) is rewritten as

It is here observed that

so that \(\widehat{{\Delta }}(z)\) is evaluated as

Since \((z^2+z-c^2)/(z^2+cz+c^2)\) is increasing in z for \(c\ge 1\), we have \((z^2+z-c^2)/(z^2+cz+c^2)<1\), so that for \(z>c\ge 1\),

which is zero. Thus, \(\widehat{{\Delta }}(z) \le 0\), and the improvement of \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) over \({\varvec{X}}\) is established. \(\square \)

We compare the risk performances of the three estimators \({\varvec{X}}\), \(\widehat{{\varvec{\theta }}}^\textrm{CZ}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) with \(c=p-1\) under the three distributions of \(X_i\): the Poisson distribution \(Po({\theta }_i)\), geometric distribution \(Geo(1/({\theta }_i+1))\) and negative binomial distribution \(Nbin(5, 5/({\theta }_i+5)\). The estimators \(\widehat{{\varvec{\theta }}}^\textrm{CZ}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) are denoted by CZ and TS. The estimator \(\widehat{{\varvec{\theta }}}=(\hat{{\theta }}_1, \ldots , \hat{{\theta }}_p)^\top \) of \({\varvec{\theta }}=({\theta }_1, \ldots , {\theta }_p)^\top \) is evaluated by the risk function relative to the loss \(\sum _{i=1}^p(\hat{{\theta }}_i-{\theta }_i)^2/{\theta }_i\). The simulation experiment has been conducted with \(p=6\) and \({\theta }_i=k/2\) for \(i=1, \ldots , p\) and \(k=1, \ldots , 10\), and the average losses based on simulation with 100,000 replications are reported Table 5. From Table 5, it is seen that the improvements of \(\widehat{{\varvec{\theta }}}^\textrm{CZ}\) and \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) over \({\varvec{X}}\) are significant. In the Poisson distribution, \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is slightly worse than \(\widehat{{\varvec{\theta }}}^\textrm{CZ}\), while \(\widehat{{\varvec{\theta }}}^\textrm{TS}\) is slightly better in the geometric and negative binomial distributions.

5 Remarks on extensions

We would like to conclude the paper with remarks on extensions of the results in the previous sections. An interesting extension is the estimation of an \(m\times p\) matrix mean \({\varvec{{\Theta }}}\) of a random matrix \({\varvec{X}}\) with \(\textrm{E}[{\varvec{X}}]={\varvec{{\Theta }}}\) and \(\textrm{E}[({\varvec{X}}-{\varvec{{\Theta }}})({\varvec{X}}-{\varvec{{\Theta }}})^\top ]={\varvec{I}}_m\). As an extension of the result of Sect. 2, we consider minimizing

for known c. The optimal solution satisfies the equation

When \({\varvec{X}}{\varvec{X}}^\top \) is of full rank and \(p\ge m\), replacing \({\varvec{{\Theta }}}{\varvec{{\Theta }}}^\top \) with \({\varvec{X}}{\varvec{X}}^\top \) gives the one-step shrinkage estimator

Similarly to (2.4), we have the two-step shrinkage estimator

It is noted that \(\widehat{{\varvec{{\Theta }}}}^\textrm{OS}\) is close to the Efron and Morris (1976b) estimator \(\widehat{{\varvec{{\Theta }}}}^\textrm{EM}={\varvec{X}}-(p-m-1)({\varvec{X}}{\varvec{X}}^\top )^{-1}{\varvec{X}}\) and it can be verified to be minimax for a suitable condition on c. Although a numerical investigation shows that \(\widehat{{\varvec{{\Theta }}}}^\textrm{TS}\) is better than \(\widehat{{\varvec{{\Theta }}}}^\textrm{EM}\) by simulation, we could not give an analytical proof for minimaxity of \(\widehat{{\varvec{{\Theta }}}}^\textrm{TS}\).

Another interesting extension is the linear regression model \({\varvec{y}}={\varvec{X}}{\varvec{\beta }}+{\sigma }{\varvec{\epsilon }}\), where \({\varvec{y}}\) is an \(n\times 1\) vector of observations, \({\varvec{X}}\) is an \(n\times p\) matrix of known variables for \(p<n\), \({\varvec{\beta }}=({\beta }_1, \ldots , {\beta }_p)^\top \) is a \(p\times 1\) vector of unknown coefficients, \({\sigma }\) is an unknown scale parameter and \({\varvec{\epsilon }}=({\varepsilon }_1, \ldots , {\varepsilon }_n)^\top \) is an \(n\times 1\) vector of random errors with \(\textrm{E}[{\varepsilon }_i]=0\) and \(\textrm{E}[{\varepsilon }_i^2]<\infty \). When \({\varvec{X}}\) is of full rank, \({\varvec{\beta }}\) is estimated by the least squares estimator \(\widehat{{\varvec{\beta }}}=({\varvec{X}}^\top {\varvec{X}})^{-1}{\varvec{X}}^\top {\varvec{y}}\).

In the case of multicollinearity, \({\varvec{X}}\) is not of full rank and we can not use the least squares estimator. A feasible solution is the estimator \(\widehat{{\varvec{\beta }}}^\textrm{MP}=({\varvec{X}}^\top {\varvec{X}})^{+}{\varvec{X}}^\top {\varvec{y}}\), where \({\varvec{A}}^+\) is the Moore-Penrose inverse of matrix \({\varvec{A}}\). Alternative approaches to address the problem of multicollinearity are the regularized least squares methods. The minimization of

yields the ridge regression estimator \(\widehat{{\varvec{\beta }}}^\textrm{RR}=({\varvec{X}}^\top {\varvec{X}}+{\lambda }{\sigma }^2{\varvec{I}})^{-1}{\varvec{X}}^\top {\varvec{y}}\), and the minimization of

yields the lasso regression estimator. As a regularized method with a logarithmic penalty, we consider minimizing

for positive constant c. The optimal solution satisfies the equation

Using the same arguments as in Sect. 2 based on the above equation and replacing \(\Vert {\varvec{\beta }}\Vert ^2\) with \(\Vert \widetilde{{\varvec{\beta }}}\Vert ^2\) for some stable estimator \(\widetilde{{\varvec{\beta }}}\) like \(\widehat{{\varvec{\beta }}}^\textrm{MP}\) or \(\widehat{{\varvec{\beta }}}^\textrm{RR}\), one gets the one-step and two-step estimators, given by

where \(\hat{{\sigma }}^2={\varvec{y}}^\top ({\varvec{I}}-{\varvec{X}}({\varvec{X}}^\top {\varvec{X}})^-{\varvec{X}}^\top ){\varvec{y}}/(n-r)\) for \(r=\textrm{rank}\,({\varvec{X}})\). The minimaxity of these estimators is an interesting query to address in a future.

References

Banerjee, A., Merugu, S., Dhillon, I. S., & Ghosh, J. (2005). Clustering with Bregman divergences. Journal of Machine Learning Research, 6, 1705–1749.

Baranchik, A. J. (1970). A family of minimax estimators of the mean of a multivariate normal distribution. Annals of Mathematical Statistics, 41, 642–645.

Berger, J. O. (1985). Statistical decision theory and Bayesian analysis. Springer Verlag.

Brandwein, A. C., & Strawderman, W. E. (1990). Stein estimation: The spherically symmetric case. Statistical Science, 5, 356–369.

Clevenson, M. L., & Zidek, J. V. (1975). Simultaneous estimation of the means of independent Poisson laws. Journal of the American Statistical Association, 70, 698–705.

Doornik, J. A. (2007). Object-oriented matrix programming using Ox (3rd ed.). Timberlake Consultants Press and Oxford.

Efron, B., & Morris, C. (1972a). Empirical Bayes on vector observations: An extension of Stein’s method. Biometrika, 59, 335–347.

Efron, B., & Morris, C. (1972b). Limiting the risk of Bayes and empirical Bayes estimators—Part II: The empirical Bayes case. Journal of the American Statistical Association, 67, 130–139.

Efron, B., & Morris, C. (1976a). Families of minimax estimators of the mean of a multivariate normal distribution. Annals of Statistics, 4, 11–21.

Efron, B., & Morris, C. (1976b). Multivariate empirical Bayes and estimation of covariance matrices. Annals of Statistics, 4, 22–32.

Fourdrinier, D., Strawderman, W. E., & Wells, M. T. (2018). Shrinkage estimation. Springer Nature.

James, W., & Stein, C. (1961). Estimation with quadratic loss. In: Proceedings of the Fourth Berkeley Symposium on mathematical statistics and probability, 1, 361–379. University of California Press, Berkeley.

Kubokawa, T. (1991). An approach to improving the James-Stein estimator. Journal of Multivariate Analysis, 36, 121–126.

Kubokawa, T. (1994). A unified approach to improving equivariant estimators. Annals of Statistics, 22, 290–299.

Robert, C. P. (2007). The Bayesian choice: From decision-theoretic foundations to computational implementation (2nd ed.). Springer Verlag.

Stein, C. (1956). Inadmissibility of the usual estimator for the mean of a multivariate normal distribution. In: Proceedings of the Third Berkeley Symposium on mathematical statistics and probability, 1, 197–206. University of California Press, Berkeley.

Stein, C. (1973). Estimation of the mean of a multivariate normal distribution. In: Proceedings of the Prague Symposium on asymptotic statistics, II, 345–381. Charles University, Prague.

Stein, C. (1981). Estimation of the mean of a multivariate normal distribution. Annals of Statistics, 9, 1135–1151.

Strawderman, W. E. (1971). Proper Bayes minimax estimators of the multivariate normal mean. Annals of Mathematical Statistics, 42, 385–388.

Tsui, K.-W. (1984). Robustness of Clevenson-Zidek-type estimators. Journal of the American Statistical Association, 79, 152–157.

Acknowledgements

We would like to thank the Editor, the Associate Editor and the reviewer for many valuable comments and helpful suggestions which led to an improved version of this paper. This research was supported in part by Grant-in-Aid for Scientific Research (18K11188, 22K11928) from Japan Society for the Promotion of Science.

Funding

Open access funding provided by The University of Tokyo.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kubokawa, T. Shrinkage estimation with logarithmic penalties. Jpn J Stat Data Sci 7, 341–360 (2024). https://doi.org/10.1007/s42081-023-00225-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42081-023-00225-y