Abstract

Feature selection (FS) is an important preprocessing step in machine learning and data mining. In this paper, a new feature subset evaluation method is proposed that constructs a sample graph (SG) in different k-features and applies community modularity to select features that are highly informative as a group, even though these features may not be relevant individually. Furthermore, relevant independency rather than irrelevant redundancy among the selected features is effectively measured with the community modularity Q value of the sample graph in the k-features. An efficient FS method called k-features sample graph feature selection (k-FSGFS) is presented. A key property of this approach is that the discriminative cues of a feature subset with the maximum relevant independency among features can be accurately determined. This community modularity-based method is then verified with the theory of K-means clustering. Experimental results show that the proposed approach is more effective than other state-of-the-art methods.

Introduction

Feature selection (FS) is widely investigated and utilized in machine learning and data mining research. In this context, a feature, also called attribute or variable, represents a property of a process or system. The goal of FS is to select the feature subsets of informative attributes or variables to build models that describe data and to eliminate redundant or irrelevant noise features to improve predictive accuracy1. FS not only maintains the original intrinsic properties of the selected features but also facilitates data visualization and understanding2. FS has been extensively applied to many applications, such as bio-informatics3, image retrieval4, and text classification5, because of its capabilities.

Traditional methods in FS can be broadly categorized into two approaches6, namely, filter and wrapper approaches. Filter algorithms7,8,9,10 utilize a simple weight score criterion to estimate the goodness of features. As a result, filter methods are classifier-independent and effective in terms of computational cost. However, filter methods disregard the correlations between features and provide feature subsets that may contain redundant information, which reduces classification accuracy. The correlation of concern in this study is a measure of the relationship between two mathematical variables (called features) or measured data values. In wrapper approaches11,12,13,14, feature subset selection depends on a classifier, which results in superior classification accuracy but requires high computational cost for repeated training of classifiers. Filter methods are eliciting an increasing amount of attention because of their efficiency and simplicity. This study focuses on filter methods only.

FS involves two major approaches: individual evaluation (univariate) and subset evaluation (multivariate). The former, which is also known as variable ranking, assesses each feature individually by using a scoring function for relevance. Subset evaluation produces candidate feature subsets through a certain search strategy. Each candidate subset is evaluated by a certain evaluation measure and compared with the previous best subset based on this measure. Individual evaluation only selects features that are relevant individually. However, a variable that is completely useless by itself can result in a significant performance improvement when combined with others15. Therefore, individual evaluation methods have been criticized for disregarding features that have strong discriminative power as a group but weak power individually16. Furthermore, individual evaluation cannot eliminate redundant features because redundant features are likely to have similar rankings. Subset evaluation can handle feature redundancy together with feature relevance17. The combination of several best individual features selected by individual evaluation methods does not generally lead to satisfactory classification results because the redundancy among the selected features is not eliminated18. Thus, subset evaluation is considered the better of the two approaches. In general, finding an optimal feature subset is NP-hard19. To avoid a combinatorial search for an optimal subset, heuristic variable selection methods are employed. The most popular of these are the forward20, backward21, and floating sequential schemes22, which adopt a heuristic search procedure to provide a sub-optimal solution.

In subset evaluation, assessing the relevance of a feature subset, which covers both the relevance of the subset and the redundancy within it, is important but difficult in practice. Relevance evaluation methods based on mutual information (MI) have become popular recently23,24,25,26,27,28. However, these algorithms only approximately estimate the discriminative power of a feature subset because intrinsic information in the raw data can be lost when the probability distribution of a feature vector is estimated by discretizing the feature variables27,28.

A good feature subset should contain features that are highly correlated with the class but uncorrelated with one another29. In other words, in a good feature subset, the samples in different classes can be separated well; that is, the within-class distance in samples is small and between-classes distance is large. Therefore, if the samples are shown in a graph (also referred to as a complex network), the graph should exhibit obvious community structures30 and a high community modularity Q value31,32. Thus, the community modularity Q value can be utilized to evaluate the relevance of a feature subset with regard to the class. In this paper, a novel method is proposed to address the feature subset relevance evaluation problem by introducing a new evaluation criterion based on community modularity. The method accurately assesses the relevance independency of a feature subset by constructing a sample graph in different k-features. To the best of our knowledge, this work is the first to employ community modularity in feature subset relevance evaluation. The proposed method indiscriminately selects relevant features through the forward search strategy. This method not only selects relevant features as a group and eliminates redundant features but also attempts to retain intrinsic interdependent feature groups. The effectiveness of the method is validated through experiments on many publicly available datasets. Experimental results confirm that the proposed method exhibits improved FS and classification accuracy. The discriminative capacity of the selected feature subset is significantly superior to that of other methods.

Related Work

FS has elicited increasing attention in the last few years. In the early stage, individual evaluation methods were more popular, such as those in7,8,9,10, which measure the discriminate ability of each feature according to a related evaluation criterion. Based on class information, these methods belong to the supervised FS algorithm. An unsupervised feature ranking algorithm has also been proposed; this algorithm considers not only the variance of each feature but also the locality preserving ability, such as the Laplacian score33.

A known limitation of individual evaluation methods is that the feature subset selected by these methods may contain redundancy15,34, which degrades the subsequent learning process. Thus, several subset evaluation-based filter methods, such as those in17,29,35,36,37, have been proposed to reduce redundancy during FS.

MI is gaining popularity because of its capability to provide an appropriate means of measuring the mutual dependence of two variables; it has been widely utilized to develop information theoretic-based FS criteria, such as MIFS23,38, CMIM39, CMIF24, MIFS-U25, mrmr27, NMIFS28, and FCBF40. MI is calculated with a Parzen window41, which is less computationally demanding and provides better estimation. The Parzen window method is a non-parametric method to estimate densities. It involves placing a kernel function on top of each sample and evaluating density as the sum of the kernels. The author in42 pointed out that common heuristics for information-based FS (including Markov Blanket algorithms43 as a special case) approximately and iteratively maximize the conditional likelihood. The author presented a unifying framework for information theoretic-based FS, bringing almost two decades of research on heuristic filter criteria under a single theoretical interpretation. Analysis of the redundancy among selected features is performed by computing the relevant redundancy between the features and the target. However, MI-based FS methods have been criticized for their limitations. First, loss of intrinsic information in raw data could occur because the probability distribution of the feature vector is estimated by the discretization of the feature variable. The second limitation is that these methods only select relevant features as an individual and disregard these informative features as a group44. Several researchers have also found that combining optimal features as an individual does not provide excellent classification performance45.

Graph-based methods, such as the Laplacian score33 and improved Laplacian score-based FS methods46,47,48,49, have been widely applied to feature learning because these approaches can evaluate the similarity among data. Generally, the graph-based method includes two phases. First, a graph is constructed in which each node corresponds to each feature, and each edge has a weight based on a criterion between features. Second, several clustering methods are implemented to select a highly coherent set of features50. Optimization-based FS algorithms are preferred by many researchers. R. Tibshirani51 proposed a new method called “lasso” for estimation in linear models. Based on graphical lasso (GL), a new multilink, single-task approach that combines GL with neural network (NN) was proposed to forecast traffic flow52.

Statistical methods have been widely applied to FS. Two popular feature ranking measures are t-test53 and F-statistics54. Well known statistic-based feature selection algorithms include χ2-statistic55, odds ratio56, bi-normal separation57, improved Gini index58, measure using Poisson distribution59, and ambiguity measure60. Most of these methods calculate a score based on the probability or frequency of each feature in bag-of-words to rank features according to a feature’s score; the top features are selected. Yan Wang61 introduced the concept of feature forest and proposed feature forest-based FS algorithm.

Results

Experiments on artificial datasets, including binary class and multi-class datasets, were conducted to test the proposed approach. The proposed approach was also compared with several popular FS algorithms, including MIFS_U, mrmr, CMIM, Fisher, Laplacian score33, RELIEF62, Simba-sig63, and Greedy Feature Flip (G-Flip-sig)63. Off-the-shelf codes42 were used to implement MIFS_U, mrmr, and CMIM methods.

To evaluate the effectiveness of the proposed method, the nearest neighbor classifier (1NN) with Euclidean distance and a support vector machine (SVM)64 using the radial basis function kernel and the penalty parameter c = 100 were employed to test the performance of the FS algorithms. We utilized the LIBSVM package65 for SVM classification. All experiments were conducted on a PC with an Intel(R) Core(TM) i3-2310 CPU @ 2.10 GHz and 2 GB of main memory.

Datasets and preprocessing

To verify the effectiveness of the proposed method, six continuous datasets from the LIBSVM datasets65, two cancer microarray datasets, and two discrete datasets from UCI were utilized in the simulation experiments. All the features in the datasets, except discrete features, were uniformly scaled to zero mean and unit variance. The details of the 10 datasets are shown in Table 1.

Feature selection and classification results

Classification performance was utilized to validate the FS method, and tenfold cross validation was employed to avoid the over-fitting problem. To reduce unintentional effects, all the experimental results are the average of 10 independent runs. In comparing the different methods, the feature subset was produced by picking the top s selected features to assess each method in terms of classification accuracy (s = 1, ..., P). We discretized continuous features to nine discrete levels as performed in66,67 by converting the feature values between μ − σ/2 and μ + σ/2 to 0, the four intervals of size σ to the right of μ + σ/2 to discrete levels from 1 to 4, and the four intervals of size σ to the left of μ − σ/2 to discrete levels from −1 to −4. Extremely large positive or negative feature values were truncated and discretized to ±4 accordingly.
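The nine-level discretization described above can be sketched in a few lines. This is a minimal illustration, not the code used in the experiments: the function name `discretize_nine_levels` is hypothetical, and the use of the population standard deviation when σ is not supplied is an assumption.

```python
import math
import statistics


def discretize_nine_levels(values, mu=None, sigma=None):
    """Map continuous values to integer levels in {-4, ..., 4}.

    Values within sigma/2 of the mean map to 0; each further interval of
    width sigma adds one level, and anything beyond is truncated to +/-4.
    """
    if mu is None:
        mu = statistics.fmean(values)
    if sigma is None:
        sigma = statistics.pstdev(values)  # assumption: population std. dev.
    if sigma == 0:
        return [0 for _ in values]  # assumption: a constant feature gets level 0
    levels = []
    for v in values:
        z = (v - mu) / sigma
        # ceil(|z| - 0.5) counts the sigma-wide bins beyond the central one.
        magnitude = min(4, max(0, math.ceil(abs(z) - 0.5)))
        levels.append(magnitude if z > 0 else -magnitude)
    return levels


# Example with mu = 0 and sigma = 1 fixed so the bin edges are easy to read.
example = [0.0, 0.5, 0.6, 1.5, 1.6, 10.0, -0.6, -10.0]
print(discretize_nine_levels(example, mu=0.0, sigma=1.0))
```

With μ = 0 and σ = 1, the values 0.6 and 1.5 both fall in the first interval to the right of μ + σ/2, while 10.0 is truncated to level 4.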

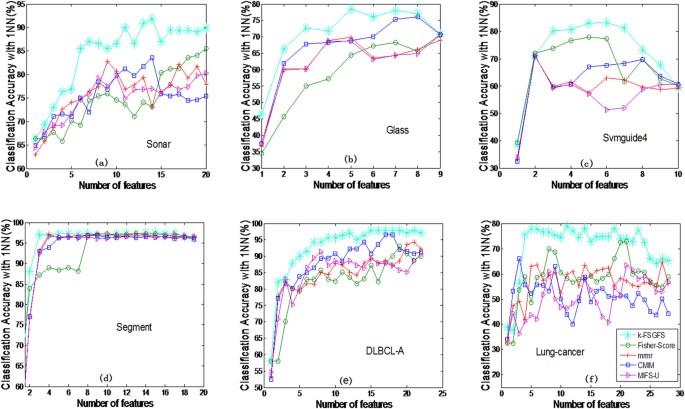

Table 2 indicates the average classification accuracy of both 1NN and SVM classifiers at different s . A bold value indicates the best among the FS methods under the same classifier and the same number of selected features. To avoid the influence of data scarcity, the average value of accuracy at different s for all datasets in the same selector is shown in the bottom line of Table 2 (Avg.). The results in Table 2 indicate that the proposed method (k-FSGFS) exhibits the best average performance compared with other methods in both classifiers. The Avg. values are 83.65% and 83.97% in 1NN and SVM classifiers, respectively. These values are higher than those of the other methods. CMIM is superior to mrmr and MIFS_U. Figures 1 and 2 show the performance of SVM and 1NN at different s of selected features for six datasets, namely, Sonar, Glass, Svmguide4, Segment, DLBCL_A, and Lung-cancer. The six datasets were selected because they cover a diverse range of characteristics, including continuous and discrete data, in terms of the number of features and number of examples.

For the different methods, classification accuracy with the 1NN classifier on the datasets (a) Sonar, (b) Glass, (c) Svmguide4, (d) Segment, (e) DLBCL-A, and (f) Lung-cancer.

For the different methods, classification accuracy with the SVM classifier on the datasets (a) Sonar, (b) Glass, (c) Svmguide4, (d) Segment, (e) DLBCL-A, and (f) Lung-cancer.

Figures 1 and 2 show that the proposed method (k-FSGFS) outperforms the other methods. In most cases, the average accuracy of the two classifiers is significantly higher than that of other selectors. High classification accuracy is commonly achieved with minimal selected features, which indicates that our evaluation criterion based on community modularity Q not only selects the most informative features but also provides the solution of relevant independency among selected features. The proposed method can evaluate the discriminatory power of a feature subset.

Additionally, the proposed approach was compared with other popular FS methods, including Laplacian score33, Relief62, Simba-sig63, and Greedy Feature Flip (G-Flip-sig)63. Relief62, Simba-sig63, and G-Flip-sig63 are margin-based FS or feature weighting methods, in which a large nearest neighbor hypothesis margin ensures a large sample margin. Thus, these algorithms find a feature weight vector to minimize the upper bound of the leave-one-out cross-validation error of a nearest-neighbor classifier in the induced feature space. For fairness, only the 1NN classifier was utilized to evaluate the performance of the compared FS algorithms in all the datasets. Figure 3 shows that the proposed method is also superior or comparable to other methods in most cases. Particularly, the proposed method can achieve significantly higher classification accuracy in the first several features than the other methods in most cases. To verify, the classification accuracy results with the 1NN classifier at different selected features s (s = 2, 3, 4) for different methods are illustrated in Table 3. The table clearly indicates that our method significantly improves the classification results with fewer selected features. Thus, our method achieves optimal performance with an acceptable number of features.

Classification accuracy with the 1NN classifier on the datasets (a) Sonar, (b) Glass, (c) Svmguide4, (d) DLBCL-A, (e) Lung-cancer, (f) Segment, (g) Breast-A, (h) Vehicle, (i) Wine, and (j) SPECTF.

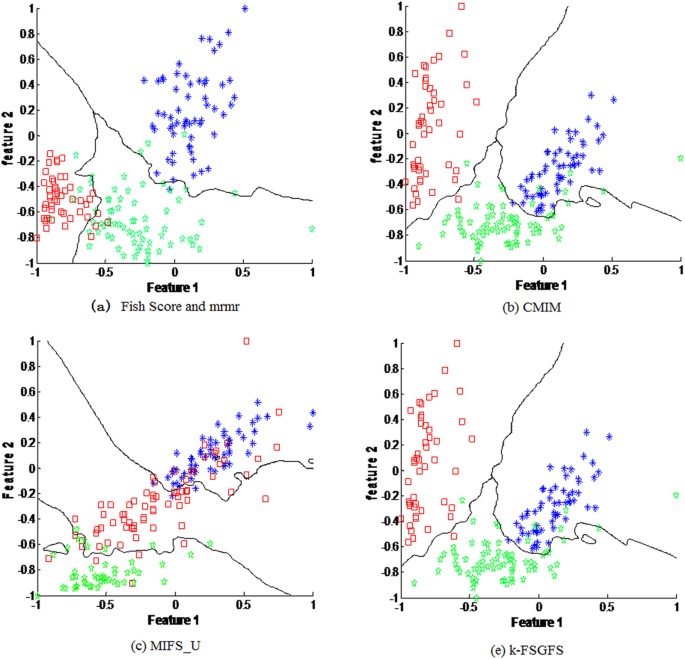

To further confirm the effectiveness of this feature evaluation criterion, the decision boundary of the 1NN classifier in 2D feature spaces from the Wine dataset was examined, as shown in Fig. 4(a–d). The indicated dimensions are the two best features selected by each method. The two features selected by k-FSGFS and by CMIM are relatively informative (Fig. 4(d) and (b)) and help in effectively separating the sample data. Both Fisher score and mrmr selected the same top two features, as indicated in Fig. 4(a), and separated the samples better than MIFS_U in Fig. 4(c). The proposed approach achieves high accuracy in classifying the samples in the space of the two most informative features, in agreement with the results for the Wine dataset in Table 2.

Three colors represent three classes. Decision boundary of the 1NN classifier for samples in the two most informative features selected by (a) Fisher score and mrmr, (b) CMIM, (c) MIFS_U, and (d) our method. Our method and CMIM yield the lowest classification error.

The capability of k-FSGFS to obtain the discriminatory attribute of a feature subset and the relevant independency among features is so effective that it can select these informative features with fewer redundancies. Thus, k-FSGFS performs better than other FS algorithms. For parameter K during the construction of k-FSG in our method, numerous experiments demonstrate that a value of K selected from 2 to 11 is effective for most datasets for either SVM or 1NN classifier. In this study, K was set to 2.

Statistical test

The classification experiments demonstrated that the proposed framework outperforms the other FS algorithms overall. However, the results also indicate that k-FSGFS does not perform better than several algorithms in a number of cases. Therefore, a paired-sample one-tailed t-test was used to assess the statistical significance of the difference in accuracy. In this test, the null hypothesis states that the average accuracy of k-FSGFS at different numbers of selected features is not greater than that of the other FS algorithm in terms of classification. The alternative hypothesis states that k-FSGFS is superior to the other FS algorithm in terms of classification. For example, if the performance of k-FSGFS is to be compared with that of the Fisher score method (k-FSGFS vs. Fisher score), the null and alternative hypotheses are defined respectively as $H_0: \mu_{k\text{-FSGFS}} \le \mu_{\text{Fisher}}$ and $H_1: \mu_{k\text{-FSGFS}} > \mu_{\text{Fisher}}$, where $\mu_{k\text{-FSGFS}}$ and $\mu_{\text{Fisher}}$ are the average classification accuracies of the k-FSGFS and Fisher score methods at different numbers of selected features, respectively. The significance level was set to 5%. Tables 4 and 5 indicate that, regardless of whether 1NN or SVM is used, the p-values obtained by the paired one-tailed t-test are substantially less than 0.05, which means that the proposed k-FSGFS significantly outperforms the other algorithms.
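As a rough illustration of the test described above, the sketch below computes the paired t statistic and its one-tailed p-value using only the Python standard library. The accuracy values are invented for illustration and are not taken from Tables 2, 4, or 5; the helper names `t_sf` and `paired_one_tailed_t` are hypothetical, and the upper-tail probability is obtained by simple numerical integration of the Student t density rather than by a statistics package.

```python
import math
import statistics


def t_sf(t, df, steps=20000):
    """Upper-tail probability P(T > t) of Student's t, via Simpson's rule."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(x):
        return coef * (1 + x * x / df) ** (-(df + 1) / 2)

    b = abs(t)
    h = b / steps  # steps is even, as Simpson's rule requires
    total = pdf(0.0) + pdf(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    area = total * h / 3  # P(0 < T < |t|)
    return 0.5 - area if t >= 0 else 0.5 + area


def paired_one_tailed_t(ours, other):
    """Test H0: mean(ours) <= mean(other) against H1: mean(ours) > mean(other)."""
    diffs = [a - b for a, b in zip(ours, other)]
    n = len(diffs)
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, t_sf(t, n - 1)


# Hypothetical accuracies (%) at s = 1..5 selected features; illustration only.
acc_ours = [80.0, 82.5, 85.0, 86.0, 88.0]
acc_other = [78.0, 81.0, 82.0, 85.5, 84.0]
t_stat, p_value = paired_one_tailed_t(acc_ours, acc_other)
print(t_stat, p_value, p_value < 0.05)
```

The null hypothesis is rejected at the 5% level when the returned p-value is below 0.05.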

Justification of k-FSGFS based on K-means cluster

The justification of the proposed feature evaluation criterion based on community modularity was demonstrated by adopting the theory of K-means cluster to determine why k features with a higher Q value are more discriminative.

The K-means cluster68 is the most well-known clustering algorithm. It iteratively attempts to address the following objective: given a set of points in a Euclidean space and a positive integer c (the number of clusters), the points are split into c clusters to minimize the total sum of the squared Euclidean distances of each point to its nearest cluster center, which can be defined as follows:

$$J(c, \mu) = \sum_{i=1}^{n} \lVert x_i - \mu_{c(i)} \rVert^2 \tag{1}$$

where $x_i$ and $\mu_{c(i)}$ are the i-th sample point and its nearest cluster center, respectively, and $\lVert \cdot \rVert$ is the L2-norm.
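For concreteness, the K-means objective can be evaluated for a fixed set of centers as in the short sketch below. The function name `kmeans_objective` and the toy points are illustrative assumptions, and the squared Euclidean distance is used, as in the standard K-means formulation.

```python
import math


def kmeans_objective(points, centers):
    """Sum over all points of the squared distance to the nearest center."""
    return sum(min(math.dist(p, c) ** 2 for c in centers) for p in points)


# Toy data: two tight groups far apart along the first coordinate.
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
print(kmeans_objective(points, [(0.0, 1.0), (10.0, 1.0)]))  # two well-placed centers
print(kmeans_objective(points, [(5.0, 1.0)]))               # a single central center
```

Placing one center in each group gives a much smaller objective than a single shared center, which is the sense in which a small J corresponds to compact clusters.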

In the feature weighting K-means, the feature that minimizes within-cluster distance and maximizes between-cluster distance is preferred, thus obtaining higher weight56. Confirming whether the features with a high community modularity Q value in our method can minimize within-cluster distance and maximize between-cluster distance is necessary.

According to Equation (7), $Q = \sum_{c=1}^{n_c}\left[\frac{l_c}{e} - \left(\frac{d_c}{2e}\right)^2\right]$. Increasing the Q value is equivalent to maximizing the inner edges $l_c$ and minimizing the outer edges $d_c$ ($l_c = d_{in}$, $d_c = d_{out}$). In other words, each community of the k-FSG in the k-features exhibits a large inner degree $d_{in}$ and a small out-degree $d_{out}$, so the sample points with the same label in the k-features space are classified into the same class as often as possible, and into different classes as rarely as possible, when these k features are good features as a group. The expected number of sample points in the k-features space that are correctly classified can be calculated through neighborhood components analysis69.

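Given the selected feature subset S and a candidate feature f, each sample point i in the S ∪ f feature space selects another sample point j as its neighbor with probability $P_{ij}$. $P_{ij}$ can be defined by a softmax over Euclidean distances as follows:

$$P_{ij} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2\right)}{\sum_{k \ne i} \exp\left(-\lVert x_i - x_k \rVert^2\right)}, \qquad P_{ii} = 0 \tag{2}$$

where $x_i$ denotes sample i restricted to the features in S ∪ f. Under this stochastic selection rule, we can compute the probability $P_i$ that point i will be correctly classified (denote the set of points in the same class as i by $C_i = \{ j \mid c_i = c_j \}$):

$$P_i = \sum_{j \in C_i} P_{ij} \tag{3}$$

Hence, the expected number of sample points in the S ∪ f space that are correctly classified into their own class (ENC) is defined by

$$ENC(f \cup S) = \sum_{i=1}^{n} P_i = \sum_{i=1}^{n} \sum_{j \in C_i} P_{ij} \tag{4}$$

Feature f with larger ENC is more discriminative.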
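The ENC score can be illustrated with a small sketch that follows the stochastic neighbor rule of neighborhood components analysis, using a softmax over squared Euclidean distances with no learned transformation. The function name `expected_correct` and the toy data are assumptions made for illustration only.

```python
import math


def expected_correct(points, labels):
    """Expected number of correctly classified points under the NCA rule."""
    n = len(points)
    enc = 0.0
    for i in range(n):
        # Unnormalised neighbour weights exp(-||x_i - x_j||^2), with P_ii = 0.
        w = [0.0 if j == i else math.exp(-math.dist(points[i], points[j]) ** 2)
             for j in range(n)]
        total = sum(w)
        if total == 0.0:
            continue  # assumption: a point whose weights all underflow adds 0
        # P_i: probability that the chosen neighbour shares the label of i.
        enc += sum(w[j] for j in range(n) if labels[j] == labels[i]) / total
    return enc


labels = [0, 0, 1, 1]
separating = [(0.0,), (0.1,), (3.0,), (3.1,)]  # feature aligned with the classes
mixing = [(0.0,), (3.0,), (0.1,), (3.1,)]      # feature that mixes the classes
print(expected_correct(separating, labels))
print(expected_correct(mixing, labels))
```

On these four samples, the feature that separates the classes scores close to n = 4, whereas the feature that mixes them scores close to 0, so the first feature would be preferred.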

According to Eqs. 2, 3, 4, maximizing ENC is mutually equivalent to minimizing the K-means cluster objective J(c, μ).

(1) Proof: minimizing J(c, μ) ⇒ maximizing ENC(f ∪ S)

Given feature f ∈ F − S, Eq.2 is substituted into Eq.4. Thus,

where Dmax = max{D1, D2, ..., Dn} and c is the number of clusters.

The lower bound of ENC( f ∪ S) is defined by ENCL_bound.

ENC( f ∪ S) can be maximized simultaneously by maximizing its lower bound ENCL_bound and equivalently minimizing  .

As we know,  , which denotes that lower bound ENCL_bound has been maximized. ENC(f ∪ S) obtains the maximum value when the K-means objective (Eq. 1) is optimized for the minimum.

(2) Proof: maximizing ENC(f ∪ S) ⇒ minimizing J(c, μ)

Based on the results in proof (1),  Dmin = min{D1, D2, ..., Dn}  is equivalent to minimize while maximizing the ENC(f ∪ S), and because  Hence, the K-means cluster function J(c, μ) is minimized while  is minimized and ENC(f ∪ S) is maximized.

J(c, μ) in the S ∪ f space is minimized when the community modularity Q value of the SG in the S ∪ f space is high, which indicates that the features selected by the proposed method can minimize within-cluster distance. Similarly, the expected number of points incorrectly classified is defined by ENIC(f ∪ S) = n − ENC(f ∪ S), where n is the number of samples. A small ENIC(f ∪ S) results in few edges between communities and a large between-cluster distance. A feature subset with a high Q value is therefore highly relevant: it not only minimizes within-cluster distance but also maximizes between-cluster distance.

Discussion

In this study, a novel feature subset evaluation criterion using the community modularity Q value of k-features sample graphs (k-FSGs) is presented to measure the relevance of a feature subset with respect to the target variable C. To address the redundancy problem of ranking in filter methods, the sample graph in k-features, which captures the relevant independency among feature subsets, is utilized rather than conditional MI criteria. By combining these two points, a new FS method, namely, k-FSGFS, is developed for feature subset selection. The method retains as many interdependent groups as possible during FS. The proposed k-FSGFS works well and outperforms other methods in most cases. It achieves better or comparable classification accuracy with a small feature subset, which demonstrates its ability to select a discriminative feature subset. The experimental results also verify that interdependent groups commonly exist in real datasets and play an important role in classification. Unlike the other methods used for comparison, the proposed method accurately evaluates the discriminative power of a feature subset as a group. The Fisher method, which is an individual evaluation criterion, cannot eliminate the redundancy in a feature subset, which reduces classification performance; the experimental results for the Fisher method confirm this. The MI-based methods, such as mrmr, MIFS_U, and CMIM, consider the relevance and redundancy among feature subsets as a group and are superior to the Fisher method. However, these MI-based methods can only approximately estimate the relevance and redundancy in a feature subset (for example, the mrmr method estimates the redundancy of a feature subset as a group from the redundancy between all pairs of features) because of the difficulties in accurately computing the probability density function. The results in Table 2 and Figs 1 and 2 indicate that the mrmr, MIFS_U, and CMIM methods perform better than the Fisher method but worse than the proposed method.

From the above, our method performs better than MI-based methods in most cases. In our method, a larger inter-class distance implies that the local margin of any sample is large. By the large margin theory70, the upper bound of the leave-one-out cross-validation error of a nearest-neighbor classifier in the feature space is then minimized, and the classifier usually generalizes well on unseen test data70,71. However, traditional mutual information-based relevance evaluation between a feature and the class cannot accurately measure the discriminative power of a feature. To illustrate this with a simple example, the features f1 and f2 and the class vector C are defined as follows:

According to MI-based methods, feature f1 has the same relevancy as f2. In our method, feature f2 has more discriminative power than f1 because the community modularity Q in feature f2 is larger than that in feature f1. Intuitively, feature f2 should be more relevant than f1 because its between-class distance is larger. However, the MI-based methods cannot capture the difference between f1 and f2. Therefore, our relevancy evaluation criterion based on community modularity Q is more efficient and accurate.
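The point can be checked numerically with a small sketch. The vectors below are hypothetical stand-ins chosen for illustration and are not the f1, f2, and C used by the authors; the helper names are likewise assumptions. Mutual information computed on discrete values depends only on how feature values co-occur with class labels, not on how far apart the values are.

```python
import math
from collections import Counter


def mutual_information(feature, labels):
    """I(F; C) in bits for a discrete feature and discrete class labels."""
    n = len(feature)
    pf, pc = Counter(feature), Counter(labels)
    joint = Counter(zip(feature, labels))
    return sum((cnt / n) * math.log2((cnt / n) / ((pf[f] / n) * (pc[y] / n)))
               for (f, y), cnt in joint.items())


def between_class_gap(feature, labels):
    """Largest difference between the per-class means of a feature."""
    means = []
    for y in set(labels):
        vals = [v for v, lab in zip(feature, labels) if lab == y]
        means.append(sum(vals) / len(vals))
    return max(means) - min(means)


# Hypothetical vectors: both features split the classes perfectly,
# but f2 places the two classes much further apart than f1.
C = [0, 0, 0, 1, 1, 1]
f1 = [1, 1, 1, 2, 2, 2]
f2 = [1, 1, 1, 9, 9, 9]
print(mutual_information(f1, C), mutual_information(f2, C))
print(between_class_gap(f1, C), between_class_gap(f2, C))
```

Both features carry the same mutual information with the class, while the between-class gap of f2 is eight times that of f1, which is the kind of difference a distance-based criterion can register.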

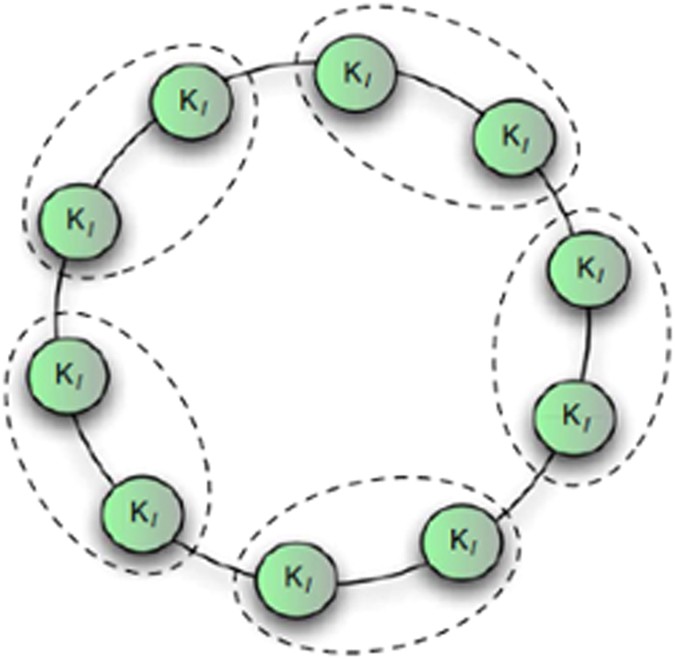

However, in practice, the proposed method is not always effective for all types of datasets, such as imbalanced datasets, especially when one class has far fewer samples than the other classes. For example, in the Lung-cancer dataset, our method performs worse than Simba-sig and G-Flip-sig. This is because modularity optimization is widely criticized for its resolution limit72, illustrated in Fig. 5, which may prevent the approach from detecting clusters that are comparatively small with respect to the graph as a whole. As a result, the maximum modularity Q may not correspond to a good community structure; that is, features with a high Q value may be irrelevant. In addition, the KNN search needs to be conducted iteratively in our method; thus, in terms of time complexity, the efficiency of our method is low for large datasets in real applications. Our future work will focus on resolving these problems.

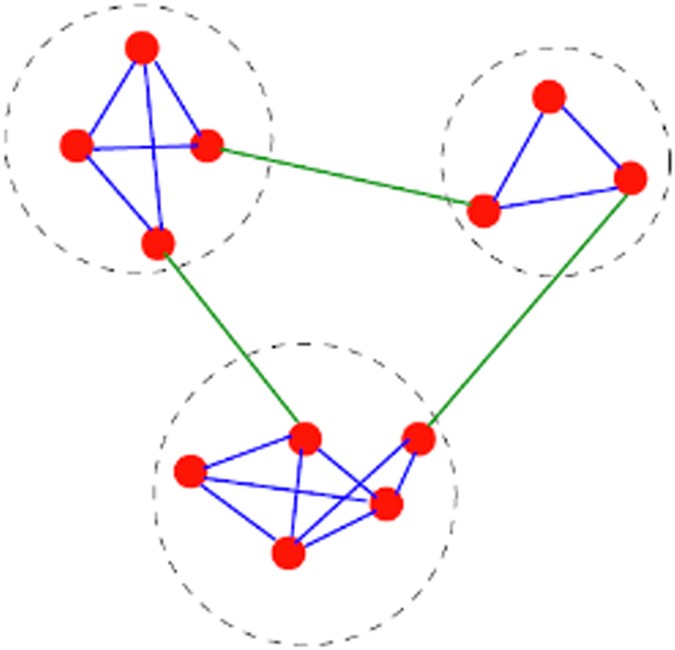

The natural community structure of the graph, represented by the individual cliques (circles), is not recognized by optimizing modularity, if the cliques are smaller than a scale depending on the size of the graph. In this case, the maximum modularity corresponds to a partition whose clusters include two or more cliques (like the groups indicated by the dashed contours)72.
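The resolution limit can be reproduced on the classic ring-of-cliques graph, in which identical cliques are joined in a ring by single edges. The sketch below evaluates the modularity $Q = \sum_c [l_c/e - (d_c/2e)^2]$ in closed form when `group` consecutive cliques are merged into each community; the function name and the chosen sizes are illustrative assumptions.

```python
def ring_of_cliques_q(n_cliques, clique_size, group):
    """Modularity when `group` consecutive cliques form each community.

    Assumes n_cliques is divisible by group and adjacent cliques are
    joined by exactly one edge, closing into a ring.
    """
    inner_per_clique = clique_size * (clique_size - 1) // 2
    e = n_cliques * (inner_per_clique + 1)        # clique edges plus ring edges
    n_comm = n_cliques // group
    l_c = group * inner_per_clique + (group - 1)  # ring edges inside a group count as inner
    d_c = group * (2 * inner_per_clique + 2)      # each clique touches two ring edges
    return n_comm * (l_c / e - (d_c / (2 * e)) ** 2)


# 30 cliques of 5 nodes: merging pairs of cliques gives a higher Q.
print(ring_of_cliques_q(30, 5, 1), ring_of_cliques_q(30, 5, 2))
# 4 cliques of 5 nodes: the natural partition (one clique each) wins.
print(ring_of_cliques_q(4, 5, 1), ring_of_cliques_q(4, 5, 2))
```

With 30 cliques, the partition that merges neighbouring cliques scores higher than the natural one, whereas with only 4 cliques the natural partition wins, which shows that the effect depends on the size of the graph as a whole.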

Methods

In this paper, a new feature evaluation criterion based on the community modularity Q value is proposed to evaluate the class-dependent correlation73 of features as a group instead of identifying the discriminatory power of a single feature. Detailed information on our method is presented in Algorithm 2. The innovations of our work mainly include the following points.

1. The discriminatory power of features as a group can be evaluated exactly based on the community modularity Q value of sample graphs in k-features.
2. The proposed method can select features that have discriminatory power as a group but have weak power as an individual.
3. Relevant independency instead of irrelevant redundancy between features is measured using the community modularity Q value rather than information theory.

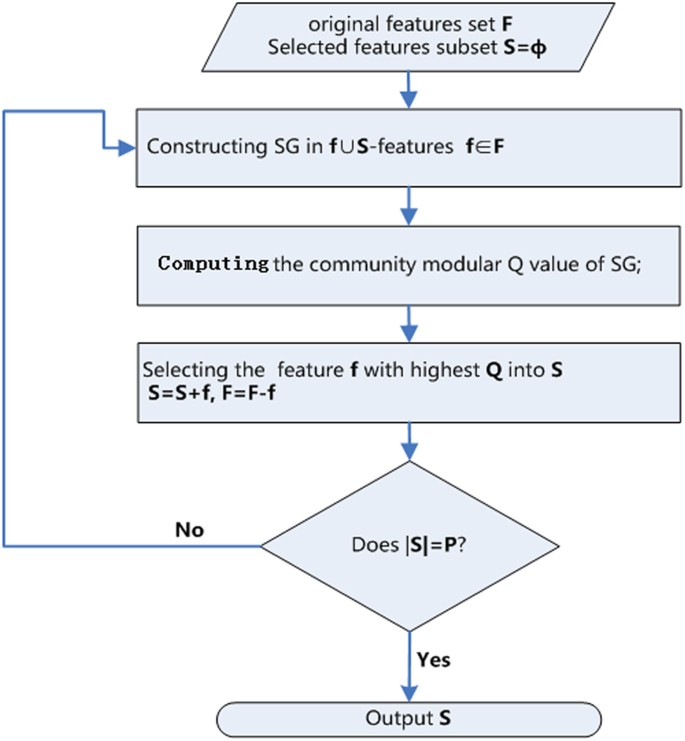

The proposed framework is presented in a flow diagram in Fig. 6.

Community modularity Q

The community structure in an undirected graph exhibits close connections within each community but relatively sparse connections among different communities31,32. Figure 7 shows a schematic example of a graph with three communities to demonstrate the community structure.

Reprinted figure with permission from72.

Thus far, the most widely used quality function is the modularity of Newman and Girvan32. Modularity Q can be written as follows:

$$Q = \frac{1}{2e}\sum_{i,j}\left(A_{ij} - \frac{d_i d_j}{2e}\right)\delta(C_i, C_j)$$

where the sum runs over all pairs of nodes, A is the adjacency matrix, e is the total number of edges of the graph, $d_i$ and $d_j$ represent the degrees of nodes i and j, respectively, and $C_i$ is the community to which node i belongs. The δ-function is equal to one if nodes i and j are in the same community and equal to zero otherwise. Another popular description of modularity Q can be written as follows:

$$Q = \sum_{c=1}^{n_c}\left[\frac{l_c}{e} - \left(\frac{d_c}{2e}\right)^{2}\right]$$

where $n_c$ is the number of communities, $l_c$ is the total number of edges joining the nodes of module c, and $d_c$ is the sum of the degrees of the nodes of c. The range of modularity Q is [−1, 1]. Modularity-based methods23 assume that a high value of modularity indicates good partitions. In other words, the higher modularity Q is, the more significant the community structure is.
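As an illustration of the two descriptions of modularity Q given above, the following minimal Python sketch evaluates both on a toy graph and checks that they agree. The function names and the toy graph (two triangles joined by one edge) are hypothetical and chosen only for this example; NumPy is assumed to be available.

```python
import numpy as np

def modularity_pairwise(A, labels):
    """Pairwise form: Q = 1/(2e) * sum_ij (A_ij - d_i*d_j/(2e)) * delta(C_i, C_j)."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    d = A.sum(axis=1)                          # node degrees
    two_e = d.sum()                            # 2e, since every edge is counted twice
    same = labels[:, None] == labels[None, :]  # delta(C_i, C_j) as a boolean matrix
    return float(((A - np.outer(d, d) / two_e) * same).sum() / two_e)

def modularity_by_community(A, labels):
    """Community form: Q = sum_c [ l_c/e - (d_c/(2e))^2 ]."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    d = A.sum(axis=1)
    e = d.sum() / 2.0
    q = 0.0
    for c in np.unique(labels):
        idx = labels == c
        l_c = A[np.ix_(idx, idx)].sum() / 2.0  # edges with both ends in community c
        d_c = d[idx].sum()                     # total degree of community c
        q += l_c / e - (d_c / (2.0 * e)) ** 2
    return float(q)

# Toy graph (an assumption for illustration): two triangles joined by one edge.
A_toy = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    A_toy[i, j] = A_toy[j, i] = 1.0
labels_toy = [0, 0, 0, 1, 1, 1]

q_pair = modularity_pairwise(A_toy, labels_toy)
q_comm = modularity_by_community(A_toy, labels_toy)
print(q_pair, q_comm)  # both equal 5/14, about 0.357
```

Here e = 7, each community has three internal edges and a total degree of 7, so Q = 2(3/7 − 1/4) = 5/14 under either form.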

Based on the definition of community, the within-class distance in a community is small and the between-class distance is large. Thus, if a graph has a clear community structure, the nodes in different communities can be locally and linearly separated easily, as shown in Fig. 7. Features that minimize the within-cluster distance and maximize the between-cluster distance are preferred and obtain a high weight. If the sample graph in k-features (k-FSG) has an apparent community structure, these k features have strong discriminative power as a group because the intra-class distance is small and the inter-class distance is large. This property can subsequently be justified with the theory of K-means clustering.

Sample graph in k-features (k-FSG)

Given an m × n dataset matrix (m samples and n features), the sample graph in k-features (k-FSG) is constructed as follows: an edge exists between samples Xi and Xj, that is, A(i, j) = 1, if Xi ∈ K-NN(Xj) or Xj ∈ K-NN(Xi), where Xi is the node corresponding to sample i, K-NN(Xi) is the set of the K nearest neighbors of node i, and A is the adjacency matrix, which is symmetric. K is a predefined parameter that is kept small, generally within the range 3–11.
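The construction rule above can be sketched in a few lines of Python. This is only a brute-force illustration, not the authors' Algorithm 1: the function name `build_kfsg`, the use of Euclidean distance, and the toy data are assumptions made for the example.

```python
import numpy as np

def build_kfsg(X, feature_idx, K=5):
    """Return the symmetric 0/1 adjacency matrix of the sample graph
    restricted to the features in feature_idx (requires K < number of samples)."""
    X = np.asarray(X, dtype=float)
    Z = X[:, list(feature_idx)]               # samples in the chosen k features
    m = Z.shape[0]
    # Brute-force pairwise squared Euclidean distances (assumed metric), O(m^2).
    D = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(D, np.inf)               # a sample is not its own neighbor
    nn = np.argsort(D, axis=1, kind="stable")[:, :K]  # K nearest neighbors per sample
    A = np.zeros((m, m), dtype=int)
    A[np.repeat(np.arange(m), K), nn.ravel()] = 1
    # "Or" rule: keep an edge if either sample lists the other as a neighbor.
    return np.maximum(A, A.T)

# Toy data (hypothetical): two well-separated groups along a single feature.
X_line = np.array([[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]])
A_demo = build_kfsg(X_line, [0], K=2)
print(A_demo)
```

With K = 2, each group of three samples forms a triangle and no edge crosses between the groups, which is the kind of clear community structure the text associates with a large Q value.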

The discussion above indicates that if the k-FSG exhibits a clear community structure, corresponding to a large Q value, these k features are highly informative as a group. The algorithm for constructing k-FSG is shown as Algorithm 1.

Algorithm 1: Pseudo-code for constructing k-FSG

Feature subset selection with sample graph in k-features

In this subsection, a novel k-FSG-based feature selection method (k-FSGFS) for ranking features is proposed based on k-FSG and community modularity Q. First, all the sample graphs in 1D feature space (k = 1) can be constructed based on Algorithm 1. The most informative feature is f1, where the sample graph in f1(1-feature) enables the largest community modularity Q value to be selected. Given feature f1,all the sample graphs in a two-feature space (k = 2) (f1 and q ∈ F − f1 space) and all the community modularity  values of the two FSGs are calculated. Feature q with the highest

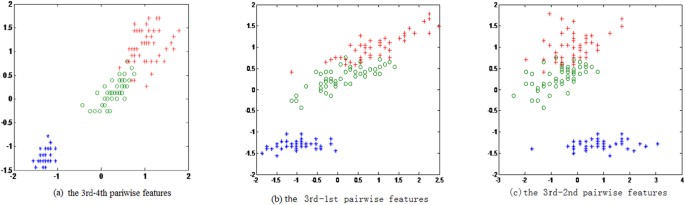

values of the two FSGs are calculated. Feature q with the highest  values will be selected in feature subset S. The procedure will not stop until the number of selected features satisfies |S| = P. To facilitate understanding of our evaluation scheme, we regard a UCI dataset, iris, as an example. The dataset consists of 150 samples and four features. The dataset is divided into three classes with 50 samples in each class. The iris dataset is processed with zero mean and unit variance according to 1-FSG in one feature. The 3rd feature with the highest Q value is the most informative as an individual. Given the 3rd feature, Fig. 8 illustrates the sample scatter points in 2-FSGs for the remaining features {1 2 4} in dataset iris. Three community modularity Q3↔q values are shown in Table 6 (q = 1, 2, 4). Figure 8 clearly indicate that the 2-FSG in 3 ↔ 4 feature space exhibits more obvious community structures, and the sample points in different classes in 3 ↔ 4 features can be easily separated. The results in Table 6 show that the 2-FSG in 3 ↔ 4 feature space provides the largest community modularity Q value. Thus, the 4th feature has strong informative power combined with the 3rd feature. Given the 3rd and the 4th features, the 1st and the 2nd features can be selected according to the 3-FSGs and 4-FSGs, respectively. The selected feature subset in iris using our method is {3 4 1 2}, which is the selected features of most of the methods.

values will be selected in feature subset S. The procedure will not stop until the number of selected features satisfies |S| = P. To facilitate understanding of our evaluation scheme, we regard a UCI dataset, iris, as an example. The dataset consists of 150 samples and four features. The dataset is divided into three classes with 50 samples in each class. The iris dataset is processed with zero mean and unit variance according to 1-FSG in one feature. The 3rd feature with the highest Q value is the most informative as an individual. Given the 3rd feature, Fig. 8 illustrates the sample scatter points in 2-FSGs for the remaining features {1 2 4} in dataset iris. Three community modularity Q3↔q values are shown in Table 6 (q = 1, 2, 4). Figure 8 clearly indicate that the 2-FSG in 3 ↔ 4 feature space exhibits more obvious community structures, and the sample points in different classes in 3 ↔ 4 features can be easily separated. The results in Table 6 show that the 2-FSG in 3 ↔ 4 feature space provides the largest community modularity Q value. Thus, the 4th feature has strong informative power combined with the 3rd feature. Given the 3rd and the 4th features, the 1st and the 2nd features can be selected according to the 3-FSGs and 4-FSGs, respectively. The selected feature subset in iris using our method is {3 4 1 2}, which is the selected features of most of the methods.

Different colors correspond to different classes. (a) The sample scatter points in features 3 and 4. (b) The sample scatter points in features 3 and 1. (c) The sample scatter points in features 3 and 2. The scatter plots show that the sample points in features 3 and 4 can be easily separated, which means that features 3 and 4 as a group have more discriminative power.

In short, given selected feature subset S, the feature f selected by our criterion can be defined as follows:

$$f = \underset{f \in F - S}{\arg\max}\; Q_{f \cup S}$$

where $Q_{f \cup S}$ is the community modularity value of the SG in features f ∪ S, and F and S are the set of all features and the selected feature subset, respectively.
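The greedy criterion above can be sketched as follows. This is a rough illustration rather than the authors' Algorithm 2: it assumes that the class labels define the community partition when Q is computed, that neighbors are found with brute-force Euclidean distance, and that ties are broken by index order. All function names and the toy data are hypothetical.

```python
import numpy as np

def knn_graph(Z, K):
    """Symmetric K-nearest-neighbor graph on the rows of Z (brute force)."""
    D = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(D, np.inf)
    nn = np.argsort(D, axis=1, kind="stable")[:, :K]
    A = np.zeros(D.shape)
    A[np.repeat(np.arange(len(Z)), K), nn.ravel()] = 1.0
    return np.maximum(A, A.T)

def modularity(A, y):
    """Modularity Q of the partition given by labels y (assumed: class labels)."""
    d = A.sum(axis=1)
    two_e = d.sum()
    same = y[:, None] == y[None, :]
    return float(((A - np.outer(d, d) / two_e) * same).sum() / two_e)

def kfsgfs_sketch(X, y, P, K=5):
    """Greedy forward selection: at each step add the feature f in F - S
    that maximizes the modularity of the sample graph built on f together with S."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    std = X.std(axis=0)
    std[std == 0] = 1.0                       # guard against constant features
    X = (X - X.mean(axis=0)) / std            # zero mean, unit variance
    selected, remaining = [], list(range(X.shape[1]))
    while remaining and len(selected) < P:
        scores = {f: modularity(knn_graph(X[:, selected + [f]], K), y)
                  for f in remaining}
        best = max(scores, key=scores.get)
        selected.append(best)
        remaining.remove(best)
    return selected

# Toy data (hypothetical): column 0 interleaves the classes, column 1 separates them.
y_demo = np.array([0] * 10 + [1] * 10)
noise = np.concatenate([2.0 * np.arange(10), 2.0 * np.arange(10) + 1.0])
informative = np.concatenate([np.linspace(0, 1, 10), 10 + np.linspace(0, 1, 10)])
X_demo = np.column_stack([noise, informative])
ranking = kfsgfs_sketch(X_demo, y_demo, P=2, K=3)
print(ranking)  # [1, 0]: the class-separating column is ranked first
```

Each step rebuilds one graph per candidate feature, which is why graph construction dominates the running time of this kind of procedure.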

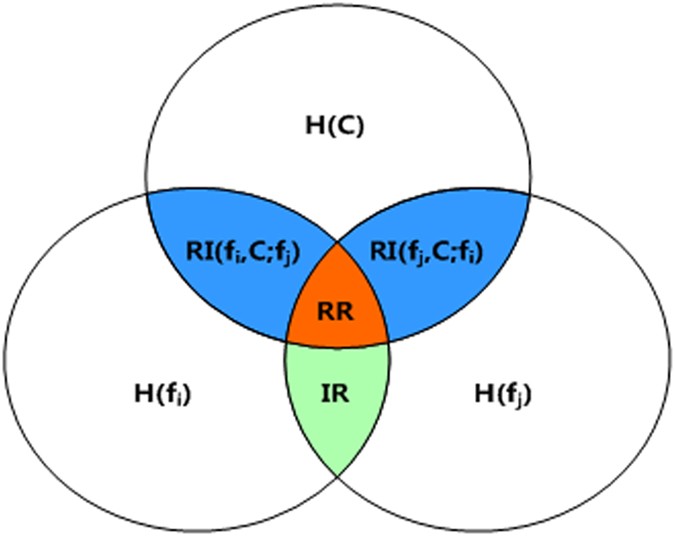

Relevancy analysis

Ranking-based filter methods cannot handle high redundancy among the selected features. To solve this problem, conditional MI (CMI) is applied in this study to obtain the relevant independency (RI) or relevant redundancy74 instead of the irrelevant redundancy between features, as shown in Fig. 9. $RI(f_i, C; f_j)$ is the amount of information that feature $f_i$ provides about target variable C when feature $f_j$ is given, that is, $RI(f_i, C; f_j) = I(f_i, C \mid f_j)$; $RI(f_j, C; f_i)$ is defined similarly. In other words, if $RI(f_i, C; f_j)$ is large, adding feature $f_i$ provides additional information when feature $f_j$ has already been selected. However, calculating $RI(f_i, C; S)$ for a given selected feature subset S is difficult for MI-based methods. First, the available samples are often insufficient. Second, accurate estimation of the multivariate densities $P(f_1, f_2, \ldots, f_n, C)$ and $P(f_1, f_2, \ldots, f_n)$ is difficult. In MI-based methods, such as MIFS, mRMR, MIFS_U, CMIM, and CMIF, $RI(f_i, C; f_j)$ is often approximated in different ways. Therefore, MI-based methods cannot exactly evaluate $RI(f_i, C; S)$.
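To make the quantity I(fi, C | fj) concrete, the sketch below computes a simple plug-in (frequency-count) estimate of conditional mutual information for discrete variables. It is an illustration only and is not the FEAST implementation; the function name is hypothetical, and continuous features would first need to be discretized, which is not shown here.

```python
import numpy as np
from collections import Counter

def conditional_mutual_information(x, c, z):
    """Plug-in estimate of I(x; c | z) in bits for discrete sequences:
    sum over (x, c, z) of p(x,c,z) * log2( p(z) p(x,c,z) / (p(x,z) p(c,z)) )."""
    n = len(x)
    n_xcz = Counter(zip(x, c, z))
    n_xz = Counter(zip(x, z))
    n_cz = Counter(zip(c, z))
    n_z = Counter(z)
    cmi = 0.0
    for (xi, ci, zi), cnt in n_xcz.items():
        # The 1/n factors cancel inside the logarithm, leaving raw counts.
        ratio = cnt * n_z[zi] / (n_xz[(xi, zi)] * n_cz[(ci, zi)])
        cmi += (cnt / n) * np.log2(ratio)
    return float(cmi)

# XOR example: the class is determined only by the two features jointly.
f_i = [0, 0, 1, 1]
f_j = [0, 1, 0, 1]
C = [0, 1, 1, 0]
ri_xor = conditional_mutual_information(f_i, C, f_j)

# Redundant example: f_i is a copy of f_j, so it adds nothing once f_j is known.
ri_copy = conditional_mutual_information([0, 1, 0, 1], [0, 0, 1, 1], [0, 1, 0, 1])
print(ri_xor, ri_copy)  # 1.0 and 0.0
```

The XOR case shows a feature that is uninformative alone but fully informative given its partner, while the copied feature contributes no additional information. With many conditioning features the count tables become sparse, which reflects the estimation difficulty described above.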

The shaded area IR is the class-independent correlation between features fi and fj; another shaded area is the class-dependent correlation between features fi and fj with respect to class y. The shaded area RI is what we refer to as relevant independency, that is, the amount of information that two random variables can predict about a relevant one and that is not shared between them. See text for details.

In this study, the discriminative capability of k features as a group is evaluated using the community modularity Q value of the constructed k-FSG. A high Q value of k-FSG denotes large RI among the k features as a group, and the sample points in different classes can be separated well. Thus, the community modularity Q value of k-FSG can accurately reflect the relevant independency $RI(f_i, C; S)$ with respect to selected feature subset S, and it is used to measure relevant independency in place of MI theory. For verification, the iris dataset is used as an example. With the third feature already selected, the values of $RI(f_i, C; f_3)$ (i = 1, 2, 4) were calculated, as listed in Table 7. The table shows that $RI(f_4, C; f_3)$ is the largest, which demonstrates that the fourth feature $f_4$ provides the most additional information when the third feature is given. Similarly, the $Q_{3 \leftrightarrow 4}$ value is the highest in Table 6, in agreement with Table 7, which suggests that the community modularity Q value of k-FSG can replace MI to effectively evaluate the RI of feature subset S. Thus, our method can resolve relevant redundancy among selected features. CMI can be computed with the FEAST tool42.

Relevant independency $RI(f_i, C; S)$ between feature $f_i$ and selected feature set S is replaced by the community modularity Q value of the SG in $f_i \cup S$, which can be defined as follows:

$$RI(f_i, C; S) = Q_{f_i \cup S}$$

A larger value of $RI(f_i, C; S)$ indicates that $f_i$ is highly independent of the features in S but relevant to target variable C, and that it has strong informative power when combined with the features in S. These results indicate that our method can select features that have more relevancy as a group in terms of class and larger RI among the selected features.

The details of k-FSGFS are presented in Algorithm 2.

Algorithm 2: k-FSGFS: k-features sample graph based feature selection

Time complexity of k-FSGFS

Algorithm 2 shows that k-FSGFS mainly includes two steps. The first step is to construct k-FSG in the k-features space. The second step is to calculate the community modularity Q value of each k-FSG. The most time-consuming step is constructing k-FSG, whose time complexity is about $O(Pnm^{2})$, where n is the number of features in the feature space, m is the number of samples in the dataset, and P is the predefined number of features to select. Fortunately, fast K-nearest neighbor graph construction methods75,76 can be applied to the construction of k-FSGs, which reduces the time complexity from $O(Pnm^{2})$ to $O(Pnm^{1.14})$. In the second step, the time cost is approximately $O(m \log m)$. Thus, combining the two steps, the overall time cost of k-FSGFS is approximately $O(Pnm^{1.14} + m \log m)$.

Additional Information

How to cite this article: Zhao, G. and Liu, S. Estimation of Discriminative Feature Subset Using Community Modularity. Sci. Rep. 6, 25040; doi: 10.1038/srep25040 (2016).

References

Kalousis, A., Prados, J. & Hilario, M. Stability of feature selection algorithms: a study on high-dimensional spaces. Knowl Inf Syst. 12, 95–116 (2007).

Kamimura, R. Structural enhanced information and its application to improved visualization of self-organizing maps. Appl. Intell. 34, 102–115 (2011).

Saeys, Y., Inza, I. & Larrañaga, P. A review of feature selection techniques in bioinformatics. Bioinformatics. 23, 2507–2517 (2007).

Dy, J. G., Brodley, C. E., Kak, A., Broderick, L. S. & Aisen, A. M. Unsupervised feature selection applied to content-based retrieval of lung images. IEEE Trans. Pattern Anal. Mach. Intell. 25, 373–378 (2003).

Forman, G. & Alto, P. An extensive empirical study of feature selection metrics for text classification. J. Mach. Learn. Res. 3, 1289–1305 (2003).

Guyon, I., Weston, J., Barnhill, S. & Vapnik, V. Gene selection for cancer classification using support vector machines. Mach. Learn. 46, 389–422 (2002).

Bishop, C. M. In Neural Networks for Pattern Recognition 1st edn. Vol. 1 Ch. 5, 237–289 (Clarendon Press, Oxford. 1995).

Hall, M. A. & Smith, L. A. Practical feature subset selection for machine learning. J. Comput. Sci. 98, 4–6 (1998).

Kira, K. & Rendell, L. A. A practical approach to feature selection. Proc. Mach. Learn. UK 92, 1-55860-247-X (1992).

Kononenko, I. Estimating features: analysis and extension of RELIEF. Proc. Mach. Learn. 1994, Italy, Springer (1994).

Xia, H. & Hu, B. Q. Feature selection using fuzzy support vector machines. Fuzzy Optim Decis Mak. 5, 187–192 (2006).

Kohavi, R. & John, G. H. Wrappers for feature subset selection. Artif Intell. 97, 273–324 (1997).

Kohavi, R. in Wrappers for Performance Enhancement and Oblivious Decision Graphs 1st edn, Ch. 2, 125–235 (Stanford University, 1995).

Kohavi, R. & John, G. Wrappers for feature subset selection. Artif. Intell. 97, 273–324 (1997).

Guyon, I. & Elisseeff, A. An Introduction to Variable and Feature Selection. J. Mach. Learn. Res. 3, 1157–1182 (2003).

Sun, X. et al. Feature evaluation and selection with cooperative game theory. Pattern Recogn. 45, 2992–3002 (2012).

Bolón-Canedo, V., Sánchez-Maroño, N. & Alonso-Betanzos, A. A review of feature selection methods on synthetic data. Knowl. Inf. Syst. 34, 483–519 (2013).

Cover, T. M. & Thomas, J. A. In Telecommunications and Signal Processing in Elements of Information Theory 2nd edn. Vol. 3 Ch. 4, 230–302 (Wiley, 1991).

Blum, A. L. & Rivest, R. L. Training a 3-node neural networks is NP-complete. Neural. Netw. 5, 117–127 (1992).

Cedeno, M., Dominguez, J. Q., Cortina-Januchs, M. G. & Andina, D. Feature selection using sequential forward selection and classification applying artificial metaplasticity neural network. IEEE Conf. Ind. Electron. Soc. USA 2010, IEEE press (2010).

Kugler, M., Aoki, K., Kuroyanagi, S., Iwata, A. & Nugroho, A. S. Feature Subset Selection for Support Vector Machines using Confident Margin. IJCNN Int. Canada 2005, IEEE press (2005).

Zhou, X. & Mao, K. Z. LS bound based gene selection for DNA microarray data. Bioinformatics. 21, 1559–1564 (2005).

Vergara, J. R. & Estévez, P. A. A review of feature selection methods based on mutual information. Neural Comput & Applic. 24, 175–186 (2014).

Cheng, H. R. et al. Conditional Mutual Information-Based Feature Selection Analyzing for Synergy and Redundancy. ETRI Journal. 33, 211–218 (2011).

Kwak, N. & Choi, C. H. Input feature selection for classification problems. IEEE Trans. Neural. Netw. 13, 143–159 (2002).

Cang, S. & Yu, H. Mutual information based input feature selection for classification problems. Decis. Support Syst. 54, 691–698 (2012).

Peng, H., Long, F. & Ding, C. Feature selection based on mutual information: criteria of max-dependency, max-relevance and min-redundancy. IEEE Trans Pattern Anal. Mach. Intell. 27, 1226–1238 (2005).

Estévez, P. A., Tesmer, M., Perez, C. A. & Zurada, J. M. Normalized mutual information feature selection. IEEE Trans. Neural. Netw. 20, 189–201 (2009).

Hall, M. A. Correlation-based feature selection for discrete and numeric class machine learning. Proc. Mach. Learn. USA 2000, ACM press (2000).

Zhao, G. D. et al. Effective feature selection using feature vector graph for classification. Neurocomp. 151, 376–389 (2015).

Zhao, G. D. et al. EAMCD: an efficient algorithm based on minimum coupling distance for community identification in complex networks. Eur. Phys. J. B. 86, 14 (2013).

Newman, M. E. J. & Girvan, M. Finding and evaluating community structure in networks. Phys. Rev. E. 69, 026113 (2004).

He, X., Deng, C. & Niyogi, P. Laplacian score for feature selection. Proc. NIPS Canada 2005, MIT Press (2005).

Wang, J., Wu, L., Kong, J., Li, Y. & Zhang, B. Maximum weight and minimum redundancy: A novel framework for feature subset selection. Pattern Recogn. 46, 1616–1627 (2013).

Dash, M. & Liu, H. Consistency-based search in feature selection. J Artif Intell. 1, 155–176 (2003).

Zhao, Z. & Liu, H. Searching for interacting features. Proc. IJCAI. India 2007, IEEE press (2007).

Liu, H. & Yu, L. Feature selection for high-dimensional data: a fast correlation-based filter solution. Proc. ICML USA 2003, AAAI Press (2003).

Battiti, R. Using mutual information for selecting features in supervised neural net learning. IEEE Trans. Neural Netw. 5, 537–550 (1994).

Fleuret, F. Fast binary feature selection with conditional mutual information. J. Mach. Learn. Res. 5, 1531–1555 (2004).

Yu, L. & Liu, H. Efficient feature selection via analysis of relevance and redundancy. J. Mach. Learn. Res. 5, 1205–1224 (2004).

Parzen, E. On the estimation of probability density function and the mode. Ann. of Math. Stat. 33, 1065 (1962).

Brown, G., Pocock, A., Zhao, M. J. & Luján, M. Conditional Likelihood Maximisation: A Unifying Framework for Information Theoretic Feature Selection. J. Mach. Learn. Res. 13, 27–66 (2012).

Koller, D. & Sahami, M. Toward optimal feature selection. Proc. ICML Italy 1996, ACM press (1996).

Cheng, H., Qin, Z., Qian, W. & Liu, W. Conditional Mutual Information Based Feature Selection. KAM Int. 2008, China, ACM press (2008).

Cover, T. M. The best two independent measurements are not the two best. IEEE Trans. Syst. Man Cybern. 4, 116–117 (1974).

Ren, Y. Z., Zhang, G. J., Yu, G. X. & Li, X. Local and global structure preserving based feature selection. Neurocomp. 89, 147–157 (2012).

Hu, W., Choi, K.-S., Gu, Y. & Wang, S. Minimum-Maximum Local Structure Information for Feature Selection. Pattern Recogn. Lett. 34, 527–535 (2013).

Zhang, Z. & Hancock, E. A graph-based approach to feature selection. Graph-Based Represent. Pattern Recogn., 5, 205–214 (2011).

Zhang, Z. & Hancock, E. R. Hypergraph based information-theoretic feature selection. Pattern Recogn. Lett. 33, 1991–1999 (2012).

Zhang, Z. H. & Hancock, E. R. A Graph-Based Approach to Feature Selection. Proc. GbRPR Germany 2011, Springer press (2011).

Tibshirani, R. Regression Shrinkage and Selection Via the Lasso. JRSS-B. 58, 267–288 (1996).

Sun, S., Huang, R. & Gao, Y. Network-Scale Traffic Modeling and Forecasting with Graphical Lasso and Neural Networks. J. Transp. Eng. 138, 1358–1367 (2012).

Devore, J. & Peck, R. In Statistics: The Exploration and Analysis of Data 3rd edn, Vol. 10, Ch. 3, 341–468 (Duxbury, 1997).

Wright, S. The interpretation of population structure by F-statistics with special regard to systems of mating. Evolu. 19, 395–420 (1965).

Yang, Y. & Pedersen, J. O. A comparative study on feature selection in text categorization. Proc. ICML USA 1997, ACM press (1997).

Mladenic, D. & Grobelnik, M. Feature selection for unbalanced class distribution and Naive Bayes. Proc. ICML Slovenia 1999, ACM press (1999).

Forman, G. An extensive empirical study of feature selection metrics for text classification. J. Mach. Learn. Res. 3, 1289–1305 (2003).

Shang, W., Huang, H. & Zhu, H. A novel feature selection algorithm for text categorization. Exp. Syst. with Appl. 33, 1–5 (2007).

Ogura, H., Amano, H. & Kondo, M. Feature selection with a measure of deviations from Poisson in text categorization. Exp. Syst. with Appl. 36, 6826–6832 (2009).

Mengle, S. S. R. & Goharian, N. Ambiguity measure feature-selection algorithm. J. Am. Soc. Inf. Sci. Tec. 60, 1037–1050 (2009).

Wang, Y. & Ma, L. Z. FF-Based Feature Selection for Improved Classification of Medical. COMP. 2, 396–405 (2009).

Kira, K. & Rendell, L. A. A Practical Approach to Feature Selection. Proc. ICML UK 1992, 1-55860-247-X (1992).

Gilad-Bachrach, R., Navot, A. & Tishby, N. Margin Based Feature Selection-Theory and Algorithms. Proc. ICML Canada 2004, ACM press (2004).

Shawe-Taylor, J. & Sun, S. L. A review of optimization methodologies in support vector machines. Neurocomp. 74, 3609–3618 (2011).

Hsu, C. W. & Lin, C. J. A comparison of methods for multi-class support vector machines. IEEE Trans. Neural. Netw. 13, 415–425 (2002).

Sakar, C. O. A feature selection method based on kernel canonical correlation analysis and the minimum Redundancy-Maximum Relevance filter method. Exp. Syst. with Appl. 39, 3432–3437 (2012).

Kursun, O., Sakar, C. O., Favorov, O., Aydin, N. & Gurgen, F. Using covariates for improving the minimum redundancy maximum relevance feature selection method. Tur. J. Elec. Eng. & Comp. Sci. 18, 975–989 (2010).

Boutsidis, C., Drineas, P. & Mahoney, M. W. Unsupervised feature selection for the k-means clustering problem. Adv. Neural Inf. Process Syst. 6, 153–161 (2009).

Goldberger, J., Roweis, S., Hinton, G. & Salakhutdinov, R. Neighbourhood components analysis. Adv. Neural Inf. Process Syst. 17, 513–520 (2005).

Sun, Y. Iterative RELIEF for Feature Weighting: Algorithms, Theories, and Applications. IEEE Trans. Pattern Anal. Mach. Intell. 29(6), 1035–1051 (2007).

Chen, B., Liu, H. & Chai, J. Large Margin Feature Weighting Method via Linear Programming. IEEE T knowl Data En. 21(10), 1475–1488 (2009).

Fortunato, S. Community detection in graphs. Phys. Rep. 486, 75–174 (2010).

Qu, G., Hariri, S. & Yousif, M. A new dependency and correlation analysis for features. IEEE T. Knowl. Data En. 17, 1199–1207 (2005).

Martínez Sotoca, J. & Pla, F. Supervised feature selection by clustering using conditional mutual information-based distances. Pattern Recogn. 43, 2068–2081 (2010).

Garcia, V., Debreuve, E. & Barlaud, M. Fast k nearest neighbor search using GPU. Proc. IEEE Conf. Comput. Vision and Patter. Recog. USA 2008. IEEE Computer Society press (2008).

Dong, W., Charikar, M. & Li, K. Efficient K-Nearest Neighbor Graph Construction for Generic Similarity Measures. World Wide Web Int. 2011 India. IEEE press (2011).

Hoshida, Y. J. et al. Subclass Mapping: Identifying Common Subtypes in Independent Disease Data Sets. PLoS One. 2, e1195 (2007).

Acknowledgements

This work was supported by the Innovation Program of Shanghai Municipal Education Commission (No. 15ZZ106, No. 14YZ157), the Shanghai Natural Science Foundation (No. 14ZR1417200, No. 15ZR1417300), and the Climbing Peak Discipline Project of Shanghai Dianji University (No. 15DFXK01).

Author information

Contributions

G.Z. wrote the main text and prepared all the tables and figures. S.L. provided valuable suggestions and guidance during the progress of rewriting and modified the revised paper. All the authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/
