Abstract

Two new iterative methods for the simultaneous determination of all multiple as well as distinct roots of a nonlinear polynomial equation are established. They use two suitable corrections to achieve a higher computational efficiency than the existing methods in the literature. Convergence analysis shows that the orders of convergence of the newly constructed simultaneous methods are 10 and 12. Finally, numerical test examples are given to check the efficiency and numerical performance of these simultaneous methods.

1 Introduction

A wide range of theoretical and practical problems in the mathematical, economic, physical, and engineering sciences can be formulated as a polynomial equation of degree n with arbitrary real or complex coefficients:

where \(\zeta _{1},\ldots ,\zeta _{n}\) denote all the real or complex roots of (1). Approximating all roots of a nonlinear polynomial equation with simultaneous methods has many applications in science and engineering, because simultaneous iterative methods are less time consuming and can be implemented for parallel processing. Further details about their convergence properties, computational efficiency, and parallel processing may be found in [1–25] and the references cited therein. The main objective of this paper is to develop simultaneous methods which have a higher convergence order and are more efficient than the existing methods. A very high computational efficiency is achieved by using two suitable corrections [26, 27], which give convergence orders ten and twelve with a minimal number of function evaluations in each step.

1.1 Construction of simultaneous methods for multiple roots

Consider the two-step fourth-order Newton's method [26] for finding multiple roots of the nonlinear equation (1)

where σ is the multiplicity of the exact root, say ζ, of (1). We would like to convert (2) into a simultaneous method for extracting all the distinct as well as multiple roots of (1). We use the third-order Dong et al. method [26] as a correction to increase the efficiency and convergence order without requiring any additional evaluation of the function:

Suppose that the nonlinear polynomial equation (1) has n roots. Then

This implies

This gives

where \(\frac{1}{N_{i}(x_{i})}=\frac{f^{{\prime }}(x_{i})}{f(x_{i})}\) or

The multiple root equation (5) can be written as

Replacing \(x_{j}\) by \(x_{j}^{\ast }\) in (6), we have

where

Using (7) in the first step of (2), we have

Thus we have constructed a new simultaneous method (8) abbreviated as MNS10M for extracting all distinct as well as multiple roots of polynomial equation (1).

1.2 Convergence analysis

In this section, the convergence analysis of the two-step simultaneous method (8) is presented in the form of the following theorem.

Theorem 1

Let \(\zeta _{{1}},\ldots,\zeta _{n}\) be simple roots of (1). If \(x_{1}^{(0)},\ldots, x_{n}^{(0)}\) are the initial approximations of the roots respectively and sufficiently close to the actual roots, then the order of convergence of method (8) equals ten.

Proof

Let \(\epsilon _{i}=x_{i}-\zeta _{i},\epsilon _{i}^{\prime }=y_{i}-\zeta _{i} \), and \(\epsilon _{i}^{{\prime \prime }}=z_{i}-\zeta _{i}\) be the errors in \(x_{i}\), \(y_{i}\), and \(z_{i}\) approximations respectively. Consider the first step of (8), which is

where \(N(x_{i})=\frac{f(x_{i})}{f^{\prime }(x_{i})}\). Then, obviously, for distinct roots, we have

Thus, for multiple roots, we have from (8)

where \(x_{j}^{\ast }-\zeta _{j}=\epsilon _{j}^{3}\) [26] and \(E_{i}= \frac{-\sigma _{j}}{(x_{i}-\zeta _{j})(x_{i}-x_{j}^{\ast })}\).

Thus

If it is assumed that the absolute values of all errors \(\epsilon _{j}\ (j=1,2,3,\ldots)\) are of the same order, say \(\vert \epsilon _{j} \vert =O(\vert \epsilon \vert )\), then from (9) we have

From the second equation of (8), we get

where \(F_{i}=\frac{-\sigma _{j}}{(y_{i}-\zeta _{j})(y_{i}-y_{j})}\). This implies

where \(C_{i}= \frac{\sum_{\overset{j=1}{j\neq i}}^{n}\epsilon _{j}^{{\prime }}F_{i}-\alpha }{\sigma _{i}+\epsilon _{i}^{{\prime }}\sum_{\overset{j=1}{j\neq i}}^{n}(\epsilon _{j}^{{\prime }}F_{i}-\epsilon _{i}^{{\prime }}\alpha )}\). By (10), \(\epsilon _{i}^{{\prime }}=O(\epsilon ^{5})\) and thus

which shows that the convergence order of method (8) is ten. Hence we have proved the theorem. □
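The error bookkeeping behind the first step can be sketched as follows. The sketch assumes that the first step of (8) has the Ehrlich-type form \(y_{i}=x_{i}-\sigma _{i}/ (1/N(x_{i})-\sum_{j\neq i}\sigma _{j}/(x_{i}-x_{j}^{\ast }) )\), which is inferred from the definitions of \(N(x_{i})\) and \(E_{i}\) in the proof, and it uses the logarithmic derivative identity \(f^{\prime }(x)/f(x)=\sum_{j}\sigma _{j}/(x-\zeta _{j})\):

```latex
\begin{align*}
\frac{1}{N(x_i)} &= \frac{\sigma_i}{\epsilon_i}
  + \sum_{j\neq i}\frac{\sigma_j}{x_i-\zeta_j},\\
\frac{1}{N(x_i)} - \sum_{j\neq i}\frac{\sigma_j}{x_i-x_j^{\ast}}
  &= \frac{\sigma_i}{\epsilon_i}
  + \sum_{j\neq i}\bigl(x_j^{\ast}-\zeta_j\bigr)E_i
  = \frac{\sigma_i}{\epsilon_i} + \sum_{j\neq i}\epsilon_j^{3}E_i,\\
\epsilon_i^{\prime}
  &= \epsilon_i - \frac{\sigma_i\,\epsilon_i}
       {\sigma_i+\epsilon_i\sum_{j\neq i}\epsilon_j^{3}E_i}
  = \frac{\epsilon_i^{2}\sum_{j\neq i}\epsilon_j^{3}E_i}
       {\sigma_i+\epsilon_i\sum_{j\neq i}\epsilon_j^{3}E_i}
  = O\bigl(\epsilon^{5}\bigr).
\end{align*}
```

If the second step squares this error up to a bounded factor, as the definition of \(C_{i}\) suggests, then \(\epsilon _{i}^{\prime \prime }=O ((\epsilon _{i}^{\prime })^{2} )=O(\epsilon ^{10})\), in agreement with the theorem.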

1.3 Improvement of efficiency and convergence order

To improve the convergence order of method (8) from 10 to 12 with the same number of function evaluations, we use

instead of \(x_{j}^{\ast }\) in (7), i.e.,

where \(Z_{j}^{\ast }\) is a fourth-order method [27]. Using (11) in the first step of (2), we have

Thus we have constructed a new simultaneous method (12), abbreviated as MNS12M, for extracting all multiple roots of polynomial equation (1). For multiplicity unity, method (12) determines all the distinct roots of (1) and is abbreviated as MNS12D.

1.4 Convergence analysis

In this section, the convergence analysis of the two-step simultaneous method (12) is given in the form of the following theorem.

Theorem 2

Let \(\zeta _{{1}},\zeta _{{2}},\ldots,\zeta _{n}\) be simple roots of (1). If \(x_{1}^{(0)}\), \(x_{2}^{(0)}\), \(x_{3}^{(0)},\ldots, x_{n}^{(0)}\) are the initial approximations of the roots respectively and sufficiently close to the actual roots, then the order of convergence of method (12) equals twelve.

Proof

Let \(\epsilon _{i}=x_{i}-\zeta _{i},\epsilon _{i}^{\prime }=y_{i}-\zeta _{i} \), and \(\epsilon _{i}^{{\prime \prime }}=z_{i}-\zeta _{i}\) be the errors in \(x_{i}\), \(y_{i}\), and \(z_{i}\) approximations respectively. Consider the first step of (12), which is

where \(N(x_{i})=\frac{f(x_{i})}{f^{\prime }(x_{i})}\). Then, obviously, for distinct roots, we have

Thus, for multiple roots, we have from (12)

where \(Z_{j}^{\ast }-\zeta _{j}=\epsilon _{j}^{4}\) [27] and \(G_{i}=\frac{-\sigma _{j}}{(x_{i}-\zeta _{j})(x_{i}-Z_{j}^{\ast })}\). Thus

If it is assumed that the absolute values of all errors \(\epsilon _{j}\ (j=1,2,3,\ldots)\) are of the same order, say \(\vert \epsilon _{j} \vert =O(\vert \epsilon \vert )\), then from (13) we have

From the second equation of (12), we have

where \(H_{i}=\frac{-\sigma _{j}}{ (y_{i}-\zeta _{j})(y_{i}-y_{j})}\). This implies

If it is assumed that the absolute values of all errors \(\epsilon _{j}\ (j=1,2,3,\ldots)\) are of the same order, say \(\vert \epsilon _{j} \vert =O(\vert \epsilon \vert )\), then we have

where \(D_{i}= \frac{\sum_{\overset{j=1}{j\neq i}}^{n}H_{i}}{\sigma _{i}+(\epsilon _{i}^{{\prime }})^{2}\sum_{\overset{j=1}{j\neq i}}^{n}H_{i}}\). By (14), \(\epsilon _{i}^{{\prime }}=O(\epsilon ^{6})\) and thus

which shows that the convergence order of method (12) is twelve. Hence we have proved the theorem. □
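The first-step error exponents used in the two proofs (5 with a third-order correction, 6 with a fourth-order one) can be checked numerically with a small experiment. The sketch below assumes an Ehrlich-type first step and emulates a correction of order p by setting \(x_{j}^{\ast }=\zeta _{j}+\epsilon ^{p}\) directly, which is only possible because the test roots are known. The function names and the test polynomial are hypothetical choices.

```python
import math


def first_step_error(roots, sigmas, eps, p, i=0):
    """Error of y_i after one Ehrlich-type step with emulated order-p corrections."""
    x = roots[i] + eps                        # every x_j has error eps
    stars = [z + eps ** p for z in roots]     # emulated corrections x_j*
    # f'/f evaluated exactly from the known roots and multiplicities
    log_derivative = sum(s / (x - z) for z, s in zip(roots, sigmas))
    coupling = sum(sigmas[j] / (x - stars[j])
                   for j in range(len(roots)) if j != i)
    y = x - sigmas[i] / (log_derivative - coupling)
    return abs(y - roots[i])


def observed_exponent(roots, sigmas, p, eps=0.02):
    """Slope of log(error) against log(eps), estimated by halving eps."""
    e_coarse = first_step_error(roots, sigmas, eps, p)
    e_fine = first_step_error(roots, sigmas, eps / 2, p)
    return math.log(e_coarse / e_fine) / math.log(2)


roots, sigmas = [1.0, -2.0, 3.0], [2, 1, 1]
print(observed_exponent(roots, sigmas, p=3))  # close to 5
print(observed_exponent(roots, sigmas, p=4))  # close to 6
```

Squaring these first-step errors in the second step gives the overall orders 10 and 12 claimed in Theorems 1 and 2.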

2 Computational analysis

Here we compare the computational efficiency and convergence behavior of the Petkovic et al. [28] method (abbreviated as PJM10D) and the new simultaneous iterative methods (8) and (12). As presented in [28], the efficiency of an iterative method can be estimated using the efficiency index given by

where D is the computational cost and r is the order of convergence of the iterative method. The numbers of additions and subtractions, multiplications, and divisions per iteration for all n roots of a given polynomial of degree m are denoted by \(AS_{m}\), \(M_{m}\), and \(D_{m}\), respectively. The computational cost can be approximated as

and thus (15) becomes

Applying (17) to the data given in Table 1, we calculate the percentage ratios \(\rho ((8),(X))\) and \(\rho ((12),(X))\) [28] given by

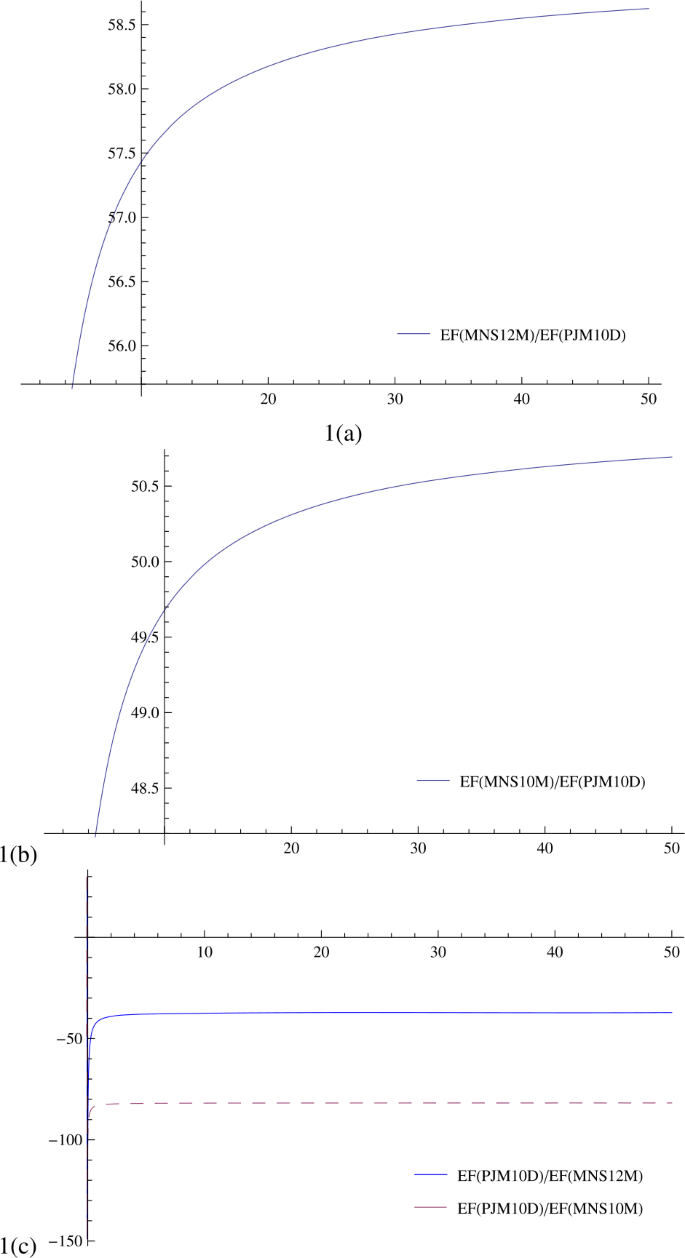

where X is the Petkovic method PJM10D. These ratios are graphically displayed in Fig. 1(a), (b), (c). It is evident from Fig. 1(a), (b), (c) that the new methods (8) and (12) are more efficient as compared to the Petkovic method PJM10D.

We also measure the CPU execution time; all calculations are done using Maple 18 on an Intel(R) Core(TM) i3-3110M CPU @ 2.4 GHz processor with a 64-bit operating system. We observe that the CPU times of the methods MNS10M and MNS12M are less than those of PJM10D, which shows the higher efficiency of our methods (8) and (12).
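The efficiency comparison can be sketched in a few lines. The sketch assumes that the efficiency index has the logarithmic form \(\log r/D\), that the cost D is a weighted sum of the operation counts, and that the percentage ratio is \((E_{\text{new}}/E_{X}-1)\times 100\). The weights and the operation counts below are made-up placeholders, not the data of Table 1, and the function names are hypothetical.

```python
import math


def computational_cost(adds_subs, mults, divs, w_as=1.0, w_m=1.0, w_d=1.0):
    """Weighted operation count D (the weights are illustrative assumptions)."""
    return w_as * adds_subs + w_m * mults + w_d * divs


def efficiency_index(order, cost):
    """Assumed logarithmic efficiency index log(r) / D."""
    return math.log(order) / cost


def percentage_ratio(e_new, e_ref):
    """Assumed percentage gain of a new method over a reference method X."""
    return (e_new / e_ref - 1.0) * 100.0


# Placeholder counts: both methods are given the same cost, so only the
# convergence order differs.
cost = computational_cost(adds_subs=100, mults=60, divs=20)
gain = percentage_ratio(efficiency_index(12, cost), efficiency_index(10, cost))
print(round(gain, 2))  # 7.92
```

With equal cost the ratio depends only on the orders; with real operation counts the cost term usually matters more than the order.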

3 Numerical results

Here some numerical examples are considered in order to demonstrate the performance of our two-step tenth- and twelfth-order simultaneous methods, namely \(MNS10M\) (8) and \(MNS12M\) (12). We compare our methods with the tenth-order method of Petkovic et al. [28] for finding all distinct roots of (1) (abbreviated as PJM10D). All the computations are performed using Maple 15 with 64-digit floating point arithmetic. We take \(\varepsilon =10^{-30}\) as the tolerance and use the following stopping criterion for estimating the roots:

where \(e_{i}\) represents the absolute error of function values in \((i)\)

Numerical test examples from [10, 28, 29] are provided in Tables 2, 3, and 4. In all tables, CO represents the convergence order, n represents the number of iterations, and CPU represents the execution time in seconds. All calculations are done using Maple 15 on an Intel(R) Core(TM) i3-3110M CPU @ 2.4 GHz processor with 4 GB of RAM (3.89 GB usable) and a 64-bit operating system. For multiplicity unity in MNS10M and MNS12M, we get the numerical results for distinct roots, i.e., MNS10D and MNS12D respectively. We observed that the numerical results of the methods MNS10D, MNS10M, MNS12D, and MNS12M are comparable with those of the PJM10D method but require fewer iterations.
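A convergence order such as the CO column can be estimated from three successive error magnitudes. The sketch below uses the common computational-order formula \(\log (e_{k+1}/e_{k})/\log (e_{k}/e_{k-1})\); whether the tables were produced with exactly this formula is an assumption, and the function name `computational_order` is hypothetical.

```python
import math


def computational_order(errors):
    """Estimate the convergence order from the last three error magnitudes."""
    e_prev, e_curr, e_next = errors[-3:]
    return math.log(e_next / e_curr) / math.log(e_curr / e_prev)


# Synthetic error sequence of a tenth-order method: e_{k+1} = e_k ** 10
print(computational_order([1e-1, 1e-10, 1e-100]))
```

Errors of a tenth- or twelfth-order method fall below double precision after one or two iterations, so real experiments need multi-precision arithmetic, such as the 64 digits used above.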

Example 1

Consider

with exact roots

The initial approximations have been taken as

Example 2

Consider

with exact roots

The initial approximations have been taken as

Example 3

Consider

with exact roots

The initial approximations have been taken as

3.1 Results and discussion

From Tables 2–4 and from Fig. 1(a)–(c), we conclude that

- Our methods MNS10D and MNS12D are more efficient than PJM10D in terms of the number of iterations and CPU time.
- Our methods MNS10M and MNS12M are applicable to multiple as well as distinct roots, whereas PJM10D is applicable to distinct roots only.

4 Conclusion

We have developed two simultaneous two-step methods of order ten and twelve, namely MNS10D, MNS10M, MNS12D, and MNS12M, for the determination of all the distinct as well as multiple roots of the nonlinear polynomial equation (1). From Tables 1–4, we observe that our methods are very effective and more efficient than the existing method PJM10D [28].

Availability of data and materials

Not applicable.

References

[1] Cosnard, M., Fraigniaud, P.: Finding the roots of a polynomial on an MIMD multicomputer. Parallel Comput. 15, 75–85 (1990)

[2] Kanno, S., Kjurkchiev, N., Yamamoto, T.: On some methods for the simultaneous determination of polynomial zeros. Jpn. J. Appl. Math. 13, 267–288 (1995)

[3] Proinov, P.D., Cholakov, S.I.: Semilocal convergence of Chebyshev-like root-finding method for simultaneous approximation of polynomial zeros. Appl. Math. Comput. 236, 669–682 (2014)

[4] Proinov, P.D., Vasileva, M.T.: On the convergence of family of Weierstrass-type root-finding methods. C. R. Acad. Bulg. Sci. 68, 697–704 (2015)

[5] Sendov, B., Andereev, A., Kjurkchiev, N.: Numerical Solutions of Polynomial Equations. Elsevier, New York (1994)

[6] Wang, X., Liu, L.: Modified Ostrowski’s method with eight-order convergence and high efficiency index. Appl. Math. Lett. 23, 549–554 (2010)

[7] Li, T.F., Li, D.S., Xu, Z.D., Fang, Y.I.: New iterative methods for non-linear equations. Appl. Math. Comput. 197, 755–759 (2008)

[8] Shams, M., Rafiq, N., Ahmad, B., Mir, N.A.: Inverse numerical iterative technique for finding all roots of nonlinear equations with engineering applications. J. Math. 2021, Article ID 6643514 (2021)

[9] Proinov, P.D., Vasileva, M.T.: On the convergence of high-order Ehrlich-type iterative methods for approximating all zeros of polynomial simultaneously. J. Inequal. Appl. 2015, Article ID 336 (2015)

[10] Mir, N.A., Muneer, R., Jabeen, I.: Some families of two-step simultaneous methods for determining zeros of non-linear equations. ISRN Appl. Math. 2011, Article ID 817174 (2011)

[11] Aberth, O.: Iteration methods for finding all zeros of a polynomial simultaneously. Math. Comput. 27, 339–344 (1973)

[12] Nourein, A.W.M.: An improvement on two iteration methods for simultaneously determination of the zeros of a polynomial. Int. J. Comput. Math. 6, 241–252 (1977)

[13] Proinov, P.D., Vasileva, M.T.: On the convergence of high-order Gargantini–Farmer-Loizou type iterative methods for simultaneous approximation of polynomial zeros. Appl. Math. Comput. 361, 202–214 (2019)

[14] Agarwal, P., Filali, D., Akram, M., Dilshad, M.: Convergence analysis of a three-step iterative algorithm for generalized set-valued mixed-ordered variational inclusion problem. Symmetry 13, Article ID 444 (2021)

[15] Sunarto, A., Agarwal, P., Sulaiman, J., Chew, J.V.L., Aruchunan, E.: Iterative method for solving one-dimensional fractional mathematical physics model via quarter-sweep and PAOR. Adv. Differ. Equ. 2021, Article ID 147 (2021)

[16] Attary, M., Agarwal, P.: On developing an optimal Jarratt-like class for solving nonlinear equations. Ital. J. Pure Appl. Math. 43, 523–530 (2020)

[17] Kumar, S., Kumar, D., Sharma, J.R., Cesarano, C., Agarwal, P., Chu, Y.M.: An optimal fourth order derivative-free numerical algorithm for multiple roots. Symmetry 12, Article ID 1038 (2020)

[18] Agarwal, P., Agarwal, R.P., Ruzhansky, M.: Special Functions and Analysis of Differential Equations. Chapman and Hall/CRC, London (2020)

[19] El-Sayed, A.A., Agarwal, P.: Numerical solution of multiterm variable-order fractional differential equations via shifted Legendre polynomials. Math. Methods Appl. Sci. 42, 3978–3991 (2019)

[20] Shah, N.A., Agarwal, P., Chung, J.D., El-Zahar, E.R., Hamed, Y.S.: Analysis of optical solitons for nonlinear Schrodinger equation with detuning term by iterative transform method. Symmetry 12, Article ID 1850 (2021)

[21] Ugur, D., Mehmet, A., Serkan, A.: On some polynomials derived from \((p, q)\)-calculus. J. Comput. Theor. Nanosci. 13, 7903–7908 (2016)

[22] Agarwal, P., El-Sayed, A.A.: Vieta–Lucas polynomials for solving a fractional-order mathematical physics model. Adv. Differ. Equ. 2020, Article ID 626 (2020)

[23] Agarwal, P., Dragomir, S.S., Jleli, M., Samet, M.B.: Advances in Mathematical Inequalities and Applications. Birkhäuser, Basel (2018)

[24] Ruzhansky, M., Cho, Y.J., Agarwal, P., Area, I.: Advances in Real and Complex Analysis with Applications. Birkhäuser, New York (2017)

[25] Agarwal, P., Singh, A., Kilicman, A.: Development of key-dependent dynamic S-boxes with dynamic irreducible polynomial and affine constant. Adv. Mech. Eng. 10(7), 1–17 (2018)

[26] Wu, Z., Li, X.: A fourth-order modification of Newton’s method for multiple roots. Int. J. Res. Rev. Appl. Sci. 10, 166–170 (2012)

[27] Dong, C.: A basic theorem of constructing an iterative formula of the higher order for computing multiple roots of an equation. Math. Numer. Sin. 11, 445–450 (1982)

[28] Petkovic, M.S., Petkovic, L.D., Džunic, J.: On an efficient simultaneous method for finding polynomial zeros. Appl. Math. Lett. 28, 60–65 (2014)

[29] Farmer, M.R.: Computing the Zeros of Polynomials using the Divide and Conquer Approach. Ph.D. Thesis, University of London (2014)

Funding

The authors declare that there is no funding available for this paper.

Author information

Contributions

All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shams, M., Rafiq, N., Kausar, N. et al. Efficient iterative methods for finding simultaneously all the multiple roots of polynomial equation. Adv Differ Equ 2021, 495 (2021). https://doi.org/10.1186/s13662-021-03649-6
