Abstract

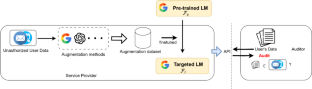

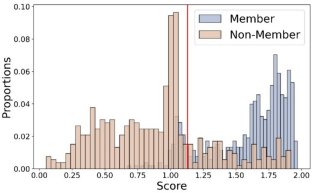

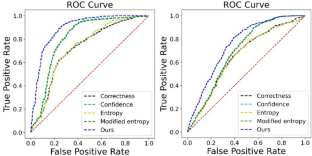

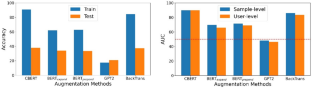

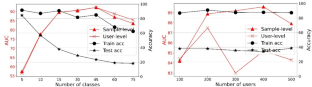

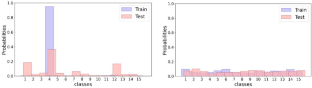

The unauthorized acquisition and use of personal textual data (e.g., social media comments and search histories) by certain entities is a growing trend. To uphold data protection regulations such as the Asia–Pacific Privacy Initiative (APPI) and to identify instances of unpermitted exploitation of personal data, we propose a novel and efficient audit framework that helps users conduct cognitive analysis to determine whether their textual data was used for data augmentation and for training a discriminative model. In particular, we focus on auditing models that use BERT as the backbone for discriminating text, which are at the core of popular online services. We first propose an accumulated discrepancy score to identify membership; it considers not only the response of the target model to the auditing sample but also the discrepancy between the responses of the pre-trained and fine-tuned models. We implement two types of audit methods (i.e., sample-level and user-level) according to our framework and conduct comprehensive experiments on two downstream applications to evaluate their performance. The experimental results show that our sample-level auditing achieves an AUC of 89.7% and an accuracy of 83%, whereas the user-level method audits membership with an AUC of 89.7% and an accuracy of 88%. Additionally, we analyze how augmentation methods affect auditing performance and explain the underlying reasons for these observations.
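The abstract does not give the formula for the accumulated discrepancy score, so the Python block below is only a minimal sketch under stated assumptions, not the authors' method. It assumes that the target (fine-tuned) model and the pre-trained model can each return a class-probability vector for the same input, and it combines (i) the target model's confidence in the audited sample's true label with (ii) the KL divergence between the fine-tuned and pre-trained outputs. The user-level score is assumed to be the mean of a user's sample scores, and the decision threshold is a hypothetical parameter. All function names (`sample_score`, `user_score`, `is_member`, `roc_auc`) are illustrative.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sketch: the exact accumulated discrepancy score is not given in
# the abstract. Here we ASSUME it sums the target model's true-label confidence
# and the KL divergence between fine-tuned and pre-trained output distributions.


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete distributions over the same label set."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def sample_score(p_finetuned: Sequence[float], p_pretrained: Sequence[float], label: int) -> float:
    """Assumed per-sample score: target confidence plus pre-trained/fine-tuned discrepancy."""
    return p_finetuned[label] + kl_divergence(p_finetuned, p_pretrained)


def user_score(samples: List[Tuple[Sequence[float], Sequence[float], int]]) -> float:
    """Assumed user-level score: mean of the per-sample scores of one user's texts."""
    scores = [sample_score(ft, pt, y) for ft, pt, y in samples]
    return sum(scores) / len(scores)


def is_member(score: float, threshold: float) -> bool:
    """Flag membership when the score exceeds a (hypothetical) calibrated threshold."""
    return score > threshold


def roc_auc(member_scores: Sequence[float], nonmember_scores: Sequence[float]) -> float:
    """Rank-based AUC: probability a member outscores a non-member (ties count 0.5)."""
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            wins += 1.0 if m > n else 0.5 if m == n else 0.0
    return wins / (len(member_scores) * len(nonmember_scores))


if __name__ == "__main__":
    # Toy binary-classification outputs; the pre-trained model is uninformative.
    member = sample_score([0.9, 0.1], [0.5, 0.5], label=0)
    nonmember = sample_score([0.55, 0.45], [0.5, 0.5], label=0)
    print(round(member, 3), round(nonmember, 3))
    print(is_member(member, threshold=1.0), is_member(nonmember, threshold=1.0))
    print(roc_auc([member], [nonmember]))
```

Under these assumptions, a sample whose fine-tuned output is both confident and far from the pre-trained output receives a higher score and is more likely to be flagged as a member. The rank-based `roc_auc` helper illustrates how an AUC such as those reported above can be computed from member and non-member scores.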


Data Availability

No datasets were generated or analyzed during the current study.


Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 62102052, U21A20463, and 62302071 and the Natural Science Foundation of Chongqing, China, under Grants cstc2021jcyj-msxmX0744 and CSTB2023NSCQ-MSX0693.

Author information


Contributions

Zhirui Zeng proposed the accumulated discrepancy score for determining whether a user's textual data was used for data augmentation and for training a discriminative model, and wrote the paper in LaTeX. Jialing He constructed the audit framework and specified its processes, implemented the audit program, and fixed bugs. Tao Xiang helped conduct the user-level audit experiments on the Amazon dataset and improved the logical flow of the manuscript. Ning Wang conducted the sample-level audit experiments on the Reddit dataset and improved the presentation of the experimental results. Biwen Chen designed the theoretical approach for determining the threshold of the accumulated discrepancy score and helped conduct the experiments on the impact of data augmentation methods. Shangwei Guo finalized the paper through evaluation discussion, logic validation, format checking, and language polishing.


Ethics declarations

Competing Interests

The authors have no competing interests as defined by Springer, or other interests that might be perceived to influence the results and/or discussion reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zeng, Z., He, J., Xiang, T. et al. Cognitive Tracing Data Trails: Auditing Data Provenance in Discriminative Language Models Using Accumulated Discrepancy Score. Cogn Comput (2024). https://doi.org/10.1007/s12559-024-10315-y


DOI: https://doi.org/10.1007/s12559-024-10315-y