The current CheckUser tool places limits on the maximum potential cost of accessing data, in case a result set is large. We need to set similar limits for the Special:Investigate Compare tab.

The current version of the query essentially does:

SELECT cuc_user, cuc_user_text, cuc_ip, cuc_ip_hex, cuc_agent, MIN(cuc_timestamp), MAX(cuc_timestamp), COUNT(*) FROM cu_changes WHERE cuc_type IN (0,1) AND (cuc_user_text IN <users> OR cuc_ip IN <IPs> OR cuc_ip_hex BETWEEN <min IP> AND <max IP>) GROUP BY cuc_user_text, cuc_ip, cuc_agent ORDER BY cuc_user_text, cuc_ip, cuc_agent LIMIT <limit>

This performs a filesort operation on an arbitrarily large number of rows before imposing any limit. Although an index on, say, (cuc_user, cuc_ip, cuc_agent, cuc_timestamp) (and changing cuc_user_text -> cuc_user) could avoid the filesort, there would still be no cap on how many rows are grouped before the limit is applied.

After discussing the problem with @Catrope, here are some proposed changes to place limits on the worst potential performance.

UI changes

- Place a low limit on the number of users and IPs that can be investigated at once by the check user

- Allow the check user to narrow the time range for their investigation (the current CheckUser tool does this)

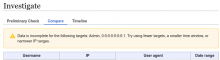

- Show warnings and advice if data are truncated

Query changes

One thing we could do would be to use efficient subqueries to access a limited number of rows, and perform the grouping and pagination on this size-limited subset. We would have to decide how to limit the subset, e.g. would we rather have some data for each target, or prioritise the most recent data, etc. For example, to get some data for each target, we could do something like:

SELECT a.cuc_user_text, a.cuc_ip, a.cuc_agent, MIN(a.cuc_timestamp), MAX(a.cuc_timestamp), COUNT(*) FROM ( -- for each user ( SELECT cuc_user_text, cuc_ip, cuc_agent, cuc_timestamp FROM cu_changes WHERE cuc_type IN (0,1) AND cuc_user = <user> AND cuc_timestamp >= <timestamp> ORDER BY cuc_timestamp DESC LIMIT <limit> ) UNION -- for each IP ( SELECT cuc_user_text, cuc_ip, cuc_agent, cuc_timestamp FROM cu_changes WHERE cuc_type IN (0,1) AND cuc_ip_hex = <IP> AND cuc_timestamp >= <timestamp> ORDER BY cuc_timestamp DESC LIMIT <limit> ) UNION -- for each IP range (NB highest first, to make use of cuc_ip_hex_time index) ( SELECT cuc_user_text, cuc_ip, cuc_agent, cuc_timestamp FROM cu_changes WHERE cuc_type IN (0,1) AND cuc_ip_hex >= <min IP> AND cuc_ip_hex <= <max IP> AND cuc_timestamp >= <timestamp> ORDER BY cuc_ip_hex DESC, cuc_timestamp DESC LIMIT <limit> ) ) AS a GROUP BY cuc_user_text, cuc_ip, cuc_agent ORDER BY cuc_user_text, cuc_ip, cuc_agent LIMIT <pager limit>;

- Separate subqueries for each target entered ensure we get some data per target. Each has a limit (e.g. if the limit of rows we should access overall is 5,000 and 5 targets have been entered, each could have a limit of 1000); order by descending timestamp

- The outer query performs a filesort, but on a limited number of rows. (Grouping could be done in PHP but would require customization of the pager and involve more data transfer)

- Repeating each subquery with a count can tell us whether each one hit the limit (this can't be told from the final aggregated results due to the union)

The biggest problem with making these (or similar) changes to the query is that a set of results may be incomplete if any of the subqueries hit their limit (and the missing results will not be accessible on the next page). Some ways to mitigate this are:

- See if we can do some analysis on the largest cu_changes tables to find a limit that is unlikely to be hit often but which will perform tolerably

- Reduce the likelihood of hitting the limit by limiting the number of targets that can be input at once

- Display a warning that the results may be incomplete, whenever any subquery hits the limit

- Display general advice to check users that they can reduce the likelihood of getting incomplete results by reducing the number of targets, reducing the width of IP ranges, and/or reducing the time window.