Running your mobile eye tracking study in Pupil Cloud: A Demo Workspace walkthrough

Research Digest

Author(s): Richard Dewhurst & Clara Kuper

July 15, 2022

At Pupil Labs we want to give you eye tracking “superpowers”. One aspect of this is making it really easy to set up and run a study. The best way to learn is to explore, so we ran a fun mock-up study at a gallery in Denmark and uploaded the data to a Demo Workspace that you can check out and play around with! This blog post will guide you through the concepts we use to make it all work. Big thanks to Gallery V58 for making this possible, and to all the visitors who took part!

In this article you will learn to:

Explore demo data in Pupil Cloud: The Demo Workspace is a place where you - and anyone on the internet - can explore real-world eye tracking data captured with Pupil Invisible eye tracking glasses. Play back recordings, investigate workflows, and download enriched data.

Collect and organise Pupil Invisible eye tracking data: Use Wearers and Templates to streamline data collection, and organise recordings easily.

Prepare for analysis with Projects and Events: Mark when important things happen in recordings with timestamps, and define Sections to focus on for further processing.

Enrich data with advanced algorithms: Reliably map gaze to features of the environment. No more frame-by-frame manual coding.

Visualise & Download: View heatmaps of gaze on paintings, download and work with the enriched data yourself.

Tracking picture viewing in an art gallery

We took Pupil Invisible glasses into a small art gallery in Denmark to provide you with real eye movement recordings from the field. You can use this as a guide when running a study with our tools yourself. We recommend you open the Workspace and follow along as you read this post!

Multiple paintings with heatmap overlay; Ingerlise Vikne painting with fixations overlaid.

Here’s the breakdown of what we did and how the data is represented

Five visitors browsed the gallery reflecting on artwork whilst wearing Pupil Invisible glasses. We set up 5 wearers in the Pupil Invisible Companion App and selected the correct one when we made recordings.

We gave people two slightly different activities, so we could home in on viewing specific paintings vs. browsing artwork in general. One activity was standing in front of selected paintings, and the other was walking around freely.

There was one exhibition downstairs in the gallery and another upstairs. We made separate recordings for each. This makes for 4 recordings per visitor: 20 recordings in total. Take a look at how this is reflected in the Drive view of the Workspace. Recording names will tell you who the visitor was, which activity they had, and where in the gallery they were. This information is also reflected in the labels.
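The recording count follows directly from the design: every visitor did both activities in both exhibitions. As a minimal sketch, the design can be enumerated like this. The visitor labels and the name pattern are illustrative assumptions, not the exact names used in the Workspace.

```python
from itertools import product

# Hypothetical labels; the Workspace uses its own Wearer and recording names.
visitors = [f"Visitor {i}" for i in range(1, 6)]
activities = ["standing", "walking"]
locations = ["downstairs", "upstairs"]

# One recording per visitor x activity x location combination.
recordings = [
    {
        "wearer": visitor,
        "activity": activity,
        "location": location,
        "name": f"{visitor} - {activity} - {location}",
    }
    for visitor, activity, location in product(visitors, activities, locations)
]

per_visitor = len(activities) * len(locations)
print(per_visitor, len(recordings))
```

Writing the design down this way makes it easy to check that no combination is missing before you start analysing.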

This is an example recording of a visitor walking through the first exhibition downstairs in the gallery, looking at whatever they found interesting. There are other recordings where the visitor is standing in front of particular paintings. Feel free to explore and familiarise yourself with the recordings and set-up!

Collect additional metadata directly in the Companion App

For each study, it's super useful to make your own recording Template. This saves you from having to make a separate questionnaire, or carry a notepad. Any metadata you need can be stored consistently together with each recording. You build Templates in Pupil Cloud, publish them to the App, and fill in the fields at recording time.

We’ve made a Template that reflects the design of this study, and captures it in the recording names. Answers to the fields below make sure we know what people were asked to do, and which exhibition they visited (upstairs or downstairs).

Set up dynamic recording name.

Create an Activity section with multiple choice.

Create a Location section with multiple choice.

You can add other form fields too, like checkboxes and text. See the full Template in the Workspace.

Prepare for analysis with Projects and Events

It is within Projects that the foundations are laid for more advanced analysis. See this getting started guide which covers the basics on how to create Projects and work with the data they contain. All the recordings from the art gallery are added to a Project called ‘Viewing Paintings’.

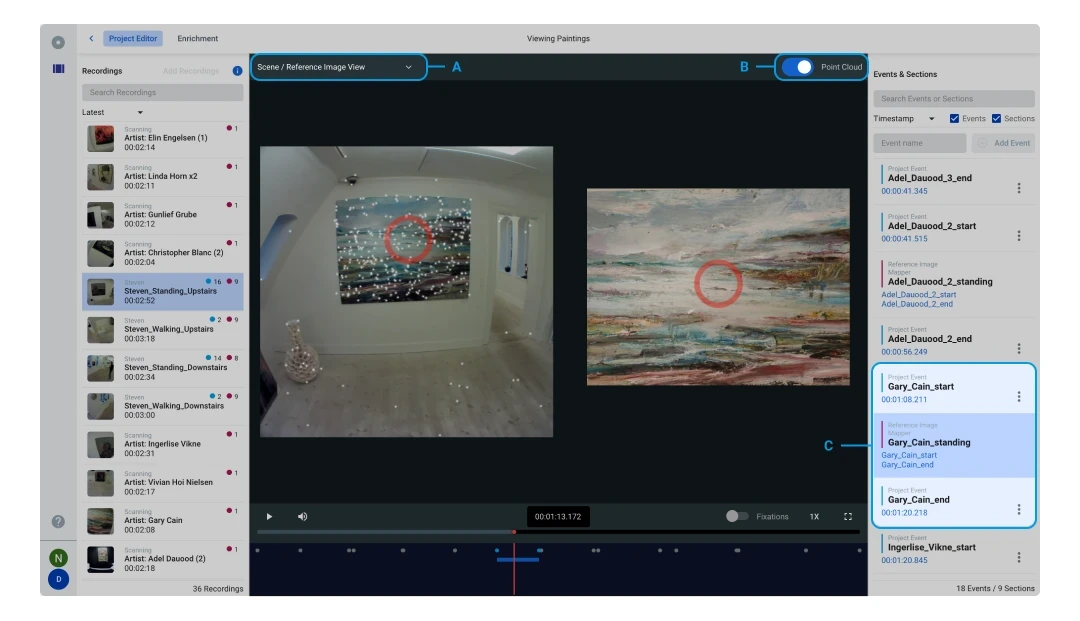

A: Add Events to annotate recordings in Viewing Paintings project.

B: Sections between Events.

The first thing to do in a Project is add some Events. Events are used in Projects to annotate recordings. In this example, and for all the visitors walking through the gallery, we’ve simply added two Events: one for when they started walking around and another for when they finished. You can click an Event to jump straight to it, which makes navigation easier.

When you have defined start and end events, you can do powerful data analysis on the Sections of time between them. If several recordings share the same events, you can run the analysis on all of the recordings simultaneously.
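To make the idea of a Section concrete, here is a rough sketch of how a start and end Event pair defines a time window in each recording, and how samples can be filtered to that window. The `Event` class, the event names `walking.begin` and `walking.end`, and the tuple layout of the gaze samples are all hypothetical; Pupil Cloud does this for you, and its internals may differ.

```python
from dataclasses import dataclass


@dataclass
class Event:
    recording_id: str
    name: str
    timestamp_ns: int


def section_bounds(events, start_name, end_name):
    """Map each recording that has both events to its (start, end) window."""
    starts = {e.recording_id: e.timestamp_ns for e in events if e.name == start_name}
    ends = {e.recording_id: e.timestamp_ns for e in events if e.name == end_name}
    return {
        rid: (starts[rid], ends[rid])
        for rid in starts
        if rid in ends and ends[rid] > starts[rid]
    }


def samples_in_section(samples, bounds):
    """Keep samples whose timestamp (first tuple element) lies in the window."""
    start, end = bounds
    return [s for s in samples if start <= s[0] <= end]


# Hypothetical events: rec-3 has no end event, so it yields no Section.
events = [
    Event("rec-1", "walking.begin", 1_000),
    Event("rec-1", "walking.end", 5_000),
    Event("rec-2", "walking.begin", 2_000),
    Event("rec-2", "walking.end", 9_000),
    Event("rec-3", "walking.begin", 3_000),
]
sections = section_bounds(events, "walking.begin", "walking.end")

# Hypothetical gaze samples for rec-1: (timestamp_ns, x, y).
gaze_rec1 = [(500, 0.1, 0.2), (1_500, 0.4, 0.4), (4_000, 0.5, 0.6), (6_000, 0.9, 0.9)]
inside = samples_in_section(gaze_rec1, sections["rec-1"])
```

Because the same event names are used in every recording, one call produces a Section per recording, which is what makes the batch analysis possible.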

The blue bar at the bottom of the recording in the image above indicates the Section where this visitor was walking around the exhibition downstairs in the gallery.

Okay, having two Events is quite straightforward, but there are other recordings in this Project with loads more Events. Why is that? It’s because we don’t always want to analyse all our data. Quite often we just want to concentrate on specific parts, particularly if we have long recordings. Remember that in this study we also want to zero in on particular paintings. That’s why we included the standing activity, where visitors were told which pictures to look at. So we have start and end Events for each of these too. Take a look at this example and how the Events reflect standing in front of particular paintings.

We’ve annotated the whole Project with Events. Now let’s get the data enriched with Reference Image Mapper.

Mapping eye movements automatically onto the art pieces using the Reference Image Mapper Enrichment

Let’s return to the Sections created in the Project. These are chunks of time within which we can now execute aggregated data analysis. Because we used the same Events consistently for every visitor, they all have the same Sections - one long Section for each exhibition, where the activity was to walk around freely, and lots of small Sections for each exhibition, where the activity was to stand in front of specific paintings. Pupil Cloud can run sophisticated algorithms we call Enrichments on each Section, streamlining analysis by crunching the data for all of our visitors in one go!

We used the Reference Image Mapper Enrichment in the gallery to automatically map gaze to the paintings people looked at. If you are observant, you might have noticed that there is a group of recordings in the Project that are simply scans of the artist's paintings with no gaze circle present. Under the hood, the Reference Image Mapper algorithm computes a 3D model of parts of the gallery using these scanning recordings (which are made with Pupil Invisible glasses). It automatically locates a reference image that we took of each painting within the 3D model, and maps gaze onto these images for every Section where the painting is present. Information overload?! Get up to speed with Reference Image Mapper by watching this explainer video. It’s also much easier to grasp if you select a Section in a recording containing a Reference Image Mapper Enrichment, turn on the Point Cloud toggle and explore the different views. The visualisations show the 3D model that was generated, and where gaze is mapped to on the reference image of the painting.

A: Switch between scene view and Reference Image view or view them both side by side.

B: Point Cloud representation of 3D model.

C: Reference Image Mapper section is currently selected.

Reference Image Mapper is the tool that allows you to transform the time-varying and constantly changing data that comes from Pupil Invisible into an easy-to-grasp 2D image. What's great about this is that your usual approaches to analysing gaze data on a static setup are going to work here. This is a huge time saver. Imagine having to map an average of 10,000 frames yourself, one by one, just for one recording. With Reference Image Mapper we give you gaze mapping superpowers!

Show me the data!

Head over to the Enrichments view in Pupil Cloud to see all the Enrichments created for a Project, along with whether each one has been processed yet or whether something went wrong.

A: All Enrichments are shown in the Enrichments view.

B: Right click to open enrichment menu.

From here just right click one of the Enrichments to view heatmaps of gaze on the paintings’ reference images. You can also download all of the data in convenient .csv formats for your own custom post-hoc analysis.

Open the Heatmap modal to view a heatmap mapped onto the reference image.
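If you would like to build a simple heatmap from the downloaded .csv data yourself, the core step is counting gaze samples per region of the reference image. The sketch below does this with a coarse grid using only the Python standard library. The column names, the inline sample data and the image size are assumptions made for illustration, so check the header of your own export before relying on them.

```python
import csv
import io

# Assumed column names; verify them against the header of your own export.
X_COL = "gaze position in reference image x [px]"
Y_COL = "gaze position in reference image y [px]"


def gaze_histogram(csv_text, image_width, image_height, bins_x=4, bins_y=3):
    """Count gaze samples per grid cell; counts[row][col], row 0 is the top."""
    counts = [[0] * bins_x for _ in range(bins_y)]
    for row in csv.DictReader(io.StringIO(csv_text)):
        if not row[X_COL] or not row[Y_COL]:
            continue  # gaze was not mapped onto the image for this sample
        x, y = float(row[X_COL]), float(row[Y_COL])
        if not (0 <= x < image_width and 0 <= y < image_height):
            continue  # ignore samples that fall outside the image
        col = int(x / image_width * bins_x)
        row_idx = int(y / image_height * bins_y)
        counts[row_idx][col] += 1
    return counts


# Tiny made-up sample: two points top-left, one bottom-right,
# one unmapped row and one point outside a 400 x 300 px image.
sample_csv = "\n".join(
    [f"{X_COL},{Y_COL}", "10,10", "15,20", "390,290", ",", "500,50"]
)
grid = gaze_histogram(sample_csv, image_width=400, image_height=300)
for line in grid:
    print(line)
```

A finer grid, followed by smoothing and an overlay on the reference image, would bring this closer to the heatmaps shown in Pupil Cloud. The coarse grid is used here only to keep the example easy to read.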

We encourage you to explore any of the data contained in this Project, and this Demo Workspace as a whole. It’s open to all. Play back recordings, and download raw data and Enrichments. You can also make your own Projects and add new Enrichments! (This is really useful since the existing Enrichments cannot be changed.)

Can you help me work with this data myself?

We can. For those who want to dive deeper and do further analysis themselves on the data exports from this art gallery study, check out the second part of this post: Exploring mobile eye tracking Data: A Demo Dataset Walkthrough