Abstract

Objective

While the use of medical scribes is rapidly increasing, there are no widely accepted standards for their training and duties. Because scribes use electronic health record (EHR) systems to support providers, inadequately trained scribes can increase patient safety risks. This paper describes the development of desired core knowledge, skills, and attitudes (KSAs) for scribes, which provide the curricular framework for standardized scribe training.

Materials and Methods

A research team used a sequential mixed qualitative methods approach. First, a rapid ethnographic study of scribe activities was performed at 5 varied health care organizations in the United States to gather qualitative data about knowledge, skills, and attitudes. The team's analysis generated preliminary KSA-related themes, which subject-matter experts further refined during a consensus conference. A modified Delphi study was then used to finalize the KSA lists.

Results

The team identified 90 descriptions of scribe-related KSAs and refined, categorized, and prioritized them for training development. The KSAs were ultimately organized into 3 lists: (1) a Hands-On Learning KSA list of 47 items amenable to simulation training, (2) a Didactic KSA list of 32 items appropriate for didactic lecture teaching, and (3) a Prerequisite KSA list of 11 items that scribes should learn before being hired or soon afterward.

Conclusion

We utilized a sequential mixed qualitative methodology to successfully develop lists of core medical scribe KSAs, which can be incorporated into scribe training programs.

Keywords: patient safety, electronic health records, sociotechnical systems, medical scribes, qualitative research, Delphi method

INTRODUCTION

After passage of the Health Information Technology for Economic and Clinical Health (HITECH) Act, health care organizations quickly responded to United States (US) federal incentives to implement electronic health record (EHR) systems.1 Many providers found that they were spending more time documenting encounters and less time on direct clinical care.2,3 An unintended consequence of widespread EHR adoption and increased provider burnout was a burgeoning medical scribe industry, which offers documentation assistance to providers.4,5 Scribes assist providers in documenting patient visits in the EHR and do not need clinical licensure. Some scribes do hold clinical credentials, such as medical assistants or nurses; however, most are preprofessionals with no clinical training, often college students hoping to pursue a medical or other clinical degree. Between 2015 and 2020, the scribe workforce grew from 15 000 to 100 000 scribes,6 and it continues to grow; one commercial scribing business reported an increase in remote scribe hires during the COVID-19 pandemic.7 Research has shown that changes to Centers for Medicare & Medicaid Services (CMS) documentation requirements have had no impact on note length,8 so scribes are expected to remain a significant presence in the medical workforce.

Currently, there are few regulations governing the scribe industry. CMS and The Joint Commission have released guidelines for scribe activities,9,10 but these are neither mandatory nor enforced. The current guidelines focus on discouraging unlicensed personnel from submitting orders and do not address the wide variety of functions scribes sometimes perform independently, including data entry,11 order entry,12 and documentation,13 which could pose risks to patients if providers do not carefully monitor the scribes' work. Commercial scribe companies supply the majority of scribes14,15 and often conduct their own training.16 However, many organizations and individual providers prefer to hire scribes directly and train them according to their own needs.17 The combination of multiple training models and a lack of standard training benchmarks therefore raises questions about the quality and safety of scribe use.18

Central to establishing training objectives is creating a set of competencies based on descriptions of core knowledge, skills, and attitudes (KSAs) that can guide training and evaluation.19 Establishing KSAs allows educators to develop best-practice guidelines, which can in turn be used to develop competencies. At this time, there are no rigorously developed KSAs for medical scribes.

Our goal was to outline KSAs for scribes that can inform the creation of EHR simulation-based training tools as well as didactic lectures on best practices. In this paper, we describe the process of creating scribe-related KSAs using rapid ethnography, an expert consensus conference of subject matter experts (SMEs), and a modified Delphi approach.

MATERIALS AND METHODS

Ethical review

The Institutional Review Boards (IRBs) or equivalent bodies at Oregon Health & Science University (OHSU) and at each study site approved the study.

Research process

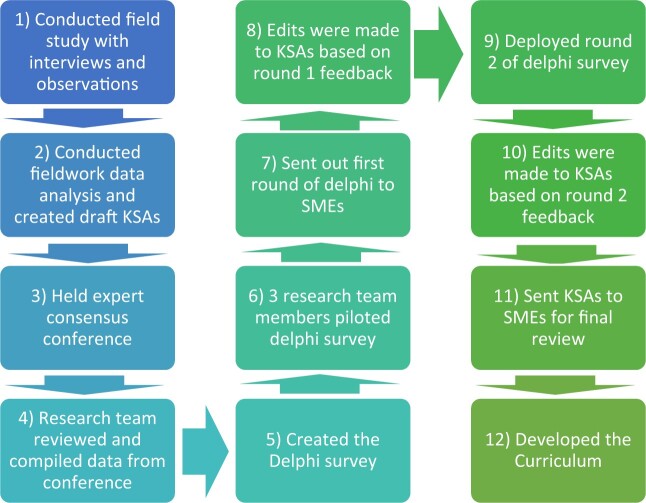

Major steps of the research process included field studies to identify scribe activities, an expert conference to generate a draft list of desirable KSAs, a modified Delphi process to refine the KSAs into actionable competencies, and, ultimately, the development of a training curriculum by the research team (Figure 1).

Figure 1.

Diagram of the research process.

(1) Conducted field study with interviews and observations (October 2017–January 2019)

To better understand the scribe role, we conducted ethnographic site visits, using purposive sampling to identify suitable organizations across the United States, and gathered data through interviews and observations of scribe activities in hospital, emergency department, and ambulatory settings.20,21 These organizations spanned disparate geographic settings, varied workflows, and various scribing models (in-person, preprofessional, professional, and remote). Table 1 summarizes the attributes of each site and its scribing model. Our research team was interdisciplinary, representing different perspectives and backgrounds, including social scientists, informaticians, providers, qualitative researchers, and scribe managers.

Table 1.

Breakdown of variation of scribe programs at each site

| | Site A | Site B | Site C | Site D | Site E |
|---|---|---|---|---|---|
| Location (all in United States) | Northwest | Northwest | East | Midwest | Northwest |
| Scribe model used | Preprofessional scribes | Preprofessional scribes | Medical assistants and nurses as scribes | Preprofessional scribes | Preprofessional scribes |
| Industry vs internal scribes | Internal scribes | Industry scribes | Internal scribes | Industry scribes | Industry scribes |
| In-person vs remote scribing | In-person scribing | In-person scribing | Remote scribing | In-person scribing | In-person scribing |

The term "in-person scribing" means that the provider and medical scribe worked together in person to document the patient encounter. "Remote scribing" means that the scribe worked from a location distant from the provider.

(2) Conducted fieldwork data analysis and created draft KSAs (February 2019–April 2019)

We transcribed and analyzed qualitative data from the site visits to inform the next stage of the study, an expert consensus conference. Three team members (SC, NS, and JA) extracted an initial list of attributes that the data identified as desirable KSAs for scribes. Data were analyzed using an inductive grounded theory approach, meaning that we did not begin with a list of preconceived codes. Codes arose directly from the data, and themes were generated after patterns were analyzed. The competency-related text was then analyzed further to identify subthemes: for example, if 50 text items were categorized under a theme, those 50 items were coded again to identify patterns within that theme. In a subsequent exercise, the research team reviewed all coding and sorted individual items into 2 broad lists: EHR-related items and non-EHR-related items.

(3) Held expert consensus conference (April 2019)

In the consensus development phase, we invited a panel of subject matter experts (SMEs) to review the 2 lists, with the goal of reaching consensus on optimal scribe-related KSAs. A 2-day in-person conference of SMEs was held to discuss, evaluate, and prioritize the fieldwork results and to provide input into the KSAs. Having used an expert consensus conference format successfully in another study,22,23 we expected this approach to help the SMEs reach consensus about KSAs. The end goal of the conference was a prioritized list of KSAs that could later be refined to inform a training toolkit for scribes and organizations.

The research team purposively selected and invited SMEs who were prominent in their professional domains within informatics, the scribe industry, patient safety, risk management, and medical education. We also invited scribe users (providers) and former scribes to capture the perspective of those on the front lines of clinical care delivery. Supplementary Table S1 lists the expert guests and their expertise as of 2019. The qualitative study results were presented to the full group, after which 5 breakout groups evaluated, clarified, and prioritized sections of the EHR-related KSA list. Breakout group membership was assigned in advance so that each group represented multiple perspectives. Participants then reconvened as a whole to review and refine the small group results. The same process was followed for the non-EHR-related KSA list.

(4) Research team reviewed and compiled data from conference (May 2019–June 2019)

The conference group sessions were recorded, and the audio transcripts were analyzed using an inductive, open coding approach to establish the definitive intent and wording of each KSA. In addition, the groups had generated flip charts during their discussions; these were saved as artifacts and compared during analysis. The flip charts listed, as bullet points, the medical scribe KSAs discussed in each small group. Each small group then completed a "dot" exercise to mark priorities: members were given 5–10 dots and asked to place a dot next to the KSAs they considered most important among those discussed. After the conference, one research team member transcribed the flip chart content into Word documents. As part of the coding process, 8 team members (SC, JA, JB, JG, KW, BO, VM, and CH) worked in dyads to read and review the transcripts and extract pertinent quotes about each KSA item. Each dyad included at least 1 researcher who had been present for the corresponding small group discussion. The dyads' output was compared with the participant-generated flip charts to ensure consistency. We then met as a team to reach consensus on these lists.
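The dot exercise amounts to a simple frequency count per small group. The sketch below is a hypothetical illustration, not the team's actual procedure: the `rank_by_dots` function and the sample flip-chart data are invented for this example, and it assumes each dot is recorded as the label of the KSA it was placed beside.

```python
from collections import Counter


def rank_by_dots(dots_placed: list[str]) -> list[tuple[str, int]]:
    """Rank KSA items by the number of dots they received.

    Each element of `dots_placed` is the label of the KSA next to which
    one dot was placed. Ties are broken alphabetically so the ordering
    is reproducible.
    """
    counts = Counter(dots_placed)
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical flip-chart record for one small group (illustrative only).
group_dots = ["typing speed", "EHR navigation", "typing speed",
              "medical terminology", "EHR navigation", "typing speed"]
for ksa, n in rank_by_dots(group_dots):
    print(f"{ksa}: {n}")
```

For the sample data, this prints typing speed (3 dots), then EHR navigation (2), then medical terminology (1). A raw count like this only orders items within one group; comparing groups would still require the team's qualitative review of transcripts and flip charts.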

(5–11) Created the Delphi survey (June 2019–May 2020)

We used a modified Delphi framework to develop the KSA lists iteratively in the months following the expert conference24–26 (Figure 1). The Delphi method is a structured approach to reaching consensus within a multidisciplinary group of SMEs, often spread across geographic locations,25 and has been used in numerous disciplines, including medicine.27–29 A Delphi approach typically proceeds through multiple iterations before final consensus is reached, with the aim of producing accurate and reliable information.25

The first step was to create the initial Delphi survey instrument. The surveys, developed in Qualtrics (Qualtrics, Seattle, WA), asked participants to mark each item as "Keep," "Not Keep," or "Change." Branching logic determined the next question: for example, if an expert selected "Not Keep" or "Change," a text box appeared in which they could explain that choice. Supplementary Appendix A contains a copy of one of our Delphi survey instruments.

After piloting the survey with 3 informatics faculty members and graduate students, we sent the first round to the SMEs and revised the KSAs based on their feedback. We then deployed a second round of the Delphi survey and revised the KSAs again based on the second-round feedback.
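The round-by-round tabulation of "Keep," "Not Keep," and "Change" responses can be sketched in Python. This is a minimal, hypothetical illustration, not the study's analysis procedure: the `Response` class, the `tabulate_item` function, and especially the 75% "Keep" threshold are assumptions introduced here, because the paper does not report a numeric consensus rule. Only the 3 response options and the comment prompt after "Not Keep" or "Change" come from the survey design described above.

```python
from collections import Counter
from dataclasses import dataclass

# Response options used in the survey instrument.
CHOICES = ("Keep", "Not Keep", "Change")

# Hypothetical cutoff for illustration only; the study does not report
# a numeric consensus threshold.
DEFAULT_KEEP_THRESHOLD = 0.75


@dataclass(frozen=True)
class Response:
    """One SME's answer for one KSA item."""
    choice: str
    comment: str = ""  # free text, prompted after "Not Keep" or "Change"

    def __post_init__(self) -> None:
        if self.choice not in CHOICES:
            raise ValueError(f"unknown choice: {self.choice!r}")


def tabulate_item(responses: list[Response],
                  threshold: float = DEFAULT_KEEP_THRESHOLD) -> dict:
    """Summarize one round of responses for a single KSA item.

    Items whose share of "Keep" votes meets the threshold are marked
    "retain"; all others are marked "revise". Any free-text comments
    are returned alongside the counts to guide rewording.
    """
    counts = Counter(r.choice for r in responses)
    keep_share = counts["Keep"] / len(responses) if responses else 0.0
    return {
        "counts": {c: counts[c] for c in CHOICES},
        "keep_share": keep_share,
        "status": "retain" if keep_share >= threshold else "revise",
        "comments": [r.comment for r in responses if r.comment],
    }


# Hypothetical responses from 12 SMEs to a single KSA item.
item = ([Response("Keep") for _ in range(9)]
        + [Response("Change", "Split into two items") for _ in range(2)]
        + [Response("Not Keep", "Out of scope for scribes")])
print(tabulate_item(item))
```

A fixed threshold makes the retain-or-revise decision reproducible across rounds, but in a study like this one the research team's reading of SME comments would still determine how flagged items are reworded, split, or dropped.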

With the final lists of EHR-related and non-EHR-related KSAs available, the research team classified each statement within the competency framework of the Accreditation Council for Graduate Medical Education (ACGME), which is used throughout the United States in the education and evaluation of house officers.30–33 This framework has also been validated as a template for qualitative data analysis and for crafting competency statements.32–35 Because the ACGME framework is a standard format for framing medical competencies, we adopted it for consistency. The final iteration of both KSA lists was organized according to the 6 ACGME core competencies: (1) patient care, (2) medical knowledge, (3) practice-based learning and improvement, (4) interpersonal and communication skills, (5) professionalism, and (6) systems-based practice.35 However, because medical scribes do not engage in direct patient care, the "patient care" category was replaced with a new category called "foundational skills." After the research team completed the classification, the KSAs were sent to the SMEs for a final review, which yielded no further comments or suggested changes.

(12) Developed the curriculum (February 2020–April 2020)

To make the KSAs teachable, the research team next assessed each KSA item for its suitability for simulation or didactic (lecture-based) training. We met virtually as a team multiple times to review the KSA items one by one and determine whether each could best be measured through simulation or through didactic lectures, discussing each item until we reached consensus on how it would be assessed. Simulation is an ideal method for delivering controlled, hands-on training.36–38 Simulations allow repeated practice, so participants can run clinical scenarios numerous times, and they let participants practice in a safe environment where no real patients can be harmed.36,39–41

Some knowledge, skills, and attitudes do not lend themselves to simulation and are best presented in didactic lectures; these make up the non-EHR KSA lists. Didactic lectures are a common method for teaching medicine.42,43

RESULTS

Data demographics

A total of 81 people were interviewed during site visits, resulting in 86 interview transcripts and 30 sets of observational field notes (Table 2). From these raw data, 3 team members (SC, NS, and JA) extracted an initial list of participant statements about desirable scribe competencies, which were then validated by team members. The competencies were divided into 2 lists: (1) EHR-related KSAs (53 items) and (2) non-EHR-related KSAs (46 items), for a total of 99 items.

Table 2.

Site visit data

| | Site A | Site B | Site C | Site D | Site E |
|---|---|---|---|---|---|
| Settings of care | Teaching hospital and clinics | Community health system | Otolaryngology clinic | Teaching hospitals and emergency departments | Urgent care |
| Number of clinics observed | 2 clinics | 3 clinics | 1 clinic | 3 clinics | 3 clinics |
| Total hours observed | 17 h | 20 h | 6 h | 25 h | 12 h |
| Total hours interviewed | 12 h | 7 h | 11 h | 12 h | 5 h |
| Total number of people interviewed | 14 people (4 providers, 4 scribes, 6 administrators) | 18 people (6 providers, 5 scribes, 7 administrators) | 18 people (8 providers, 6 scribes, 4 administrators) | 19 people (6 providers, 7 scribes, 6 administrators) | 12 people (6 providers, 5 scribes, 1 administrator) |

Conference results

Twenty SMEs attended the expert conference to review the KSAs, which were presented as the lists described above. After each list was discussed in small and large groups, the research team summarized the results, and the total number of items increased to 109: 66 EHR-related KSAs and 43 non-EHR (didactic) KSAs. Table 3 lists the KSA subcategories developed from fieldwork and expert consensus.

Table 3.

EHR and non-EHR KSA subcategories

| Non-EHR-related KSA list subcategories | EHR-related KSA list subcategories |
|---|---|
| General knowledge needed by scribes | Knowledge directly related to the EHRs |
| General attitudes of scribes: cognition | Attitudes of scribing related to EHR |
| General attitudes of scribes: affect | Attitudes of scribing related to EHR: prerequisites |
| General attitudes of scribes: behavior | Skills directly related to the EHR: documentation |
| More general skills needed by scribes (ie, more foundational items) | Skills directly related to the EHR: clinical decision support |

EHR: electronic health record; KSA: knowledge, skills, and attitudes.

Delphi results

The survey was pilot-tested with 3 research team members who were not Delphi participants (JG, VM, and RB), and the first round of the Delphi survey was then sent to all 20 SMEs who had attended the conference. Seventeen experts completed the first survey, which presented 89 KSAs.

After tabulating the first-round results, the research team revised the KSAs as recommended and distributed a second survey to the SMEs, 12 of whom participated. Because some items had been deleted or changed based on first-round feedback, the second round presented only 73 KSAs. Finally, after the second-round results were tabulated and incorporated into the KSA lists, we distributed the third and final version of the lists to the SMEs for member checking.

After both Delphi rounds and final edits from SME member checking, there were 90 KSA items in total. SME and research team edits showed that some KSAs contained multiple components, so we disaggregated these into individual KSAs to allow better future assessment; this is why the number of KSAs increased from 73 in round 2 to 90 in the final list. The research team then divided the KSAs into 3 lists based on the best way to teach them to medical scribes. For the 47 EHR-related KSAs, the team determined that hands-on, simulation-based learning would be the best mode of teaching, so we named this list "Hands-On Learning KSAs." Of the original 43 non-EHR-related KSAs, 32 items were judged best taught through didactic lectures, and we named this list "Didactic KSAs." Eleven KSA items were considered prerequisites to scribe training that could be taught in introductory lectures or even before hiring; we named this list "Prerequisite KSAs." Fifteen experts reviewed and approved these final items. Figure 2 depicts the changes in the number of KSA items at each stage of the process.

Figure 2.

Changes in number of KSAs throughout entire process. KSA: knowledge, skills, and attitudes.

Please see the Supplementary Appendices B, C, and D for the full lists. Summaries are below.

Hands-On Learning KSAs

Elements within the Hands-On Learning KSA list are best taught using simulation-based activities. The research team divided this list using the ACGME categorization as well as an adapted version of the subcategorization strategy shown in Table 3. Table 4 describes the ACGME categories and subcategories used for the Hands-On Learning list.

Table 4.

ACGME categorization and subcategorization for the Hands-On Learning KSA items

| ACGME categorization | Subcategorization |
|---|---|
| Medical knowledge | General EHR knowledge |
| | EHR documentation systems |
| | General knowledge |
| Practice-based learning and improvement | General EHR knowledge |
| | EHR clinical decision support |
| | Attitudes of scribes directly related to the EHR |
| | Prerequisite EHR skills |
| | General skills |
| Interpersonal and communication skills | Attitudes of scribes directly related to the EHR |
| | EHR documentation systems |
| Professionalism | General EHR knowledge |
| Systems-based practice | General EHR knowledge |
| | Cognition |
| Foundational skills | General EHR knowledge |
| | Prerequisite EHR skills |
| | EHR documentation systems |
| | EHR clinical decision support |

EHR: electronic health record; KSA: knowledge, skills, and attitudes.

The first set of KSAs within this list focused on medical knowledge, including medical terminology, the spelling of clinically relevant words, common medication dosages, and abbreviations approved by the scribe's organization. The second KSA category was practice-based learning and improvement, which involved scribe familiarity with organization- and department-specific documentation standards (note types, sections, formatting, etc.). The third KSA category was interpersonal and communication skills, which included the need for scribes to synthesize information from all sources during the patient-provider encounter, including verbal and nonverbal cues from both provider and patient. The fourth KSA category was professionalism, involving the need for scribes to be formally trained in compliance, the Health Insurance Portability and Accountability Act (HIPAA), patient privacy, and security. The systems-based practice KSA items specified that scribes need to be aware of organizational expectations and the limitations of their role and be able to focus and pay attention in all clinical environments. Finally, some EHR KSAs were background competencies on which the performance of many other KSAs depended. For these foundational KSAs, scribes need to be proficient in basic technical skills such as typing quickly and accurately, writing cogently, and completing common computer-based tasks. Scribes also need expertise in navigating the EHR. It is critical that a scribe's documentation be consistent with the standards and style set by the organization and provider. For the full list of Hands-On Learning KSAs, see Supplementary Appendix B.

Didactic KSAs

Elements within the Didactic KSA list are best taught using lectures. The research team divided the final Didactic KSA list using the ACGME categorization as well as an adapted subcategorization strategy. Table 5 describes the ACGME categories and subcategories used for the Didactic list.

Table 5.

Categorization and subcategorization of Didactic KSAs

| ACGME categorization | Subcategorization |
|---|---|
| Medical knowledge | General EHR knowledge |
| | EHR documentation systems |
| Practice-based learning and improvement | General EHR knowledge |
| | Attitudes of scribes directly related to the EHR |
| | Prerequisite EHR skills |
| | Affect |
| Interpersonal and communication skills | Attitudes of scribes directly related to the EHR |
| | Cognition |
| | Behavior |
| Professionalism | General EHR knowledge |
| | Cognition |
| | Behavior |
| Systems-based practice | General EHR knowledge |
| Foundational skills | General EHR knowledge |
| | Prerequisite EHR skills |
| | EHR documentation systems |
| | EHR clinical decision support |

EHR: electronic health record; KSA: knowledge, skills, and attitudes.

The KSA elements within this didactically oriented list included specialty-specific knowledge, such as the types of patients being seen and common medical issues for the demographics of their providers' patients. Didactic KSAs within the domain of practice-based learning and improvement focused primarily on the relationship between documentation and coding. Didactic KSAs in the interpersonal and communication skills domain highlighted the need for scribes to collaborate in a team environment and seek clarification when necessary. Those in the professionalism domain emphasized the need for scribes to show respect for patients, exhibit emotional regulation, be honest with the health care team, and be flexible. The systems-based practice domain included the need for scribes to prepare appropriately before patient visits and to understand their scope of practice. For the full list of Didactic KSAs, see Supplementary Appendix C.

Prerequisite KSAs

With guidance from SMEs, the research team defined a set of foundational prerequisite KSAs, that is, KSAs that scribes should possess very soon after hiring or even before being hired. The research team determined that these would be best taught through a series of didactic lectures.

Please see Table 6, which describes the ACGME categorization and the team’s adapted subcategorizations used for the Prerequisite KSA list.

Table 6.

Categorization and subcategorization of prerequisite KSAs

| ACGME categorization | Subcategorization |
|---|---|
| Practice-based learning and improvement | General EHR knowledge |
| | Affect |
| | General skills |
| Interpersonal and communication skills | Cognition |
| Professionalism | General knowledge |
| | Cognition |
| | Affect |
| Systems-based practice | Cognition |
| | Behavior |

EHR: electronic health record; KSA: knowledge, skills, and attitudes.

Prerequisite KSAs related to practice-based learning and improvement include the need for scribes to possess mental stamina and to demonstrate eagerness and passion to learn. Those related to interpersonal and communication skills involve the need for scribes to demonstrate patience and compassion when dealing with patients and equanimity when communicating with other members of their care delivery team. Professionalism-related Prerequisite KSAs highlight the need for scribes to receive onboarding training on organizational rules as soon as possible after being hired. Prerequisite KSAs related to systems-based practice include the importance of scribes participating in activities that improve the efficiency and quality of the clinical care being delivered. Scribes should also receive organization-specific training on how to perform their roles and know how to report safety and quality concerns. For the full list of Prerequisite KSAs, see Supplementary Appendix D.

DISCUSSION

To our knowledge, this process created, for the first time, discrete lists of scribe KSAs that emanated from ethnographic site visits and were further developed, validated, and refined by a group of subject matter experts during a consensus conference and a Delphi exercise. These KSAs are also intended to promote efficient and effective scribe use and to foster scribes' ability to perform their tasks according to role, context, and environment. There are few national oversight and regulation policies related to scribes,18,44 and there is no national organization or national standardization of scribe training programs, which makes it difficult to assess the quality of scribing programs.18,44 Given this lack of regulation, our research team aimed to create a standard framework for assessing scribes' KSAs and readiness to enter the workforce. We ultimately created 3 lists of KSAs, to be delivered through either simulation or didactic lectures.

These KSAs offer a comprehensive and contemporary description of scribe activities. They are anchored in qualitative data from our site visits and were refined through a Delphi process that allowed SMEs to develop consensus. By gathering qualitative data through semistructured interviews and observations, the team learned what a purposively selected variety of scribe programs in the United States allowed scribes to do. We also gained an overall understanding of the scribe landscape, from which we built an initial grounded framework for the KSAs. The SMEs then examined the KSAs from different perspectives, using disparate professional lenses. Because we identified scribe activities through site visits, an SME consensus conference, and a Delphi process, we believe the KSA lists are as comprehensive as possible.44–47

This study has some limitations. The first concerns our selection of SMEs. Although we considered diversity of perspectives and expertise when purposively selecting SMEs, their number was limited by the in-person conference format. The Delphi method usually anonymizes respondents to decrease bias, but our respondents knew one another after attending the conference together. While this may have biased responses, it also served to educate respondents before the Delphi and helped ensure that they responded to the surveys. After the conference, some attendees, including the industry representatives, did not respond to the Delphi surveys, so their input was not consistent throughout the process. We also experienced attrition across Delphi rounds (17 respondents in the first round and 12 in the second). However, the SMEs who completed all rounds of the Delphi remained diverse in both geography and role.

The study has other limitations. Because of the lack of standardization and the wide variability in scribing activities, we may have missed important scribe KSAs during site visits and SME discussions. The industry is changing quickly, so some KSAs may become outdated, and others may have emerged that were not captured during the study period. Future studies should validate the research team's KSAs and update them over time. Also, given the lack of oversight from an accrediting or governing body, these KSAs may not be adopted in toto or used in a standardized way.

In particular, the KSAs were largely developed before the COVID-19 pandemic, which has significantly and possibly irrevocably altered the landscape of care delivery and some established paradigms.46 COVID-19 caused a rapid shift to telemedicine and also to telescribing. As telescribing becomes more dominant, the KSAs may need to adapt to this shift in workflow. Future studies could therefore investigate how telemedicine has changed scribe KSAs.

Finally, the KSA lists developed during this study are being turned into simulation cases and didactic lectures that can provide standardized training for individuals or organizations hiring scribes. Our research team is developing the training toolkit. Future research should assess the adoption and effectiveness of these training materials. As scribes continue to be a major part of the workforce, the need to train scribes in these KSA areas becomes increasingly urgent.

CONCLUSION

With the assistance of the SMEs, the research team has developed lists of knowledge, skills, and attitudes for scribes. The strategy of conducting site visits to identify needed attributes, holding an expert conference for verification, and conducting a modified Delphi exercise for refinement resulted in 3 agreed-upon lists: (1) Hands-On Learning KSAs, (2) Didactic KSAs, and (3) Prerequisite KSAs. To our knowledge, this study is the first to elicit a set of KSAs that can support standardization of scribe training and assessment. Integrating these KSAs into a comprehensive training curriculum should provide a foundation for safe and effective scribe use and establish a methodology for developing additional KSAs as the scribe industry continues to evolve.

FUNDING

This work was supported by the Agency for Healthcare Research and Quality (AHRQ) grant number HHSA290200810010.

AUTHOR CONTRIBUTIONS

SC was lead author on this paper. JSA was colead author on this paper. All authors reviewed and edited the paper before submission. JSA, JAG, and VM conceived the research project, design, and methodology. SC, JSA, NS, JB, RB, BO, and CH conducted ethnographic site visits. All authors aided in the development of the knowledge, skills, and attitudes lists for medical scribes.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.


ACKNOWLEDGMENTS

We would like to thank Jeremy Liu, Renee Kostrba, Peter Lundeen, and Marcia Sparling for their help at each site, as well as administrators, providers, and scribes for their participation in this project.

CONFLICT OF INTEREST STATEMENT

None declared.

Contributor Information

Sky Corby, Department of Pulmonary and Critical Care Medicine, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Joan S Ash, Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Keaton Whittaker, Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Vishnu Mohan, Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Nicholas Solberg, Department of Pulmonary and Critical Care Medicine, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

James Becton, Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Robby Bergstrom, Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Benjamin Orwoll, Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA; Department of Pediatric Critical Care, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Christopher Hoekstra, Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Jeffrey A Gold, Department of Pulmonary and Critical Care Medicine, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA; Department of Medical Informatics and Clinical Epidemiology, School of Medicine, Oregon Health & Science University, Portland, Oregon, USA.

Data Availability

The data underlying this article cannot be shared publicly due to the privacy of individuals who participated in the study. The data will be shared on reasonable request to the corresponding author.

REFERENCES

1. Buntin MB, Burke MF, Hoaglin MC, Blumenthal D. The benefit of health information technology: a review of the recent literature shows predominantly positive results. Health Aff (Millwood) 2011; 30 (3): 464–71.

2. Bates DW, Landman AB. Use of medical scribes to reduce documentation burden: are they where we need to go with clinical documentation? JAMA Intern Med 2018; 178 (11): 1472–3.

3. Downing NL, Bates DW, Longhurst CA. Physician burnout in the electronic health record era: are we ignoring the real cause? Ann Intern Med 2018; 169 (1): 50–1.

4. Kroth PJ, Morioka-Douglas N, Veres S, et al. The electronic elephant in the room: physicians and the electronic health record. JAMIA Open 2018; 1 (1): 49–56.

5. Bossen C, Chen Y, Pine KH. The emergence of new data work occupations in healthcare: the case of medical scribes. Int J Med Inform 2019; 123: 76–83.

6. With scribes, doctors think medically instead of clerically. Daily Brief 2014. https://www.advisory.com/daily-briefing/2014/01/14/with-scribes-doctors-think-medically-instead-of-clerically Accessed October 27, 2020.

7. Kwon S. To free doctors from computers, far-flung scribes are now taking notes for them. Kaiser Health News 2020. https://www.healthleadersmedia.com/telehealth/free-doctors-computers-far-flung-scribes-are-now-taking-notes-them Accessed October 29, 2020.

8. Apathy NC, Hare AJ, Fendrich S, Cross DA. Early changes in billing and notes after evaluation and management guideline change. Ann Intern Med 2022; 175 (4): 499–504.

9. “Reducing provider and patient burden by improving prior authorization processes, and promoting patients’ electronic access to health information” CMS.gov. December 18, 2020. https://www.cms.gov/Regulations-and-Guidance/Guidance/Interoperability/index Accessed February 3, 2021.

10. “Documentation assistance provided by scribes: what guidelines should be followed when physicians or other licensed independent practitioners use scribes to assist with documentation?” jointcommission.org. April 6, 2020. https://www.jointcommission.org/standards/standard-faqs/nursing-care-center/record-of-care-treatment-and-services-rc/000002210/ Accessed February 3, 2021.

11. Heaton HA, Wang R, Farrell KJ, et al. Time motion analysis: impact of scribes on provider time management. J Emerg Med 2018; 55 (1): 135–40.

12. Sinsky CA, Willard-Grace R, Schutzbank A, Sinsky TA, Margolius D, Bodenheimer T. In search of joy in practice: a report of 23 high-functioning primary care practices. Ann Fam Med 2013; 11 (3): 272–8.

13. Mishra P, Kiang JC, Grant RW. Association of medical scribes in primary care with physician workflow and patient experience. JAMA Intern Med 2018; 178 (11): 1467–72.

14. Shuaib W, Hilmi J, Caballero J, Rashid I, et al. Impact of a scribe program on patient throughput, physician productivity, and patient satisfaction in a community-based emergency department. Health Informatics J 2019; 25 (1): 216–24.

15. Zallman L, Finnegan K, Roll D, Todaro M, Oneiz R, Sayah A. Impact of medical scribes in primary care on productivity, face-to-face time, and patient comfort. J Am Board Fam Med 2018; 31 (4): 612–9.

16. “It’s vital that all medical scribes certify with ACMSS” scribeamerica.com. December 31, 2013. https://www.scribeamerica.com/blog-post/its-vital-that-all-medical-scribes-certify-with-acmss/ Accessed February 4, 2021.

17. Martel M, Imdieke BH, Holm KM, et al. Developing a medical scribe program at an academic hospital: the Hennepin County Medical Center experience. Jt Comm J Qual Patient Saf 2018; 44 (5): 238–49.

18. Gellert GA, Ramirez R, Webster SL. The rise of the medical scribe industry: implications for the advancement of electronic health records. JAMA 2015; 313 (13): 1315–6.

19. McGinty MD, Castrucci BC, Rios DM. Assessing the knowledge, skills, and abilities of public health professionals in big city governmental health departments. J Public Health Manag Pract 2018; 24 (5): 465–72.

20. Corby S, Gold JA, Mohan V, et al. A sociotechnical multiple perspectives approach to the use of medical scribes: a deeper dive into the scribe-provider interaction. AMIA Annu Symp Proc 2020; 2019: 333–42.

21. Ash JS, Corby S, Mohan V, et al. Safe use of the EHR by medical scribes: a qualitative study. J Am Med Inform Assoc 2021; 28 (2): 294–302.

22. Stavri PZ, Ash JS. Does failure breed success: narrative analysis of stories about computerized provider order entry. Int J Med Inform 2003; 72 (1–3): 9–15.

23. Ash JS, Stavri PZ, Kuperman GJ. A consensus statement on considerations for a successful CPOE implementation. J Am Med Inform Assoc 2003; 10 (3): 229–34.

24. Forrest CB, Chesley FD, Tregear ML, Mistry KB. Development of the learning health system researcher core competencies. Health Serv Res 2018; 53 (4): 2615–32.

25. McPherson S, Reese C, Wendler MC. Methodology update: Delphi studies. Nurs Res 2018; 67 (5): 404–10.

26. Silverman HD, Steen EB, Carpenito JN, Ondrula CJ, Williamson JJ, Fridsma DB. Domains, tasks, and knowledge for clinical informatics subspecialty practice: results of a practice analysis. J Am Med Inform Assoc 2019; 26 (7): 586–93.

27. Gadd CS, Steen EB, Caro CM, Greenberg S, Williamson JJ, Fridsma DB. Domains, tasks, knowledge for health informatics practice: results of a practice analysis. J Am Med Inform Assoc 2020; 27 (6): 845–52.

28. Keeney S, Hasson F, McKenna HP. A critical review of the Delphi technique as a research methodology for nursing. Int J Nurs Stud 2001; 38 (2): 195–200.

29. Engelman D, Fuller LC, Steer AC; International Alliance for the Control of Scabies Delphi Panel. Consensus criteria for the diagnosis of scabies: a Delphi study of international experts. PLoS Negl Trop Dis 2018; 12 (5): e0006549.

30. Gruppen LD, Mangrulkar RS, Kolars JC. The promise of competency-based education in the health professions for improving global health. Hum Resour Health 2012; 10: 43.

31. Fernandez N, Dory V, Ste-Marie LG, Chaput M, Charlin B, Boucher A. Varying conceptions of competence: an analysis of how health sciences educators define competence. Med Educ 2012; 46 (4): 357–65.

32. Nordhues HC, Bashir MU, Merry SP, Sawatsky AP. Graduate medical education competencies for international health electives: a qualitative study. Med Teach 2017; 39 (11): 1128–37.

33. Melender H-L, Hökkä M, Saarto T, Lehto JT. The required competencies of physicians within palliative care from the perspectives of multi-professional expert groups: a qualitative study. BMC Palliat Care 2020; 19 (1): 65.

34. Martins JCL, Martins CL, Oliveira L. Attitudes, knowledge and skills of nurses in the Xingu Indigenous Park. Rev Bras Enferm 2020; 73 (6): e20190632.

35. Batalden P, Leach D, Swing S, Dreyfus H, Dreyfus S. General competencies and accreditation in graduate medical education. Health Aff (Millwood) 2002; 21 (5): 103–11.

36. Miller ME, Scholl G, Corby S, Mohan V, Gold JA. The impact of electronic health record–based simulation during intern boot camp: interventional study. JMIR Med Educ 2021; 7 (1): e25828.

37. Mohan V, Woodcock D, McGrath K, et al. Using simulations to improve electronic health record use, clinician training and patient safety: recommendations from a consensus conference. AMIA Annu Symp Proc 2016; 2016: 904–13.

38. Bordley J, Sakata KK, Bierman J, et al. Use of a novel, electronic health record-centered, interprofessional ICU rounding simulation to understand latent safety issues. Crit Care Med 2018; 46 (10): 1570–6.

39. Rhodes ML, Curran C. Use of the human patient simulator to teach clinical judgment skills in a baccalaureate nursing program. Comput Inform Nurs 2005; 23 (5): 256–62.

40. Mohan V, Scholl G, Gold JA. Intelligent simulation model to facilitate EHR training. AMIA Annu Symp Proc 2015; 2015: 925–32.

41. Gold JA, Stephenson LE, Gorsuch A, Parthasarathy K, Mohan V. Feasibility of utilizing a commercial eye tracker to assess electronic health record use during patient simulation. Health Informatics J 2016; 22 (3): 744–57.

42. Stephenson LS, Gorsuch A, Hersh WR, Mohan V, Gold JA. Participation in EHR based simulation improves recognition of patient safety issues. BMC Med Educ 2014; 14: 224.

43. Khan KS, Coomarasamy A. A hierarchy of effective teaching and learning to acquire competence in evidenced-based medicine. BMC Med Educ 2006; 6: 59.

44. Shaffer K, Small JE. Blended learning in medical education: use of an integrated approach with web-based small group modules and didactic instruction for teaching radiologic anatomy. Acad Radiol 2004; 11 (9): 1059–70.

45. Gold JA. Take note: how medical scribes are trained- and used- varies widely. The Doctors Company. 2017. www.thedoctors.com Accessed June 24, 2021.

46. Gold JA, Becton J, Ash JS, Corby S, Mohan V. Do you know what your scribe did last spring? The impact of COVID-19 on medical scribe workflow. Appl Clin Inform 2020; 11 (5): 807–11.

47. Shultz CG, Holmstrom HL. The use of medical scribes in health care settings: a systematic review and future directions. J Am Board Fam Med 2015; 28 (3): 371–81.
