Abstract

Peer-based aggression following social rejection is a costly and prevalent problem for which existing treatments have had little success. This may be because aggression is a complex process influenced by current states of attention and arousal, which are difficult to measure on a moment-to-moment basis via self-report. It is therefore crucial to identify nonverbal behavioral indices of attention and arousal that predict subsequent aggression. We used Support Vector Machines (SVMs) and eye gaze duration and pupillary response features, measured during positive and negative peer-based social interactions, to predict subsequent aggressive behavior towards those same peers. We found that eye gaze and pupillary reactivity not only predicted aggressive behavior, but performed better than models that included information about the participant’s exposure to harsh parenting or trait aggression. Eye gaze and pupillary reactivity models also performed nearly as well as those that included information about peer reputation (e.g., whether the peer was rejecting or accepting). This is the first study to decode nonverbal eye behavior during social interaction to predict social rejection-elicited aggression.

I. INTRODUCTION

Each year, violence around the world exacts a staggering personal and financial cost [1][2][3]. This burden is disproportionately borne by adolescents and young adults, for whom homicide linked to peer-based rejection (e.g., bullying, romantic relationship failures) remains a leading cause of death [4][5]. One catalyst for this surge in rejection-related aggression may be a developmentally normative increase in the desire for social status and acceptance during adolescence [6][7][8][9][10]. In fact, 87 percent of US school shooters felt rejected by their peers, and this feeling of rejection was a better predictor of violence than mental illness, interest in weapons, or a fascination with death [11].

Given the common and costly nature of aggression, novel, empirically derived prevention programs are needed. Traditional intervention programs that target those at risk for perpetrating aggression based on self-report questionnaires measuring aggressive traits and exposure to harsh parenting have had only limited success [26]. This may be because aggression is the product of a complex decision-making process influenced by current states of attention and arousal [27]. An essential first step in understanding these complexities is to isolate patterns of attention and arousal that reliably predict forthcoming rejection-elicited aggression. Eye gaze and pupillary response are strong candidates given that they are well-established indices of attention and arousal states [12][13]. Yet, the few studies that demonstrate more avoidant gaze [14] and pupillary reactivity [15][16] to social rejection than acceptance did not attempt to link these behaviors to subsequent peer-based aggression.

Despite the relatively common use of eye gaze and pupillary measures to quantify responses to affect-eliciting stimuli [12][18][19], several constraints have hampered their measurement during social rejection. First, computer-based paradigms are needed for accurate gaze and pupillary recording, yet laboratory-based social rejection is commonly elicited with face-to-face interactions [20][21]. Second, most computer-based social rejection paradigms include visuospatial confounds. For example, the ‘cyber-ball’ task [22][23], in which participants are excluded from a ball-tossing game, is poorly suited for measuring rejection-related gaze patterns because ball movements capture visual attention.

In this paper, we show that nonverbal behavior elicited during an ecologically valid peer interaction paradigm is predictive of subsequent peer-based physical aggression. More specifically, we provide empirical evidence that patterns of eye gaze duration and pupillary response engaged during social rejection and aggression-based decision-making can be used to predict subsequent aggression. To the best of our knowledge, ours is the first work that predicts aggression based on nonverbal behaviours observed prior to its expression. This ability to predict peer-based aggression could inform the development of interventions in which individuals are trained to prevent aggression by interceding the moment its behavioral precursors are detected.

II. Related Work

Eye tracking data has been used extensively as a measure of human behavior in a variety of contexts. For example, [37] showed a sudden increase in pupil size 400 ms from the onset of both negative and positive auditory stimuli versus neutral stimuli. [36] studied the effect of different video types on pupil diameter, gaze distance, blink rate, blink length and length of longest blink. They found that the average blink rate was higher in calmer videos while the maximum blink length was higher for unpleasant videos. [35] and [36] found that eye tracking data predicted arousal and valence classifications of a video. Eye tracking data has also been used to predict consumer behavior. [30] and [32] found that consumer decisions were predicted by dwell time and number of fixations. These and other prior studies demonstrate that eye gaze and pupillary response patterns are predictive of both affect and behavior. The utility of nonverbal behaviors for predicting individual behavioral responses is therefore well established. However, these methods have not been applied to predict aggressive behavior following social rejection. It is important to address this gap, as objective and empirically derived predictors of aggressive behavior are sorely needed to inform the design of novel interventions. Thus, for the first time, we utilize these well-established predictive methods to predict aggressive behavior following social rejection.

III. Methods

A. Participants

College students (n = 95; females = 70.8%) over the age of 18 (M = 20.76; SD = 2.56) were recruited through an online database (SONA systems). Informed written consent was obtained prior to participation, and all procedures were approved by the Institutional Review Board at Stony Brook University.

B. Procedures

1). Questionnaires:

Because we aimed to compare the predictive ability of our nonverbal behavioral model to known psychosocial predictors of aggression, we collected self-report questionnaire data which measured 1) experience with harsh parenting (Parenting Styles and Dimensions Questionnaire [29]), and 2) trait aggression (Buss Perry Aggression Questionnaire [28]).

2). Behavioral Tasks:

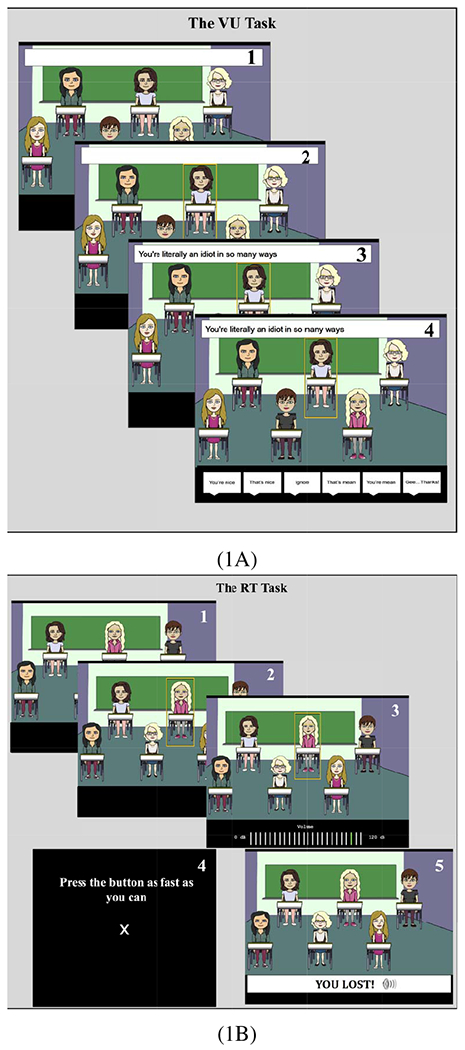

Upon arrival at the study visit, participants were told that they would be the “freshman” in a Virtual University and interact with six other students online. They first answered questions about themselves and created an avatar so that their peers could get to know them. They then learned the reputation of the other peers (2 nice, 2 mean, 2 unpredictable) using “Yelp-like” reviews purportedly left by previous participants. During the Virtual University (VU) task (Fig. 1A), participants entered twelve classrooms populated by the purported peers. Each peer interacted with the participant twelve times (24 interactions per peer type). The order of interactions was randomized to remove potential order effects that may influence aggressive behavior. Peer avatars were randomly assigned to different reputations and seating locations. Each trial included four temporal epochs. First, the participant viewed a classroom with each of the six peers they would be interacting with (Fig. 1A1). Next, a yellow box cued the participant to the peer who was about to give them feedback (Fig. 1A2). Third, the participant received social feedback, which was either positive or negative (Fig. 1A3). Finally, the participant had the opportunity to respond to that peer by selecting one of several pre-programmed responses (Fig. 1A4).

After the VU task, participants completed a novel variant of the Taylor Aggression Paradigm [24][25], the Reaction Time (RT) task (Fig. 1B). In this task, participants played a game against the purported peers they had just interacted with. The average level of aversive noise blast delivered to other peers by the participant was used to quantify aggressive behavior. Prior to the RT task, participants heard a noise blast characterized as moderately loud to ensure all participants had the same frame of reference. Each trial had five temporal epochs. Participants first viewed the same six peers they had just interacted with (Fig. 1B1), then were cued to the peer for whom they were to set the volume of an aversive noise blast (Fig. 1B2; the noise blast scale ranged from 1 to 24). They then decided how aggressive to be towards each peer, as indexed by the volume of the white noise blast they chose to deliver to that peer (Fig. 1B3). They then had 5 sec to press a button as many times as they could (Fig. 1B4); whoever had the most presses won the round and had the opportunity to aggress against losing peers. Finally, they learned if they won or lost the game (Fig. 1B5). To increase believability, participants always won 6 rounds of the game (order randomized). On winning rounds, participants believed their purported peers heard the noise blasts they chose. Participants completed 12 rounds of this game, resulting in 72 instances of contemplated retribution and enacted aggression (24 per reputation type).

Fig. 1:

Experimental paradigm. (A) Virtual University (VU) task. (B) Reaction Time (RT) task. See text for details.

3). Eye gaze and pupillary response:

Eye gaze data was captured during the VU and RT paradigms. Eye position and pupil dilation were sampled at 1000 Hz using an EyeLink 1000 eye tracker (SR Research, Mississauga, Ontario, Canada). This table-mounted tracker consists of a video camera and an infrared light source that together track the location and size of the pupil.

Although eye gaze and pupillary reactivity data were collected throughout both tasks, some data loss occurred due to participants looking away from the screen. Participants who were missing more than 5 trials of data (N=6) were excluded. Thus, we utilized 6165 data points across 89 subjects.

C. Data Analysis

In this paper, we aim to predict the level of participants’ aggressive behavior (indexed by level of noise blast they chose to deliver to each peer) from their fixation duration and pupil size. For simplicity, we binned the noise blast scale to operationalize four classes of aggression: 1) none (1); 2) low (2-11); 3) medium (12); and 4) high (13-24).
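The binning rule above can be sketched in a few lines of Python. This is only an illustration of the stated thresholds; the function name `aggression_class` and the string labels are hypothetical and are not taken from the study's code.

```python
def aggression_class(noise_level: int) -> str:
    """Map a noise blast level (1-24) to one of the four aggression classes."""
    if not 1 <= noise_level <= 24:
        raise ValueError("noise blast level must be between 1 and 24")
    if noise_level == 1:
        return "none"
    if noise_level <= 11:
        return "low"      # levels 2-11
    if noise_level == 12:
        return "medium"   # the scale midpoint forms its own class
    return "high"         # levels 13-24


# Boundary values of each bin.
print([aggression_class(v) for v in (1, 2, 11, 12, 13, 24)])
# ['none', 'low', 'low', 'medium', 'high', 'high']
```

Note that the "medium" class contains a single scale value, which is consistent with the large share of trials reported for that class.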

After grouping, the percent of trials in each class of aggression was as follows: 7% = none, 33% = low, 34% = medium, and 26% = high. In all analyses, we aimed to classify these four classes of aggression. We compared several classifiers, including Random Forest, linear SVM, and RBF SVM, and selected the classifier with the highest accuracy: the Support Vector Machine (SVM) [39] with an RBF kernel. To address the imbalance in classes of aggression, we weighted the classes as inversely proportional to class frequencies. Prior to training the classifier, we standardized features. We implemented the models using Python and the Scikit-learn library [38].
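A classifier of this kind might be configured in Scikit-learn roughly as follows. This is a minimal sketch, not the authors' code: the data are random placeholders, the feature count is arbitrary, and all hyperparameters other than the RBF kernel and class weighting are left at library defaults because the text does not report them. The `class_weight="balanced"` option weights each class inversely to its frequency.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Placeholder data (assumption): random features, labels drawn with the
# reported class proportions (none, low, medium, high).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = rng.choice(4, size=200, p=[0.07, 0.33, 0.34, 0.26])

# Standardize features, then fit an RBF-kernel SVM with class weights
# inversely proportional to class frequencies.
model = make_pipeline(
    StandardScaler(),
    SVC(kernel="rbf", class_weight="balanced"),
)
model.fit(X, y)
pred = model.predict(X[:5])
print(pred)
```

Placing the scaler inside the pipeline means that, under cross-validation, the standardization statistics are estimated on the training folds only.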

Reputation-agnostic model.

In each epoch of the VU task and in each of the first three epochs in the RT task, we measured the normalized averaged pupil size and cumulative fixation duration (defined below) of the participant on each peer. For each trial, the normalized averaged pupil size for peer a and epoch e ∈ {1,2,3,4} was calculated as follows:

| (1) |

In this equation, $p_i$ is the pupil diameter of the i-th fixation, and $E_i$ and $A_i$ denote the epoch of the i-th fixation and the reputation of the peer on which the i-th fixation lands, respectively. $L$ is the number of fixations in the first epoch of either task (Fig 1A1 and Fig 1B1). The first summation of Equation (1), taken over $i: A_i = a, E_i = e$, includes only the fixations that are in epoch e and on peer a, and is averaged over the number of such fixations. The second summation measures the pupil size baseline of the participant by averaging the last four fixations in the first epoch.

For each trial, the cumulative fixation duration (dwell time) for peer a and epoch e is calculated as follows:

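| $D_{a,e} = \sum_{i:\, A_i = a,\, E_i = e} d_i$ | (2) |

In this equation, $D_{a,e}$ is the dwell time on peer a in epoch e and $d_i$ is the fixation duration of the i-th fixation. Equation (2) sums the dwell time the participant spent fixating on peer a in epoch e.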

In summary, we obtained an 84-dimensional feature vector for each trial: seven epochs (four in the VU task and three in the RT task) × six peers × two nonverbal behavioral features (pupil size and dwell time).
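The feature construction described above could be sketched as follows. Several details here are assumptions made only for illustration: the `Fixation` record and function names are hypothetical, the baseline is subtracted from the mean pupil size (the text does not say whether the baseline is subtracted or divided), peers that receive no fixation in an epoch get zeros, and the ordering of features within the vector is arbitrary.

```python
from dataclasses import dataclass
from typing import List

N_PEERS = 6


@dataclass
class Fixation:
    epoch: int       # 1-based epoch index within the task
    peer: int        # index (0-5) of the peer the fixation lands on
    pupil: float     # pupil diameter during the fixation
    duration: float  # fixation duration


def task_features(fixations: List[Fixation], n_epochs: int) -> List[float]:
    """Per-epoch, per-peer (pupil size, dwell time) features for one task."""
    # Baseline: mean pupil size over the last four fixations of epoch 1.
    last_four = [f.pupil for f in fixations if f.epoch == 1][-4:]
    baseline = sum(last_four) / len(last_four) if last_four else 0.0

    feats: List[float] = []
    for e in range(1, n_epochs + 1):
        for a in range(N_PEERS):
            hits = [f for f in fixations if f.epoch == e and f.peer == a]
            dwell = sum(f.duration for f in hits)  # cumulative fixation duration
            if hits:
                # ASSUMPTION: subtractive baseline correction.
                pupil = sum(f.pupil for f in hits) / len(hits) - baseline
            else:
                pupil = 0.0  # ASSUMPTION: no fixation on this peer -> 0
            feats.extend([pupil, dwell])
    return feats


def trial_features(vu: List[Fixation], rt: List[Fixation]) -> List[float]:
    """Concatenate 4 VU epochs and the first 3 RT epochs: (4 + 3) * 6 * 2 = 84."""
    return task_features(vu, 4) + task_features(rt, 3)


vu = [
    Fixation(1, 0, 4.0, 200), Fixation(1, 1, 4.2, 150),
    Fixation(1, 0, 4.4, 300), Fixation(1, 2, 4.0, 100),
    Fixation(3, 1, 5.0, 250),
]
feats = trial_features(vu, [])
print(len(feats))  # 84
```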

Reputation-aware model.

The above model was reputation agnostic in that it had no information about the reputation of the peers (e.g. mean, nice, unpredictable). However, in this study participants learned the reputation of each peer prior to their interaction. Therefore, to test whether information pertaining to reputation improved predictive value of non-verbal behavior, we also included the reputation in the features and then tested this reputation-aware model.

We represented the reputation of each peer using one-hot encoding (i.e., a 3D vector with value one at the index of the reputation and zeros elsewhere) and concatenated the reputation vectors of all six peers to the features used in the reputation-agnostic model. The reputation-aware model therefore included the original nonverbal 84-dimensional features plus an additional 18-dimensional reputation vector.
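As an illustration of this encoding, the sketch below appends six three-dimensional one-hot vectors to an 84-dimensional nonverbal feature vector. The ordering of the reputation categories and the helper names are assumptions; only the dimensionalities come from the text.

```python
from typing import List, Sequence

# ASSUMPTION: the index order of the three reputations is arbitrary.
REPUTATIONS = ("nice", "mean", "unpredictable")


def one_hot(reputation: str) -> List[float]:
    """Three-dimensional vector with a one at the reputation's index."""
    vec = [0.0] * len(REPUTATIONS)
    vec[REPUTATIONS.index(reputation)] = 1.0
    return vec


def reputation_aware(nonverbal: Sequence[float],
                     peer_reputations: Sequence[str]) -> List[float]:
    """Concatenate nonverbal features with the peers' one-hot reputations."""
    rep = [x for r in peer_reputations for x in one_hot(r)]
    return list(nonverbal) + rep


peers = ["nice", "nice", "mean", "mean", "unpredictable", "unpredictable"]
features = reputation_aware([0.0] * 84, peers)
print(len(features))  # 84 + 6 * 3 = 102
```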

IV. RESULTS

In our evaluation, we performed 10-fold cross-validation and report the mean and standard deviation of the classification accuracy. Specifically, we tested two models: 1) a reputation-agnostic model using eye gaze (dwell time) and pupillary reactivity (pupil size) as features; and 2) a reputation-aware model using eye gaze (dwell time) and pupillary reactivity (pupil size) features in addition to reputation of peer (mean, nice, unpredictable) as features. In addition to these two nonverbal models, we also created three questionnaire-based models, based on 1) participant experience with harsh parenting, 2) participant self-reported trait aggression, and 3) both questionnaires combined. These questionnaires provide scalar values for each participant, which are used as features for classification. Table I presents the results from this model comparison. Both reputation-agnostic and reputation-aware nonverbal models solidly outperformed the questionnaire-based models. This is notable in that it shows that nonverbal features (i.e., dwell time and pupil size) are stronger predictors of aggressive behavior than self-report measures.
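The evaluation protocol, 10-fold cross-validation reported as mean and standard deviation of accuracy, might look like the following in Scikit-learn. This is a hedged sketch on random placeholder data, so the printed accuracy will hover near chance and is not comparable to the reported results. How folds were formed in the study (for example, whether trials from one participant could fall in different folds) is not stated; here `cv=10` uses Scikit-learn's default stratified folds for classifiers.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Placeholder data (assumption): 84 random features, 4 random classes.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 84))
y = rng.integers(0, 4, size=400)

model = make_pipeline(StandardScaler(), SVC(kernel="rbf", class_weight="balanced"))

# One accuracy score per fold; summarize as mean and standard deviation.
scores = cross_val_score(model, X, y, cv=10, scoring="accuracy")
print(f"{100 * scores.mean():.1f} (+/- {100 * scores.std():.2f})")
```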

TABLE I:

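Classification results of the questionnaire-based and nonverbal models. Three questionnaire-based models are included: 1) experience with harsh parenting; 2) self-reported trait aggression; and 3) both combined. These are compared with the reputation-agnostic and reputation-aware nonverbal models. Mean and standard deviation of 10-fold Cross-Validation results are reported.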

| | Parenting Data | Aggression Questionnaire | Parenting Data + Aggression Questionnaire | Reputation-agnostic Model | Reputation-aware Model |
|---|---|---|---|---|---|
| Accuracy (%) | 44.8 (±2.80) | 29.7 (±2.93) | 46.7 (±2.65) | 53.3 (±2.26) | 57.7 (±2.42) |

The previous comparison of reputation-agnostic and reputation-aware model performance collapsed across both the VU and RT tasks, and across epochs within each task. To compare prediction success for the reputation-aware and reputation-agnostic models across each task and epoch, we built individual models for each epoch in each task. Table II shows each epoch model's predictions, as well as the nonverbal model predictions from Table I, labeled as “All Epochs”. Although the reputation-aware models were more predictive than the reputation-agnostic models, at the epoch level this difference was small. Given that the reputation-aware model required extra information about the peer’s reputation, the reputation-agnostic model is more parsimonious and therefore, we believe, preferred.

TABLE II:

Classification results for reputation-agnostic and reputation-aware models for each epoch in the VU and RT tasks. Mean and standard deviation of 10-fold Cross-Validation results are reported.

| Task | Epoch | Reputation-agnostic | Reputation-aware |
|---|---|---|---|
| VU + RT | All Epochs | 53.3 (±2.26) | 57.7 (±2.42) |
| VU | Epoch 1 | 56.7 (±1.85) | 57.3 (±1.65) |
| VU | Epoch 2 | 52.0 (±2.26) | 52.9 (±1.84) |
| VU | Epoch 3 | 48.4 (±1.69) | 53.8 (±1.61) |
| VU | Epoch 4 | 47.5 (±2.11) | 48.8 (±1.30) |
| RT | Epoch 1 | 54.8 (±2.02) | 55.4 (±2.04) |
| RT | Epoch 2 | 46.6 (±1.86) | 48.6 (±1.44) |
| RT | Epoch 3 | 51.6 (±1.34) | 56.2 (±1.21) |

Focusing on just the reputation-agnostic results from Table II, the best performing model used eye response patterns within the first epoch of the VU task (Fig. 1A1). The second most predictive epoch was the analogous period in the RT task (Fig. 1B1). Models for these epochs were substantially more predictive than those for other epochs, and even outperformed the model combining all epochs.

V. Discussion

To our knowledge, this is the first study to show that eye gaze and pupillary response patterns during social interactions can predict subsequent aggressive behavior. Prior work examining nonverbal correlates of social rejection found that adolescents and young adults exhibit more avoidant gaze [14] and pupillary reactivity [15][16] in response to social rejection when compared to acceptance. This suggests that adolescents and young adults, for whom peer relationships are highly salient and important, exhibit differential eye gaze and pupillary reactivity when feeling rejected. However, no prior study has linked these nonverbal behaviors during social rejection to subsequent aggressive behavior.

We evaluated the prediction success of these nonverbal models both with and without features of peer reputation. We showed that models using nonverbal behavior features were more predictive of aggression than models based on self-report questionnaires of trait aggression and harsh parenting. We also found that models including the reputation of the peer (mean, nice, unpredictable) predicted aggressive behavior only modestly better than eye gaze and pupillary patterns alone, suggesting that explicit knowledge of a peer’s reputation is not necessary to predict aggressive behavior.

Interestingly, we did not find eye gaze and pupillary response during receipt of the social rejection feedback (i.e. Fig. 1A3) to be most predictive of subsequent aggressive behavior. Rather, in a comparison of models trained on individual task epochs, we found that the epochs most predictive of aggressive behavior were those at the start of the tasks when attention was unconstrained. We speculate that when events during the task constrained participant attention (such as during the receipt of text-based social rejection, or the appearance of a box around a peer), nonverbal behaviors across participants were more similar. This would make predictions based on individual differences in nonverbal behavior difficult. However, participant attention in non-constrained epochs (similar to free viewing), may evoke more individual differences in eye gaze and pupillary reactivity patterns, thereby facilitating the prediction of forthcoming aggressive behavior. If true, this finding would further highlight the importance of utilizing naturalistic social interaction and aggression paradigms in which nonverbal behavioral patterns can be probed.

Despite the many strengths of this study, there are some limitations that should be addressed in future work. First, we did not have a large enough sample to apply deep neural network analyses to these data. Furthermore, we did not have the power to examine whether participant age, gender, or race influences the patterns of nonverbal behavior that predict aggression. Therefore, our results may not be generalizable to samples of different ages or of different ethnic or racial backgrounds.

In summary, when participants are free to look at their peers with minimal constraints on their attention, their nonverbal behavior can be a more powerful predictor of aggressive behavior than information about the other peers or the participant’s self-reported aggressive traits and experience with harsh parenting.

Given the common and costly nature of aggression, novel, empirically derived prevention programs are needed. This study lays the groundwork to achieve this broader objective. Next, we will utilize similar methods to investigate the ability of other nonverbal behaviors, such as participants’ facial expressions during the tasks, to better predict aggressive behavior. Future studies could then potentially train people to recognize key nonverbal behaviors that our computational models found best predict aggression.

Acknowledgments

This work was supported by NIH R21HD093912 grant awarded by the Eunice Kennedy Shriver National Institute of Child Health and Human Development, the Partner University Fund, and the SUNY2020 Infrastructure Transportation Security Center, as well as a gift from Adobe.

References

- [1] Corso PS, Mercy JA, Simon TR, Finkelstein EA, Miller TR. Medical costs and productivity losses due to interpersonal and self-directed violence in the United States. Am J Prev Med. 2007, 32(6), pp. 474–482.

- [2] Krug EG, Mercy JA, Dahlberg LL, Zwi AB. The world report on violence and health. Lancet. 2002, 360(9339), pp. 1083–1088.

- [3] Waters HR, Hyder AA, Rajkotia Y, Basu S, Butchart A. The costs of interpersonal violence–an international review. Health Policy. 2005, 73(3), pp. 303–315.

- [4] Xu J, Murphy SL, Kochanek KD, Bastian BA. Deaths: Final Data for 2013. Natl Vital Stat Rep. 2016, 64(2), pp. 1–119.

- [5] Centers for Disease Control and Prevention, National Center for Injury Prevention and Control. Web-based Injury Statistics Query and Reporting System (WISQARS). 2014.

- [6] Brown BB, Eicher SA, Petrie S. The importance of peer group (“crowd”) affiliation in adolescence. J Adolesc. 1986, 9(1), pp. 73–96.

- [7] Steinberg L and Morris AS. Adolescent development. Annu Rev Psychol. 2001, 52, pp. 83–110.

- [8] Crone EA and Dahl RE. Understanding adolescence as a period of social-affective engagement and goal flexibility. Nat Rev Neurosci. 2012, 13(9), pp. 636–650.

- [9] Steinberg L and Silverberg SB. The vicissitudes of autonomy in early adolescence. Child Dev. 1986, 57(4), pp. 841–851.

- [10] Rubin KH, Bukowski WM, Bowker JC. Children in Peer Groups. Handbook of Child Psychology and Developmental Science: John Wiley & Sons, Inc.; 2015.

- [11] Leary MR, Kowalski RM, Smith L, Phillips S. Teasing, rejection, and violence: Case studies of the school shootings. Aggressive Behav. 2003, 29(3), pp. 202–214.

- [12] Bradley MM, Miccoli L, Escrig MA, Lang PJ. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology. 2008, 45(4), pp. 602–607.

- [13] Langton SRH, Watt RJ, Bruce V. Do the eyes have it? Cues to the direction of social attention. Trends Cogn Sci. 2000, 4(2), pp. 50–59.

- [14] Nansel TR, Overpeck M, Pilla RS, Ruan WJ, Simons-Morton B, Scheidt P. Bullying behaviors among US youth: prevalence and association with psychosocial adjustment. JAMA. 2001, 285(16), pp. 2094–2100.

- [15] Stone LB, Silk JS, Siegle GJ, et al. Depressed Adolescents’ Pupillary Response to Peer Acceptance and Rejection: The Role of Rumination. Child Psychiatry Hum Dev. 2016, 47(3), pp. 397–406.

- [16] Silk JS, Stroud LR, Siegle GJ, Dahl RE, Lee KH, Nelson EE. Peer acceptance and rejection through the eyes of youth: pupillary, eye tracking and ecological data from the Chatroom Interact task. Soc Cogn Affect Neurosci. 2012, 7(1), pp. 93–105.

- [17] Moor BG, Guroglu B, Op de Macks ZA, Rombouts SA, Van der Molen MW, Crone EA. Social exclusion and punishment of excluders: neural correlates and developmental trajectories. Neuroimage. 2012, 59(1), pp. 708–717.

- [18] Nummenmaa L, Hyona J, Calvo MG. Eye movement assessment of selective attentional capture by emotional pictures. Emotion. 2006, 6(2), pp. 257–268.

- [19] Mauss I and Robinson M. Measures of emotion: A review. Cognition Emotion. 2009, 23(2), pp. 209–237.

- [20] Hubbard JA. Emotion expression processes in children’s peer interaction: The role of peer rejection, aggression, and gender. Child Development. 2001, 72(5), pp. 1426–1438.

- [21] Stroud LR, Foster E, Papandonatos GD, et al. Stress response and the adolescent transition: Performance versus peer rejection stressors. Development and Psychopathology. 2009, 21(1), pp. 47–68.

- [22] Hartgerink CH, van Beest I, Wicherts JM, Williams KD. The ordinal effects of ostracism: a meta-analysis of 120 Cyberball studies. PLoS One. 2015, 10(5), e0127002.

- [23] Williams KD, Cheung CK, Choi W. Cyberostracism: effects of being ignored over the Internet. J Pers Soc Psychol. 2000, 79(5), pp. 748–762.

- [24] Taylor SP. Aggressive behavior and physiological arousal as a function of provocation and the tendency to inhibit aggression. Journal of Personality. 1967, 35(2), pp. 297–310.

- [25] Giancola PR and Parrott DJ. Further evidence for the validity of the Taylor aggression paradigm. Aggressive Behav. 2008, 34(2), pp. 214–229.

- [26] Matjasko JL, Vivolo-Kantor AM, Massetti GM, Holland KM, Holt MK, Dela Cruz J. A systematic meta-review of evaluations of youth violence prevention programs: Common and divergent findings from 25 years of meta-analyses and systematic reviews. Aggress Violent Beh. 2012, 17(6), pp. 540–552.

- [27] DeWall CN, Anderson CA, Bushman BJ. The General Aggression Model: Theoretical Extensions to Violence. Psychol Violence. 2011, 1(3), pp. 245–258.

- [28] Buss AH and Perry M. The aggression questionnaire. Journal of Personality and Social Psychology. 1992, 63(3), pp. 452–459.

- [29] Robinson C, Mandleco B, Roper S, Hart C. The Parenting Styles and Dimensions Questionnaire (PSDQ). Handbook of Family Measurement Techniques. 2001, 3, pp. 319–321.

- [30] Gidlof K, Wallin A, Dewhurst R, Holmqvist K. Using Eye Tracking to Trace a Cognitive Process: Gaze Behaviour During Decision Making in a Natural Environment. Journal of Eye Movement Research. 2013, 6(1).

- [31] Terburg D, Hooiveld N, Aarts H, Kenemans JL, van Honk J. Eye Tracking Unconscious Face-to-Face Confrontations: Dominance Motives Prolong Gaze to Masked Angry Faces. Psychological Science. 2011, 22(3), pp. 314–319.

- [32] Goyal S, Miyapuram KP, Lahiri U. Predicting Consumer’s Behavior Using Eye Tracking Data. 2015 Second International Conference on Soft Computing and Machine Intelligence (ISCMI), Hong Kong, 2015, pp. 126–129.

- [33] Alhargan A, Cooke N, Binjammaz T. Affect recognition in an interactive gaming environment using eye tracking. 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, 2017, pp. 285–291. doi: 10.1109/ACII.2017.8273614

- [34] Lanata A, Armato A, Valenza G, Scilingo EP. Eye tracking and pupil size variation as response to affective stimuli: A preliminary study. 2011 5th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth) and Workshops, Dublin, 2011, pp. 78–84. doi: 10.4108/icst.pervasivehealth.2011.246056

- [35] Soleymani M, Lichtenauer J, Pun T, Pantic M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Transactions on Affective Computing. 2012, 3(1), pp. 42–55. doi: 10.1109/T-AFFC.2011.25

- [36] Soleymani M, Pantic M, Pun T. Multimodal emotion recognition in response to videos (Extended abstract). 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, 2015, pp. 491–497. doi: 10.1109/ACII.2015.7344615

- [37] Partala T, Surakka V. Pupil size variation as an indication of affective processing. International Journal of Human-Computer Studies. 2003, 59(1–2), pp. 185–198. doi: 10.1016/S1071-5819(03)00017-X

- [38] Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research. 2011, pp. 2825–2830.

- [39] Cortes C, Vapnik V. Support-vector networks. Machine Learning. 1995, 20(3), pp. 273–297.