Abstract

Objective:

A computer vision method was developed for estimating the trunk flexion angle, angular speed, and angular acceleration by extracting simple features from the moving image during lifting.

Background:

Trunk kinematics are an important risk factor for lower back pain, but are often difficult for practitioners to measure during lifting risk assessments.

Methods:

Mannequins representing a wide range of hand locations for different lifting postures were systematically generated using the University of Michigan 3DSSPP software. A bounding box was drawn tightly around each mannequin and regression models estimated trunk angles. The estimates were validated against human posture data for 216 lifts collected using a laboratory-grade motion capture system and synchronized video recordings. Trunk kinematics, based on bounding box dimensions drawn around the subjects in the video recordings of the lifts, were modeled for consecutive video frames.

Results:

The mean absolute difference between predicted and motion capture measured trunk angles was 14.65°, and there was a significant linear relationship between predicted and measured trunk angles (R² = 0.80, p < 0.001). The training error for the kinematics model was 2.34°.

Conclusion:

Using simple computer vision extracted features, the bounding box method indirectly estimated trunk angle and associated kinematics, albeit with limited precision.

Application:

This computer vision method may be implemented on hand-held devices such as smartphones to facilitate automatic lifting risk assessments in the workplace.

Keywords: job risk assessment, kinematics, low back, manual materials handling, work physiology

PRÉCIS

A practical lifting assessment method was developed to estimate trunk kinematics using simple computer vision bounding box features from video recordings. Results showed good agreement with motion capture measurement.

INTRODUCTION

Low-back disorders (LBDs) are a major occupational health problem. Accounting for 38.5% of all work-related musculoskeletal disorders, LBDs were the most prominent among all work-related musculoskeletal disorder cases in 2016 (BLS, 2018). LBDs also have a significant socioeconomic impact in the U.S., costing over $100 billion per year through direct costs, such as medical expenses, and indirect costs, such as lost productivity and wages (Katz et al., 2006).

The risk factors of LBDs are multifactorial, consisting of personal, workplace psychosocial, and job physical factors (Bernard and Putz-Anderson, 1997; da Costa and Vieira, 2010). Epidemiological studies have identified heavy physical work (Eriksen et al., 1999; Engkvist et al., 2000; Kerr et al., 2001), awkward static and dynamic working postures (Homstrom et al., 1992; van Poppel et al., 1998; Myers et al., 1999), and manual material handling (Spengler et al., 1986; Snook et al., 1989; Hoogendoorn et al., 2000; Peek-Asa et al., 2004; Bigos, et al., 1986) as physical risk factors for LBDs. Particular task-level physical risk factors include work intensity, poor posture, frequent bending and twisting, and repetition (Marras et al., 1995; Kuiper et al., 1999).

The association between trunk kinematics (e.g. trunk flexion, lateral bending, and twisting velocities) and lower back pain in manual materials handling activities has been reported in many studies. Trunk dynamics during a lift are associated with significantly increased loading of the spine (Frievalds et al., 1984; McGill and Norman, 1986). Specifically, increased trunk velocity was related to increased spinal compression, shear, and torsional loading (Marras and Sommerich, 1991). Lavender et al. (2003) found speed was also a significant contributing factor for spine loading at the L5/S1 disc during lifting. Marras et al. (1993) examined the association between trunk dynamics and risk of LBDs and found that trunk kinematics including lateral trunk velocity, twisting trunk velocity, and sagittal flexion angle could be used to identify jobs associated with high risk for LBDs. It is therefore important to consider trunk kinematics to comprehensively evaluate the risk of LBDs.

In a recent study of the observational risk assessment methods used by certified professional ergonomists in five English-speaking countries, Lowe et al. (2019) found that the revised NIOSH lifting equation (RNLE) was the most popular observational method for assessing lifting risks in the U.S. and ranked among the top three in the United Kingdom, Canada, New Zealand, and Australia. To use the RNLE, several lifting parameters are needed, including the horizontal and vertical distances of the loaded hands relative to the feet during lifting; the trunk asymmetry angle; the hand coupling for the load at the origin and destination of each lift; the displacement of the load for each lifting task; and the frequency of performing each lifting task (Waters et al., 1993). Trunk kinematics are not explicitly accounted for in the RNLE, but have been recommended as additional parameters for improving the risk predictability of the RNLE for highly dynamic lifting tasks (Lavender et al., 1999; Marras et al., 1999; Arjmand et al., 2015). The RNLE lifting frequency parameter may serve as a surrogate measure for trunk speed, as lifting tasks with higher frequencies are likely to require faster trunk movements; however, this relationship has not been fully studied. Additionally, the biomechanical criterion used to develop the RNLE was based on static models that may not be valid for dynamic lifting motion (Waters et al., 1994). Another widely used observational method, the Rapid Entire Body Assessment (REBA), includes the trunk flexion angle as part of the postural analysis (Hignett & McAtamney, 2000). Observational methods can be labor-intensive and slow to process because they require collecting whole-body postural information, such as the angle and speed of each body segment. Moreover, observational risk assessments are often based on short sampling periods to minimize the labor-intensive observation process, and are therefore unable to characterize all-day risk exposure under constantly changing lifting conditions, which have become common in distribution centers and in jobs involving handling objects of varying shapes and weights (Callaghan et al., 2001; Lavender et al., 2012; Lu et al., 2015).

Direct measurement can provide high-volume and accurate measurements of trunk kinematics (David, 2005). Inertial measurement unit (IMU) sensors are small, inexpensive and wearable, and possess great potential for assessing postures and measuring kinematics at work (Breen et al., 2009). Recent studies have demonstrated the application of IMU sensors on the measurement of body joint angles (Battini et al., 2014; Dahlqvist et al., 2016) and lifting task factors such as lifting duration and hand horizontal and vertical positions (Barim et al., 2019; Lu et al., 2019). In addition to IMUs, another method used to measure trunk kinematics is the lumbar motion monitor (LMM). The LMM is an exoskeleton of the spine attached to the shoulder and hips using a harness, which has been found to provide reliable measurements of the position, velocity, and acceleration of the trunk (Marras et al., 1992). Many research studies have used LMMs for quantifying trunk kinematics as lifting risk factors (e.g. Ferguson et al., 2011; Lavender et al., 2017; Norasi et al., 2018). Efforts have been made to improve the wearability and usability of the direct measurement device for trunk motion to facilitate their application in the industry. For example, Nakamoto et al. (2018) demonstrated the prototype of an LMM with lightweight, stretchable-strain sensors. Direct measurement, however, has many limitations. Instrumentation such as the LMM may not be readily available for job analysis (Marras et al., 1999). Although application in the field is possible, it is often difficult to identify the context of complex signals without relying on external measures such as synchronized video. Although used for research, the practical application of such direct measurement devices may be limited for practitioner use due to training and expertise required, and the interruption imposed on the worker’s regular tasks (Patrazi et al., 2016). 
According to an international survey of professional ergonomists, electrogoniometers such as the LMM had the lowest percentage of users (Lowe et al., 2019).

With the advantages of low-cost, non-intrusiveness and ease of use, computer vision has become a popular technology for measuring body postural angle and task repetition. Seo et al. (2016) demonstrated extracting silhouette information using computer vision to classify postures for ergonomics applications. Mehrizi et al. (2018) used a computer vision method to assess joint kinematics of lifting tasks. Ding et al. (2019) proposed a computer vision method for real-time upper-body posture assessment using features directly extracted from video frames. However, when applied to the industrial setting, computer vision methods are prone to various sources of noise in the workplace, such as obstructions, clothing, lighting, occlusion, and dynamic backgrounds. Optimal camera placement for computer vision in the industrial setting can be challenging. Some computer vision methods require markers attached to the worker for creating a skeleton figure for further motion analysis. The attachment of the markers, however, can be time-consuming and can interfere with the work.

We have previously developed an efficient computer vision lifting monitor algorithm that segments the subject’s silhouette from the background and applies a rectangular bounding box tightly around the silhouette (Wang et al., 2019). Based on the spatial and temporal characteristics of the segmented foreground and background, the algorithm also detects lifting instances and estimates hand and feet locations. Rather than fitting skeleton models to the worker’s image, our approach uses extracted simple features from videos. This method does not depend on tracking specific body linkages or measuring joint locations. Wang et al. (2019) demonstrated the application of the lifting monitoring algorithm for measuring task factors and computing the recommended RNLE weight limit (RWL). The horizontal and vertical dimensions of a tightly bounded and elastic rectangular bounding box were also used by Greene et al. (2019) for classifying lifting postures. Rather than obtaining precise measurements of posture based on joint angles, this method relaxed the need for high-precision tracking so that it was more tolerable of the variations that could be encountered in the workplace.

The current study describes a computationally efficient method that leverages simple video features, including bounding box dimensions and hand locations, obtained using computer vision to predict the angle, velocity, and acceleration of the trunk in the sagittal plane during two-handed symmetrical lifting. This study explores indirect estimation of both trunk flexion angle (T) and spine flexion angle (S). Trunk flexion is defined as the forward bending of the trunk from the vertical line and the center of motion about the hip. Spine flexion is the forward bending of the spine around the L5/S1 disc, accounting for sacral rotation. Since the L5/S1 disc incurs the greatest moment during lifting, this disc is often used as a landmark to represent lumbar stress (Chaffin et al., 2006). Anderson et al. (1986) reported a function to calculate the spine flexion around L5/S1 using the trunk and knee angles. With the benefits of non-invasiveness, objectiveness and low-cost, the computer vision method may measure trunk kinematics and facilitate more comprehensive lifting assessment by incorporating these risk factors in lifting analysis.

METHODS

Trunk Angle at the Lifting Instance (Static Model)

Modeled data.

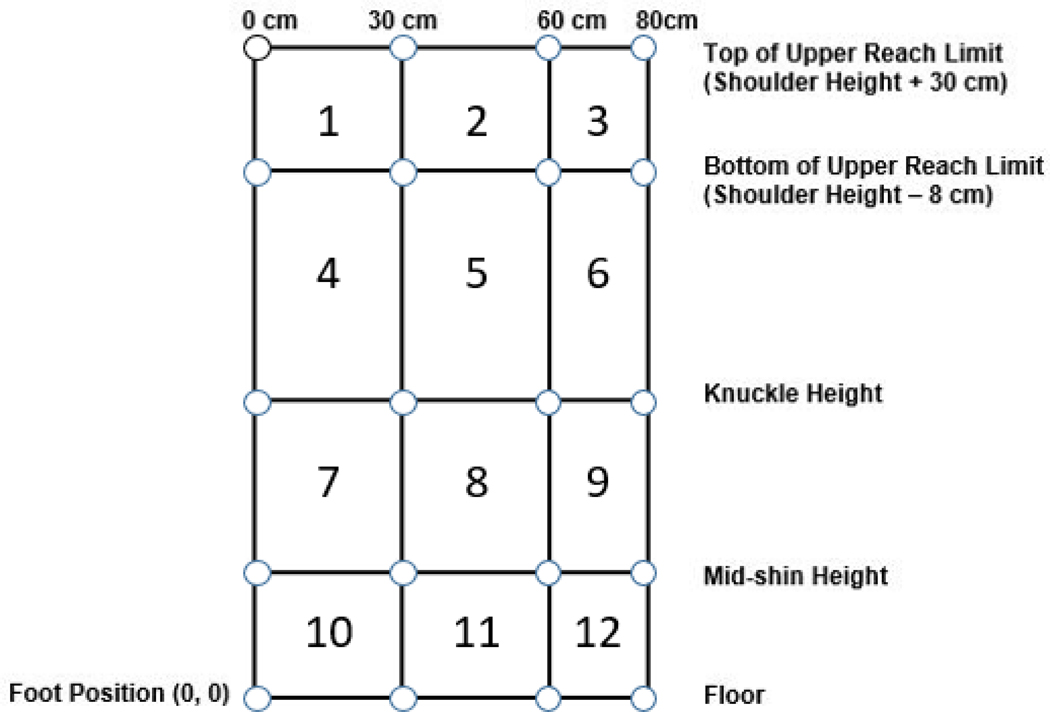

The lifting postures of simulated human images (mannequins) were generated using the 3D Static Strength Prediction Program Version 7.0.0 (3DSSPP) from the University of Michigan (2019). To include a wide range of lifting postures, the American Conference of Governmental Industrial Hygienists (ACGIH) manual lifting Threshold Limit Value (TLV) zones were used to determine the lifting locations of the mannequins (ACGIH, 2018). The TLV classifies lifting postures into twelve distinct zones formed by three horizontal and four vertical zones. The horizontal zones were determined by the horizontal distance of the hands using cutoff lines of 30 cm, 60 cm, and 80 cm. The vertical zones were determined by the vertical distance of the hands to the ground using landmarks of mid-shin, knuckle, shoulder, shoulder height – 8 cm, and shoulder height + 30 cm. These landmarks were calculated based on the subject’s anthropometry. To account for the variation in lifting among different anthropometries, data for the 5th, 50th, and 95th percentile male and female from the 2012 Anthropometric Survey of U.S. Army Personnel were used to calculate the locations of the 20 intersection points of the zone markers in the TLV diagram (Gordon et al., 2014). A representation of the 20 zone intersections is shown in Figure 1.

Figure 1.

Representation of intercepts of 3 Horizontal and 4 Vertical Zones.

The hand locations were subsequently entered into 3DSSPP based on the mannequin’s anthropometry, and the posture prediction feature was applied to generate lifting postures (Chaffin, 2008; Hoffman, Reed, & Chaffin, 2007). After right-facing the mannequins, the bounding box dimensions (height and width) and the horizontal and vertical hand locations relative to the ankles of the mannequins in the images were measured using the University of Wisconsin MVTA software (Yen & Radwin, 1995). Since these features were measured in pixels, which was dependent on the variation across different anthropometries and image output from 3DSSPP, they were converted into centimeters based on the ratio of each measurement to the mannequin’s standing height. Each ratio was then multiplied by the mannequin’s standing height in centimeters.
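The pixel-to-centimeter scaling described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `pixels_to_cm`, the argument names, and the example values are hypothetical, and it assumes the mannequin's standing height is also available in pixels from the same image.

```python
def pixels_to_cm(measure_px: float, stature_px: float, stature_cm: float) -> float:
    """Scale a pixel measurement by the mannequin's standing height.

    Assumption: stature_px is the standing height measured in the same
    image scale as measure_px.
    """
    if stature_px <= 0:
        raise ValueError("stature_px must be positive")
    ratio = measure_px / stature_px  # dimensionless fraction of standing height
    return ratio * stature_cm        # back to centimeters


# Hypothetical example: a bounding box 300 px wide on a mannequin that is
# 600 px tall in the image and 175 cm tall in reality.
bw_cm = pixels_to_cm(300.0, 600.0, 175.0)
print(bw_cm)
```

Because every feature is expressed as a fraction of standing height before rescaling, the result does not depend on the image resolution produced by 3DSSPP.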

The trunk flexion angle T and spine flexion angle S for each mannequin were recorded from the body segment angles window in 3DSSPP. After excluding 15 data points where the hand locations were unreachable by the corresponding anthropometry, data on bounding box dimensions and hand locations of 105 distinct lifting postures across six anthropometries remained in the training dataset.

Algorithm development.

To estimate trunk angle T at the lifting instance, a regression model was created based on various geometric features of the bounding box. The features in the training dataset included bounding box height (BH), bounding box width (BW), hand horizontal location (H), hand vertical location (V), and the ratio of BH to BW (R). A correlation matrix of all features was first calculated to investigate which features were redundant. If the correlation between two features was greater than 0.8, the features were considered highly correlated. Within each such pair, the variable with the higher mean absolute correlation with all other variables was removed from the dataset; H, V, and BW remained in the training data. After inspecting the distribution of the features, a log transformation was applied to H and V. Second-degree polynomial regressions were then fitted for T and S using the aforementioned features. Residual plots of the models were inspected to verify model validity. The trunk flexion model was validated by comparing the bounding box estimates for the 216 lifting instances in videos from the laboratory experiments conducted by NIOSH against the trunk angles measured using 3D motion capture. Due to the absence of appropriate validation data, the spine flexion model was not tested for human lifting tasks.
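The feature screening and polynomial fit described above might be sketched as below. This is an illustrative reading of the procedure under stated assumptions, not the authors' code: the function names are hypothetical, the pruning is done iteratively on the most correlated pair, and ordinary least squares stands in for whatever statistical software was actually used. The design matrix contains an intercept plus the six terms listed in the regression summary tables (ln H, (ln H)², ln V, (ln V)², BW, BW²).

```python
import numpy as np


def prune_correlated(X, names, threshold=0.8):
    """Drop features until no pair has |correlation| above the threshold.

    Assumption: within the most correlated pair, the feature with the higher
    mean absolute correlation with the remaining features is removed.
    """
    X = np.asarray(X, dtype=float)
    keep = list(range(X.shape[1]))
    while len(keep) > 1:
        corr = np.abs(np.corrcoef(X[:, keep], rowvar=False))
        np.fill_diagonal(corr, 0.0)
        i, j = np.unravel_index(np.argmax(corr), corr.shape)
        if corr[i, j] <= threshold:
            break
        mean_abs = corr.sum(axis=0) / (len(keep) - 1)
        drop = i if mean_abs[i] >= mean_abs[j] else j
        keep.pop(int(drop))
    return [names[k] for k in keep]


def quadratic_design(h, v, bw):
    """Intercept, ln(H), ln(H)^2, ln(V), ln(V)^2, BW, BW^2 (no interaction terms)."""
    lh = np.log(np.asarray(h, dtype=float))
    lv = np.log(np.asarray(v, dtype=float))
    bw = np.asarray(bw, dtype=float)
    return np.column_stack([np.ones_like(lh), lh, lh**2, lv, lv**2, bw, bw**2])


def fit_ols(design, y):
    """Ordinary least-squares coefficients for the given design matrix."""
    coef, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return coef
```

A fitted coefficient vector can then be applied to new observations with `quadratic_design(h, v, bw) @ coef`. H and V must be strictly positive for the log transformation to be defined.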

Trunk Kinematics for Consecutive Video Frames (Dynamic Model)

Modeled data.

Since the mannequin lifting posture simulation in 3DSSPP does not account for the dynamic aspects of lifting, the model developed in the previous section was not sufficient for estimating trunk angles over a series of consecutive video frames and for calculating trunk kinematics such as trunk speed and acceleration. Therefore, video recordings and motion tracking data from a study conducted at NIOSH were utilized to model the trunk angle based on their temporal features. Videos of symmetrical lifts in which hand locations were defined by centers of the twelve ACGIH TLV lifting zones performed by six participants were recorded. For every lifting zone, each participant performed three repeated trials.

Whole-body motion data was recorded by a motion capture system (OptiTrack 12 IR camera system, model Flex 13 with the Motion Monitor data acquisition program, Innovative Sports, Inc., Chicago, USA). Before data collection, a stylus was used to identify joint locations relative to the markers placed on the subject (Innovative Sports, 2019). The trunk was represented by the C7-T1 segment, and the trunk flexion angle T relative to the earth vertical line was calculated by subtracting the trunk angle at the neutral position from the measured trunk angle. Wang et al. (2019) previously detailed the laboratory experiment protocol and participant information. An observation window of 0.67 s (20 frames) after each lifting instance captured the characteristics of each lift without including motions such as twisting, turning, and walking. The mean stature of the study participants was within 0.8 cm of that of the 2012 US Army Anthropometric Survey (Gordon et al., 2014) for both males and females, while the standard deviation of stature in the NIOSH study was smaller.

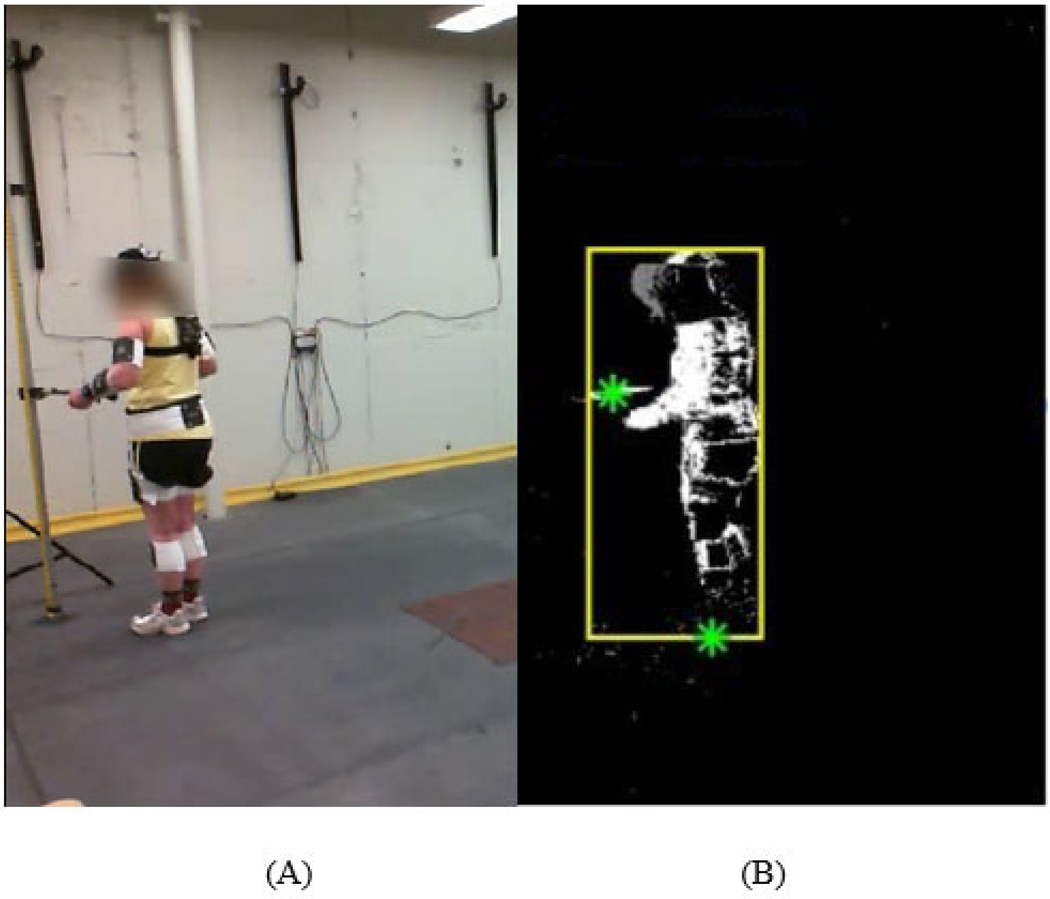

To determine lifting instances and to calculate H, V, BH, BW, and R, the videos were processed using the lifting monitor algorithm described in Wang et al. (2019). This algorithm detects dynamic scenes from the background resulting in a “ghost effect”. The “ghost effect” indicates the very instance when an object is lifted or released, which subsequently identifies hand and feet locations at the origin and destination of a lift. The lifting monitor algorithm creates a rectangular bounding box tightly around the subject and calculates the dimensions of the bounding box while it changes during a lift. A demonstration of the processing output of this computer vision algorithm is presented in Figure 2.

Figure 2.

Video image (A) and image processed by the lifting monitor algorithm (B) of a subject lifting a small object from a shelf. A rectangular bounding box encloses the subject. The hands and the ankles are identified and represented by green asterisks.

Some of the video recordings were not included due to inconsistent camera auto-focusing for some participants in the study. A total of 216 recordings of six subjects (36 recordings for each subject) without auto-focusing were analyzed using the lifting monitor algorithm to test the models. Trunk angles T were derived from marker locations in the motion capture data.

Algorithm development.

The algorithm for estimating trunk angles consecutively consists of three steps: 1) subtracting the trunk flexion angle at frame 0 to mitigate the variability of motion tracking between trials; 2) fitting each series of trunk flexion angle over 20 frames as an exponential function of time in the form:

| (1) |

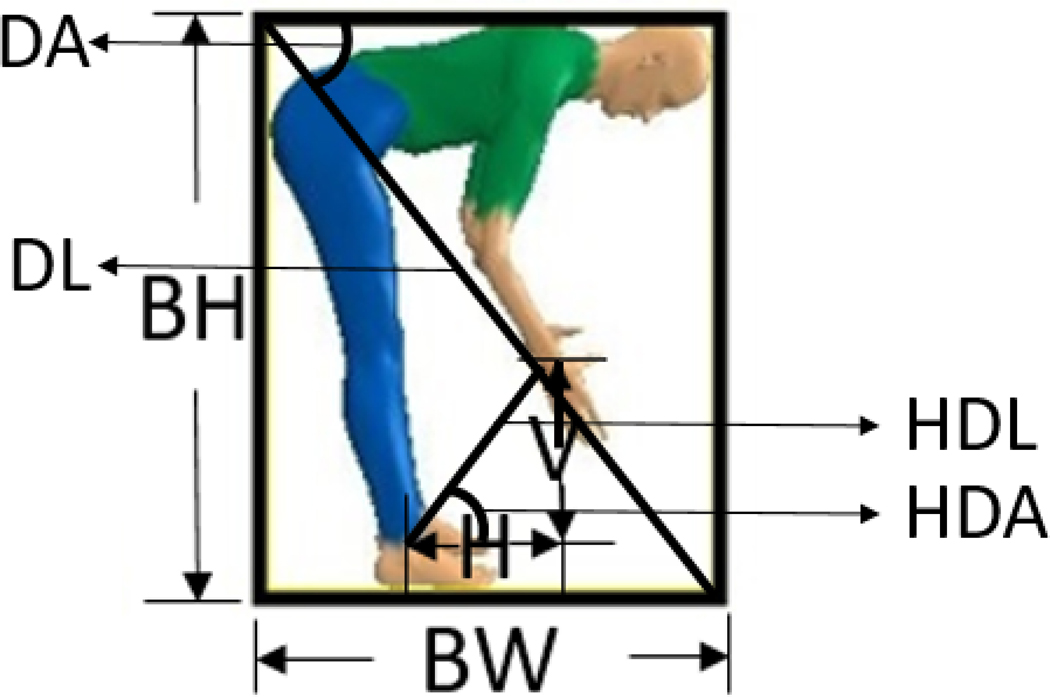

where x is the frame number from the lifting instance; 3) developing a linear regression model to estimate the coefficients α and β for each series, based on H, V, BH, BW, and R, and features derived from these measurements. The initial trunk angle was estimated using the static trunk angle estimation model, and was then added back to the predicted series of trunk angles. The variables in the training set are summarized in Table 1. A visual representation of the features used in the models is presented in Figure 3. The variables for predicting exponential equation parameters are the average, maximum, and standard deviation of the values of these features and their respective speed and acceleration over the 20 frames.
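The summary predictors described above (average, maximum, and standard deviation of each feature and of its speed and acceleration over the 20-frame window) could be computed along the following lines. This is a sketch under assumptions: the function name `temporal_summary` is hypothetical, speed and acceleration are taken as forward differences scaled by the 30 frames/s video rate, and the sample standard deviation is used; the source does not state which differencing scheme or SD estimator was applied.

```python
import numpy as np

FPS = 30.0  # video frame rate (frames/s)


def temporal_summary(series, fps=FPS):
    """Mean, max, and SD of a feature series and of its speed and acceleration."""
    x = np.asarray(series, dtype=float)
    speed = np.diff(x) * fps      # assumed forward difference, units per second
    accel = np.diff(speed) * fps  # assumed second difference, units per second^2
    out = {}
    for label, values in (("value", x), ("speed", speed), ("acceleration", accel)):
        out[f"mean_{label}"] = float(values.mean())
        out[f"max_{label}"] = float(values.max())
        out[f"sd_{label}"] = float(values.std(ddof=1))  # sample SD (assumption)
    return out
```

Applying the function to each feature series (for example BH or HA over the 20 frames) yields nine candidate predictors per feature for the α and β regression models.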

Table 1:

Variables of the Training Set for Estimating Trunk Angles During Consecutive Frames

| Variable | Description | Method of Determination |
|---|---|---|
| H | Hand horizontal location relative to feet | Lifting Monitor Algorithm |
| V | Hand vertical location relative to feet | Lifting Monitor Algorithm |
| BH | Bounding box height | Lifting Monitor Algorithm |
| BW | Bounding box width | Lifting Monitor Algorithm |
| DUL | Displacement of bounding box upper-left corner | Lifting Monitor Algorithm |
| DUR | Displacement of bounding box upper-right corner | Lifting Monitor Algorithm |
| R | Ratio of BH to BW | |
| DA | Angle between bounding box diagonal and the bottom | |
| DL | Bounding box diagonal length | |
| HA | Angle between the line segment between hands and feet and the floor | |
| HD | Length of the line segment between hands and feet | |

Figure 3.

Visual representation of features used in the models estimating trunk angles over consecutive video frames.
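The derived features R, DA, DL, HA, and HD follow from the bounding box dimensions and hand location by elementary geometry. The sketch below is one plausible reading of the definitions in Table 1, assuming that angles are expressed in degrees and that H and V are measured from the feet as the origin; the function name `derived_features` is hypothetical.

```python
import math


def derived_features(bh: float, bw: float, h: float, v: float) -> dict:
    """Geometric features derived from bounding box size and hand location."""
    return {
        "R": bh / bw,                             # ratio of box height to width
        "DA": math.degrees(math.atan2(bh, bw)),   # diagonal angle from the box bottom
        "DL": math.hypot(bh, bw),                 # diagonal length
        "HA": math.degrees(math.atan2(v, h)),     # hands-to-feet segment angle from the floor
        "HD": math.hypot(h, v),                   # hands-to-feet segment length
    }


# Hypothetical example: a 160 cm x 60 cm box with hands 40 cm forward and 40 cm up.
features = derived_features(bh=160.0, bw=60.0, h=40.0, v=40.0)
print(features)
```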

To account for the variability between different lifting postures, the dataset was first classified into stoop, squat, or stand postures based on the trunk and knee angles measured by motion tracking at frame 0 using the following rules: a squat was classified when the included knee angle between the thigh and lower leg (K) was K < 130 degrees; a stoop was classified when the trunk angle (T) was T > 40 degrees flexion from the vertical. If neither of these conditions was true, the posture was classified as a stand. If both K < 130 degrees and T > 40 degrees, the posture was classified as a squat (Greene et al., 2019). The definitions of trunk and knee angles are illustrated in Figure 4. For each subset of postures, separate linear regression models were created to predict α and β (Equation 1) using the variables listed in Table 1. The optimal set of variables was determined using stepwise regression. A backward elimination approach was adopted and the Akaike Information Criterion (AIC) was used as the evaluation criterion (Venables & Ripley, 2002).
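The posture classification rule stated above translates directly into a short function. The thresholds (130 degrees for the included knee angle, 40 degrees for trunk flexion) and the precedence of squat over stoop come from the text; the function name `classify_posture` is hypothetical.

```python
def classify_posture(knee_angle_deg: float, trunk_angle_deg: float) -> str:
    """Classify a lift at frame 0 as 'squat', 'stoop', or 'stand'."""
    if knee_angle_deg < 130:
        # Squat takes precedence, including when the trunk is also flexed > 40 degrees.
        return "squat"
    if trunk_angle_deg > 40:
        return "stoop"
    return "stand"


print(classify_posture(120, 55))  # both conditions hold, so this is a squat
```

Checking the knee condition first is what makes a lift with both K < 130 degrees and T > 40 degrees a squat, so the order of the two tests matters.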

Figure 4.

3DSSPP mannequins demonstrating (A) trunk angle (T) and (B) knee angle (K).

For each of the 216 lifts, the exponential function parameters α and β (Equation 1) were estimated using the regression model for the posture associated with the lift. Trunk flexion angles over the 20 frames were subsequently calculated based on the predicted exponential function parameters α and β (Equation 1).

RESULTS

Trunk Angle Estimation at the Lifting Instance (Static Model)

The videos were processed using the lifting monitor algorithm to determine lifting instances and calculate H, V, BH, BW and R at these time instances. A summary of the features of the regression model to estimate trunk flexion is presented in Table 2.

Table 2:

Summary of the Regression Model for Static Trunk Flexion T.

| Variable | Beta | Standard Error | t Value | p-Value |
|---|---|---|---|---|
| ln(H) | −0.11 | 11.92 | −3.58 | <0.001*** |
| (ln(H))² | 0.09 | 17.77 | 2.10 | 0.04* |
| ln(V) | 0.53 | 12.33 | 16.23 | <0.001*** |
| (ln(V))² | 0.35 | 12.80 | 10.35 | <0.001*** |
| BW | −0.52 | 19.17 | −10.13 | <0.001*** |
| BW² | −0.05 | 11.34 | −1.79 | 0.08 |

Note: * p < .05; *** p < .001.

The regression model for trunk flexion T (adjusted R² = 0.88, F(6,98) = 176.9, p < 0.001) was:

| (2) |

A summary of the features of the regression model to estimate spine flexion S is presented in Table 3.

Table 3:

Summary of the Regression Model for Static Spine Flexion S.

| Variable | Beta | Standard Error | t Value | p-Value |
|---|---|---|---|---|
| ln(H) | −0.02 | 38.11 | −0.23 | 0.82 |
| (ln(H))² | 0.19 | 28.22 | 2.51 | 0.01** |
| ln(V) | 0.52 | 22.30 | 8.90 | <0.001*** |
| (ln(V))² | 0.18 | 28.49 | 2.40 | 0.02* |
| BW | −0.72 | 36.10 | −7.49 | <0.001*** |
| BW² | −0.05 | 22.32 | −0.84 | 0.4 |

Note: * p < .05; ** p < .01; *** p < .001.

The regression model for spine flexion S (adjusted R² = 0.72, F(6,98) = 43.02, p < 0.001) was:

| (3) |

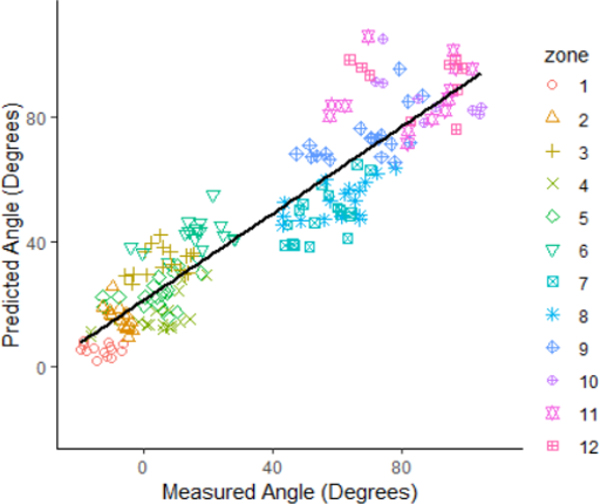

A plot of predicted trunk flexion angles versus motion capture measured trunk angles is presented in Figure 5. The overall mean absolute error of prediction on the validation set was 14.65°. There was a significant linear relationship between the model prediction and the measured trunk angle (R² = 0.80, p < 0.001). The prediction errors summarized for each 10-degree increment of measured trunk angles are presented in Table 4.

Figure 5.

Predicted trunk flexion angles (calculated by the computer vision algorithms) vs. ground truth trunk flexion angles (calculated by the motion capture system). ACGIH lifting zones are represented by colors and shapes.

Table 4:

Prediction error summarized for 10-degree increments of measured trunk angles.

| Measured Trunk Angle (Degrees) | Mean Absolute Prediction Error (Degrees) | Standard Deviation of Prediction Error (Degrees) |
|---|---|---|
| (−∞, 0) | 24.35 | 8.23 |
| (0,10] | 18.96 | 9.86 |
| (10,20] | 19.23 | 8.57 |
| (20,30] | 19.63 | 8.67 |
| (40,50] | 5.59 | 7.73 |
| (50,60] | 11.51 | 12.44 |
| (60,70] | 16.05 | 18.81 |
| (70,80] | 10.51 | 13.70 |
| (80,90] | 5.92 | 5.35 |
| (90,100] | 11.49 | 15.04 |
| (100,110] | 17.96 | 20.98 |
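A binned error summary of the kind shown in Table 4 could be produced as sketched below. Several details are assumptions rather than reported facts: the function name `error_by_angle_bin` is hypothetical, the standard deviation is taken over the signed prediction error, a measured angle of exactly zero is pooled with the negative angles, and a bin with a single lift reports an undefined (NaN) standard deviation.

```python
import numpy as np


def error_by_angle_bin(measured_deg, predicted_deg, width=10.0):
    """Return {(low, high): (mean absolute error, SD of signed error)} per bin."""
    measured = np.asarray(measured_deg, dtype=float)
    error = np.asarray(predicted_deg, dtype=float) - measured
    # Bin k covers ((k - 1) * width, k * width]; non-positive angles are pooled in k = 0.
    k_all = np.maximum(np.ceil(measured / width).astype(int), 0)
    summary = {}
    for k in np.unique(k_all):
        e = error[k_all == k]
        low = float("-inf") if k == 0 else float((k - 1) * width)
        high = float(k * width)
        sd = float(e.std(ddof=1)) if e.size > 1 else float("nan")
        summary[(low, high)] = (float(np.abs(e).mean()), sd)
    return summary
```

Bins that contain no lifts simply do not appear in the returned dictionary.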

Trunk Kinematics Estimation for Consecutive Video Frames (Dynamic Model)

The resulting mean absolute error between trunk angles fitted using exponential equations (Equation 1) and those measured through motion tracking was 1.00 (N = 216, SD = 0.11) degrees. A plot of comparison between fitted and measured trunk flexion angles of one lift in the dataset is presented in Figure 6.

Figure 6.

Comparison between fitted trunk flexion angles (calculated using exponential equations, represented by dashed lines) and measured trunk flexion angles (calculated using the motion capture data, represented by solid lines) over 10 consecutive frames.

Regression models were created for predicting α and β (Equation 1) to approximate the time series of trunk angles for stoops (adjusted R² = 0.37, p < 0.001 for the α model; adjusted R² = 0.11, p = 0.05 for the β model), squats (adjusted R² = 0.40, p = 0.13 for the α model; adjusted R² = 0.72, p = 0.01 for the β model), and standing lifts (adjusted R² = 0.30, p < 0.001 for the α model). The β of each fitted exponential curve for standing lifts is a constant (0) since minimal trunk flexion is involved in standing lifts. A total of six models were developed. A summary of the regression models to predict parameters of exponential equations α and β (Equation 1) to estimate trunk flexion angles over consecutive lifting frames is presented in Table 5.

Table 5:

Summary of regression models for estimating exponential parameters to predict trunk angles over consecutive video frames.

| Posture | Variable | Estimate | CI | p |
|---|---|---|---|---|
| Coefficient (α) | | | | |
| stoop | (Intercept) | 2.37 | −1.48 – 6.22 | 0.222 |
| | SD DA speed | 14.79 | 3.45 – 26.14 | 0.012* |
| | Mean DL acceleration | −0.07 | −0.18 – 0.04 | 0.198 |
| | SD DL speed | 0.07 | −0.03 – 0.16 | 0.161 |
| | Mean HA speed | −4.71 | −8.43 – −0.99 | 0.014* |
| | Mean DA acceleration | −0.91 | −1.78 – −0.05 | 0.039* |
| | Max DA acceleration | −0.13 | −0.22 – −0.04 | 0.004** |
| | SD DL acceleration | 0 | 0.00 – 0.00 | <0.001*** |
| | Mean HA acceleration | −0.33 | −0.69 – 0.03 | 0.069 |
| | SD HA acceleration | 0.04 | −0.02 – 0.10 | 0.148 |
| | Mean HL acceleration | −0.01 | −0.01 – 0.00 | 0.1 |
| | DUR | −0.02 | −0.05 – 0.01 | 0.143 |
| squat | (Intercept) | 13.54 | 4.39 – 22.69 | 0.01** |
| | SD DA acceleration | −0.24 | −0.53 – 0.05 | 0.088 |
| | Mean DL acceleration | 0.02 | 0.00 – 0.04 | 0.048* |
| | SD DL acceleration | 0 | −0.00 – 0.00 | 0.066 |
| | Max HA acceleration | −0.1 | −0.19 – −0.00 | 0.046* |
| | SD HD acceleration | 0.2 | −0.06 – 0.47 | 0.111 |
| stand | (Intercept) | 1.37 | −2.80 – 5.54 | 0.514 |
| | Mean DL speed | 0.06 | −0.00 – 0.12 | 0.062 |
| | Max HA speed | 0.06 | 0.03 – 0.10 | <0.001*** |
| | SD HA speed | −7.42 | −15.51 – 0.66 | 0.071 |
| | Mean HA speed | 0.17 | −0.05 – 0.38 | 0.137 |
| | SD HA speed | −0.31 | −0.52 – −0.10 | 0.004** |
| | Mean DA acceleration | 1.11 | −0.26 – 2.48 | 0.109 |
| | Mean DL acceleration | 0.01 | 0.00 – 0.02 | 0.016* |
| | Max HD acceleration | 0.09 | 0.01 – 0.16 | 0.021* |
| | DUL | −0.02 | −0.05 – 0.01 | 0.18 |
| Coefficient (β) | | | | |
| stoop | (Intercept) | 0.1 | 0.05 – 0.15 | <0.001*** |
| | Mean DA acceleration | 1.57e−2 | 0.00 – 0.03 | 0.034* |
| | Max DA acceleration | 2.26e−3 | 0.00 – 0.00 | 0.035* |
| | SD DA acceleration | 4.36e−3 | −0.01 – 0.00 | 0.082 |
| | Mean DL acceleration | 9.40e−5 | −0.00 – 0.00 | 0.063 |
| | SD DL acceleration | −1.65e−5 | −0.00 – −0.00 | 0.025* |
| squat | (Intercept) | −0.02 | −0.11 – 0.06 | 0.544 |
| | Mean DA acceleration | 3.52e−2 | 0.01 – 0.06 | 0.012* |
| | Mean DL acceleration | −2.94e−4 | −0.00 – −0.00 | 0.012* |
| | SD DL acceleration | 4.21e−5 | 0.00 – 0.00 | 0.009** |
| | Max HA acceleration | 2.21e−3 | 0.00 – 0.00 | 0.002** |
| | SD HA acceleration | −5.34e−3 | −0.01 – −0.00 | 0.004** |
| stand | (Intercept) | 1.00e−1 | 0.05 – 0.15 | <0.001*** |

Note: SD refers to standard deviation. * p < .05; ** p < .01; *** p < .001.

The mean absolute difference between the predicted and fitted trunk angles for each frame was 2.34 degrees (N = 216, SD = 2.52). Based on the predicted trunk flexion angles and video frame rate (30 frames/s), trunk speed and acceleration in each frame were calculated. The comparisons between predicted and fitted trunk flexion angle, speed, and acceleration for a stoop lift are presented in Figure 7. A summary of the differences between predicted and measured trunk kinematics is provided in Table 6. Histograms of the differences between predicted and measured values are presented in Figure 8.

Figure 7.

Fitted trunk kinematics (calculated using exponential equations, represented by solid lines) and measured trunk kinematics (calculated using the motion capture data, represented by dashed lines) over 20 consecutive frames.

Table 6:

Differences between average and maximum predicted and measured trunk flexion angle, speed, and acceleration during 20-frame periods.

| Measure | Mean Absolute Error | Standard Deviation of Error |
|---|---|---|
| Average Trunk Angle (degrees) | 2.60 | 2.52 |
| Maximum Trunk Angle (degrees) | 2.37 | 2.35 |
| Average Trunk Speed (degrees/s) | 4.39 | 5.74 |
| Maximum Trunk Speed (degrees/s) | 5.36 | 7.5 |
| Average Trunk Acceleration (degrees/s²) | 9.8 | 15.85 |
| Maximum Trunk Acceleration (degrees/s²) | 13.14 | 21.69 |
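The per-lift quantities compared in Table 6 can be derived from a 20-frame angle series and the 30 frames/s video rate, as in the sketch below. It is illustrative only: the function names are hypothetical, and numerical differentiation with `numpy.gradient` (central differences in the interior, one-sided at the ends) is an assumption, since the source does not specify the differencing scheme.

```python
import numpy as np


def trunk_kinematics(angles_deg, fps=30.0):
    """Return trunk speed (deg/s) and acceleration (deg/s^2) for each frame."""
    angles = np.asarray(angles_deg, dtype=float)
    dt = 1.0 / fps                   # time between consecutive frames (s)
    speed = np.gradient(angles, dt)  # assumed finite-difference derivative
    accel = np.gradient(speed, dt)
    return speed, accel


def summary_differences(predicted_deg, measured_deg, fps=30.0):
    """Predicted minus measured average and maximum angle, speed, and acceleration."""
    pred = (np.asarray(predicted_deg, dtype=float), *trunk_kinematics(predicted_deg, fps))
    meas = (np.asarray(measured_deg, dtype=float), *trunk_kinematics(measured_deg, fps))
    out = {}
    for name, p, m in zip(("angle", "speed", "acceleration"), pred, meas):
        out[f"average_{name}"] = float(p.mean() - m.mean())
        out[f"maximum_{name}"] = float(p.max() - m.max())
    return out
```

Taking the mean absolute value and standard deviation of these per-lift differences across all lifts would give a summary in the form of Table 6. Because acceleration is a second derivative, small frame-to-frame angle errors are amplified, which is consistent with the larger errors reported for acceleration than for speed or angle.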

Figure 8.

Histograms of differences between predicted and measured average trunk angles (A), maximum trunk angles (B), average trunk speed (C), maximum trunk speed (D), average trunk acceleration (E), and maximum trunk acceleration (F).

DISCUSSION

The objective of the current study was to develop a computer vision method for predicting trunk angles, speed, and acceleration using simple features that can be extracted from videos. Without requiring direct instrumentation on the worker or fitting skeleton models to the image, the method may be tolerant of a range of visual interference while remaining capable of objective lifting measurements with reasonable precision.

The lifting monitor algorithm used in this study can extract features including bounding box dimensions and hand locations in a series of consecutive video frames. The computer vision-based direct reading system can continuously acquire trunk kinematics information for comprehensive lifting risk assessments, which may lead to new risk models based on cumulative risk information that has not been adequately investigated in previous studies.

This study used the posture prediction feature of 3DSSPP, which generated simulated postures according to the locations of the TLV zone intersections and avoided the extensive time and effort needed to recruit human participants for training data. Training the algorithm with simulated postures has limitations. The simulated postures may not be representative of the full range of variations in lifting motion. Additionally, the full range of lifting motion may be influenced by factors such as the worker’s training, individual preferences, and environmental constraints (University of Michigan Center for Ergonomics, 2019).

The videos of real-life lifting tasks were recorded in a laboratory setting, and the only lifting feature that varied was the hand location. In these videos, the lighting and camera angle were well controlled, the movement patterns of the subjects were designed before the experiment, and the object lifted was the same across all experiments. Therefore, the tasks in the test dataset may lack sufficient variation for comprehensive validation. Furthermore, to ensure the performance of the motion tracking system, the object used in the experiments was a hollow rectangular frame that was small and lightweight. The pattern of lifting for such an object may differ from the patterns found in manual material handling tasks in industry. For improved validity, the lifting monitor algorithm needs to be tested on videos recorded in actual industrial settings. Additionally, large between-trial differences (up to 27.35 degrees) were observed in the trunk flexion angles measured using motion capture for the three repeated trials performed by each subject at each lifting location (M = 7.19, SD = 5.85). To mitigate the between-trial differences, we subtracted the initial trunk angle before modeling trunk angles over consecutive frames, and then added the predicted initial angle back to the prediction. Deep learning models may serve as an alternative means of measuring trunk angles by identifying joint locations in video frames (Xiao et al., 2018). Our future work will explore the application of deep learning to measuring trunk angles in the video data of the laboratory study and compare the measurements provided by motion capture and deep learning.
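The initial-angle adjustment described above can be sketched as two small steps: express each measured series relative to its first frame, and later shift the modeled series by the predicted initial angle. The function names and sample values below are hypothetical and serve only to illustrate the idea.

```python
def remove_initial_angle(angles_deg):
    """Express each frame's trunk angle relative to the first frame of the lift."""
    initial = angles_deg[0]
    return [angle - initial for angle in angles_deg]


def restore_initial_angle(relative_angles_deg, predicted_initial_deg):
    """Shift a relative angle series by the initial angle predicted for the lift."""
    return [angle + predicted_initial_deg for angle in relative_angles_deg]


# Hypothetical values (degrees), for illustration only.
measured = [42.0, 45.0, 50.0]
relative = remove_initial_angle(measured)         # [0.0, 3.0, 8.0]
restored = restore_initial_angle(relative, 40.0)  # [40.0, 43.0, 48.0]
print(relative, restored)
```

Working with relative angles means that trial-to-trial offsets in the starting posture do not affect the fitted shape of the angle progression.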

The bimodal distribution of the trunk flexion angles in both the 3DSSPP dataset (demonstrated in Figure 5) and the laboratory study dataset is another concern. The frequency of observations reaches a local minimum between 30 and 40 degrees, and the validation dataset contains no observations of trunk flexion angles in this range. Furthermore, the ranges of the input features differ between the two datasets. The ranges in centimeters were 0–93.03 in the 3DSSPP dataset and 2–83.93 in the laboratory study dataset for hand horizontal location (H), 0–154.82 in the 3DSSPP dataset and 0–175.25 in the laboratory study dataset for hand vertical location (V), and 36.24–114.34 in the 3DSSPP dataset and 48.76–124.46 in the laboratory study dataset for box width (BW).
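To make the range mismatch concrete, the sketch below computes how far the laboratory (validation) range of each feature extends beyond the 3DSSPP (training) range, using the values reported above. The dictionary layout and function name are illustrative assumptions.

```python
# Ranges in cm as reported in the text: (min, max) per feature.
TRAINING_RANGES = {"H": (0.0, 93.03), "V": (0.0, 154.82), "BW": (36.24, 114.34)}    # 3DSSPP
VALIDATION_RANGES = {"H": (2.0, 83.93), "V": (0.0, 175.25), "BW": (48.76, 124.46)}  # laboratory


def uncovered_span(train, valid):
    """Return (below, above): how far the validation range extends past the training range."""
    below = max(0.0, train[0] - valid[0])
    above = max(0.0, valid[1] - train[1])
    return below, above


for name in TRAINING_RANGES:
    below, above = uncovered_span(TRAINING_RANGES[name], VALIDATION_RANGES[name])
    print(f"{name}: {below:.2f} cm below, {above:.2f} cm above the training range")
```

With these values, H lies entirely within the training range, while V and BW extend about 20.43 cm and 10.12 cm above it, so predictions at those upper extremes rely on extrapolation.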

A linear relationship between the static model predicted and measured trunk flexion angles T was present, indicating that the model discussed in the current paper can, to some extent, reproduce the results of a motion tracking system that requires extensive setup, operation, and data processing. In the current model, a discrepancy (14.65°) exists between the predicted trunk angles and the angles measured through motion capture. After summarizing the prediction error for each 10-degree increment of measured trunk angle, it was revealed that increased errors occur at very small (<30°) and extreme (>100°) trunk flexion angles. The large error at small trunk flexion angles may be attributed to the lack of training data in this range in the static model, and we anticipate that the model accuracy in this range will improve when additional training data are collected. Overestimating small trunk flexion angles may misclassify low-risk tasks as high-risk; however, the model thereby errs on the safe side and avoids overlooking high-risk tasks. Trunk postures greater than 90° are not often observed in industry, as indicated by previous studies on industrial lifting jobs by Marras et al. (1993) and Lavender et al. (2012). Therefore, the overall accuracy of the model is anticipated to improve for assessing most lifting conditions in the workplace. Although high-precision measurement of trunk angle is desirable, defining a range for the trunk angle is usually all that is necessary in current ergonomic assessment methods. For example, in the REBA, the trunk angle only needs to be classified into extension, 0°–20°, 20°–60°, and greater than 60°. Another widely used lifting assessment tool, the NIOSH Lifting Equation, also only requires classifying each factor into a range. Trunk angle is not a factor in the NIOSH Lifting Equation. However, the asymmetry angle, the only body angle incorporated into the NIOSH Equation, needs to be classified into 15-degree intervals ranging from 0 to 135 degrees. Although the current model has not reached the accuracy of various laboratory studies, it possesses the potential to provide data input for ergonomic assessment methods such as the REBA.
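The range-based classification mentioned for the REBA can be sketched as a simple binning function. The sign convention (negative values denote extension), the handling of the 20° and 60° boundaries, and the sample angles are assumptions made for illustration and are not taken from the study.

```python
def reba_trunk_category(trunk_flexion_deg):
    """Map a trunk flexion angle (degrees) to the REBA trunk ranges named in the text.

    Assumptions: negative angles denote extension; boundary values fall in the lower bin.
    """
    if trunk_flexion_deg < 0:
        return "extension"
    if trunk_flexion_deg <= 20:
        return "0-20"
    if trunk_flexion_deg <= 60:
        return "20-60"
    return ">60"


# Hypothetical measured and predicted angles (degrees), for illustration only.
print(reba_trunk_category(35.0), reba_trunk_category(45.0))  # 20-60 20-60
print(reba_trunk_category(55.0), reba_trunk_category(65.0))  # 20-60 >60
```

The second pair shows the practical consequence of limited precision: an error of a given size changes the assigned category only when the true angle lies near a range boundary.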

The static model for estimating spine flexion angle S revealed a statistically significant relationship (p < 0.001) between the spine flexion and the computer vision extracted features. However, the relatively low R2 (adjusted R2 = 0.72) suggests that this model does not explain some of the variance in the spine flexion data. One possible explanation is that the included knee angle, a factor that contributes to the spine flexion, is not well captured by the current set of variables in the model. Additionally, due to the lack of spine flexion data available to us, this model was not validated using human lifting tasks. Since the disc between L5 and S1 incurs the greatest moment during lifting and is susceptible to force-induced injuries, estimating the biomechanical stress for the L5/S1 disc could provide valuable information for lifting analysis (Waters et al., 1993). If the spine flexion around L5/S1 can be estimated using variables measured by our lifting monitor algorithm, which is fast and does not require instrumentation, the difficulty of quantifying the biomechanical stress at L5/S1 may be significantly reduced. Future work will investigate the relationship between included knee angle and computer vision measured variables. Additional experiments will be conducted to collect spine flexion data during human lifting tasks for model validation.

Exponential functions closely approximated the progression of trunk flexion angle for each lift, and exponential equation parameters were estimated using regression models to predict trunk angle, speed, and acceleration over consecutive video frames with reasonable errors (Table 6). Despite the small differences between predicted and measured trunk kinematics, further improvements of the models are needed. Although small p-values (p < 0.05) were found for four out of the six dynamic models, indicating statistically significant linear relationships between the variables and the exponential equation parameters, the low R2 values suggest that a large amount of variance in these parameters was not explained by the models. One possible explanation for this is the measurement variability of the motion capture system between trials. Furthermore, due to the light weight of the object lifted in the experiments, the lifting movements may not be fully representative of typical lifting tasks performed in industrial settings, and the range of trunk speed in the dataset is very limited.
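To show why an exponential description of the angle progression yields speed and acceleration directly, the sketch below assumes the form θ(t) = a·exp(b·t), with t in seconds. This specific form, the parameter names a and b, and the example values are assumptions for illustration; this section does not state the exact exponential equation used. Under that assumption, speed is b·θ(t) and acceleration is b²·θ(t).

```python
import math

FRAME_RATE = 30.0  # frames/s, as stated in the text


def exponential_kinematics(a, b, n_frames=20, frame_rate=FRAME_RATE):
    """Per-frame (angle, speed, acceleration) for the assumed form theta(t) = a * exp(b * t).

    a (degrees) and b (1/s) are hypothetical parameters; in the study such parameters
    would come from regression models, whose exact form is not reproduced here.
    """
    rows = []
    for n in range(n_frames):
        t = n / frame_rate                  # time of frame n in seconds
        angle = a * math.exp(b * t)         # degrees
        speed = b * angle                   # first derivative, degrees/s
        acceleration = b * b * angle        # second derivative, degrees/s^2
        rows.append((angle, speed, acceleration))
    return rows


# Hypothetical parameters over a 20-frame period, for illustration only.
rows = exponential_kinematics(a=20.0, b=0.5)
print(rows[0])  # (20.0, 10.0, 5.0)
```

Because the derivatives are available in closed form, the kinematics inherit the smoothness of the fitted curve rather than the frame-to-frame noise of numerical differencing.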

The computer vision method used in automating measurements of lifting risk factors in this study may save practitioners substantial time for data collection. This computer vision method is also able to address the inability of many observation-based postural classification methods to quantify trunk kinematics. The consistency and objectivity of the computer vision method are particularly important in today’s work conditions where lifting occurs in numerous locations involving varying body postures throughout the workday. Video image processing is non-invasive and does not necessitate putting instruments or sensors on the worker so it will not interfere with the work. The algorithm developed for this research is designed to be insensitive to occlusion from the objects handled and other variabilities in the workplace, such as obstructions, clothing, lighting, and dynamic backgrounds. These workplace constraints often impede the ability of skeletal models to recognize the whole body posture and measure joint angles effectively (Seo et al., 2016; Mehrizi et al., 2018).

Compared to deep learning methods, the simple feature computer vision method we used relaxes the need for precise tracking of human joint locations. However, as a result of analyzing fewer features and estimating trunk kinematics indirectly, this method yields less precision than human posture analysis, which requires identifying joint locations and overlaying a skeleton on the human image (e.g., Mehrizi et al., 2019). While able to offer relatively high-precision measurements, deep learning involves matching a large number of feature points and is computationally intensive. Compared to methods that require precise tracking of joint locations, our method requires significantly less computational power and can be implemented on devices like smartphones and personal computers. The aim of our method is to provide fast and reliable estimations for surveillance purposes in industrial settings. For this purpose, the current level of precision may be sufficient. The method ultimately needs to be tested for industrial lifting tasks with associated health outcomes to determine whether the estimated trunk kinematics are sufficient to identify high-risk lifting tasks.

Future work will improve the external validity of the model. Lifting tasks performed by workers in industry will be used to train the model. These tasks will not only be more representative of human lifting in the workplace than the lifts in the laboratory study but will also incorporate a larger variety of lifting tasks, objects lifted, and lifting postures.

The current research has demonstrated the feasibility of predicting trunk flexion angles for various lifting postures using simple computer vision extracted features from video recordings. Findings from this study expand the application of the bounding box computer vision method in the area of manual lifting analysis. We anticipate implementing the method on portable handheld devices, making it widely accessible to practitioners.

KEY POINTS.

Models were developed for estimating trunk kinematics using simple bounding box computer vision features from video recordings.

Trunk kinematics calculated using the models showed agreement with those measured by a research-grade motion capture system.

The computer vision-based method is automated, non-intrusive, and computationally efficient, and it has the potential to serve as a practical lifting assessment method over extended periods.

ACKNOWLEDGEMENTS

This study was funded, in part, by a grant from the National Institute for Occupational Safety and Health (NIOSH/CDC) R01OH011024 (Radwin), NIOSH/CDC contract 5D30118P01249 (Radwin), and a pilot training grant from the NIOSH-funded MCOHS-ERC Pilot Research Training Program OH008434 (Greene). The authors would like to thank Dwight Werren and other NIOSH associates for assisting in data collection.

The contents of this effort are solely the responsibility of the authors and do not necessarily represent the official view of the National Institute for Occupational Safety and Health, Centers for Disease Control and Prevention, or other associated entities.

BIOGRAPHIES

Runyu L. Greene is a PhD candidate in industrial and systems engineering at the University of Wisconsin-Madison (Madison, WI). She has BS and MS degrees in industrial and systems engineering from the University of Wisconsin-Madison.

Ming-Lun Lu is a certified professional ergonomist and the manager for the Musculoskeletal Health Cross-sector Research Program at the National Institute for Occupational Safety and Health. He holds a Ph.D. in industrial hygiene with a concentration on ergonomics from the University of Cincinnati (Cincinnati, OH). His primary research interests include occupational biomechanics and field-based ergonomic risk assessments for prevention of work-related musculoskeletal disorders.

Menekse Salar Barim earned her doctorate in Industrial and Systems Engineering with an emphasis in Occupational Injury Prevention in 2017 from Auburn University. In her new capacity as a Research Fellow at NIOSH, she works on projects related to ergonomic surveillance, the development of next generation ergonomic assessment tools and the effect of back assist exoskeletons in manual handling in the WRT sector.

Xuan Wang earned her doctorate in electrical and computer engineering at the University of Wisconsin-Madison. She is a data scientist at 3M, focusing on image and tabular data processing. She has a BS degree in School of Electronics and Information from Northwestern Polytechnic University (Xi’an, China). She has a MS degree in Electrical and Computer Engineering from the University of Wisconsin-Madison.

Marie Hayden earned her master’s in Industrial and Systems Engineering focusing on Occupational Safety in 2016 from Ohio University. She has been working at NIOSH as a research fellow since 2017 and she is reviewing OS&H intervention case studies of Robotic Equipment in Manufacturing.

Yu Hen Hu has broad research interests ranging from design and implementation of signal processing algorithms, computer-aided design and physical design of VLSI, pattern classification and machine learning algorithms, and image and signal processing. He is a professor in electrical and computer engineering at the University of Wisconsin-Madison. He has a BS degree from the National Taiwan University and a Ph.D. from the University of Southern California (Los Angeles, CA).

Robert G. Radwin is Duane H. and Dorothy M. Bluemke Professor in the College of Engineering at the University of Wisconsin-Madison, where he advances new methods for research and practice of human factors engineering and occupational ergonomics. He has a BS degree from New York University Polytechnic School of Engineering, and MS and PhD degrees from the University of Michigan (Ann Arbor, MI).

REFERENCES

- American Conference of Governmental Industrial Hygienists. (2018). TLV®/BEI® introduction. Retrieved from https://www.acgih.org/tlv-bei-guidelines/tlv-bei-introduction.

- Arjmand N, Amini M, Shirazi-Adl A, Plamondon A, & Parnianpour M. (2015). Revised NIOSH Lifting Equation may generate spine loads exceeding recommended limits. International Journal of Industrial Ergonomics, 47, 1–8.

- Barim MS, Lu ML, Feng S, Hughes G, Hayden M, & Werren D. (2019, November). Accuracy of An Algorithm Using Motion Data Of Five Wearable IMU Sensors For Estimating Lifting Duration And Lifting Risk Factors. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 63, No. 1, pp. 1105–1111). Los Angeles, CA: SAGE Publications.

- Battini D, Persona A, & Sgarbossa F. (2014). Innovative real-time system to integrate ergonomic evaluations into warehouse design and management. Computers & Industrial Engineering, 77, 1–10.

- Bernard BP, & Putz-Anderson V. (1997). Musculoskeletal disorders and workplace factors; a critical review of epidemiologic evidence for work-related musculoskeletal disorders of the neck, upper extremity, and low back. National Institute for Occupational Safety and Health.

- Bigos SJ, Spengler DM, Martin NA, Zeh J, Fisher L, Nachemson A, & Wang MH (1986). Back injuries in industry: a retrospective study. II. Injury factors. Spine, 11(3), 246–251.

- Breen PP, Nisar A, & ÓLaighin G. (2009, September). Evaluation of a single accelerometer based biofeedback system for real-time correction of neck posture in computer users. In 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (pp. 7269–7272). IEEE.

- Callaghan JP, Salewytsch AJ, & Andrews DM (2001). An evaluation of predictive methods for estimating cumulative spinal loading. Ergonomics, 44(9), 825–837.

- Chaffin DB, Andersson GB, & Martin BJ (2006). Occupational biomechanics. John Wiley & Sons.

- Chaffin DB (2008). Digital human modeling for workspace design. Reviews of Human Factors and Ergonomics, 4(1), 41–74.

- da Costa BR, & Vieira ER (2010). Risk factors for work-related musculoskeletal disorders: a systematic review of recent longitudinal studies. American journal of industrial medicine, 53(3), 285–323.

- Dahlqvist C, Hansson GÅ, & Forsman M. (2016). Validity of a small low-cost triaxial accelerometer with integrated logger for uncomplicated measurements of postures and movements of head, upper back and upper arms. Applied ergonomics, 55, 108–116.

- David GC (2005). Ergonomic methods for assessing exposure to risk factors for work-related musculoskeletal disorders. Occupational medicine, 55(3), 190–199.

- Ding Z, Li W, Ogunbona P, & Qin L. (2019). A real-time webcam-based method for assessing upper-body postures. Machine Vision and Applications, 1–18.

- Engkvist IL, Hjelm EW, Hagberg M, Menckel E, & Ekenvall L. (2000). Risk indicators for reported over-exertion back injuries among female nursing personnel.

- Eriksen W, Natvig B, & Bruusgaard D. (1999). Smoking, heavy physical work and low back pain: a four-year prospective study. Occupational Medicine, 49(3), 155–160.

- Ferguson SA, Marras WS, Allread WG, Knapik GG, Vandlen KA, Splittstoesser RE, & Yang G. (2011). Musculoskeletal disorder risk as a function of vehicle rotation angle during assembly tasks. Applied ergonomics, 42(5), 699–709.

- Freivalds A, Chaffin DB, Garg A, & Lee KS (1984). A dynamic biomechanical evaluation of lifting maximum acceptable loads. Journal of Biomechanics, 17(4), 251–262.

- Gordon CC, Blackwell CL, Bradtmiller B, Parham JL, Barrientos P, Paquette SP, ... & Mucher M. (2014). 2012 Anthropometric survey of US Army personnel: Methods and summary statistics (No. NATICK/TR-15/007). Army Natick Soldier Research Development And Engineering Center MA.

- Greene RL, Hu YH, Difranco N, Wang X, Lu ML, Bao S, Lin JH, & Radwin RG (2019). Predicting Sagittal Plane Lifting Postures From Image Bounding Box Dimensions. Human factors, 61(1), 64–77.

- Hignett S, & McAtamney L. (2000). Rapid entire body assessment (REBA). Applied ergonomics, 31(2), 201–205.

- Hoffman SG, Reed MP, & Chaffin DB (2007). Predicting force-exertion postures from task variables (No. 2007-01-2480). SAE Technical Paper.

- Holmström EB, Lindell J, & Moritz U. (1992). Low back and neck/shoulder pain in construction workers: occupational workload and psychosocial risk factors. Part 2: Relationship to neck and shoulder pain. Spine, 17(6), 672–677.

- Hoogendoorn WE, van Poppel MN, Bongers PM, Koes BW, & Bouter LM (1999). Physical load during work and leisure time as risk factors for back pain. Scandinavian journal of work, environment & health, 387–403.

- Katz JN (2006). Lumbar disc disorders and low-back pain: socioeconomic factors and consequences. JBJS, 88, 21–24.

- Kerr MS, Frank JW, Shannon HS, Norman RW, Wells RP, Neumann WP, ... & Ontario Universities Back Pain Study Group. (2001). Biomechanical and psychosocial risk factors for low back pain at work. American journal of public health, 91(7), 1069.

- Kuiper JI, Burdorf A, Verbeek JH, Frings-Dresen MH, van der Beek AJ, & Viikari-Juntura ER (1999). Epidemiologic evidence on manual materials handling as a risk factor for back disorders: a systematic review. International Journal of Industrial Ergonomics, 24(4), 389–404.

- Lavender SA, Andersson GB, Schipplein OD, & Fuentes HJ (2003). The effects of initial lifting height, load magnitude, and lifting speed on the peak dynamic L5/S1 moments. International Journal of Industrial Ergonomics, 31(1), 51–59.

- Lavender SA, Marras WS, Ferguson SA, Splittstoesser RE, & Yang G. (2012). Developing physical exposure-based back injury risk models applicable to manual handling jobs in distribution centers. Journal of occupational and environmental hygiene, 9(7), 450–459.

- Lavender SA, Nagavarapu S, & Allread WG (2017). An electromyographic and kinematic comparison between an extendable conveyor system and an articulating belt conveyor used for truck loading and unloading tasks. Applied ergonomics, 58, 398–404.

- Lavender SA, Oleske DM, Nicholson L, Andersson GB, & Hahn J. (1999). Comparison of five methods used to determine low back disorder risk in a manufacturing environment. Spine, 24(14), 1441.

- Lowe BD, Dempsey PG, & Jones EM (2019). Ergonomics assessment methods used by ergonomics professionals. Applied Ergonomics, 81, 102882.

- Lu ML, Feng S, Hughes G, Barim MS, Hayden M, & Werren D. (2019, November). Development of an algorithm for automatically assessing lifting risk factors using inertial measurement units. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 63, No. 1, pp. 1334–1338). Los Angeles, CA: SAGE Publications.

- Lu ML, Waters T, & Werren D. (2015). Development of human posture simulation method for assessing posture angles and spinal loads. Human Factors and Ergonomics in Manufacturing & Service Industries, 25(1), 123–136.

- Marras WS, Fathallah FA, Miller RJ, Davis SW, & Mirka GA (1992). Accuracy of a three-dimensional lumbar motion monitor for recording dynamic trunk motion characteristics. International Journal of Industrial Ergonomics, 9(1), 75–87.

- Marras WS, Fine LJ, Ferguson SA, & Waters TR (1999). The effectiveness of commonly used lifting assessment methods to identify industrial jobs associated with elevated risk of low-back disorders. Ergonomics, 42(1), 229–245.

- Marras WS, Lavender SA, Ferguson SA, Splittstoesser RE, & Yang G. (2010). Quantitative dynamic measures of physical exposure predict low back functional impairment. Spine, 35(8), 914–923.

- Marras WS, Lavender SA, Leurgans SE, Fathallah FA, Ferguson SA, Gary Allread W, & Rajulu SL (1995). Biomechanical risk factors for occupationally related low back disorders. Ergonomics, 38(2), 377–410.

- Marras WS, Lavender SA, Leurgans SE, Rajulu SL, Allread SWG, Fathallah FA, & Ferguson SA (1993). The Role of Dynamic Three-Dimensional Trunk Motion in Occupationally-Related. Spine, 18(5), 617–628.

- Marras WS, & Sommerich CM (1991). A three-dimensional motion model of loads on the lumbar spine: I. Model structure. Human factors, 33(2), 123–137.

- McGill SM, & Norman RW (1986). Partitioning of the L4-L5 dynamic moment into disc, ligamentous, and muscular components during lifting. Spine, 11(7), 666–678.

- Mehrizi R, Peng X, Metaxas DN, Xu X, Zhang S, & Li K. (2019). Predicting 3-D lower back joint load in lifting: A deep pose estimation approach. IEEE Transactions on Human-Machine Systems, 49(1), 85–94.

- Mehrizi R, Peng X, Tang Z, Xu X, Metaxas D, & Li K. (2018, May). Toward marker-free 3D pose estimation in lifting: A deep multi-view solution. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018) (pp. 485–491). IEEE.

- Myers AH, Baker SP, Li G, Smith GS, Wiker S, Liang KY, & Johnson JV (1999). Back injury in municipal workers: a case-control study. American journal of public health, 89(7), 1036–1041.

- Nakamoto H, Yamaji T, Yamamoto A, Ootaka H, Bessho Y, Kobayashi F, & Ono R. (2018). Wearable Lumbar-Motion Monitoring Device with Stretchable Strain Sensors. Journal of Sensors, 2018.

- Norasi H, Koenig J, & Mirka G. (2018, September). Effect of Load Weight and Starting Height on the Variability of Trunk Kinematics. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 62, No. 1, pp. 905–909). Los Angeles, CA: SAGE Publications.

- Patrizi A, Pennestrì E, & Valentini PP (2016). Comparison between low-cost marker-less and high-end marker-based motion capture systems for the computer-aided assessment of working ergonomics. Ergonomics, 59(1), 155–162.

- Peek-Asa C, McArthur DL, & Kraus JF (2004). Incidence of acute low-back injury among older workers in a cohort of material handlers. Journal of occupational and environmental hygiene, 1(8), 551–557.

- Seo J, Yin K, & Lee S. (2016). Automated postural ergonomic assessment using a computer vision-based posture classification. In Construction Research Congress 2016 (pp. 809–818).

- Snook SH (1989). The control of low back disability: the role of management. Manual material handling: Understanding and preventing back trauma.

- Spengler DM, Bigos SJ, Martin NA, Zeh J, Fisher L, & Nachemson A. (1986). Back injuries in industry: a retrospective study. I. Overview and cost analysis. Spine, 11(3), 241–245.

- Van Poppel MNM, Koes BW, Deville WLJM, Smid T, & Bouter LM (1998). Risk factors for back pain incidence in industry: a prospective study. Pain, 77(1), 81–86.

- Venables WN, & Ripley BD (2002). Modern Applied Statistics with S. Fourth Edition. Springer, New York. ISBN 0-387-95457-0.

- University of Michigan Center for Ergonomics. (2019). 3DSSPP Software. Retrieved from https://c4e.engin.umich.edu/toolsservices/3dsspp-software/.

- U.S. Bureau of Labor Statistics (2018), Back injuries prominent in work-related musculoskeletal disorder cases in 2016. Retrieved from https://www.bls.gov/opub/ted/2018/back-injuriesprominent-in-work-related-musculoskeletal-disorder-cases-in-2016.htm.

- Wang X, Hu YH, Lu ML, & Radwin RG (2019). The accuracy of a 2D video-based lifting monitor. Ergonomics, 62(8), 1043–1054.

- Waters TR, Putz-Anderson V, & Garg A. (1994). Applications manual for the revised NIOSH lifting equation.

- Waters TR, Putz-Anderson V, Garg A, & Fine LJ (1993). Revised NIOSH equation for the design and evaluation of manual lifting tasks. Ergonomics, 36(7), 749–776.

- Xiao B, Wu H, & Wei Y. (2018). Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (pp. 466–481).

- Yen TY, & Radwin RG (1995). A video-based system for acquiring biomechanical data synchronized with arbitrary events and activities. IEEE transactions on Biomedical Engineering, 42(9), 944–948.