1336

IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, VOL. 46, NO. 5, MAY 2008

Model-Based Satellite Image Fusion

Henrik Aanæs, Johannes R. Sveinsson, Senior Member, IEEE, Allan Aasbjerg Nielsen,

Thomas Bøvith, and Jón Atli Benediktsson, Fellow, IEEE

Abstract—A method is proposed for pixel-level satellite image

fusion derived directly from a model of the imaging sensor. By

design, the proposed method is spectrally consistent. It is argued

that the proposed method needs regularization, as is the case for

any method for this problem. A framework for pixel neighborhood

regularization is presented. This framework enables the formulation of the regularization in a way that corresponds well with

our prior assumptions of the image data. The proposed method

is validated and compared with other approaches on several data

sets. Lastly, the intensity-hue-saturation method is revisited in

order to gain additional insight of what implications the spectral

consistency has for an image fusion method.

Index Terms—Generative model, image fusion, satellite images,

sensor fusion, spectral consistency.

tion to solve the problem. Thus, in a sense, we have to “make

up” the missing pieces of information, which, in practice, implies the use of prior knowledge either implicitly or explicitly.

To address this problem, the approach taken in this paper is to

consider the generative models of the data, i.e., models of how

the images are formed. This, in turn, allows us to distinguish

between exactly what we do know and what we do not know

regarding the desired fused image. This has the advantage that

the proposed method uses the available information and we can

deliberately construct prior models dealing with what we do

not know.

A. Relation to Other Works

I. I NTRODUCTION

S

ATELLITES provide very valuable data about the Earth,

e.g., for environmental monitoring, weather forecasting,

map making, and military intelligence. Satellites are expensive

both to build and operate. This implies that we are somehow

obliged to do the best with the data we get from existing

satellites, e.g., by combining the output from different sensors

and to increase the knowledge we can infer. In this paper, we

deal with the merging of low spatial and high spectral resolution

satellite images with high spatial and low spectral ones with

the aim of creating high spatial and high spectral images. This

is also known as image fusion, which is a subset of sensor or

data fusion.

Image fusion can be done at several levels: pixel, feature,

object, and decision levels, depending on the intended use of the

fused image. In this paper, we are only concerned about pixellevel fusion, and when the terms “image fusion” or “fusion”

are used, pixel-level fusion is intended. In the current context,

fusion is the next step after preprocessing and the step before

further processing, such as segmentation and classification.

As argued hereafter, satellite image fusion is an ill-posed

inverse problem, implying that we do not have enough informa-

Manuscript received May 31, 2007; revised October 30, 2007. This work was

supported in part by the Research Fund of the University of Iceland.

H. Aanæs is with the Department of Informatics and Mathematical

Modelling, Technical University of Denmark, 2800 Lyngby, Denmark.

J. R. Sveinsson and J. A. Benediktsson are with the Department of Electrical

and Computer Engineering, University of Iceland, 101 Reykjavík, Iceland.

A. A. Nielsen is with the National Space Institute, Technical University of

Denmark, 2800 Lyngby, Denmark.

T. Bøvith is with the National Space Institute, Technical University of

Denmark, 2800 Lyngby, Denmark, and also with the Danish Meteorological

Institute, 2100 Copenhagen, Denmark.

Digital Object Identifier 10.1109/TGRS.2008.916475

During the past two decades, several fusion techniques have

been proposed. Most of these techniques are based on the compromise between the desired spatial enhancement and the spectral consistency. The intensity-hue-saturation (IHS) method

[1]–[4] has been widely used for red, green, and blue (RGB)

color-composite fusion. The IHS is probably the most popular

image fusion approach because of its low computational cost.

The Brovey [5] sharpening method is a simple technique, and

it can be performed on individual bands. Principal-componentanalysis [6] and wavelet-based fusion methods [7]–[10] have

also been proposed. All these methods fall in the category of

component-substitution methods. All of them can cause color

distortion if the spectral range of the intensity replacement

image is different from the spectral range covered by the bands

used in a color composite. That is to say, the fused images

obtained by these methods have high spatial quality, but they

suffer from spectral distortions. To achieve an image with high

fidelity to the original spectral information, the smoothing filterbased intensity modulation fusion technique was proposed by

Liu [8]. However, the images obtained by using that method are

blurred compared with other fusion techniques. It was observed

that this blurring was a direct result of inaccurate coregistration

between the up-scaled multispectral and captured panchromatic

images. A good introduction to satellite image fusion can be

found in [11].

In this paper, a novel multisensor image fusion technique is

presented. The method is model based, and the fused images

obtained spectrally are consistent by design. The problem of

satellite image fusion is an ill-posed problem, and therefore,

regularization is necessary for its solution. For this purpose,

the framework for pixel neighborhood regularization is presented in this paper. Using this framework, we can do the

regularization consciously and also formulate it in a way that

corresponds well with our prior assumptions on the image data.

0196-2892/$25.00 © 2008 IEEE

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�AANÆS et al.: MODEL-BASED SATELLITE IMAGE FUSION

B. Notation and Paper Outline

The scope of this paper is to estimate a high-resolution

multichannel image from both low-resolution multichannel and

high-resolution panchromatic images. The presented theory and

methodology are valid for an arbitrary number of channels

and an arbitrary ratio between the spatial resolution of the lowand high-resolution images. For ease of presentation and without loss of generality, it is assumed (if nothing else is stated)

that, in this paper, we work with three channels (red, green,

and blue) and the ratio between high and low spatial resolution

pixels is one to four by four, i.e., 1 : 16. The notation used in this

high

high

paper is as follows: We want to estimate (Rhigh

ij , Gij , Bij )

low

low

from a low-resolution RGB image (Rlow

i , Gi , Bi ) and a

high

high-resolution panchromatic image Pij . The indices are

such that the first one i enumerates the low-resolution pixels

and the second one j ∈ {1, . . . , 16} enumerates the associated

high-resolution pixels.

The outline of this paper is as follows. In Section II, it is

argued that the satellite image fusion is inherently ill posed. In

Section III, this is followed by the introduction of our sensor or

observation model with the constraints that we draw from it. An

initial solution applying these constraints is given in Section IV.

Section V presents additional ways of regularizing the solution

by neighborhood comparison. In Section VI, the IHS method

is set in the context of this paper, giving additional insight. An

experimental comparison of our proposed method and a few

selected other approaches from the literature are reported in

Section VII. This is followed by a conclusion of this paper and

an Appendix dealing with computational issues relating to the

proposed method.

II. I LL -P OSED P ROBLEM

As mentioned earlier, we argue that any satellite image fusion

method needs regularization. This is due to the image fusion

problem being ill posed or under constraint, which can be seen

by simple variable counting. That is, for each low-resolutionimage pixel i, we have three values from the low-resolution

low

low

pixel (Rlow

i , Gi , Bi ) and 16 panchromatic values, i.e.,

19(= 16 + 3) in all. On the other hand, we need to estimate

16 RGB values, i.e., 48(= 16 · 3), which give us a shortage

of 29(= 48 − 19) values. Because no other information is

available, we have to produce these 29 dimensions or values by

regularization, preferably by modeling our prior assumption of

the data. This regularization can either be explicit or implicit.

For example, in the IHS method, regularization is implicitly

done by assuming that the hue and saturation values of all highresolution pixels are equal to the low-resolution pixel. That is

not necessarily the right solution, and probably, it is not.

III. O BSERVATION M ODEL

The methods presented here take their outset in an “assumed”

observation model for the satellite image sensor, which is also

seen as a generative model of the data.

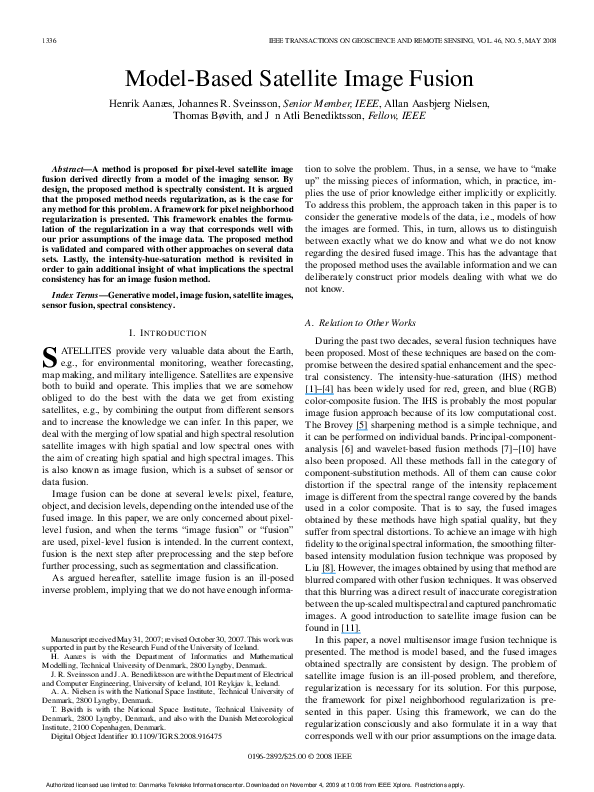

This observation model, which is based on the response of

a given pixel and a data channel, is given by the frequency

1337

composition and intensity of the incoming light L(x, ω), where

x and ω denote position and frequency, respectively, and the

spectral response function for that channel F (ω),1 cf., Fig. 1.

This response can be expressed as

� �

L(x, ω)F (ω) dω dx

(1)

A Ω

the frequency runs over a relevant spectral range Ω. The integral

(1) runs over the area A corresponding to the sensor area related

to the pixel.

A. Inner Product Space

The response in (1) is a convolution between the spectrum

of the incoming light L(x, ω) and the response function of the

channels F (ω). This induces an inner product, e.g.,2

�F red , F pan � =

�

F red (ω)F pan (ω) dω

(2)

Ω

corresponding to the L2 norm3 [13] which introduces a measure

of angle and distance. In using this, we are only interested in

the observable 4-D subspace spanned by the R, G, B, and P

channels. Therefore, as an example, a measure of how much

information about Ghigh is contained in Phigh is given by4

α(G, P ) = �

�F green , F pan �

�F green , F green ��F pan , F pan �

(3)

which is equal to the cosine of the angle between the two

spectra. Collecting these normalized inner products between all

channels gives

⎡

α(R, R) α(R, G)

⎢ α(G, R) α(G, G)

Σ=⎣

α(B, R) α(B, G)

α(P, R) α(P, G)

α(R, B)

α(G, B)

α(B, B)

α(P, B)

⎤

α(R, P )

α(G, P ) ⎥

⎦

α(B, P )

α(P, P )

(4)

which, in some sense, describes the information between the

different channels. In Section IV, this matrix will be interpreted

as a correlation matrix, for ease of computation, without us

claiming that the spectra are normally distributed.

B. Spectral Consistency

Assuming that the low- and high-resolution pixels are perfectly aligned, i.e., the areas associated with the different pixels

1 F (ω)

is usually supplied by the sensor manufacturer.

response functions are independent of position. Therefore, there is no

need to integrate over area.

3 This is an extension of the regular two-norm to an infinite dimensional

space. Later, we will just refer to this as the two-norm.

4 We assume that there is an individual linear stretch of each channel, such

that it best fits the dynamic range. Thus, we normalize this criterion to account

for this.

2 The

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�1338

IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, VOL. 46, NO. 5, MAY 2008

Fig. 2. Here, it is illustrated that, because the same light is supposedly hitting

the low and high spatial resolution sensors, the color of the low-resolution

pixel is equal to the average of the corresponding high-resolution pixels, as

expressed in (5).

Fig. 1. Spectral response of the IKONOS spectral bands in [12].

satisfy (see Fig. 2)

Ai =

Aij

and

j

∅=

Aij .

j

Another property derived from (1) is that of spectral consistency. This property is very important for satellite image fusion

because spectral distortion may result in unreliable interpretation for applications. By considering any channel, e.g., the blue,

spectral consistency can be derived as follows:

=

Blow

i

� �

L(x, ω)F (ω) dω dx

Ai Ω

16

=

� �

L(x, ω)F (ω) dω dx

j=1A Ω

ij

16

Bhigh

ij .

=

j=1

Therefore, the spectral consistency constraint states that5

⎡ high ⎤

⎡ low ⎤

Rij

16

Ri

1

⎢ high ⎥

⎣ Glow

⎦

=

⎣ Gij ⎦ .

i

16 j=1

Blow

i

Bhigh

ij

(5)

channel but highly different on, e.g., the red channel. Thus,

Phigh and (5) do not give enough information to fully determine

the individual high-resolution multispectral components, e.g.,

Rhigh .

Here, it is assumed that the “unknown” part6 of the signal

is small. Therefore, we regularize the solution by penalizing

it with the two-norm, because this is the norm induced by

the inner product. This implicitly corresponds to casting the

problem in a statistical setting, assuming that an “observation”

in (Rhigh , Ghigh , Bhigh , Phigh ) space is normally distributed

with covariance Σ (4). Thus, the conditional means method [14]

is used to solve the problem. Due to the spectral consistency,

high

high

we know that the mean of (Rhigh

ij , Gij , Bij ) is equal to

low

low

low

(Ri , Gi , Bi ). This gives the following solution to the

fusion problem:

⎡�

⎤

⎡ low ⎤ ⎡

⎤

Rhigh

Ri

α(R, P ) �

ij

�

⎢ high ⎥

high

µ

⎦

⎣

⎦

P

+

α(G,

P

)

−

P

⎣ Gij ⎦ = Fij = ⎣ Glow

i

ij

i

Blow

α(B, P )

i

Bhigh

ij

(6)

where Piµ denotes the mean of the Phigh

w.r.t. the lowij

resolution pixel i. For ease of notation, this solution is denoted

by Fij . The results of this proposed method can be seen in

Figs. 5–7 (where this solution is referred to as no smoothing).

A couple of comments should be made w.r.t. the solution in

(6). First, we do not claim that the observations are normally

distributed, just that a reasonable regularization corresponds

to it. Second, if there is no “overlap” between the spectral

responses of the panchromatic and one of the low-resolution

channels, then α(·, P ) will be zero. This indicates that there

is no information between the two channels and, in effect,

omits the last term in (6) for the channel in question. Lastly,

it is seen that the solution is spectrally consistent, cf., (5), by

construction, because

IV. I NITIAL S OLUTION

Deriving an image fusion method from the aforementioned

observation model is hindered by (Rhigh , Ghigh , Bhigh ), not

being fully observable from the data, as argued in Section II.

Therefore, regularization is required. The same is also illustrated by the spectral response functions of the sensor, cf.,

Fig. 1. Here, it is seen that there are several compositions of

incoming light, giving the same response on the panchromatic

5 1/16

is due to the appropriate normalization of the channels.

Piµ =

1

16

16

16

Phigh

⇒

ij

j=1

j=1

�

�

Phigh

− Piµ = 0.

ij

V. S MOOTHING P RIORS

Although the aforementioned initial solution is spectrally

consistent, it still leaves something to be desired, particularly

because there is a pronounced blocking effect originating from

6 This

“unknown” part is orthogonal to the panchromatic channel.

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�AANÆS et al.: MODEL-BASED SATELLITE IMAGE FUSION

1339

the low-resolution pixels, as seen in Figs. 5–7. As argued in

Section VI, this is likely to be the best that can be done on a

pixelwise basis. In order to improve this, we propose the augmentation of the method with a spatial prior. This spatial prior

should express our expectation that the fused image should be

homogeneous or piecewise homogeneous, which corresponds

to our general expectation of images.

Specifically, by applying a Markov random-field setting [15],

[16] to the problem, the (conditional) smoothing of the image

can be expressed as penalizing deviations between neighboring

pixels. That is, define the errors

⎡ high ⎤ ⎡

⎤

Rij

Rhigh

⎢ high ⎥ ⎣ khigh ⎦

,

k ∈ Nij

(7)

ǫijk = ⎣ Gij ⎦ − Gk

high

high

B

Bij

k

where Nij is the four neighborhood of pixel ij. We then

regularize by adding the term

ρ(ǫijk )wijk

(8)

i,j k∈Nij

to (6), where the wijk denotes appropriate weights, and ρ is the

error function

� −1 ǫijk .

ρ(ǫijk ) = ǫTijk Σ

(9)

� is the RGB part of Σ, cf., (4). This regularizer is seen

Here, Σ

as a wijk -dependent smoothing of the image. The reason that

� is included in (8) is that ρ(ǫijk ) should measure differences

Σ

in incoming light and not be biased by the correlation of the

measured channels.

The spatial prior is merged with the solution in (6) by

combining (6) and (8), i.e., define the deviation from Fij by

⎞

⎛⎡ high ⎤

⎤

⎞T

Rij

Rhigh

ij

⎟

⎥

⎟ � −1 ⎜⎢ high ⎥

⎜⎢

Dij = ⎝⎣ Ghigh

⎝⎣ Gij ⎦ − Fij ⎠ (10)

⎦ − Fij ⎠ Σ

ij

Bhigh

Bhigh

ij

ij

⎛⎡

then, the combined objective function becomes

⎛

min

Rhigh ,Ghigh ,Bhigh

ij

⎝Dij + γ

k∈Nij

⎞

ρ(ǫijk )wijk ⎠

(11)

where γ is a tuning parameter encapsulating the weight of the

smoothness term that is relative to the data term. Note that this

is a least squares (LS) problem in Rhigh , Ghigh , Bhigh and (11)

is under the condition that the solution is spectrally consistent.

This latter characteristic is dealt with in Section V-A. In the

experiments relating to this paper, γ was selected in the range

of one to five. The value of γ, however, depends highly on the

intrinsic scaling of the two combined terms. Different range for

the γ may be appropriate in other problems.

The problem is now reduced to setting the appropriate

weights wijk to best capture the prior assumption of the fused

image. Usually, these weights are set simultaneously with solving (11), because the smoothed solution is the best clue to where

the edges in the image should be, cf., e.g., [15] and [16]. In

general, the optimization problem in (11) is thus “big” and

nonlinear. This is not the case here because we have the highresolution panchromatic image, which is a very good source of

information on where edges should be. Here, the weights wijk

are thus based on the panchromatic image, such that the fused

image shall have homogeneous regions where the panchromatic

image has them. To illustrate this, we have tried two general

approaches, cf., Sections V-B and C. The results with uniform

smoothing (all wijk = 1) are also presented for comparison.

The results are given in Section VII. By general approaches,

we mean that the two investigated approaches are good typical examples of what might be used; thus, they are not the

results of a long investigation of what is the best weighting

scheme.

A. Ensuring Spectral Consistency

Extending (6)–(11) implies that the LS solution is not spectrally consistent, cf., (5), and has to be added as a specific

condition to the solution. This is done by noting that (5) defines

a 15-D hyperplane in 16 dimensions on which the solution

should be located. We can thus reparameterize the solution

space such that the only possible values are on this hyperplane.

This is accomplished by introducing

high

high

Rhigh

ij , Gij , Bij ,

j ∈ {1, . . . , 15}

which map to the pixel of the fused image and variables in (1)

as follows:

⎡ high ⎤ ⎡ high ⎤

Rij

Rij

⎢ high ⎥ ⎢ high ⎥

j ∈ {1, . . . , 15}

⎣ Gij ⎦ = ⎣ Gij ⎦ ,

high

Bij

Bhigh

ij

⎡ high ⎤

⎤

⎡ low ⎤

Rij

Rhigh

15

Ri

i,16

⎢ high ⎥

⎢ high ⎥

low ⎦

⎣

−

⎣ Gij ⎦ .

⎣ Gi,16 ⎦ = 16 Gi

low

high

high

j=1

B

i

Bij

Bi,16

⎡

(12)

An important property of this mapping is that it is affine.

By plugging (12) into (11), a new objective function

high

high

in Rhigh

ij , Gij , Bij , which is spectrally consistent by design,

and an LS system are obtained. This, in turn, has nice implications for computing a solution (see Appendix). It should be

noted that solving (11) without this parameterization (or another expression of the spectral consistency constraint) will, in

general, yield nonspectrally consistent solutions. It is noted that

this parameterization is intrinsic in the predictor for satellite

image fusion presented in [17], where it is also noted that it

has the desirable characteristic of spectral consistency.

B. Edge-Induced Weights

We are, in a sense, trying to transfer edges from the panchromatic image to the fused RGB image. Thus, the first of the

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�1340

IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, VOL. 46, NO. 5, MAY 2008

Fig. 3. Sample from satellite image data set. (Top left) Panchromatic image. (Top center) Extracted edges via the Canny edge detector. (Top right) Weight set via

(13) with white being zero. Here, a λ of 0.05 is used. (Bottom left) Low-resolution multispectral image. (Bottom center) Resulting fused image with weight set

according to the Canny weights—see top center. (Bottom right) Resulting fused image with weight set via (13)—see top right.

general approaches we use for setting the weights in (11) are

as follows.

1) Extract edges in the panchromatic image, see Fig. 3, top

center.

2) If there is an edge between pixel ij and its kth neighbor,

set the weight to zero, or else, set the appropriate weight

to one.

The extracted edges are obtained via the Canny edge detector [18]. There are, however, a multitude of methods for

edge extraction, many of which could be used successfully,

e.g., via the total variation method [19], as used in [20].

The results using the Canny edges are shown in Fig. 3 and

Figs. 5–7.

C. Gradient-Induced Weights

Setting the weights via edge extraction gives results that are

somewhat blocky to sharp edges, as can be seen in Fig. 3 and

Figs. 5–7. This is partly due to the weights being either zero

or one. Therefore, the algorithm either smooths fully the one

between two pixels or does not smooth at all. Thus, it seems

natural to let the weights have a continuous transition from

zero to one and, by that, introducing the concept of “degree

of edginess.” That is the basis for our second general weighting

scheme.

The simplest form of the second weighting scheme would

be letting the weights be proportional to the inverse gradient

magnitude of the panchromatic image, i.e.,

wijk

1

�

∝�

�

high �

�∇Pij �

which however did not turn out too successful on our data.

Instead, we adapted a method from nonlinear image diffusion

Fig. 4.

Function in (13) as a function of the gradient magnitude �∇Phigh

ij �

with λ = 0.5, which is denoted by the dotted line.

[21], which is proposed in [22]. Here, the amount of smoothing

is a nonlinear function of the image gradient magnitude—here,

the panchromatic image. The function in question is

⎛

⎞

⎜ −3.31488 ⎟

⎜

⎟

wijk = 1 − exp ⎜ �

�4 ⎟

⎠

⎝ ∇Phigh

ij

(13)

λ

where λ is a steering parameter, indicating how large the

gradient should be in order to be an edge, see Fig. 4. The results

of this scheme can be seen in Fig. 3 and Figs. 5–7. A note

on (13): This function, including the −3.31488, has proven

successful in the nonlinear diffusion literature; for more on

this matter, see [21].

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�AANÆS et al.: MODEL-BASED SATELLITE IMAGE FUSION

1341

Fig. 5. Results of the image fusion strategies. A sample of the resulting

images of the IKONOS data set. (Top left) Input panchromatic image. (Top

right) No smoothing. (Row 2 left) Uniform weights. (Row 2 right) Edgeinduced weighting. (Row 3 left) Gradient-induced weighting. (Row 3 right)

IHS method. (Bottom left) Mean-corrected IHS method. (Bottom right) Brovey

method. The images have been stretched for better visualization.

Fig. 6. Results of the image fusion strategies. A sample of the resulting

images of the QuickBird data set. (Top left) Input panchromatic image. (Top

right) No smoothing. (Row 2 left) Uniform weights. (Row 2 right) Edgeinduced weighting. (Row 3 left) Gradient-induced weighting. (Row 3 right)

IHS method. (Bottom left) Mean-corrected IHS method. (Bottom right) Brovey

method. The images have been stretched for better visualization.

VI. N OTE ON THE IHS M ETHOD

Probably, the most popular method for image fusion is the

IHS method, where the fusion is performed in IHS space by

letting the hue and saturation of a fused pixel be equal to

the corresponding low-resolution pixel and the intensity Iij be

equal to the panchromatic channel, i.e.,

Iij =

Rhigh

ij

+

Ghigh

ij

3

+

Bhigh

ij

= Phigh

ij .

(14)

Combining (5) and (14), it is seen that

1

16

16

Phigh

=

ij

j=1

=

1

16

16

j=1

Rhigh

+ Ghigh

+ Bhigh

ij

ij

ij

3

Rlow

+ Glow

+ Blow

i

i

i

= Ii .

3

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

(15)

�1342

IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, VOL. 46, NO. 5, MAY 2008

That leads us to propose a modification of the IHS method,7

which consists of appropriately correcting the mean of the

� high

panchromatic channel. The correction is done by using P

ij

high

high

�

instead of Pij , where P

is

defined

as

follows:

ij

� high = Phigh

P

ij

ij

1

16

Ii

�16

j=1

Phigh

ij

= Iij .

(16)

� high instead of Phigh in the IHS method

It is seen that using P

gives a spectrally consistent result.

The results of using this mean-corrected IHS method are seen

in Figs. 5–7. It is seen that this method, although spectrally

consistent, yields a blocky result that is similar to that of our

initial solution presented in Section IV. This seems to indicate

that in order to get a spectrally consistent result with a simple

per pixel method, you need to endure a blocking effect. An

explanation is that the discrepancy between the RGB response

functions and the panchromatic response function, as seen in

Fig. 1, gives a varying mean offset for each block.

This mean-corrected IHS method, in some sense, resembles

the Brovey method or transform, cf., [5] and [23], which is thus

included for experimental comparison. The Brovey method is

given by

⎤ ⎡

⎡�

⎤

Rhigh

Rlow

ij

Phigh

⎢ high ⎥ ⎣ ilow ⎦

ij

.

⎣ Gij ⎦ = Gi

low + Glow + Blow

R

i

i

i

Blow

i

Bhigh

ij

(17)

It should also be noted that other methods have been proposed

for addressing the issue of spectral consistency with the IHS

method, cf., e.g., [24].

VII. E XPERIMENTAL C OMPARISONS AND D ISCUSSION

To experimentally investigate and compare the proposed

methods, we have applied them to three different data sets from

three different types of satellites, each depicting a different

type of landscape. The proposed methods were compared with

the IHS and Brovey methods for comparison. The investigated

methods are summarized in Table I. The three data sets are as

follows.

Fig. 7. Results of the image fusion strategies. A sample of the resulting

images of the Meteosat data set. (Top left) Input panchromatic image. (Top

right) No smoothing. (Row 2 left) Uniform weights. (Row 2 right) Edgeinduced weighting. (Row 3 left) Gradient-induced weighting. (Row 3 right)

IHS method. (Bottom left) Mean-corrected IHS method. (Bottom right) Brovey

method. The images have been stretched for better visualization.

This implies that if the IHS method should be spectrally

consistent, the mean of the panchromatic channel over a

low-resolution pixel should equal the corresponding lowresolution intensity. This will generally not be the case, as

shown in Fig. 1, where the spectral response function of the

panchromatic channel is far from the sum of the red, green, and

blue response functions. This fact is also validated experimentally, as reported in Table II.

1) IKONOS. An image of an urban area is taken by the

IKONOS earth imaging satellite [12]. The low-resolution

image consists of four bands R, G, B, and near infrared

(NIR) (in this paper, we only use R, G, and B), and

the ratio between the panchromatic and low-resolution

images is 16 or four by four.

2) QuickBird. An image of a forest landscape is bisected

by roads, and it contained a few large buildings. A city

emerges toward the right side. This image is taken by

the QuickBird satellite [25]. The low-resolution image

consists of four bands R, G, B, and NIR (in this paper, we use only R, G, and B), and the ratio between

7 Partly for gaining insight into what spectral consistency implies for satellite

image fusion methods.

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�AANÆS et al.: MODEL-BASED SATELLITE IMAGE FUSION

1343

TABLE I

FOLLOWING METHODS WERE APPLIED IN THE EXPERIMENTS

TABLE II

SPECTRAL CONSISTENCY OF THE IHS AND BROVEY METHODS,

WHICH IS MEASURED AS THE CROSS CORRELATION BETWEEN THE

LOW-RESOLUTION RGB AND APPROPRIATELY DOWN-SAMPLED

FUSED IMAGES. ALL OTHER APPLIED METHODS, CF., TABLE I,

ARE SPECTRALLY CONSISTENT BY DESIGN, WHICH HAS

BEEN CONFIRMED EXPERIMENTALLY

the panchromatic and low-resolution images is 16 or

four by four.

3) Meteosat. A weather satellite image depicting Europe

from the Meteosat satellite [26]. We use the R, G, and

B bands of low resolution, and the ratio between the

panchromatic and low-resolution images is nine or three

by three.

Using these different data sets gives us an idea of how a given

method will work on data sets with different contents. The

reason that we did not use the fourth band in the IKONOS and

QuickBird cases was for visualization purposes. We have, however, implemented and applied our fusion methods successfully

to all four bands.

In evaluating the different methods, we were faced with

the problem that we did not have any ground truth data, i.e.,

a high-resolution color image, to compare with. Due to this

shortcoming, we have chosen two different strategies. First, we

have applied the methods to the original data sets, computed

the spectral consistency, see Table II, and visually evaluated

the results, see Figs. 5–7. Second, we have down-sampled the

original data to generate a new data set with ground truth,

albeit with different intrinsic scale. The difference between this

ground truth and the fused results was compared with different

metrics, see Table III.

In the experiments, the γ in (11) was set to five and one

for the original and down-sampled data,8 respectively. In the

Canny edge detector, which was used for generating the edgeinduced weights, the smoothing σ was set to one and 0.05 for

the original and down-sampled data, respectively. As for the

gradient-induced weights, the parameters σ and λ were selected

8 This

was used only to generate the values in Table III.

TABLE III

RESULT OF COMPARING THE FUSED RESULT WITH THE GROUND TRUTH

WITH THE DOWN-SAMPLED DATA. HERE, BOLD DENOTES THE BEST

RESULT FOR THE DATA SET; IF THIS IS NOT THE ONE DERIVED IN (11),

THE B EST OF T HESE I S D ENOTED BY I TALICS . S EE T ABLE I

FOR A D ESCRIPTION OF THE A PPLIED M ETHODS

as (0.5, 0.05) and (0.5,0.007) for the original and down-sampled

data, respectively.9

We have chosen five different metrics in evaluating the downsampled experiments, as reported in Table III. Many of the popular metrics are further developments of each other, which, in

part, explain the strong correlation among the best performing

9 The images are smoothed slightly with a Gaussian kernel before the gradient

is calculated.

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�1344

IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, VOL. 46, NO. 5, MAY 2008

methods. None or all of these metrics, however, encompass the

ground truth of what a good fusion is; thus, visual inspection

also needs to be taken into account.10 The used metrics, which

are among the popular in the literature, are as follows (the

boldface acronym indicate its entry in Table III).

1) MSE is the mean square error on all bands. It takes on

values between zero and one with zero being the best

value.

2) CC is the mean cross correlation between bands. It takes

on values between zero and one with one being the best

value.

3) WB is the mean Wang and Bovik measure between

bands, cf., [27]. It takes on values between −1 and one

with one being the best value.

4) SSIM is a quality metric which measures the degradation of structural information between two images. This

metric combines three independent components of the

images: Luminance, contrast, and structure, and the value

one is only achieved if the compared images are identical.

The lowest value is zero if the images show no similarity

at all.

5) Q4 is an extension of the Wang and Bovik measure to

multiple dimensions, giving a combined metric, cf., [28].

It takes on values between zero and one with one being

the best value.

In Table III, most of the metrics (MSE, CC, WB, and Q4)

give significant numerical differences for the individual methods which were applied on the different data sets. However, that

is not the case for the SSIM metric, which gives similar results

for all the methods that were applied on the different data sets.

As a general observation, it is seen that the proposed methods

work well. Specifically, the gradient-induced weight method

should be noted, in that, it gives good visual results. Also, it

performs well in the down-sample experiment. In comparison,

the mean-corrected IHS method performs excellently in the

down-sampled experiment but gives a too blocky result with

the visual comparison. The Brovey and IHS methods give a

very pleasing visual result but perform poorly with respect to

the down-sampled experiment and are clearly not spectrally

consistent.

Comparing the edge-induced and gradient-induced weighting schemes visually, it is seen that the edge-induced scheme

gives an oversegmented image and the gradient-induced

scheme visually gives the best results, although the latter image

is a bit blurry. As mentioned in Section V, we have only

presented two general approaches, and the search for the best

weighting scheme is still ongoing. In this light, a mixture of the

edge-induced and gradient-induced schemes seems well worth

investigating.

The fact that the mean-corrected IHS method gives blocky

images and the gradient-induced weight method gives a somewhat smoothed image is well in line with our current experience. This experience states that with the proposed method,

10 Note, for example, that the mean-corrected IHS method performs best

when the metrics are used for the comparison in our experiments, cf. Table III,

but its visual quality leaves a lot to be desired. This motivates further investigation into image metrics, which is beyond the scope of this paper.

if spectral consistency is sought, either a blocking effect is

endured, as discussed in Section VI, or a slightly blurred

image is obtained because of the removal of the blockiness by

smoothing. This can be explained by the panchromatic channel

containing information that is not present in the RGB channels,

cf., Fig. 1.

As a note for future work, neither our initial solution (also

termed as the “no weight” solution) nor the mean-corrected IHS

method uses a spatial prior, but both are spectrally consistent.

Comparing the two, it is seen that the mean-corrected IHS

method clearly outperforms the former in terms of the quality

measures. This indicates that Fij in (10) could possibly be

replaced with the result of the mean-corrected IHS method. On

the other hand, the IHS method is less related to the underlying

imaging physics. Also, the IHS method, in some sense, works in

the one-norm, see (14), instead of the induced two-norm. Using

different norms also seems worth investigating, although these

are also less tied to the imaging physics.

VIII. C ONCLUSION

A framework has been presented for pixel-level fusion of

satellite images, which is derived by considering the underlying

imaging physics. This, among other characteristics, ensures

spectral consistency by design. We have also argued that the

satellite image fusion problem is inherently ill posed, and as

such, our framework explicitly states how regularization should

be performed to address that. Several schemes for regularization have been presented in the proposed framework. These

schemes have been experimentally tested on several data sets.

For all these data sets, the methods gave good performance.

The schemes also held their ground when compared with other

popular methods for satellite image fusion. Also, in this paper,

the IHS method was revisited in order to gain additional insight

into the implications of spectral consistency for image fusion

methods. The results obtained with the proposed framework are

promising, and further research on the framework is ongoing.

A PPENDIX

N UMERICAL S OLUTION

It is noted that (11) is an LS system, a property which is

retained after the reparameterization in (12) because it is just

an affine mapping. In principal, the solution can be obtained by

solving this LS system via the usual methods, e.g., the normal

equations in [30]. However, this poses the problem that the

dimension of the solution will often be very large. It can be

computed as the number of high-resolution pixels times the

number of bands. Therefore, LS methods become numerically

prohibitive. As an example, the IKONOS data set requires

1.873.920 variables with just three bands. Thus, something

else needs to be considered, e.g., exploiting the sparsity of the

problem. It is noted that the LS property in (11) ensures that a

nice and convex error surface is obtained with only one welldefined optimum.11

11 Since the problem is regularized properly, the optimum is unique. Otherwise, the optimum would be a linear subspace.

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�AANÆS et al.: MODEL-BASED SATELLITE IMAGE FUSION

Our solution is solving (11) iteratively for all the highresolution pixels corresponding to a low-resolution pixel. Thus,

we keep all other pixels constant in relation to the neighborhood

term. The blocks were updated in a checkerboard fashion [31],

[32]. The process was run until convergence, i.e., it was stopped

when very small changes were observed in the solution.

ACKNOWLEDGMENT

The authors would like to thank A. Vésteinsson for spuring

this work via his masters thesis [29] and the reviewers whose

comments greatly improved this paper.

R EFERENCES

[1] T. Carper, T. Lillesand, and R. Kiefer, “The use of intensity-huesaturation transformations for merging SPOT panchromatic and multispectral image data,” Photogramm. Eng. Remote Sens., vol. 56, no. 4,

pp. 459–467, 1990.

[2] P. Chavez and J. Bowell, “Comparison of the spectral information content of Landsat Thematic Mapper and SPOT for three different sites in

the Phoenix, Arizona region,” Photogramm. Eng. Remote Sens., vol. 54,

no. 12, pp. 1699–1708, 1988.

[3] K. Edwards and P. Davis, “The use of intensity-hue-saturation transformation for producing color shaded-relief images,” Photogramm. Eng.

Remote Sens., vol. 60, no. 11, pp. 1369–1373, 1994.

[4] T. Tu, P. Huang, C. Hung, and C. Chang, “A fast intensity-hue-saturation

fusion technique with spectral adjustment for IKONOS imagery,” IEEE

Geosci. Remote Sens. Lett., vol. 1, no. 4, pp. 309–312, Oct. 2004.

[5] A. Gillespie, A. Kahle, and R. Walker, “Color enhancement of highly

correlated images. II. Channel ratio and ‘chromaticity’ transformation techniques,” Remote Sens. Environ., vol. 22, no. 3, pp. 343–365,

Aug. 1987.

[6] P. Chavez, S. Sides, and J. Anderson, “Comparison of three different

methods to merge multiresolution and multispectral data: Landsat TM and

SPOT panchromatic,” Photogramm. Eng. Remote Sens., vol. 57, no. 3,

pp. 295–303, 1991.

[7] B. Aiazzi, L. Alparone, S. Baronti, and A. Garzelli, “Context-driven fusion of high spatial and spectral resolution images based on oversampled

multiresolution analysis,” IEEE Trans. Geosci. Remote Sens., vol. 40,

no. 10, pp. 2300–2312, Oct. 2002.

[8] J. Liu, “Smoothing filter-based intensity modulation: A spectral preserve

image fusion technique for improving spatial details,” Int. J. Remote Sens.,

vol. 21, no. 18, pp. 3461–3472, Dec. 2000.

[9] J. Nunez, X. Otazu, O. Fors, A. Prades, V. Pala, and R. Arbiol,

“Multiresolution-based image fusion with additive wavelet decomposition,” IEEE Trans. Geosci. Remote Sens., vol. 37, no. 3, pp. 1204–1211,

May 1999.

[10] T. Ranchin and L. Wald, “Fusion of high spatial and spectral resolution

images: The ARSIS concept and its implementation,” Photogramm. Eng.

Remote Sens., vol. 66, no. 1, pp. 49–61, 2000.

[11] M. J. Canty, Image Analysis, Classification and Change Detection in

Remote Sensing: With Algorithms for ENVI/IDL. Boca Raton, FL: CRC

Press, 2006.

[12] GeoEye, Geoeye Imaging Products Ikonos. [Online]. Available:

http://www.geoeye.com/products/imagery/ikonos/

[13] M. Pedersen, Functional Analysis in Applied Mathematics and Engineering. Boca Raton, FL: CRC Press, 1999.

[14] T. Anderson, An Introduction to Multivariate Statistical Analysis, 2nd ed.

New York: Wiley, 1984.

[15] S. Li, Markov Random Field Modeling in Image Analysis, 2nd ed.

New York: Springer-Verlag, 2007.

[16] G. Winkler, Image Analysis, Random Fields and Markov Chain Monte

Carlo Methods: A Mathematical Introduction (Applications of Mathematics). New York: Springer-Verlag, 2003.

[17] R. Nishii, S. Kusanobu, and S. Tanaka, “Enhancement of low spatial

resolution image based on high resolution-bands,” IEEE Trans. Geosci.

Remote Sens., vol. 34, no. 5, pp. 1151–1158, Sep. 1996.

[18] J. Canny, “A computational approach to edge detection,” IEEE Trans.

Pattern Anal. Mach. Intell., vol. PAMI-8, no. 6, pp. 679–698, Nov. 1986.

[19] L. Rudin, S. Osher, and E. Fatemi, “Nonlinear total variation based

noise removal algorithms,” Physica D, vol. 60, no. 1–4, pp. 259–268,

Nov. 1992.

1345

[20] J. Sveinsson, H. Aanæs, and J. Benediktsson, “Smoothing of fused spectral consistent satellite images with TV-based edge detection,” in Proc.

IGARSS, 2007, pp. 318–321.

[21] J. Weickert, Anisotropic Diffusion in Image Processing, ser. ECMI Series.

Stuttgart, Germany: Teubner-Verlag, 1998.

[22] P. Perona and J. Malik, “Scale-space and edge detection using anisotropic

diffusion,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 12, no. 7,

pp. 629–639, Jul. 1990.

[23] J. Zhou, D. Civco, and J. A. Silander, “A wavelet transform method to

merge Landsat TM and SPOT panchromatic data,” Int. J. Remote Sens.,

vol. 19, no. 4, pp. 743–757, Mar. 1998.

[24] M. Choi, “A new intensity-hue-saturation fusion approach to image fusion

with a tradeoff parameter,” IEEE Trans. Geosci. Remote Sens., vol. 44,

no. 6, pp. 1672–1682, Jun. 2006.

[25] Quickbird Imagery Products. Product Guide, DigitalGlobe, Inc.,

Longmont, CO, 2006. Tech. Rep. 4.2.7. [Online]. Available:

http://www.digitalglobe.com/downloads/QuickBird Imagery ProductsProduct Guide.pdf

[26] J. Schmetz, P. Pili, S. Tjemkes, D. Just, J. Kerkmann, S. Rota, and

A. Ratier, “An introduction to Meteosat second generation (MSG),” Bull.

Amer. Meteorol. Soc., vol. 83, no. 7, pp. 977–992, Jul. 2002.

[27] Z. Wang and A. C. Bovik, “A universal image quality index,” IEEE Signal

Process. Lett., vol. 9, no. 3, pp. 81–84, Mar. 2002.

[28] L. Alparone, S. Baronti, A. Garzelli, and F. Nencini, “A global quality measurement of pan-sharpened multispectral imagery,” IEEE Trans.

Geosci. Remote Sens. Lett., vol. 1, no. 4, pp. 313–317, Oct. 2004.

[29] A. Vesteinsson, J. Sveinsson, J. Benediktsson, and H. Aanæs, “Spectral

consistent satellite image fusion: Using a high resolution panchromatic

and low resolution multi-spectral images,” in Proc. IGARSS, 2005, vol. 4,

pp. 2834–2837.

[30] Å. Björck, Numerical Methods for Least Squares Problems.

Philadelphia, PA: SIAM, 1996.

[31] J. Besag, “On the statistical analysis of dirty pictures,” J. R. Stat. Soc. B,

vol. 48, no. 3, pp. 259–302, 1986.

[32] S. Geman and D. Geman, “Stochastic relaxation, Gibbs distributions, and

Bayesian restoration of images,” IEEE Trans. Pattern Anal. Mach. Intell.,

vol. PAMI-6, no. 6, pp. 721–741, Nov. 1984.

Henrik Aanæs received the M.Sc. degree in engineering and the Ph.D. degree from the Technical

University of Denmark, Lyngby, in 2000 and 2003,

respectively.

He is currently an Assistant Professor in computer

vision and image analysis with the Department of

Informatics and Mathematical Modelling, Technical

University of Denmark. His main research interests

are estimation of 3-D shape to form 2-D images,

object recognition and tracking, statistical inference

from images, data fusion, and robust statistics.

Johannes R. Sveinsson (S’86–M’90–SM’02) received the B.S. degree from the University of

Iceland, Reykjavík, and the M.S. and Ph.D. degrees

from Queen’s University, Kingston, ON, Canada, all

in electrical engineering.

He is currently an Associate Professor with the

Department of Electrical and Computer Engineering, University of Iceland, where he was with the

Laboratory of Information Technology and Signal

Processing from 1981 to 1982 and, from November

1991 to 1998, the Engineering Research Institute and

the Department of Electrical and Computer Engineering as a Senior Member

of the research staff and a Lecturer, respectively. He was a Visiting Research

Student with the Imperial College of Science and Technology, London, U.K.,

from 1985 to 1986. At Queen’s University, he held teaching and research

assistantships. His current research interests are in systems and signal theory.

Dr. Sveinsson received the Queen’s Graduate Awards from Queen’s

University.

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�1346

IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, VOL. 46, NO. 5, MAY 2008

Allan Aasbjerg Nielsen received the M.Sc. and

Ph.D. degrees from the Technical University of

Denmark, Lyngby, in 1978 and 1994, respectively.

He is currently an Associate Professor with the

National Space Institute, Technical University of

Denmark, where he worked on energy conservation

in housing with the Thermal Insulation Laboratory

from 1978 to 1985, with the section for image

analysis in the Department of IMM from 1985 to

2001, as a Part-Time Acting Professor from 1995

to 2001, and with the section for geoinformatics

in the Department of IMM from 2001 to 2006. He was with the Danish

Defense Research Establishment from 1977 to 1978. Since 1985, he has been

working on several national and international projects on the development,

implementation, and application of statistical methods and remote sensing in

mineral exploration, mapping, geology, agriculture, environmental monitoring, oceanography, geodesy, and security funded by industries, the European

Union, the Danish International Development Agency, and the Danish National

Research Councils. He is the Reviewer of several international journals and

conferences.

Thomas Bøvith received the M.S. degree in engineering from the Technical University of Denmark,

Lyngby, in 2002, where he is currently working

toward the Ph.D. degree in remote sensing in the

National Space Institute, Technical University of

Denmark, which is in collaboration with the Danish

Meteorological Institute, Copenhagen.

His research interests include remote sensing of

precipitation, data fusion, image classification, and

scientific visualization.

Jón Atli Benediktsson (S’84–M’90–SM’99–F’04)

received the Cand.Sci. degree in electrical engineering from the University of Iceland, Reykjavík,

in 1984 and the M.S.E.E. and Ph.D. degrees from

Purdue University, West Lafayette, IN, in 1987 and

1990, respectively.

He is currently the Director of Academic Development and Innovation and a Professor with the Department of Electrical and Computer Engineering, University of Iceland. He has held visiting positions with

the Department of Information and Communication

Technology, University of Trento, Trento, Italy (2002–present); School of Computing and Information Systems, Kingston University, Kingston upon Thames,

U.K. (1999–2004); the Joint Research Center of the European Commission,

Ispra, Italy (1998); Technical University of Denmark, Lyngby (1998); and the

School of Electrical and Computer Engineering, Purdue University (1995). He

was a Fellow with the Australian Defense Force Academy, Canberra, A.C.T., in

August 1997. From 1999 to 2004, he was the Chairman of the energy company

Metan Ltd. His research interests are in remote sensing, pattern recognition,

neural networks, image processing, and signal processing, and he has published

extensively in those fields.

Dr. Benediktsson is the Editor of the IEEE TRANSACTIONS ON

GEOSCIENCE AND REMOTE SENSING (TGARS) and Associate Editor of the

IEEE GEOSCIENCE AND REMOTE SENSING LETTERS. He was an Associate

Editor of TGARS from 1999 to 2002. He coedited (with Prof. D. A. Landgrebe)

a special issue on data fusion of TGARS (May 1999). In 2002, he was appointed

as Vice President of Technical Activities in the Administrative Committee

of the IEEE Geoscience and Remote Sensing Society (GRSS) and (with

P. Gamba and G. G. Wilkinson) a special issue on urban remote sensing from

satellite (October 2003). From 1996 to 1999, he was the Chairman of the GRSS

Technical Committee on Data Fusion and was elected to the Administrative

Committee of the GRSS for the term 2000 to 2002. In 2002, he was appointed as

Vice President of Technical Activities of GRSS. He was the founding Chairman

of the IEEE Iceland Section and served as its Chairman from 2000 to 2003. He

was the Chairman of the Quality Assurance Committee, University of Iceland

(2006–present), the Chairman of the University’s Science and Research Committee (1999–2005), a member of Iceland’s Science and Technology Council

(2003–2006), and a member of the Nordic Research Policy Council (2004). He

was a member of the North Atlantic Treaty Organization Advisory Panel of the

Physical and Engineering Science and Technology Subprogram (2002–2003).

He received the Stevan J. Kristof Award from Purdue University in 1991 as an

outstanding graduate student in remote sensing. In 1997, he was the recipient of

the Icelandic Research Council’s Outstanding Young Researcher Award, and in

2000, he was granted the IEEE Third Millennium Medal. In 2007, he received

the Outstanding Service Award from the IEEE Geoscience and Remote Sensing

Society. He is a member of Societas Scinetiarum Islandica and Tau Beta Pi.

Authorized licensed use limited to: Danmarks Tekniske Informationscenter. Downloaded on November 4, 2009 at 10:06 from IEEE Xplore. Restrictions apply.

�

Johannes Sveinsson

Johannes Sveinsson