Tutorial 9.15a - Independence, Variance, Normality and Linearity

14 Jan 2013

Overview

Up until now (in the proceeding tutorials and workshops), the focus has been on models that adhere to specific assumptions about the underlying populations (and data). Indeed, both before and immediately after fitting these models, I have stressed the importance of evaluating and validating the proposed and fitted models to ensure reliability of the models.

It is now worth us revisiting those fundamental assumptions and exploring the options that area available when the populations (data) do not conform. Let's explore a simple linear regression model to see how each of the assumptions relate to the model.

The above simple statistical model models the linear relationship of $y_i$ against $x_i$. The residuals ($\varepsilon$) are assumed to be normally distributed with a mean of zero and a constant (yet unknown) variance ($\sigma$, homogeneity of variance). The residuals (and thus observations) are also assumed to all be independent.

Homogeneity of variance and independence are encapsulated within the single symbol for variance ($\sigma^2$). Indeed in assuming equal variances and independence, we are actually making an assumption about the covariance structure of the populations (and thus residuals). Specifically, we assume that all populations are equally varied and thus can be represented well by a single variance term (all diagonal values are the same, $\sigma$) and the covariances between each population is zero (off diagonals).

In simple regression, each observation (data point) represents a single observation drawn (sampled) from an entire population of possible observations. The above covariance structure thus assumes that the covariance between each population (observation) is zero - that is, each observation is completely independent of each other observation.

Whilst it is mathematically convenient when data conform to these conditions (normality, homogeneity of variance, independence and linearity), data often violate one or more of these assumptions. In this tutorial, I want to discuss the causes and options for dealing with non-compliance to each of these conditions.

Dealing with heterogeneity

To reiterate, the validity and reliability of linear models are very much dependent on variance homogeneity. In particular, variances that increase (or decrease) with a change in expected values are substantial violations.

Whilst non-normality can also be a source of heterogeneity and therefore normalizing can address both issues, heterogeneity can also be independent of normality. Similarly for many response types unequal variances might be expected. For example, the variance of count data (number of individuals per quadrat etc) tend to increase with increasing mean. When the expected number of individuals counted per quadrat is low (1, 2, 4,...), the variation in these counts is low. However, when the expected counts are high (100, 150, 250, ...), the expected variance of these counts is high.

Consequently, for many such situations it is arguably better to model the data against an error distribution better suited to the data (for example Poisson data is typically more suitable for count data than normal distribution). Generalized linear models (GLM) (that accommodate alternative residual distributions - such as Poisson, Binomial, Gamma etc) can be useful for more appropriate modelling of of both the distribution and variance of a model.

However, for Gaussian (normal) models in which there is evidence of heterogeneity of variance, yet no evidence of non-normality, it is also possible to specifically model in an alternative variance structure. For example, we can elect to allow variance to increase proportionally to a covariate.

To assist us in the following demonstration, we will generate another data set - one that has heteroscedasticity (unequal variance) by design. Rather than draw each residual (and thus observation) from a normal distribution with a constant standard deviation), we will draw the residuals from normal distributions whose variance is proportional to the X predictor.

set.seed(15) n <- 16 a <- 40 #intercept b <- 1.5 #slope x <- 1:n #values of the year covariate sigma <- 1.5*x eps <- rnorm(n, mean = 0, sd = sigma) #residuals y <- a + b * x + eps #response variable #OR y <- (model.matrix(~x) %*% c(a,b))+eps data1 <- data.frame(y=round(y,1), x) #dataset head(data1) #print out the first six rows of the data set

y x 1 41.9 1 2 48.5 2 3 43.0 3 4 51.4 4 5 51.2 5 6 37.7 6

#scatterplot of y against x library(car) scatterplot(y~x, data1)

#regular simple linear regression data1.lm <- lm(y~x, data1) #plot of standardized residuals plot(rstandard(data1.lm)~fitted(data1.lm))

#plot of standardized residuals against the predictor plot(rstandard(data1.lm)~x)

- The above scatterplot suggests that variance may increase with increasing X.

- The residual plot (using standardized residuals) suggests that mean and variance could be related - there is more than a hint of a wedge-shaped pattern

- Importantly, the plot of standardized residuals against the predictor shows the same pattern as the residual plot implying that heterogeneity is likely to be due a relationship between variance and X. That is, an increase in X is associated with an increase in variance.

In response to this, we could incorporate an alternative variance structure. The simple model we fit earlier assumed that the expected values were all drawn from normal distributions with the same level of variance ($\sigma^2$). $$ \mathbf{V} = cov = \underbrace{ \begin{pmatrix} \sigma^2&0&0&0&0&0&0&0&0&0&0&0&0&0&0\\ 0&\sigma^2&0&0&0&0&0&0&0&0&0&0&0&0&0\\ 0&0&\sigma^2&0&0&0&0&0&0&0&0&0&0&0&0\\ 0&0&0&\sigma^2&0&0&0&0&0&0&0&0&0&0&0\\ 0&0&0&0&\sigma^2&0&0&0&0&0&0&0&0&0&0\\ 0&0&0&0&0&\sigma^2&0&0&0&0&0&0&0&0&0\\ 0&0&0&0&0&0&\sigma^2&0&0&0&0&0&0&0&0\\ 0&0&0&0&0&0&0&\sigma^2&0&0&0&0&0&0&0\\ 0&0&0&0&0&0&0&0&\sigma^2&0&0&0&0&0&0\\ 0&0&0&0&0&0&0&0&0&\sigma^2&0&0&0&0&0\\ 0&0&0&0&0&0&0&0&0&0&\sigma^2&0&0&0&0\\ 0&0&0&0&0&0&0&0&0&0&0&\sigma^2&0&0&0\\ 0&0&0&0&0&0&0&0&0&0&0&0&\sigma^2&0&0\\ 0&0&0&0&0&0&0&0&0&0&0&0&0&\sigma^2&0\\ 0&0&0&0&0&0&0&0&0&0&0&0&0&0&\sigma^2\\ \end{pmatrix}}_\text{Variance-covariance matrix} $$ Which is often summarized as: $$ \mathbf{V} = \sigma^2\times \underbrace{ \begin{pmatrix} 1&0&\cdots&0\\ 0&1&\cdots&\vdots\\ \vdots&\cdots&1&\vdots\\ 0&\cdots&\cdots&1\\ \end{pmatrix}}_\text{Identity matrix} = \underbrace{ \begin{pmatrix} \sigma^2&0&\cdots&0\\ 0&\sigma^2&\cdots&\vdots\\ \vdots&\cdots&\sigma^2&\vdots\\ 0&\cdots&\cdots&\sigma^2\\ \end{pmatrix}}_\text{Variance-covariance matrix} $$ However, rather than assume that each observation is drawn from a normal distribution with the same amount of variance, we could alternatively assume that the variance is proportional to the level of the covariate. For mathematical reasons, it actually makes more sense that variance be inversely proportional to the square-root of the covariate values (we want more extreme observations to have less influence). $$ \mathbf{V} = \sigma^2\times X\times \underbrace{ \begin{pmatrix} 1&0&\cdots&0\\ 0&1&\cdots&\vdots\\ \vdots&\cdots&1&\vdots\\ 0&\cdots&\cdots&1\\ \end{pmatrix}}_\text{Identity matrix} = \underbrace{ \begin{pmatrix} \sigma^2\times \frac{1}{\sqrt{X_1}}&0&\cdots&0\\ 0&\sigma^2\times \frac{1}{\sqrt{X_2}}&\cdots&\vdots\\ \vdots&\cdots&\sigma^2\times \frac{1}{\sqrt{X_i}}&\vdots\\ 0&\cdots&\cdots&\sigma^2\times \frac{1}{\sqrt{X_n}}\\ \end{pmatrix}}_\text{Variance-covariance matrix} $$

Hence, what we are really doing is multiplying $\sigma^2$ by a matrix of weights ($\omega$): $$ \mathbf{V} = \sigma^2\times \omega, \hspace{1cm} \text{where } \omega = \underbrace{ \begin{pmatrix} \frac{1}{\sqrt{X_1}}&0&\cdots&0\\ 0&\frac{1}{\sqrt{X_2}}&\cdots&\vdots\\ \vdots&\cdots&\frac{1}{\sqrt{X_i}}&\vdots\\ 0&\cdots&\cdots&\frac{1}{\sqrt{X_n}}\\ \end{pmatrix}}_\text{Weights matrix} $$

Incorporating different variance structures into a linear model

In R, alternative variance structures are incorporated by specifying model weights. So we can re-write out the model as:

$$ \begin{align*} y_{i} &= \beta_0 + \beta_1 \times x_{i} + \varepsilon_i \hspace{1cm} &\epsilon_i\sim\mathcal{N}(0, \sigma^2) \\ y_{i} &= \beta_0 + \beta_1 \times x_{i} + \varepsilon_i \hspace{1cm} &\epsilon_i\sim\mathcal{N}(0, \sigma^2\times\omega) \\ \end{align*} $$

We will start by refitting the linear model incorporating weights that indicate that the variance is fixed to be proportional to X. Since the values of x are 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15, the a fixed variance structure would be: $$ \mathbf{V} = cov = \sigma^2 \times \underbrace{ \begin{pmatrix} \frac{1}{\sqrt{1}}&0&0&0&0&0&0&0&0&0&0&0&0&0&0\\ 0&\frac{1}{\sqrt{2}}&0&0&0&0&0&0&0&0&0&0&0&0&0\\ 0&0&\frac{1}{\sqrt{3}}&0&0&0&0&0&0&0&0&0&0&0&0\\ 0&0&0&\frac{1}{\sqrt{4}}&0&0&0&0&0&0&0&0&0&0&0\\ 0&0&0&0&\frac{1}{\sqrt{5}}&0&0&0&0&0&0&0&0&0&0\\ 0&0&0&0&0&\frac{1}{\sqrt{6}}&0&0&0&0&0&0&0&0&0\\ 0&0&0&0&0&0&\frac{1}{\sqrt{7}}&0&0&0&0&0&0&0&0\\ 0&0&0&0&0&0&0&\frac{1}{\sqrt{8}}&0&0&0&0&0&0&0\\ 0&0&0&0&0&0&0&0&\frac{1}{\sqrt{9}}&0&0&0&0&0&0\\ 0&0&0&0&0&0&0&0&0&\frac{1}{\sqrt{10}}&0&0&0&0&0\\ 0&0&0&0&0&0&0&0&0&0&\frac{1}{\sqrt{11}}&0&0&0&0\\ 0&0&0&0&0&0&0&0&0&0&0&\frac{1}{\sqrt{12}}&0&0&0\\ 0&0&0&0&0&0&0&0&0&0&0&0&\frac{1}{\sqrt{13}}&0&0\\ 0&0&0&0&0&0&0&0&0&0&0&0&0&\frac{1}{\sqrt{14}}&0\\ 0&0&0&0&0&0&0&0&0&0&0&0&0&0&\frac{1}{\sqrt{15}}\\ \end{pmatrix}}_\text{Weights matrix} $$

Hence the weights are:

#calculate the weights (wts <- 1/sqrt(data1$x))

[1] 1.0000 0.7071 0.5774 0.5000 0.4472 0.4082 [7] 0.3780 0.3536 0.3333 0.3162 0.3015 0.2887 [13] 0.2774 0.2673 0.2582 0.2500

However, to incorporate this this properly into a linear model, we need to swap from the simple lm() (linear models via ordinary least squares) function to a linear modelling engine based on generalized least squares.

Generalized least squares (GLS) is a routine that uses ordinary least squares (OLS) to obtain estimates of the fixed effects parameters and then uses these estimates to generate maximum likelihood (ML) estimates of the variance parameters which in turn are used to update estimates of the fixed effects parameters. Unlike OLS, which assumes equal variance and zero correlation, GLS allows us to offer a variance-covariance structure for the model fit and is thus suitable for dealing with heteroscadacity and dependency issues.

Maximum likelihood (ML, that maximizes the likelihood of the data given the parameters) estimates of random effects parameters (including residual variance) are biased when sample sizes are small (although it is not clear how small is 'small'). Essentially the ML estimates are the sum of squares of residuals divided by the sample size. This is anti-conservative (small) as it does not account for the need to estimate fixed parameters (which uses some degrees of freedom).

An alternative to ML is restricted or residual maximum likelihood (REML). REML maximizes the likelihood of the residuals (rather than data) and therefore takes into account the number of estimated fixed parameters (equivalent to dividing residual sums of squares by the sample size minus the number of fixed effects parameters). Whilst REML yields unbiased random effects parameter estimates, REML models differing in fixed effects cannot be compared via log-likelihood ratio tests or information criteria (such as AIC). For relatively simple regression models, the gls() function in the nlme package is sufficient.

library(nlme) summary(gls(y~x, data1))

Generalized least squares fit by REML

Model: y ~ x

Data: data1

AIC BIC logLik

127.6 129.6 -60.82

Coefficients:

Value Std.Error t-value p-value

(Intercept) 40.33 7.189 5.610 0.0001

x 1.57 0.744 2.113 0.0531

Correlation:

(Intr)

x -0.879

Standardized residuals:

Min Q1 Med Q3 Max

-2.00006 -0.29320 -0.02283 0.35358 2.29100

Residual standard error: 13.71

Degrees of freedom: 16 total; 14 residual

data1.gls <- gls(y~x, data=data1, weights=varFixed(~x))

Now we will have a look at the weights that were applied (these are stored in the fitted model). Fixed weights are just the inverse of the square-root of the covariate (x).

#the model weights used are stored in the model attr(data1.gls$model$varStruct, "weights")

[1] 1.0000 0.7071 0.5774 0.5000 0.4472 0.4082 [7] 0.3780 0.3536 0.3333 0.3162 0.3015 0.2887 [13] 0.2774 0.2673 0.2582 0.2500

#the model weights are the inverse of the variances 1/attr(data1.gls$model$varStruct, "weights")

[1] 1.000 1.414 1.732 2.000 2.236 2.449 2.646 [8] 2.828 3.000 3.162 3.317 3.464 3.606 3.742 [15] 3.873 4.000

#or we could express these in standard deviation units (1/attr(data1.gls$model$varStruct, "weights"))^2

[1] 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 [16] 16

Fitting a linear model (earlier) that assumed homogeneity of variance yielded clear wedge shaped standardized residuals. Hence we should once again explore the standardized residuals to evaluate the fit of the generalized least squares model.

plot(resid(data1.gls,"normalized")~fitted(data1.gls))

plot(resid(data1.gls,"normalized")~data1$x)

At this point we should compare the fit of the above model to that of a model that assumes variance homogeneity. AIC would seem an appropriate metric to use for such a comparison.

AIC(data1.lm, data1.gls)

df AIC data1.lm 3 133.0 data1.gls 3 121.1

121.0828 vs 133.0489),

it is arguably a more appropriate.

It is also possible to compare the two models via ANOVA. This is effectively testing the hypothesis: $$H_0: \sigma_1^2 = \sigma_2^2 = \sigma_3^2 = ... = \sigma^2$$ Since $\sigma^2$ is bounded at the lower end by 0 (values cannot be lower than zero), such a hypothesis test is said to be testing at the boundary and the p-values are likely to be conservative. Many recommend halving the p-values to compensate.

ANOVA can only compare like models. Thus in order to compare a model with two alternative variance functions, they need to be fitted with the same algorithms. In this case, it means that we need to fit the simple model again, this time with the gls function.

data1.gls2 <- gls(y~x, data=data1) anova(data1.gls,data1.gls2, test=TRUE)

Model df AIC BIC logLik data1.gls 1 3 121.1 123.0 -57.54 data1.gls2 2 3 127.6 129.6 -60.82

To see what impact we had on the modelling outcome, we can contrast the estimates and standard errors of both models.

summary(data1.lm)

Call:

lm(formula = y ~ x, data = data1)

Residuals:

Min 1Q Median 3Q Max

-27.420 -4.020 -0.313 4.847 31.409

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 40.330 7.189 5.61 6.4e-05

x 1.571 0.744 2.11 0.053

(Intercept) ***

x .

---

Signif. codes:

0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 13.7 on 14 degrees of freedom

Multiple R-squared: 0.242, Adjusted R-squared: 0.188

F-statistic: 4.46 on 1 and 14 DF, p-value: 0.0531

|

summary(data1.gls) Generalized least squares fit by REML

Model: y ~ x

Data: data1

AIC BIC logLik

121.1 123 -57.54

Variance function:

Structure: fixed weights

Formula: ~x

Coefficients:

Value Std.Error t-value p-value

(Intercept) 41.50 3.332 12.455 0.0000

x 1.43 0.525 2.727 0.0164

Correlation:

(Intr)

x -0.746

Standardized residuals:

Min Q1 Med Q3 Max

-1.74684 -0.42653 -0.05467 0.56196 2.04503

Residual standard error: 4.079

Degrees of freedom: 16 total; 14 residual

|

Conclusion: the estimate of slope in the model incorporating the fixed variance structure is slightly lower than that of the model that assumes equal variance. Importantly, the estimated parameter standard errors of the former model are substantially lower. In this particular case, the more appropriate variance structure also lead to a change in conclusion. That is, the null hypothesis of no relationship between y and x would be rejected based on the GLS model, yet not based on the LM model.

Heteroscadacity in ANOVA

For regression models that include a categorical variable (e.g. ANOVA), heterogeneity manifests as vastly different variances for different levels (treatment groups) of the categorical variable. Recall, that this is diagnosed from the relative size of boxplots. Whilst, the degree of group variability may not be related to the means of the groups, having wildly different variances does lead to an increase in standard errors and thus a lowering of power.

In such cases, we would like to be able to indicate that the variances should be estimated separately for each group. That is the variance term is multiplied by a different number for each group. The appropriate matrix is referred to as an Identity matrix.

Again, to assist in the explanation some fabricated ANOVA data - data that has heteroscadasticity by design - will be useful.

set.seed(1) ngroups <- 5 #number of populations nsample <- 10 #number of reps in each pop.means <- c(40, 45, 55, 40, 30) #population mean length sigma <- rep(c(6,4,2,0.5,1),each=nsample) #residual standard deviation n <- ngroups * nsample #total sample size eps <- rnorm(n, 0, sigma) #residuals x <- gl(ngroups, nsample, n, lab=LETTERS[1:5]) #factor means <- rep(pop.means, rep(nsample, ngroups)) X <- model.matrix(~x-1) #create a design matrix y <- as.numeric(X %*% pop.means+eps) data1 <- data.frame(y,x) boxplot(y~x, data1)

plot(lm(y~x, data1), which=3)

It is clear that there is gross heteroscedasticity. The residuals are obviously more spread in some groups than others yet there is no real pattern with means (the residual plot does not show an obvious wedge). Note, for assessing homogeneity of variance, it is best to use the standardized residuals.

It turns out that if we switch over to maximum (log) likelihood estimation methods, we can model in a within-group heteroscedasticity structure rather than just assume one very narrow form of variance structure.

Lets take a step back and reflect on our simple ANOVA (regression) model that has five groups each with 10 observations: $$y = \mu + \alpha_i + \varepsilon, \quad \varepsilon \sim \mathcal{N}(0,\sigma^2)$$ This is shorthand notation to indicate that the response variable is being modelled against a specific linear predictor and that the residuals follow a normal distribution with a certain variance (that is the same for each group).

Rather than assume that the variance of each group is the same, we could relax this a little so as to permit different levels of variance per group: $$\varepsilon \sim \mathcal{N}(0,\sigma_i^2)$$

To achieve this, we actually multiply the variance matrix by a weighting matrix, where the weights associated with each group are determined by the inverse of the ratio of each group to the first (reference) group: $$\varepsilon \sim \mathcal{N}(0,\sigma_i^2\times \omega)$$

So returning to our five groups of 10 observations example, the weights would be determined as:

data1.sd <- with(data1, tapply(y,x,sd)) 1/(data1.sd[1]/data1.sd)

A B C D E 1.00000 0.91343 0.40807 0.08632 0.12720

In order to incorporate these weights, we need to shift over to a different linear modelling function (gls within the nlme package). This function fits analogous models to lm(), yet allows us to describe the within-group heteroscedasticity and correlation structure.

library(nlme) #Fit the standard linear model (assuming homogeneity of variances) data1.gls <- gls(y~x, data=data1, method="REML") #Fit a linear model with different relative variance weights for each group. data1.gls1 <- gls(y~x, data=data1,weights=varIdent(form=~1|x), method="REML")

To determine whether allowing for separate variances per group is worth the extra degrees of freedom it costs, we can either:

- compare the fit of the two models (with and without separate variances)

- investigate the following hypothesis (that the variances of each groups are equal): $$\text{H}_0: \sigma_1^2 = \sigma_2^2 = \sigma_3^2 = \sigma_4^2 = \sigma_5^2 = \sigma^2$$

AIC(data1.gls,data1.gls1)

df AIC data1.gls 6 249.5 data1.gls1 10 199.2

anova(data1.gls,data1.gls1, test=TRUE)

Model df AIC BIC logLik Test L.Ratio p-value data1.gls 1 6 249.5 260.3 -118.8 data1.gls1 2 10 199.2 217.3 -89.6 1 vs 2 58.29 <.0001

Both approaches offer the same conclusion: that the model allowing separate variances per group is substantially better. We can further confirm this, by exploring the standardized residuals.

plot(data1.gls1)

At this point, it might also be instruction to compare the two models (equal and separate variances) side by side:

summary(data1.gls) Generalized least squares fit by REML

Model: y ~ x

Data: data1

AIC BIC logLik

249.5 260.3 -118.7

Coefficients:

Value Std.Error t-value p-value

(Intercept) 40.79 0.9424 43.29 0.0000

xB 5.20 1.3328 3.90 0.0003

xC 13.94 1.3328 10.46 0.0000

xD -0.73 1.3328 -0.55 0.5851

xE -10.66 1.3328 -8.00 0.0000

Correlation:

(Intr) xB xC xD

xB -0.707

xC -0.707 0.500

xD -0.707 0.500 0.500

xE -0.707 0.500 0.500 0.500

Standardized residuals:

Min Q1 Med Q3 Max

-3.30654 -0.24626 0.06468 0.39047 2.94559

Residual standard error: 2.98

Degrees of freedom: 50 total; 45 residual

plot(data1.gls)  |

summary(data1.gls1) Generalized least squares fit by REML

Model: y ~ x

Data: data1

AIC BIC logLik

199.2 217.3 -89.6

Variance function:

Structure: Different standard deviations per stratum

Formula: ~1 | x

Parameter estimates:

A B C D E

1.00000 0.91342 0.40807 0.08632 0.12720

Coefficients:

Value Std.Error t-value p-value

(Intercept) 40.79 1.481 27.543 0.0000

xB 5.20 2.006 2.593 0.0128

xC 13.94 1.600 8.714 0.0000

xD -0.73 1.487 -0.493 0.6244

xE -10.66 1.493 -7.139 0.0000

Correlation:

(Intr) xB xC xD

xB -0.738

xC -0.926 0.684

xD -0.996 0.736 0.922

xE -0.992 0.732 0.918 0.988

Standardized residuals:

Min Q1 Med Q3 Max

-2.3034 -0.6179 0.1065 0.7597 1.8743

Residual standard error: 4.684

Degrees of freedom: 50 total; 45 residual

plot(data1.gls1)  |

- Notice that allowing for separate variances per group has had no influence on the parameter estimates, only the standard deviation (and thus standard error) of these estimates. Note that each parameter has a unique standard error.

- The variance structure is provided for the separate variances - these are the weights.

A more thorough explanation

A more thorough explanation follows

However, a more accurate way of writing the model would be to use matrix or vector notation:

$$\mathbf{Y}_{i} \sim \mathcal{N}(\mathbf{X}_i\times \boldsymbol{\beta}, \mathbf{V}_{i})$$

In other words, the values of Y are expected to be drawn from a normal distribution with an expected value equal to the linear predictor

($\mathbf{X}_i\times \boldsymbol{\beta}$) and a standard deviation of V.

where $i$ denotes each of the groups and thus $V_i$ represents the variance matrix for associated with the observations of each of the groups.

Let us focus more on the $\varepsilon \sim \mathcal{N}(0,\sigma^2)$.

Actually, the $\sigma^2$ should really be

- normally distributed

- independent

- equally varied - note that in the above representation there is only a single $\sigma^2$ (variance) term. This implies that there is a single variance that represents the variance for each observation (and thus within each group).

The bold symbols represent:

- $\mathbf{X}$ - the model matrices for the data in each group. The first column of each matrix is a vector or 1's and then there are additional columns for each of the effects ($\alpha_2$, $\alpha_3$, ...).

- $\boldsymbol{\beta}_i$ - the regression parameter associated with $i^{th}$ group.

- $\mathbf{V}_i$ - the variance matrix for the observations within each of the $i$ groups

In the above variance-covariance matrix, all of the off-diagonals are 0. This reflects the independence assumption. That is

Other variance structures

It is also possible (though less common), that variance is non-linearly proportional to covariate - either exponentially or via a power function. To accommodate these situations there are also a couple of additional inbuilt variance functions available. Each of the available variance functions available are described in the following table:

| Variance function | Variance structure | Description |

|---|---|---|

| varFixed(~x) | $V = \sigma^2\times x$ | variance proportional to x the covariate |

| varExp(form=~x) | $V = \sigma^2\times e^{2\delta\times x}$ | variance proportional to the exponential of x multiplied by a constant |

| varPower(form=~x) | $V = \sigma^2\times |x|^{2\delta}$ | variance proportional to the absolute value of x raised to a constant power |

| varConstPower(form=~x) | $V = \sigma^2\times (\delta_1 + |x|^{\delta_2})^2$ | a variant on the power function |

| varIdent(form=~|A) | $V = \sigma_j^2\times I$ | when A is a factor, variance is allowed to be different for each level ($j$) of the factor |

| varComb(form=~x|A) | $V = \sigma_j^2\times x$ | combination of two of the above |

Finally, heterogeneity can also be caused by other underlying differences in the sampled populations that are otherwise not controlled for in the sampling design. For example, perhaps some other characteristic of the sampling units also changes with increasing X. In such situations, incorporating this other characteristic into the model as a covariate or using it as a model offset could help standardize for this effect and thus even up the variances.

Dealing with non-independence

In order for linear models to generate unbiased estimates of parameters and their standard errors, the residuals (and thus observations) are assumed to be independent. In the proceeding tutorials and workshops, the independence of data was considered to be a design rather than analysis issue. That is, a sound experimental design was supposed to ensure that all observations were independent.

So what can lead to observations not being independent?

- One obvious cause of non-independence is when the response of one sampling unit influences the response of other sampling units. For example, the response of one individual bird to a visual stimulus might be to emit an alarm call and fly away. This may promote a similar response in other nearby birds. Hence, each of the birds are not responding independently - their response is biased towards the response of other individuals. In this case, we should only record the response of one individual in the group. It is this type of example that we have previously indicated should be avoided by good experimental design.

- Less obvious, yet potentially no less important is the lack of independence caused by other non-measured confounding influences that vary spatially or temporally.

For example, the research might be interested in the relationship between the abundance of a particular species and annual rainfall. To explore this relationship,

the workers go out and measure the abundance of the species in a large number of locations distributed throughout a large region (over which the annual rainfall varies).

However, the abundance of this species is likely to be influenced by a large number of other environmental conditions that vary over the landscape. Importantly, the closer together the sampling locations are in space, the more similar the underlying environmental conditions are likely to be. Consequently, the observed species abundances are likely to exhibit some degree of spatial (distance) dependency - sites closer together will generally be more similar is species abundances.

Similarly, when responses are recorded over time, observations collected closer together in time are likely to be more similar than those further apart temporally. Thus, if for example we were again interested in the relationship between the abundance of a species and rainfall and so measured the abundance of the species at a single location over time (since rainfall patterns change over time), the data is likely to a temporal dependency structure.

When a response is potentially impacted on by underlying spatial or temporal contagious processes, we say that the data are auto-correlated - the closer the observations are in space or time, the more highly correlated they are.

- To reduce sources of unexplained variability without the need to increase replication enormously, experiments can be designed such that observations are repeatedly collected from the same sampling units (e.g. same individuals, same sites etc) - so called nested and blocking/repeated measures designs. Grouping together sampling units in space and/or time also introduces non-independence. For example, the response (e.g. blood pressure) of a particular individual to a treatment (blood pressure drug) at one time is unlikely to be completely independent of its response to this or some other treatment (e.g. placebo) at some other time.

Recall that the linear model assumes that the off-diagonals of the variance-covariance matrix are all 0. $$ \mathbf{V} = \underbrace{ \begin{pmatrix} \sigma^2&0&\cdots&0\\ 0&\sigma^2&\cdots&\vdots\\ \vdots&\cdots&\sigma^2&\vdots\\ 0&\cdots&\cdots&\sigma^2\\ \end{pmatrix}}_\text{Variance-covariance matrix} $$ When residuals are correlated to one another, the off-diagonals are not equal to zero. One simple case is when the covariances are all a constant, non-zero value. This is referred as compound symmetry. The degree of correlation between residuals ($\rho$) is equal to $p=\theta/(\theta + \sigma^2)$. Compliance to this relatively simple correlation structure is also known as sphericity due spherical nature of the variance-covariance matrix. $$ cor(\varepsilon)= \underbrace{ \begin{pmatrix} 1&\rho&\cdots&\rho\\ \rho&1&\cdots&\vdots\\ \dots&\cdots&1&\vdots\\ \rho&\cdots&\cdots&1\\ \end{pmatrix}}_\text{Correlation matrix} $$ $$ \mathbf{V} = \underbrace{ \begin{pmatrix} \theta + \sigma^2&\theta&\cdots&\theta\\ \theta&\theta + \sigma^2&\cdots&\vdots\\ \vdots&\cdots&\theta + \sigma^2&\vdots\\ \theta&\cdots&\cdots&\theta + \sigma^2\\ \end{pmatrix}}_\text{Variance-covariance matrix} $$

There are various other correlation structures and statistical techniques that can be adopted to accommodate different dependency structures in data. The following sections demonstrate the most common of these.

Temporal autocorrelation

As a motivating example for exploring temporal auto-correlation, I will fabricate a data set that features a response (y) and a continuous predictor (x) collected over a 20 year period.

set.seed(1) n <- 100 # Create a sequence of dates between Jan 01 1999 and Jan 01 2010 Date <- seq(from=as.Date('1990-01-01'), to=as.Date('2010-01-01'),l=n) #100 equal dates between 1990 and 2010 # load the lubridate library library(lubridate) # Convert the dates into decimal formats (better in analyses) year <- decimal_date(Date) x <- sample(1:n) # continuous predictor # negative relationship between y and year y <- rev(c(-57+0.03*year[1:(n/4)], 944-0.47*year[(1+n/4):(n/2)],413-0.2*year[(1+n/2):(n*0.75)], 441-0.21*year[(1+n*0.75):(n)])) library(nlme) # Create a first order auto-regressive structure for the temporal data aa <- corAR1(0.8) bb <- Initialize(aa, data = data.frame(year)) M <- corMatrix(bb)*30 Vmat <- function(n, mu = 0, V = matrix(1)) { p <- length(mu) if (any(is.na(match(dim(V), p)))) stop("Dimension problem!") D <- chol(V) t(matrix(rnorm(n * p), ncol = p) %*% D + rep(mu, rep(n, p))) } y <- scale(y,scale=FALSE) + 10+ 0.02*x + Vmat(1, rep(0, n), M) data.t <- data.frame(y=y,x=x,year=year) write.table(data.t, file='../downloads/data/data.t.csv', sep=',', quote=F, row.names=FALSE) pairs(data.t)

There is a clear relationship between y and time - clearly, y has declined over time. Any relationship between y and x is not obvious. Nevertheless, it is possible that when we account for the effect of time (partial out time), there may also be an effect of x. It is also possible, that observations collected closer together in time are more correlated to one another than those more separated in time.

Lets start by fitting a simple linear regression model, and exploring the model fitting diagnostics.

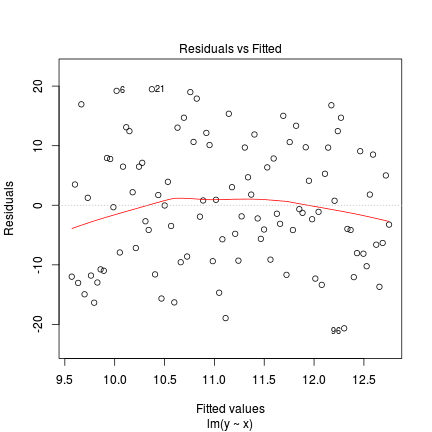

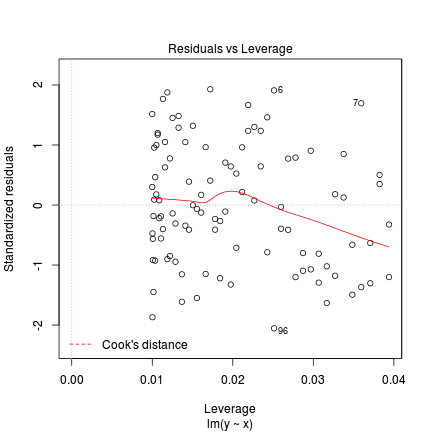

data.t.lm <- lm(y~x, data=data.t) plot(data.t.lm)

plot(resid(data.t.lm)~data.t$x)

plot(resid(data.t.lm)~data.t$year)

Conclusions - there is clearly a pattern in the residuals that relates to year. Lets incorporate ,sampyear into the linear model. Prior to fitting a multiple regression model we need to confirm that there is no collinearity between the covariates.

library(car) vif(lm(y~x+year, data.t))

x year 1.04 1.04

Lets now fit the multiple linear regression model. And explore the diagnostics

data.t.lm1 <- lm(y~x+year, data.t) plot(data.t.lm1)

Testing for temporal auto-correlation

There are a number of ways of detecting auto-correlation

- Patterns in (standardized/normalized) residual plots or plots of residuals against time (year). In particular, diagonal stripes.

Conclusions - whilst not overly obvious, there are possibly some stripes (heading diagonally down and slightly to the right) in the residuals. Slightly more obvious is the saw blade pattern in the plot of residuals against year possibly indicating that the variability of the residuals is related to time.

plot(rstandard(data.t.lm1) ~ fitted(data.t.lm1))

plot(rstandard(data.t.lm1) ~ data.t$year)

- Auto-correlation (acf) plots - plots of correlation of residuals from increasingly larger lags (differences between times). The horizontal dashed lines define approximate regions

outside of which a correlation value would be considered "significant".

Conclusions - the degree of correlation between observations collected on consecutive times (lag=1) is nearly 0.8 which is very high. The oscillating pattern of the acf plot suggests that the degree of correlation in the residuals changes in time. The residuals go from strongly positively correlated at a lag of 1 to negatively correlated at a lag of 10 years after which they gradually become more positive (to approximately 25 years).

acf(rstandard(data.t.lm1), lag=40)

As indicated earlier, observations collected closer together in time have a strong likelihood of being more closely correlated to one another - particularly when we know that y does change over time.

In the introduction to dealing with non-independence, I introduced one variance-covariance structure (compound symmetry) that did not assume zero covariance between residuals. However, for temporal auto-correlation (such as that depicted by the above auto-correlation plot), usually a more useful variance-covariance structure is one build around a first order auto-correlation structure.

The first order auto-regressive (AR1) structure defines a correlation structure in which the degree of correlation between two observations is proportional to the relative amount of elapsed time. Specifically, the correlation between two observations is defined as $\rho^{|t-s|}$ where $|t-s|$ is the absolute difference between the current time ($t$) and the previous time ($s$). So if the correlation between two consecutive time periods is $\rho^1=\rho$ then the correlation for a lag of 2 is $\rho^2$ and so on.

$$ cor(\varepsilon) = \underbrace{ \begin{pmatrix} 1&\rho&\cdots&\rho^{|t-s|}\\ \rho&1&\cdots&\vdots\\ \vdots&\cdots&1&\vdots\\ \rho^{|t-s|}&\cdots&\cdots&1\\ \end{pmatrix}}_\text{First order autoregressive correlation structure} $$Lets then incorporate this first order autoregressive structure into the model. To do so, we have to switch to gls() and use the correlation= argument. In addition to the model incorporating a first-order auto-regressive structure, we will refit the model with no correlation structure with the gls() function so that we can directly compare the fits of the two models.

library(nlme) data.t.gls <- gls(y~x+year, data=data.t, method='REML') data.t.gls1 <- gls(y~x+year, data=data.t, correlation=corAR1(form=~year),method='REML')

As before, we should explore all of the model fit diagnostics.

plot(residuals(data.t.gls, type="normalized")~fitted(data.t.gls))

plot(residuals(data.t.gls, type="normalized")~data.t$x)

plot(residuals(data.t.gls, type="normalized")~data.t$year)

acf(residuals(data.t.gls, type='normalized'), lag=40)

plot(residuals(data.t.gls1, type="normalized")~fitted(data.t.gls1))

plot(residuals(data.t.gls1, type="normalized")~data.t$x)

plot(residuals(data.t.gls1, type="normalized")~data.t$year)

acf(residuals(data.t.gls1, type='normalized'), lag=40)

We can now compare the two models via AIC or a likelihood ratio test.

AIC(data.t.gls, data.t.gls1)

df AIC data.t.gls 4 626.3 data.t.gls1 5 536.7

anova(data.t.gls, data.t.gls1)

Model df AIC BIC logLik Test L.Ratio p-value data.t.gls 1 4 626.3 636.6 -309.2 data.t.gls1 2 5 536.7 549.6 -263.4 1 vs 2 91.58 <.0001

Normally, we would only then pursue this best model. However, for the purpose of this demonstration, it might be informative to compare the output and conclusions resulting from both models.

summary(data.t.gls) Generalized least squares fit by REML

Model: y ~ x + year

Data: data.t

AIC BIC logLik

626.3 636.6 -309.2

Coefficients:

Value Std.Error t-value p-value

(Intercept) 3052.5 180.94 16.870 0.0000

x 0.0 0.02 1.539 0.1271

year -1.5 0.09 -16.800 0.0000

Correlation:

(Intr) x

x 0.191

year -1.000 -0.196

Standardized residuals:

Min Q1 Med Q3 Max

-2.09938 -0.73906 0.07781 0.65271 2.49073

Residual standard error: 5.178

Degrees of freedom: 100 total; 97 residual

anova(data.t.gls,type='marginal') Denom. DF: 97

numDF F-value p-value

(Intercept) 1 284.60 <.0001

x 1 2.37 0.1271

year 1 282.23 <.0001

|

summary(data.t.gls1) Generalized least squares fit by REML

Model: y ~ x + year

Data: data.t

AIC BIC logLik

536.7 549.6 -263.4

Correlation Structure: ARMA(1,0)

Formula: ~year

Parameter estimate(s):

Phi1

0.9127

Coefficients:

Value Std.Error t-value p-value

(Intercept) 4389 1232.6 3.560 0.0006

x 0 0.0 3.297 0.0014

year -2 0.6 -3.546 0.0006

Correlation:

(Intr) x

x 0.009

year -1.000 -0.010

Standardized residuals:

Min Q1 Med Q3 Max

-1.423 1.711 3.378 4.373 6.624

Residual standard error: 3.375

Degrees of freedom: 100 total; 97 residual

anova(data.t.gls1, type='marginal') Denom. DF: 97

numDF F-value p-value

(Intercept) 1 12.68 0.0006

x 1 10.87 0.0014

year 1 12.57 0.0006

|

Spatial autocorrelation

For statistical purposes, time is a one dimensional autocorrelation influence. Differences in time between any sets of time points, is the same no matter which time point is considered first and which is second. By contrast, contagious processes occurring in space operate in two dimensions (influences can radiate out around a point in any direction). So the degree to which sampling units are correlated is influenced by the two dimensional euclidean distance between the two units. Thus, spatial autocorrelation can be thought of as a two dimensional generalization of temporal autocorrelation where the correlation between two locations ($\rho$) is proportional to the euclidean distance between the locations.

One simple correlation structure that works well for spatial autocorrelation defines the covariances as being proportional to the exponential of the product of

the distance ($D$) multiplied by the (negative) of the rate of correlation decay ($\delta$). If the rate of decay ($\delta=0.08$), then at a distance of 1 unit,

the degree of correlation would be $e^{-0.08}=$0.9231 and the correlation at a distance of 2 would be $e^{-0.08\times 2}=$0.8521

and so on in a deminishing manner.

An important consideration when it comes to spatial influences is how regular the sampling units are distributed in space. The following figure demonstrates a range of configurations from a regular grid to a random scatter to clumped arrangement.

As is the way of these tutorials, we will now generate some fabricated data in order to explore spatial autocorrelation. We will generate one hundred locations (latitude and longitude) from within a spatial grid

set.seed(3) #4,8,12,15,16,33,47,49,50 n <- 100 #simgrid <- expand.grid(LAT=runif(10,144,150),LONG=runif(10,-26,-20)) #n <- nrow(simgrid) #library(sp) loc <- data.frame(LAT=runif(n,144,150),LONG=runif(n,-26,-20)) library(sp) grid <- expand.grid(LAT=seq(144,150,l=100), LONG=seq(-26,-20,l=100)) coordinates(grid) <- ~LAT+LONG #loc <- spsample(grid, n=n, type="random") #loc <- data.frame(spsample(grid, n=n, type="regular")) #names(loc) <- c("LAT","LONG") #coordinates(loc) <- ~LAT+LONG # Set up distance matrix distance <- as.matrix(dist(as.data.frame(loc))) #* 1/min(distance[lower.tri(distance)]) # Generate random variable delta <- 0.5 V <- Vmat(1, rep(0, n), exp(-delta * distance)) x <- rnorm(n,30,10) #image(cbind(simgrid, V)) y <- 50+1.0*x + 60*V #+ rnorm(n,0,1) data.s <- data.frame(y,x,loc) write.table(data.s, file='../downloads/data/data.s.csv', sep=',', quote=FALSE, row.names=FALSE) pairs(data.s)

#coordinates(data.s) <-~LAT+LONG #bubble(data.s,'y')

As the primary focus of the analyses is to investigate the relationship between y and x, we will begin by fitting a standard simple linear regression and then explore the model fit diagnostics.

data.s.lm <- lm(y~x, data.s) plot(data.s.lm)

Detecting spatial autocorrelation

The bubble plot

The observations have been collected across a wide spatial scale. Wide in the sense that the total area is likely to span many underlying environmental gradients that will represent multiple contageous processes - thereby potentially resulting in substantial spatial autocorrelation. Therefore, it is worth investigating whether there is any evidence of spatial autocorrelation in the data.

One relatively simple way of detecting spatial autocorrelation is to explore whether there are any spatial patterns in the residuals. To do this, we plot the sampling unit coordinates (latitude and longitude) such that the size, shape and or colors of the points reflect the residuals associated with these observations. Such a plot is referred to as a bubble plot.

The sp package is a large collection of functions and classes specifically for working with spatial data. To leverage these functions, we must first define the spatial nature of the data. That is, we need to declare which variables represent the spatial coordinates. Note, it is also possible to define the coordinate reference (or projection) system, however this is only required if you intend to perform complex spatial operations or conversions to other projection systems.

Bubble plots are generated via the bubble() function (of the sp package). At a minimum, this function requires a spatial data frame (a data frame with defined coordinates) that contains the model residuals.

data.s$Resid <- rstandard(data.lm)

Error: object 'data.lm' not found

library(sp) coordinates(data.s) <- ~LAT+LONG #effectively convert the data into a spatial data frame bubble(data.s,'Resid')

Error: undefined columns selected

The semi-variogram

A more formal and quantitative alternative to exploring spatial autocorrelation is to calculate semivariance. Semivariance is a measure of the degree of similarity between pairs of points separated by a specific distance. If the data are spatial autocorrelated, then sampling units that are closer together in space might be expected to yield similar responses and thus similar residuals. There are numerous ways to calculate semivariance, yet in all cases, it is essentially the variance of residuals from points separated by a particular distance. Points that are closer together in space are likely to have smaller values of semivariance.

As every set of actual points are likely to represent a unique distance, distances are effectively binned such that all points spanning a band of distances in a particular direction (say north of each point in turn) are aggregated together when calculating the variance in residuals for that distance.

The following figure loosely describes the calculations. For each point (in this instance, a point 3 units left and 3 units north on the grid), all other points within a certain tolerance band from a distance (lag, in this case 40 units) in a particular direction (in this case north, or an angle of 0 degrees) from the reference point are aggregated together. The tolerance band is bound by a shape that is the lag plus and minus the lag tolerance (in this case 5) and the direction angle plus or minus the tolerance in the angle (22.5 degrees in this case).

For situations in which the underlying contagious processes (that cause spatial autocorrelation) radiate out uniformly, the orientation (N, NE, E, SE etc) that we elect to calculate semivariance will be inconsequential (the semivariances are said to be isotropic). However, when the contagious process(es) spread asymmetrically (for example, due to wind direction and speed), the orientation will be important and the semivariances will depend on the direction of measurement (anisotropy).

Semivariance is then plotted against distance as a variogram.

Points separated by small distances are similar and thus have relatively small values of semivariance. In spatially correlated data, semivariance increases with increasing distance up to a point (the sill). The span of distances over which points are correlated is called the range.

Whilst we might expect the value of semivariance at a distance of zero to be zero, in reality we rarely have sampling units that approach such a small distance from one another. The value of semivariance when distance is equal to zero (the nugget is often estimated to be higher than zero. Typically this is the result of unexpected variability in your data that spatial patterns alone cannot account for.

It is also possible for the semivariogram values to peak at a value of close to 1 before declining again. This would imply sampling unit similarity declines with increasing spatial separation up to a certain distance above which sampling units spread further apart begin to become more similar. Such a situation can arise when the underlying environmental gradients governing the radiation of contagious processes cycle across the landscape. For example, imagine sampling throughout a landscape that features numerous different vegetation communities. At some point the local processes driving small scale spatial autocorrelation will be overtaken by the larger scale processes driving broad community changes.

So having explained all that, lets now generate a variogram plot and to formally assess spatial autocorrelation. As with temporal autocorrelation, it is best to switch from using the lm() function to using the Generalized least Squares (GLS: gls()) function from the nlme package.

We should also explore the usual suite of model diagnostics.

data.s.gls <- gls(y~x, data.s, method='REML') plot(data.s.gls)

Conclusions - there are no obvious signs of issues with normality or homogeneity of variance so far. However as this section is primarily concerned with spatial autocorrelation, it is this issue that we will now focus on.

There are two similar functions for generating variograms in R. The first we will explore is a function called Variogram() that comes with the nlme package.

plot(nlme:::Variogram(data.s.gls, form=~LAT+LONG, resType="normalized"))

The second, is the more flexible variogram function from the gstat package. Along with the default variagram calculated along a vertical direction (orientation angle of zero), there is the option to indicate additional orientations (via a alpha= parameter). Useful additional orientations would be North-East, East, South-East which respectively translate to alpha values of 45,90,135. Note, a alpha of 180 (South) would be the same as that of North.

By calculating the variogram separately at different orientations, we can get a handle on whether the underlying contagious process(es) (and thus spatial autocorrelation) is likely to be symmetrical (isotrophy) or asymmetrical (anisotropy).

library(gstat) plot(variogram(residuals(data.s.gls,"normalized")~1, data=data.s, cutoff=6))

plot(variogram(residuals(data.s.gls,"normalized")~1,data=data.s, cutoff=6,alpha=c(0,45,90,135)))

Accommodating spatial autocorrelation in the model

If we were primarily interested in the relationship between y and x and wished to standardize for spatial patterns, then we could attempt to incorporate into the model some additional covariates that we believe drives the underlying spatial patterns. However, meaningful and causal influences are often either very difficult to identify or else extremely difficult to measure - particularly if spatial autocorrelation is not considered until after the data have been collected.

As an alternative we could add the multiplicative effects of latitude and longitude to the model as proxies for the underlying contagion. However, the resulting hypothesis associated with the spatial factors are somewhat meaningless - latitude is highly unlikely to be the direct cause of variation in responses. Furthermore, adding these terms will absorb 3 degrees of freedom from the model.

More importantly, the above solutions do not directly address the issues of dependency structure. It is for this reason, that it is arguably better to address spatial autocorrelation by modelling in some form of spatial dependence correlation structure.

In the introduction to spatial autocorrelation, I illustrated an exponential autoregressive correlation structure. Recall that this is a correlation structure in which the degree of correlation degrades according to the exponential of the product of the distance and the rate of correlation decay ($e^{-\delta D}$).

This is not the only correlation structure useful for accommodating spatial autocorrelation. The causes of spatial autocorrelation are many and varied and thus so too are the theoretical manners in which sampling units could be correlated over space. Additionally, the configuration of sampling units across space (recall the gridded, random and clustered configurations) also impact on the manner in which autocorrelation manifests in data.

The following table and figure illustrate the correlation structures built into the nlme package as well as the general form of variogram they accommodate. Note all of these assume isotrophy.

| correlation function | Correlation structure | Description |

|---|---|---|

| corExp(form=~lat+long) | Exponential | $\Phi = 1-e^{-D/\rho}$ |

| corGaus(form=~lat+long) | Gaussian | $\Phi = 1-e^{-(D/\rho)^2}$ |

| corLin(form=~lat+long) | Linear | $\Phi = 1-(1-D/\rho)I(d<\rho)$ |

| corRatio(form=~lat+long) | Rational quadratic | $\Phi = (d/\rho)^2/(1+(d/\rho)2)$ |

| corSpher(form=~lat+long) | Spherical | $\Phi = 1-(1-1.5(d/\rho) + 0.5(d/\rho)^3)I(d<\rho)$ |

Based on the variogram we generated from the first fitted model we tried, it would seem like the exponential or rational quadratic are good candidate correlation structures for our data. That said, most argue that it is appropriate to fit all alternative models and then select the model with the lowest AIC.

data.s.glsExp <- gls(y~x, data=data.s, correlation=corExp(form=~LAT+LONG, nugget=TRUE), method='REML') data.s.glsGaus <- gls(y~x, data=data.s, correlation=corGaus(form=~LAT+LONG, nugget=TRUE), method='REML') data.s.glsLin <- gls(y~x, data=data.s, correlation=corLin(form=~LAT+LONG, nugget=TRUE), method='REML') data.s.glsRatio <- gls(y~x, data=data.s, correlation=corRatio(form=~LAT+LONG, nugget=TRUE), method='REML') data.s.glsSpher <- gls(y~x, data=data.s, correlation=corSpher(form=~LAT+LONG, nugget=TRUE), method='REML') AIC(data.s.gls, data.s.glsExp, data.s.glsGaus, data.s.glsLin, data.s.glsRatio, data.s.glsSpher)

df AIC data.s.gls 3 1013.9 data.s.glsExp 5 974.3 data.s.glsGaus 5 976.4 data.s.glsLin 5 975.6 data.s.glsRatio 5 974.8 data.s.glsSpher 5 975.5

AIC(data.s.gls, data.s.glsExp, data.s.glsGaus, data.s.glsRatio, data.s.glsSpher)

df AIC data.s.gls 3 1013.9 data.s.glsExp 5 974.3 data.s.glsGaus 5 976.4 data.s.glsRatio 5 974.8 data.s.glsSpher 5 975.5

Prior to exploring the parameter estimates, it is (as always) a good idea to examine the model diagnostics.

plot(residuals(data.s.glsExp, type="normalized")~fitted(data.s.glsExp))

plot(nlme:::Variogram(data.s.glsExp, form=~LAT+LONG, resType="normalized"))

Finally, lets compare the output of the original model (that did not incorporate any correlation structure) with the model incorporating an exponential correlation structure.

summary(data.s.gls) Generalized least squares fit by REML

Model: y ~ x

Data: data.s

AIC BIC logLik

1014 1022 -504

Coefficients:

Value Std.Error t-value p-value

(Intercept) 87.47 12.482 7.008 0.0000

x 0.41 0.381 1.082 0.2818

Correlation:

(Intr)

x -0.951

Standardized residuals:

Min Q1 Med Q3 Max

-2.06257 -0.61771 0.09788 0.65807 2.68272

Residual standard error: 38.59

Degrees of freedom: 100 total; 98 residual

anova(data.s.gls) Denom. DF: 98

numDF F-value p-value

(Intercept) 1 675.7 <.0001

x 1 1.2 0.2818

|

summary(data.s.glsExp) Generalized least squares fit by REML

Model: y ~ x

Data: data.s

AIC BIC logLik

974.3 987.2 -482.2

Correlation Structure: Exponential spatial correlation

Formula: ~LAT + LONG

Parameter estimate(s):

range nugget

1.6957 0.1281

Coefficients:

Value Std.Error t-value p-value

(Intercept) 65.90 21.825 3.020 0.0032

x 0.95 0.286 3.304 0.0013

Correlation:

(Intr)

x -0.418

Standardized residuals:

Min Q1 Med Q3 Max

-1.6019 -0.3508 0.1609 0.6452 2.1332

Residual standard error: 47.69

Degrees of freedom: 100 total; 98 residual

anova(data.s.glsExp) Denom. DF: 98

numDF F-value p-value

(Intercept) 1 23.46 <.0001

x 1 10.92 0.0013

|

Hierarchical dependency structures - mixed effects modelling

If landscapes, individuals (subjects) etc were homogeneous scientific experiments would be much easier to conduct. One of the greatest challenges in designing experiments (particular those in the field or those involving organisms) is to be able to minimize unexplained variability (noise) so as to maximize the likelihood of detecting any signals (effects). Collecting additional covariates in an attempt to standardize or control for other influences is one of the common strategies used to attempt to reduce unexplained variability.

When the source of heterogeneity is difficult to identify or measure, collecting additional covariates may not be effective. For example, in designing a field experiment that will involve measuring some property of a natural system (such as the abundance of a taxa within quadrats), we may be concerned that the underlying landscape varies enormously and that this variation could mask any treatment effects. That is, the unexplained variability will reduce the power to detect effects.

One way to compensate for this is to provision a very large number of replicates. However, this will necessarily require substantially more resources and effort. An alternative is to group together sets of sampling units at sufficiently small scales that the underlying conditions are relatively homogeneous. For example, rather than distribute the sampling units randomly (or haphazardly) throughout the landscape, clusters of sampling units (called blocks or plots) could be distributed throughout the landscape. Similarly, rather than subject each individual to a single treatment, each individual could be subjected to each of the treatments sequentially. In this way, the unexplained variability is based on variation within a cluster or individual - clusters and individuals partially act as their own control.

Such nested, blocking, repeated measures designs (collectively referred to as hierarchical designs as a result of sampling units being grouped within other 'larger scale' spatial or temporal units) are popular ways to reduce unexplained variability and thus increase power.

Obviously it is important that the groups of sampling units not be so close to one another that they directly influence one another. Nevertheless, sampling units within the groups are not strictly independent of one another. The unique underlying characteristics of the individual subject or block means that the multiple observations will likely be more similar to one another than they are to the observations collected from other subjects or blocks. Each subject or block will have their own unique baseline (e.g. heat rate) upon which the treatments act and it is the same baseline for all observations within the group.

This dependency issue can be tackled in much the same way as a lack of independence due to temporal or spatial autocorrelation. That is, by incorporating a variance structure that approximates the patterns of dependency. Indeed when fitting a hierarchical model, we are essentially fitting a model that incorporates a separate compound symmetry correlation structure for each group. Yet in order to leverage some other statistical information, rather than interact directly with the correlation structure, we typically engage a procedure that allows us to define the hierarchical structure of the data and the model will take care of the correlation structure for us.

Central to any discussion of hierarchical models in a frequentist paradigm is the notion of fixed and random factors or effects.

- Fixed factors

- these are factors (continuous or categorical) whose measured levels you are specifically interested in. For example, we might have a treatment with levels 'high', 'medium' and 'low'. These are the only levels that we are interested in - we are not interested in extrapolating to other possible levels. Fixed effects are usually expressed as differences (or slopes).

- Random factors

- these are factors (usually categorical) that represent groups sampling units (such as sites, subjects etc). We are typically not only interested in the effects of any one level of a random factor (such as how one individual compares to another), as the levels of random factors (the selected subjects or blocks) are assumed to be random representatives of a larger population. Instead, we are more interested in estimating how variable the different groups are to one another (how varied was the landscape or subjects). Note, the individual replicates are always considered random factors (since they are intended to be randomly selected representatives of which we are more interested in their collective variability than individual differences).

Hierarchical models contain a mixture of 'fixed' and 'random' effects and are therefore also referred to as Mixed Effects Models.

Before proceeding any further, it might be a good idea to generate some data to assist us to visualize the concepts. We will generate a response (y) and continuous predictor (x) from 10 sampling units in each of 6 groups or blocks (block). An unit increase in x will be associated with a 1.5 times increase in y. The baseline of each block will be drawn from a normal distribution with a mean of 230 and a standard deviation of 20.

set.seed(1) n.groups<- 6 n.sample <- 10 n <- n.groups*n.sample block <- gl(n=n.groups, k=n.sample, lab=paste("Block",1:n.groups,sep="")) x <- runif(n,0,70) mn <- mean(x) sd <- sd(x) cx<- (x-mn)#/sd Xmat <- model.matrix(~block*cx-1-cx) #intercepts and slopes Xmat <- model.matrix(~-1+block+x) #intercepts and slopes intercept.mean <- 230 intercept.sd <- 20 slope.mean<-1.5 #slope.sd <- 0.3 intercept.effects <- rnorm(n=n.groups, mean=intercept.mean, sd=intercept.sd) #slope.effects <- rnorm(n=n.groups, mean=slope.mean, sd=slope.sd) #intercepts and slopes slope.effects <- slope.mean all.effects <- c(intercept.effects, slope.effects) lin.pred <- Xmat[,] %*% all.effects eps <- rnorm(n=n, mean=0, sd=10) y<-lin.pred + eps data <- data.frame(y=y, x=cx+mn, block=block) head(data)

y x block 1 281.1 18.59 Block1 2 295.7 26.05 Block1 3 328.3 40.10 Block1 4 360.2 63.57 Block1 5 276.7 14.12 Block1 6 349.0 62.89 Block1

If we ignore the grouping dependency structure for a moment and proceed with simple linear regression (will will perform this via gls() so that we can directly compare it to models that incorporate correlation structures).

library(car) scatterplot(y~x, data)

library(nlme) data.gls <- gls(y~x, data, method='REML')

As always, we will explore the residuals. Firstly, we will explore the usual residual plot, then we will plot residuals against block. If there was no dependency structure (observations in groups were no more correlated to one another than they are to units in other groups), the spread of residuals should be similarly centered around zero for each block.

plot(data.gls)

plot(residuals(data.gls, type='normalized') ~ data$block)

Detecting such issues in the residuals is perhaps not surprising, after all this design is employed in situations where there are expected to be substantial differences between blocks. The model fitted above was not appropriate for two reasons.

Firstly, it failed to account for the differences between different blocks and therefore the unexplained variation was inflated. We might be tempted to add the grouping variable (block) to the model in an attempt to account for some of this unexplained variability. In other words, could we not treat the analysis as a ANCOVA?

library(ggplot2) ggplot(data, aes(y=y, x=x, color=block))+geom_smooth(method="lm")+geom_point()

data.gls1 <- gls(y~x+block, data, method="REML") plot(data.gls1)

plot(residuals(data.gls1, type='normalized') ~ data$block)

A more appropriate alternative is to model in a correlation structure that accounts for the expected dependency. For a simple example such as this, we can construct the model that incorporates a separate compound symmetry correlation structure for each block. The somewhat peculiar 1|block notation in the corCompSymm() function indicates that the compound symmetry correlation structure should be allowed to differ for each block.

data.gls2<-gls(y~x,data, correlation=corCompSymm(form=~1|block), method="REML") plot(residuals(data.gls2, type='normalized') ~ fitted(data.gls2))

plot(residuals(data.gls2, type='normalized') ~ data$block)

Whilst the above technique is adequate for enabling appropriate estimates and tests of the main effect of interest ( the relationship between y and x), it does not allow us to explore the breakdown of variance across the different hierarchical levels. It also makes it hard to impose additional correlation structures (such as those used to address temporal or spatial autocorrelation) on the data within each group.

It is here that linear mixed effects models (LMM) models come in. There are two main packages for LMM's in R. There is the original implementation offered in the nlme package and there is the more recent developments in the lme4 package. Although the newer package does offer some computational efficiency's and the ability to fit crossed random effects, it does not yet permit specific temporal or spatial autocorrelation structures (such as those described above). Therefore, I will stick to the original implementation.

data.lme <- lme(y~x, random=~1|block, data, method='REML') plot(data.lme)

The hierarchical random effects structure is defined by the random= parameter. In this case, random=~1|block indicates that blocks are random effects and that the intercept should be allowed to vary per block. If we wished to allow the intercept and slope to vary for each block then the argument would have been something like random=~x|block. Note, that the constrained scatterplot above indicated that the slopes were similar for each group, so there was no real need for us to fit a random slope and intercepts model.

summary(data.lme)

Linear mixed-effects model fit by REML

Data: data

AIC BIC logLik

459 467.2 -225.5

Random effects:

Formula: ~1 | block

(Intercept) Residual

StdDev: 18.11 8.905

Fixed effects: y ~ x

Value Std.Error DF t-value p-value

(Intercept) 232.82 7.823 53 29.76 0

x 1.46 0.064 53 22.87 0

Correlation:

(Intr)

x -0.292

Standardized Within-Group Residuals:

Min Q1 Med Q3 Max

-2.09947 -0.57994 -0.04874 0.56685 2.49464

Number of Observations: 60

Number of Groups: 6

summary(data.lme)

Linear mixed-effects model fit by REML

Data: data

AIC BIC logLik

459 467.2 -225.5

Random effects:

Formula: ~1 | block

(Intercept) Residual

StdDev: 18.11 8.905

Fixed effects: y ~ x

Value Std.Error DF t-value p-value

(Intercept) 232.82 7.823 53 29.76 0

x 1.46 0.064 53 22.87 0

Correlation:

(Intr)

x -0.292

Standardized Within-Group Residuals:

Min Q1 Med Q3 Max

-2.09947 -0.57994 -0.04874 0.56685 2.49464

Number of Observations: 60

Number of Groups: 6

anova(data.lme)

numDF denDF F-value p-value (Intercept) 1 53 1452.3 <.0001 x 1 53 523.2 <.0001

intervals(data.lme)

Approximate 95% confidence intervals

Fixed effects:

lower est. upper

(Intercept) 217.128 232.819 248.511

x 1.331 1.459 1.587

attr(,"label")

[1] "Fixed effects:"

Random Effects:

Level: block

lower est. upper

sd((Intercept)) 9.598 18.11 34.17

Within-group standard error:

lower est. upper

7.362 8.905 10.773

vc<-as.numeric(as.matrix(VarCorr(data.lme))[,1]) vc/sum(vc)

[1] 0.8052 0.1948

The standard output of a linear mixed effects model is a little different to that of a regular linear model or even a generalized least squares model. The effects are broken up into the 'Random effects' and the 'Fixed effects'.

- the 'Random effects' display estimates of the variance (actually standard deviation) components. This is the added variance introduced

by each level of the hierarchical structure. In this case, there are

18.11units of standard deviation variability between blocks and8.9units of variability between the sampling units within blocks. - the 'Fixed effects' display the parameter estimates, their standard deviations and associated hypothesis tests. In this case

we would conclude that an increase in x was associated with a significant linear increase in y.

The intercept (

232.82) and slope (1.46) represent the average intercept and slope across all blocks and it is worth noting that they are very similar to the initial values used to generate the data (it would have been disappointing if this had not been the case!). - the 'Correlation' component indicates the degree of correlation between the slope and intercept. Ideally this should not be close to either 1 or -1. Often in such hierarchical models the estimated slopes and intercepts can be related. Consider it this way: calculated trendlines always pass through the mean of the y and x values - they are lines that rotate around this multivariate mean. Hence, when the model allows both fixed and random slopes, a slope of greater magnitude will also have a lower or higher intercept (depending on whether the trend is positive or negative). Centering the predictor variable can help reduce this correlation between slope and intercept.

- the anova table displays the F-ratio for the fitted model against a null model (model without the fixed term). It is worth mentioning that more recent developments have cast rather severe doubts on the validity of these F-ratio tests. In particular, many argue that for hierarchical models, identifying the appropriate null model (and thus denominator degrees of freedom) is an un-achievable task.

- as an alternative to working with the parameter estimates, their standard errors and p-values, increasingly, workers are electing to place greater inferential emphasis on the 95% confidence intervals. Intervals that do not overlap zero are considered to provide evidence for a 'significant' effect.

The mixed effects model took care of the dependency structure by defining a separate correlation structure for each group. However this structure assumes no spatial or temporal autocorrelation within each group. Sampling units are assumed to be randomly assigned within each group. However, if sampling units are always arranged in a particular non-random configuration (e.g. a sequence of repeated measurements over time or spatial distance from a disturbance) then the data within groups may well be temporally or spatially autocorrelated.

Issues of temporal or spatial autocorrelation above those caused by the dependency structure of the hierarchical groupings must be addressed (via the correlation= parameter) via the same techniques as was demonstrated in the sections on temporal and spatial independence.

The above demonstration has really only just scratched the surface of linear mixed effects models. These models will be considered more thoroughly in tutorials 9.7-9.9 and then again in the context of generalized linear mixed effects models in tutorials 11.4.