Abstract

Factor models are widely used for dimension reduction in the analysis of multivariate data. This is achieved through decomposition of a \(p \times p\) covariance matrix into the sum of two components. Through a latent factor representation, they can be interpreted as a diagonal matrix of idiosyncratic variances and a shared variation matrix, that is, the product of a \(p \times k\) factor loadings matrix and its transpose. If \(k \ll p\), this defines a parsimonious factorisation of the covariance matrix. Historically, little attention has been paid to incorporating prior information in Bayesian analyses using factor models where, at best, the prior for the factor loadings is order invariant. In this work, a class of structured priors is developed that can encode ideas of dependence structure about the shared variation matrix. The construction allows data-informed shrinkage towards sensible parametric structures while also facilitating inference over the number of factors. Using an unconstrained reparameterisation of stationary vector autoregressions, the methodology is extended to stationary dynamic factor models. For computational inference, parameter-expanded Markov chain Monte Carlo samplers are proposed, including an efficient adaptive Gibbs sampler. Two substantive applications showcase the scope of the methodology and its inferential benefits.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Factor models are widely used as a tool for dimension reduction in explaining the covariance structure of multivariate data. Let \(\varvec{y}_i = (y_{i1}, \ldots , y_{ip})^{\mathrm {\scriptscriptstyle T}}\) be a p-dimensional random vector with mean \(\varvec{\mu }\) and covariance matrix \(\varvec{\Omega }\) for observation unit \(i=1,\ldots ,n\). The classic factor model posits that the covariances between the p components of \(\varvec{y}_i\) can be explained by their mutual dependence on a smaller number k of unknown common factors \(\varvec{\eta }_i = (\eta _{i1}, \ldots , \eta _{ik})^{\mathrm {\scriptscriptstyle T}}\). Specifically, the model expresses \(\varvec{y}_i\) as a noisy affine transformation of \(\varvec{\eta }_i\),

in which the \(p \times k\) matrix \(\varvec{\Lambda }\) is called the factor loadings matrix. The components of the error term \(\varvec{\epsilon }_i\) are often called the specific factors (or “uniquenesses”) and their variances are often termed the idiosyncratic variances. In (1), we take the specific and common factors, \(\varvec{\epsilon }_i\) and \(\varvec{\eta }_i\), to be uncorrelated, zero-mean multivariate normal random variables, with

In general, the common factors are assumed to explain all the shared variation between the components of \(\varvec{y}_i\) and so \(\varvec{\Sigma }\) is constrained to be a diagonal matrix with positive diagonal elements, \(\varvec{\Sigma }=\textrm{diag}(\sigma _1^2, \ldots , \sigma _p^2)\). It then follows from (1) that the marginal covariance matrix \(\varvec{\Omega }\) can be expressed as

in which the product \(\varvec{\Delta } = (\delta _{ij}) = \varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) will henceforth be termed the shared variation matrix. If \(k \ll p\), the right-hand-side of (3) constitutes a parsimonious factorisation of the full covariance matrix.

Factor models originally found widespread use in psychology and other social sciences (e.g. Goldberg 1990), where a primary motivation was the prospect of domain insight through interpretation of the latent factors. Since their introduction, factor models have been extended in a variety of directions, for example, by allowing sparsity in the matrix of factor loadings for high-dimensional problems (Conti et al. 2014) or by modelling the temporal evolution of variances in stochastic volatility models (Lopes and Carvalho 2007) or the latent factors in dynamic factor models (Sáfadi and Peña 2008). Accordingly, the use of factor models and their extensions has spread into a diverse array of other fields, such as finance (Aguilar and West 2000) and genetics (Carvalho et al. 2008).

In many of these application areas, the modeller is likely to hold prior beliefs about the nature of the dependence between variables. For example, when the observation vector represents repeated measures data, or observations at a collection of spatial locations, it would be reasonable to expect stronger associations between measurements that are closer together in time or space. Indeed, such ideas underpin the class of functional factor models which are tailored to variables that might be better represented as functions over a continuous domain, rather than vector-valued random quantities. Some functional factor models, and their dynamic extensions, have functional factors (e.g. Castellanos et al. 2004; Taylor-Rodriguez et al. 2019) while others have functional factor loadings. In the latter case, if we regard the p observations on unit i as measurements on a function over a continuous domain, say \(\tau \in \mathbb {R}\), then we replace (1) with

for \(j=1,\ldots ,p\). The key idea is then to treat the factor loading curves \(\lambda _m(\tau )\) as smooth unknown functions of \(\tau \). This can be achieved by regarding \(f_i(\tau _j) = \sum \lambda _m(\tau _j) \eta _{im}\) as a linear combination of (orthogonal) basis functions, often modelled using splines, which then implies a particular covariance function for \(f_i(\tau )\). Although some of the research in this direction uses a Bayesian approach to inference (Kowal et al. 2017; Kowal and Canale 2022), the frequentist treatment of the problem is more common, particularly in financial applications to forecasting yield curves (Hays et al. 2012; Jungbacker et al. 2014). An alternative approach in models with functional factor loadings is to assume each \(\lambda _m(\tau )\) to be a realisation of a stochastic process, for instance, a Gaussian process with a chosen covariance function; see, for example, the dynamic spatial model of Lopes et al. (2008).

Beyond analyses of functional data, most of the work in the Bayesian literature on prior specification for factor models has focused on the problem of developing priors that are exchangeable with respect to the order of the variables in the observation vector (e.g. Leung and Drton 2016; Chan et al. 2018) with more recent work focusing additionally on allowing sparsity in the factor loadings matrix (Frühwirth-Schnatter et al. 2023). There has been very little work on constructing non-exchangeable prior specifications in a general setting. A notable exception is the structured increasing shrinkage process prior of Schiavon et al. (2022) under which each factor loading in an infinite factorisation model is assigned a spike-and-slab prior where variable-specific meta-covariates are incorporated in the spike probabilities. Other related work appears in the context of vector error correction models, which are another class of reduced rank models, where Koop et al. (2009) develop an informative prior for the matrix of cointegration vectors based on economic theory about the likely cointegration relationships between variables.

In this paper, we treat the factor model as a tool for providing a dimension-reduced parameterisation of a covariance matrix. Based on the insight that the shared variation matrix \(\varvec{\Delta } = \varvec{\Lambda } \varvec{\Lambda }^T\) in (3) is more amenable to the specification of prior beliefs than the factor loadings matrix \(\varvec{\Lambda }\), our main contribution is a framework for incorporating initial beliefs about \(\varvec{\Delta }\) in the prior for \(\varvec{\Lambda }\) by exploiting the algebraic relationship between the two quantities. By switching the focus from properties of the prior for \(\varvec{\Lambda }\) to properties of the prior for \(\varvec{\Delta }\) we obtain a methodology that is more flexible than alternatives described in the literature. The prior for the shared variation matrix \(\varvec{\Delta }\) is induced through a structured prior distribution for the factor loadings matrix \(\varvec{\Lambda }\) which can encode ideas of order-invariance as well as non-exchangeable structure, modelled through dependence on covariance kernels, meta-covariates, pairwise distance metrics and more, within a single principled framework. In particular, the mean of the prior for \(\varvec{\Delta }\) can be any positive definite matrix of relevance. For example, it could be a phylogenetic distance matrix whose entries encode distances between nodes on a tree, or even the Gram matrix arising from a Gaussian process covariance function. The interpretability of this prior expectation facilitates straightforward incorporation of meaningful domain expertise. Moreover, the flexible class of priors allows data-informed shrinkage towards a region around the mean within a framework that facilitates inference on the number of factors and hence the extent to which dimension-reduction can be achieved. New theoretical results outline the nature of this shrinkage in the rank-reduced space over which \(\varvec{\Delta }\) has support while shedding light on some of the identifiability issues that can hamper computational inference for factor models.

Based on ideas from the literature on infinite factorisation models and parameter-expansion algorithms, we propose an efficient scheme for computational inference where a single Markov chain Monte Carlo (MCMC) run yields information about both the continuous model parameters and the number of factors. Using an unconstrained reparameterisation of stationary vector autoregressions, the methodology is also extended to a class of stationary dynamic factor models for which the shared variation matrix remains meaningfully defined. To the best of our knowledge, this is the first prior, with associated inferential scheme, for a general class of dynamic factor models that constrains inference to the stationary region without imposing further restrictions. This constitutes an important contribution to the Bayesian time-series literature.

The remainder of this paper is organised as follows. After introducing our parameterisation of Bayesian factor models in Sect. 2, Sect. 3 presents the general class of structured priors and its components. In Sect. 4, we extend these ideas to a class of stationary dynamic factor models. Posterior computation is discussed in Sect. 5, then Sect. 6 presents a simulation experiment and two substantive applications that illustrate the scope of the methodology and its inferential benefits.

2 Parameterisation of the Bayesian factor model

It is well known that the parameters \(\varvec{\Lambda }\) and \(\varvec{\Sigma }\) in the marginal covariance matrix \(\varvec{\Omega }= \varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}+ \varvec{\Sigma }\) of a factor model are not identifiable from the likelihood without imposition of constraints. Indeed, even with the scale of the factors fixed at \(\text {Var}(\varvec{\eta }_i)=\varvec{I}_k\) in (2), there remains a rotational invariance. Consider any \(k \times k\) orthogonal matrix \(\varvec{Q}\). We can pre-multiply the factors in (1) by \(\varvec{Q}\) and post-multiply the factor loadings matrix by \(\varvec{Q}^{\mathrm {\scriptscriptstyle T}}\) and this gives the transformed factors in (2) the same distribution as the original factors and so the marginal variance in (3) remains unchanged. The factor loadings matrix can therefore only be identified up to an orthogonal transformation. This means there are \(pk - k(k-1)/2 + p\) degrees of freedom determining the marginal variance \(\varvec{\Omega }\). In an unconstrained model for \(\varvec{y}_i\), the number of degrees of freedom would be \(p(p+1)/2\) and so the reduction in the number of parameters is \(p (p+1) / 2 - \{ pk - k (k-1) / 2 + p \}\) which is positive if \(k < \varphi (p)\) where

is called the Ledermann bound. Notwithstanding rotational invariance, the first identification issue is therefore whether \(\varvec{\Sigma }\) can be identified uniquely from \(\varvec{\Omega }\). Fortunately, Bekker and ten Berge (1997) proved that if \(k < \varphi (p)\) then \(\varvec{\Sigma }\), and therefore \(\varvec{\Delta }=\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\), is almost surely globally identifiable. Given identification of \(\varvec{\Delta }\), solving the rotation problem would then guarantee unique identification of the factor loadings matrix \(\varvec{\Lambda }\).

In the Bayesian literature, the most common solution to the rotation problem uses the positive diagonal, lower triangular (PLT) constraint; denoting the constrained matrix by \(\varvec{\tilde{\Lambda }}\) and corresponding factors by \(\tilde{\varvec{\eta }}_1,\ldots ,\tilde{\varvec{\eta }}_n\), this demands \(\tilde{\lambda }_{ij}=0\) for \(j>i\) and \(\tilde{\lambda }_{ii}>0\) for \(i=1,\ldots ,k\). Although historically this approach has been widely used in the Bayesian literature (e.g. Geweke and Zhou 1996; Lopes and West 2004), we choose not to address the rotational invariance and instead parameterise the model using the unidentified and unconstrained factor loadings matrix \(\varvec{\Lambda }\). There are two main motivations.

First, recent work provides theoretical and empirical evidence that imposition of the PLT constraint can adversely affect computational inference. Writing \(\varvec{\tilde{\Lambda }}^{(k)}\) to denote the lower triangular matrix comprising the first k rows and columns of \(\varvec{\tilde{\Lambda }}\), problems arise when \(\varvec{\tilde{\Lambda }}^{(k)}\) is near singular, which can occur when variables in \(\varvec{y}_i\) are highly correlated. When the jth diagonal element of \(\varvec{\tilde{\Lambda }}^{(k)}\) is close to zero, the signs of \((\tilde{\lambda }_{j+1,j}, \ldots , \tilde{\lambda }_{pj})\) are only very weakly identified, producing multiple modes in the posterior corresponding to the different sign flips (e.g. Aßmann et al. 2016; Man and Culpepper 2022). This can cause poor mixing in MCMC samplers and poor approximation of the posterior for the number of factors (Chan et al. 2018). These problems have been addressed successfully by parameter-expansion techniques, which target a posterior over an expanded space with fewer pathological features (e.g. Ghosh and Dunson 2009; Chan et al. 2018). Parameterising the model and framing the problem of computational inference in terms of the unidentified factor loadings matrix \(\varvec{\Lambda }\) falls into this class of methods.

Second, parameterisation in terms of the unconstrained and unidentified factor loadings matrix \(\varvec{\Lambda }\) delivers an additional benefit in terms of prior specification. Direct elicitation of a prior for the identified factor loadings in \(\varvec{\tilde{\Lambda }}\) is difficult as they quantify relationships with latent factors whose interpretation is generally unclear a priori. In contrast, beliefs about the shared variation \(\varvec{\Delta }\) in linear Gaussian models can be related directly to beliefs about observable quantities. As a result, \(\varvec{\Delta }\) is generally a more intuitive parameter for the quantification of covariation. For example, in a spatial problem where the elements of \(\varvec{y}_i\) correspond to observations at different locations, a modeller might reasonably structure their beliefs through a covariance matrix for which covariance decays as a function of distance. Fortunately, subject to \(k < \varphi (p)\), identifiability of \(\varvec{\Lambda }\) is not necessary for identification of \(\varvec{\Delta }\). Further, under various standard distributions for an unconstrained random matrix \(\varvec{\Lambda }\), the first and second order moments of the shared variation matrix \(\varvec{\Delta }=\varvec{\tilde{\Lambda }}\varvec{\tilde{\Lambda }}^{\mathrm {\scriptscriptstyle T}}=\varvec{\Lambda }\varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) can be calculated in closed form. Through careful specification of the prior for \(\varvec{\Lambda }\), a modeller can therefore capture their beliefs about the moments in the prior for the shared variation matrix.

3 Structured prior distributions

3.1 Significance of prior expectation

The prior expectation of the shared variation matrix \(\varvec{\Delta }=\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) is defined by \( E (\varvec{\Delta }) = \{ E (\delta _{ij}) \} = E (\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}})\). As we detail in Sect. 3.4, \( E (\varvec{\Delta })\) will be chosen to reflect beliefs about the covariation amongst the p elements of \(\varvec{y}_i\). In general, it will be a rank p matrix.

For \(k < p\), denote by \(\mathcal {S}_{p,k}^+\) the set of rank k, \(p \times p\) symmetric, positive semi-definite matrices and write \(\mathcal {S}_{p}^+\) for the space of \(p \times p\) symmetric, positive definite matrices. The factor loadings matrix \(\varvec{\Lambda }\) is rank \(k< \varphi (p) < p\) and so the prior distribution for the shared variation matrix \(\varvec{\Delta }=\varvec{\Lambda }\varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) only offers non-zero support to \(\mathcal {S}_{p,k}^+\). Because \(\mathcal {S}_{p,k}^+\) is not a convex set, it need not contain the prior expectation of \(\varvec{\Delta }\). Indeed, as stated previously, \( E (\varvec{\Delta })\) will generally be rank p. Therefore, although \( E (\varvec{\Delta })\) represents the centre of prior mass, in general there will be zero density at this point. The significance of \( E (\varvec{\Delta })\) is thus not completely clear. We will elucidate its meaning via an alternative, constrained expectation, as follows.

The Frobenius norm of a matrix is simply the Euclidean norm of its vectorised form. Define the (squared) Frobenius distance between two matrices \(\varvec{A}\) and \(\varvec{B}\) as the squared Frobenius norm of their difference:

By analogy with the classic Euclidean expectation, we now define the constrained expectation of \(\varvec{\Delta }\) as \( E _F(\varvec{\Delta }) = \varvec{L}_0 \varvec{L}_0^{\mathrm {\scriptscriptstyle T}}\in \mathcal {S}_{p,k}^+\) where

Theorem 1 below asserts that if the kth and \((k+1)\)th eigenvalues of \( E (\varvec{\Delta })\) are distinct, the constrained expectation of \(\varvec{\Delta }\) is the point in \(\mathcal {S}_{p,k}^+\) that minimises the Frobenius distance to \( E (\varvec{\Delta })\). However, before proving Theorem 1, we need Proposition 1.

Proposition 1

Let the spectral decomposition of a matrix \(\varvec{D} \in \mathcal {S}_p^+\) be \(\varvec{U} \varvec{M} \varvec{U}^{\mathrm {\scriptscriptstyle T}}\), where \(\varvec{M} = \textrm{diag}(m_1, \ldots , m_p)\) is a diagonal matrix of ordered eigenvalues, \(m_1 \ge m_2 \ge \cdots \ge m_p > 0\), and \(\varvec{U}\) is an orthogonal matrix whose columns comprise the corresponding eigenvectors. Assume that \(m_k \ne m_{k+1}\). Then, for \(\varvec{\Lambda } \in \mathbb {R}^{p \times k}\), the matrix product \(\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) which minimises the Frobenius distance to \(\varvec{D}\) is \(\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}= \varvec{D}^{1/2} \varvec{U}^{(k)} \varvec{U}^{(k)\, {\mathrm {\scriptscriptstyle T}}} \varvec{D}^{1/2}\) where \(\varvec{U}^{(k)}\) is the sub-matrix comprising the first k columns of \(\varvec{U}\). Moreover, the minimum squared Frobenius distance is equal to the sum of the squares of the last \(p-k\) eigenvalues of \(\varvec{D}\).

The proof of Proposition 1 is provided in the Supplementary Materials.

Theorem 1

If \(\varvec{U} \varvec{M} \varvec{U}^{\mathrm {\scriptscriptstyle T}}\) denotes the spectral decomposition of \( E (\varvec{\Delta }) \in \mathcal {S}_p^+\) and \(m_k \ne m_{k+1}\), then the constrained expectation of \(\varvec{\Delta }\), \( E _F(\varvec{\Delta }) \in \mathcal {S}_{p,k}^+\), is equal to the matrix product which minimises the Frobenius distance to \( E (\varvec{\Delta })\). That is, \( E _F(\varvec{\Delta }) = E (\varvec{\Delta })^{1/2} \varvec{U}^{(k)} \varvec{U}^{(k)\, {\mathrm {\scriptscriptstyle T}}} E (\varvec{\Delta })^{1/2}\).

The proof of Theorem 1 is given in the Supplementary Materials. Its significance lies in the suggestion that the prior for \(\varvec{\Delta }\) encourages shrinkage towards the closest matrix in \(\mathcal {S}_{p,k}^+\) to the rank p prior expectation \( E (\varvec{\Delta })\), hence for a given structure for \( E (\varvec{\Delta })\), approximating it as closely as possible in rank-reduced form. The Supplementary Materials also consider the case \(m_k = m_{k+1}\) and the implications for computational inference.

3.2 A matrix normal prior

A random matrix \(\varvec{\Lambda }\) has a matrix normal distribution with location matrix \(\varvec{M} \in \mathbb {R}^{p \times k}\), among-row scale matrix \(\varvec{\Phi } \in \mathcal {S}_p^+\) and among-column scale matrix \(\varvec{\Psi } \in \mathcal {S}_k^+\), written \(\varvec{\Lambda } \sim \textrm{N}_{p,k}(\varvec{M}, \varvec{\Phi }, \varvec{\Psi })\), if \(\textrm{vec}(\varvec{\Lambda })\) is multivariate normal and such that \(\textrm{vec}(\varvec{\Lambda }) \sim \textrm{N}_{pk} \Bigl ( \textrm{vec}(\varvec{M}), \varvec{\Psi } \otimes \varvec{\Phi } \Bigr )\). Since \((\alpha \varvec{\Psi }) \otimes (\alpha ^{-1} \varvec{\Phi }) = \varvec{\Psi } \otimes \varvec{\Phi }\) for any scalar \(\alpha > 0\), the overall scale of either \(\varvec{\Phi }\) or \(\varvec{\Psi }\) can be fixed without loss of generality.

Suppose that we take \(\varvec{\Lambda } \sim \textrm{N}_{p,k}(\varvec{0}, \varvec{\Phi }, \varvec{\Psi })\) as a prior for the unconstrained factor loadings matrix and fix the scale of the among-row scale matrix by taking \(\textrm{tr}(\varvec{\Phi })=p\) or \(\textrm{tr}(\varvec{\Phi }^{-1})=p\). As remarked in Sect. 2, the shared variation matrix \(\varvec{\Delta } = (\delta _{ij}) = \varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) is a quantity which is amenable to the specification of prior beliefs, and so our goal is to derive its moments. Using standard theory for the matrix normal distribution (e.g. Gupta and Nagar 2000, Chapter 2), it can be shown that the expectation of \(\varvec{\Delta }\) is given by

while the covariance between \(\delta _{ij}\) and \(\delta _{k\ell }\) is \(\text {Cov}(\delta _{ij}, \delta _{k\ell }) = \textrm{tr}(\varvec{\Psi }^2) (\phi _{ik} \phi _{j\ell } + \phi _{i\ell } \phi _{jk})\). In the special case where \(i=k\) and \(j=\ell \), the variance of \(\delta _{ij}\) is then

The derivations of the moments above are provided in the Supplementary Materials.

The result in (5) is significant because of the interpretation it bestows on the among-row scale matrix \(\varvec{\Phi }\) as a standardised version of our prior expectation for the shared variation matrix \(\varvec{\Delta }\). Parametric forms are convenient models for covariance matrices because they provide a parsimonious representation of dependence and a way to model the relationships with as-yet unobserved variables. We can therefore model the among-row scale matrix \(\varvec{\Phi }\) using a parametric form. This encourages shrinkage of the shared variation matrix \(\varvec{\Delta }\) towards an area around that parametric form but without enforcing the structure as a model constraint.

Suppose that \(\varvec{\Phi }=\varvec{R}(\varvec{\vartheta })\) in which \(\varvec{\vartheta }\) is a low-dimensional vector of hyperparameters on which the parametric form \(\varvec{R}(\cdot )\) depends. We complete our prior for the factor loadings matrix by taking \(\varvec{\Psi }=\textrm{diag}(\psi _1, \ldots , \psi _k)\) and assigning a prior to \(\varvec{\vartheta }\) and the \(\psi _i\). Parametric forms \(\varvec{R}(\cdot )\) and the prior for the \(\psi _i\) are discussed in Sects. 3.4 and 3.5, respectively.

3.3 A matrix-t prior

The expressions for the means and variances in (5) and (6) under the matrix normal prior reveal a lack of flexibility; recalling that the scale of \(\varvec{\Phi }\) is fixed, once the mean \( E (\varvec{\Delta })\) has been chosen, it is clearly not possible to scale up or down all the variances by scaling \(\varvec{\Psi }\). As such, we cannot assess independently the overall scale of the mean and uncertainty around that mean. A matrix-t prior for \(\varvec{\Lambda }\) remedies this problem through the introduction of a degree of freedom parameter.

Let \(\varvec{S} \sim \textrm{W}_p(\varsigma +p-1, \varvec{\Phi }^{-1})\) and \(\varvec{X} \sim \textrm{N}_{p,k}(\varvec{0}, \varvec{I}_p, \varvec{\Psi })\) be independent random matrices, where \(\textrm{W}_n(q, \varvec{U})\) denotes the n-dimensional Wishart distribution with \(q>0\) degrees of freedom and scale matrix \(\varvec{U} \in \mathcal {S}_n^+\). If we define

where \(\varvec{S}^{1/2} (\varvec{S}^{1/2})^{\mathrm {\scriptscriptstyle T}}=\varvec{S}\) and \(\varvec{M} \in \mathbb {R}^{p \times k}\), then the random matrix \(\varvec{\Lambda }\) has a matrix-t distribution, written \(\varvec{\Lambda } \sim \textrm{t}_{p,k}(\varsigma , \varvec{M}, \varvec{\Phi }, \varvec{\Psi })\). Again, one can fix the scale of either the among-row scale matrix \(\varvec{\Phi } \in \mathcal {S}_p^+\) or the among-column scale matrix \(\varvec{\Psi } \in \mathcal {S}_k^+\) without loss of generality.

Suppose that we adopt \(\varvec{\Lambda } \sim \textrm{t}_{p,k}(\varsigma , \varvec{0}, \varvec{\breve{\Phi }}, \varvec{\Psi })\) as a prior for the factor loadings matrix. Expressions for the means, variances and covariances of \(\varvec{\Delta }=\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) are derived in the Supplementary Materials. In particular, we show that \( E (\varvec{\Delta })=\textrm{tr}(\varvec{\Psi }) \varvec{\Phi }\) for \(\varsigma >2\) where \(\varvec{\Phi } = \varvec{\breve{\Phi }} / (\varsigma -2)\), which is identical to the expression derived under the matrix normal prior. We also show that for a fixed value of \( E (\varvec{\Delta })\), the variance,

can be increased or decreased by decreasing or increasing \(\varsigma \), respectively. Therefore, by treating \(\varsigma >4\) as an unknown and assigning it a prior, we can allow the data to influence the degree of shrinkage of the shared variation matrix towards the closest rank k matrix to its mean. Writing \(\check{\varsigma } = 1 / (\varsigma -4) \in \mathbb {R}^+\), a matrix normal distribution for \(\varvec{\Lambda }\) is recovered as \(\check{\varsigma } \rightarrow 0\). We can therefore allow controlled relaxation of the fixed shrinkage of the matrix normal prior by specifying a prior for \(\check{\varsigma }\) with its mode at zero. To this end, we recommend an exponential distribution \(\check{\varsigma } \sim \textrm{Exp}(a_0)\). In the special case when \(\varvec{\Phi }=\varvec{I}_p\) and \(\varvec{\Psi }=\psi \varvec{I}_k\), we have \(\text {Var}(\delta _{ij}) = s_k(\check{\varsigma }) E (\delta _{ij})\) where \(s_k(\check{\varsigma }) = (1+2\check{\varsigma }) \left\{ 1 + (2+k)\check{\varsigma } \right\} / (1+3\check{\varsigma })\) is an increasing function in \(\check{\varsigma }\) which takes its minimum value of 1 when \(\check{\varsigma }=0\). By trial and improvement, we can therefore use the quantiles of the \(\textrm{Exp}(a_0)\) distribution to choose a value for \(a_0\) which is consistent with our prior beliefs about a chosen quantile in the distribution for the scale factor \(s_k(\check{\varsigma })\) for various k. This is illustrated in the application in Sect. 6.2.

3.4 Model and prior for \(\varvec{\Phi }\)

3.4.1 General form

Under either a matrix normal or matrix-t prior for \(\varvec{\Lambda }\), \( E (\varvec{\Delta } | \varvec{\Phi }, \varvec{\Psi })=\textrm{tr}(\varvec{\Psi }) \varvec{\Phi }\). As explained in Sect. 3.2, since we choose to fix \(\textrm{tr}(\varvec{\Phi })=p\) or \(\textrm{tr}(\varvec{\Phi }^{-1})=p\), we can therefore interpret \(\varvec{\Phi }\) as a standardised version of the prior expectation of \(\varvec{\Delta }\). For most of the parametric forms described in this section, it will be possible to factorise \(\varvec{\Phi }\) or \(\varvec{\Xi }=\varvec{\Phi }^{-1}\) as \(\varvec{\Phi }=\varvec{R}(\varvec{\vartheta })\) or \(\varvec{\Xi }=\varvec{R}(\varvec{\vartheta })\), respectively, where \(\varvec{R}(\cdot )\) yields a positive definite matrix with 1 s on the diagonal. The vector \(\varvec{\vartheta }\) typically contains a small number of unknown hyperparameters to which we assign a prior.

3.4.2 Specific examples

The simplest structure for \(\varvec{\Phi }\) would take \(\varvec{\Phi } = \varvec{I}_p\) giving \( E (\varvec{\Delta } | \varvec{\Phi }, \varvec{\Psi })=\textrm{tr}(\varvec{\Psi }) \varvec{I}_p\). In the matrix normal case when, additionally, \(\varvec{\Psi }=\psi \varvec{I}_k\), we obtain the order-invariant prior presented in Leung and Drton (2016) for the identified factor loadings matrix in which \(\tilde{\lambda }_{ij} \sim \textrm{N}(0, \psi )\) for \(i \ne j\) and \(\tilde{\lambda }_{ii}^2 \sim \textrm{Gam}\{(k-i+1)/2, 1 / (2 \psi )\}\) for \(i=1,\ldots ,k\). Although taking \(\varvec{\Phi }=\varvec{I}_p\) will give an order-invariant prior for \(\varvec{\Delta }\), a more general distribution that is exchangeable with respect to the order of the variables in \(\varvec{y}_i\) arises by taking \(\varvec{\Phi }\) to be a two-parameter exchangeable matrix. Since we constrain \(\textrm{tr}(\varvec{\Phi })=p\) this yields \(\varvec{\Phi }=(1 - \vartheta ) \varvec{I}_p + \vartheta \varvec{J}_p\), where \(-1/(p-1)< \vartheta < 1\), \(\varvec{J}_p = \varvec{1}_p \varvec{1}_p^{\mathrm {\scriptscriptstyle T}}\) and \(\varvec{1}_p\) is a p-vector of 1 s. This is the most general form for a \(p \times p\) symmetric, positive definite matrix with \(\textrm{tr}(\varvec{\Phi })=p\) which is invariant under a common permutation of the rows and columns. If a modeller has nothing in their prior beliefs to distinguish among the p elements of \(\varvec{y}_i\), the advantage of this specification over the order-invariant prior of Leung and Drton (2016) is that it allows shrinkage of the \(p(p-1)/2\) off-diagonal elements of the shared variation matrix \(\varvec{\Delta }\) towards non-zero values. This can be particularly helpful when the number of observations is small relative to the dimension of \(\varvec{y}_i\).

In confirmatory factor analysis, structural zeros are often introduced in the factor loadings matrix based on prior beliefs about the relationships between the k factors and the variables in \(\varvec{y}_i\). For example, in the prototypical two-factor model, the first \(p_1\) variables load only on factor 1 whilst the remaining \(p-p_1\) variables load only on factor 2. This would correspond to a block diagonal shared variation matrix \(\varvec{\Delta }\). Rather than imposing these beliefs about independent group structure as a model constraint, one could instead encourage shrinkage towards the corresponding structure through the prior. With appropriate ordering of the variables, this could be achieved by taking \(\varvec{\Phi } = \textrm{blockdiag}(\varvec{\Phi }_1, \ldots , \varvec{\Phi }_k)\) in which each \(\varvec{\Phi }_i\) block is a two-parameter exchangeable matrix, as described above.

Beyond these simple examples, there are many fields of statistics in which parametric forms are adopted for correlation matrices or their inverses. Typically, they are intended to capture the idea that observations from variables that are closer together, often in time or space, tend to be more strongly (positively) correlated. For instance, the precision matrix for a vector of longitudinal measurements might be assumed to be that of a stationary autoregressive process of order one. We could centre the prior for \(\varvec{\Delta }\) on a matrix of this form by taking \(\varvec{\Xi }=(\xi _{ij})\) to be a tridiagonal matrix with \(\xi _{i,i+1}=\xi _{i+1,i}=-\vartheta \) for \(i=1,\ldots ,p-1\), \(\xi _{ii}=1+\vartheta ^2\) for \(i=2,\ldots ,p-1\) and \(\xi _{11}=\xi _{pp}=1\) where \(\vartheta \in (-1, 1)\).

In spatial statistics, it is very common to use a spatial correlation function, such as one from the Matérn class. A conventional spatial correlation function will depend only on the distance, and possibly direction, between sites. However, Schmidt et al. (2011) introduced the idea of recording distances in a space of dimension greater than two, by introducing covariates into the correlation function. A special case, termed the projection model, measures the distance between sites j and k in this augmented space using the Mahalanobis metric with respect to an (unknown) \(c \times c\) symmetric, positive definite matrix \(\varvec{\Theta }\), that is,

Here \(\varvec{x}_j\) is a vector of coordinates for site j, comprising its two spatial coordinates and the values of \(c-2\) meta-covariates. This is then combined with an exponential spatial correlation function to give \(\phi _{jk} = \exp (-d_{C,jk})\). Omitting the spatial coordinates, these ideas can be extended to non-spatial settings if there are meta-covariates associated with the p variables that are thought to influence the relationships between deviations from the mean \((y_{ij} - \mu _j)\) and \((y_{ik} - \mu _k)\) in any vector measurement. Indeed, any Gaussian process correlation matrix could be used for \(\varvec{\Phi }\) which has huge scope for the incorporation of meaningful domain expertise.

More generally, if the distances between variables can be measured by some metric, say \(d_{jk}\), this could be used to structure the prior through the specification \(\phi _{jk} = \exp (-d_{jk} / \vartheta )\). An ecological example is provided in Sect. 6.2 which uses phylogenetic distances between species in a joint model for species occurrence.

3.5 Model and prior for \(\varvec{\Psi }\)

Except in confirmatory factor analysis, an appropriate number of factors is not usually known a priori. Various methods have been proposed in the literature to address this source of uncertainty. This includes techniques to approximate the marginal likelihood of models with different numbers of factors, including path sampling (Dutta and Ghosh 2013) and Chib’s method (Chib et al. 2006). However, these methods are very computationally expensive, requiring separate Monte Carlo simulations for models with \(k=1,\ldots ,H\) factors, where H is the maximum number of factors that a modeller is prepared to entertain. Lopes and West (2004) propose a reversible jump MCMC algorithm but the proposals for transdimensional moves require pilot MCMC runs for models with \(k=1,\ldots ,H\) factors which rapidly becomes untenable for high-dimensional problems. Other methods that attempt to allow simultaneous inference of the number of factors and the model parameters rely on identification of individual zeros in the factor loadings matrix (Frühwirth-Schnatter and Lopes 2010; Conti et al. 2014; Frühwirth-Schnatter et al. 2023). The approach taken here in similar in spirit, relying on the removal of columns whose contribution to \(\varvec{\Omega }\) is negligible.

Due to the challenges in approximating the posterior for k, a recent approach that has become popular relies conceptually on an overfitted factor model (Bhattacharya and Dunson 2011; Legramanti et al. 2020; Schiavon et al. 2022). In theory, infinitely many factors are permitted but cumulative shrinkage priors are used to increasingly shrink columns of the factor loadings matrix towards zero as the column index increases. This allows the infinite factor loadings matrix to be approximated with a finite matrix by omitting columns that have been shrunk close enough to zero. The posterior for the number of non-discarded columns \(k^{*}\) is then used as a proxy for the posterior for k. As such, a single MCMC sample yields information about both the model parameters and the number of latent factors.

A popular increasing shrinkage prior is the multiplicative gamma process (MGP) (Bhattacharya and Dunson 2011). Conditional on a set of hyperparameters, the factor loadings within each column are assumed to be independent normal random variables with zero mean and a column-specific precision parameter. The precisions are a cumulative product of gamma random variables and their prior expectation increases with the column index. A factor is deemed inactive a posteriori if the contribution of its loadings to \(\varvec{\Omega }\) is negligible under a chosen truncation criterion. In order to apply these ideas to our structured prior for the shared variation matrix, we take the among-column scale matrix \(\varvec{\Psi }\) to be diagonal and assign the reciprocals of its elements a MGP prior

in which the \(\varrho _{\ell }\) are independent. To ensure identifiability of the shared variation matrix \(\varvec{\Delta }=\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) and the idiosyncratic variances \(\varvec{\Sigma }\) in the likelihood, we choose to truncate the prior at \(H \le \lceil \varphi (p) \rceil - 1\) where \(\varphi (p)\) was defined in (4). Guidelines on the choice of \(a_1\) and \(a_2\) to ensure the \(1/\psi _h\) are stochastically increasing in a reasonable neighbourhood of zero are provided in Durante (2017).

4 Stationary dynamic factor models

4.1 Bayesian dynamic factor models

In the classic vector autoregressive (VAR) model, the number of parameters grows quadratically with the dimension of the observation vector. In contrast, the number of parameters in a dynamic factor model only grows quadratically with the dimension of the latent factors. This makes them a valuable tool for dimension-reduction in multivariate time series analysis. A dynamic factor model has the same observation equation as a static model,

However, the factors in a dynamic model evolve over time according to an order m vector autoregression (VAR \(\text {(}{m}\text {)}\)). This yields the state equation as

for \(t=1,2,\ldots \) in which the observation and factor innovations \(\varvec{\epsilon }_t\) and \(\varvec{\zeta }_t\) are serially and mutually uncorrelated. As in the static case, it is generally assumed that the common factors explain all of the shared variation and so \(\varvec{\Sigma }\) is taken to be diagonal. Various extensions of this model have been presented, for example, Peña and Poncela (2006) incorporate a moving average component in state equation, Aßmann et al. (2016) introduce lagged factors in (10) and Kaufmann and Schumacher (2019) allow the components of the idiosyncratic innovations to evolve independently as univariate stationary autoregressions.

4.2 A stationary dynamic factor model

The methodology described in this paper relies on the use of a structured prior distribution for the shared variation component of the marginal variance. The marginal variance of a dynamic factor model is only meaningfully defined when the factors evolve according to a stationary process; hereafter we focus only on situations where this is a reasonable assumption. The manner by which stationarity is enforced is discussed in Sect. 4.3.

In order to identify the scale of the loadings matrix in a dynamic factor model, the scale of the factors is normally constrained by fixing \(\varvec{\Pi }=\text {Var}(\varvec{\zeta }_t)=\varvec{I}_k\). However, to retain the interpretation of \(\varvec{\Delta }=\varvec{\Lambda }\varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}\) as a shared variation matrix, we instead constrain the stationary covariance matrix so that \(\varvec{G}_0=\text {Var}(\varvec{\eta }_t)=\varvec{I}_k\) and hence the marginal variance of \(\varvec{y}_t\) remains as \(\varvec{\Omega }=\varvec{\Lambda } \varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}+ \varvec{\Sigma }\). We can therefore assign a prior to the unconstrained factor loadings matrix \(\varvec{\Lambda }\) using precisely the ideas discussed in Sect. 3 for the static model.

To complete specification of the stationary dynamic factor model, the state equation (11) must be initialised at its stationary distribution. To this end, we augment the parameter space with m auxiliary factors at times \(t=1-m, \ldots , 0\). Generalising the definition of the stationary variance \(\varvec{G}_0\) above, we define \(\varvec{G}_i = \text {Cov}(\varvec{\eta }_t, \varvec{\eta }_{t+i})\) as the ith autocovariance of \(\varvec{\eta }_t\). The initial distribution can then be expressed as \((\varvec{\eta }_{1-m}^{\mathrm {\scriptscriptstyle T}}, \ldots , \varvec{\eta }_0^{\mathrm {\scriptscriptstyle T}})^{\mathrm {\scriptscriptstyle T}}\sim \textrm{N}_{km}(\varvec{0}, \varvec{G})\) where \(\varvec{G}\) is a positive definite block Toeplitz matrix with \(\varvec{G}_{j-i}\) as the block in rows \(\{k(i-1)+1\}\) to ki and columns \(\{k(j-1)+1\}\) to kj (\(i,j=1,\ldots ,m\)) and \(\varvec{G}_{-\ell }=\varvec{G}_\ell ^{\mathrm {\scriptscriptstyle T}}\) (\(\ell =1,\ldots ,m-1\)).

4.3 Enforcing the stationarity constraint

Denote by B the backshift operator, \(B \varvec{\eta }_t = \varvec{\eta }_{t-1}\). The VAR model governing evolution of the factors can then be written as \(\varvec{\zeta }_t = (\varvec{I}_k - \varvec{\Gamma }_1 B - \cdots - \varvec{\Gamma }_m B^m) \varvec{\eta }_t = \varvec{\Gamma }(B) \varvec{\eta }_t\) where \(\varvec{\Gamma }_i \in \mathbb {R}^{k \times k}\) for \(i=1,\ldots ,m\) and \(\varvec{\Gamma }(u) = \varvec{I}_k - \varvec{\Gamma }_1 u - \cdots - \varvec{\Gamma }_m u^m\), \(u \in \mathbb {C}\), is termed the characteristic polynomial. The process is stable if and only if all the roots of \(\det \{ \varvec{\Gamma }(u) \} = 0\) lie outside the unit circle. Because all stable processes are stationary and unstable stationary processes are not generally of interest, this subset of the Cartesian product space in which the \(\varvec{\Gamma }_i\) lie is often referred to as the stationary region, \(\mathcal {C}_{m, k}\). Even for the simplest vector case of an order-1 bivariate autoregression, it has a highly complex geometry.

Various authors have considered Bayesian inference for stationary dynamic factor models (e.g. Sáfadi and Peña 2008; Lopes et al. 2008). However, due to the difficulties of designing efficient MCMC samplers with state space constrained to \(\mathcal {C}_{m, k}\), the autoregressive coefficient matrices are often assumed to be diagonal, which simplifies the stationarity condition to that of a univariate autoregression. Fortunately, recent work by Heaps (2023) presents a prior for the autoregressive coefficient matrices that is constrained to \(\mathcal {C}_{m, k}\) and facilitates routine computational inference. This is based on an unconstrained reparameterisation of the model through two bijective transformations. First, the model parameters \(\{ (\varvec{\Gamma }_1, \ldots , \varvec{\Gamma }_m), \varvec{\Pi } \}\) undergo a recursive mapping which yields a new parameter set \(\{ (\varvec{P}_1, \ldots , \varvec{P}_m), \varvec{\Pi } \}\). Here \(\varvec{P}_{i+1}\) is the \((i+1)\)th partial autocorrelation matrix, that is, a standardised version of the conditional cross-covariance between \(\varvec{\eta }_{t+1}\) and \(\varvec{\eta }_{t-i}\) given the i intervening variables \((\varvec{\eta }_{t}, \ldots , \varvec{\eta }_{t-i+1})\). Each partial autocorrelation matrix lies in the space of \(k \times k\) square matrices with singular values less than one. By mapping the singular values of \(\varvec{P}_i\) from [0, 1) to the positive real line, a second mapping then constructs an unconstrained \(k \times k\) square matrix \(\varvec{A}_i\) through \(\varvec{A}_i=(\varvec{I}_k - \varvec{P}_i \varvec{P}_i^{\mathrm {\scriptscriptstyle T}})^{-1/2} \varvec{P}_i\), \(i=1,\ldots ,m\), in which \(\varvec{X}^{-1/2}\) denotes the inverse of the symmetric matrix square root of \(\varvec{X}\). Specification of a prior for the \(\varvec{A}_i\), discussed in Sect. 4.4, and computational inference is now routine owing to the Euclidean geometry of the parameter space.

The inverse mapping from the intermediate reparameterisation \(\{ (\varvec{P}_1, \ldots , \varvec{P}_m), \varvec{\Pi } \}\) to the original parameters \(\{ (\varvec{\Gamma }_1, \ldots , \varvec{\Gamma }_m), \varvec{\Pi } \}\) involves two recursions. The first outputs the stationary covariance matrix \(\varvec{G}_0\) from \(\{ (\varvec{P}_1, \ldots , \varvec{P}_m), \varvec{\Pi } \}\) and the second outputs \((\varvec{\Gamma }_1, \ldots , \varvec{\Gamma }_m)\) (and recovers \(\varvec{\Pi }\)) from \((\varvec{P}_1, \ldots , \varvec{P}_m)\) and \(\varvec{G}_0\). It is therefore trivial to modify the reparameterisation to accommodate the constraint that \(\varvec{G}_0=\varvec{I}_k\); one simply omits the first recursion, and calculates \(\{ (\varvec{\Gamma }_1, \ldots , \varvec{\Gamma }_m), \varvec{\Pi } \}\) from \((\varvec{P}_1, \ldots , \varvec{P}_m)\) and \(\varvec{G}_0=\varvec{I}_k\) in the second recursion. We note that the autocovariance matrices \(\varvec{G}_1, \ldots , \varvec{G}_{m-1}\) needed to characterise the initial distribution of \(\varvec{\eta }_{(1-m):0}\) are also by-products of this second recursion.

When \(\varvec{\eta }_t\) follows a VAR \(\text {(}{1}\text {)}\) process, there is a closed form expression for the original model parameters in terms of \(\varvec{P}_1\) or, equivalently, \(\varvec{A}_1\). Dropping the 1-subscript for brevity, we can write \(\varvec{\Gamma }=\varvec{P}=(\varvec{I}_k+\varvec{A}\varvec{A}^{\mathrm {\scriptscriptstyle T}})^{-1/2} \varvec{A}\) and \(\varvec{\Pi }=\varvec{I}_k-\varvec{P} \varvec{P}^{\mathrm {\scriptscriptstyle T}}=(\varvec{I}_k+\varvec{A} \varvec{A}^{\mathrm {\scriptscriptstyle T}})^{-1}\).

4.4 Prior for the transformed partial autocorrelation matrices

The stationary dynamic factor model described in the previous sections is invariant under rotations of the factors when compensatory transformations are applied to the factor loadings and parameters of the state equation. In particular, marginalising over the common factors, it can readily be shown that the likelihood evaluated at \((\varvec{\mu },\varvec{\Lambda },\varvec{\Sigma },\varvec{A}_1,\ldots ,\varvec{A}_m)\) is the same as the likelihood evaluated at \((\varvec{\mu },\varvec{\Lambda } \varvec{Q}^{\mathrm {\scriptscriptstyle T}},\varvec{\Sigma },\varvec{Q} \varvec{A}_1 \varvec{Q}^{\mathrm {\scriptscriptstyle T}},\ldots ,\varvec{Q} \varvec{A}_m \varvec{Q}^{\mathrm {\scriptscriptstyle T}})\) for any orthogonal matrix \(\varvec{Q} \in \mathcal {O}(k)\). In the absence of any meaningful information to distinguish \(\varvec{A}_i\) from \(\varvec{Q} \varvec{A}_i \varvec{Q}^{\mathrm {\scriptscriptstyle T}}\) a priori, we assign a prior which is rotatable, that is \(\varvec{A}_i\) and \(\varvec{Q} \varvec{A}_i \varvec{Q}^{\mathrm {\scriptscriptstyle T}}\) have the same distribution for any orthogonal matrix \(\varvec{Q}\). To this end, we take the \(\varvec{A}_i\) to be independent with \(\varvec{A}_i \sim \textrm{N}_{k,k}(\varvec{0}, \varvec{I}_k, \varvec{I}_k)\), \(i=1,\ldots ,m\).

5 Posterior computation

5.1 Samplers with fixed truncation level

Consider first the static factor model. For computational inference, we use MCMC to sample from the posterior associated with our unconstrained parameterisation of the model. When the truncation level in the factor loadings matrix is fixed at H, we propose a straightforward Gibbs sampler. The full conditional distributions for most unknowns have standard forms and can be sampled directly. However, depending on the structure chosen for the among-row scale matrix \(\varvec{\Phi }=\varvec{R}(\varvec{\vartheta })\), a semi-conjugate prior for the correlation parameter(s) in \(\varvec{\vartheta }\) is not generally available and so we update \(\varvec{\vartheta }\) in a Metropolis-Hastings step. Computational inference is slightly complicated if a matrix-t, rather than matrix normal, prior is adopted for \(\varvec{\Lambda }\) because it is not conjugate to the likelihood. However, sampling becomes straightforward if we make use of the representation of a matrix-t random variable in (7) and augment the state space of the sampler with the matrix \(\varvec{S}\). The full conditional distribution for \(\varvec{\Lambda }\) is then matrix-normal while the full conditional distribution for \(\varvec{S}\) is Wishart. The only other additional unknown is the degree of freedom parameter \(\varsigma \). If \(\varsigma \) is assigned the prior from Sect. 3.3, its full conditional distribution is non-standard. Rather than updating the transformed degree of freedom parameter \(\check{\varsigma }\) through its own Metropolis-Hastings step, mixing can be greatly improved by performing a joint update of \(\varvec{S}\) and \(\check{\varsigma }\). The proposal density takes the form \(q(\varvec{S}^*, \check{\varsigma }^*| \varvec{S}, \check{\varsigma },\ldots )=q_1(\check{\varsigma }^*| \check{\varsigma })q_2(\varvec{S}^*| \check{\varsigma }^*,\ldots )\) in which \(q_1(\cdot )\) is a random walk proposal on the log-scale and \(q_2(\cdot )\) is the full conditional density for \(\varvec{S}\).

Extension of the Gibbs sampler to handle the dynamic factor model is straightforward. The latent factors \(\varvec{\eta }_{1-m}, \ldots , \varvec{\eta }_n\) can be sampled efficiently from their full conditional distribution in a single block using a variety of sampling approaches (Wilkinson and Yeung 2002, 2004). In the example in Sect. 6.3 we used the forward-filtering backward-sampling algorithm (e.g. Frühwirth-Schnatter 1994) but other methods that rely on banded or sparse matrix algorithms will be more efficient in higher-dimensional applications; see, for example, Chan and Jeliazkov (2009). The full conditional distributions for the transformed partial autocorrelation matrices \(\varvec{A}_1, \ldots , \varvec{A}_m\) are non-standard. Given a truncation level of H, each \(\varvec{A}_i\) contains \(H^2\) parameters and so it is not generally feasible to update the whole matrix in a single Metropolis-Hastings step. We therefore propose dividing the elements of each \(\varvec{A}_i\) into blocks of length b and updating the blocks one-at-a-time. In the application in Sect. 6.3, we found mixing to be greatly improved by using Metropolis-adjusted Langevin (MALA) steps rather than Gaussian random walks.

Given a large enough fixed truncation level \(H \le \lceil \varphi (p) \rceil - 1\), the MGP prior increasingly shrinks the factor loadings in columns with higher indices if the data suggest their likelihood contribution is negligible. At least conceptually, these columns can be discarded. If there are \(m^{[i]}\) discardable columns on iteration i of the MCMC sampler, the effective number of factors is defined as \(k^{*\, [i]}=H - m^{[i]}\). The posterior for the effective number of factors can then be used as a proxy for the posterior for k. There are various truncation criteria for deciding which columns can be omitted. Bhattacharya and Dunson (2011) consider as inactive any factor whose loadings are all within \(\epsilon \) of zero for some chosen threshold, say \(\epsilon =10^{-4}\). Schiavon and Canale (2020) suggest a more interpretable criterion which, unlike the choice of threshold \(\epsilon \), is unaffected by the scale of the data and its dimension. Denote by \(\varvec{\Lambda }_{k^{*}}\) the factor loadings matrix obtained by discarding the columns of \(\varvec{\Lambda }\) from \(k^{*}+1\) onwards. The basic idea is that if \(\varvec{\Omega }_{k^{*}} = \varvec{\Lambda }_{k^{*}} \varvec{\Lambda }_{k^{*}}^{\mathrm {\scriptscriptstyle T}}+ \varvec{\Sigma }\) can explain 100T% of the total variability of the data for some \(T \in (0, 1)\), then one decides there are \(k^{*}\) active factors. The choice of proportion T then replaces the choice of threshold \(\epsilon \). This is the truncation criterion we adopt in the applications in Sect. 6.

When the truncation level H is fixed, an alternative to Gibbs sampling is to implement off-the-shelf computational inference using standard probabilistic programming software. Code for the applications in Sect. 6, written in Stan (Carpenter et al. 2017), is given in the Supplementary Materials. Stan uses Hamiltonian Monte Carlo to perform joint updates of all unknowns and so tends to converge faster and mix better than a Gibbs sampler. The caveat is the necessity to fix H; although this can be addressed in a bespoke implementation of Gibbs sampling (see Sect. 5.2 below), Stan cannot be customised in this way.

5.2 Adaptive Gibbs sampler

Especially in applications where p is large, the number of effective factors is often considerably less than the Ledermann bound \(\varphi (p)\). Therefore fixing a truncation level H which is less than, but in the vicinity of, \(\varphi (p)\) can be computationally inefficient. In the literature on infinite factor models, a common pragmatic solution is to use an adaptive Gibbs sampler which tunes the truncation level H as it proceeds. Adaptation occurs at iteration i with probability \(p(i)=\exp (\alpha _0+\alpha _1 i)\) where \(\alpha _0 \le 0\) and \(\alpha _1 < 0\) such that the probability of adaptation decreases over the course of the simulation. This is necessary to satisfy the diminishing adaptation condition of Roberts and Rosenthal (2007).

During an adaptation step, \(k^{*\, [i]}\) is compared to the current value of H. The basic idea is to delete any inactive factors or to add an extra factor if all H factors are active. In the static model, implementation is straightforward. If \(k^{*\, [i]}<H\), H is reduced to \(k^{*\, [i]}\) and any inactive factors are deleted along with the corresponding columns of \(\varvec{\Lambda }\) and components of \(\varvec{\varrho }\) and \(\varvec{\Psi }\). If \(k^{*\, [i]}=H\) and \(H < \lceil \varphi (p) \rceil - 1\), H is increased by 1 then an extra factor, column of factor loadings, and component of \(\varvec{\varrho }\) are sampled from their priors. In the dynamic model, the adaptation step is slightly more involved because of the additional complexity in the distribution of the latent factors. Full details of the adaptive Gibbs samplers for both static and dynamic models are given in the Supplementary Materials, along with R code for the applications in Sect. 6. We also provide details on how the posterior output can be post-processed to obtain samples under a parameterisation in which the factor loadings matrix is identified by the PLT constraint. This is incredibly valuable for parameter interpretation and the assessment of MCMC diagnostics.

6 Applications

6.1 Simulation experiment

6.1.1 Simulation settings

In order to investigate posterior sensitivity to the prior specification in the idealised setting in which we know the data were generated from a factor model with known shared variation matrix \(\varvec{\Delta }\), we carried out a series of simulation experiments. In the simplified setting in which \(\varvec{\Sigma }=\sigma ^2 \varvec{I}_p\), denote by \(\beta \) the proportion of the total variation in \(\varvec{\Omega } = \varvec{\Delta } + \varvec{\Sigma }\) that is explained by the common factors, that is, \(\beta =\textrm{tr}(\varvec{\Delta }) / \textrm{tr}(\varvec{\Omega })\). Then for given \(\beta \) and \(\varvec{\Delta }\), the corresponding value of \(\sigma ^2\) can be computed as \(\sigma ^2=(1-\beta ) \textrm{tr}(\varvec{\Delta }) / (k \beta )\). Three different combinations of p and k, representing different degrees of dimension reduction, were considered: \((p=24, k=6)\), \((p=48, k=9)\) and \((p=72, k=9)\) so that k/p was equal to 25, 18.75 and 12.5%, respectively. For each combination of p and k, we also considered three values for \(\beta \), \(\beta \in \{0.9, 0.95, 0.99\}\), giving nine sets of values for \(\{(p, k), \beta \}\) in total. We refer to these as simulation settings. Note that we only set \(\varvec{\Sigma }=\sigma ^2 \varvec{I}_p\) for the purposes of simulating the data; for inference, we still allow \(\varvec{\Sigma } = \textrm{diag}(\sigma _1^2, \ldots , \sigma _p^2)\).

Under each simulation setting, we simulated 24 data sets of size \(n=50\) based on an exponential correlation matrix for \(\varvec{\Phi }\), taking \(\varvec{\Delta }\) to be the closest rank-k matrix to \(\varvec{\Phi }\) (see Proposition 1). In the simulation of each data set, in order to fix the value of \(\varvec{\Delta }\), we simulated p pairs of coordinates \(\varvec{x}_j = (x_{j1}, x_{j2})^{\mathrm {\scriptscriptstyle T}}\), \(j=1,\ldots ,p\), by sampling uniformly at random from the unit square, and then set the expected shared variation matrix equal to \(\varvec{\Phi }\) with (j, k) element \(\phi _{jk}=\exp (-\Vert \varvec{x}_j - \varvec{x}_k \Vert _2 / \vartheta )\) where the length-scale parameter was equal to

For a distance of 1 in the unit square, the correlations therefore ranged from 0.05 to 0.20.

6.1.2 Prior distributions

Each data set generated under each simulation setting was used to update five prior distributions for \(\varvec{\Lambda }\). Three are structured matrix normal priors, with different assumptions about \(\varvec{\Phi }\), and two are widely used priors in factor analysis which allow inference on the number of factors:

- SN (expcov.):

-

A structured matrix normal prior in which \(\varvec{\Phi }\) was taken to have the same form as that used to simulate the data, that is, an exponential correlation matrix. The length-scale parameter was taken to be unknown and assigned the distribution \(\log (\vartheta ) \sim \textrm{N}(0, 1)\).

- SN (fixed):

-

A structured matrix normal prior in which \(\varvec{\Phi }\) was taken to have the same form as that used to simulate the data, that is, an exponential correlation matrix. The length-scale parameter was fixed at the value used to simulate the data.

- SN (exch.):

-

A structured matrix normal prior in which \(\varvec{\Phi }\) was taken to have a different form to that used to simulate the data, specifically we took \(\varvec{\Phi }=(1-\vartheta )\varvec{I}_p + \vartheta \varvec{J}_p\), \(-1/(p-1)< \vartheta < 1\), representing a two-parameter exchangeable matrix with trace fixed at p. The correlation parameter was taken to be unknown and assigned the distribution \(\textrm{logit}(\check{\vartheta }) \sim \textrm{N}(0, 1)\) where \(\check{\vartheta } = \{1 + (p-1) \vartheta \} / p\).

- MGP:

-

The original (exchangeable) multiplicative gamma process prior described in Bhattacharya and Dunson (2011), which is a global–local prior. We took \(a_1=1\) and \(a_2=2\) in the multiplicative gamma process for the global precision parameters and \(\nu =3\) in the distribution for the local precision parameters.

- CUSP:

-

A cumulative shrinkage process prior (Legramanti et al. 2020), which is a slab-and-spike prior. We took \(a_{\theta }=b_{\theta }=2\) in the distribution for the slab, \(\theta _{\infty }=0.05\) for the position of the spike and \(\alpha =5\) in the cumulative stick-breaking construction controlling the probability assigned to the spike.

For the three structured matrix normal priors, we chose \(a_1=1\) and \(a_2=2\) in the multiplicative gamma process for the diagonal elements of \(\varvec{\Psi }\). In all cases, the idiosyncratic variances were assigned the prior \(1 / \sigma _j^2 \sim \gamma (1, 0.3)\) independently for \(j=1,\ldots ,p\).

6.1.3 Analysis and results

All analyses were run using an adaptive Gibbs sampler, allowing adaptation after 500 iterations and setting the parameters in the diminishing adaptation condition at \(\alpha _0=-1\) and \(\alpha _1=-5 \times 10^{-4}\). The chains were run for 10K iterations, following a burn-in of 10K, and thinned to retain every fifth sample to reduce computational overheads. For the three structured matrix normal priors and the original MGP prior, we applied the truncation criterion of Schiavon and Canale (2020), retaining as active enough factors to explain at least \(T=0.95\) of the total variation in the data.

The (squared) geodesic distance between two symmetric positive definite matrices \(\varvec{A} \in \mathcal {S}_p^+\) and \(\varvec{B} \in \mathcal {S}_p^+\) is defined as

in which \(\lambda _i(\varvec{X})\) denotes the ith eigenvalue of \(\varvec{X}\) (Lim and Sepulchre 2019). For simulation experiments in which \(\beta =0.99\), Table 1 reports the median and interquartile range across the 24 data sets of the posterior mean of \(d_G(\varvec{\Omega }, \varvec{\Omega }_0)\) where \(\varvec{\Omega }_0\) denotes the value used to simulate the data. Corresponding Tables for the simulations where \(\beta =0.95\) and \(\beta =0.9\) are presented in the Supplementary Materials. We chose the distance metric \(d_G(\cdot ,\cdot )\) because, by construction, \(\varvec{\Omega }\) is close to singular and so the geodesic distance will be better suited than a metric, such as the Frobenius distance, which ignores the geometry of the space. In particular, it will give large values whenever \(\varvec{\Omega }\) and \(\varvec{\Omega }_{0}\) do not approximately span the same subspace of \(\mathbb {R}^{p}\), that is when their null spaces are not approximately the same. We also report the median and interquartile range for the posterior mean of the effective number of factors \(k^*\).

Focusing first on the posterior mean of the geodesic distance \(d_G(\varvec{\Omega }, \varvec{\Omega }_0)\), it is immediately clear from Table 1 and the corresponding tables in the Supplementary Materials that both the medians and interquartile ranges across data sets are typically smallest when we use a prior in which \(\varvec{\Phi }\) is based on an exponential correlation matrix. As we would expect, this is especially true when the length-scale parameter in the prior is fixed at the value used in simulating the data. Nevertheless, the modest differences between posterior inferences under SN (expcov.) and SN (fixed) are reassuring; in the analysis of a real data set, though we may have strong prior beliefs about a suitable structure for \( E (\varvec{\Delta })\), we are likely to be more uncertain about the values of the hyperparameters in that structure. We also observe that the gains from assuming the correct form for \(\varvec{\Phi }\) in this simulation experiment are greatest when the total variation in \(\varvec{\Delta }\) makes up a larger proportion of the total variation in \(\varvec{\Omega }\) (that is, when \(\beta =0.99\) and \(\beta =0.95\)) and when the degree of dimension reduction is larger. This is as expected because in both cases, the prior for \(\varvec{\Lambda }\), and therefore \(\varvec{\Delta }\), is likely to impart more influence on the posterior for \(\varvec{\Omega }\). It is interesting to observe that when we adopt a structured matrix normal prior but assume an incorrect structure for \(\varvec{\Phi }\), the posterior mean for \(d_G(\varvec{\Omega }, \varvec{\Omega }_0)\) still tends to be smaller than under the MGP or CUSP priors. This is likely to be because we simulated all data sets based on exponential correlation matrices for \(\varvec{\Phi }\) which have strictly positive diagonal elements. Under SN (exch.), we are shrinking \(\varvec{\Delta }\) towards a rank-reduced approximation to a two-parameter exchangeable matrix with a common (potentially positive) off-diagonal element whereas the prior expectation for \(\varvec{\Delta }\) under the MGP and CUSP priors is diagonal.

A discussion of the results concerning the posterior mean of the effective number of factors \(k^*\) can be found in the Supplementary Materials.

6.2 Co-occurrence of Finnish birds

6.2.1 Model and prior

As discussed in Sect. 1, most of the literature on prior specification for factor models focuses on constructing a distribution for the factor loadings matrix that is exchangeable with respect to the order of the components in the observation vector. An exception is the structured increasing shrinkage (SIS) process (Schiavon et al. 2022). This prior allows meta-covariates to inform the within-column sparsity structure of the factor loadings matrix in an infinite factorisation model. In this section, we re-examine the Finnish birds data set analysed in Schiavon et al. (2022) to illustrate the extra flexibility afforded by our approach.

The Finnish bird data comprise information on the co-occurrence of the 50 most common bird species in Finland from 137 sampling areas in 2014 (Lindström et al. 2015). The occurrences are arranged into a \(n \times p\) binary matrix \(\varvec{Y}=(y_{ij})\) where \(y_{ij}=1\) if bird species j was observed in area i and \(y_{ij}=0\) otherwise. Information available to explain the variation in the mean \(\varvec{\mu }_i\) at each sampling area include a \(n \times c\) matrix of environmental covariates \(\varvec{W}\) containing measurements on spring temperature and its square and a five-level habitat type (broadleaved forests, coniferous forests, open habitats, urban habitats, wetlands) so that \(c=7\). Information available to structure the prior for the marginal variance \(\varvec{\Omega }\) include a phylogenetic tree, indicating the evolutionary relationships amongst the 50 species, and a \(p \times q\) matrix of meta-covariates \(\varvec{X}\) consisting of two species traits: logarithm of typical body mass and a three-level migratory strategy (short-distance migrant, resident species, long-distance migrant). Following Schiavon et al. (2022), we model species presence or absence using a multivariate probit regression model where \(y_{ij} = \mathbb {I}(z_{ij}>0)\) and we assume the latent variables \(\varvec{z}_i = (z_{i1}, \ldots , z_{ip})^{\mathrm {\scriptscriptstyle T}}\) are such that \(\varvec{z}_i = \varvec{B}^{\mathrm {\scriptscriptstyle T}}\varvec{w}_i + \varvec{\Lambda } \varvec{\eta }_i + \varvec{\epsilon }_i\) for \(i=1,\ldots ,n\). Here \(\varvec{w}_i^{\mathrm {\scriptscriptstyle T}}\) is the ith row of \(\varvec{W}\), \(\varvec{B}=(\beta _{ij})\) is a \(c \times p\) matrix of regression coefficients, and the idiosyncratic variances on the diagonal of \(\text {Var}(\varvec{\epsilon }_i)=\varvec{\Sigma }\) are set equal to 1 to prevent compensatory rescaling of \(\varvec{B}\) and \(\varvec{\Omega }=\varvec{\Lambda }\varvec{\Lambda }^{\mathrm {\scriptscriptstyle T}}+ \varvec{\Sigma }\). Our prior for the coefficients in \(\varvec{B}\) is identical to that of Schiavon et al. (2022) and described in the Supplementary Materials.

The SIS process prior for \(\varvec{\Lambda }\) is built on a series of assumptions of conditional independence. As a consequence, the expectation of the shared variation matrix \(\varvec{\Delta }\) is diagonal, irrespective of the priors assigned to its hyperparameters. Moreover, every factor loading \(\lambda _{ij}\) has a spike-and-slab distribution in which the meta-covariates only enter through the logit of the spike probability. As such, there is no mechanism for incorporating the rich information on evolutionary relationships that is expressed through the phylogenetic tree.

In contrast, using the approach outlined in Sect. 3.4 we can structure the prior for the shared variation so that it incorporates the information from the phylogenetic tree in addition to the two meta-covariates. The former can be encoded as a matrix of phylogenetic distances between the 50 species, whose (i, j)th entry, say \(d_{P,ij}\), is the sum of the branch lengths along the path between the leaves for species i and j. This distance function is a metric over the set of leaves (Steel 2016, Chapter 6). Another measure of the dissimilarity between species can be obtained using the generalised distance function from the projection model in (8). As we only have binary response data, which is unlikely to be as informative as continuous data, we simplify (8) by taking the matrix \(\varvec{\Theta }\) to be diagonal. This yields \(d_{C,ij} = \left\{ \sum _{k=1}^4 (x_{ik} - x_{jk})^2 / \vartheta _k^2 \right\} ^{1/2}\) as a metric for the distance between the meta-covariates for species i and j, where \(x_{i1}\), \(x_{i2}\) and \(x_{i3}\) are indicators for whether species i is a short-distance migrant, resident or long-distance migrant, respectively, while \(x_{i4}\) is the logarithm of typical body mass for species i. Since \(d_{P,ij}\) and \(d_{C,ij}\) are metrics over a set comprising the species and their associated traits, the sum is also a metric. We can therefore take as a generalised measure of distance between species i and j, \(d_{ij} = d_{C,ij} + d_{P,ij} / \vartheta _5\), in which \(\vartheta _i>0\) for \(i=1,\ldots ,5\). So that all the length-scale parameters \(\vartheta _i\) operate on a comparable scale, the meta-covariates and branch lengths are standardised prior to calculating \(d_{C,ij}\) and \(d_{P,ij}\). Finally, we relate the generalised distance \(d_{ij}\) to the among-row scale matrix \(\varvec{\Phi }\) by using the exponential correlation function, \(\phi _{ij}=\exp (-d_{ij})\). We assume the length-scale parameters to be independent in the prior and assign distributions \(\log \vartheta _i \sim \textrm{N}(0, 10)\).

Based on available phylogenetic and ecological information, this prior encourages shrinkage towards a rank-reduced approximation of an expected shared variation matrix \( E (\varvec{\Delta })\) with higher correlations between types of bird that are more closely related in terms of their evolutionary history and species traits. Since we allow the length-scale parameters \(\vartheta _i\) to be unknown we are also able to learn which variables are more important in explaining relationships between species. However, the structure for the expected shared variation matrix represented through \(\varvec{\Phi }\) is conjectural and so we elect to use the matrix-t prior whose extra degree of freedom parameter \(\varsigma \) allows the data to influence the degree of shrinkage. As discussed in Sect. 3.3, we induce a prior for \(\varsigma > 4\) by specifying a distribution for \(\check{\varsigma } = 1 / (\varsigma -4) \in \mathbb {R}^+\). Specifically, we take \(\check{\varsigma } \sim \textrm{Exp}(1)\) which implies that when the number of factors k is 5, 10 and 15, the probability of the scale factor \(s_k(\check{\varsigma })\) lying between 1 and x is 0.75 for \(x=7.8\), \(x=12.9\) and \(x=18.0\), respectively. For the diagonal elements in \(\varvec{\Psi }\), we assign a MGP prior (9) with \(a_1=2\), \(a_2=6\) and a maximum truncation point H that satisfies \(H \le \lceil \varphi (50) \rceil - 1 = 40\).

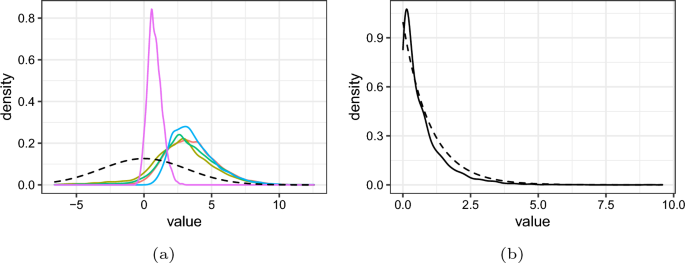

Marginal posterior density for a the logarithms of the length scale parameters \(\vartheta _1\) ( ), \(\vartheta _2\) (

), \(\vartheta _2\) ( ), \(\vartheta _3\) (

), \(\vartheta _3\) ( ), \(\vartheta _4\) (

), \(\vartheta _4\) ( ), \(\vartheta _5\) (

), \(\vartheta _5\) ( ) and b the transformed degree of freedom parameter \(\check{\varsigma }\). Also shown are the priors (

) and b the transformed degree of freedom parameter \(\check{\varsigma }\). Also shown are the priors ( ). (color figure online)

). (color figure online)

6.2.2 Analysis and results

We ran two chains, initialised at different starting points, using the adaptive Gibbs sampler discussed in Sect. 5.2 and another two chains using Stan’s implementation of the (non-adaptive) Hamiltonian Monte Carlo sampler with a fixed truncation level of \(H=10\). In the Gibbs sampler, adaptation was allowed after 500 iterations and, following Schiavon et al. (2022), the parameters in the diminishing adaptation condition were set at \(\alpha _0=-1\) and \(\alpha _1=-5 \times 10^{-4}\). After a burn-in of 20K iterations, each chain was run for a further 20K iterations, thinning to store every fifth draw in order to reduce computational overheads. Using the truncation criterion of Schiavon and Canale (2020), we retain as active enough factors to explain at least \(T=0.999\) of the total variation in the data. The posterior mode (and median) for the number of effective factors in each case was 5 with 95% credible interval (3, 6). Comparing the output of identified parameters across the chains from both samplers using the usual graphical diagnostic checks gave no evidence of any lack of convergence.

To gauge performance against another non-exchangeable prior, the model was also fitted under the SIS process prior using an adaptive Gibbs sampler, with the hyperparameters in the prior and tuning parameters in the sampler set in line with the specification from Section 5 of Schiavon et al. (2022). In this case, more or less all the posterior mass for the number of effective factors was stacked at 4.

The marginal prior and posterior densities for the logarithms of the length-scale parameters \(\vartheta _1,\ldots ,\vartheta _5\) and the transformed degree of freedom parameter \(\check{\varsigma } = 1 / (\varsigma -4)\) under the structured matrix-t prior are displayed in Fig. 1. Absence of a relationship between a component of the generalised distance metric and the expected shared variation between species would be indicated by an infinite value for the corresponding length-scale parameter. Therefore the concentration of all posterior densities in Fig. 1a over a finite interval near the origin highlights the role played by the generalised distance metric in explaining the structure of the shared variation matrix. It is also clear that the phylogenetic distances, whose length-scale parameter is \(\vartheta _5\), have the greatest influence. For the transformed degree of freedom parameter \(\check{\varsigma }\) in Fig. 1b, the similarity between the prior and posterior indicate that the information learned from the data is modest. Nevertheless, the shift in the position of the mode from 0 in the prior to around 0.1 in the posterior suggests that the data support less shrinkage towards the expected shared variation matrix than would be implied by a matrix normal prior for which \(\check{\varsigma }=0\).

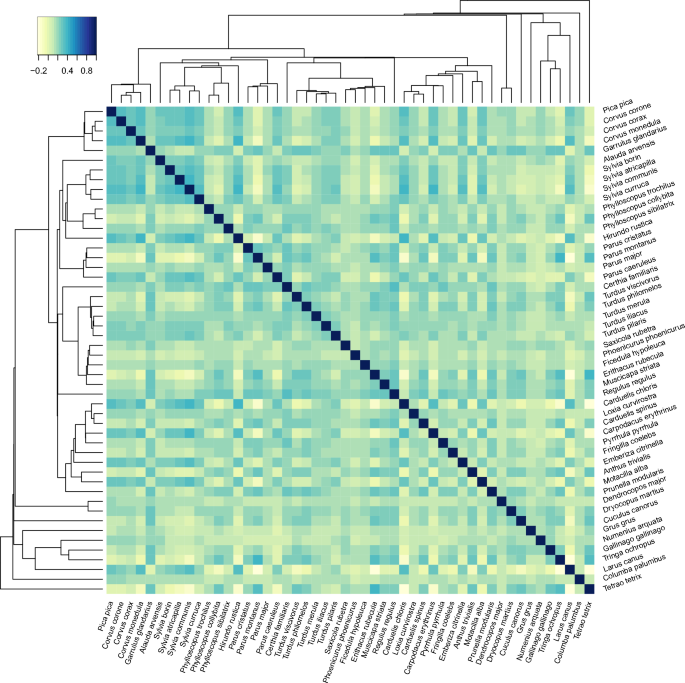

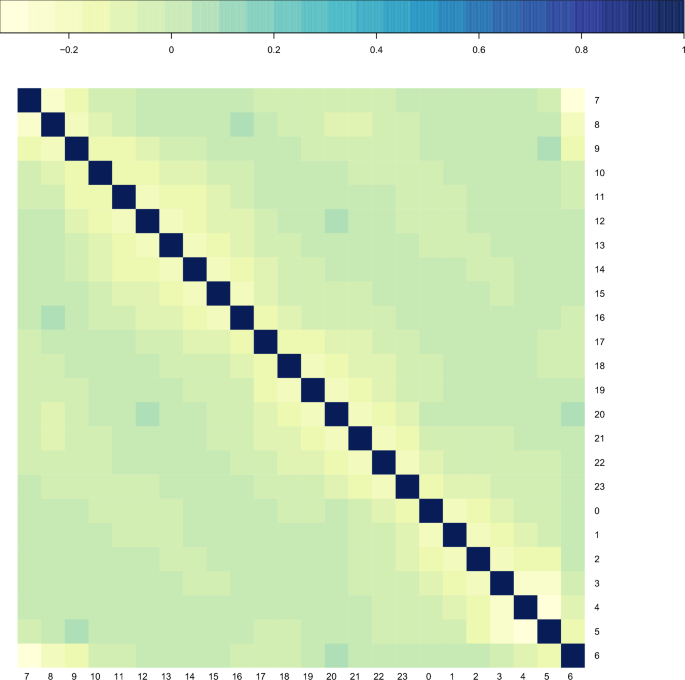

Further qualitative evidence of the importance of the phylogenetic information on the structure of the marginal covariance matrix \(\varvec{\Omega }=(\omega _{ij})\) is demonstrated in Fig. 2 which shows the mean of the posterior for the marginal correlation matrix \(\varvec{S}_{\Omega }^{-1} \varvec{\Omega } \varvec{S}_{\Omega }^{-1}\), where \(\varvec{S}_{\Omega } = \textrm{diag}(\surd \omega _{11}, \ldots , \surd \omega _{pp})\). The order of the 50 bird species is based on their phylogenetic tree, which is shown in the margins of the plot. There is clear structure in the matrix with several groups of species that share a recent common ancestor displaying strong positive correlations. This includes the species in the first ten rows, which correspond to birds from the Corvoidea superfamily and the Sylviidae family; the species in rows 19 to 30, which correspond largely to birds from the Musicapoidea superfamily; and the species in rows 42 to 47, which correspond mostly to birds from the Scolopacidae superfamily. It is also clear that most of the rows and columns predominated by near-zero correlations correspond to basal species, like Columba palumbus and Grus grus. Nevertheless, the structure of the matrix is clearly not dictated by evolutionary proximity alone. Interestingly, the corresponding plot from the analysis under the SIS process prior appears to be very sparse; see the Supplementary Materials. At least in part, this can be attributed to shrinkage of \(\varvec{\Delta }\) towards its prior mean, which is a diagonal matrix.

In order to provide a more formal comparison between the two model-prior combinations, we consider measures of goodness-of-fit and predictive performance. In the former case, we follow Schiavon et al. (2022) by computing the pseudo marginal likelihood (PML), defined as the product of conditional predictive ordinates (Gelfand and Dey 1994). In terms of predictive performance, we consider the Brier score, which is a widely used proper scoring rule for categorical variables, calculated using a 4-fold cross-validation approach (e.g. Gneiting and Raftery 2007). In our case, the score is positively oriented so that large values indicate better performance. The results are shown in Table 2 from which we can conclude that the combination of the factor model and structured matrix-t prior gives the best-fit to the data and, more markedly, the best predictive performance. Further details on the calculation of the PML and Brier score can be found in the Supplementary Materials.

6.3 Hourly demand for natural gas

6.3.1 Model and prior

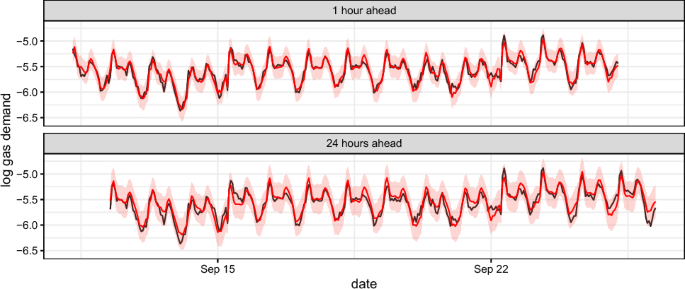

To illustrate the use of our methodology in the context of a dynamic model, we consider an application to modelling the hourly demand for natural gas. In the UK, gas in the national transmission system is transported to individual customers through eight regional distribution networks which are responsible for maintaining a reliable gas supply to customers at all times. This requires accurate short-term forecasts of the hourly demand for gas at individual points in the network called offtakes, where gas is taken off to supply locally. We consider data from a single offtake site in a regional distribution network in the North of England. Hourly gas demand data are available for the period from May 2012 to June 2015 along with the average daily temperature at the offtake site. In addition to the weather, the other main factors that are known to influence the mean level of residential demand for gas are seasonal and calendar effects (Soldo 2012). We therefore additionally incorporate covariates that represent the day of the year, day of the week and whether or not a day is a public holiday.

The measurement of the demand for gas at an hourly resolution makes construction of a model challenging because the underlying dynamics of the process are likely to vary over a much longer time scale. For example, consider a dynamic linear model for hourly gas demand that allows for calendar and weather effects and incorporates a time-varying local level. Suppose that this local level is modelled as evolving according to a random walk or autoregression. Deviations from behaviour that would be considered typical given the calendar and weather effects would likely persist over days, rather than hours, and so hourly evolution of the local level would demand a near-zero innovation variance. Similarly, if calendar effects such as the day of the week, were regarded as dynamic, hourly evolution would demand vanishingly small innovation variances.