US20140228701A1 - Brain-Computer Interface Anonymizer - Google Patents

Brain-Computer Interface Anonymizer

- Publication number

- US20140228701A1 (application number US14/174,818)

- Authority

- US

- United States

- Prior art keywords

- brain

- bci

- neural signals

- computer interface

- anonymized

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Abandoned


Classifications

- A61B5/04012

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B5/00—Measuring for diagnostic purposes; Identification of persons

- A61B5/24—Detecting, measuring or recording bioelectric or biomagnetic signals of the body or parts thereof

- A61B5/316—Modalities, i.e. specific diagnostic methods


- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/01—Input arrangements or combined input and output arrangements for interaction between user and computer

- G06F3/011—Arrangements for interaction with the human body, e.g. for user immersion in virtual reality

- G06F3/015—Input arrangements based on nervous system activity detection, e.g. brain waves [EEG] detection, electromyograms [EMG] detection, electrodermal response detection

- A61B5/0478

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B5/00—Measuring for diagnostic purposes; Identification of persons

- A61B5/24—Detecting, measuring or recording bioelectric or biomagnetic signals of the body or parts thereof

- A61B5/25—Bioelectric electrodes therefor

- A61B5/279—Bioelectric electrodes therefor specially adapted for particular uses

- A61B5/291—Bioelectric electrodes therefor specially adapted for particular uses for electroencephalography [EEG]


- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B19/00—Programme-control systems

- G05B19/02—Programme-control systems electric

- G05B19/18—Numerical control [NC], i.e. automatically operating machines, in particular machine tools, e.g. in a manufacturing environment, so as to execute positioning, movement or co-ordinated operations by means of programme data in numerical form

- G05B19/409—Numerical control [NC], i.e. automatically operating machines, in particular machine tools, e.g. in a manufacturing environment, so as to execute positioning, movement or co-ordinated operations by means of programme data in numerical form characterised by using manual data input [MDI] or by using control panel, e.g. controlling functions with the panel; characterised by control panel details or by setting parameters


- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F21/00—Security arrangements for protecting computers, components thereof, programs or data against unauthorised activity

- G06F21/60—Protecting data

- G06F21/62—Protecting access to data via a platform, e.g. using keys or access control rules

- G06F21/6218—Protecting access to data via a platform, e.g. using keys or access control rules to a system of files or objects, e.g. local or distributed file system or database

- G06F21/6245—Protecting personal data, e.g. for financial or medical purposes

- G06F21/6254—Protecting personal data, e.g. for financial or medical purposes by anonymising data, e.g. decorrelating personal data from the owner's identification

Definitions

- A brain-computer interface (BCI) is a communication system between the brain and the external environment. In this system, messages between an individual and the external world do not pass through the brain's normal pathways of peripheral nerves and muscles. Instead, messages are typically encoded in electrophysiological signals.

- Brain-computer interfaces can be classified as invasive, involving implantation of devices (e.g., electrodes) into the brain; partially invasive, involving implantation of devices into the skull surrounding the brain; or non-invasive, involving devices that can be removed, i.e., no devices are implanted into the brain or the skull.

- Brain-computer interfaces were first developed for assistance, augmentation and repair of cognitive and sensorimotor capabilities of people with severe neuromuscular disorders, such as spinal cord injuries or amyotrophic lateral sclerosis. More recently, however, BCIs have had a surge in popularity for non-medical uses, such as gaming, entertainment and marketing.

- Commonly supported BCI applications include (i) accessibility tools, such as mind-controlled computer inputs like a mouse or a keyboard, (ii) “serious games”, i.e., games with a purpose other than pure entertainment, such as attention and memory training, and (iii) “non-serious” games for pure entertainment. Other applications are possible as well.

- EEG: electroencephalography

- Event-related potentials (ERPs) are neurophysiological phenomena that can be measured by EEG.

- An ERP is defined as a brain response to a direct cognitive, sensory or motor stimulus, and it is typically observed as a pattern of signal changes after the external stimulus.

- An ERP waveform consists of several positive and negative voltage peaks related to a set of underlying components. Some of these components are caused by “higher” brain processes involving memory, attention, or expectation.

- ERP components can be used to infer information about a person's personality, memory, and preferences. For example, data about the P300 ERP component has been used to recognize the person's name in a random sequence of personal names, to discriminate familiar from unfamiliar faces, and for lie detection. As another example, data about the N400 ERP component has been used to infer what the person was thinking about after he or she was primed with a specific set of words.

- A brain-computer interface receives a plurality of brain neural signals.

- The plurality of brain neural signals are based on electrical activity of a brain of a user, and the plurality of brain neural signals include signals related to a BCI-enabled application.

- The brain-computer interface determines one or more features of the plurality of brain neural signals related to the BCI-enabled application.

- A BCI anonymizer of the brain-computer interface generates anonymized neural signals by at least filtering the one or more features to remove privacy-sensitive information.

- The brain-computer interface generates one or more application commands for the BCI-enabled application from the anonymized neural signals.

- The brain-computer interface sends the one or more application commands.

- In another aspect, a brain-computer interface includes a signal acquisition component and a signal processing component.

- The signal acquisition component is configured to receive a plurality of brain neural signals based on electrical activity of a brain of a user.

- The plurality of brain neural signals includes signals related to a BCI-enabled application.

- The signal processing component includes a feature extraction component, a BCI anonymizer, and a decoding component.

- The feature extraction component is configured to determine one or more features of the plurality of brain neural signals related to the BCI-enabled application.

- The BCI anonymizer is configured to generate anonymized neural signals by at least filtering the one or more features to remove privacy-sensitive information.

- The decoding component is configured to generate one or more application commands for the BCI-enabled application from the anonymized neural signals.

- An article of manufacture includes a non-transitory tangible computer-readable medium configured to store at least executable instructions, wherein the executable instructions, when executed by a processor of a brain-computer interface, cause the brain-computer interface to perform functions.

- The functions include: determining one or more features of a plurality of brain neural signals related to a BCI-enabled application; generating anonymized neural signals by at least filtering the one or more features to remove privacy-sensitive information; generating one or more application commands for the BCI-enabled application from the anonymized neural signals; and sending the one or more application commands from the brain-computer interface.

- The herein-disclosed brain-computer interface provides at least the advantage of enabling a BCI user to control private information that might otherwise be obtained via the BCI.

- The brain-computer interface uses a BCI anonymizer to prevent the release of privacy-sensitive information.

- Control of information is provided on a per-component basis, where an example component is an event-related potential (ERP) component.

- ERP: event-related potential

- The BCI anonymizer both protects brain-computer interface users and aids adoption of BCIs by minimizing the user risks associated with brain-computer interfaces.
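The method and component aspects summarized above (feature extraction, filtering by a BCI anonymizer, and decoding into application commands) can be pictured as a small streaming pipeline. This is a minimal Python sketch under stated assumptions, not the patent's implementation: the `Window` and `Features` shapes, the `BCIPipeline` class, the allowlist-based `anonymize` step, and the toy extractor and decoder are all hypothetical stand-ins, and taking channel means is not a real ERP detector.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Iterator, List, Set

# Hypothetical data shapes for this sketch (not defined by the source):
Window = List[List[float]]   # one short block of samples, channels x samples
Features = Dict[str, float]  # named ERP-component features, e.g. {"N100": -2.0}


@dataclass
class BCIPipeline:
    extract_features: Callable[[Window], Features]  # feature extraction component
    allowed_components: Set[str]                     # components the anonymizer releases
    decode: Callable[[Features], str]                # decoding component

    def anonymize(self, features: Features) -> Features:
        """Drop every feature whose component is not explicitly allowed."""
        return {name: value for name, value in features.items()
                if name in self.allowed_components}

    def run(self, windows: Iterable[Window]) -> Iterator[str]:
        """Turn each window into one application command.

        Windows are processed one at a time and the pipeline keeps no copy
        of them; only the decoded commands leave the pipeline.
        """
        for window in windows:
            yield self.decode(self.anonymize(self.extract_features(window)))


# Usage with toy stand-ins (illustrative only, not real ERP detection):
def toy_extract(window: Window) -> Features:
    # Pretend the mean of channel 0 is a P300 amplitude and channel 1 an N100.
    return {"P300": sum(window[0]) / len(window[0]),
            "N100": sum(window[1]) / len(window[1])}


def toy_decode(features: Features) -> str:
    # A hypothetical game action triggered by a strongly negative N100.
    return "jump" if features.get("N100", 0.0) < -1.0 else "idle"


pipeline = BCIPipeline(toy_extract, {"N100"}, toy_decode)
windows = [[[5.0, 5.0], [-2.0, -2.0]],
           [[0.0, 0.0], [0.5, 0.5]]]
commands = list(pipeline.run(windows))  # ["jump", "idle"]; P300 never reaches toy_decode
```

Because `anonymize` sits between extraction and decoding, the decoder (and any application behind it) only ever sees the allowed components, which mirrors the per-component control described above.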

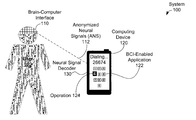

- FIG. 1A illustrates a system where a user is using a brain-computer interface to communicate with a computing device, in accordance with an embodiment.

- FIG. 1B illustrates another system where a user is using a brain-computer interface to communicate with a computing device, in accordance with an embodiment.

- FIG. 1C illustrates a system where a user is using a brain-computer interface to communicate with a robotic device, in accordance with an embodiment.

- FIG. 2A shows an example EEG graph, in accordance with an embodiment.

- FIG. 2B shows a graph of simulated voltages over time indicating various ERPs, in accordance with an embodiment.

- FIG. 2C shows a graph of example P300 ERP potentials plotted with respect to time, in accordance with an embodiment.

- FIG. 3 is a block diagram of a system for transforming brain neural signals to application-specific operations, in accordance with an embodiment.

- FIG. 4 is a flowchart of a calibration method, in accordance with an embodiment.

- FIG. 5A is a block diagram of an example computing network, in accordance with an embodiment.

- FIG. 5B is a block diagram of an example computing device, in accordance with an embodiment.

- FIG. 6 is a flowchart of an example method, in accordance with an embodiment.

- A person using a brain-computer interface can put their privacy at risk.

- Research has been directed to potential benefits of using brain neural signal data for user identification (i.e., selecting a user's identity out of a set of identities) and authentication (i.e., verifying that a claimed identity is valid), based on the observation that the brain neural signals of each individual are unique.

- EEG signals, such as those captured by a brain-computer interface, have been shown to be particularly useful for these applications. That is, the EEG signals captured from a user of a brain-computer interface could be used to identify the user and/or authenticate the user's identity, perhaps even without the user's consent.

- EEG signals can also be used to extract private information about a user. With sufficient computational power, this information can be exploited to determine privacy-sensitive information, such as the memories, intentions, conscious and unconscious interests, and emotional reactions of a brain-computer interface user.


- Brain spyware for brain-computer interfaces has been developed that uses ERP components, particularly the P300 ERP component, to extract privacy-sensitive data about a person's finances, biographical details, and recognized colleagues.

- Some examples can illustrate some uses, both legitimate and malicious, of privacy-sensitive information obtainable from brain-computer interfaces:

- A BCI anonymizer can be used to address these privacy concerns.

- The BCI anonymizer includes software, and perhaps hardware, for decomposing brain neural signals into a collection of characteristic signal components.

- The BCI anonymizer can extract information corresponding to intended BCI commands and remove private side-channel information from these signal components. Then, the BCI anonymizer can provide a suitably configured application with only the signal components related to the BCI commands for the application. That is, the BCI anonymizer can provide BCI-command-related information to an application without providing additional privacy-sensitive information.

- The BCI anonymizer can process brain neural signals in real time and provide only the signal components required by the application, rather than the entire brain neural signal. This real-time approach mitigates privacy attacks that might occur during storage, transmission, or data manipulation by a BCI application. For example, if complete brain neural signals were transmitted, an eavesdropper could intercept the transmission, save the brain neural signals, and decompose the saved signals to obtain privacy-sensitive information. Thus, real-time operation of the BCI anonymizer can significantly decrease the risk to privacy-sensitive information, since complete brain neural signals are neither stored by nor transmitted from the BCI anonymizer.

- The BCI anonymizer reduces, if not eliminates, the risk to privacy-sensitive information by removing side-channel information about additional ERP components not related to the BCI commands. Such side-channel information could otherwise be used to increase the success rate of extracting privacy-sensitive information.

- The BCI anonymizer can provide information only about specifically authorized ERP components and/or filter out information about other ERP components.

- The BCI anonymizer can be configured to (a) provide information about specific ERP component(s) that may be tied to specific applications, e.g., information about the ERN (error-related negativity) component to a document-management program or information about the N100 component to a game, (b) exclude information about specific ERP component(s), e.g., exclude information about the P300 component, and/or (c) provide information about ERP component(s) after specific authorization by the user.

- The BCI anonymizer protects brain-computer interface users from unintentionally providing privacy-sensitive information.

- The BCI anonymizer acts in real time to provide requested ERP component information without sharing or storing complete brain neural signals. By providing this protection, the BCI anonymizer can ensure private thoughts remain private even during use of the brain-computer interface. This assurance can reduce the risks of using brain-computer interfaces and speed their adoption and use.
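The configuration options (a) through (c) above could be expressed as a per-component release policy. In this hypothetical sketch, `AnonymizerPolicy`, the application names `"docs"` and `"game"`, and the feature values are illustrative placeholders rather than measurements; the precedence rule (an explicit user authorization overrides an exclusion, which overrides a per-application default) is one assumed design choice that the description leaves open.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class AnonymizerPolicy:
    # (a) components each application may receive by default
    per_app: Dict[str, Set[str]] = field(default_factory=dict)
    # (b) components withheld from every application by default
    excluded: Set[str] = field(default_factory=set)
    # (c) (application, component) pairs the user has explicitly authorized
    user_authorized: Set[Tuple[str, str]] = field(default_factory=set)

    def authorize(self, app: str, component: str) -> None:
        """Record an explicit user authorization for one component and app."""
        self.user_authorized.add((app, component))

    def permitted(self, app: str, component: str) -> bool:
        # Assumed precedence: explicit user authorization beats exclusion,
        # and exclusion beats the per-application default.
        if (app, component) in self.user_authorized:
            return True
        if component in self.excluded:
            return False
        return component in self.per_app.get(app, set())

    def filter(self, app: str, features: Dict[str, float]) -> Dict[str, float]:
        """Release only the component features this app is permitted to see."""
        return {c: v for c, v in features.items() if self.permitted(app, c)}


policy = AnonymizerPolicy(per_app={"docs": {"ERN"}, "game": {"N100", "P300"}},
                          excluded={"P300"})
features = {"ERN": -3.2, "N100": -1.5, "P300": 6.0}  # toy placeholder values
before = policy.filter("game", features)  # P300 withheld by the exclusion
policy.authorize("game", "P300")
after = policy.filter("game", features)   # P300 released after user authorization
docs = policy.filter("docs", features)    # only ERN for the document manager
```

Keeping the policy separate from feature extraction lets the same anonymizer serve several applications, each seeing only the components it has been granted.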

- A brain-computer interface can be used to decode (or translate) electrophysiological signals reflecting activity of the central nervous system into a user's intended messages that act on the external world.

- A brain-computer interface can act as a communication system, with inputs about a user's neural activity, outputs related to external-world commands, and components decoding inputs to outputs.

- A brain-computer interface can include electrodes, as well as signal acquisition and signal processing components for decoding inputs to outputs. Brain-computer interfaces often have relatively low transmission rates, e.g., between 10 and 25 bits/minute.

- Brain-computer interfaces can be attacked to obtain information, such as privacy-sensitive information.


- Brain-computer interfaces, such as non-invasive brain-computer interfaces intended for consumer use, can be exploited to extract privacy-sensitive information.

- Two types of attackers can use brain-computer interfaces to extract privacy-sensitive information; the two types differ in the way an attacker analyzes recorded brain neural signals.

- The first type of attacker extracts users' private information by hijacking the legitimate components of the brain-computer interface, e.g., by exploiting legitimate outputs of the brain-computer interface for the attacker's own purposes.

- The second type of attacker extracts users' private information by adding or replacing legitimate brain-computer interface components.

- The second type of attacker can implement additional feature extraction and/or decoding algorithms, and either replace or supplement the existing BCI components with the additional malicious code.

- The difference between the two attacker types lies only in the structure of the malicious component: the first type of attacker attacks outputs produced by the brain-computer interface, while the second type of attacker attacks the brain-computer interface components.

- The attacker can interact with users by presenting them with specific sets of stimuli and recording their responses to the presented stimuli.

- Some example techniques by which the attacker can present stimuli to users include:

- An attacker can use these techniques, and perhaps others, to facilitate extraction of private information.

- An attacker can present malicious stimuli in an overt (conscious) fashion, as well as in a subliminal (unconscious) way, with subliminal stimulation defined as the process of affecting people by visual or audio stimuli of which they are completely unaware; e.g., the attacker can reduce a stimulus's intensity or duration below the level required for the user's conscious awareness.

- Each brain-computer interface further includes a BCI anonymizer.

- A BCI anonymizer can thwart attackers such as those discussed above, and so enhance neural privacy and security.

- The BCI anonymizer can pre-process brain neural signals before they are stored or transmitted, so that only information related to intended BCI commands is communicated.

- The BCI anonymizer can prevent unintended information leakage by operating in real time, without transmitting or storing raw brain neural signals or signal components that are not explicitly needed to produce legitimate application-related data, e.g., commands to the application generated by the brain-computer interface.

- FIG. 1A illustrates system 100 with user 102 using brain-computer interface 110 to communicate with computing device 120.

- FIG. 1A shows that user 102 is in the process of making a phone call using BCI-enabled application 122 of computing device 120.

- User 102 has dialed the digits “26674” as displayed by application 122, which also indicates operation 124 of dialing the digit “4”.

- Brain-computer interface 110 can include electrodes that capture brain neural signals generated by a brain of user 102, as shown in FIG. 1A.

- Brain-computer interface 110 can convert analog brain neural signals obtained via the included electrodes to digital brain neural signals, anonymize the digital brain neural signals using the BCI anonymizer of brain-computer interface 110, and send anonymized neural signals (ANS) 112 to computing device 120.

- ANS: anonymized neural signals

- Anonymized neural signals 112 can be correlated to information for an application of computing device 120, such as BCI-enabled application 122.

- BCI-enabled application 122 can include, or communicate with, neural signal decoder 130.

- Neural signal decoder 130 can decode anonymized neural signals 112 into commands recognizable by BCI-enabled application 122.

- The commands can correspond to application operations, such as touching a specific digit on a keypad, e.g., the keypad displayed by BCI-enabled application 122 and shown in FIG. 1A.

- FIG. 1A shows example operation 124 of touching the number “4” on the keypad.

- BCI-enabled application 122 can act as if user 102 touched the number “4” on the keypad with a finger. That is, BCI-enabled application 122 can update the display of the keypad to show the touch of the number “4” and add the digit 4 to the digits dialed, as shown on computing device 120.

- FIG. 1B illustrates system 150 with user 152 using brain-computer interface 160 to communicate with computing device 170.

- FIG. 1B shows that user 152 is playing a game using application 172 of computing device 170.

- User 152 can generate analog brain neural signals using brain-computer interface 160.

- Brain-computer interface 160 can include electrodes that can acquire brain neural signals from the brain of a user, such as user 152.

- Brain-computer interface 160 can convert analog brain neural signals obtained via the included electrodes to digital brain neural signals, anonymize the digital brain neural signals using the BCI anonymizer of brain-computer interface 160, decode the anonymized brain neural signals to application commands 162, and send application commands 162 to computing device 170.

- Computing device 170 can provide application commands 162 to application 172 so that user 152 can play the game provided by application 172.

- Users can use brain-computer interfaces to communicate with devices other than computing devices 120 and 170, such as, but not limited to, remotely-controllable devices and robotic devices.

- FIG. 1C illustrates systems 180a, 180b with user 182 using brain-computer interface 190 to communicate with robotic device 194.

- System 180a, shown in the upper portion of the sheet for FIG. 1C, shows user 182 using brain-computer interface 190 to communicate with robotic device 194.

- Brain-computer interface 190 can convert analog brain neural signals obtained via the included electrodes to digital brain neural signals, anonymize the digital brain neural signals using the BCI anonymizer of brain-computer interface 190, and send anonymized neural signals 192 to robotic device 194.

- Anonymized neural signals 192 can be correlated to control information for robotic device 194 using neural signal decoder 196. That is, neural signal decoder 196 can decode anonymized neural signals 192 into robotic operations recognizable by robotic device 194.

- The robotic operations can include, but are not limited to: (1) moving the robot in a direction, e.g., left, right, forward, backward, up, down, north, south, east, or west; (2) rotating the robot in a direction; (3) moving an end effector, e.g., an arm of the robot, in a direction; (4) rotating an end effector in a direction; (5) operating the end effector, e.g., opening a hand, closing a hand, or rotating an end effector; (6) powering the robot up or down; and (7) providing maintenance/status information about the robot, e.g., information to diagnose and repair the robot, or battery/power level information.

- User 182 uses brain-computer interface 190 to provide anonymized neural signals 192 to robotic device 194 corresponding to a robotic operation to move robotic device 194 closer to user 182, e.g., move in a westward direction.

- System 180b shows a configuration after robotic device 194 has carried out robotic operation 198 to move westward toward user 182.
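The seven categories of robotic operations listed above map naturally onto an enumeration, with a decoder choosing one command among candidates. This sketch assumes, hypothetically, that neural signal decoder 196 produces a score for each candidate command; `RoboticOperation`, `RobotCommand`, `decode`, and the scores are illustrative placeholders, not the patent's design.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Dict


class RoboticOperation(Enum):
    MOVE = auto()                  # (1) move the robot in a direction
    ROTATE = auto()                # (2) rotate the robot
    MOVE_END_EFFECTOR = auto()     # (3) move an end effector, e.g. an arm
    ROTATE_END_EFFECTOR = auto()   # (4) rotate an end effector
    OPERATE_END_EFFECTOR = auto()  # (5) e.g. open or close a hand
    POWER = auto()                 # (6) power the robot up or down
    STATUS = auto()                # (7) maintenance/status information


@dataclass(frozen=True)  # frozen makes commands hashable, so they can be dict keys
class RobotCommand:
    operation: RoboticOperation
    argument: str = ""  # e.g. "west", "open", "battery"


def decode(scores: Dict[RobotCommand, float]) -> RobotCommand:
    """Hypothetical decoder: choose the command with the highest classifier score."""
    return max(scores, key=lambda command: scores[command])


# Placeholder scores; a real decoder would derive them from anonymized signals.
scores = {
    RobotCommand(RoboticOperation.MOVE, "west"): 0.8,
    RobotCommand(RoboticOperation.MOVE, "east"): 0.1,
    RobotCommand(RoboticOperation.STATUS, "battery"): 0.1,
}
chosen = decode(scores)  # the westward move, as in the FIG. 1C example
```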

- A brain-computer interface with a BCI anonymizer can also include electrodes to capture electrical indicia of brain neural signals, as well as signal acquisition and signal processing components for decoding (translating) inputs, such as the captured electrical indicia of brain neural signals, to outputs, such as anonymized neural signals (as discussed with respect to FIGS. 1A and 1C) and/or application commands (as discussed with respect to FIG. 1B).

- FIG. 2A shows example EEG graph 210.

- Brain neural signals generated in response to various stimuli can be collected.

- The brain neural signals can be collected as a number of time series; e.g., using a 16-channel EEG-based brain-computer interface, 16 different time series can be collected, such as time series 212 of FIG. 2A.

- Each time series can represent voltages, or other electrical characteristics, over time, where the voltages can be generated by neurons of a human brain as brain neural signals, and where the brain neural signals can be collected by electrode(s) of the brain-computer interface located over specific portion(s) of the human brain.

- the signal acquisition component can prepare input brain neural signals for signal processing.

- the signal acquisition component can amplify and/or otherwise condition input analog brain neural signals, convert the analog signals to digital, and perhaps preprocess either the analog brain neural signals or the digital brain neural signals for signal processing.

- acquired time series can be preprocessed.

- Preprocessing a time series can include adjusting the time series based on a reference value.

- Other types of preprocessing of time series, analog brain neural signals, and/or digital brain neural signals are possible as well.

- preprocessing occurs during signal acquisition.

- preprocessing occurs during signal processing, while in still other embodiments, preprocessing occurs during both signal acquisition and signal processing.

- the time series can represent repeated actions; e.g., for EEG signals captured after a brain-computer interface user was presented with the above-mentioned sequence of stimuli, the EEG signals can be segmented into time-based trials and the signals for multiple trials can be averaged. Multiple-trial averaging can be carried out by the signal acquisition component and/or the signal processing component.
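
- Segmenting a recording into stimulus-locked trials and averaging them can be sketched as follows, assuming a single channel sampled at `fs` Hz and stimulus onsets given as sample indices. The function name `segment_and_average` and the default 100 ms pre-stimulus / 800 ms post-stimulus window are hypothetical; averaging helps because activity time-locked to the stimulus adds coherently while unrelated background activity tends to cancel.

```python
import numpy as np


def segment_and_average(signal, stimulus_onsets, fs, pre_s=0.1, post_s=0.8):
    """Cut one channel into stimulus-locked trials and return (trials, average)."""
    signal = np.asarray(signal, dtype=float)
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    trials = []
    for onset in stimulus_onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(signal):
            continue  # skip trials that run off either end of the recording
        trials.append(signal[start:stop])
    if not trials:
        raise ValueError("no complete trials in the recording")
    trials = np.vstack(trials)          # shape: (n_trials, pre + post)
    return trials, trials.mean(axis=0)  # multiple-trial average
```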

- the signal processing component can have a feature extraction component, a decoding component, and a BCI anonymizer.

- the brain-computer interface generally, and a BCI anonymizer of the brain-computer interface specifically, can be calibrated for a user of a brain-computer interface.

- the time series can be filtered; e.g., to isolate ERP components.

- ERPs can be relatively low-frequency phenomena; e.g., less than 30 Hz.

- ERPs can have a frequency less than a predetermined number of occurrences per second, where the predetermined number can depend on a specific ERP.

- high-frequency noise can be filtered out from time series by a low-pass filter.

- the specific low-pass filter can be determined by the parameters of the ERP component; e.g., N100, P300, N400, P600 and ERN ERP components.
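
- A minimal low-pass filtering sketch using SciPy, assuming the 30 Hz upper bound mentioned above. The fourth-order Butterworth design and the zero-phase `filtfilt` call are assumptions rather than the disclosed filter; zero-phase filtering is chosen here so that ERP peak latencies are not shifted, and `lowpass_erp` is a hypothetical name.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def lowpass_erp(x, fs, cutoff_hz=30.0, order=4):
    """Zero-phase Butterworth low-pass filter for one EEG time series."""
    b, a = butter(order, cutoff_hz, btype="low", fs=fs)
    # filtfilt runs the filter forward and backward, cancelling phase delay.
    return filtfilt(b, a, np.asarray(x, dtype=float))
```

- In practice the cutoff would be chosen per ERP component, as the text notes.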

- Other filters can be applied as well; e.g., filters to remove eye-blink and/or other movement data that can obscure ERP components. Filtering can be performed during signal acquisition and/or during feature extraction.

- the feature extraction component can process recorded brain neural signals in order to extract signal features that are assumed to reflect specific aspects of a user's current mental state, such as ERP components.

- FIG. 2B shows graph 220 of simulated voltage data from an EEG channel graphed over time indicating various ERPs.

- Graph 220 simulates a reaction observed by an electrode of a brain-computer interface after a stimulus was generated at a baseline time of 0 milliseconds (ms).

- Graph 220 includes waveform 222 with peaks of positive voltage at approximately 100 ms, 300 ms, and 400 ms, as well as valleys of negative voltage at approximately 200 ms and 600 ms. Each of these peaks and valleys can represent an ERP.

- Naming of ERPs can include a P for a positive (peak) voltage or N for a negative (valley) voltage and a value indicating an approximate number of ms after a stimulus; e.g., an N100 ERP would represent a negative voltage about 100 ms after the stimulus.

- the positive peaks of waveform 222 of FIG. 2B at approximately 100 ms, 300 ms, and 400 ms can respectively represent a P100 ERP, a P300 ERP, and a P400 ERP, and the valleys of waveform 222 at approximately 200 ms and 600 ms can respectively represent an N200 ERP and an N600 ERP.
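
- The naming convention can be applied mechanically to an averaged waveform. In this sketch, the hypothetical `name_erp_peaks` function locates local maxima and minima with SciPy's `find_peaks`, ignores extrema smaller than an assumed `min_abs` threshold, and rounds each latency to the nearest 100 ms, a simplification that matches the approximate latencies used for FIG. 2B.

```python
import numpy as np
from scipy.signal import find_peaks


def name_erp_peaks(waveform, times_ms, min_abs=0.5):
    """Label extrema as P<latency> (positive) or N<latency> (negative), in time order."""
    w = np.asarray(waveform, dtype=float)
    pos, _ = find_peaks(w, height=min_abs)    # positive peaks above min_abs
    neg, _ = find_peaks(-w, height=min_abs)   # valleys deeper than -min_abs
    labeled = sorted([(i, "P") for i in pos] + [(i, "N") for i in neg])
    names = []
    for idx, polarity in labeled:
        latency = int(round(times_ms[idx] / 100.0)) * 100  # nearest 100 ms
        names.append(f"{polarity}{latency}")
    return names
```

- For a simulated waveform like waveform 222, with bumps at 100, 200, 300, 400 and 600 ms, this yields `['P100', 'N200', 'P300', 'P400', 'N600']`.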

- ERP components can broadly be classified into: (a) visual sensory responses, (b) auditory sensory responses, (c) somatosensory, olfactory and gustatory responses, (d) language-related ERP components, (e) error detection, and (f) response-related ERP components.

- certain ERP components can be considered to relate to privacy-sensitive information, including but not limited to an N100 ERP component, a P300 ERP component, an N400 ERP component, a P600 ERP component, and an error-related negativity (ERN) ERP component.

- a P300 ERP component, typically observed over the parietal cortex as a positive peak about 300 milliseconds after a stimulus, is typically elicited as a response to infrequent or particularly significant auditory, visual, or somatosensory stimuli when these are interspersed with frequent or routine stimuli.

- One of the important advantages of a P300-based brain-computer interface is that the P300 is a typical, or naive, response to a desired choice, thus requiring no initial user training.

- a well-known application of the P300 response is a spelling application, the P300 Speller, proposed and developed by Farwell et al. in 1988. More recently, the P300 response has been used to recognize a subject's name in a random sequence of personal names, to discriminate familiar from unfamiliar faces, and for lie detection.

- another well-investigated component is the N400 response, associated with semantic processing.

- the N400 has recently been used to infer what a person was thinking about, after he/she was primed on a specific set of words.

- This ERP component has also been linked to the concept of priming, an observed improvement in performance in perceptual and cognitive tasks, caused by previous, related experience.

- the N400 and the concept of priming have had an important role in subliminal stimuli research.

- the P600 component has been used to make an inference about a user's sexual preferences, and estimates of the anxiety level derived from EEG signals have been used to draw conclusions about a person's religious beliefs.

- the feature extraction component can have a classifier to extract ERP components from the time series.

- a classifier based on a logistic regression algorithm can be used.

- a classifier based on stepwise linear discriminant analysis can be used; e.g., the BCI2000 P300 classifier.

- Other classifiers are possible as well.
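
- A logistic-regression classifier of the kind mentioned above can be sketched in plain NumPy. The feature layout (one row per trial, for example mean amplitudes in a post-stimulus window on each channel), learning rate, epoch count, and L2 penalty are assumptions; this is batch gradient descent on the mean log-loss, not the BCI2000 implementation, and the function names are hypothetical.

```python
import numpy as np


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=500, l2=1e-3):
    """Fit weights (last entry = bias) so that sigmoid(Xb @ w) ~ P(target stimulus)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xb = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = _sigmoid(Xb @ w)                    # predicted P(target) per trial
        grad = Xb.T @ (p - y) / len(y)          # gradient of the mean log-loss
        grad += l2 * np.r_[w[:-1], 0.0]         # L2 penalty, bias left unpenalized
        w -= lr * grad
    return w


def predict_target(X, w, threshold=0.5):
    Xb = np.hstack([np.asarray(X, dtype=float), np.ones((len(X), 1))])
    return _sigmoid(Xb @ w) >= threshold
```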

- FIG. 2C shows graph 230 of example P300 ERP potentials plotted with respect to time.

- Graph 230 shows plots of potentials of the P300 ERP component over time resulting after presentation of two separate stimuli.

- Non-target-stimulus plot 232 represents responses from a non-target stimulus; e.g., a stimulus not expected to be categorized, while target-stimulus plot 234 represents responses from a target stimulus.

- the non-target stimulus was an image of a face unfamiliar to the brain-computer interface user, while the target stimulus was an image of a well-known person's face.

- the BCI anonymizer can extract reactions to target stimuli from only the P300 ERP components by using a high-pass filter that removes all P300 potentials below a cutoff level; e.g., cutoff level 236 as shown in FIG. 2C .

- the BCI anonymizer can be realized in hardware and/or software.

- the BCI anonymizer can use time-frequency signal processing algorithms for real-time decomposition of brain neural signals into one or more functions.

- the BCI anonymizer can then construct anonymized brain neural signals by altering some or all of the one or more functions to protect user privacy.

- the BCI anonymizer can reconstruct the brain neural signals from the altered one or more functions. That is, the reconstructed brain neural signals can represent anonymized brain neural signals that contain less privacy-sensitive information than the input brain neural signals. Then, the anonymized brain neural signals can be provided to another component of a brain-computer interface and/or an application, such as a BCI-enabled application.

- the decoding component can use decoding algorithms that take the signal features, which may be represented as feature vectors, as inputs.

- the decoding algorithms can transform the signal features into application-specific commands.

- many different decoding algorithms can be used by the brain-computer interface. For example, a decoding algorithm can adapt to: (1) individual user's signal features, (2) spontaneous variations in recorded signal quality, and (3) adaptive capacities of the brain (neural plasticity).

- FIG. 3 is a block diagram of system 300 for transforming brain neural signals to application-specific operations.

- System 300 includes a brain-computer interface 312 and a BCI-enabled application 360 .

- the brain-computer interface 312 includes signal acquisition component 320 and digital signal processing component 340 .

- Signal acquisition component 320 can receive analog brain neural signals 310 from a brain of a user, such as discussed above in the context of FIGS. 1A-1C , and generate digital brain neural signals 330 as an output.

- Signal acquisition component 320 can include electrodes 322 , analog signal conditioning component 324 , and analog/digital (A/D) conversion component 326 .

- Electrodes 322 can obtain analog brain neural signals 310 from brain of a user and can include, but are not limited to, some or all of non-invasive electrodes, partially invasive electrodes, invasive electrodes, EEG electrodes, ECoG electrodes, EMG electrodes, dry electrodes, wet electrodes, wet gel electrodes, and conductive fabric patches.

- Electrodes 322 can be configured to provide obtained analog brain neural signals to analog signal conditioning component 324 .

- the analog brain neural signals can be time series as discussed above in the context of FIGS. 1A-1C .

- Analog signal conditioning component 324 can filter, amplify, and/or otherwise modify the obtained analog brain neural signals to generate conditioned analog brain neural signals.

- Analog signal conditioning component 324 can include but is not limited to amplifiers, operational amplifiers, low-pass filters, band-pass filters, high-pass filters, anti-aliasing filters, other types of filters, and/or signal isolators.

- analog signal conditioning component 324 can receive multiple time series of data from the brain. These signals from the time series can be preprocessed by adjusting the signals based on a reference value. For example, let the values of n time series at a given time be $SV_1, SV_2, \ldots, SV_n$, and let M, the mean signal value, be $M = \frac{1}{n}\sum_{i=1}^{n} SV_i$.

- Other reference values can include a predetermined numerical value or a weighted average of the signal values. Other reference values and other types of preprocessing are possible as well.
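
- The reference-value adjustment can be sketched for an array of shape (channels, samples). The text states only that signals are adjusted based on a reference value; this sketch assumes the adjustment is subtraction, which for the mean M is commonly called common average referencing. The hypothetical `rereference` function also covers the alternatives listed above: a predetermined constant or a weighted average.

```python
import numpy as np


def rereference(samples, weights=None, constant=None):
    """Adjust each channel by a reference value; samples has shape (n_channels, n_samples)."""
    x = np.asarray(samples, dtype=float)
    if constant is not None:
        ref = float(constant)                         # predetermined numerical value
    elif weights is not None:
        w = np.asarray(weights, dtype=float)
        ref = (w[:, None] * x).sum(axis=0) / w.sum()  # weighted average per time step
    else:
        ref = x.mean(axis=0)                          # M = (SV_1 + ... + SV_n) / n per time step
    return x - ref
```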

- the preprocessing is performed by digital signal processing component 340 .

- Analog signal conditioning component 324 can be configured to provide conditioned analog brain neural signals to analog/digital conversion component 326 .

- Analog/digital conversion component 326 can sample conditioned analog brain neural signals at a sampling rate; e.g., 256 samples per second, 1000 samples per second. The obtained samples can represent voltage, current, or another quantity.

- a sample can be resolved into a number of levels; e.g., 16 different levels, 256 different levels. Then, digital data such as a bitstream of bits for each sample representing a level for the sample can be output as digital brain neural signals 330 .

- the four levels can correspond to current in level 0 of 0.01 to 0.05999 . . . amperes, level 1 of 0.06 to 0.10999 . . . amperes, level 2 of 0.11 to 0.15999 . . . amperes, and level 3 of 0.16 to 0.21 amperes.

- These four levels can be represented using two bits; e.g., bits 00 for level 0, bits 01 for level 1, bits 10 for level 2, and bits 11 for level 3.

- when sixteen levels are used, analog/digital conversion component 326 can output each sample as four bits that represent the sixteen levels. Many other sampling rates, sampled quantities, and resolved numbers of levels are possible as well.
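
- Resolving samples into levels and bits can be illustrated with the four-level current example above. The level edges come from that example; the helper names `quantize`, `encode`, and `to_bitstream` are hypothetical, and `bisect_right` is used so that a value exactly on an edge (e.g., 0.06 A) falls into the higher level, consistent with the stated ranges.

```python
from bisect import bisect_right

# Four-level example from the text: level edges in amperes.
LOW_A, HIGH_A = 0.01, 0.21
EDGES_A = [0.06, 0.11, 0.16]  # lower edges of levels 1, 2 and 3


def quantize(sample_a, edges=EDGES_A, low=LOW_A, high=HIGH_A):
    """Map one sampled current to a level index (0 .. len(edges))."""
    if not low <= sample_a <= high:
        raise ValueError(f"sample {sample_a} A is outside [{low}, {high}] A")
    return bisect_right(edges, sample_a)


def encode(level, n_levels):
    """Fixed-width bit string for a level: 2 bits for 4 levels, 4 bits for 16."""
    if not 0 <= level < n_levels:
        raise ValueError("level out of range")
    n_bits = max(1, (n_levels - 1).bit_length())
    return format(level, f"0{n_bits}b")


def to_bitstream(samples_a):
    """Concatenate the per-sample codes into one bitstream."""
    return "".join(encode(quantize(s), len(EDGES_A) + 1) for s in samples_a)
```

- With sixteen levels, `encode` emits four bits per sample, matching the four-bit case described above.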

- Digital signal processing component 340 can receive digital brain neural signals 330 from signal acquisition component 320 as inputs and generate application commands 350 as output(s).

- digital signal processing component 340 can be part or all of one or more digital signal processors.

- feature extraction component 342 and BCI anonymizer 344 can be a single component.

- BCI anonymizer 344 and decoding component 346 can be a single component.

- decoding component 346 can be part of BCI-enabled application 360 ; in those embodiments, such as discussed above in the context of FIGS.

- brain-computer interface 312 can provide anonymized neural signals to BCI-enabled application 360 and the decoding component of the BCI-enabled application can decode the anonymized digital neural signals into application commands; e.g., commands equivalent to application commands 350 .

- Digital signal processing component 340 can include feature extraction component 342 , BCI anonymizer 344 , and decoding component 346 .

- Feature extraction component 342 can receive conditioned digital brain neural signals as inputs and generate extracted features, such as ERP components, as outputs.

- feature extraction component 342 can preprocess input time series that are in digital brain neural signals 330 by adjusting the time series based on a reference value, such as discussed above in the context of analog signal conditioning component 324 .

- feature extraction component 342 can perform operations, such as filtering, rectifying, averaging, transforming, and/or otherwise processing, on digital brain neural signals 330 .

- feature extraction component 342 can use one or more high-pass filters, low-pass filters, band-pass filters, finite impulse response (FIR) filters, and/or other devices to filter out noise unrelated to ERP components from digital brain neural signals.

- feature extraction component 342 can have a classifier to extract features, such as ERP components, from noise-filtered digital brain neural signals 330 .

- a classifier based on a logistic regression algorithm can be used.

- a classifier based on stepwise linear discriminant analysis can be used; e.g., the BCI2000 P300 classifier.

- the classifier can be trained to extract multiple features; e.g., multiple ERP components, while in other embodiments, multiple classifiers can be used if multiple features are to be extracted; e.g., one classifier for N100 ERP components, one classifier for P300 ERP components, and so on.

- the extracted features can be provided to BCI anonymizer 344 , which can filter information to determine an amount of privacy-sensitive information to be provided to BCI-enabled application 360 .

- BCI anonymizer 344 can determine an amount of privacy-sensitive information to be provided to BCI-enabled application 360 based on data about privacy-sensitive information related to a user of brain-computer interface 312 , such as data about information-criticality metrics and relative reductions in entropy, and information about features utilized by BCI-enabled application 360 .

- Information-criticality metrics and relative reductions in entropy are discussed below in more detail in the context of FIG. 4 .

- BCI anonymizer 344 can allow much, if not all, information about E1 to be provided to BCI-enabled application 360 .

- BCI anonymizer 344 can restrict information about E1 to BCI-enabled application 360 , unless E1-related data can be legitimately used by BCI-enabled application 360 .

- BCI anonymizer 344 can allow data about the feature to be used by BCI-enabled application 360 with specific authorization from the user. This authorization can be provided at the time that the user uses BCI-enabled application 360 , during calibration, or at another time; e.g., the user can provide default authorization to allow data about the feature.
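
- One reading of the release rules above can be sketched as a small policy check. The `AnonymizerPolicy` fields and the `release_decision` function are hypothetical, and treating legitimate application use and explicit user authorization as independent grounds for release is an assumption; in practice the sets would be derived from calibration data such as information-criticality metrics.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class AnonymizerPolicy:
    privacy_sensitive: Set[str]                      # features judged privacy-sensitive
    legitimate_app_uses: Set[str] = field(default_factory=set)
    user_authorized: Set[str] = field(default_factory=set)


def release_decision(feature: str, policy: AnonymizerPolicy) -> str:
    """Return 'allow' or 'restrict' for data about one feature (e.g. an ERP component)."""
    if feature not in policy.privacy_sensitive:
        return "allow"     # non-sensitive: pass through
    if feature in policy.legitimate_app_uses:
        return "allow"     # sensitive, but the application legitimately needs it
    if feature in policy.user_authorized:
        return "allow"     # sensitive, explicitly authorized by the user
    return "restrict"
```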

- BCI anonymizer 344 can restrict information about non-target stimuli for an ERP component. For example, BCI anonymizer 344 can extract reactions to target stimuli by using a high-pass filter that removes all potentials below a cutoff level, under the assumption that data below the cutoff level relates to non-target stimuli, such as discussed above in the context of the P300 ERP component and FIG. 2C .

- the cutoff level can be based on importance values related to user privacy and to uses of BCI-enabled application 360 as well as signal-related aspects of the ERP component observed during calibration or other times.

- BCI anonymizer 344 can mix a clean signal with other signal(s) before transmission; e.g., a random signal whose potential is below the cutoff level, a recorded signal known not to carry any privacy-related information, a simulated signal of a non-target stimulus, and/or other signals partially or completely below the cutoff level.
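
- The cutoff-and-mix step can be sketched as an amplitude threshold on the potentials. Although the text calls this a high-pass filter, this sketch assumes it means keeping potentials at or above the cutoff level rather than filtering by frequency, and it assumes the substituted signal is uniform random noise between the signal minimum and the cutoff. The function name `anonymize_below_cutoff` is hypothetical.

```python
import numpy as np


def anonymize_below_cutoff(potentials, cutoff, rng=None):
    """Keep potentials >= cutoff; replace the rest with random values below the cutoff."""
    x = np.asarray(potentials, dtype=float)
    keep = x >= cutoff                  # assumed to reflect target-stimulus reactions
    out = x.copy()
    n_masked = int((~keep).sum())
    if n_masked:
        rng = np.random.default_rng() if rng is None else rng
        # uniform(low, high) draws from [low, high), so every substitute stays below the cutoff.
        out[~keep] = rng.uniform(x.min(), cutoff, size=n_masked)
    return out
```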

- BCI anonymizer 344 can use time-frequency signal processing algorithms to decompose brain neural signals in real time into one or more functions. BCI anonymizer 344 can then construct anonymized brain neural signals by altering some or all of the one or more functions to protect user privacy. After altering the one or more functions, BCI anonymizer 344 can reconstruct the brain neural signals from the altered one or more functions. That is, the reconstructed brain neural signals can represent anonymized brain neural signals that contain less privacy-sensitive information than the input brain neural signals. Then, the anonymized brain neural signals can be provided to another component of a brain-computer interface; e.g., decoding component 346 , and/or an application; e.g., BCI-enabled application 360 .

- BCI anonymizer 344 can use time-frequency signal processing algorithms for real-time decomposition of brain neural signals.

- the BCI anonymizer can use Empirical Mode Decomposition (EMD) to perform nonlinear and non-stationary data signal processing.

- EMD is a flexible data driven method for decomposing a time series into multiple intrinsic mode functions (IMFs) where IMFs can take on a similar role as basis functions in other signal processing techniques.

- BCI anonymizer 344 can use Empirical Mode Decomposition with Canonical Correlation Analysis (CCA) to remove movement artifacts from EEG signals.

- the real-time decomposition of brain neural signals/time series can use other or additional time-frequency approach(es); e.g., wavelets and/or other time-frequency related signal processing approaches for signal decomposition.

- BCI anonymizer 344 can adjust properties of intrinsic mode functions, wavelets, basis functions, or other data about decomposed time series and/or decomposed signals containing extracted features. The properties can be adjusted based on importance values related to user privacy and to uses of BCI-enabled application 360 , as well as on signal-related aspects of the ERP component observed during calibration or at other times. For example, suppose a time series or extracted features related to ERP component E2 was decomposed into functions F1, F2, . . . Fm, where functions F1 and F2 were related to privacy-sensitive information based on information-criticality metrics for the component, while functions F3 . . . Fm were not. Then, BCI anonymizer 344 can reconstruct the input time series or extracted features related to ERP component E2 using data about functions F3 . . . Fm while removing, or perhaps diminishing, data about functions F1 and F2. Other examples are possible as well.
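
- The decompose, alter, and reconstruct sequence can be sketched with a deliberately simplified Empirical Mode Decomposition. Assumptions: cubic-spline envelopes pinned to the end points, a fixed number of sifting iterations instead of a formal stopping criterion, and removal (rather than attenuation) of the IMFs flagged as privacy-sensitive; the function names are hypothetical. Because the IMFs plus the final residue sum back to the input, dropping IMFs removes exactly their contribution.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema


def _mean_envelope(h):
    """Mean of spline envelopes through local maxima and minima, or None if too few extrema."""
    n = len(h)
    maxima = argrelextrema(h, np.greater)[0]
    minima = argrelextrema(h, np.less)[0]
    if len(maxima) < 2 or len(minima) < 2:
        return None
    t = np.arange(n)
    # Pin both envelopes to the end points (a crude boundary treatment).
    upper = CubicSpline(np.r_[0, maxima, n - 1], np.r_[h[0], h[maxima], h[-1]])(t)
    lower = CubicSpline(np.r_[0, minima, n - 1], np.r_[h[0], h[minima], h[-1]])(t)
    return (upper + lower) / 2.0


def emd(x, max_imfs=8, n_sifts=10):
    """Return (imfs, residue) with sum(imfs) + residue == x."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        if _mean_envelope(residue) is None:
            break                        # residue is (nearly) monotonic: stop
        h = residue.copy()
        for _ in range(n_sifts):         # fixed sifting count (simplified stop rule)
            m = _mean_envelope(h)
            if m is None:
                break
            h = h - m
        imfs.append(h)
        residue = residue - h
    return imfs, residue


def anonymize_by_imf_removal(x, sensitive):
    """Reconstruct x from all IMFs except those whose indices are in `sensitive`."""
    imfs, residue = emd(x)
    kept = [f for i, f in enumerate(imfs) if i not in sensitive]
    return residue + np.sum(kept, axis=0) if kept else residue
```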

- BCI anonymizer 344 can operate on features provided by feature extraction component 342 to remove privacy-sensitive information from signal features. In other embodiments, BCI anonymizer 344 can operate before feature extraction to remove privacy-sensitive information from time series and/or digital brain neural signals 330 . In these embodiments, feature extraction component 342 can generate extracted features without the removed privacy-sensitive information and provide the extracted features to decoding component 346 and/or BCI-enabled application 360 .

- Decoding component 346 can receive anonymized extracted features as input(s) from BCI anonymizer 344 and generate application commands 350 as output.

- Application commands 350 can control operation of BCI-enabled application 360 ; e.g., include commands to move a cursor for a graphical user interface acting as BCI-enabled application 360 , commands to replace a misspelled word for a word processor acting as BCI-enabled application 360 .

- decoding component 346 can be part of or related to BCI-enabled application 360 ; e.g., decoding component 346 can be implemented on a computing device running BCI-enabled application 360 .

- System 300 of FIG. 3 shows BCI-enabled application 360 as separate from brain-computer interface 312 .

- BCI-enabled application 360 can be a software application executing on a computing device, robotic device, or some other device.

- Brain-computer interface 312 can communicate anonymized extracted features and/or application commands 350 with BCI-enabled application 360 using communications that are based on a signaling protocol, such as but not limited to, a Bluetooth® protocol, a Wi-Fi® protocol, a Serial Peripheral Interface (SPI) protocol, a Universal Serial Bus (USB) protocol and/or a ZigBee® protocol.

- BCI-enabled application 360 can be a component of brain-computer interface 312 ; e.g., an alpha wave monitoring program executed by brain-computer interface 312 .

- FIG. 3 shows BCI-enabled application 360 including BCI interpretation component 362 and application software 364 .

- BCI interpretation component 362 can receive anonymized extracted features from brain-computer interface 312 and generate application commands to control application software 364 .

- BCI interpretation component 362 can receive application commands 350 from brain-computer interface 312 , modify application commands 350 as necessary for use with application software 364 , and provide received, and perhaps modified, application commands 350 to application software 364 .

- Application software 364 can carry out the application commands to perform application operations 370 , such as but not limited to word processing operations, game-related operations, operations of a graphical user interface, operations for a command-line interface, operations to control a remotely-controllable device, such as a robotic device or other remotely-controllable device, and operations to communicate with other devices and/or persons.

- a brain-computer interface such as brain-computer interfaces 110 , 160 , 190 , and/or 312 , can be calibrated.

- calibration information is computed using the brain-computing device, but other embodiments can use a device or service, such as but not limited to a computing device or a network-based or cloud service, connected to the brain-computing device to perform calibration and return the results to the brain-computing device.

- the system can use calibration data to distinguish features, such as ERP components, of input brain neural signals and/or to determine whether features include privacy-related information.

- to operate properly throughout the day despite fundamental changes to the inputs generated by the user; e.g., changes in electrode position, sleep/waking state, or activity, the system can be calibrated frequently; e.g., several times a day. Calibration can be triggered periodically (i.e., at fixed time increments), manually by the user or another person, or automatically. For example, calibration can be suggested or triggered when the brain-computer interface is initially powered up, or when a user indicates that they are using the brain-computer interface for the first time.

- FIG. 4 is a flowchart of calibration method 400 .

- Method 400 can begin at block 410 , where calibration start input is received at one or more devices calibrating a system for operating a brain-computer interface, such as but not limited to brain-computer interface 110 , 160 , 190 , or 312 .

- the brain-computer interface can perform part or all of method 400 .

- one or more other devices, such as computing devices can perform part or all of calibration method 400 .

- the start calibration input can be periodic or otherwise time based; e.g., calibration process 400 can be performed every 30 minutes, every two hours, or every day.

- the start calibration input can be a manual input; e.g., a button is pressed or other operation performed by a user or other entity to initiate calibration process 400 .

- Calibration can be performed partially or completely automatically.

- calibration can be performed upon a manual input to power up the brain-computer interface; e.g., the power button is pressed or otherwise activated for the brain-computer interface.

- a “second power up” input can trigger calibration; that is, input that powers up only one of the brain-computer interface and an associated computing device will not, by itself, trigger calibration, but input that powers up whichever of the two devices is powered up later, so that both devices are powered up, can trigger calibration.

- identification of a new user of the brain-computer interface can trigger calibration for the new user.
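
- The trigger conditions described above can be combined into a single check. The `CalibrationState` fields, the `should_calibrate` signature, the priority order of the checks, and the 30-minute default period (one of the example intervals given for calibration method 400) are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class CalibrationState:
    last_calibration_s: Optional[float] = None  # time of the last calibration, in seconds
    last_user_id: Optional[str] = None


def should_calibrate(state: CalibrationState, now_s: float, *, period_s: float = 30 * 60,
                     manual: bool = False, power_up_event: bool = False,
                     bci_on: bool = False, host_on: bool = False,
                     user_id: Optional[str] = None) -> Tuple[bool, Optional[str]]:
    """Return (trigger, reason) for the calibration triggers described above."""
    if manual:
        return True, "manual"
    if user_id is not None and user_id != state.last_user_id:
        return True, "new user"
    # "Second power up": a power-up only counts once both devices are on.
    if power_up_event and bci_on and host_on:
        return True, "second power up"
    if state.last_calibration_s is None or now_s - state.last_calibration_s >= period_s:
        return True, "periodic"
    return False, None
```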

- Example features include ERP components, including but not limited to the N100, P300, N400, P600 and ERN ERP components. Other features and/or ERP components can be calibrated as well.

- one or more stimuli related to feature F1 are provided to a user calibrating the brain-computer interface.

- the one or more stimuli related to F1 can include visual stimuli, auditory stimuli, and/or touch-oriented stimuli.

- the stimuli can be intended to obtain certain feature-related responses, such as target-stimulus reactions or non-target-stimulus reactions.

- multiple trials of the stimuli are provided to the user, so that feature-related data from multiple trials can be averaged or otherwise combined to determine feature-related data for feature F1, FRD(F1).

- the brain-computer interface can receive brain neural signals related to the stimuli presented at block 440 .

- the brain neural signals can be acquired, conditioned, digitized, and feature-related data for F1, FRD(F1), can be extracted from the brain neural signals.

- the brain-computer interface can use a signal conditioning component and at least a feature extraction component of a digital signal processing component to extract feature-related data for F1 as discussed above in the context of FIG. 3 .

- an attempt can be made to certify the feature-related data for F1, FRD(F1).

- FRD(F1) can be certified for suitability for calibration of feature F1.

- FRD(F1) may not be certified if FRD(F1) is unrelated to feature F1, includes too much noise for calibration, or is too weak or too strong for calibration, or for other reasons.

- FRD(F1) can be certified as signal-related data for calibration of feature F1.
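
- A certification check for FRD(F1) might look like the following sketch, which covers the rejection reasons listed above. The latency window, the use of the pre-stimulus baseline's standard deviation as a noise estimate, the numeric thresholds, and the name `certify_frd` are assumptions; a peak inside the feature's expected latency window stands in for "related to feature F1".

```python
import numpy as np


def certify_frd(avg_erp, times_ms, window_ms, *, baseline_ms=(-100.0, 0.0),
                amp_range=(1.0, 50.0), min_snr=3.0):
    """Return (certified, reason) for an averaged ERP waveform."""
    erp = np.asarray(avg_erp, dtype=float)
    t = np.asarray(times_ms, dtype=float)
    win = (t >= window_ms[0]) & (t <= window_ms[1])
    base = (t >= baseline_ms[0]) & (t < baseline_ms[1])
    if not win.any() or not base.any():
        return False, "window or baseline outside the recording"
    peak = float(np.max(np.abs(erp[win])))   # response in the feature's latency window
    noise = float(np.std(erp[base]))         # pre-stimulus variability as a noise estimate
    if not amp_range[0] <= peak <= amp_range[1]:
        return False, "too weak or too strong"
    if noise > 0 and peak / noise < min_snr:
        return False, "too noisy"
    return True, "certified"
```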

- additional data can be obtained related to privacy-sensitive information related to feature F1, such as, but not limited to, information-criticality metric data for F1 and/or exposure feasibility data for F1.

- a relative reduction in entropy, defined as the relative reduction of Shannon entropy with respect to a random guess of the private information, can be used to quantify an amount of extracted privacy-sensitive information. Equation (1) can be used to quantify the relative reduction in entropy.

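$$r(\mathrm{clf}) = 100 - 100 \cdot \frac{H\big(X \mid a(\mathrm{clf})\big)}{H\big(X \mid a(\mathrm{rand})\big)}, \qquad H\big(X \mid a(\mathrm{rand})\big) = \log_2 K \qquad (1)$$

- r(clf) denotes the reduction in entropy

- clf is the classifier used

- H(X | a(rand)) is the Shannon entropy of a random guess

- H(X | a(clf)) is the Shannon entropy with classifier clf

- K is the number of possible answers to the private information.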

- Equation (1) defines the reduction in entropy as a function with respect to a chosen classifier clf.
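
- Equation (1) can be evaluated numerically once H(X | a(clf)) is estimated. This sketch assumes that estimate comes from a table of counts (true answer by classifier answer), which is one common way to compute a conditional entropy but is not stated in the text; the helper names are hypothetical, and K must be at least 2.

```python
import numpy as np


def conditional_entropy_bits(counts):
    """H(X | A) in bits from counts[true_answer, classifier_answer]."""
    joint = np.asarray(counts, dtype=float)
    joint = joint / joint.sum()
    p_a = joint.sum(axis=0)                 # marginal distribution of classifier answers
    h = 0.0
    for j, pa in enumerate(p_a):
        if pa == 0:
            continue
        cond = joint[:, j] / pa             # P(X | A = a_j)
        nz = cond[cond > 0]
        h -= pa * np.sum(nz * np.log2(nz))
    return h


def relative_entropy_reduction(counts):
    """r(clf) = 100 - 100 * H(X | a(clf)) / log2(K), in percent."""
    k = np.asarray(counts).shape[0]
    h_rand = np.log2(k)                     # entropy of a uniform random guess over K answers
    return 100.0 - 100.0 * conditional_entropy_bits(counts) / h_rand
```

- A perfect classifier gives r(clf) = 100, while a classifier whose answers carry no information about X gives r(clf) = 0.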

- the reduction in entropy can be a function of a chosen ERP component; e.g., feature F1, the user's level of facilitating data extraction, and/or the user's awareness of presented stimuli.

- Another quantification of amounts of privacy-sensitive information is based on an assumption that not all privacy-sensitive information is equally important to a subject.

- the relative reduction in entropy can be defined as a function of a chosen ERP component and the information-criticality metric. This definition of relative reduction in entropy can be referred to as the exposure feasibility, as quantified in Equation (2), which can quantify the usefulness of a chosen ERP component $ERP_{comp}$; e.g., feature F1, in extracting the set of private information, $S_{PI}$.

- $ERP_{comp}$ is the chosen ERP component, such as feature F1

- $S_{PI}$ is the set of private information

- i is an index selecting a particular portion of information in $S_{PI}$

- $r(ERP_{comp})_i$ is the reduction in entropy for the chosen ERP component with respect to the i-th portion of information

- the i-th information-criticality metric quantifies how important the i-th portion of private information is to the user.

- attacks on privacy-sensitive information can be simulated; e.g., the user can inadvertently facilitate extraction of privacy-sensitive information by following the instructions of a simulated malicious application.

- information about feature F1 can be presented to the user; e.g., information about how feature F1 relates to user privacy, and the user can be asked about his or her opinion of the information-criticality of F1.

- the system can protect the privacy of users and protect communications from interception.

- communications between the brain-computer interface and associated device(s) used to calibrate the brain-computer interface can be encrypted or otherwise secured.

- the brain-computer interface and/or associated device(s) can be protected by passwords or biometrics from unauthorized use.

- calibration method 400 can be used to provide biometric information to protect the brain-computer interface.

- the user can be requested to perform a calibration session to generate current input channel signal data.

- the current input channel signal data can be compared to previously-stored input channel signal data. If the current input channel signal data at least approximately matches the previously-stored input channel signal data, then the brain-computer interface can determine that the current user is the previous user, and assume the brain-computer interface is being used by the correct, and current, user.
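
- The approximate-match test can be sketched with a correlation threshold. The Pearson-correlation criterion, the 0.9 threshold, and the `matches_enrolled_user` name are assumptions; a deployed system would likely need a more robust comparison, since electrode position and user state change during the day, as noted in the calibration discussion.

```python
import numpy as np


def matches_enrolled_user(current, enrolled, min_corr=0.9):
    """True if current calibration data correlates with the stored data at >= min_corr."""
    a = np.asarray(current, dtype=float).ravel()
    b = np.asarray(enrolled, dtype=float).ravel()
    if a.shape != b.shape:
        return False                     # recordings of different length cannot be compared
    r = np.corrcoef(a, b)[0, 1]          # Pearson correlation (NaN for constant input)
    return bool(r >= min_corr)           # NaN compares False, so constant input never matches
```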

- point-to-point links; e.g., a Bluetooth® paired link or a wired communication link, can be used to reduce (inadvertent) interception of system communications.

- secure links and networks can be used to protect privacy and prevent interception.

- the system can also use communication techniques, such as code sharing and time-slot allocation, that protect against inadvertent and/or intentional interception of communications. Many other techniques to protect user security and prevent communication interception can be used by the system as well.

- FIG. 5A is a block diagram of example computing network 500 in accordance with an example embodiment.

- servers 508 and 510 are configured to communicate, via a network 506, with client devices 504a, 504b, and 504c.

- client devices can include a personal computer 504a, a laptop computer 504b, and a smart-phone 504c.

- client devices 504a-504c can be any sort of computing device, such as a workstation, network terminal, desktop computer, laptop computer, wireless communication device (e.g., a cell phone or smart phone), and so on.

- client devices 504a-504c can include or be associated with a brain-computer interface; e.g., one or more of brain-computer interfaces 110, 160, 190, and/or 312.

- the network 506 can correspond to a local area network, a wide area network, a corporate intranet, the public Internet, combinations thereof, or any other type of network(s) configured to provide communication between networked computing devices. In some embodiments, part or all of the communication between networked computing devices can be secured.

- Servers 508 and 510 can share content and/or provide content to client devices 504a-504c. As shown in FIG. 5A, servers 508 and 510 are not physically at the same location. Alternatively, servers 508 and 510 can be co-located, and/or can be accessible via a network separate from network 506. Although FIG. 5A shows three client devices and two servers, network 506 can service more or fewer than three client devices and/or more or fewer than two servers. In some embodiments, servers 508, 510 can perform some or all of the herein-described methods; e.g., method 400 and/or method 600.

- FIG. 5B is a block diagram of an example computing device 520 including user interface module 521, network-communication interface module 522, one or more processors 523, and data storage 524, in accordance with an embodiment.

- computing device 520 shown in FIG. 5B can be configured to perform one or more functions of systems 100, 150, 180a, 180b, 300, brain-computer interfaces 110, 160, 190, 312, computing devices 120, 170, signal acquisition component 320, digital signal processing component 340, BCI-enabled applications 122, 172, 360, client devices 504a-504c, network 506, and/or servers 508, 510.

- Computing device 520 may include a user interface module 521, a network-communication interface module 522, one or more processors 523, and data storage 524, all of which may be linked together via a system bus, network, or other connection mechanism 525.

- Computing device 520 can be a desktop computer, laptop or notebook computer, personal data assistant (PDA), mobile phone, embedded processor, touch-enabled device, or any similar device that is equipped with at least one processing unit capable of executing machine-language instructions that implement at least part of the herein-described techniques and methods, including but not limited to method 400 described with respect to FIG. 4 and/or method 600 described with respect to FIG. 6.

- User interface module 521 can receive input and/or provide output, perhaps to a user.

- User interface module 521 can be configured to send data to and/or receive data from input device(s), such as a keyboard, a keypad, a touch screen, a computer mouse, a track ball, a joystick, and/or other similar devices configured to receive input from a user of computing device 520.

- input devices can include BCI-related devices, such as, but not limited to, brain-computer interfaces 110, 160, 190, and/or 312.

- User interface module 521 can be configured to provide output to output display devices, such as one or more cathode ray tubes (CRTs), liquid crystal displays (LCDs), light emitting diodes (LEDs), displays using digital light processing (DLP) technology, printers, light bulbs, and/or other similar devices capable of displaying graphical, textual, and/or numerical information to a user of computing device 520.

- User interface module 521 can also be configured to generate audible output(s) via devices such as a speaker, speaker jack, audio output port, audio output device, earphones, and/or other similar devices configured to convey sound and/or audible information to a user of computing device 520.

- user interface module 521 can be configured with haptic interface 521a, which can receive haptic-related inputs and/or provide haptic outputs such as tactile feedback, vibrations, forces, motions, and/or other touch-related outputs.

- Network-communication interface module 522 can be configured to send and receive data over wireless interface 527 and/or wired interface 528 via a network, such as network 506.

- Wireless interface 527, if present, can utilize an air interface, such as a Bluetooth®, Wi-Fi®, ZigBee®, and/or WiMAX™ interface, to a data network, such as a wide area network (WAN), a local area network (LAN), one or more public data networks (e.g., the Internet), one or more private data networks, or any combination of public and private data networks.

- Wired interface(s) 528 can comprise a wire, cable, fiber-optic link and/or similar physical connection(s) to a data network, such as a WAN, LAN, one or more public data networks, one or more private data networks, or any combination of such networks.

- network-communication interface module 522 can be configured to provide reliable, secured, and/or authenticated communications.

- information for ensuring reliable communications (i.e., guaranteed message delivery) can be provided, perhaps as part of a message header and/or footer (e.g., packet/message sequencing information, encapsulation header(s) and/or footer(s), size/time information, and transmission verification information such as CRC and/or parity check values).
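
The header/footer information described above can be illustrated with a small framing sketch: a header carrying a sequence number and payload length, and a footer carrying a CRC-32 over header plus payload. The field widths (32-bit sequence number, 16-bit length) and the function names are assumptions for illustration, not a format defined by the source; `zlib.crc32` and `struct` are Python standard-library modules.

```python
import struct
import zlib
from typing import Tuple

# Hypothetical layout: big-endian uint32 sequence number, uint16 payload length.
HEADER = struct.Struct(">IH")
# Footer: CRC-32 computed over header + payload.
FOOTER = struct.Struct(">I")


def frame(seq: int, payload: bytes) -> bytes:
    """Wrap a payload with sequencing/size header and CRC footer."""
    header = HEADER.pack(seq, len(payload))  # raises struct.error if payload > 65535 bytes
    crc = zlib.crc32(header + payload) & 0xFFFFFFFF
    return header + payload + FOOTER.pack(crc)


def unframe(message: bytes) -> Tuple[int, bytes]:
    """Validate a framed message and return (sequence number, payload)."""
    if len(message) < HEADER.size + FOOTER.size:
        raise ValueError("message too short")
    seq, length = HEADER.unpack_from(message, 0)
    end = HEADER.size + length
    if len(message) != end + FOOTER.size:
        raise ValueError("length mismatch")
    (crc,) = FOOTER.unpack_from(message, end)
    if zlib.crc32(message[:end]) & 0xFFFFFFFF != crc:
        raise ValueError("CRC check failed")
    return seq, message[HEADER.size:end]
```

A receiver can use the sequence numbers to detect gaps and request retransmission; the CRC only detects accidental corruption and provides no protection against deliberate tampering, which is what the cryptographic measures are for.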

- Communications can be made secure (e.g., be encoded or encrypted) and/or decrypted/decoded using one or more cryptographic protocols and/or algorithms, such as, but not limited to, DES, AES, RSA, Diffie-Hellman, and/or DSA.

- Other cryptographic protocols and/or algorithms can be used as well as or in addition to those listed herein to secure (and then decrypt/decode) communications.
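
To show how one of the listed algorithms, Diffie-Hellman, lets a brain-computer interface and an associated device agree on a shared secret without sending it over the link, here is a toy sketch using only the Python standard library. The modulus (the Mersenne prime 2**127 - 1), the generator 3, and the SHA-256 key derivation are illustrative assumptions and are NOT secure parameters; a real system would use a standardized group (e.g., from RFC 3526 or RFC 7919) or elliptic-curve Diffie-Hellman through a vetted cryptographic library.

```python
import hashlib
import secrets
from typing import Tuple

# Toy parameters for illustration only; do not use for real security.
P = 2**127 - 1  # Mersenne prime modulus
G = 3           # generator chosen for illustration


def make_keypair() -> Tuple[int, int]:
    """Return (private exponent, public value G^private mod P)."""
    private = secrets.randbelow(P - 3) + 2  # uniform in [2, P-2]
    return private, pow(G, private, P)


def shared_key(own_private: int, peer_public: int) -> bytes:
    """Combine own private exponent with the peer's public value into a 256-bit key."""
    if not 2 <= peer_public <= P - 2:
        raise ValueError("invalid peer public value")
    secret = pow(peer_public, own_private, P)  # (G^b)^a == (G^a)^b mod P
    # Hash the shared secret into a fixed-length symmetric key (e.g., for AES-256).
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()
```

Both sides exchange only their public values; each then computes the same key locally, which could key a symmetric cipher such as AES for the remaining traffic. Plain Diffie-Hellman does not authenticate the peer, so it is usually combined with signatures (e.g., DSA or RSA) to resist man-in-the-middle attacks.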

- Processor(s) 523 can include one or more central processing units, computer processors, mobile processors, digital signal processors (DSPs), microprocessors, computer chips, and/or other processing units configured to execute machine-language instructions and process data.

- Processor(s) 523 can be configured to execute computer-readable program instructions 526 that are contained in data storage 524 and/or other instructions as described herein.

- Data storage 524 can include one or more physical and/or non-transitory storage devices, such as read-only memory (ROM), random access memory (RAM), removable-disk-drive memory, hard-disk memory, magnetic-tape memory, flash memory, and/or other storage devices.

- Data storage 524 can include one or more physical and/or non-transitory storage devices with at least enough combined storage capacity to contain computer-readable program instructions 526 and any associated/related data structures.

- Computer-readable program instructions 526 and any data structures contained in data storage 524 include computer-readable program instructions executable by processor(s) 523 and any storage required, respectively, to perform at least part of herein-described methods, including, but not limited to, method 400 described with respect to FIG. 4 and/or method 600 described with respect to FIG. 6.

- FIG. 6 is a flow chart of an example method 600 .

- Method 600 can be carried out by a brain-computer interface, such as brain-computer interfaces 110, 160, 190, and/or 312, such as discussed above in the context of at least FIGS. 1A, 1B, 1C, and 3.

- Method 600 can begin at block 610, where a brain-computer interface can receive a plurality of brain neural signals, such as discussed above in the context of at least FIGS. 1A, 1B, 1C, and 3.

- the plurality of brain neural signals can be based on electrical activity of a brain of a user and can include signals related to a BCI-enabled application.

- the brain-computer interface can determine one or more features of the plurality of brain neural signals related to the BCI-enabled application, such as discussed above in the context of at least FIG. 3.

- the one or more features can include one or more event-related potential (ERP) components of the plurality of brain neural signals, such as discussed above in the context of at least FIG. 3.
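
One common way to obtain ERP components from neural signals is to cut stimulus-locked epochs, baseline-correct them, average them, and then measure a peak within a latency window. The sketch below assumes a single channel stored as a list of samples and stimulus onsets given as sample indices; the function names and window parameters are hypothetical, and the source does not say that method 600 uses trial averaging (single-trial BCIs often do not).

```python
from typing import List, Sequence, Tuple


def average_erp(signal: Sequence[float], onsets: Sequence[int],
                pre: int, post: int) -> List[float]:
    """Average epochs spanning [onset - pre, onset + post) after baseline correction.

    Index `pre` of the returned list corresponds to the stimulus onset.
    """
    epochs = []
    for t in onsets:
        if t - pre < 0 or t + post > len(signal):
            continue  # skip epochs that run off either end of the recording
        epoch = list(signal[t - pre:t + post])
        # Subtract the mean of the pre-stimulus samples (baseline correction).
        baseline = sum(epoch[:pre]) / pre if pre else 0.0
        epochs.append([x - baseline for x in epoch])
    if not epochs:
        raise ValueError("no complete epochs")
    n = len(epochs)
    return [sum(col) / n for col in zip(*epochs)]


def peak_component(erp: Sequence[float], start: int, stop: int) -> Tuple[int, float]:
    """Return (index, amplitude) of the largest positive value in erp[start:stop]."""
    window = list(erp[start:stop])
    i = max(range(len(window)), key=window.__getitem__)
    return start + i, window[i]
```

Averaging suppresses activity that is not time-locked to the stimulus, so the resulting peak amplitude and latency serve as compact features; those same features are what the anonymizer described next would filter.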

- a BCI anonymizer of the brain-computer interface can generate anonymized neural signals by at least filtering the one or more features to remove privacy-sensitive information, such as discussed above in the context of at least FIG. 3.

- the BCI anonymizer generating anonymized neural signals includes the BCI anonymizer generating anonymized neural signals from the one or more ERP components.

- the BCI anonymizer generating anonymized neural signals from the one or more ERP components includes the BCI anonymizer decomposing the one or more ERP components into a plurality of functions; modifying at least one function of the plurality of functions to remove the privacy-sensitive information from the plurality of functions; and generating the anonymized neural signals using the modified plurality of functions.

- the BCI anonymizer decomposing the one or more ERP components into a plurality of functions includes the BCI anonymizer performing real-time decomposition of the ERP components into the plurality of functions using a time-frequency signal processing algorithm.

- the time-frequency signal processing algorithm can include at least one algorithm selected from the group consisting of an algorithm utilizing wavelets and an algorithm utilizing empirical mode decomposition.
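
The decompose/modify/reconstruct steps above can be sketched with the simplest wavelet, the Haar wavelet. The signal is split into an approximation plus detail coefficients at several scales (the "plurality of functions"), selected detail levels are zeroed, and the signal is reconstructed. Which levels carry privacy-sensitive information is not specified here, so `drop_levels` is a hypothetical input (in practice it might come from the information-criticality analysis); empirical mode decomposition, the other option named, is not shown.

```python
import math
from typing import Collection, List, Sequence, Tuple

SQRT2 = math.sqrt(2.0)


def haar_step(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One orthonormal Haar analysis step: pairwise sums and differences."""
    half = len(x) // 2
    approx = [(x[2 * i] + x[2 * i + 1]) / SQRT2 for i in range(half)]
    detail = [(x[2 * i] - x[2 * i + 1]) / SQRT2 for i in range(half)]
    return approx, detail


def haar_inverse_step(approx: Sequence[float], detail: Sequence[float]) -> List[float]:
    """Invert haar_step exactly (up to floating-point rounding)."""
    out: List[float] = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / SQRT2, (a - d) / SQRT2])
    return out


def decompose(signal: Sequence[float], levels: int) -> Tuple[List[float], List[List[float]]]:
    """Multi-level Haar decomposition; details[0] is the finest scale."""
    if len(signal) % (2 ** levels):
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = list(signal), []
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)
    return approx, details


def reconstruct(approx: List[float], details: List[List[float]]) -> List[float]:
    """Rebuild the signal from coarsest to finest scale."""
    for d in reversed(details):
        approx = haar_inverse_step(approx, d)
    return approx


def anonymize(signal: Sequence[float], levels: int, drop_levels: Collection[int]) -> List[float]:
    """Zero the detail levels in drop_levels, then reconstruct the signal."""
    approx, details = decompose(signal, levels)
    details = [[0.0] * len(d) if k in drop_levels else d for k, d in enumerate(details)]
    return reconstruct(approx, details)
```

With `drop_levels` empty the signal is reproduced exactly (up to rounding), which is a useful sanity check. Dropping a level removes only the components at that scale, so task-relevant structure at other scales can survive for command generation.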

- the BCI anonymizer generating anonymized neural signals from the one or more ERP components includes the BCI anonymizer determining an information-criticality metric for at least one feature of the one or more features and filtering the one or more features to remove privacy-sensitive information based on the information-criticality metric for the at least one feature.

- the BCI anonymizer filtering the one or more features to remove privacy-sensitive information based on the information-criticality metric for the at least one feature includes the BCI anonymizer determining a relative reduction in entropy for the at least one feature based on the information-criticality metric for the at least one feature.
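
The source does not give a formula for the relative reduction in entropy. One natural reading, assumed here, is the fraction of uncertainty about a private attribute S that observing feature F removes: (H(S) - H(S|F)) / H(S), estimated from paired discrete samples. The function names, the threshold of 0.2, and the rule "keep features at or below the threshold" are hypothetical; continuous features would need to be discretized first.

```python
import math
from collections import Counter
from typing import Dict, Hashable, Iterable, List, Sequence


def entropy(counts: Iterable[int]) -> float:
    """Shannon entropy (bits) of a distribution given by raw counts."""
    counts = list(counts)
    total = sum(counts)
    return -sum(c / total * math.log2(c / total) for c in counts if c)


def relative_entropy_reduction(feature: Sequence[Hashable],
                               secret: Sequence[Hashable]) -> float:
    """(H(S) - H(S|F)) / H(S): share of uncertainty about S removed by F."""
    if len(feature) != len(secret) or not secret:
        raise ValueError("need equal-length, non-empty samples")
    h_s = entropy(Counter(secret).values())
    if h_s == 0:
        return 0.0  # nothing about S is uncertain, so F cannot leak it
    # Group the secret values by the observed feature value.
    by_f: Dict[Hashable, List[Hashable]] = {}
    for f, s in zip(feature, secret):
        by_f.setdefault(f, []).append(s)
    n = len(secret)
    h_s_given_f = sum(len(g) / n * entropy(Counter(g).values()) for g in by_f.values())
    return (h_s - h_s_given_f) / h_s


def filter_features(features: Dict[str, Sequence[Hashable]],
                    secret: Sequence[Hashable],
                    threshold: float = 0.2) -> Dict[str, Sequence[Hashable]]:
    """Keep only features whose relative entropy reduction is at most threshold."""
    return {name: vals for name, vals in features.items()
            if relative_entropy_reduction(vals, secret) <= threshold}
```

A value near 1 means the feature almost fully reveals the private attribute and is a candidate for removal; a value near 0 means it reveals little. These plug-in estimates are biased on small samples, so a practical system would need more data or a corrected estimator.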

- the brain-computer interface can generate one or more application commands for the BCI-enabled application from the anonymized neural signals, such as discussed above in the context of at least FIG. 3.

- the brain-computer interface can send the one or more application commands, such as discussed above in the context of at least FIG. 3.
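
The ordering of method 600 (receive signals, determine features, anonymize, generate commands, send) can be captured as a small pipeline in which each stage is a pluggable function. The class and field names are hypothetical and the stage implementations are placeholders; the point the sketch makes is structural: command generation sees only the anonymizer's output, so raw signals and unfiltered features never reach the BCI-enabled application.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Signals = List[List[float]]     # one list of samples per input channel
Features = Dict[str, List[float]]


@dataclass
class Method600Pipeline:
    extract_features: Callable[[Signals], Features]
    anonymize: Callable[[Features], Features]
    to_commands: Callable[[Features], List[str]]
    send: Callable[[List[str]], None]

    def run(self, signals: Signals) -> List[str]:
        features = self.extract_features(signals)  # determine features of received signals
        anonymized = self.anonymize(features)      # BCI anonymizer removes privacy-sensitive info
        commands = self.to_commands(anonymized)    # commands derived only from anonymized data
        self.send(commands)                        # deliver to the BCI-enabled application
        return commands
```

Keeping the anonymizer as an explicit stage makes it easy to swap in a different filtering strategy (for example, a wavelet-based or entropy-based one) without changing how commands are generated or sent.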

- each block and/or communication may represent a processing of information and/or a transmission of information in accordance with example embodiments.

- Alternative embodiments are included within the scope of these example embodiments.

- functions described as blocks, transmissions, communications, requests, responses, and/or messages may be executed out of order from that shown or discussed, including substantially concurrent or in reverse order, depending on the functionality involved.

- more or fewer blocks and/or functions may be used with any of the ladder diagrams, scenarios, and flow charts discussed herein, and these ladder diagrams, scenarios, and flow charts may be combined with one another, in part or in whole.

- a block that represents a processing of information may correspond to circuitry that can be configured to perform the specific logical functions of a herein-described method or technique.

- a block that represents a processing of information may correspond to a module, a segment, or a portion of program code (including related data).

- the program code may include one or more instructions executable by a processor for implementing specific logical functions or actions in the method or technique.