Practical Tips for Implementing AI Agents in Games

In May 2024, I gave a guest lecture at UC Santa Cruz for the Game AI course taught by Dr. Batu Aytemiz. This post is a quick summary of the talk, which focused not on the models and architectures for AI agents in games, but on how to implement agents in a practical and easy manner. Drawing on our experience at Regression Games working with studios to implement agent systems, I’ve pulled together core principles and tips for a pain-free experience implementing NPCs and bots within your game.

You can see the full presentation on Google Slides and the lecture recording on YouTube.

-- Aaron Vontell, Founder of Regression Games

Introduction

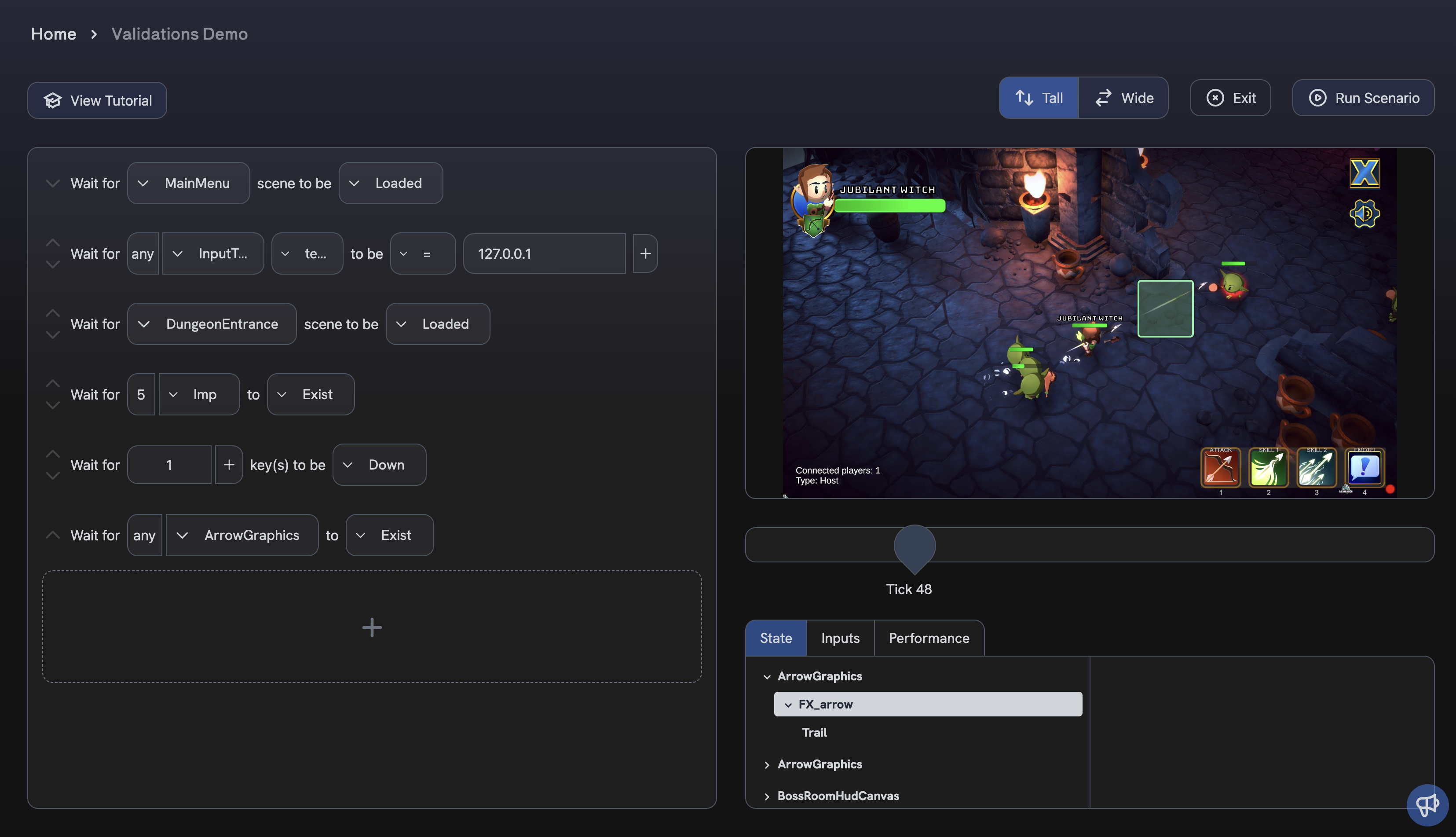

Building agents for games is hard. If you are a game developer, you may have experienced the pain of adding an NPC to your game, whether it be for interacting with players in combat, automating test scenarios, or building an engaging experience for multiplayer games. The inherent complexity of games makes agent implementation tricky. Let’s jump into discussing some principles and tricks to ensure that your agent implementation is smooth and easy!

Principle #1: Think About Agents Early On

When developing a game, it's crucial to think about agents early on. Imagine what use cases you may need agents for and define the behavior of these agents precisely.

The complexity of a game grows quickly during development - before you know it, core game logic, multiplayer networking, animation rigging, event systems, external APIs, and scene management can pile up. Think about these aspects of your project as you build to ensure that agents are easier to integrate:

- Separation of Concerns: Encapsulate your code, and ensure that non-character code is not tightly coupled to your character logic. For example, if multiplayer lobby information is tightly coupled to an avatar in your game, building a non-networked agent can become tricky.

- Connection Points: Identify where agents will connect within your game’s architecture. Think deeply about the interfaces that allow agents to understand and communicate with the core game logic.

- Agent Applications: Think about how agents will be used, such as for multiplayer bots, NPCs, QA/game balance, and player coaching.

Example: In Unity’s Boss Room project, we wanted to implement agents for testing. Because of the way networked clients are mapped to characters in the game, a lot of work was needed to decouple this logic and allow local NPCs to “connect” as real players.
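To make the idea of a connection point concrete, here is a minimal sketch of one way to keep character logic independent of where its input comes from. Everything in it (`Command`, `InputSource`, `Character`, `NetworkedPlayerInput`, `LocalAgentInput`, `tick`) is a hypothetical name invented for illustration; it is not how Boss Room is structured.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Command:
    """One tick of intent; dx/dy are a movement direction (hypothetical)."""
    dx: float = 0.0
    dy: float = 0.0


class InputSource(Protocol):
    """Anything that can decide what a character does next."""
    def next_command(self, character: "Character") -> Command: ...


@dataclass
class Character:
    """Core character logic: knows nothing about lobbies or sockets."""
    x: float = 0.0
    y: float = 0.0
    speed: float = 1.0

    def apply(self, cmd: Command) -> None:
        self.x += cmd.dx * self.speed
        self.y += cmd.dy * self.speed


class NetworkedPlayerInput:
    """Stand-in for commands arriving from a remote client."""
    def __init__(self, queued: list[Command]):
        self.queued = queued

    def next_command(self, character: Character) -> Command:
        # Idle when no command has arrived for this tick.
        return self.queued.pop(0) if self.queued else Command()


class LocalAgentInput:
    """A local bot that walks right until it reaches target_x."""
    def __init__(self, target_x: float):
        self.target_x = target_x

    def next_command(self, character: Character) -> Command:
        if character.x < self.target_x:
            return Command(dx=1.0)
        return Command()


def tick(character: Character, source: InputSource) -> None:
    """The game loop only depends on the InputSource interface."""
    character.apply(source.next_command(character))
```

Because `tick` never asks whether the input came from the network, a local agent can drive a character without pretending to be a connected client.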

Principle #2: Start Simple

Too often, developers will attempt to implement complicated agents to solve a use case. Start with the goals of your agent and work backwards until you arrive at the simplest algorithm or approach that will get the job done. In a lot of cases, you will realize that your simple NPC does not need to be trained via reinforcement learning - it may be the case that a simple rule-based approach or behavior tree can get you 90% of the way there!

In general, try to follow this process:

1. Think of the use case first - what is the goal of the agent?

2. Design a simple algorithm to start - force yourself to think about the behavior

3. Try the approach, and see where it falls short

4. Improve the approach, and go back to step 2

5. Only once you exhaust the possibilities of your approach, try a new technique.

As an example, imagine you are building a match-3 puzzle game and you’d like an agent that can evaluate the difficulty of a level by attempting to solve it. You could train an agent using imitation learning, or an RL agent that continuously plays many levels and uses level completion as a reward function. However, wouldn’t randomly selecting a valid swap on the board and taking that move be a good enough start?
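A random baseline like this can be sketched in a few lines. The board representation (a grid of integer tile colors), the rule that a swap is valid only if it creates a line of three, and the function names below are all assumptions made for illustration; your game’s rules may differ.

```python
import random
from typing import Optional

Board = list[list[int]]  # assumed representation: grid of tile colors
Swap = tuple[tuple[int, int], tuple[int, int]]


def has_match(board: Board) -> bool:
    """True if any row or column contains three equal tiles in a line."""
    rows, cols = len(board), len(board[0])
    for r in range(rows):
        for c in range(cols):
            t = board[r][c]
            if c + 2 < cols and t == board[r][c + 1] == board[r][c + 2]:
                return True
            if r + 2 < rows and t == board[r + 1][c] == board[r + 2][c]:
                return True
    return False


def valid_swaps(board: Board) -> list[Swap]:
    """All adjacent swaps that leave at least one match on the board."""
    rows, cols = len(board), len(board[0])
    swaps = []
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((0, 1), (1, 0)):  # right and down neighbors
                r2, c2 = r + dr, c + dc
                if r2 >= rows or c2 >= cols:
                    continue
                # Try the swap, check for a match, then undo it.
                board[r][c], board[r2][c2] = board[r2][c2], board[r][c]
                if has_match(board):
                    swaps.append(((r, c), (r2, c2)))
                board[r][c], board[r2][c2] = board[r2][c2], board[r][c]
    return swaps


def random_agent_move(board: Board, rng: random.Random) -> Optional[Swap]:
    """Pick a uniformly random valid swap, or None if no swap is valid."""
    options = valid_swaps(board)
    return rng.choice(options) if options else None
```

Counting how many random moves it takes to finish a level, averaged over many runs, already gives a rough difficulty signal, and it shows where the random approach falls short before you reach for anything heavier.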

Principle #3: Think Precisely About States and Actions

Understanding the state and action space available to an agent is vital. Different types of state representations and action abstractions can impact not only how good the agent is, but also how difficult it is to integrate and build the agent in the first place.

Consider which of the following state spaces makes sense for your use case:

- Screenshots and video capture

- Raycasts

- Direct game state (e.g. position, health)

If you are training an RL agent, maybe screenshots make sense. If you are building a behavior tree, direct game state might be better. The key point here is that it varies from game to game and agent to agent - take some time to really think about it!
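As a rough illustration of the direct game state option, the sketch below hands an agent a small snapshot with named fields. The `Observation` class, its fields, and the thresholds in `choose_behavior` are hypothetical; the point is only that the same snapshot can feed a rule-based policy directly or be flattened for a learned model.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """Hypothetical direct-state snapshot handed to an agent each tick."""
    x: float
    y: float
    health: float   # 0.0 (dead) to 1.0 (full)
    enemy_x: float
    enemy_y: float

    def enemy_distance(self) -> float:
        return math.hypot(self.enemy_x - self.x, self.enemy_y - self.y)

    def to_vector(self) -> list[float]:
        """Flat numeric form, e.g. as input to a learned model."""
        return [self.x, self.y, self.health, self.enemy_x, self.enemy_y]


def choose_behavior(obs: Observation) -> str:
    """A rule-based policy that reads named fields directly."""
    if obs.health < 0.3:          # assumed threshold
        return "flee"
    if obs.enemy_distance() <= 2.0:  # assumed attack range
        return "attack"
    return "approach"
```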

The same applies to actions - you need to consider whether direct key inputs or function calls are a better fit. Additionally, you’ll need to think about how abstracted those actions are, whether your action space is continuous or discrete, and how often your agent can take actions.

As a quick example, imagine you want to build an agent that harvests a plant in a farming simulator game. In order to get to and harvest a plant, your action space can take many forms:

- Press the S key

- Press the ‘Move Down’ key

- Call a function that moves towards (X, Y)

- Call a function that path-finds towards (X, Y)

- Call a function that moves to the nearest plant

- Call a function that harvests a nearby plant

Many of these may work - think about these actions in the context of your agent goals and game setup, and then decide which is best.
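The sketch below shows three of these abstraction levels layered on top of each other in a toy grid world. The `Farm` class, its key bindings, and the assumption that there are no obstacles (so no real path-finding is needed) are all made up for illustration.

```python
from dataclasses import dataclass, field

Point = tuple[int, int]


@dataclass
class Farm:
    """Hypothetical grid world; y grows downward, like screen coordinates."""
    player: Point
    plants: set[Point] = field(default_factory=set)
    harvested: int = 0

    def press_key(self, key: str) -> None:
        """Low-level action: one key press moves one tile."""
        dx, dy = {"W": (0, -1), "S": (0, 1), "A": (-1, 0), "D": (1, 0)}[key]
        self.player = (self.player[0] + dx, self.player[1] + dy)

    def move_towards(self, target: Point) -> None:
        """Mid-level action: take one step toward a coordinate."""
        x, y = self.player
        if x != target[0]:
            self.press_key("D" if target[0] > x else "A")
        elif y != target[1]:
            self.press_key("S" if target[1] > y else "W")

    def harvest_nearest_plant(self) -> bool:
        """High-level action: walk to the nearest plant and harvest it."""
        if not self.plants:
            return False
        px, py = self.player
        target = min(self.plants, key=lambda p: abs(p[0] - px) + abs(p[1] - py))
        while self.player != target:
            self.move_towards(target)
        self.plants.remove(target)
        self.harvested += 1
        return True
```

An RL agent might only ever be given `press_key`, while a QA bot that just needs crops harvested could call `harvest_nearest_plant` and skip navigation entirely.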

Principle #4: Decide Where Agent Code Will Live

Especially for multiplayer games, the architecture of your game affects where agent code should run. For instance, if your agent will act as a player in a multiplayer game, does that agent run on the server, or on the client? If your game is peer-to-peer, what if the client running the agent disconnects? How does ownership of the agent code get transferred? Finally, in single-player games, what if the agent relies on large systems that cannot be handled on-device, such as LLMs?

From our experience, we would recommend the following:

- Try to implement your agent to run locally on the machine that runs the core game logic (e.g. a game server). Otherwise, the latency introduced by sending the state of the game to the agent can become a problem.

- Try to implement the agent within the game itself, rather than in a separate process. In some cases, app stores and user devices will have issues with agents that run outside of the game process.

- If using an RL or imitation learning-based bot, these models can often be run on-device with tools such as Unity Sentis.

Wherever you do decide to run your agent code, make sure to understand the implications it will have for performance on player devices.

Principle #5: Evaluate Your Agents

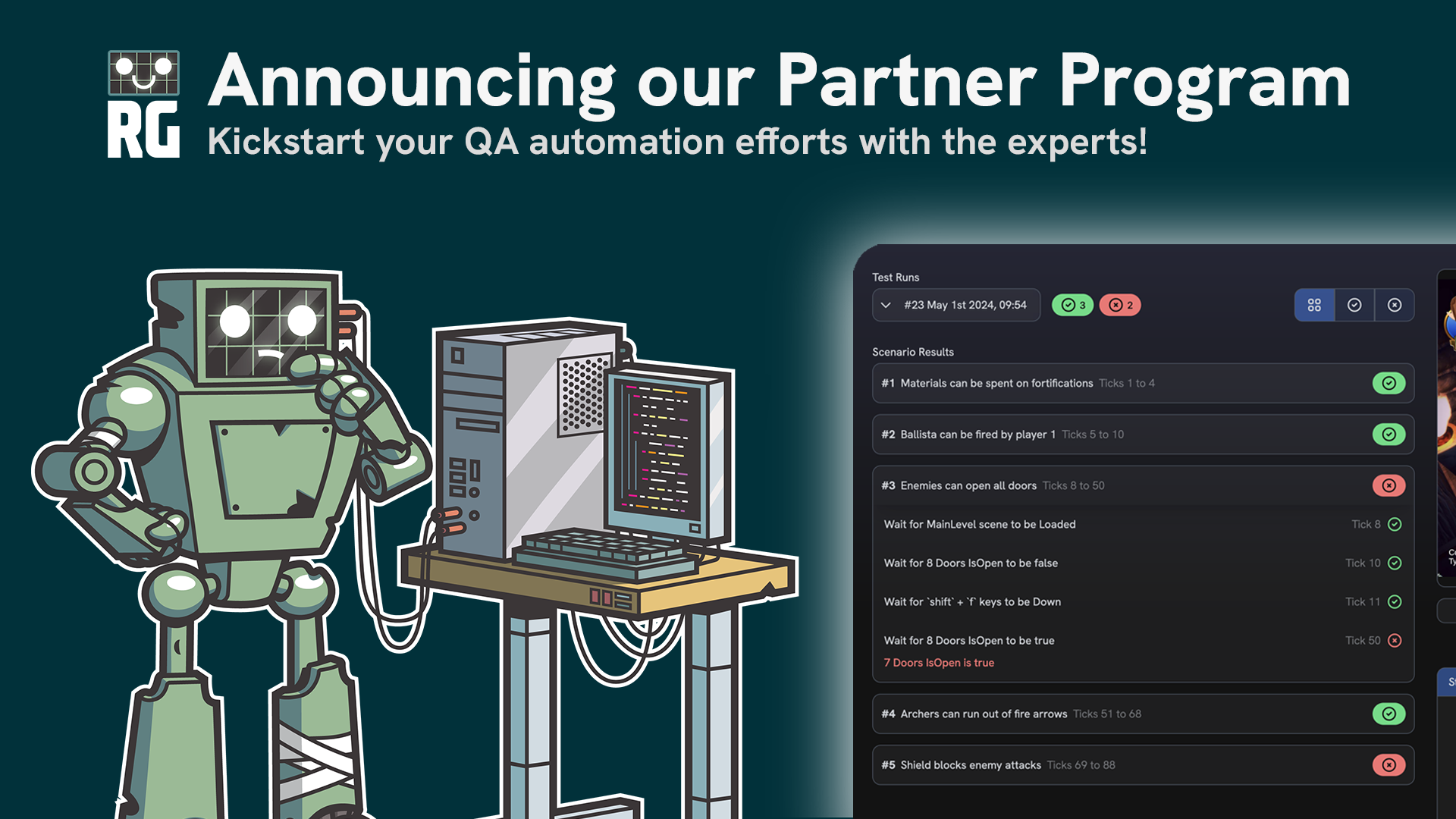

Evaluation should connect back to your agent goals. Create environments focused on both normal and edge cases and collect real data to iterate and improve your agents.

- Evaluation Metrics: Link evaluation metrics to agent goals.

- Testing Scenarios: Build diverse environments to test agent performance.

- Quantitative Metrics: Use A/B testing to refine agent behaviors.

Example: In a racing game, you might measure lap finish times, the number of collisions, and time spent turning to evaluate an agent’s performance.
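As a sketch of how those racing metrics could be computed from logged runs, the snippet below aggregates per-lap records into a small summary. The `LapRecord` fields and the metric names are assumptions; in practice they would come from whatever telemetry your game already records.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class LapRecord:
    """Hypothetical per-lap log entry written during a test run."""
    lap_time_s: float
    collisions: int
    time_turning_s: float


def summarize(laps: list[LapRecord]) -> dict[str, float]:
    """Aggregate per-lap logs into metrics tied to the agent's goal.

    Expects at least one lap; an empty list raises an error.
    """
    total_time = sum(lap.lap_time_s for lap in laps)
    return {
        "mean_lap_time_s": mean(lap.lap_time_s for lap in laps),
        "best_lap_time_s": min(lap.lap_time_s for lap in laps),
        "collisions_per_lap": sum(lap.collisions for lap in laps) / len(laps),
        "turning_fraction": sum(lap.time_turning_s for lap in laps) / total_time,
    }
```

Running the same summary over two agent variants on the same tracks gives you a like-for-like comparison to iterate on.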

Tips and Tricks

The principles above highlight the main considerations to think about when developing a game that can support agents. Here are a few more tips and tricks that we have found helpful in our own development.

1. Expose Extra State and Actions: Provide agents with additional information like inventory data and ability cooldowns. Even if a player can’t view certain game information, that doesn’t mean an agent shouldn’t be able to!

2. Grant More Power: Allow agents to perform actions beyond human player capabilities for testing purposes. For example, if your agent would be easier to implement if it could jump 10% higher than the player, then allow it to do so.

3. Implement Debug Tools: Use in-game overlays and other tools to debug agent behavior.

4. Leverage Built-in Tools: Utilize existing tools and frameworks to streamline agent development, such as navigation meshes and behavior tree tools.

Conclusion

Early and thoughtful integration of AI agents will make your life much easier.

- Plan agent goals and integration early.

- Start with simple agents and iterate.

- Clearly define state and action spaces.

- Decide where agent code will run.

- Design robust evaluation methods.

By following these tips and leveraging the resources available, your experience implementing an agent will be far less painful than it could be. We guarantee it.

For more insights and resources, visit our website at regression.gg or follow us on Twitter. Thank you for reading!
