The Banana Pi M5 Pro

I’m down with the first flu of the season, so I thought I’d write up my notes on the Banana Pi M5 Pro and how it’s fared as part of my increasingly eclectic collection of single-board computers in the post-Raspberry Pi age.

Disclaimer: Banana Pi sent me a review unit (for which I thank them), and this article follows my Review Policy. This piece was written after a month of (remotely) daily driving the board and is based on my own experiences with it.

Also known as the ArmSoM Sige5, this board is along the lines of the Banana Pi M7 I reviewed a while back, and I wanted to take a look at it to get a better feel for how its (theoretically) slower RK3576 chipset would perform.

This means there will be a lot of comparisons to the M7 in this review, so if you’re interested in that board, you might want to read that review first.

Hardware

Again, the general theme of the board is that it’s a little brother to the M7 I reviewed earlier:

- The CPU is an RK3576 with four Cortex-A72 and four Cortex-A53 cores and a 6 TOPS NPU, which is quite similar to the RK3588. The GPU, however, is a Mali-G52, which is a little slower.

- Like with the M7, you get an underside M.2 2280 PCIe NVMe slot, but it’s a single PCIe 2.0 lane only (still speedy, but not as fast as the one on the M7).

- The Ethernet ports are gigabit instead of 2.5GbE, although wireless connectivity is the same (802.11a/b/g/n/ac/ax Wi-Fi 6 and BT 5.0).

- Most of the other connectors on the board (MIPI, CSI, DSI, GPIOs, etc.) are the same as the M7’s.

- My board came with “only” 8GB of RAM (although you can get it with up to 16GB) and 64GB of eMMC.

I was sad that the 16GB model wasn’t available at the time, since that would have made for a better comparison to the M7.

But, as it is, at least connector-wise the board looks like a decent drop-in replacement for the M7 for less demanding industrial applications.

Operating System Support

As you would expect, the board has great Armbian support. Unlike the M7, it’s not listed as “platinum” supported, but I had zero issues getting a fully up-to-date working image, and over the past month I have gotten regular updates from the rk35xx vendor branch, so as of this writing I’m running kernel 6.1.75:

```
v24.11 rolling for ArmSoM Sige5 running Armbian Linux 6.1.75-vendor-rk35xx

Packages:     Debian stable (bookworm)
Support:      for advanced users (rolling release)
IP addresses: (LAN) 192.168.1.111 192.168.1.168 (WAN) 161.230.X.X

Performance:

Load:         3%          Up time:     0 min
Memory usage: 2% of 7.74G
CPU temp:     43°C        Usage of /:  17% of 57G

Tips:

Support our work and become a sponsor https://github.com/sponsors/armbian

Commands:

System config  : sudo armbian-config
System monitor : htop

Last login: Mon Sep 30 10:35:13 2024 from 192.168.1.160
me@black:~$ uname -a
Linux black 6.1.75-vendor-rk35xx #1 SMP Thu Aug 8 17:42:28 UTC 2024 aarch64 GNU/Linux
```

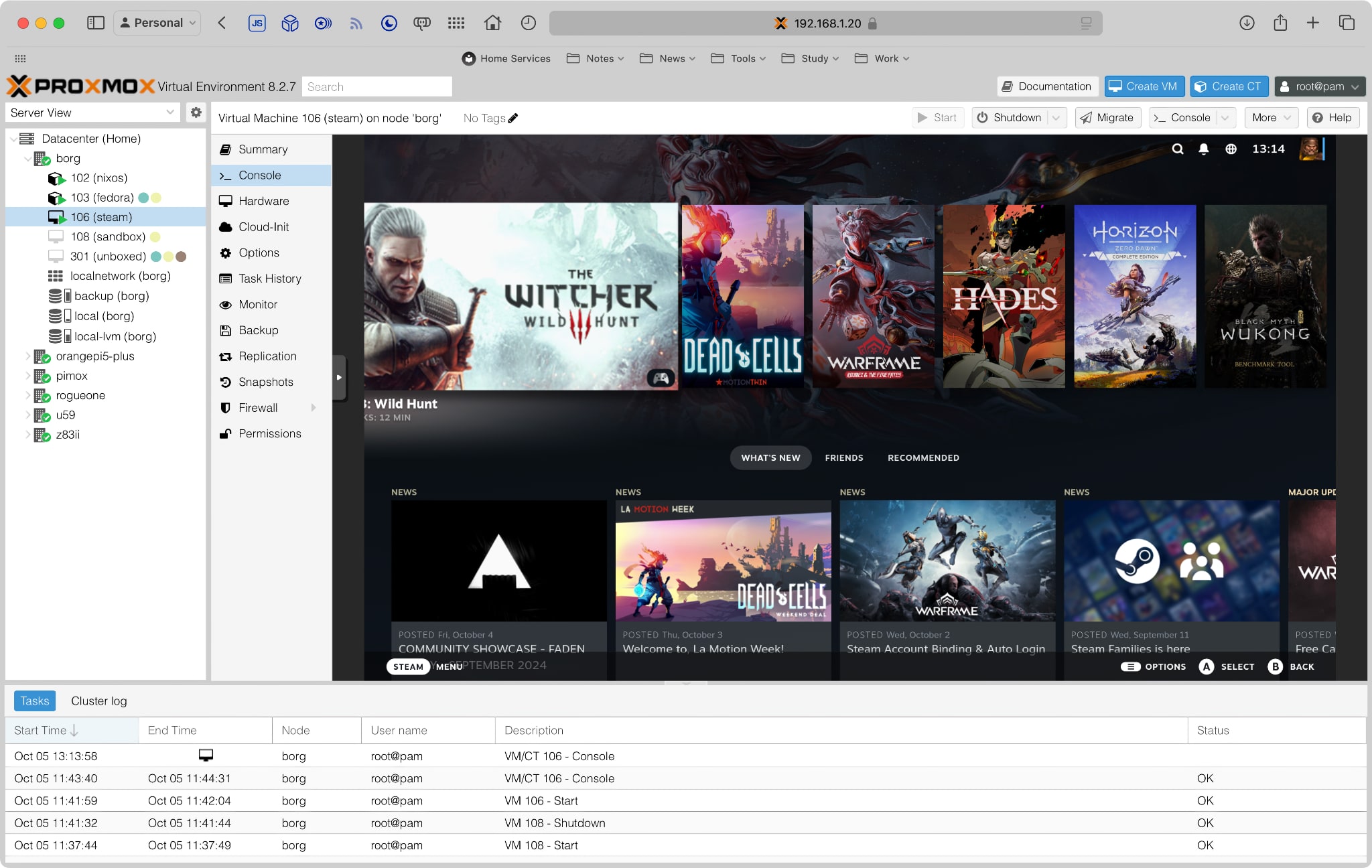

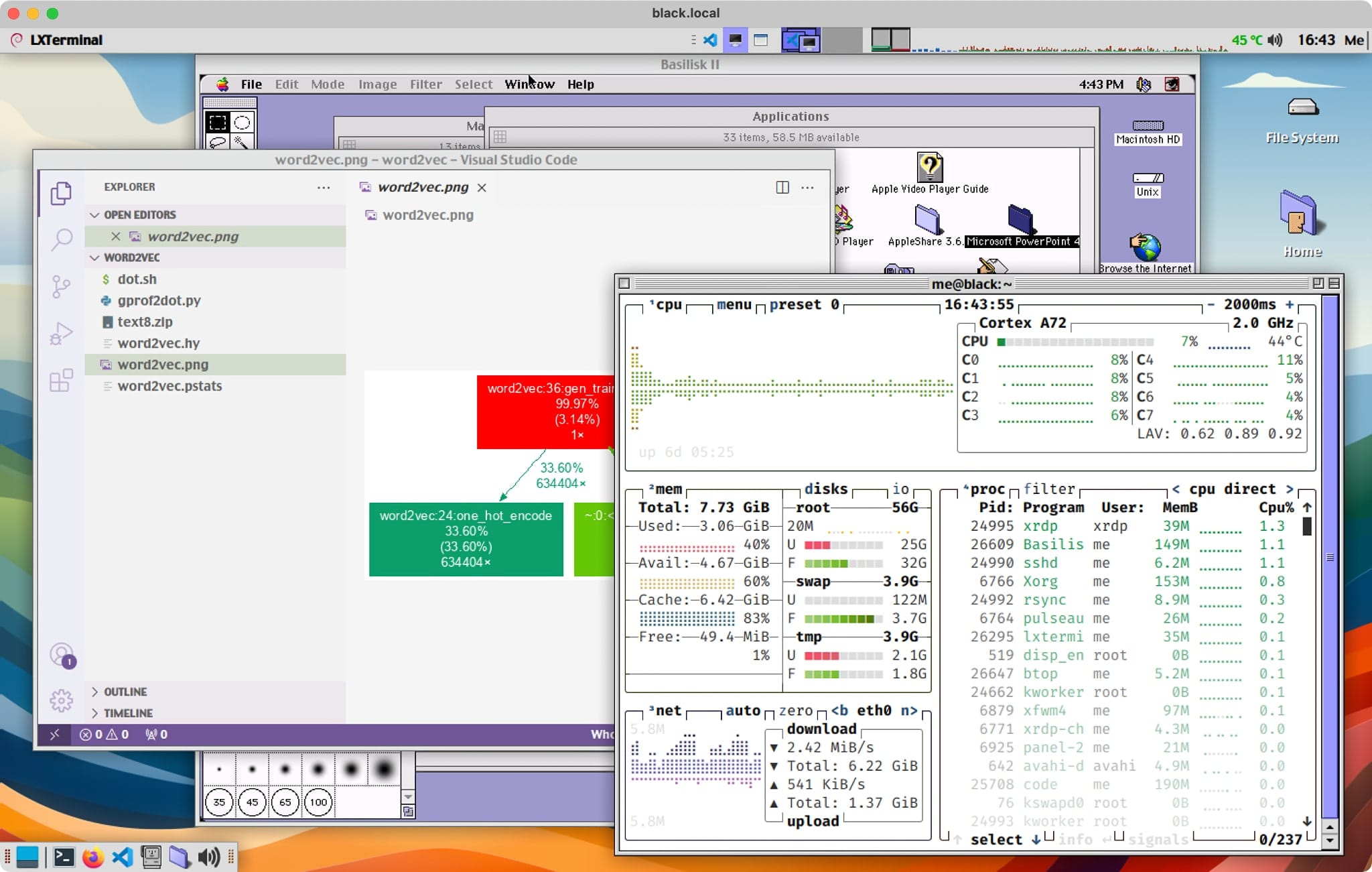

It was also trivial to set up my usual LXDE remote environment and work on the board from my iPad, and I took the time to put it through its paces editing and building software, which was very smooth.

Benchmarking

This time around I had to skip my NVMe testing since I had no spare SSDs. However, given that the NVMe slot is only PCIe 2.0, I would expect the IOPS figures to be only around a quarter of what the M7 can do (i.e., around 3000 IOPS), which would still be faster than a SATA SSD and thus more than enough for the vast majority of industrial use cases.

Also, I should point out that everything I ran worked without issues off the eMMC, so I didn’t feel the need to push storage to the limit.
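For a rough sense of the gap, here is the raw link arithmetic as a small shell sketch. Two assumptions to flag: the M7’s M.2 slot is taken to be PCIe 3.0 x4 (this review doesn’t state it), and the figures are theoretical one-direction ceilings that only account for line encoding (8b/10b for PCIe 2.0, 128b/130b for PCIe 3.0). Small-block random IOPS are usually limited by the drive and controller rather than the link, so this bandwidth ratio is not a direct prediction of the IOPS estimate above.

```shell
# Theoretical one-direction PCIe link ceiling in MB/s:
#   GT/s * lanes * (payload bits / line bits) / 8 bits per byte * 1000
pcie_mb_s() {
  # $1 = GT/s per lane, $2 = lanes, $3/$4 = line encoding (e.g. 8/10, 128/130)
  awk -v r="$1" -v l="$2" -v p="$3" -v t="$4" \
    'BEGIN { printf "%.0f\n", r * l * (p / t) / 8 * 1000 }'
}

m5=$(pcie_mb_s 5 1 8 10)       # PCIe 2.0 x1 (M5 Pro)
m7=$(pcie_mb_s 8 4 128 130)    # PCIe 3.0 x4 (ASSUMED for the M7's slot)
echo "M5 Pro link: ${m5} MB/s"
echo "M7 link:     ${m7} MB/s"
awk -v a="$m5" -v b="$m7" 'BEGIN { printf "ratio: %.2f\n", a / b }'
```

It prints 500 MB/s for the M5 Pro’s link versus about 3938 MB/s for the assumed M7 link, a ratio of roughly 0.13.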

Ollama

However, the ollama testing was more interesting than I expected.

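```shell
# --verbose prints timing stats on stderr: send those down the pipe and drop the model's answer.
# grep also catches the "prompt eval rate:" lines; the ^ anchor in awk filters those out.
for run in {1..10}; do echo "Why is the sky blue?" | ollama run tinyllama --verbose 2>&1 >/dev/null | grep "eval rate:"; done | \
awk '/^eval rate:/ {sum += $3; count++} END {if (count > 0) print "Average eval rate:", sum/count, "tokens/s"; else print "No eval rate found"}'
```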

I was quite surprised when the M5 put out better results than the M7 originally had (11.12 tokens/s, versus the 10.3 I had gotten on the M7), so I plugged in the M7 again, updated the kernel and ollama, and ran the tests on both boards again:

| Machine | Model | Eval rate (tokens/s) |
|---|---|---|
| Banana Pi M5 Pro | dolphin-phi | 3.92 |
| Banana Pi M5 Pro | tinyllama | 11.22 |
| Banana Pi M7 | dolphin-phi | 5.73 |
| Banana Pi M7 | tinyllama | 15.37 |

…which goes to show that all benchmarks should be taken with a grain of salt. There are several variables here:

- Both systems are running a newer kernel than when I tested the M7

- The M7 is now in a matching case to the M5, so heat dissipation should be slightly improved (although that was not a big factor in earlier testing)

- `ollama` has since been further optimized (even though it still doesn’t support the NPU on either system).

This seems like a good reason to try out sbc-bench when I get an NVMe drive to test with (it is, after all, a more static workload, and unlikely to be further optimized), but for now I’m happy with the results, and, again, I don’t really hold much stock in benchmark figures.

Update: I found the time to run `sbc-bench` on both boards, and here are the results:

| Device / details | Clockspeed | Kernel | Distro | 7-zip multi | 7-zip single | AES | memcpy | memset | kH/s |
|---|---|---|---|---|---|---|---|---|---|
| Banana Pi M5 Pro | 2304/2208 MHz (throttled) | 6.1 | Armbian 24.11.0-trunk.190 bookworm | 11870 | 1842 | 1310870 | 5740 | 16650 | 18.03 |
| Banana Pi M7 | 2352/1800 MHz | 6.1 | Armbian 24.8.4 bookworm | 16740 | 3170 | 1314240 | 12740 | 29750 | - |

As you can see, there is a significant difference in performance (outside of AES, the M5 Pro lands at roughly 45% to 70% of the M7’s scores), which is partly down to the differences in SoC bandwidth and compounded by the fact that the M5’s defaults seem to throttle it more aggressively.
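The ratios behind that comparison are easy to reproduce from the table. This is only arithmetic on the figures above, not a new benchmark run; the kH/s column is skipped because there is no M7 number for it, and the metric labels are just shorthand for the table columns.

```shell
# Divide each M5 Pro sbc-bench score by the M7's (figures copied from the table).
# Higher is better for all of these columns, so < 1.00 means the M5 Pro is slower.
ratios() {
  awk '{ printf "%s %.2f\n", $1, $2 / $3 }' <<'EOF'
7zip-multi 11870 16740
7zip-single 1842 3170
aes 1310870 1314240
memcpy 5740 12740
memset 16650 29750
EOF
}

ratios
```

It prints one ratio per metric, ranging from 1.00 for AES down to 0.45 for memcpy.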

Power and Cooling

In general, the M5 Pro’s power profile was pretty similar to the M7’s, just lower. The only thing I found odd was that its idle draw (1.4W) was a little higher than the M7’s, but the CPU governor settings might be the cause here.
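If the governor is the culprit, the first step is seeing what each CPU cluster is actually set to. This is a minimal sketch that reads the standard Linux cpufreq sysfs files (`scaling_governor` and `scaling_available_governors` under each `policy*` directory); which governor Armbian picks by default on this board, and how its policies are numbered, are assumptions rather than something checked here. The function name `show_governors` is just illustrative.

```shell
# Show the current and available cpufreq governors for each CPU policy.
# On a big.LITTLE SoC like the RK3576 you would expect one policy per cluster.
# The sysfs root can be overridden (first argument) for testing.
show_governors() {
  root="${1:-/sys/devices/system/cpu/cpufreq}"
  for policy in "$root"/policy*; do
    [ -d "$policy" ] || continue   # glob didn't match: no cpufreq policies exposed
    printf '%s: %s (available: %s)\n' \
      "$(basename "$policy")" \
      "$(cat "$policy/scaling_governor")" \
      "$(cat "$policy/scaling_available_governors")"
  done
}

show_governors

# To try a different governor on one policy (as root), for example:
#   echo powersave > /sys/devices/system/cpu/cpufreq/policy0/scaling_governor
```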

In almost direct proportion to the compute performance, it peaked at 6.4W under heavy load (versus the 10W I could get out of the M7) and quickly went down to 5.7W when thermal throttling, so on average it will draw less power than the M7 for broadly similar (if slightly lower) performance, which makes its much lower pricing all the more interesting.

Since this time I got the aluminum case with the board (in a very discreet matte black), thermals were also directly comparable between both boards, and very predictable in this case:

Over several ollama runs, the reported CPU temperature peaked at 80°C, with the clock slowly throttling down from 1.8 to 1.6 and then 1.4GHz over 5 minutes (and bouncing right back up when the temperature dipped below 79°C).

So I really liked the way this handled sustained load: the 400MHz drop isn’t nothing, but it’s quite acceptable.
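To watch the throttling behaviour described above during a long run, a simple sampler like the one below is one way to do it on most Linux SBCs. It’s a sketch with one loud assumption: that `thermal_zone0` is the CPU/SoC sensor, since zone order varies between boards and kernels. It relies on the kernel reporting temperature in millidegrees Celsius and `scaling_cur_freq` in kHz. The `sample` function and the `THERMAL`/`CPUFREQ` variables are hypothetical names for illustration.

```shell
# Sample SoC temperature and the current clock of each cpufreq policy.
# ASSUMPTION: thermal_zone0 is the CPU/SoC sensor; check
# /sys/class/thermal/thermal_zone*/type to confirm on your board.
THERMAL="${THERMAL:-/sys/class/thermal/thermal_zone0/temp}"
CPUFREQ="${CPUFREQ:-/sys/devices/system/cpu/cpufreq}"

sample() {
  # The thermal zone reports millidegrees Celsius
  temp=$(awk '{ printf "%.1f", $1 / 1000 }' "$THERMAL")
  line="temp=${temp}C"
  for policy in "$CPUFREQ"/policy*; do
    [ -r "$policy/scaling_cur_freq" ] || continue
    # scaling_cur_freq is in kHz; convert to MHz
    mhz=$(( $(cat "$policy/scaling_cur_freq") / 1000 ))
    line="$line $(basename "$policy")=${mhz}MHz"
  done
  echo "$line"
}

# Take a single sample if the sensor is readable on this machine
if [ -r "$THERMAL" ]; then sample; fi
```

In practice you would run it in a loop in a second session while the benchmark runs, e.g. `while sleep 5; do sample; done`, and watch the clock step down as the temperature approaches 80°C.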

Conclusion

First off, I need to spend a little more time with the M5 Pro to get a better feel for it, and yet so far the only thing I really wish is that I’d gotten the 16GB RAM model, which would have made for a better comparison to the M7.

Considering all the above, and the fact that during almost a month’s worth of testing (editing and building programs remotely on it) I had zero issues as far as compatibility and responsiveness go, I’d say the M5 Pro is a very nice, cost-effective alternative to the M7. As I mentioned above, you can probably use it as a drop-in replacement for most industrial applications, and unless you really need the additional networking and storage bandwidth, likely nobody will be the wiser.

I also need to take another look at the power envelope (I’m not really keen on almost 50% additional power draw at idle), but I suspect that’s fixable by tweaking CPU governors, so I’m not worried about it.