Introduction

In the rapidly evolving landscape of machine learning, the ability to generate responses and perform tasks with minimal data has become increasingly important. Techniques such as zero-shot, one-shot, and few-shot prompting have transformed this area, allowing models to generalize, adapt, and learn from a limited number of examples. These strategies have opened new opportunities, especially in scenarios where data is scarce, making them valuable in many applications. This article on zero-shot prompting explains how it works and covers its applications, advantages, and challenges.


Overview

- Understand what zero-shot prompting is and how it works.

- Explore examples of using this technique.

- Know the advantages, limitations, and challenges of using this method.


What is Zero-Shot Prompting?

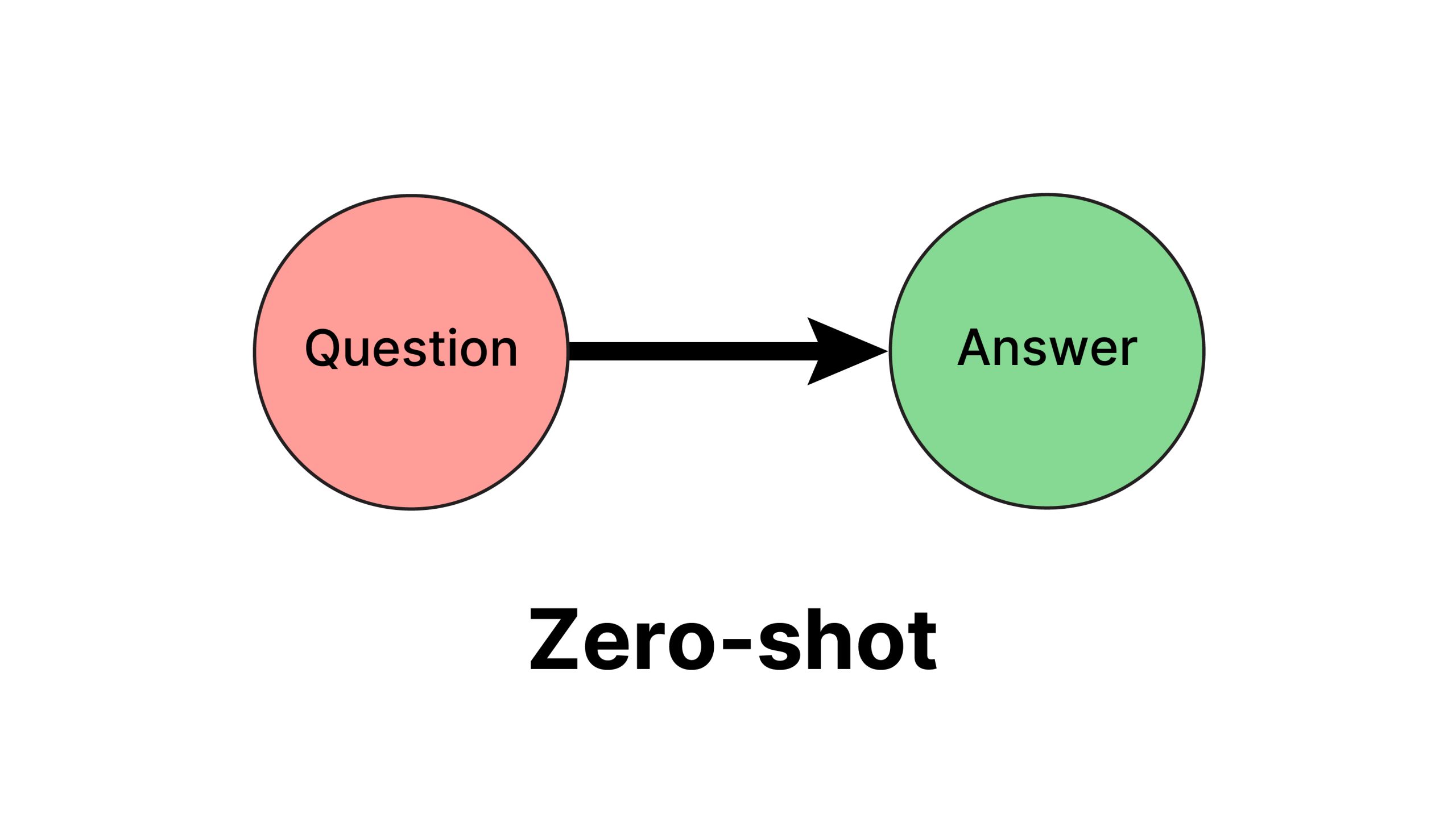

Zero-shot prompting is a technique used in natural language processing (NLP) to get good performance from a model when little or no task-specific data is available. It allows models to understand and respond to tasks without large amounts of training data: the model produces responses without any task-specific examples or fine-tuning, relying entirely on its existing knowledge.

How it Works

Zero-shot prompting enables models to generate responses to tasks they haven’t been explicitly trained on, without any examples or fine-tuning. By leveraging their pre-existing knowledge, these models can comprehend prompts and produce relevant outputs.

Put simply, the prompt contains no examples for the model to learn from or copy.
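To make this concrete, a zero-shot prompt can be thought of as just the task instruction plus the input, with no worked examples. The sketch below assumes a plain-text prompt; the function name `build_zero_shot_prompt` and the `Q:`/`A:` layout are illustrative choices, not a standard API.

```python
def build_zero_shot_prompt(instruction: str, query: str) -> str:
    """Return a zero-shot prompt: an instruction plus the input, with no examples.

    The 'Q:'/'A:' layout is a hypothetical convention; any clear format works.
    """
    # No demonstration pairs are included, so the model must rely on what it
    # already learned during pre-training.
    return f"{instruction.strip()}\n\nQ: {query.strip()}\nA:"


if __name__ == "__main__":
    prompt = build_zero_shot_prompt(
        "Classify the sentiment of the review as positive, negative, or neutral.",
        "The battery lasts all day and the screen is gorgeous.",
    )
    print(prompt)
```

The prompt ends with an empty `A:` so that a text-completion model is nudged to continue with the answer; the model never sees a solved example of the task.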

Examples

User:

Q: What is the capital of France?

Response:

The capital of France is Paris.

The following examples of zero-shot prompting are from ChatGPT:

Example 1:

Example 2:
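Many chat-model APIs accept a conversation as a list of messages with `role` and `content` fields (the format popularized by OpenAI's Chat Completions API). Assuming that format, the France question is a zero-shot prompt because it is a single user message with no earlier question/answer demonstrations. The helper names `zero_shot_messages` and `is_zero_shot` are hypothetical, and no API call is made here.

```python
from typing import Dict, List

Message = Dict[str, str]


def zero_shot_messages(question: str) -> List[Message]:
    # A single user turn and nothing else: no example question/answer pairs.
    return [{"role": "user", "content": f"Q: {question}"}]


def is_zero_shot(messages: List[Message]) -> bool:
    # Heuristic: treat the conversation as zero-shot when it ends with a user
    # message and no assistant turn (i.e. no demonstrated answer) comes before it.
    if not messages or messages[-1]["role"] != "user":
        return False
    return all(m["role"] != "assistant" for m in messages[:-1])


if __name__ == "__main__":
    msgs = zero_shot_messages("What is the capital of France?")
    print(msgs)
    print(is_zero_shot(msgs))
```

The check only catches demonstrations supplied as separate assistant turns; examples pasted directly into the user's text would make the prompt few-shot without this function noticing.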

Advantages

- Versatility: Models can handle a wide range of tasks without needing specific training data for each task.

- Efficiency: Since it doesn’t require task-specific fine-tuning, it can save time and resources compared to traditional fine-tuning methods.

- Generalization: It pushes models to generalize their knowledge, applying it to unseen tasks or prompts rather than relying on task-specific examples.

Limitations and Challenges

While zero-shot prompting offers several advantages, the generated responses might not always be as accurate or detailed as those from models fine-tuned for specific tasks. Moreover, it can struggle with tasks that require specialized training or domain-specific knowledge, particularly those that are complex or nuanced.

Conclusion

Zero-shot prompting represents a significant advancement in machine learning, particularly in natural language processing. It makes it possible for models to perform tasks with minimal data, enhancing their versatility and performance. However, it also has limitations, particularly in accuracy and in handling complex tasks. As research progresses, the technique is expected to become even more powerful, opening new avenues for applications in many fields.


Frequently Asked Questions

Q1. What is zero-shot prompting?

A. Zero-shot prompting is the technique of getting language models to generate responses for tasks without any examples or fine-tuning. It relies solely on the model’s pre-existing knowledge.

Q2. How does zero-shot prompting differ from one-shot prompting?

A. One-shot prompting provides the model with one example to guide its response, whereas zero-shot prompting provides no examples.
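The difference comes down to how many solved examples precede the real query. The sketch below assumes a plain-text `Q:`/`A:` prompt format and uses a hypothetical helper, `build_k_shot_prompt`, where passing zero examples gives a zero-shot prompt and one example gives a one-shot prompt.

```python
from typing import Sequence, Tuple


def build_k_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
) -> str:
    """Build a prompt with len(examples) demonstrations.

    0 examples -> zero-shot, 1 example -> one-shot, several -> few-shot.
    """
    parts = [instruction.strip(), ""]
    # Each demonstration is a solved question/answer pair the model can imitate.
    for question, answer in examples:
        parts.append(f"Q: {question}\nA: {answer}\n")
    # The real query comes last, with the answer left blank for the model.
    parts.append(f"Q: {query}\nA:")
    return "\n".join(parts)


if __name__ == "__main__":
    instruction = "Answer the question."
    query = "What is the capital of France?"
    print(build_k_shot_prompt(instruction, [], query))  # zero-shot
    print("---")
    print(build_k_shot_prompt(instruction, [("What is the capital of Italy?", "Rome")], query))  # one-shot
```

Keeping one builder for both cases makes the comparison explicit: the instruction and query are identical, and only the demonstration list changes.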

Q3. What are the main advantages of zero-shot prompting?

A. The main advantages include versatility, efficiency, and the ability to generalize knowledge to new, unseen tasks.

Q4. What are the challenges of zero-shot prompting?

A. Challenges include potential inaccuracies in generated responses and difficulties in handling complex or nuanced tasks that require specialized training.

Q5. Can zero-shot prompting handle every kind of task?

A. While versatile, zero-shot prompting may struggle with highly specialized or complex tasks that demand domain-specific knowledge or training.