Introduction

Forecasting is the process of predicting future values from past and present data. It is of tremendous value to enterprises that need to make informed business decisions.

Most forecasting problems involve time series. A time series is a time-oriented, or chronological, sequence of observations on one or more variables of interest. The variable can be anything measurable over time. Depending on how often its observations are recorded, a time series is typically hourly, daily, weekly, monthly, quarterly, or annual.

Depending on the data, time series forecasting falls into two main types: Univariate Time Series Forecasting and Multivariate Time Series Forecasting.

Because of its complex, time-varying nature, multivariate time series data makes forecasting a challenging task. It requires more sophisticated models than univariate forecasting does.

With the help of Artificial Intelligence (AI), we can build strong models that handle a large number of predictors with non-linear interdependencies and produce accurate predictions.

Keywords

Time series – Univariate – Multivariate – Forecasting – Time dependency – Interdependency – ARIMA – Artificial intelligence – CNN – RNN – LSTM – DFF – GRU – Gates

Univariate Vs. Multivariate Time Series?

The term “Univariate Time Series” refers to a time series of recorded observations of a single variable of interest over equal time increments. Future values are forecast from those historical observations alone. Many statistical approaches exist for this purpose, including Auto-Regressive Integrated Moving Average (ARIMA) and Exponential Smoothing (ES). Variants such as Seasonal ARIMA (SARIMA), BATS, and TBATS can also model the seasonal components of a univariate time series.
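To make the univariate idea concrete, here is a minimal sketch of simple exponential smoothing, the most basic member of the ES family mentioned above. It is written in plain Python for illustration; in practice a library such as statsmodels provides this. The function name `simple_exponential_smoothing` and the example values are our own assumptions.

```python
from typing import List, Sequence


def simple_exponential_smoothing(series: Sequence[float], alpha: float, horizon: int) -> List[float]:
    """Forecast `horizon` future values of a univariate series with simple exponential smoothing.

    The model keeps one smoothed "level"; with no trend or seasonal term,
    every future step is forecast as the final level (a flat forecast).
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    if len(series) == 0:
        raise ValueError("series must contain at least one observation")
    level = float(series[0])  # initialise the level with the first observation
    for y in series[1:]:
        # Blend the newest observation with the previous level:
        # a larger alpha reacts faster, a smaller alpha smooths more.
        level = alpha * y + (1.0 - alpha) * level
    return [level] * horizon


# Usage: three-step forecast of a short series (made-up numbers).
print(simple_exponential_smoothing([10, 12, 11, 13], alpha=0.5, horizon=3))  # → [12.0, 12.0, 12.0]
```

Nothing outside the target series enters the forecast: the model sees only the variable's own past.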

The univariate forecasting approach rests on a key assumption. Whatever factors affect the single target variable are assumed to keep driving it in the same way over time, so its own history is enough to predict its future.

This strong assumption often breaks down in the real world, leading to complex situations that require more than univariate forecasting. That is where Multivariate Time Series forecasting comes into play!

A multivariate time series has more than one time-dependent variable. Each variable depends not only on its past values but also has some dependency on other variables. This dependency can be used to forecast future values.
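A classical way to express this cross-dependency is a vector autoregression (VAR). We use it here purely as an illustration; it is not one of the models this article focuses on. The sketch below iterates a VAR(1) model, y_t = c + A y_{t-1}, forward in time. The coefficient values are made up; in practice they would be estimated from data (for example with the `VAR` class in statsmodels).

```python
from typing import List, Sequence


def var1_forecast(
    last: Sequence[float],
    intercept: Sequence[float],
    coef: Sequence[Sequence[float]],
    horizon: int,
) -> List[List[float]]:
    """Iterate a VAR(1) model y_t = c + A @ y_{t-1} forward `horizon` steps.

    coef[i][j] is the effect of variable j at time t-1 on variable i at time t,
    so the off-diagonal entries encode the dependency on *other* variables.
    """
    n = len(intercept)
    current = [float(v) for v in last]
    forecasts: List[List[float]] = []
    for _ in range(horizon):
        nxt = [intercept[i] + sum(coef[i][j] * current[j] for j in range(n)) for i in range(n)]
        forecasts.append(nxt)
        current = nxt  # multi-step forecasts feed back the previous forecast
    return forecasts


# Usage: variable 0 depends on its own lag and on variable 1; variable 1 only on itself.
A = [[0.5, 0.25],
     [0.0, 0.5]]
print(var1_forecast(last=[1.0, 2.0], intercept=[0.0, 0.0], coef=A, horizon=2))
# → [[1.0, 1.0], [0.75, 0.5]]
```

Setting the off-diagonal coefficient to zero would reduce each variable to its own univariate model. The cross terms are exactly what multivariate forecasting adds.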

Challenges associated with Multivariate Time Series Forecasting

As discussed, time series forecasting often has to deal with multivariate data. Multivariate forecasting has been widely adopted over the years because it can take multiple variables into account and provide forecasts that are as realistic and accurate as possible.

Don’t forget! Our main goal is to help enterprises make robust, informed business decisions by providing trustworthy forecasts.

Yet multivariate time series forecasting is a challenging task for many reasons:

- Complexity: Multivariate time series are generally fast-flowing, complex, high-dimensional data. In most real-life situations, data arrives at high speed, with multiple input variables, complex seasonality, non-stationarity, and irregular temporal structures.

- Time dependency: Observations are ordered in time, so the value at the current time t must be predicted from the values at times before t.

- Non-linearity: Multivariate time series involve non-linear interdependencies between the variables of interest, which makes it very difficult for linear models to capture the underlying patterns.
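The time-dependency constraint is usually handled with a sliding window. The series is turned into supervised (input, target) pairs, so that each target at time t is paired only with observations from times before t. A minimal sketch follows; the function name `make_windows` and the window length are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[Sequence[float]], window: int, target_col: int
) -> Tuple[List[List[List[float]]], List[float]]:
    """Split a multivariate series (one row per time step) into sliding windows.

    Each sample X[k] holds the `window` rows strictly before time t, and
    y[k] is the target variable's value at time t, so no future data leaks in.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    X: List[List[List[float]]] = []
    y: List[float] = []
    for t in range(window, len(series)):
        X.append([list(row) for row in series[t - window:t]])  # times t-window .. t-1
        y.append(series[t][target_col])                        # time t
    return X, y


# Usage: two variables observed over four time steps; forecast variable 0.
series = [[1, 10], [2, 20], [3, 30], [4, 40]]
X, y = make_windows(series, window=2, target_col=0)
print(X)  # → [[[1, 10], [2, 20]], [[2, 20], [3, 30]]]
print(y)  # → [3, 4]
```

Every input sample keeps all variables, so a model trained on these pairs can learn from their interdependencies, not just from the target's own past.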

Accurate forecasting of future behavior therefore demands robust and efficient techniques that can handle this complex, time-dependent, non-linear behavior. Classical statistical methods are often poorly suited to this kind of data.

AI-powered multivariate time series forecasting

As an important part of the field of artificial intelligence, Deep Learning neural networks can be powerful forecasting tools. They can discover complex non-linear dependencies between features directly from raw, high-dimensional data.

Well-established deep neural network architectures have been widely applied to Multivariate Time Series forecasting. These include Deep Feed Forward (DFF) networks, Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs). Their adoption has been helped by open-source deep learning frameworks such as Keras and TensorFlow, which provide flexible and sophisticated mathematical libraries.

1. Deep Feed Forward (DFF) Neural Networks

One of the most popular neural network paradigms is the Feed-Forward Neural Network (FNN), often called the Multi-Layered Perceptron (MLP).

In an FNN, neurons are arranged in layers: an input layer, one or more hidden layers, and an output layer. Adding hidden layers lets the network learn more complex decision boundaries, which leads us to the Deep Feed Forward Neural Network.

Each neuron in one layer is connected to every neuron in the next layer. Information flows in one direction only, from the input layer through the hidden layers to the output layer, with no feedback loops.
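The fully connected, one-directional flow can be sketched as a forward pass in plain Python. This is an illustration only. The weights are hand-picked rather than learned, and the ReLU hidden activation and linear output are common but assumed choices. In practice the network would be built and trained with a framework such as Keras. The input here stands for a flattened window of past observations.

```python
from typing import Callable, List, Sequence, Tuple

Layer = Tuple[Sequence[Sequence[float]], Sequence[float], Callable[[float], float]]


def relu(v: float) -> float:
    return max(0.0, v)


def identity(v: float) -> float:
    return v


def dense(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float],
          activation: Callable[[float], float]) -> List[float]:
    """Fully connected layer: weights[j] holds the weights from *every* input to neuron j."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weights, bias)]


def dff_forward(x: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Feed the input forward through each layer in turn; nothing flows backwards."""
    h = list(x)
    for weights, bias, activation in layers:
        h = dense(h, weights, bias, activation)
    return h


# Usage: 2 inputs (e.g. two lagged values) -> 2 hidden ReLU neurons -> 1 linear output.
layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[2.0, 2.0]], [1.0], identity),
]
print(dff_forward([1.0, 2.0], layers))  # → [4.0]
```

Training would adjust the weights and biases by backpropagation. The forward pass itself stays exactly this chain of fully connected layers.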

Because of their highly connected structure, neural networks exhibit several desirable features:
- high speed through parallel computation;
- resistance to hardware failure;
- robustness in handling different types of data;
- graceful degradation, meaning the ability to keep processing noisy or incomplete information;
- the capacity to learn and adapt.

A Deep Feed Forward Neural Network – Source: medium.com

2. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a category of neural networks that have proven very effective in areas such as image recognition, image classification, and object detection.

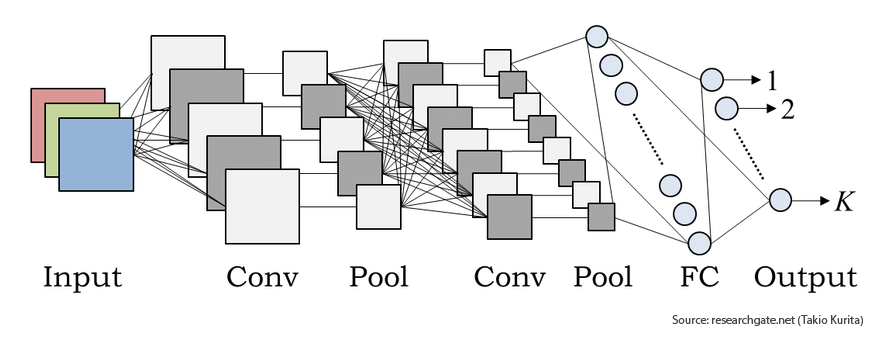

Typical CNN architecture

Convolutions preserve the spatial relationships between pixels by learning image features from small squares of input data. This lets the model learn to automatically extract, from the raw data, the features that are most useful for the problem at hand.

Convolutions are a natural and powerful tool in the CNN architecture for capturing spatially invariant patterns. Their primary purpose is to extract features from the input image and detect local relationships that are invariant across spatial dimensions. What about temporal patterns?

The ability of CNNs to learn and automatically extract features from raw input data can also be applied to time series forecasting. A sequence of observations can be treated like a one-dimensional image. A CNN model reads it and refines it into its most relevant elements through multiple layers of causal convolutions, that is, convolutional filters designed so that only past information is used for forecasting.
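A causal 1-D convolution can be written directly from that definition. The output at time t is a weighted sum of the input at t and earlier steps only, with implicit zero padding on the left. The sketch below is illustrative (single channel, hand-set kernel). In Keras the same behaviour is available through `Conv1D` with `padding="causal"`.

```python
from typing import List, Sequence


def causal_conv1d(x: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Single-channel causal convolution.

    y[t] = sum_k kernel[k] * x[t - k]; indices before the start of the
    series are treated as zeros, so y[t] never depends on x[t + 1:].
    """
    out: List[float] = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k
            if idx >= 0:  # skip the implicit left zero padding
                acc += w * x[idx]
        out.append(acc)
    return out


# Usage: a kernel of [1, 1] adds each value to the previous one.
print(causal_conv1d([1.0, 2.0, 3.0, 4.0], kernel=[1.0, 1.0]))  # → [1.0, 3.0, 5.0, 7.0]
```

Changing a future value never changes an earlier output, which is the property a forecaster needs.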

This technique remains challenging for Multivariate Time Series data where long-term dependencies are significant. A stack of ordinary convolutions only covers a window of past timesteps that grows linearly with depth.

Specialized convolutional architectures perform quite well in this case, including dilated convolutions. Stacking convolutional layers with increasing dilation factors lets the receptive field grow exponentially with depth and cover thousands of timesteps. As a result, the output of each convolution encompasses rich information for tracking long-term dependencies.
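The exponential growth follows from simple arithmetic. Assume kernel size k and dilations doubling as 1, 2, 4, ..., 2^(L-1) over L stacked causal layers; WaveNet uses this kind of schedule. The receptive field is then 1 + (k - 1)(2^L - 1) timesteps. The sketch below computes that formula. It then cross-checks it by pushing an impulse through a stack of dilated causal convolutions. All names are illustrative assumptions.

```python
from typing import List, Sequence


def dilated_causal_conv(x: Sequence[float], kernel: Sequence[float], dilation: int) -> List[float]:
    """Causal convolution whose taps are `dilation` steps apart (zeros before t = 0)."""
    return [
        sum(w * x[t - k * dilation] for k, w in enumerate(kernel) if t - k * dilation >= 0)
        for t in range(len(x))
    ]


def receptive_field(kernel_size: int, num_layers: int) -> int:
    """Timesteps seen by the last layer when dilations double: 1, 2, 4, ..."""
    return 1 + (kernel_size - 1) * (2 ** num_layers - 1)


def empirical_receptive_field(kernel_size: int, num_layers: int, length: int) -> int:
    """Count how many outputs an impulse at t = 0 reaches through the stack.

    Because the layers are time-invariant, the outputs reached by one input
    correspond to the inputs seen by one output.
    """
    signal = [1.0] + [0.0] * (length - 1)
    kernel = [1.0] * kernel_size  # positive weights, so reached outputs stay non-zero
    for layer in range(num_layers):
        signal = dilated_causal_conv(signal, kernel, dilation=2 ** layer)
    return sum(1 for v in signal if v != 0.0)


print(receptive_field(kernel_size=2, num_layers=10))  # → 1024
print(receptive_field(kernel_size=3, num_layers=10))  # → 2047
print(empirical_receptive_field(kernel_size=2, num_layers=4, length=40))  # → 16
```

With only ten layers and a kernel of size 2, the model already sees 1024 past timesteps. Without dilation, the same stack would see only 11.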

The same concept is applied in DeepMind’s WaveNet, which was designed to advance the state of the art for text-to-speech systems. The WaveNet model’s architecture allows it to exploit the efficiencies of convolution layers while simultaneously alleviating the challenge of learning long-term dependencies across a large number of timesteps (1000+).

WaveNet – Source: deepmind.com

3. Recurrent Neural Networks (RNNs)

A Recurrent Neural Network (RNN) is a class of artificial neural networks in which connections between nodes form a directed graph along a temporal sequence.

Because they can use their internal state (memory) to process variable-length input sequences, RNNs can be of significant help in multivariate time series forecasting. They are also used in a variety of Natural Language Processing (NLP) tasks.

An RNN is a network with feedback loops that allow information to persist from one step to the next.

Typical Recurrent Neural Network – Source: colah.github.io

In the above diagram, a chunk of neural network, A, looks at some input x_t and outputs a value h_t. A loop allows information to be passed from one step of the network to the next.
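The loop in the diagram amounts to reusing the same weights at every time step. The previous output h_{t-1} is fed back in alongside the new input x_t. Below is a minimal sketch of a vanilla (Elman-style) RNN cell in plain Python. The tanh activation is a common but assumed choice, and the weight values in the usage example are arbitrary.

```python
import math
from typing import List, Sequence


def rnn_step(x: Sequence[float], h: Sequence[float], w_x: Sequence[Sequence[float]],
             w_h: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """One step of a vanilla RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(sum(wi * xi for wi, xi in zip(w_x[j], x))
                  + sum(ui * hi for ui, hi in zip(w_h[j], h))
                  + b[j])
        for j in range(len(b))
    ]


def rnn_forward(xs: Sequence[Sequence[float]], w_x: Sequence[Sequence[float]],
                w_h: Sequence[Sequence[float]], b: Sequence[float]) -> List[List[float]]:
    """Run the cell over a sequence; the same weights are reused at every step."""
    h = [0.0] * len(b)  # initial hidden state (the "memory") starts at zero
    states: List[List[float]] = []
    for x in xs:
        h = rnn_step(x, h, w_x, w_h, b)  # the loop: h_{t-1} feeds into step t
        states.append(h)
    return states


# Usage: 2 input variables, 1 hidden unit, 3 time steps.
states = rnn_forward([[1.0, 0.5], [0.0, 0.0], [0.0, 0.0]],
                     w_x=[[1.0, 1.0]], w_h=[[0.5]], b=[0.0])
print(states)  # the first input still influences h at the later steps
```

The inputs at steps 2 and 3 are zero, yet the hidden state stays non-zero. The information from step 1 persists through the feedback loop.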

Plain RNNs struggle to learn long-range dependencies in the data. The gradients propagated back through many time steps tend to vanish or explode.
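Why the gradients vanish or explode can be seen on a single-unit tanh RNN, h_t = tanh(w h_{t-1} + x_t). By the chain rule, dh_T/dh_0 is the product over all steps of w (1 - h_t^2), one factor per time step. A per-step factor below 1 shrinks the gradient geometrically; a factor above 1 inflates it. The sketch below computes that product. It is a simplified scalar illustration, not how a deep learning framework computes gradients.

```python
import math
from typing import Sequence


def gradient_through_time(w: float, xs: Sequence[float], h0: float = 0.0) -> float:
    """Return dh_T / dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    Each step contributes the local derivative w * (1 - h_t**2); the
    gradient over T steps is the product of those T factors.
    """
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)
    return grad


# Usage: 20 steps of zero input from h_0 = 0, so every tanh derivative equals 1.
print(gradient_through_time(0.5, [0.0] * 20))  # → 9.5367431640625e-07 (0.5 ** 20, vanishing)
print(gradient_through_time(2.0, [0.0] * 20))  # → 1048576.0 (2 ** 20, exploding)
```

When the tanh saturates, the factor (1 - h_t^2) drops below 1 and makes vanishing even more likely. This is the problem the gated units discussed next were designed to mitigate.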

Gated Recurrent Units (GRU) and Long Short-Term Memory units (LSTM) address the vanishing gradient problem encountered by traditional RNNs. GRU is a simplified variant of LSTM.

Both rely on gating mechanisms that regulate the flow of information, for example to remember context over multiple time steps. The gates decide which information from the past to keep and which to forget. To achieve this, LSTM has three gates (input, forget, and output), while GRU uses two (update and reset).
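The gating idea can be sketched with a single GRU unit reading a multivariate input. The sketch follows a common formulation with two gates. The update gate z interpolates between the previous state and a candidate state. The reset gate r decides how much of the previous state feeds into that candidate. Libraries differ in small details, for example which side of the interpolation z multiplies and where the reset gate is applied. The class name `GRUUnit` and all weights are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


@dataclass
class GRUUnit:
    """One GRU unit (hidden size 1) reading a multivariate input vector."""
    w_z: Sequence[float]  # update gate: input weights
    u_z: float            # update gate: recurrent weight
    b_z: float            # update gate: bias
    w_r: Sequence[float]  # reset gate: input weights
    u_r: float
    b_r: float
    w_h: Sequence[float]  # candidate state: input weights
    u_h: float
    b_h: float

    def step(self, x: Sequence[float], h: float) -> float:
        z = sigmoid(dot(self.w_z, x) + self.u_z * h + self.b_z)  # how much old state to keep
        r = sigmoid(dot(self.w_r, x) + self.u_r * h + self.b_r)  # how much old state to reuse
        candidate = math.tanh(dot(self.w_h, x) + self.u_h * (r * h) + self.b_h)
        return z * h + (1.0 - z) * candidate  # z near 1 remembers, z near 0 overwrites

    def run(self, xs: Sequence[Sequence[float]], h0: float = 0.0) -> List[float]:
        h, states = h0, []
        for x in xs:
            h = self.step(x, h)
            states.append(h)
        return states


# Usage: a strongly positive update-gate bias makes the unit hold on to its state.
keeper = GRUUnit(w_z=[0.0, 0.0], u_z=0.0, b_z=20.0,
                 w_r=[0.0, 0.0], u_r=0.0, b_r=0.0,
                 w_h=[1.0, 1.0], u_h=0.0, b_h=0.0)
print(keeper.run([[5.0, 5.0], [-5.0, -5.0]], h0=0.8))  # stays very close to 0.8
```

An LSTM cell works in the same spirit but keeps a separate cell state, controlled by its input, forget, and output gates.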

The significant achievement of both LSTM and GRU is their ability to make use of short-term memory over long periods. Because of its simpler structure, a GRU model is computationally more efficient and faster to train than an LSTM, and it often performs comparably. Which of the two forecasts better depends on the problem.
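Part of GRU's efficiency advantage can be quantified by counting weights. In the standard formulations, each gate or candidate block owns three sets of parameters. These are an input weight matrix (hidden x input), a recurrent weight matrix (hidden x hidden), and a bias vector. LSTM has four such blocks and GRU has three. The helper below does that arithmetic. Some implementations add extra bias terms (Keras's GRU with `reset_after=True`, for instance), so their reported counts differ slightly.

```python
def recurrent_params(input_dim: int, hidden_dim: int, blocks: int) -> int:
    """Weights + biases of a recurrent layer made of `blocks` gate/candidate blocks."""
    per_block = hidden_dim * input_dim + hidden_dim * hidden_dim + hidden_dim
    return blocks * per_block


def lstm_params(input_dim: int, hidden_dim: int) -> int:
    # input, forget and output gates plus the candidate cell state
    return recurrent_params(input_dim, hidden_dim, blocks=4)


def gru_params(input_dim: int, hidden_dim: int) -> int:
    # update and reset gates plus the candidate state
    return recurrent_params(input_dim, hidden_dim, blocks=3)


# Usage: 10 input variables, 64 hidden units.
print(lstm_params(10, 64))  # → 19200
print(gru_params(10, 64))   # → 14400
```

At equal sizes, a GRU layer has exactly three quarters of an LSTM layer's parameters, and correspondingly fewer multiplications per time step. Whether that also translates into better forecasts has to be checked on the data at hand.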

Conclusion

Analyzing time-oriented data and forecasting its future values are among the most critical problems that analysts face in many fields, especially when it comes to multivariate time series data.

Recently, Artificial Intelligence models have established themselves as serious contenders to classical statistical models in the forecasting community. Neural networks, from basic architectures such as DFF to memory-based ones such as RNNs, have already shown their ability to capture temporal patterns as well as long-term dependencies in multivariate time series. Meanwhile, a lot of research is being invested in hybrid models, which combine several forecasting models and lead to powerful and promising results.

Abbreviations

- ARIMA – Auto-Regressive Integrated Moving Average

- AI – Artificial Intelligence

- DFF – Deep Feed Forward

- FNN – Feed-Forward Neural Network

- MLP – Multi-Layered Perceptron

- CNN – Convolutional Neural Network

- RNN – Recurrent Neural Network

- NLP – Natural Language Processing

- LSTM – Long Short-Term Memory

- GRU – Gated Recurrent Unit