We are initiating a series of articles on garbage collection with a progressive approach. Our goal is to spotlight the theoretical concepts and the practical implementation, providing clear illustrations of the associated challenges.

Introduction

Garbage collection is a process in computer programming and memory management where the system automatically identifies and frees up memory that is no longer in use by the program. The primary purpose of garbage collection is to reclaim memory occupied by objects or data structures that are no longer reachable or referenced by the program, preventing memory leaks and improving the efficiency of memory utilization.

We will delve into the core principles and advantages of this paradigm and simply implement (in C#) the theoretical concepts that we address, gaining insights into the limitations of each concept. Ultimately, we aim to develop a comprehensive understanding of how garbage collection works in modern programming languages.

The authoritative textbook on this topic is The Garbage Collection Handbook published in 2023. This book encapsulates the wealth of knowledge amassed by researchers and developers in the field of automatic memory management over the past sixty years.

This article was originally published here: Implementing a simple garbage collector in C#

What is Garbage Collection Exactly?

As said in the introduction, garbage collection is a process in computer programming and memory management where the system automatically identifies and frees up memory that is no longer in use by the program.

Key Concepts of Garbage Collection

-

Garbage collection automates the process of deallocating memory, eliminating the need for manual memory management by programmers. This helps prevent common issues such as memory leaks and dangling pointers (see below).

-

Objects are considered reachable if they are directly or indirectly referenced by the program. Garbage collection identifies and marks the objects that are no longer reachable, making them eligible for removal.

-

The garbage collector identifies and releases memory occupied by unreferenced objects, returning it to the pool of available memory for future use.

-

Garbage collection can be triggered by various events, such as when the system is running low on memory or when a specific threshold of allocated memory is reached.

Garbage collection is universally adopted by nearly all modern programming languages:

- Java utilizes the JVM's garbage collector, which includes various algorithms such as the generational garbage collector (G1 GC).

- .NET (C#) implements a garbage collector that uses a generational approach.

Garbage collection contributes to the overall robustness and reliability of programs by automating memory management and reducing the likelihood of memory-related errors.

What is the Problem with Manual (Explicit) Memory Management?

Manual memory management is not inherently problematic and is still employed in certain prominent programming languages such as C++. However, many are familiar with instances of critical bugs attributable to memory leaks. This is why, relatively early in the history of computer science (with the first technique dating back to the 1950s), methods for automatically managing memory were studied.

Manual memory management indeed introduces several challenges and potential issues:

-

Failure to deallocate memory properly can lead to memory leaks. Memory leaks occur when a program allocates memory but fails to release it, resulting in a gradual depletion of available memory over time.

-

Improper handling of pointers can result in dangling pointers, pointing to memory locations that have been deallocated. Accessing such pointers can lead to undefined behavior and application crashes.

-

Incorrect manipulation of memory, such as writing beyond the bounds of allocated memory, can cause memory corruption. Memory corruption can result in unexpected behavior, crashes, or security vulnerabilities.

-

Manual memory management increases code complexity, making programs more prone to bugs and harder to maintain. Developers need to carefully track and manage memory allocations and deallocations throughout the codebase.

-

Manual memory management can contribute to memory fragmentation, where free memory is scattered in small, non-contiguous blocks. This fragmentation can lead to inefficient memory utilization over time.

-

Mismatched allocation and deallocation operations can occur, leading to errors like double frees or memory leaks. These errors are challenging to identify and debug.

-

Explicit memory management can require additional code for allocation and deallocation, impacting program performance. Memory allocation and deallocation operations may become bottlenecks in performance-critical applications.

-

Manual memory management lacks safety features present in automatic memory management systems. Developers need to carefully handle memory-related operations, making programs more error-prone.

Automatic memory management addresses these issues by automating the process of memory allocation and deallocation, reducing the burden on developers and minimizing the likelihood of memory-related errors.

A more comprehensive overview of the benefits of garbage collection and drawbacks of explicit memory management is provided in The Garbage Collector Handbook.

What are Stacks, Heaps and More?

When discussing garbage collection, terms such as stack and heap are frequently mentioned to the extent that it may create the impression that they are inherent data structures embedded in the operating system, and thus, must be universally adopted without question. However, the reality is that heap and stacks are just implementation details. In this section, our aim is to elucidate and clarify all these concepts.

In the Beginning was the Word

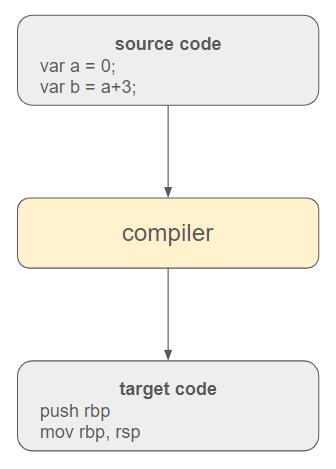

A program is essentially a text comprising instructions that a computer needs to comprehend. The responsibility of the compiler is to undertake the translation of these high-level instructions into executable code.

In contemporary programming languages, a virtual execution engine is often interposed between the source code and the operating system. Additionally, the source code is initially translated into an intermediate language to enhance portability and adaptability to different environments.

This execution engine encompasses the garbage collector and other techniques (like typechecking, exception management, etc.) to manage the program. The ultimate translation into target code is executed by the just-in-time (JIT) compiler.

Focus on the Garbage Collector

Certainly, the garbage collector stands out as one of the most crucial components of the execution engine. In this context, our focus will be on describing how memory is organized to facilitate effective management.

A program becomes valuable only when it generates a result, and inevitably, it needs to declare variables and allocate memory to store information at some point.

- The execution engine can anticipate the space it requires in advance, such as when dealing with simple types like integers. Conversely, certain data types, including custom classes or built-in types like strings or arrays of objects, do not allow for such straightforward inference.

- The compiler might only ascertain at runtime the specific location to store variables and reserve memory at that moment (dynamic allocation). If the compiler can determine the variable's lifetime, especially if it is local to a procedure, it becomes simpler to understand when the variable will no longer be necessary (i.e., when deallocation can occur). Conversely, data may persist beyond the call to a function or subroutine and endure indefinitely.

The disparity between a variable's lifetime and the space known in advance to be required necessitates the distinction between two memory areas. Although this differentiation is not obligatory, it has become universally accepted and implemented. A programming language that chooses not to adopt this abstraction may face the risk of fading into obsolescence, but it's essential to acknowledge that alternative schemes are conceivable. Moreover, it's crucial to note that this perspective is solely valid within the context of the execution engine. From the operating system's standpoint, everything eventually boils down to memory chunks allocated somewhere.

Conclusion

- When the execution engine can anticipate the lifetime of a variable and infer the required space, it allocates it in a memory area internally referred to as the stack.

- In other cases, where the lifetime cannot be definitively decided or the required space is unclear, the execution engine allocates variables in memory areas referred to as the heap.

Disclaimer: This depiction provides only a basic overview of what stacks and heaps are in various programming languages, and purists may find it oversimplified. It is by no means an official definition, as there could be various implementations and interpretations of these abstractions. The key takeaway is the necessity to delineate two memory areas at the execution engine level to facilitate efficient memory management.

Enough Theory, Code Please!

One should envision a scenario where a program is initially written in source code and subsequently compiled into an intermediate representation, manifested as a sequential list of instructions. We will assume that the IL compiler has generated this list of instructions. An example of such a list is provided below.

thread1;CREATE_THREAD;

thread1;PUSH_ON_STACK;Jubilant

thread2;CREATE_THREAD;

thread1;PUSH_ON_STACK;Radiant

thread1;POP_FROM_STACK

...

Each instruction consists of three components. The first indicates which thread is affected by the instruction, the second describes the operation to be performed, and the third (optional), provides a value to complement the operation. In the provided example, the compiler is initially instructed to create a thread named thread1 and then push a variable named Jubilant onto the stack of this thread. The third instruction calls for the creation of another thread named thread2 and so forth. The fifth instruction attempts to pop the stack of thread1.

This list of instructions can be envisioned as an intermediate representation (IR), as illustrated in Compilers: Principles, Techniques and Tools.

Our current task is to implement an execution engine (runtime) capable of handling these instructions, with a particular focus on memory allocation and deallocation.

Implementing the Runtime

Without further delay, here is how the runtime is implemented in our code.

public class Runtime

{

private List<Instruction> _instructions;

private Collectors.Interfaces.GarbageCollector _collector;

public Runtime(List<Instruction> instructions, string collectorStrategy)

{

_instructions = instructions;

_collector = GetCollector(collectorStrategy);

}

#region Public Methods

public void Run()

{

foreach (var instruction in _instructions)

{

_collector.ExecuteInstruction(instruction);

_collector.PrintMemory();

}

}

#endregion

}

The execution engine possesses the list of instructions to execute and a garbage collector (described below) to be utilized during memory deallocation. It also includes a Run method called by the main thread.

public class Program

{

static void Main(string[] args)

{

var instructions = new List<Instruction>();

var contents = File.ReadAllLines(AppContext.BaseDirectory + "/instructions.txt");

foreach (var line in contents)

{

var c = line.Split(';');

var intruction = new Instruction(c[0], c[1], c[2]);

instructions.Add(intruction);

}

var runtime = new Runtime(instructions, "MARK_AND_COMPACT");

runtime.Run();

}

}

The Runtime class also requires a memory collection strategy to apply. As we will explore, there are several possibilities for memory collection, and designing an effective garbage collector is indeed a subtle art.

Defining the GarbageCollector Interface

The Runtime class requires a GarbageCollection instance to operate. This instance implements the GarbageCollection abstract class, whose methods are detailed below:

public abstract class GarbageCollector

{

public abstract void ExecuteInstruction(Instruction instruction);

public abstract int Allocate(string field);

public abstract void Collect();

public abstract void PrintMemory();

}

In essence, the GarbageCollector class presented here serves as an orchestrator to execute the list of instructions and manage memory allocation and collection. In real-world implementations, these abstractions would typically be kept separate, but for simplicity's sake, the goal here is to streamline the representation.

The PrintMemory method is merely a helper function designed to visualize the content of the heap during debugging.

Defining a Thread

A thread refers to the smallest unit of execution within a process. A process is an independent program that runs in its own memory space, and within a process, multiple threads can exist. Each thread shares the same resources, such as memory, with other threads in the same process.

- Threads enable concurrent execution of tasks, allowing multiple operations to be performed simultaneously. They operate independently, with their own program counter, registers, and stack, but they share the same memory space. Threads within the same process can communicate and synchronize with each other.

- Threads are widely used in modern software development to improve performance, responsiveness, and resource utilization in applications ranging from desktop software to server applications and distributed systems.

public class RuntimeThread

{

private Collectors.Interfaces.GarbageCollector _collector;

private Stack<RuntimeStackItem> _stack;

private Dictionary<int, RuntimeStackItem> _roots;

private int _stackSize;

private int _index;

public string Name { get; set; }

public RuntimeThread(Collectors.Interfaces.GarbageCollector collector,

int stackSize, string name)

{

_collector = collector;

_stack = new Stack<RuntimeStackItem>(stackSize);

_roots = new Dictionary<int, RuntimeStackItem>();

_stackSize = stackSize;

_index = 0;

Name = name;

}

public Dictionary<int, RuntimeStackItem> Roots => _roots;

public void PushInstruction(string value)

{

_index++;

if (_index > _stackSize) throw new StackOverflowException();

var address = _collector.Allocate(value);

var stackItem = new RuntimeStackItem(address);

_stack.Push(stackItem);

_roots.Add(address, stackItem);

}

public void PopInstruction()

{

_index--;

var item = _stack.Pop();

_roots.Remove(item.StartIndexInTheHeap);

}

}

A thread is characterized by a name and executes its own list of instructions. The crucial point is that threads share the same memory. This translates into the fact that in a program, there can be multiple threads, and consequently, multiple stacks, but there is generally one heap shared among all threads.

In the provided example, when a thread executes an instruction, it is placed on its stack, and any potential reference to the heap is retained in an internal dictionary. This mechanism ensures that each thread maintains its own stack while references to the shared heap are tracked to manage memory allocation and deallocation effectively.

Defining the Stacks and the Heap

As mentioned earlier, stacks and heap are implementation details, so their code is deferred to the concrete classes of GarbageCollector. It could still be informative, nonetheless, to provide a brief description of them because, as we observed, these concepts are universally adopted.

Implementing the Stack

In C#, there already exists a data structure for stacks. For simplicity, we will use it, especially since it is well-suited to our requirements.

We will push instances of a RuntimeStackItem class onto this stack. RuntimeStackItem simply contains a reference to an address in the heap.

public class RuntimeStackItem

{

public int StartIndexInTheHeap { get; set; }

public RuntimeStackItem(int startIndexInTheHeap)

{

StartIndexInTheHeap = startIndexInTheHeap;

}

}

Implementing the Heap

A heap must represent a memory area, and we will simply model each memory cell with a char class. With this abstraction, a heap is nothing more than a list of cells that can be allocated. It also includes additional plumbing code, such as pointers, to manage which cells are occupied or can be reclaimed.

public class RuntimeHeap

{

public RuntimeHeap(int size)

{

Size = size;

Cells = new RuntimeHeapCell[size];

Pointers = new List<RuntimeHeapPointer>();

for (var i = 0; i < size; i++)

{

Cells[i] = new RuntimeHeapCell();

}

}

#region Properties

public int Size { get; set; }

public RuntimeHeapCell[] Cells { get; set; }

public List<RuntimeHeapPointer> Pointers { get; set; }

#endregion

}

Our task now is to define concrete classes of GarbageCollection to observe a garbage collector in action.

Implementing the Mark-and-Sweep Algorithm

For this strategy and the subsequent ones, we will allocate a heap of 64 characters, and we will consider it impossible to claim additional space from the operating system. In a real-world scenario, the garbage collector typically has the ability to request new memory, but for illustrative purposes, we impose this limitation. It is important to note that this limitation neither hinders nor detracts from the overall understanding.

Implementing the ExecuteInstruction Method

This method is nearly the same for all strategies. It processes one instruction at a time, pushing or popping variables on the stack.

public override void ExecuteInstruction(Instruction instruction)

{

if (instruction.Operation == "CREATE_THREAD")

{

AddThread(instruction.Name);

return;

}

var thread = _threads.FirstOrDefault(x => x.Name == instruction.Name);

if (thread == null) return;

if (instruction.Operation == "PUSH_ON_STACK")

{

thread.PushInstruction(instruction.Value);

}

if (instruction.Operation == "POP_FROM_STACK")

{

thread.PopInstruction();

}

}

Implementing the Allocate Method

If there is insufficient memory, the garbage collector attempts to reclaim memory and then retries the allocation process. If there is still not enough space, an exception is raised.

public override int Allocate(string field)

{

var r = AllocateHeap(field);

if (r == -1)

{

Collect();

r = AllocateHeap(field);

}

if (r == -1) throw new InsufficientMemoryException();

return r;

}

private int AllocateHeap(string field)

{

var size = _heap.Size;

var cells = field.ToArray();

var requiredSize = cells.Length;

var begin = 0;

while (begin < size)

{

var c = _heap.Cells[begin];

if (c.Cell != '\0')

{

begin++;

continue;

}

if ((size - begin) < requiredSize) return -1;

var subCells = _heap.Cells.Skip(begin).Take(requiredSize).ToArray();

if (subCells.All(x => x.Cell == '\0'))

{

for (var i = begin; i < begin + requiredSize; i++)

{

_heap.Cells[i].Cell = cells[i - begin];

}

var pointer = new RuntimeHeapPointer(begin, requiredSize);

_heap.Pointers.Add(pointer);

return begin;

}

begin++;

}

return -1;

}

Important: The allocation process consistently seeks contiguous free space (line 23 of the source code above). For instance, if it has to allocate 8 cells, it must locate 8 free contiguous cells. This observation will prove crucial in the subsequent sections (see below).

What is the Mark-and-Sweep Algorithm?

The mark-and-sweep algorithm consists of two main phases: marking and sweeping.

- In the marking phase, the algorithm traverses through all the objects in the heap, starting from the roots (typically global variables or objects directly referenced by the program). It marks each visited object as "reachable" or "live" by setting a specific flag or attribute. Objects not marked during this traversal are considered unreachable and are potentially candidates for deallocation.

- In the sweeping phase, the algorithm scans through the entire heap, identifying and deallocating memory occupied by unmarked (unreachable) objects. The memory occupied by marked (reachable) objects is retained. After sweeping, the heap is once again considered a contiguous block of free memory, ready for new allocations.

The mark-and-sweep algorithm is known for its simplicity and effectiveness in identifying and reclaiming memory that is no longer in use. However, it has some drawbacks as we will see next.

To sum up, an object is considered dead (and therefore reclaimable) until it demonstrates otherwise by being reachable from somewhere in the program.

public override void Collect()

{

MarkFromRoots();

Sweep();

}

Implementing the Marking Phase

The mark-and-sweep algorithm must commence its traversal from roots. What constitutes these roots? Typically, they encompass global variables or references contained in the frame of each stack. In our simplified example, we will define roots as solely comprising the objects referenced in the stack.

private void MarkFromRoots()

{

var pointers = _heap.Pointers;

foreach (var root in _threads.SelectMany(x => x.Roots))

{

var startIndexInTheHeap = root.Value.StartIndexInTheHeap;

var pointer = pointers.FirstOrDefault(x => x.StartCellIndex == startIndexInTheHeap);

if (pointer == null) return;

pointer.SetMarked(true);

}

}

At the conclusion of the procedure, live objects have been marked, whereas dead ones have not.

Implementing the Sweeping Phase

The sweeping phase scans through the entire heap and reclaims memory from objects that are not marked.

private void Sweep()

{

var cells = _heap.Cells;

var pointers = _heap.Pointers;

foreach (var pointer in pointers.Where(x => !x.IsMarked))

{

var address = pointer.StartCellIndex;

for (var i = address; i < address + pointer.AllocationSize; i++)

{

cells[i].Cell = '\0';

}

}

var list = new List<RuntimeHeapPointer>();

foreach (var pointer in pointers.Where(x => x.IsMarked))

{

pointer.SetMarked(false);

list.Add(pointer);

}

pointers = list;

}

In this procedure, we don't forget to reinitialize marked pointers and set them to unmarked to ensure that the next collection works again in a clean slate.

Running the Program

We will execute the program with two lists of instructions. The first one is identical to the one in the previous article. The second one will highlight a phenomenon specific to this algorithm known as fragmentation.

With the same set of instructions as before, we observe that the program runs until the end with no exception raised. The word "Cascade" was correctly allocated because the garbage collector succeeded in reclaiming sufficient memory.

This algorithm ensures that memory is correctly reclaimed when needed. However, in the example below, we will observe an undesirable effect when we slightly modify our instructions and attempt to allocate memory for a long word (16 letters).

...

thread1;PUSH_ON_STACK;Enigmatic

thread2;POP_FROM_STACK;

thread1;PUSH_ON_STACK;Cascade

thread2;POP_FROM_STACK;

thread2;PUSH_ON_STACK;GarbageCollector <= 16-letter words

Curiously, an exception is raised even though there appears to be enough free memory in the heap.

There are 19 free cells in the heap and we are attempting to allocate 16 cells, so why doesn't the garbage collector manage to perform the task ?

In reality, the garbage collector attempts to locate 16 free CONTIGUOUS cells, but it seems that the heap does not possess them. Although it has more than 16 free cells, these are not contiguous, rendering the allocation impossible. This phenomenon is known as fragmentation and has some undesirable effects.

More generally, heap fragmentation refers to a situation where the free memory in a heap is scattered in non-contiguous blocks, making it challenging to allocate a large, contiguous chunk of memory, even if the total free space is sufficient. While the total amount of free memory may be substantial, the scattered nature of these blocks hinders the allocation of large, contiguous portions of memory.

In the context of the mark-and-sweep algorithm, the need for contiguous memory blocks for certain allocations can be impeded by the scattered arrangement of free memory cells in the heap. As a consequence, even if the total free space is adequate, the inability to find a sufficiently large, contiguous segment can lead to allocation failures.

Addressing heap fragmentation is therefore crucial for optimizing memory usage and improving the performance of a garbage collector.

You can find the continuation of this article here.

History

- 18th November, 2023: Initial version