Auto-Scale Windows Azure Cloud Apps Using the Autoscaling Application Block

Use the Autoscaling Application Block to automatically scale Azure apps up or down based on rules

February 14, 2012

A few months ago in this column I provided some general guidance on how to build auto-scaling logic for your Azure hosted services (see "Auto-Scaling Windows Azure Roles: The Basics"). Recently, the Microsoft patterns & practices team (msdn.microsoft.com/en-us/practices) released the Enterprise Library Integration Pack for Windows Azure, which includes a robust auto-scaling implementation that's ready to use out of the box. This application has a long name -- Microsoft Enterprise Library Autoscaling Application Block for Windows Azure -- and the current version, Enterprise Library 5.0 - Autoscaling Application Block, is available for download at the NuGet website.

Enterprise Library has become a staple in the .NET developer's arsenal, and with this release the library was enhanced with two new "blocks" that address challenges specific to Azure: enabling application elasticity via auto-scaling and improving application resiliency with a robust approach to handling transient failures. In this article, I will introduce the concepts used by the Autoscaling Application Block, to give you a jump start on using it in your own projects.

Autoscaling Application Block Architecture

The purpose of the Azure Autoscaling Application Block is primarily to monitor various metrics of an Azure role and, based on rules, scale the instance count up, scale it down, or change service settings for the role (in order to throttle in some application-specific way). Before diving further into the mechanics of the Autoscaler, I want to take a moment to introduce the major components involved.

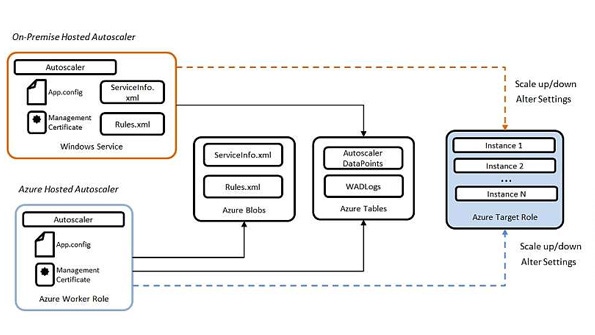

The Azure Autoscaling Application Block itself has a component, the Autoscaler, which must be hosted -- it can be hosted within an Azure web or worker role or within an on-premises Windows service, for example. Figure 1 shows how the major configuration and hosting pieces fit together in two common scenarios (on-premises and Azure-hosted).

Figure 1: Scenarios for hosting the Autoscaling Application Block

Let's begin by walking through the hosting scenarios in Figure 1. In the Azure Hosted Autoscaler scenario, an instance of the Autoscaler class can be instantiated and hosted within a web role or worker role. The Autoscaler communicates changes to target roles using the Azure management APIs, which is why any host of the Autoscaler will need access to the management certificate of the roles under its jurisdiction.

The actual configuration of the Autoscaling Block itself is stored within the application configuration of the host (web.config or app.config). This configuration includes which Azure storage accounts to use during execution, the thumbprint of the management certificate used during management operations, and the settings used to send email notifications about scaling events (as a systems administrator might require). Enterprise Library includes a Visual Studio tool that lets you graphically edit the block settings stored in these configuration files (see the Additional Resources box for the download link).

The service information store is another aspect of configuration that describes what Azure subscriptions, hosted services, and roles are managed by the Autoscaler. This store also names the storage account connection strings used to collect the various metrics employed in scaling decisions. This store (labeled ServiceInfo.xml in the figure) is typically just an XML file stored in Windows Azure Blob storage. Similarly, the rules store (Rules.xml in the figure) describes the criteria for what metrics are observed and what scaling actions can be taken in response. The rules file is also an XML file typically kept in Blob storage.

In Figure 1, you'll notice that two Azure tables are in use. The AutoscalerDataPoints table is where the collected metrics are stored. From there, the values of these metrics are used during rule evaluation. The WADLogs table is used when you have enabled the block to log runtime diagnostic messages using System.Diagnostics.

The only major differences in the on-premises scenarios are that you can choose to pull your service information and rules from the local file system instead of Blob storage and that you will likely host the Autoscaler instance in a process such as a Windows service.

Understanding Rules

Rules define the logic for taking some scaling action (e.g., increasing instance count) or throttling action (e.g., changing a setting). Each rule must define a name. Each rule also has a rank, a positive integer that defaults to 1; the higher the rank, the greater the preference for that rule over others. A rule can optionally define a description (helpful for adding details for an administrator) and a Boolean expression controlling whether or not it is enabled.

Rules in the block come in two flavors, constraint rules and reactive rules. Figure 2 shows a complete rules configuration in XML. In the sections that follow, we will return to this figure to illustrate a particular aspect of rules definition.

Constraint Auto-Scaling Rules

Constraint rules define the upper and lower bound on the role instance count, optionally according to a schedule. On one end, these protect your baseline operational performance, and on the other, they protect your wallet by helping to control Azure usage costs.

Within a constraint rule, you define actions and timetables. The actions specify the range (the minimum and maximum number of instances) allowed for a target role, and a timetable defines the schedule on which that range applies. When no timetable is defined, and the constraint rule is not explicitly disabled, the range is assumed to be always in effect for the target role. A timetable at minimum specifies a start time and duration but can optionally specify a start date, end date, and utcOffset (to enable you to set values in local time). The timetable also takes a child element that specifies the periods during which the rule is active, which can take one of these forms:

daily, weekly (naming which days), monthly (providing the day of the month), or yearly (providing the month and day of the month)

relative monthly (providing the day of the week and position -- such as every second Thursday) or relative yearly (providing the month and relative day -- such as the first Wednesday in December)
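To make the timetable idea concrete, here is a minimal Python sketch of how a daily timetable with a start time, a duration, and a UTC offset could be checked. It is an illustration only, not the block's implementation: the function name daily_window_active and its parameters are hypothetical, and it assumes the offset is added to UTC to obtain the rule's local time.

```python
from datetime import datetime, time, timedelta

def daily_window_active(now_utc, start, duration, utc_offset=timedelta(0)):
    """Return True if now_utc (a naive UTC datetime) falls inside a daily
    window that opens at local time `start` and lasts for `duration`.

    Assumption: durations of up to 24 hours, so only today's and
    yesterday's windows can contain the current moment.
    """
    local_now = now_utc + utc_offset  # shift UTC into the rule's local time
    for days_back in (0, 1):  # yesterday's window may run past midnight
        day = local_now.date() - timedelta(days=days_back)
        window_start = datetime.combine(day, start)
        if window_start <= local_now < window_start + duration:
            return True
    return False

# A window from 09:00 to 11:00 local time, with local time five hours behind UTC.
business_open = daily_window_active(
    datetime(2012, 2, 14, 14, 30), time(9, 0),
    timedelta(hours=2), timedelta(hours=-5))
```

The weekly, monthly, and relative forms would need an extra test on the date itself (for example, whether the local day is the second Thursday of the month) before the same time-window check.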

In Figure 2, we define a single constraint rule named "Default." This constraint rule specifies for the "crawlers" target Azure role that there can never be fewer than four or more than eight instances, regardless of what the reactive rules might determine.
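The effect of such a constraint on whatever instance count the reactive rules propose can be modeled as a simple clamp. The Python sketch below is illustrative only; Range and apply_constraint are hypothetical names, not part of the block's API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Range:
    """Hypothetical model of a constraint rule's range action."""
    target: str
    minimum: int
    maximum: int

def apply_constraint(proposed, constraint):
    """Clamp a proposed instance count into the allowed range."""
    return max(constraint.minimum, min(constraint.maximum, proposed))

# The "Default" rule from the text: between four and eight "crawlers" instances.
crawlers = Range(target="crawlers", minimum=4, maximum=8)

capped = apply_constraint(10, crawlers)    # a reactive rule asked for too many
floored = apply_constraint(2, crawlers)    # a reactive rule asked for too few
unchanged = apply_constraint(6, crawlers)  # already inside the range
```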

Reactive Auto-Scaling Rules

Reactive rules change instance count or apply throttling behavior in response to some condition. Reactive rules are defined with two parts: when and actions. In a nutshell, the elements within when define the condition governing when the actions are applied. A when may define one or more conditions as all, any, not, greater, greaterOrEqual, less, lessOrEqual, or equals. Each of these conditions refers by name to an operand, which identifies the source metric being evaluated. We will return to operands momentarily.

The actions of a reactive rule can specify either a scale or changeSetting action. In the case of a scale action, the name of the target role to scale and the amount are both specified. Note that the amount of scaling can be absolute (e.g., -1 to scale down by one instance) or proportional (e.g., -25%, which scales a role that currently has four instances running down by one instance). In the case of a changeSetting, you specify what service configuration setting to alter and what its new value should be.
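The arithmetic behind the two kinds of scale amount can be sketched in a few lines of Python. This is a hypothetical model rather than the block's code: scaled_count is an invented name, and the use of Python's round for proportional amounts is an assumption, since the text does not say how the block rounds fractional results.

```python
def scaled_count(current, amount):
    """Apply a scale amount such as "-1", "+2", or "-25%" to an instance count."""
    amount = amount.strip()
    if amount.endswith("%"):
        # Proportional: a percentage of the current instance count.
        fraction = float(amount[:-1]) / 100.0
        delta = round(current * fraction)  # rounding rule is an assumption
    else:
        # Absolute: a fixed number of instances to add or remove.
        delta = int(amount)
    return current + delta

# Both examples from the text take a four-instance role down to three.
after_absolute = scaled_count(4, "-1")
after_proportional = scaled_count(4, "-25%")
```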

Reactive rules evaluate the data collected by operands during the evaluation of conditions described in their when component.

Operands

The Autoscaling Block provides the following built-in heuristics, which supply the metrics from which reactive rules make their decision:

performance counters

Azure queue length

current role instance count

These "metrics datasources" are referred to as operands in the block.

In Figure 2, we define a performanceCounter operand that averages the CPU utilization over a period of five minutes for all instances in the "crawlers" Azure role. This operand is used by the "Crawlers high CPU" reactive rule, within its when element. There, a greaterOrEqual comparison is defined that returns true when the average CPU utilization over five minutes is greater than or equal to 20 percent. Upon matching that condition, the rule will scale the number of instances up in the "crawlers" role by two instances (as defined by the scale action).
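Conceptually, this operand reduces the collected data points to a single number that the condition then compares against a threshold. The Python sketch below models that flow under stated assumptions: window_average and greater_or_equal are hypothetical helpers, and the sample readings are made up for illustration rather than taken from a real AutoscalerDataPoints table.

```python
from datetime import datetime, timedelta

def window_average(data_points, now, window):
    """Average the values whose timestamps fall within `window` before `now`.

    data_points is a list of (timestamp, value) pairs; returns None when
    no data point falls inside the window.
    """
    recent = [value for stamp, value in data_points
              if now - window <= stamp <= now]
    return sum(recent) / len(recent) if recent else None

def greater_or_equal(value, threshold):
    """Model of a greaterOrEqual condition; missing data never matches."""
    return value is not None and value >= threshold

now = datetime(2012, 2, 14, 12, 0)
points = [
    (datetime(2012, 2, 14, 11, 50), 90.0),  # older than five minutes, ignored
    (datetime(2012, 2, 14, 11, 56), 15.0),
    (datetime(2012, 2, 14, 11, 58), 25.0),
    (datetime(2012, 2, 14, 12, 0), 20.0),
]
cpu_avg = window_average(points, now, timedelta(minutes=5))
should_scale_up = greater_or_equal(cpu_avg, 20)
```

With these sample readings the five-minute average is exactly 20, so the condition matches and the rule's scale action would be proposed.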

Rule Evaluation and Extensibility

Constraint and reactive rules are used together. In fact, a constraint rule is required to be in place in order for a reactive rule to take effect. Moreover, a constraint rule always takes precedence over a reactive rule.

In terms of rule evaluation ordering, the highest ranked rule wins. Rules with the same rank are handled specially depending on what the outcome of the rules suggests:

Increase instance count scenario: Largest increase wins.

Decrease instance count scenario: Smallest decrease wins.
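The ordering just described can be sketched as a small Python function. It is a hypothetical model, not the block's implementation: resolve is an invented name, each proposal is a (rank, change in instance count) pair, and the choice to let an increase beat a decrease at the same rank is an assumption that the text does not confirm.

```python
def resolve(proposals):
    """Pick one instance-count change from a list of (rank, delta) proposals."""
    if not proposals:
        return 0
    top_rank = max(rank for rank, _ in proposals)
    deltas = [delta for rank, delta in proposals if rank == top_rank]
    increases = [d for d in deltas if d > 0]
    if increases:
        return max(increases)  # largest increase wins
    decreases = [d for d in deltas if d < 0]
    if decreases:
        return max(decreases)  # closest to zero, i.e. smallest decrease wins
    return 0

largest_increase = resolve([(1, 2), (1, 3)])
smallest_decrease = resolve([(1, -1), (1, -3)])
higher_rank_wins = resolve([(2, -1), (1, 5)])
```

Whatever change comes out of this step would still be subject to the constraint rules, which always take precedence.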

The Autoscaling Block is also highly extensible, including support for custom operands and custom actions.

Custom Operands

If you want to base your scaling decisions on something other than a performance counter or the length of a Windows Azure queue, you can define custom operands for use by reactive rules. For example, you might define a custom operand if you wanted to base your scaling decision on the message count of a Service Bus queue or wanted instead to base it on some application-specific metric (like the number of high-priority tickets).

Custom Actions

You can create custom reactive rule actions that do something other than alter instance counts (as the scale action does) or change settings (as enabled by the changeSetting action). For example, you might want to create an action that alters the maximum size of a SQL Azure database or sends an administrator an email notification.

Other Extensibility Points

The Autoscaling Block also enables you to define custom storage locations (such as a database) and formats (e.g., in a format other than XML) for your rules and service information. If you require it, you can also create your own logger, in case using System.Diagnostics or the Enterprise Library Logging Block is not sufficient.

Start Auto-Scaling!

With this conceptual background, getting started using the Autoscaling Block is very straightforward, particularly because of the excellent step-by-step guidance available on MSDN (see the "Adding the Autoscaling Block to a Host Project" link in the Additional Resources box). I won't reproduce those steps here; however, I will conclude with one caution that may save you some time getting started.

If you start from a new project (i.e., not one of the provided sample projects), you must carefully choose the encoding when you add an XML file for your rules or service information using Visual Studio -- otherwise you might find yourself getting an odd "Data at the root level is invalid" error. This turns out to be caused by an incompatibility between the XmlSerializer and UTF-8 encoded files that begin with a byte-order mark (BOM). Visual Studio will insist on adding the BOM unless otherwise instructed. To correct this, after you add your XML file, select File, Advanced Save Options within Visual Studio, and in the Encoding list select Unicode (UTF-8 without signature) - Codepage 65001. This changes the encoding to UTF-8, updates the XML declaration, and most importantly, does not introduce a BOM, which makes the file work with the XmlSerializer used by the scaling block to load your rules or service information file. Happy scaling!
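If you would rather check or fix a file outside Visual Studio, a short script can detect and strip the UTF-8 byte-order mark. The Python sketch below is one way to do it; strip_utf8_bom is a hypothetical helper name, and the file name in the usage comment is a placeholder for your own rules or service information file.

```python
import codecs
from pathlib import Path

def strip_utf8_bom(path):
    """Remove a leading UTF-8 byte-order mark from the file at `path`.

    Returns True if a BOM was found and removed, False if the file was
    already clean (in which case it is left untouched).
    """
    file_path = Path(path)
    data = file_path.read_bytes()
    if data.startswith(codecs.BOM_UTF8):
        file_path.write_bytes(data[len(codecs.BOM_UTF8):])
        return True
    return False

# Usage (placeholder file name):
#     strip_utf8_bom("Rules.xml")
```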

