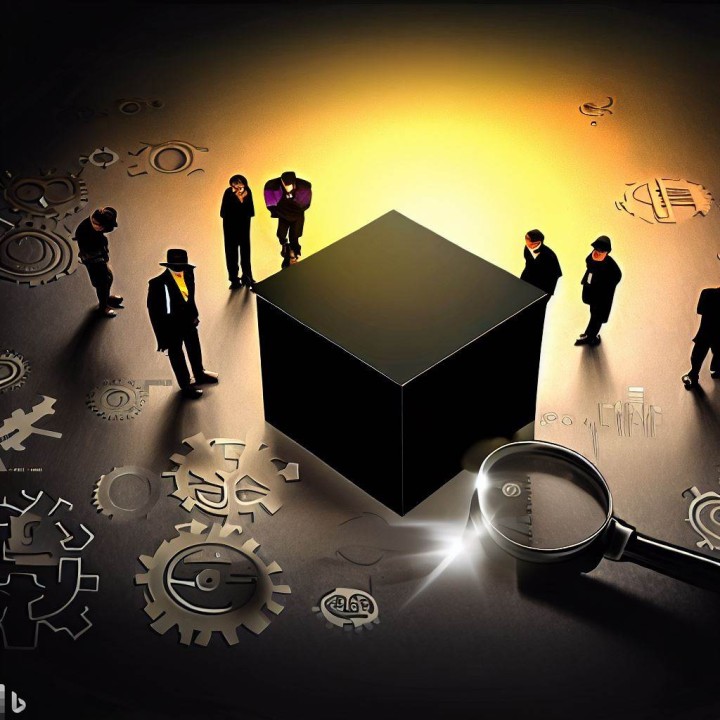

The Black Box Dilemma: Unraveling the Secrets of AI Decision-making

Abstract

In recent years, artificial intelligence (AI) has advanced remarkably, transforming entire industries and influencing many facets of our daily lives. Our increasing reliance on AI technology has brought a fundamental ethical problem to the fore: the "Black Box Dilemma." This article explores its definition, its consequences, and potential solutions for promoting transparency, accountability, and ethical AI use.

Introduction

Artificial intelligence (AI) has become a revolutionary technology that drives everything from virtual assistants to self-driving cars and medical diagnosis. These AI systems frequently rely on complex algorithms and deep neural networks, which let them learn from enormous datasets and make judgments based on patterns and correlations. While these abilities have allowed AI to match or outperform humans in a variety of tasks, they have also sparked worries about the transparency of its decision-making. This opacity is commonly referred to as the "Black Box Dilemma."

What is the Black Box Dilemma?

The "Black Box Dilemma" in AI refers to situations where an AI system's inner workings are opaque to its users and, often, to its developers as well. We can observe the system's inputs and outputs, but the process by which it arrives at a decision remains unclear. This opacity presents significant challenges, as it raises questions about how the AI reaches its conclusions, what factors it considers, and whether biases are present in its decision-making.

Implications of the Black Box Dilemma

The opaqueness of AI decision-making has several far-reaching implications:

Lack of Explainability: When AI systems make important choices, such as granting a loan or diagnosing a health condition, accountability comes into question if no one can explain how the AI reached its conclusion. Users and stakeholders have a right to know the logic underlying outcomes produced by AI.

Bias and Fairness Concerns: AI systems learn from historical data, and if the training data contains biases, the AI system may perpetuate them and produce biased results. Without knowing how the AI came to its findings, detecting and eliminating biases becomes challenging.

Regulatory Compliance: Transparency and accountability in decision-making processes are requirements in many sectors. The Black Box Dilemma can impede efforts to comply with regulations, making it difficult for corporations to implement AI systems in highly regulated industries.

Safety and Trust: In safety-critical applications like autonomous vehicles, the inability to review AI judgments can undermine confidence in the system. If users are uncertain about the logic an AI system is applying, they may hesitate to rely on it.

Solutions to the Black Box Dilemma

Addressing the Black Box Dilemma is crucial for the responsible and ethical use of AI. Several approaches can help tackle this challenge:

Explainable AI (XAI): Building AI systems whose decision-making processes are transparent is an active area of research. XAI techniques aim to produce human-comprehensible explanations for AI decisions, helping users understand which factors contributed to a given outcome.
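One simple, model-agnostic idea from this area is permutation importance: shuffle one input at a time and measure how much the system's accuracy drops. The sketch below is only an illustration. The `black_box` function, the applicant data, and the feature names are all made up for the example, and real XAI tooling is considerably more sophisticated.

```python
import random

def black_box(row):
    """Hypothetical opaque model: we only call it, never read its internals."""
    return 1 if row["income"] - 2 * row["debt"] > 10 else 0

def accuracy(model, rows, labels):
    """Fraction of rows where the model's output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature, repeats=10, seed=0):
    """Average drop in accuracy when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        values = [r[feature] for r in rows]
        rng.shuffle(values)
        permuted = [{**r, feature: v} for r, v in zip(rows, values)]
        drops.append(baseline - accuracy(model, permuted, labels))
    return sum(drops) / repeats

# Made-up applicants (assumption for illustration): (income, debt, age)
raw = [(50, 5, 25), (12, 4, 40), (30, 2, 33), (8, 1, 51),
       (45, 20, 29), (20, 3, 60), (15, 6, 45), (60, 10, 38)]
rows = [{"income": i, "debt": d, "age": a} for i, d, a in raw]
labels = [black_box(r) for r in rows]  # use the model's own outputs as the reference

importances = {f: permutation_importance(black_box, rows, labels, f)
               for f in ("income", "debt", "age")}
for feature, score in importances.items():
    print(f"{feature}: {score:.3f}")
```

Because this toy model never reads `age`, shuffling that feature cannot change any prediction, so its importance is exactly zero. A feature the model depends on shows a positive drop.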

Interpretable Models: In some cases, interpretable models like decision trees or rule-based systems can be used in place of complicated deep learning models, which are often referred to as "black boxes." These approaches provide transparency, often in exchange for some loss of predictive performance.
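To make the contrast concrete, here is a minimal sketch of a rule-based decision that returns its reason along with its answer. The thresholds, field names, and the `decide` function are hypothetical and chosen only for illustration; they are not drawn from any real lending policy.

```python
def decide(applicant):
    """Return (decision, reason); the first matching rule wins."""
    ratio = applicant["debt"] / applicant["income"]  # assumes income > 0
    if ratio > 0.5:
        return "deny", "debt-to-income ratio above 0.5"
    if applicant["credit_years"] < 2:
        return "review", "credit history shorter than 2 years"
    return "approve", "passed all rules"

decision, reason = decide({"income": 40000, "debt": 30000, "credit_years": 5})
print(decision, "-", reason)
```

Every outcome can be traced to a single readable rule, which is the kind of transparency a large neural network does not offer by default.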

Algorithmic Auditing: AI systems can be regularly audited to help find biases and make sure they're following the law and ethical guidelines. Businesses must regularly assess the efficacy of AI and be open about the data used for training.
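One check an audit might include is comparing approval rates across groups, sometimes called a demographic parity check. The following sketch assumes a made-up log of decisions labeled with a group attribute. The group names, the data, and the helper functions are hypothetical, and a real audit would look at many more metrics than this one.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Compute the share of approved decisions for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def parity_gap(rates):
    """Difference between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())

# Made-up decision log (assumption for illustration): (group, approved?)
decisions = [("A", True), ("A", True), ("A", True), ("A", False),
             ("B", True), ("B", False), ("B", False), ("B", False)]

rates = approval_rates(decisions)
gap = parity_gap(rates)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it flags where auditors should look more closely at the data and the model.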

Regulations and Standards: Governments and regulatory agencies can play a major role in shaping standards for accountability and transparency in AI systems. Legislation that requires justifications for certain AI decisions may promote the adoption of more transparent methods.

Conclusion

The AI "Black Box Dilemma" poses a substantial obstacle to keeping AI systems accountable, transparent, and ethical. To increase confidence in AI technology and promote its safe and equitable adoption across a range of industries, it is imperative that this dilemma be addressed. Researchers, legislators, and business leaders must work together to create and implement solutions that strike a balance between performance and explainability. This will open the door to a more responsible AI-driven future. We can only fully realize AI's potential for the good of humanity by demystifying the "black box" and shedding light on its decision-making processes. The future is here.

