Abstract

Health is very important for human life. In particular, the health of the brain, which governs the body's vital functions, is very important. Magnetic resonance imaging (MRI) devices support diagnosis and help health decision makers assess critical organs such as the brain. Images from these devices are a source of big data for artificial intelligence, and this big data enables high performance in image classification, a subfield of artificial intelligence. In this study, we aim to classify brain tumors such as glioma, meningioma, and pituitary tumor from brain MR images. A Convolutional Neural Network (CNN) and the CNN-based transfer learning models InceptionV3, EfficientNetB4, VGG16, and VGG19 were used for classification. F-score, recall, precision, accuracy, and Area Under the Curve (AUC) were used to evaluate these models. The best accuracy, 98%, was obtained with VGG16; the same transfer learning model achieved an F-score of 97%, an AUC of 99%, a recall of 98%, and a precision of 98%. CNN architectures and CNN-based transfer learning models are very important for human health in the early diagnosis and rapid treatment of such diseases.

Introduction

The healthcare industry has been rapidly transformed by technological advances in recent years, and an important component of this transformation is artificial intelligence (AI) technology. AI is a computer system that simulates human-like intelligence and has many applications in medicine. One such area is the fight against brain tumors. Brain tumors are a major public health problem in the healthcare sector, and accurate diagnosis, treatment, and follow-up processes are critical. AI has become an important tool for improving these processes and has great potential for early diagnosis and treatment of brain tumors.

Brain tumors affect human health due to their location1. AI is designed to help diagnose and treat complex diseases such as brain tumors by combining technologies such as big data analytics, machine learning, and deep learning. AI can detect and classify tumors by analyzing images produced by brain imaging techniques such as Magnetic Resonance Imaging (MRI). AI algorithms can help determine the size, location, class, and aggressiveness of tumors. This helps physicians arrive at a more accurate diagnosis and treatment plan, and helps patients better understand their health.

AI can also be used to track a patient's progress through treatment. AI-based analytics can be used to assess treatment response and predict potential tumor recurrence. In this way, patients' treatment plans can be more effectively organized and individualized treatment approaches can be developed.

In this study, difference detection was performed on brain images. Classification was performed with multilayer CNN and CNN-based transfer learning methods on 4 classes labeled by physicians.

The contributions of this study are as follows:

- We identify which transfer learning method achieves the highest classification performance on brain images.

- We compare the performance of a multi-layer CNN trained without transfer learning against CNN-based transfer learning models on brain images.

- We investigate whether good results can be achieved with a skewed, poor-quality dataset.

The flow diagram of the study is shown in Fig. 1.

Studies on brain tumors from the last 5 years were retrieved from indexes such as WOS and IEEE, and the details of the related studies are explained in this section.

In a study of 3064 MRI images from 233 patients belonging to three tumor classes (meningioma, glioma, and pituitary tumor), a support vector machine (SVM) applied to datasets generated after various preprocessing steps obtained an accuracy of 94%1. Santhosh and colleagues presented a classification model aimed at distinguishing between normal and abnormal brain tissue. This system relied on a combination of thresholding and watershed segmentation techniques. Using SVM, the classification accuracy reached 85.32% across all categories2. Rafael et al. achieved an accuracy of 89.6% using SVM for brain tumor classification3. Similarly, Gupta and Sasidhar achieved 87% accuracy using SVM4. Gumaei et al. proposed a classification framework based on a regularized extreme learning machine (RELM) for distinguishing between benign and malignant brain tumors. Their study involved the collection and preprocessing of MRI data related to meningioma, glioma, and pituitary tumors. The feature selection process was performed using GIST, Normalized GIST (NGIST), and PCA-NGIST methods. With fivefold cross-validation, the RELM technique yielded an overall accuracy of 92.61%5. An accuracy of 92% was achieved using SVM on a dataset of 90 normal and 154 tumor brain images6. In another study, 3264 brain tumor images from Kaggle were classified using CNN, LSTM, and a CNN-LSTM hybrid; the accuracies obtained were 89% for CNN, 90.02% for LSTM, and 92% for CNN-LSTM7. Srinivas and co-authors conducted a comparative performance analysis of transfer learning based CNN models pre-trained with the VGG16, ResNet-50, and InceptionV3 architectures for brain tumor cell prediction. InceptionV3 had an accuracy of 78%, VGG16 had an accuracy of 96%, and ResNet-50 had an accuracy of 95%8. In a CNN-based study of brain tumor images, Choudhury, Mahanty, Kumar, and Mishra achieved an accuracy of 96.08%9, while Martini and Oermann's CNN-based study achieved an accuracy of 93.9%10. Another study predicted the classes meningioma, glioma, and pituitary tumor from brain images of 233 patients collected in China between 2005 and 2010. In this study, a 4-layer CNN was used, and the accuracy was 91.3%11. In a study of 200 brain tumor images, the accuracy of image segmentation was 92.14%12. Classification studies on 102 brain tumor patients using the SVM and KNN machine learning methods achieved 85% and 88% accuracy, respectively13. In a classification study of 233 brain tumor patients that used SVM and KNN, the accuracy was 91.28%14. In a classification study using CNN on images of 233 patients with meningioma, glioma, or pituitary tumor, the accuracy was 91.43% with fivefold cross-validation15. Afshar et al. introduced an approach based on a Capsule Network (CapsNet), which integrates brain MRI images and coarse tumor boundaries for brain tumor classification. This study achieved an accuracy of 90.89%16. In the present study, in line with the literature, CNN and CNN-based transfer learning methods are used for brain tumor detection.

Materials and methods

This section describes the dataset, the classification algorithm (CNN) used in the study, and the transfer learning architectures VGG19, VGG16, InceptionV3, EfficientNetB4 developed based on this algorithm.

Data source

The dataset consists of a total of 2870 human brain MRI images systematically classified into four different categories: glioma, meningioma, no tumor and pituitary. The distribution of labeled images into these four classes is shown in Table 1 for reference17.

Glioma is the most common type of malignant brain tumor and typically occurs in glial cells in the brain and spinal cord. Meningioma is a benign type of brain tumor, but can become malignant without appropriate intervention. These classes are labeled by physicians. The size of the input images is 64 × 64. Table 1 shows the training, test, and validation splits by class.

Deep learning

Deep learning is a subset of machine learning that focuses on training artificial neural networks to perform complex tasks by learning patterns and representations directly from data. Unlike traditional machine learning approaches that require manual feature engineering, deep learning algorithms autonomously extract hierarchical features from data, leading to the creation of powerful and highly accurate models18,19,20. In this study, a CNN architecture is employed.

Convolutional neural network

Convolutional neural networks represent a major breakthrough in deep learning and computer vision. These architectures are specifically designed to extract meaningful features from complex visual data, such as images and video. The structure of a CNN, consisting of convolutional layers, pooling layers, and fully connected layers, mimics the ability of the human visual system to recognize patterns and hierarchical features. Convolutional layers use convolution operations to detect local features, which are then progressively abstracted by pooling layers that condense the information. The resulting hierarchical representations are then fed into fully connected layers for classification or regression tasks. CNNs have redefined the landscape of image recognition, achieving remarkable success in domains ranging from image classification and object detection to face recognition and medical image analysis21.

Transfer learning

Transfer learning is a fundamental concept in both machine learning and deep learning: knowledge gained from training a model on one task is applied to another, related task. It is particularly effective for neural networks, where a model pre-trained on a large and varied dataset is fine-tuned on a new dataset or task21,22,23.

In this study, transfer learning models InceptionV3, VGG16, VGG19, and EfficientNetB4 were used in the classification process.

VGG

This architecture is a notable CNN model introduced by Simonyan and Zisserman24, which builds upon its predecessor, the AlexNet model. It improves on AlexNet by replacing the 11 × 11 and 5 × 5 kernels in the first two convolutional layers with a series of consecutive 3 × 3 kernels. The VGG16 model has approximately 138.4 million parameters, occupies approximately 528 MB of storage space, and has a documented top-5 accuracy of 90.1% on ImageNet data. The ImageNet dataset comprises approximately 14 million images categorized across 1000 classes. VGG16 was trained on powerful GPUs over a span of several weeks. This study used VGG16 and VGG19.
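The benefit of replacing large kernels with stacked 3 × 3 kernels can be checked with simple arithmetic. The sketch below assumes stride-1 convolutions with equal input and output channel counts and ignores biases; the function names and the channel count of 64 are illustrative choices, not values taken from the study.

```python
def stacked_receptive_field(kernel_size: int, num_layers: int) -> int:
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(kernel_size: int, channels: int, num_layers: int = 1) -> int:
    """Weight count (biases ignored) with equal input and output channels."""
    return num_layers * kernel_size * kernel_size * channels * channels


channels = 64  # illustrative channel count
two_3x3 = conv_weights(3, channels, num_layers=2)
one_5x5 = conv_weights(5, channels)

# Two stacked 3 x 3 layers see the same 5 x 5 region as a single 5 x 5 layer.
print("receptive field of two 3 x 3 layers:", stacked_receptive_field(3, 2))
print("weights, two 3 x 3 layers:", two_3x3)
print("weights, one 5 x 5 layer:", one_5x5)
```

Under these assumptions the stacked layers cover the same input region with fewer weights, and they also apply an extra non-linearity between the two convolutions.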

EfficientNet

EfficientNet is a family of scalable and efficient CNN models. The main goal of this series is to achieve better performance with fewer parameters. The term "EfficientNet" is a combination of the words "efficiency" and "network". The model series is mainly used in visual processing tasks such as image classification.

EfficientNet delivers competitive results in terms of both accuracy and computational cost. It offers variants of different size and complexity at different scales. Higher numbered models are typically larger and more complex, and require more computing power. At the time of its introduction, it reported state-of-the-art accuracy on ImageNet24.

Inception

The Inception architecture is a CNN architecture used in the field of deep learning. It is designed to perform feature extraction and classification tasks more efficiently. First introduced in a paper titled "Going Deeper with Convolutions", the Inception architecture aims to provide better performance when processing complex visual datasets25. Its structure includes parallel convolution layers whose outputs are combined. In this way, features of different sizes can be captured and processed simultaneously25.

Performance metric

Performance evaluation metrics such as Accuracy, Precision, Recall, and F-score are used to evaluate models created for classification problems such as image classification. These metrics are obtained from the confusion matrix26, which is given in Table 2.

In Table 2, the symbols \({T}_{N}\), \({T}_{P}\), \({F}_{P}\), and \({F}_{N}\) correspond to the true negative, true positive, false positive, and false negative values, respectively. Eqs. (1) to (4) give Accuracy, Precision, Recall, and F-score, respectively.
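The equations themselves are not reproduced in this text. For reference, the standard definitions of these four metrics in terms of the symbols above are given below; the numbering is assumed to follow the order in which the metrics are named.

```latex
\begin{align}
\text{Accuracy}  &= \frac{T_P + T_N}{T_P + T_N + F_P + F_N} \tag{1} \\
\text{Precision} &= \frac{T_P}{T_P + F_P} \tag{2} \\
\text{Recall}    &= \frac{T_P}{T_P + F_N} \tag{3} \\
\text{F-score}   &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}
\end{align}
```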

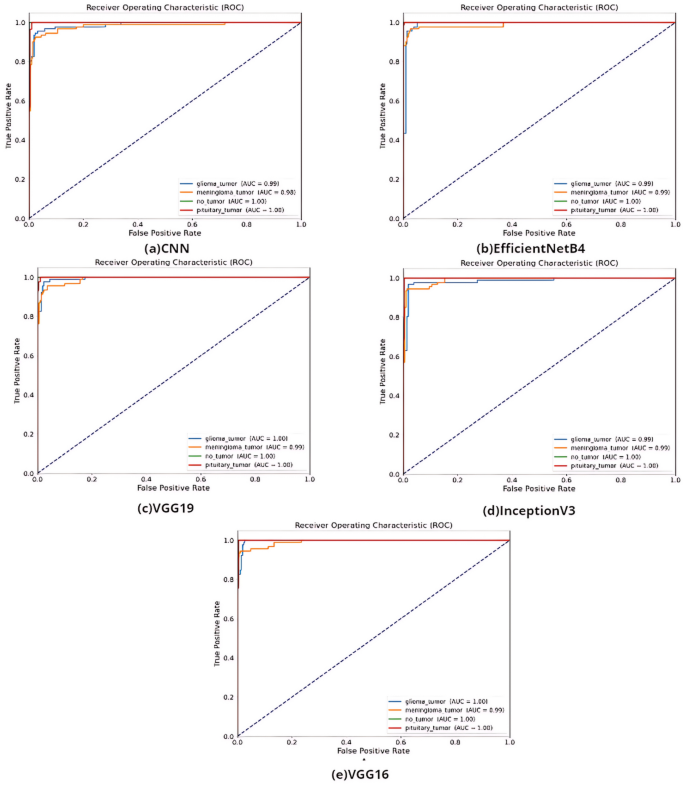

Receiver operating characteristic (ROC) curve

The ROC curve is a graphical tool used to evaluate the performance of a classification model, particularly in binary classification scenarios. It visualizes the sensitivity and specificity of the model, showing how they vary as the decision threshold is changed27. The ROC curve is plotted with the False Positive Rate (FPR) on the x-axis and the True Positive Rate (TPR) on the y-axis. An optimal classifier, characterized by a TPR of one and an FPR of zero, lies in the upper left corner of the graph. The closer the curve comes to this point, the better the model performs across different thresholds26.

In addition, the area under the ROC curve, commonly referred to as the "area under the curve" (AUC), summarizes the overall model performance in a single metric. The AUC value ranges from 0 to 1, with values closer to 1 indicating greater discriminative ability of the model26. The ROC curve and AUC value serve as essential tools for comparing models and understanding classification model performance. A higher AUC value generally indicates superior model performance, while the curve illustrates the model's strengths and weaknesses at various thresholds26.
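To make the construction concrete, the following minimal sketch sweeps the decision threshold over a set of scores, collects the (FPR, TPR) points, and integrates them with the trapezoidal rule. It covers the binary case only; for a four-class problem a one-vs-rest treatment per class is a common choice, which is an assumption here rather than something the text specifies. The labels and scores are made up for illustration, and the input must contain at least one positive and one negative example.

```python
from typing import List, Tuple


def roc_points(labels: List[int], scores: List[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points obtained by sweeping the decision threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    # Use each distinct score, from highest to lowest, as a threshold.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    """Area under the piecewise-linear curve (trapezoidal rule)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Made-up example: 1 = positive class, 0 = negative class.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
curve = roc_points(labels, scores)
print("ROC points:", curve)
print("AUC:", auc(curve))
```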

Approval for participation

As the data is open source, the authors did not conduct any experiments on humans. The study was carried out on open-source MRI images.

Experimental settings and results

This study addresses the problem of image classification using deep learning methods. Among such problems, the classification of medical images is one of the most important and widely studied. In this context, five different models (InceptionV3, EfficientNetB4, VGG16, VGG19, and a multi-layer CNN) were selected for the classification of brain tumors, and their performances were compared on the same dataset. 10% of the dataset was used for testing, 15% for validation, and 75% for training. All experiments were run on Google Colab.
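A split with these proportions could be produced as in the sketch below. It is only an illustration: the shuffle, the seed, and the function name `split_indices` are assumptions, and the split is not stratified by class, whereas the per-class counts actually used in the study are those reported in Table 1.

```python
import random
from typing import Dict, List


def split_indices(num_items: int, seed: int = 0) -> Dict[str, List[int]]:
    """Shuffle item indices and split them 75% / 15% / 10%."""
    indices = list(range(num_items))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    n_train = int(num_items * 0.75)
    n_val = int(num_items * 0.15)
    return {
        "train": indices[:n_train],
        "val": indices[n_train:n_train + n_val],
        "test": indices[n_train + n_val:],  # remainder, roughly 10%
    }


splits = split_indices(2870)  # dataset size stated in the Data source section
print({name: len(items) for name, items in splits.items()})
```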

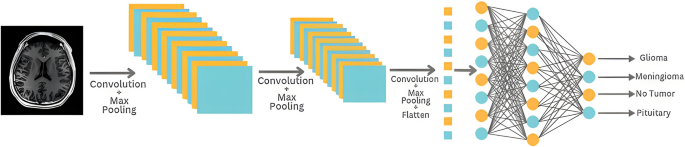

Multi-layer CNN

First, we need to determine the architecture of our model. The input shape of our data is 400 × 400 with 3 channels. Since we have a total of 4 different classes, the number of output classes is set to 4. Our model has a structure that includes convolutional and pooling layers. First, there is a 3 × 3 convolutional layer with 32 filters. This is followed by a 2 × 2 max pooling layer, which reduces the spatial size while retaining the most prominent low-level features. To deepen our model, this structure is repeated twice, adding convolutional layers with 64 and 128 filters, respectively, each followed by a max pooling layer of size 2 × 2.

The resulting feature map is transformed into a flat vector with a flattening layer. A hidden (dense) layer of 128 neurons is then added. This layer deepens the learned features and increases generalization. Finally, the output layer has 4 neurons and calculates the class probabilities with the softmax activation function. To train our model, we need to determine the optimizer and metrics. In this paper, we use the Rectified Adam optimization algorithm. This algorithm dynamically adjusts the learning rate and helps to use gradients more efficiently. Categorical cross-entropy is used as the loss function during training, as it is widely used in multiclass classification tasks.

The metrics tracked during training are accuracy, precision, and recall. These metrics are important for evaluating the classification performance of the model. In addition, a learning rate reduction callback (ReduceLROnPlateau) is used to dynamically adjust the learning rate. This callback reduces the learning rate when the loss function flattens out during the training process, resulting in more stable training. The number of epochs is set to 14 and the batch size to 10.
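The layer sequence described above can be traced with a little arithmetic to see how the feature map shrinks and where most of the parameters sit. The sketch below assumes stride-1 convolutions without padding and pooling with stride 2; neither is stated in the text, so the resulting numbers are indicative only.

```python
def conv_out(size: int, kernel: int = 3) -> int:
    """Spatial size after a stride-1 convolution without padding (assumed)."""
    return size - kernel + 1


def conv_params(kernel: int, in_ch: int, out_ch: int) -> int:
    """Weights plus biases of a 2D convolutional layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units: int, out_units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_units * out_units + out_units


size, channels, total = 400, 3, 0  # 400 x 400 input with 3 channels
for filters in (32, 64, 128):
    total += conv_params(3, channels, filters)
    size = conv_out(size)  # 3 x 3 convolution
    size //= 2             # 2 x 2 max pooling halves each dimension
    channels = filters
    print(f"after the {filters}-filter block: {size} x {size} x {channels}")

flat_units = size * size * channels
total += dense_params(flat_units, 128) + dense_params(128, 4)
print("flattened units:", flat_units)
print("trainable parameters:", total)
```

Under these assumptions, almost all parameters belong to the dense layer that follows the flattening step, which is one reason the input resolution has such a large effect on model size.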

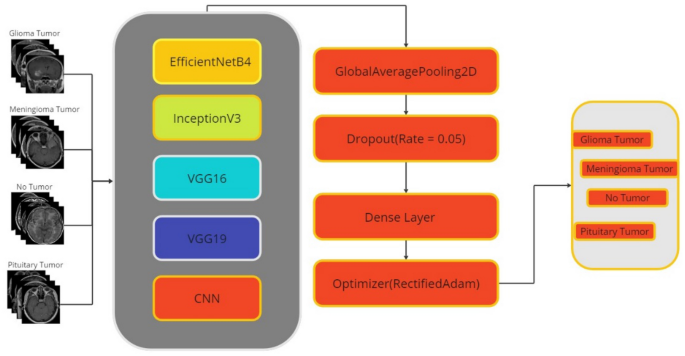

CNN-based transfer learning

In the transfer learning architectures, all training parameters and all layers added outside the pre-trained model are the same. After the last 3 layers of each transfer learning model are removed, layers specific to the dataset are added instead. A GlobalAveragePooling2D layer is used rather than a Flatten layer: because it reduces each feature map to a single average value, the dense layer that follows has fewer parameters than it would after a Flatten layer, which reduces the risk of overfitting and helps build a more efficient model. Whereas the Flatten layer only reshapes the data, the GlobalAveragePooling2D layer summarizes each feature map, making the network learning process more efficient.
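The difference between the two layers can be illustrated on a toy feature map. The sketch below is a plain-Python stand-in for the two operations, not Keras code; the 2 × 2 × 3 feature map is hypothetical, and the parameter counts refer to a 4-unit dense layer placed directly after each operation.

```python
from typing import List

FeatureMaps = List[List[List[float]]]  # indexed as [row][column][channel]


def flatten(maps: FeatureMaps) -> List[float]:
    """Concatenate every value of every feature map into one vector."""
    return [value for row in maps for pixel in row for value in pixel]


def global_average_pool(maps: FeatureMaps) -> List[float]:
    """Average each channel over all spatial positions."""
    height, width, channels = len(maps), len(maps[0]), len(maps[0][0])
    return [
        sum(maps[i][j][c] for i in range(height) for j in range(width))
        / (height * width)
        for c in range(channels)
    ]


def dense_params(in_units: int, out_units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_units * out_units + out_units


# Hypothetical 2 x 2 feature map with 3 channels.
maps = [[[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]],
        [[5.0, 4.0, 2.0], [7.0, 0.0, 2.0]]]

flat = flatten(maps)
pooled = global_average_pool(maps)
print("Flatten:", len(flat), "values,", dense_params(len(flat), 4), "dense parameters")
print("Global average pooling:", pooled, dense_params(len(pooled), 4), "dense parameters")
```

The gap grows with the spatial size of the feature map, since the flattened length scales with height × width × channels while the pooled length depends only on the number of channels.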

Because the models fit the training data much faster than the validation data, we modified the rate of the dropout layers in the original architectures. For all models, the dropout rate was set to 0.05. During the model training process, the designated optimizer was "RectifiedAdam", with the optimizer parameters configured as follows: learning_rate = 0.0001, beta_1 = 0.9, beta_2 = 0.999, and epsilon = 1e-08. The loss function selected is categorical_crossentropy, while the metrics used include precision, recall, categorical accuracy, and accuracy. This completes the configuration of the model before training. The final layer of the model is a dense layer with 4 neurons, matching the number of output classes. The activation function of this layer is "softmax", which makes the output values interpretable as class probabilities. Furthermore, the data type of this layer is "float64", which means that the output values are 64-bit double precision numbers. The layer also applies regularization using the "kernel_regularizer" property. The L2 regularization used here aims to reduce the risk of overfitting by limiting the size of the weights. The regularization coefficient of 0.1, set with "regularizers.l2(0.1)", controls the strength of the regularization.
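How the softmax output, the categorical cross-entropy loss, and the L2 penalty fit together can be sketched in a few lines. This is a plain-Python illustration rather than the Keras implementation; the logits, the target, and the weight matrix are made-up values. The penalty follows the usual L2 form, the coefficient times the sum of squared weights.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(one_hot: List[int], probs: List[float],
                             eps: float = 1e-7) -> float:
    """Negative log-probability assigned to the true class."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


def l2_penalty(weights: List[List[float]], coefficient: float = 0.1) -> float:
    """Coefficient times the sum of squared weights, added to the loss."""
    return coefficient * sum(w * w for row in weights for w in row)


logits = [2.0, 1.0, 0.1, -1.0]       # made-up outputs for the 4 classes
target = [1, 0, 0, 0]                # made-up one-hot label
weights = [[0.5, -0.5], [1.0, 0.0]]  # made-up kernel of the final layer

probs = softmax(logits)
penalty = l2_penalty(weights)
loss = categorical_crossentropy(target, probs) + penalty
print("probabilities:", probs)
print("L2 penalty:", penalty)
print("total loss:", loss)
```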

During model training, the "ReduceLROnPlateau" callback of the Keras library was used. This callback automatically reduced the learning rate when the model approached a local optimum or when the loss value stopped decreasing. The parameters of the "ReduceLROnPlateau" callback are as follows:

- monitor: The metric monitored, usually "val_loss" (validation loss). This is the metric used to determine whether the learning rate should be reduced.

- patience: The number of epochs without improvement in the monitored metric to wait before lowering the learning rate.

- factor: The factor by which the learning rate is multiplied. For example, a value of 0.3 reduces the learning rate to 30% of its current value.

- min_lr: The minimum achievable learning rate. This lower bound prevents the learning rate from becoming infinitesimally small.

Using this feature allows for more stable and efficient model training, streamlining the process of fine-tuning training parameters without the need to manually adjust the learning rate. The training program was run over 14 epochs with batches of size 10. Details of the multilayer CNN model used in the study are presented in Fig. 2, which outlines its architectural features.
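The behaviour of such a callback can be sketched as a small scheduler. The class below is a simplified stand-in written for illustration, not the Keras implementation (it omits options such as cooldown and a minimum improvement threshold). It multiplies the learning rate by the factor once the monitored loss has failed to improve for the given number of epochs. The patience, factor, and min_lr values and the loss sequence are hypothetical, since the text does not report the values used.

```python
from typing import List


class PlateauScheduler:
    """Simplified learning rate reduction on a loss plateau."""

    def __init__(self, lr: float, factor: float, patience: int, min_lr: float) -> None:
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.wait = 0  # epochs since the last improvement

    def step(self, val_loss: float) -> float:
        """Update the state with one epoch's validation loss; return the new rate."""
        if val_loss < self.best:
            self.best = val_loss
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                # The new rate is the old rate times the factor, bounded below.
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr


# Hypothetical settings and validation losses.
scheduler = PlateauScheduler(lr=1e-4, factor=0.3, patience=2, min_lr=1e-6)
val_losses: List[float] = [1.0, 0.9, 0.9, 0.9, 0.9]
history = [scheduler.step(loss) for loss in val_losses]
print(history)
```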

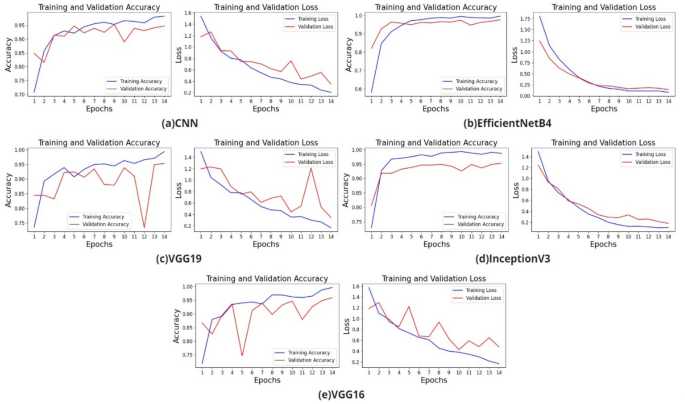

The training and validation accuracy and loss graphs of the models created with VGG19, EfficientNetB4, InceptionV3 transfer learning, and CNN are shown in Fig. 3.

Table 3 shows the accuracy, F-score, Recall, Precision and AUC results of the models created in the study.

According to Table 3, the best accuracy result was obtained by VGG16 with 98%. It is also ahead of the other methods with an F-score of 97%, an AUC of 99%, a recall of 98%, and a precision of 98%. The ROC curves of the models created in the study are shown in Fig. 4.

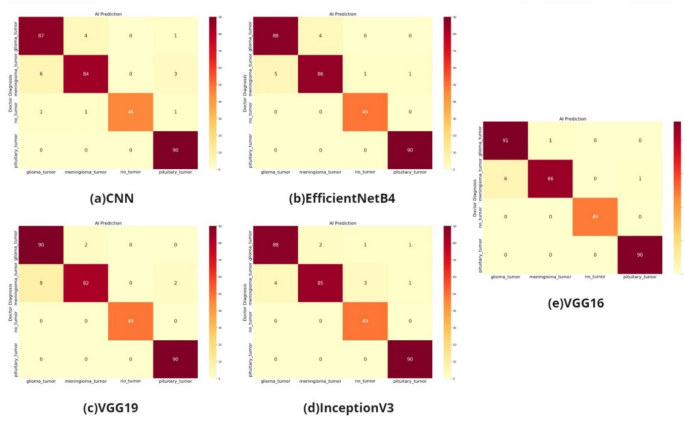

According to the AUC values in Fig. 4, the transfer learning models VGG, InceptionV3, and EfficientNetB4 and the model built with CNN have distinctive features. The confusion matrices for the classification of the glioma, meningioma, no tumor, and pituitary tumor classes in the dataset are shown in Fig. 5.

As shown in the confusion matrices in Fig. 5, the classification performance is high for all five models (VGG16, VGG19, CNN, EfficientNetB4, and InceptionV3).
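For a four-class confusion matrix, the per-class metrics are obtained by treating each class in turn as the positive class. The sketch below shows this calculation; the counts in `matrix` are made up for illustration and are not the study's results, and rows are assumed to hold the true classes with columns holding the predicted classes.

```python
from typing import Dict, List


def per_class_metrics(matrix: List[List[int]],
                      labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Precision, recall, and F-score per class (rows: true, columns: predicted)."""
    results: Dict[str, Dict[str, float]] = {}
    for k, name in enumerate(labels):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp                 # true class k, predicted otherwise
        fp = sum(row[k] for row in matrix) - tp  # predicted k, true class differs
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f_score = (2 * precision * recall / (precision + recall)
                   if precision + recall else 0.0)
        results[name] = {"precision": precision, "recall": recall, "f_score": f_score}
    return results


def accuracy(matrix: List[List[int]]) -> float:
    """Share of all samples that lie on the diagonal."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)


labels = ["glioma", "meningioma", "no tumor", "pituitary"]
# Made-up counts for illustration only; these are not the study's results.
matrix = [[8, 2, 0, 0],
          [1, 9, 0, 0],
          [0, 0, 10, 0],
          [0, 1, 0, 9]]

metrics = per_class_metrics(matrix, labels)
print("accuracy:", accuracy(matrix))
for name in labels:
    print(name, metrics[name])
```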

Results and discussion

As part of the study, CNN and CNN-based transfer learning models such as InceptionV3, EfficientNetB4, VGG16, and VGG19 were trained on an open-source dataset of brain tumor images. The best accuracy result was obtained with VGG16 with 98%. The comparison of this study with the brain tumor studies in the literature is shown in Table 4.

As shown in Table 4, the CNN-based transfer learning models used in the study performed better. AI in healthcare plays an important role in the management of complex diseases such as brain tumors. AI enables faster, more accurate, and more effective diagnosis and treatment processes. However, AI technology is not intended to completely replace doctors, but to support and enhance their work. To realize the full potential of AI, it is important to consider issues such as ethics, security and privacy. In the future, AI-based solutions will continue to contribute to better management of brain tumors and other health problems, and improve the quality of life for patients. As seen in this study, AI-based studies will increase their importance to human health, from early diagnosis to positive progress in the treatment process.

Based on the results of this study, transfer learning methods should be preferred especially in image processing-based applications to support health decision makers. The data obtained from MRI or CT can be used as an early warning system to help health decision makers make quick and accurate decisions. Therefore, in addition to empirical analysis, AI-based applications should take a more active role as soon as possible. To this end, the diagnosis of diseases from instant CT or MR images will be investigated in the coming years.

Limitation

Because our aim was to investigate how a standalone multilayer CNN and CNN-based transfer learning models perform on the raw data, we classified the dataset as is, without rotation or cropping operations. This is the most important limitation of our study.

Data availability

The dataset is shared open source. Availability link: https://doi.org/10.34740/kaggle/dsv/1183165.

References

Wallis, D. & Buvat, I. Clever Hans effect found in a widely used brain tumour MRI dataset. Med. Image Anal. 77, 102368. https://doi.org/10.1016/j.media.2022.102368 (2022).

Hatcholli Seere, S. K. & Karibasappa, K. Threshold segmentation and watershed segmentation algorithm for brain tumor detection using support vector machine. Eur. J. Eng. Technol. Res. 5(4), 516–519. https://doi.org/10.24018/ejeng.2020.5.4.1902 (2020).

Ortiz-Ramón, R., Ruiz-España, S., Mollá-Olmos, E. & Moratal, D. Glioblastomas and brain metastases differentiation following an MRI texture analysis-based radiomics approach. Phys. Med. 76, 44–54. https://doi.org/10.1016/j.ejmp.2020.06.016 (2020).

Gupta, M. & Sasidhar, K. Non-invasive brain tumor detection using magnetic resonance imaging based fractal texture features and shape measures. In 2020 3rd International Conference on Emerging Technologies in Computer Engineering: Machine Learning and Internet of Things (ICETCE), IEEE, 2020, 93–97. https://doi.org/10.1109/ICETCE48199.2020.9091756.

Gumaei, A., Hassan, M. M., Hassan, M. R., Alelaiwi, A. & Fortino, G. A hybrid feature extraction method with regularized extreme learning machine for brain tumor classification. IEEE Access 7, 36266–36273. https://doi.org/10.1109/ACCESS.2019.2904145 (2019).

Shahajad, M., Gambhir, D. & Gandhi, R. Features extraction for classification of brain tumor MRI images using support vector machine. In 2021 11th International Conference on Cloud Computing, Data Science and Engineering (Confluence), IEEE, 2021, 767–772. https://doi.org/10.1109/Confluence51648.2021.9377111.

Vankdothu, R., Hameed, M. A. & Fatima, H. A brain tumor identification and classification using deep learning based on CNN-LSTM method. Comput. Electr. Eng. 101, 107960. https://doi.org/10.1016/j.compeleceng.2022.107960 (2022).

Srinivas, C. et al. Deep transfer learning approaches in performance analysis of brain tumor classification using MRI images. J. Healthc. Eng. 2022, 1–17. https://doi.org/10.1155/2022/3264367 (2022).

Choudhury, C. L., Mahanty, C., Kumar, R. & Mishra, B. K. Brain tumor detection and classification using convolutional neural network and deep neural network. In 2020 International Conference on Computer Science, Engineering and Applications (ICCSEA), IEEE, 2020, 1–4. https://doi.org/10.1109/ICCSEA49143.2020.9132874.

Martini, M. L. & Oermann, E. K. Intraoperative brain tumour identification with deep learning. Nat. Rev. Clin. Oncol. 17(4), 200–201. https://doi.org/10.1038/s41571-020-0343-9 (2020).

Sarkar, S., Kumar, A., Aich, S., Chakraborty, S., Sim, J.-S., & Kim, H.-C. A CNN based approach for the detection of brain tumor using MRI scans. 2020, [Online]. https://www.researchgate.net/publication/342048436.

Arunkumar, N. et al. Fully automatic model-based segmentation and classification approach for MRI brain tumor using artificial neural networks. Concurr. Comput. 32, 1. https://doi.org/10.1002/cpe.4962 (2020).

Zacharaki, E. I. et al. Classification of brain tumor type and grade using MRI texture and shape in a machine learning scheme. Magn. Reson. Med. 62(6), 1609–1618. https://doi.org/10.1002/mrm.22147 (2009).

Cheng, J. et al. Enhanced performance of brain tumor classification via tumor region augmentation and partition. PLoS One 10(10), e0140381. https://doi.org/10.1371/journal.pone.0140381 (2015).

Paul, J. S., Plassard, A. J., Landman, B. A., & Fabbri, D. Deep learning for brain tumor classification. In (Krol, A. & Gimi, B., Eds.), 2017, 1013710. https://doi.org/10.1117/12.2254195.

Afshar, P., Plataniotis, K. N., & Mohammadi, A. Capsule networks for brain tumor classification based on MRI images and coarse tumor boundaries, 2018.

Sartaj, B., Ankita, K., Prajakta, B., Sameer, D., & Swati, K. Brain tumor classification (MRI). Kaggle (2020). https://doi.org/10.34740/kaggle/dsv/1183165.

Basarslan, M. S. & Kayaalp, F. Sentiment analysis with machine learning methods on social media. Adv. Distrib. Comput. Artif. Intell. J. 9(3), 5–15. https://doi.org/10.14201/ADCAIJ202093515 (2020).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521(7553), 436–444. https://doi.org/10.1038/nature14539 (2015).

Sevik, A., Erdogmus, P., & Yalein, E. Font and Turkish letter recognition in images with deep learning. In 2018 International Congress on Big Data, Deep Learning and Fighting Cyber Terrorism (IBIGDELFT), IEEE, 2018, 61–64. https://doi.org/10.1109/IBIGDELFT.2018.8625333.

Bal, F. & Kayaalp, F. A novel deep learning-based hybrid method for the determination of productivity of agricultural products: Apple case study. IEEE Access 11, 7808–7821. https://doi.org/10.1109/ACCESS.2023.3238570 (2023).

Kabakus, A. T. & Erdogmus, P. An experimental comparison of the widely used pre-trained deep neural networks for image classification tasks towards revealing the promise of transfer-learning. Concurr. Comput. https://doi.org/10.1002/cpe.7216 (2022).

Başarslan, M. S. & Kayaalp, F. MBi-GRUMCONV: A novel Multi Bi-GRU and Multi CNN-Based deep learning model for social media sentiment analysis. J. Cloud Comput. 12(1), 5. https://doi.org/10.1186/s13677-022-00386-3 (2023).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition, 2014.

Chollet, F. Xception: Deep learning with depthwise separable convolutions, 2016.

Kayaalp, F., Basarslan, M. S., & Polat, K. TSCBAS: A novel correlation based attribute selection method and application on telecommunications churn analysis. In 2018 International Conference on Artificial Intelligence and Data Processing (IDAP), IEEE, 2018, 1–5. https://doi.org/10.1109/IDAP.2018.8620935.

Gulmez, S., Kakisim, A. G., & Sogukpinar, I. Analysis of the dynamic features on ransomware detection using deep learning-based methods. In 2023 11th International Symposium on Digital Forensics and Security (ISDFS), Chattanooga, TN, USA, 2023, 1–6. https://doi.org/10.1109/ISDFS58141.2023.10131862.

Author information

Contributions

M.Z.K.: data analysis, experiments and evaluations, manuscript draft preparation. M.S.B.: conceptualization, defining the methodology, evaluation of the results, original draft and reviewing, supervision. The authors affirm that no figure in the article depicts any participant.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khaliki, M.Z., Başarslan, M.S. Brain tumor detection from images and comparison with transfer learning methods and 3-layer CNN. Sci Rep 14, 2664 (2024). https://doi.org/10.1038/s41598-024-52823-9

DOI: https://doi.org/10.1038/s41598-024-52823-9
