Abstract

This paper addresses three shortcomings of existing attribute reduction algorithms for incomplete mixed decision systems: low reduction efficiency, low classification accuracy and the neglect of unlabeled data. To address these issues, this paper first redefines the weakly labeled relative neighborhood discernibility degree and develops a non-dynamic attribute reduction algorithm based on it. It then proposes an incremental update mechanism for the weakly labeled relative neighborhood discernibility degree and, on this basis, a new dynamic attribute reduction algorithm for increasing object sets. Finally, the proposed algorithms are compared with two existing attribute reduction algorithms on eight datasets from the UCI repository. The results show that the proposed dynamic attribute reduction algorithm achieves higher reduction efficiency and classification accuracy, which validates its effectiveness.


Introduction

Attribute reduction1,2,3,4,5,6,7,8, also known as feature selection, has garnered significant attention in the current era of continuous data expansion. It plays a crucial role in machine learning and data mining by aiming to diminish complexity and redundancy in data. This is achieved through the elimination of unnecessary or redundant attributes, simplifying the dataset and improving analysis efficiency. Consequently, numerous methods for attribute reduction have been developed and extensively utilized9,10,11,12,13,14,15,16,17,18,19.

In recent years, scholars have studied attribute reduction in classical rough sets20,21,22 in depth and have proposed many efficient algorithms. However, these traditional algorithms are suited only to static datasets. In today's big data environment, practical data are often dynamic, and the object set commonly grows over time. Researchers have therefore studied attribute reduction under changes in the object set. Shu et al.23 proposed a dependency-based dynamic attribute reduction algorithm for incomplete systems; Wei et al.24 proposed a discernibility matrix-based dynamic attribute reduction algorithm for symbolic data; Yang et al.25 proposed a dynamic attribute reduction algorithm based on relative discernibility relations in fuzzy rough sets; and Liang et al.26 proposed one based on information entropy for discrete data. Xiang27 proposed a dynamic attribute reduction algorithm for neighborhood systems with increasing object sets, and Sheng et al.28 proposed one based on the neighborhood discernibility degree for mixed data. These studies have substantially advanced dynamic attribute reduction for the growing object sets encountered in practical big data applications.

However, the dynamic attribute reduction algorithms above handle only labeled datasets and do not consider unlabeled data. In real-world applications, data often contain missing values because of limitations in data collection methods, human resources and material resources, and many objects lack decision labels. Dynamic attribute reduction in weakly labeled incomplete decision systems is therefore an important problem, yet it has received little research attention.

The relative neighborhood discernibility degree can guide attribute reduction on unlabeled as well as labeled data, so it is not restricted to labeled datasets. It is also credited with high accuracy, adaptability, efficiency and robustness, which makes it a promising basis for attribute reduction on practical data. This paper therefore proposes an improved dynamic attribute reduction algorithm based on the weakly labeled relative neighborhood discernibility degree to address this problem.

Reference 29 gives an attribute reduction algorithm for incomplete mixed decision systems but does not address dynamic changes in the object set or weakly labeled data. Inspired by reference 29, this study refines the definition of the weakly labeled relative neighborhood discernibility degree and proposes a non-dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems. It then presents an incremental update mechanism for the weakly labeled relative neighborhood discernibility degree when objects are added, and designs a dynamic attribute reduction algorithm on this basis. Finally, experiments on datasets from the UCI repository verify the higher efficiency of the proposed dynamic attribute reduction algorithm.

The main contributions of this article are: (1) a refined definition of the weakly labeled relative neighborhood discernibility degree, built on the relative neighborhood discernibility degree; (2) a non-dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems; (3) an incremental update mechanism for increasing object sets; (4) a dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems with increasing object sets.

The article is structured as follows. Section 2 introduces the basic concepts of rough sets and weakly labeled incomplete mixed decision systems. Section 3 presents the non-dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems. Section 4 describes the incremental update mechanism of the relative neighborhood discernibility degree for increasing object sets and the resulting dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems. Section 5 presents the experimental analysis, and Section 6 concludes and outlines future research directions.

Basic knowledge

This section reviews the fundamental concepts of rough sets and weakly labeled incomplete mixed decision systems. For further details, see references 35,36,37.

Definition 1 (ref. 30)

\(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\) denotes a weakly labeled incomplete mixed decision system, where \(U = \left\{ {x_{1} ,x_{2} , \cdots ,x_{n} } \right\}\) is a non-empty finite set of objects, called the universe; \(C\) is a non-empty finite set of conditional attributes with \(C = C_{d} \cup C_{r}\), where \(C_{d}\) is the set of discrete attributes and \(C_{r}\) is the set of continuous attributes; \(D\) is the decision attribute, with \(C \cap D = \emptyset\); \(V = \mathop \cup \limits_{a \in C} V_{a}\), where \(V_{a}\) is the set of all possible values of attribute \(a \in C\); and \(f:U \times C \to V\) is the information function that assigns a value to each attribute of each object, i.e. \(f(x,a) \in V_{a}\) for all \(a \in C\) and \(x \in U\). The system is incomplete: for at least one attribute \(a \in C\) there is an object \(x\) with \(f(x,a) = *\), i.e. a missing value. The decision attribute \(d\) may also have missing values, i.e. \(\exists x_{i} \in U\) with \(d(x_{i} ) = *\); such objects are unlabeled. Therefore \(U = M \cup L\), where \(M\) is the set of unlabeled objects and \(L\) is the set of labeled objects.

Table 1 shows a weakly labeled incomplete mixed decision system in which \(U = \left\{ {x_{1} ,x_{2} , \cdots ,x_{7} } \right\}\) is the universe, \(L = \left\{ {x_{1} ,x_{2} ,x_{3} ,x_{4} } \right\}\) is the set of labeled objects and \(M = \left\{ {x_{5} ,x_{6} ,x_{7} } \right\}\) is the set of unlabeled objects; \(C = \left\{ {c_{1} ,c_{2} , \cdots ,c_{5} } \right\}\) is the attribute set, with discrete attributes \(C_{d} = \left\{ {c_{1} ,c_{2} ,c_{3} } \right\}\) and continuous attributes \(C_{r} = \left\{ {c_{4} ,c_{5} } \right\}\); and \(D\) is the decision attribute.

Definition 2 (ref. 31)

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\), let \(B \subseteq C\) with \(B = B_{d} \cup B_{r}\), where \(B_{d}\) is a set of discrete attributes and \(B_{r}\) is a set of continuous attributes. The neighborhood tolerance relation of the attribute set \(B\) and the neighborhood tolerance class of \(x_{i}\) with respect to \(B\) are defined as:

where \(\delta\) is the neighborhood radius, which is a non-negative constant.

For example, in Table 1: \(NT_{{c_{1} }}^{\delta } (x_{1} ) = \{ x_{1} ,x_{2} ,x_{4} \}\); \(NT_{{c_{1} }}^{\delta } (x_{2} ) = \{ x_{1} ,x_{2} ,x_{3} ,x_{4} \}\); \(NT_{{c_{1} }}^{\delta } (x_{3} ) = \{ x_{2} ,x_{3} \}\); \(NT_{{c_{1} }}^{\delta } (x_{4} ) = \{ x_{1} ,x_{2} ,x_{4} \}\); \(NT_{{c_{1} }}^{\delta } (x_{5} ) = \{ x_{5} ,x_{7} \}\); \(NT_{{c_{1} }}^{\delta } (x_{6} ) = \{ x_{6} \}\); \(NT_{{c_{1} }}^{\delta } (x_{7} ) = \{ x_{5} ,x_{7} \}\).
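Since the defining formula of Definition 2 is not shown in this text, the following Python sketch computes neighborhood tolerance classes under an assumed form that is common in neighborhood tolerance models: two objects are tolerant on a discrete attribute if their values are equal or either is missing, and on a continuous attribute if their values differ by at most \(\delta\) or either is missing. Missing values are encoded as `None`; the function names and data layout are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Set

Value = Optional[float]  # None encodes a missing value (written * in the text)


def tolerant(x: Sequence[Value], y: Sequence[Value], discrete: Set[int],
             continuous: Set[int], delta: float) -> bool:
    """Assumed neighborhood tolerance test on the attribute indices in B."""
    for a in discrete:
        # Discrete attributes: values must agree unless one of them is missing.
        if x[a] is not None and y[a] is not None and x[a] != y[a]:
            return False
    for a in continuous:
        # Continuous attributes: values must lie within delta unless one is missing.
        if x[a] is not None and y[a] is not None and abs(x[a] - y[a]) > delta:
            return False
    return True


def tolerance_classes(objects: List[Sequence[Value]], discrete: Set[int],
                      continuous: Set[int], delta: float) -> Dict[int, Set[int]]:
    """Return NT_B(x_i) for every object, as sets of object indices."""
    n = len(objects)
    classes = {i: {i} for i in range(n)}  # every object is tolerant with itself
    for i in range(n):
        for j in range(i + 1, n):
            if tolerant(objects[i], objects[j], discrete, continuous, delta):
                # The relation is symmetric, so record the pair in both classes.
                classes[i].add(j)
                classes[j].add(i)
    return classes


# Hypothetical toy data: column 0 is discrete, column 1 is continuous.
data = [[1, 0.10], [None, 0.25], [2, None], [1, 0.90]]
print(tolerance_classes(data, discrete={0}, continuous={1}, delta=0.2))
```

On this toy data the classes are \(\{x_0, x_1\}\), \(\{x_0, x_1, x_2\}\), \(\{x_1, x_2\}\) and \(\{x_3\}\). As in the Table 1 example, where \(x_3 \in NT_{c_1}^{\delta}(x_2)\) but \(x_3 \notin NT_{c_1}^{\delta}(x_1)\), the classes overlap instead of partitioning \(U\), because a tolerance relation is not transitive.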

Definition 3 (ref. 28)

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\) and \(B \subseteq C\), the relative neighborhood discernibility degree of \(B\) on the set of unlabeled objects \(M\), with \(x_{i} \in M\), is:

Note: \(NDD_{B}^{M} (C) = 0\) when \(B = C\).

Definition 4 (ref. 29)

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\) and \(B \subseteq C\), let \(\left[ {x_{i} } \right]_{D}\) denote the decision class of a labeled object \(x_{i} \in L\). The relative neighborhood discernibility degree of \(B\) on the set of labeled objects \(L\) is:

Non-dynamic attribute reduction algorithm for a weakly labeled incomplete mixed decision system

Non-dynamic attribute reduction algorithm

Definitions 3 and 4 give the relative neighborhood discernibility degree on unlabeled and labeled objects, respectively. Building on them, this section refines the definition of the weakly labeled relative neighborhood discernibility degree, which lays the foundation for a new non-dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems.

Definition 5

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\) and \(B \subseteq C\), the weakly labeled relative neighborhood discernibility degree of \(B\) on \(U\) combines the unlabeled and labeled parts:

\(NDD_{B} (U) = NDD_{B}^{M} (C) + NDD_{B}^{L} (D)\)

Note: \(NDD_{B}^{M} (C) = 0\) when \(M = \emptyset\), and \(NDD_{B}^{L} (D) = 0\) when \(L = \emptyset\).

Theorem 1

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\), if \(B_{1} \subseteq B_{2} \subseteq C\), then \(NDD_{{B_{1} }} (U) \ge NDD_{{B_{2} }} (U)\).

Proof

From Definition 5, we can directly obtain:

Since \(B_{1} \subseteq B_{2}\), every \(x_{i} \in U\) satisfies \(NT_{{B_{2} }}^{\delta } (x_{i} ) \subseteq NT_{{B_{1} }}^{\delta } (x_{i} )\).

Hence \(NDD_{{B_{1} }} (U) \ge NDD_{{B_{2} }} (U)\).

Theorem 1 shows that the weakly labeled relative neighborhood discernibility degree is monotonically non-increasing as the attribute set grows. This monotonicity provides the theoretical basis for attribute reduction in weakly labeled incomplete mixed decision systems, and a non-dynamic attribute reduction algorithm can be constructed on it.

Definition 6

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\) and \(a \in B \subseteq C\), the inner significance of \(a\) with respect to \(B\) is:

Definition 7

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\) and \(a \in C - B\), the outer significance of \(a\) with respect to \(B\) is:

\(sig^{outer} (a,B) = NDD_{B} (U) - NDD_{B \cup \{ a\} } (U)\)

Note: when \(B = \emptyset\), \(NDD_{B}^{M} (C) = |M|^{2}\) and \(NDD_{B}^{L} (D) = |L|^{2}\), so \(NDD_{B} (U) = |M|^{2} + |L|^{2}\).

Definition 8

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\), an attribute reduction set \(R \subseteq C\) satisfies:

Reference 22 presents a dynamic attribute reduction algorithm for increasing object sets based on the discernibility matrix, and reference 24 presents one based on information entropy. However, these algorithms are designed only for attribute reduction on a single, labeled data type and do not apply to mixed or unlabeled data. To address this issue, this paper proposes a non-dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems based on the weakly labeled relative neighborhood discernibility degree, as shown in Algorithm 1.

Time complexity of the non-dynamic Algorithm 1: Step 1 computes the relative neighborhood discernibility degree of the full attribute set, with time complexity \(O(|U||C|)\). Step 2 repeatedly adds the most significant attribute to the candidate attribute set until the termination condition is satisfied, with time complexity \(O(|U|^{2} |C|^{3} )\). Step 5 removes redundant attributes from the candidate attribute set, with time complexity \(O(|U|^{2} |C|^{2} )\). Therefore, the time complexity of Algorithm 1 is \(O(|U|^{2} |C|^{3} )\).
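Algorithm 1 itself is not reproduced in this text. The Python sketch below shows the greedy forward selection and backward pruning that its steps describe, assuming \(sig^{outer}(a,B) = NDD_{B}(U) - NDD_{B \cup \{a\}}(U)\) and, by analogy, \(sig^{inner}(a,B) = NDD_{B - \{a\}}(U) - NDD_{B}(U)\); the outer form reproduces the numbers of the Table 2 example. The degree \(NDD_{B}(U)\) of Definition 5 is passed in as a callable, and all names here are hypothetical. The usage replays the example with NDD values implied by the reported significances.

```python
from typing import Callable, Dict, FrozenSet, Iterable

NDD = Callable[[FrozenSet[str]], float]  # maps B to NDD_B(U); supplied by the caller


def greedy_extend(ndd: NDD, attributes: Iterable[str],
                  start: FrozenSet[str]) -> FrozenSet[str]:
    """Add the attribute with the largest outer significance until NDD_R(U) = NDD_C(U)."""
    C = frozenset(attributes)
    target = ndd(C)
    R = frozenset(start)
    while ndd(R) != target:
        current = ndd(R)
        # sig_outer(a, R) = NDD_R(U) - NDD_{R + a}(U); max() keeps the first of tied attributes
        best = max(sorted(C - R), key=lambda a: current - ndd(R | {a}))
        R = R | {best}
    return R


def prune(ndd: NDD, R: FrozenSet[str]) -> FrozenSet[str]:
    """Remove attributes whose inner significance NDD_{R - a}(U) - NDD_R(U) is zero."""
    for a in sorted(R):
        if ndd(R - {a}) - ndd(R) == 0:
            R = R - {a}
    return R


def reduce_attributes(ndd: NDD, attributes: Iterable[str]) -> FrozenSet[str]:
    """Non-dynamic reduct: start from the empty set, extend greedily, then prune."""
    return prune(ndd, greedy_extend(ndd, attributes, frozenset()))


# Table 2 example, with NDD values implied by the reported numbers:
# NDD_empty = |M|^2 + |L|^2 = 29, NDD_{c} = 29 - sig_outer(c, empty),
# NDD_{c5, c} = 6 - sig_outer(c, {c5}), and NDD_C = 2.
C = ["c1", "c2", "c3", "c4", "c5"]
example: Dict[FrozenSet[str], float] = {
    frozenset(): 29,
    frozenset({"c1"}): 8, frozenset({"c2"}): 14, frozenset({"c3"}): 10,
    frozenset({"c4"}): 8, frozenset({"c5"}): 6,
    frozenset({"c1", "c5"}): 4, frozenset({"c2", "c5"}): 6,
    frozenset({"c3", "c5"}): 4, frozenset({"c4", "c5"}): 2,
    frozenset(C): 2,
}
reduct = reduce_attributes(example.__getitem__, C)
print(sorted(reduct))
```

This prints `['c4', 'c5']`, matching the reduct obtained in the worked example. In practice the lookup table would be replaced by a function that evaluates Definition 5 on the data.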

Example analysis

To illustrate the non-dynamic attribute reduction Algorithm 1 for weakly labeled incomplete mixed decision systems, its feasibility is verified on the data in Table 2 with neighborhood radius \(\delta = 0.2\).

Table 2 represents a weakly labeled incomplete mixed decision system, where \(U = \left\{ {x_{1} ,x_{2} , \ldots ,x_{7} } \right\}\), \(L = \left\{ {x_{1} ,x_{2} ,x_{3} ,x_{4} ,x_{5} } \right\}\) is the set of labeled objects and \(M = \left\{ {x_{6} ,x_{7} } \right\}\) is the set of unlabeled objects; \(C = \left\{ {c_{1} ,c_{2} , \ldots ,c_{5} } \right\}\) is the attribute set, with discrete attributes \(C_{d} = \left\{ {c_{1} ,c_{2} ,c_{3} } \right\}\) and continuous attributes \(C_{r} = \left\{ {c_{4} ,c_{5} } \right\}\); and \(D\) is the decision attribute. From Table 2, the neighborhood tolerance classes of the objects with respect to \(C\) are: \(NT_{C}^{\delta } (x_{1} ) = \{ x_{1} \}\); \(NT_{C}^{\delta } (x_{2} ) = \{ x_{2} \}\); \(NT_{C}^{\delta } (x_{3} ) = \{ x_{3} \}\); \(NT_{C}^{\delta } (x_{4} ) = \{ x_{4} ,x_{5} \}\); \(NT_{C}^{\delta } (x_{5} ) = \{ x_{4} ,x_{5} \}\); \(NT_{C}^{\delta } (x_{6} ) = \{ x_{6} \}\); \(NT_{C}^{\delta } (x_{7} ) = \{ x_{7} \}\). For the labeled objects, the decision classes under \(D\) are \([x_{1} ]_{D} = [x_{2} ]_{D} = [x_{5} ]_{D} = \{ x_{1} ,x_{2} ,x_{5} \}\) and \([x_{3} ]_{D} = [x_{4} ]_{D} = \{ x_{3} ,x_{4} \}\).

Step 1: set \(R = \emptyset\) and compute \(NDD_{C} (U) = 2\). Step 2: for each \(c \in C - R\), the outer significance is \(sig^{outer} (c_{1} ,R) = 21\), \(sig^{outer} (c_{2} ,R) = 15\), \(sig^{outer} (c_{3} ,R) = 19\), \(sig^{outer} (c_{4} ,R) = 21\) and \(sig^{outer} (c_{5} ,R) = 23\). Step 3: the attribute in \(C - R\) with the highest outer significance is \(c_{5}\), so \(R = R \cup \{ c_{5} \}\) and \(NDD_{R} (U) = 6\).

Step 4: since \(NDD_{R} (U) \ne NDD_{C} (U)\), return to Step 2. The outer significances of the remaining attributes are now \(sig^{outer} (c_{1} ,R) = 2\), \(sig^{outer} (c_{2} ,R) = 0\), \(sig^{outer} (c_{3} ,R) = 2\) and \(sig^{outer} (c_{4} ,R) = 4\). The attribute with the highest outer significance is \(c_{4}\), so \(R = R \cup \{ c_{4} \}\) and \(NDD_{R} (U) = 2\). Since \(NDD_{R} (U) = NDD_{C} (U)\), go to Step 5. Step 5: for each \(c \in R\), the inner significances satisfy \(sig^{inner} (c_{4} ,R) \ne 0\) and \(sig^{inner} (c_{5} ,R) \ne 0\), so \(R\) is kept unchanged and the attribute reduct of \(WDIS\) is \(R = \{ c_{4} ,c_{5} \}\).
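Assuming the outer significance takes the form \(sig^{outer}(a,B) = NDD_{B}(U) - NDD_{B \cup \{a\}}(U)\), the numbers in this example are mutually consistent, as the following check shows with \(|M| = 2\) and \(|L| = 5\):

```latex
\begin{aligned}
NDD_{\emptyset}(U) &= |M|^{2} + |L|^{2} = 2^{2} + 5^{2} = 29,\\
NDD_{\{c_5\}}(U) &= NDD_{\emptyset}(U) - sig^{outer}(c_5,\emptyset) = 29 - 23 = 6,\\
NDD_{\{c_4,c_5\}}(U) &= NDD_{\{c_5\}}(U) - sig^{outer}(c_4,\{c_5\}) = 6 - 4 = 2 = NDD_{C}(U).
\end{aligned}
```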

Dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems with increased object sets

Dynamic attribute reduction algorithm

This section presents an incremental update mechanism for the weakly labeled relative neighborhood discernibility degree that makes attribute reduction more efficient when objects are added to a weakly labeled incomplete mixed decision system. Instead of recomputing the degree from scratch, the mechanism derives its value on the enlarged universe from its value on the original data, and the attribute reduct of the updated system is obtained from it. On this basis, the section proposes a new dynamic attribute reduction algorithm for increasing object sets.

Theorem 2

Given a weakly labeled incomplete mixed decision system \(WDIS = \left\langle {U,C \cup D,V,f} \right\rangle\) with \(B \subseteq C\), \(U = \left\{ {x_{1} ,x_{2} , \cdots ,x_{n} } \right\} = M \cup L\) and neighborhood radius \(\delta\), suppose an object set \(\Delta Y = \Delta m \cup \Delta l\) is added to the system, where \(\Delta m\) is the set of newly added unlabeled objects and \(\Delta l\) is the set of newly added labeled objects, and denote the new system by \(WDIS' = \left\langle {U \cup \Delta Y,C \cup D,V,f} \right\rangle\). Then the incremental update formula for the weakly labeled relative neighborhood discernibility degree of \(B\) on \(U \cup \Delta Y\) is:

Proof

Let \(\Delta Y = \left\{ {y_{1} ,y_{2} , \cdots ,y_{p} } \right\}\). For \(x_{i} \in U\), let \(Y_{B} (x_{i} ) = \left\{ {y \in \Delta Y|y \in NT_{B}^{\delta } (x_{i} )} \right\}\) be the set of new objects in the neighborhood tolerance class of \(x_{i}\) under \(B\); for \(y_{j} \in \Delta Y\), let \(X_{B} (y_{j} ) = \left\{ {x \in U|x \in NT_{B}^{\delta } (y_{j} )} \right\}\) be the set of original objects in the neighborhood tolerance class of \(y_{j}\) under \(B\).

By the symmetry of the neighborhood tolerance relation, we have:

It can be concluded that:

When objects are added to a weakly labeled incomplete mixed decision system, the non-dynamic attribute reduction algorithm treats the enlarged dataset as a new dataset and performs attribute reduction on it from scratch, which consumes considerable time and space. To address this issue, this paper uses the incremental update mechanism of Theorem 2 for the relative neighborhood discernibility degree under increasing object sets and introduces a dynamic attribute reduction algorithm, shown as Algorithm 2.
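The saving exploited by Theorem 2 comes from the fact that adding \(\Delta Y\) changes neighborhood classes only through the sets \(Y_{B}(x_{i})\) and \(X_{B}(y_{j})\) defined in its proof. The Python sketch below illustrates this at the level of neighborhood tolerance classes: only pairs involving at least one new object are tested, and by symmetry each match updates both classes. It does not reproduce the NDD update formula itself; the tolerance test (equality or a missing value on discrete attributes, a difference of at most \(\delta\) or a missing value on continuous ones) and the function names are assumptions.

```python
from typing import Dict, List, Optional, Sequence, Set

Value = Optional[float]  # None encodes a missing value


def tolerant(x: Sequence[Value], y: Sequence[Value], discrete: Set[int],
             continuous: Set[int], delta: float) -> bool:
    """Assumed neighborhood tolerance test on the attribute indices in B."""
    for a in discrete:
        if x[a] is not None and y[a] is not None and x[a] != y[a]:
            return False
    for a in continuous:
        if x[a] is not None and y[a] is not None and abs(x[a] - y[a]) > delta:
            return False
    return True


def add_objects(objects: List[Sequence[Value]], classes: Dict[int, Set[int]],
                new: List[Sequence[Value]], discrete: Set[int],
                continuous: Set[int], delta: float) -> None:
    """Extend `objects` and their tolerance classes in place with the new objects.

    Only old-new and new-new pairs are tested; old-old pairs are reused as they are.
    """
    n_old = len(objects)
    for k, y in enumerate(new):
        j = n_old + k
        objects.append(y)
        classes[j] = {j}
        for i in range(j):  # all original objects and the new ones added before y
            if tolerant(objects[i], y, discrete, continuous, delta):
                classes[i].add(j)  # for i < n_old: y belongs to Y_B(x_i)
                classes[j].add(i)  # for i < n_old: x_i belongs to X_B(y)
```

With \(n\) original and \(k\) new objects this performs \(kn + k(k-1)/2\) tolerance tests, instead of the \((n+k)(n+k-1)/2\) needed to rebuild all classes.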

Time complexity of the dynamic Algorithm 2: Step 1 computes the updated relative neighborhood discernibility degree, with time complexity \(O(|U \cup \Delta Y||R'|)\). Step 4 adds significant attributes to the candidate attribute subset until the termination condition is satisfied, with time complexity \(O(|U \cup \Delta Y||R'||C - R'|)\). Step 6 removes redundant attributes from the candidate attribute set, with time complexity \(O(|U \cup \Delta Y||R'|^{2} )\). Therefore, the time complexity of Algorithm 2 is \(O(|U \cup \Delta Y||R'||C|)\), which is considerably lower than the \(O(|U \cup \Delta Y|^{2} |C|^{3} )\) of the non-dynamic Algorithm 1 applied to \(U \cup \Delta Y\).
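Algorithm 2 is likewise not reproduced in this text. The following Python sketch follows the steps as they appear in the worked example: start from the previous reduct (Step 1), stop if it already preserves NDD on \(U \cup \Delta Y\) (Steps 2 and 5), otherwise add attributes by outer significance (Steps 3 and 4), then drop attributes with zero inner significance (Step 6). It assumes \(sig^{outer}(a,B) = NDD_{B} - NDD_{B \cup \{a\}}\) and \(sig^{inner}(a,B) = NDD_{B - \{a\}} - NDD_{B}\), and takes a hypothetical callable `ndd_new` that returns \(NDD_{B}(U \cup \Delta Y)\), for example computed with the update of Theorem 2.

```python
from typing import Callable, FrozenSet, Iterable

NDD = Callable[[FrozenSet[str]], float]  # maps B to NDD_B(U ∪ ΔY); supplied by the caller


def dynamic_reduct(ndd_new: NDD, attributes: Iterable[str],
                   old_reduct: FrozenSet[str]) -> FrozenSet[str]:
    """Update a reduct after objects are added, reusing the previous reduct R."""
    C = frozenset(attributes)
    target = ndd_new(C)
    R = frozenset(old_reduct)          # Step 1: R' = R
    while ndd_new(R) != target:        # Steps 2 and 5: stop once NDD_{R'} = NDD_C
        current = ndd_new(R)
        # Steps 3 and 4: add the attribute with the largest outer significance
        best = max(sorted(C - R), key=lambda a: current - ndd_new(R | {a}))
        R = R | {best}
    for a in sorted(R):                # Step 6: drop attributes with zero inner significance
        if ndd_new(R - {a}) == ndd_new(R):
            R = R - {a}
    return R
```

Because the search starts from the old reduct rather than from the empty set, only the attributes in \(C - R'\) are evaluated when the old reduct no longer suffices, which is where the reduction in cost relative to Algorithm 1 comes from.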

Example analysis

To further illustrate the dynamic attribute reduction Algorithm 2 for weakly labeled incomplete mixed decision systems, its feasibility is verified using the original data in Table 2 together with the newly added objects in Table 3. From the preceding example, the attribute reduct of \(WDIS\) is \(R = \left\{ {c_{4} ,c_{5} } \right\}\), with \(NDD_{R} (U) = NDD_{C} (U) = 2\).

Step 1: set \(R' = R\) and compute \(NDD_{R'} (U \cup \Delta Y) = 10\) and \(NDD_{C} (U \cup \Delta Y) = 4\). Step 2: since \(NDD_{R'} (U \cup \Delta Y) \ne NDD_{C} (U \cup \Delta Y)\), go to Step 3. Step 3: for each \(c \in C - R'\), the outer significances are \(sig^{outer} (c_{1} ,R') = 6\), \(sig^{outer} (c_{2} ,R') = 0\) and \(sig^{outer} (c_{3} ,R') = 4\). Step 4: the attribute in \(C - R'\) with the highest outer significance is \(c_{1}\), so \(R' = R' \cup \left\{ {c_{1} } \right\}\). Step 5: now \(NDD_{R'} (U \cup \Delta Y) = NDD_{C} (U \cup \Delta Y) = 4\), so go to Step 6. Step 6: for each \(c \in R'\), the inner significances satisfy

\(sig^{inner} (c_{1} ,R') \ne 0\), \(sig^{inner} (c_{4} ,R') \ne 0\), \(sig^{inner} (c_{5} ,R') \ne 0\).

Thus, the attribute reduct of the system \(WDIS'\) after adding the objects is \(R' = \left\{ {c_{1} ,c_{4} ,c_{5} } \right\}\).
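Assuming, as for Algorithm 1, that \(sig^{outer}(a,B) = NDD_{B} - NDD_{B \cup \{a\}}\), the values reported in this example agree with each other:

```latex
NDD_{\{c_1,c_4,c_5\}}(U \cup \Delta Y)
  = NDD_{\{c_4,c_5\}}(U \cup \Delta Y) - sig^{outer}(c_1, R')
  = 10 - 6 = 4 = NDD_{C}(U \cup \Delta Y).
```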

Experimental analysis

Experimental description

The proposed algorithms were evaluated on 8 datasets from the UCI database32, detailed in Table 4. The experiments were run on a computer with an Intel(R) Core(TM) i5-9500 CPU (3.00 GHz) and 8.0 GB of memory, running Windows 10 and MATLAB R2022a.

The eight datasets in Table 4 were min-max normalized to eliminate differences in scale. To obtain weakly labeled incomplete data, 20% of the attribute values and 20% of the decision values in each dataset were then randomly set to missing. The datasets were reduced with the Semi-D algorithm33, the Rsfs algorithm34, the non-dynamic Algorithm 1 and the dynamic Algorithm 2. Because the Semi-D algorithm handles only discrete data, the continuous attributes were discretized before it was applied. The effectiveness of the four algorithms is evaluated by the classification accuracy of a random forest (RF) classifier.
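The preprocessing just described can be sketched as follows. This is an illustrative Python version (the experiments themselves ran in MATLAB); it assumes that "20% missing" means exactly 20% of the cells, chosen uniformly at random without replacement, separately for the attribute values and for the decision values. The function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def min_max_normalize(column: Sequence[float]) -> List[float]:
    """Rescale one attribute column to [0, 1]; a constant column maps to 0."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


def inject_missing(values: Sequence[T], rate: float,
                   rng: random.Random) -> List[Optional[T]]:
    """Return a copy with round(rate * len(values)) randomly chosen entries set to None."""
    out: List[Optional[T]] = list(values)
    k = round(rate * len(out))
    for idx in rng.sample(range(len(out)), k):
        out[idx] = None
    return out


# Hypothetical usage: hide 20% of the decision labels of a toy dataset.
rng = random.Random(42)
labels = [0, 1, 1, 0, 2, 2, 1, 0, 1, 2]
weak_labels = inject_missing(labels, 0.2, rng)
```

The same `inject_missing` call can be applied to the flattened attribute table to mask 20% of the attribute values.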

Comparison of performance of different algorithms when increasing object set

To verify the effectiveness of the dynamic Algorithm 2 when the object set grows, each dataset was first randomly shuffled: the datasets are originally sorted by decision value, and shuffling ensures that the base data do not contain a single decision class only. Each dataset was then split into two halves, with the first 50% as the base data and the remaining 50% as the incremental data. The incremental data were added in ten steps of 10%: the first step adds 10% of the incremental data, the second 20%, the third 30%, and so on until all of it has been added.
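A minimal Python sketch of this protocol, reading the percentages as cumulative (the object set at step \(t\) is the base data plus the first \(t \times 10\%\) of the incremental data); the function name and seed handling are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def incremental_batches(rows: Sequence[T], steps: int = 10,
                        seed: int = 0) -> Tuple[List[T], List[List[T]]]:
    """Shuffle, split 50/50 into base and incremental data, and build cumulative increments.

    added[t - 1] holds the first t/steps of the incremental half, i.e. the objects
    joined to the base data at step t.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # break the original sort by decision value
    half = len(shuffled) // 2
    base, incremental = shuffled[:half], shuffled[half:]
    added = [incremental[: (t * len(incremental)) // steps] for t in range(1, steps + 1)]
    return base, added
```

For the dynamic algorithm, the new object set \(\Delta Y\) at step \(t\) is the part of `added[t - 1]` not already present in `added[t - 2]`.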

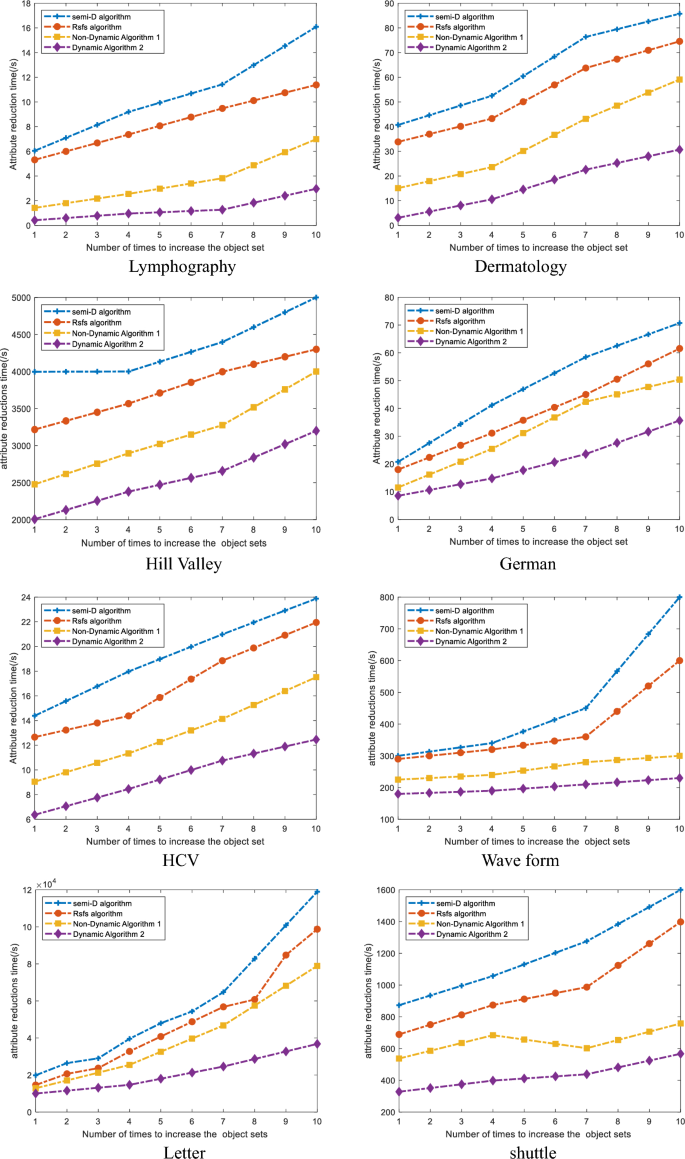

Fig. 1 compares the time taken by the Semi-D algorithm, the Rsfs algorithm, the non-dynamic Algorithm 1 and the dynamic Algorithm 2 to compute attribute reducts on the 8 datasets as the object set grows. The horizontal axis gives the number of object-set increments, and the vertical axis gives the attribute reduction time in seconds.

Fig. 1 shows that, in weakly labeled incomplete mixed decision systems, the attribute reduction time of all four algorithms gradually increases as the object set grows, and that the time of the dynamic Algorithm 2 is significantly lower than that of the other three. For example, on the Dermatology dataset at the tenth increment, the reduction times of the Semi-D algorithm, the Rsfs algorithm, Algorithm 1 and Algorithm 2 were 85.7269 s, 74.5495 s, 59.0794 s and 30.6935 s, respectively, so Algorithm 2 reduced the time by 64.19%, 58.82% and 48.05% relative to the other three. Similarly, on the Lymphography dataset at the fourth increment, the four reduction times were 9.1935 s, 7.3605 s, 2.5512 s and 0.9562 s, respectively, a reduction of 89.59%, 87.01% and 62.52% for Algorithm 2.
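The percentages above are relative reductions, \(100 \times (t_{\text{other}} - t_{\text{Alg. 2}}) / t_{\text{other}}\). A short Python check on the Dermatology times quoted in the text (the function name is hypothetical):

```python
def relative_reduction(baseline: float, proposed: float) -> float:
    """Percentage by which the time `proposed` is lower than the time `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


# Dermatology, 10th increment: times in seconds as reported above.
algorithm_2 = 30.6935
others = {"Semi-D": 85.7269, "Rsfs": 74.5495, "Algorithm 1": 59.0794}
for name, seconds in others.items():
    print(f"{name}: {relative_reduction(seconds, algorithm_2):.2f}%")
```

Formatted to two decimals this prints 64.20%, 58.83% and 48.05%; the first two differ from the reported 64.19% and 58.82% only in the last digit, which is consistent with truncation rather than rounding.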

Beyond these small datasets, the dynamic algorithm also applies to relatively large data. For example, on the Letter dataset at the tenth increment, the reduction times of the Semi-D algorithm, the Rsfs algorithm, Algorithm 1 and Algorithm 2 were 118,967.0 s, 98,746.0 s, 78,934.0 s and 36,786.0 s, respectively, so Algorithm 2 reduced the time by 69.08%, 62.75% and 53.40%. The attribute reduction time of the dynamic Algorithm 2 is therefore substantially lower on both small and large datasets.

These results show that, after objects are added, the dynamic Algorithm 2 reduces the reduction time not only relative to the Semi-D and Rsfs algorithms but also, significantly, relative to the non-dynamic Algorithm 1. The reason is that Algorithm 1, Semi-D and Rsfs recompute everything on the enlarged dataset (for Algorithm 1, the neighborhood classes and the weakly labeled relative neighborhood discernibility degree), whereas Algorithm 2 starts from the reduct of the original dataset and updates the relative neighborhood discernibility degree incrementally. This saves a substantial amount of reduction time and confirms the effectiveness of Algorithm 2.

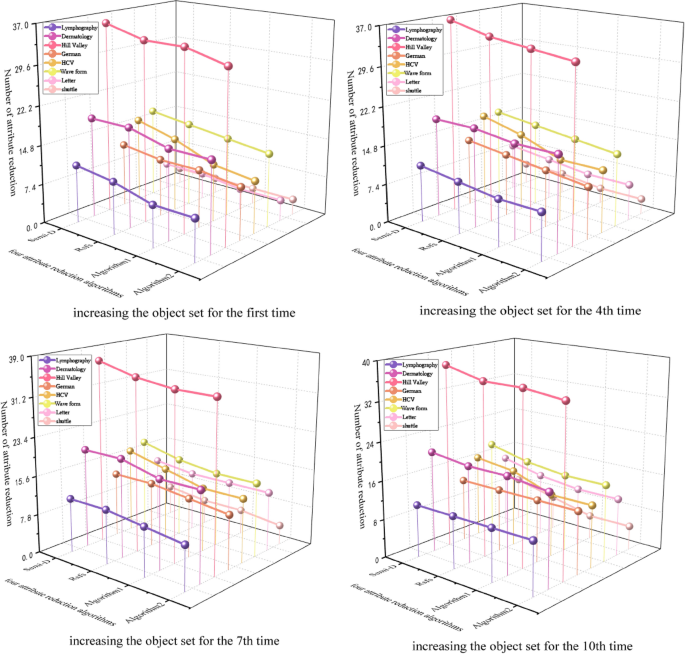

Fig. 2 shows the number of attributes in the reducts obtained by the four algorithms (Semi-D, Rsfs, Algorithm 1 and Algorithm 2) after the 1st, 4th, 7th and 10th increments of the object set on the 8 datasets. The dynamic Algorithm 2 consistently yields the smallest (or jointly smallest) reduct, and reduct sizes gradually increase as the object set grows. On the Dermatology data, the reduct sizes of the four algorithms are 19, 19, 17 and 17 after the first increment; 19, 19, 18 and 18 after the fourth; 20, 20, 18 and 18 after the seventh; and 21, 20, 20 and 19 after the tenth. On the HCV data, they are 16, 14, 11 and 10 after the first increment; 17, 15, 12 and 12 after the fourth; 17, 15, 13 and 13 after the seventh; and 17, 16, 13 and 13 after the tenth. These results indicate that Algorithm 2 produces more compact reducts than the other algorithms.

Table 5 reports the classification accuracies of the Semi-D algorithm, the Rsfs algorithm, Algorithm 1 and Algorithm 2 under the RF classifier on the eight datasets after the 10th increment of the object set. The non-dynamic Algorithm 1 achieves the highest accuracy on the Dermatology and Ecoli datasets, whereas the dynamic Algorithm 2 achieves the highest accuracy on the German, Breast Tissue, Lymphography, Ecoli, Ionosphere, Student Performance and HCV datasets. The average classification accuracy over all datasets is highest for Algorithm 2. These findings support the claim that the proposed dynamic Algorithm 2 achieves higher classification accuracy, providing a more accurate attribute reduction algorithm for weakly labeled incomplete mixed decision systems with increasing object sets.

Conclusion

For dynamic attribute reduction in weakly labeled incomplete mixed decision systems, this paper refines the definition of the weakly labeled relative neighborhood discernibility degree using both the unlabeled and the labeled data, and on this basis proposes the non-dynamic attribute reduction Algorithm 1. It then constructs an incremental update mechanism for the weakly labeled relative neighborhood discernibility degree when the object set grows and proposes the dynamic attribute reduction Algorithm 2. Experiments verify that Algorithm 2 significantly improves reduction efficiency, achieving shorter reduction times as well as higher classification accuracy, which confirms its effectiveness and provides a simpler and more accurate dynamic attribute reduction algorithm for weakly labeled incomplete mixed decision systems. In practice, however, the object set and the attribute set often change at the same time. Building on the dynamic algorithm for increasing object sets presented here, future work will study attribute reduction in weakly labeled incomplete mixed decision systems when the attribute set and the object set change simultaneously.

Data availability

The datasets generated and/or analysed during the current study are not publicly available due to [REASON WHY DATA ARE NOT PUBLIC] but are available from the corresponding author on reasonable request.

References

Qian, Y. H., Liang, X. Y. & Wang, Q. Local rough set: A solution to rough data analysis in big data. Int. J. Approx. Reason. 97, 38–63 (2018).

Qian, J., Miao, D. Q. & Zhang, Z. H. Parallel attribute reduction algorithms using MapReduce. Inf. Sci. 279, 671–690 (2014).

Su, N., An, X. J. & Yan, C. Q. Incremental attribute reduction method based on chi-square statistics and information entropy. IEEE Access 8, 98234–98243 (2020).

Hu, Q. H., Zhang, L. J. & Zhou, Y. C. Large-scale multimodality attribute reduction with multi-Kernel Fuzzy rough sets. IEEE Trans. Fuzzy Syst. 26, 226–238 (2017).

Liu, C. H. Covering-based multi-granulation decision theoretic rough set approaches with new strategies. J. Intell. Fuzzy Syst. 1, 1–13 (2018).

Li, S. L. & Qin, K. Y. Attribute reduction in L-fuzzy formal contexts. Ann. Fuzzy Math. Inf. 19, 127–137 (2020).

Yang, L., Qin, K. Y. & Sang, B. B. A novel incremental attribute reduction by using quantitative dominance-based neighborhood self-information. Knowledge Based Syst. 261, 110200 (2023).

Su, H. R., Chen, J. K. & Lin, Y. J. A four-stage branch local search algorithm for minimal test cost attribute reduction based on the set covering. Appl. Soft Comput. 153, 111303 (2024).

Wang, C. Z., Qi, Y. L. & Shao, M. W. A fitting model for feature selection with fuzzy rough sets. IEEE Trans. Fuzzy Syst. 741–753 (2017).

Gu, X. P., Li, Y. & Jia, J. H. Feature selection for transient stability assessment based on kernelized fuzzy rough sets and memetic algorithm. Int. J. Electr. Power Energy Syst. 64, 664–670 (2015).

Hu, Q., Yu, D., Liu, J. & Wu, C. Neighborhood rough set based heterogeneous feature subset selection. Inf. Sci. 178, 3577–3594 (2008).

Zhao, Z., Wang, L., Liu, H. & Ye, J. On similarity preserving feature selection. IEEE Trans. Knowl. Data Eng. 25, 619–632 (2013).

Jensen, R. & Shen, Q. Fuzzy–rough attribute reduction with application to Web categorization. Fuzzy Sets Syst. 141, 469–485 (2004).

Hu, Q., Zhang, L., Chen, D., Pedrycz, W. & Yu, D. Gaussian kernel based fuzzy rough sets: Model, uncertainty measures and applications. Int. J. Approx. Reason. 41, 453–471 (2010).

Sun, Q., Wang, C., Wang, Z. & Liu, X. A fault diagnosis method of smart grid based on rough sets combined with genetic algorithm and tabu search. Neural Comput. Appl. 23, 2023–2029 (2013).

Chebrolu, S. & Sanjeevi, S. G. Attribute reduction on real-valued data in rough set theory using hybrid artificial bee colony: Extended FTSBPSD algorithm. Soft Comput. 21, 7543–7569 (2017).

Zouache, D. & Ben, A. F. A cooperative swarm intelligence algorithm based on quantum-inspired and rough sets for feature selection. Comput. Ind. Eng. 115, 26–36 (2018).

Shu, W. H., Li, S. P. & Qian, W. B. A composite entropy-based uncertainty measure guided attribute reduction for imbalanced mixed-type data. J. Intell. Fuzzy Syst. 46(3), 7307–7325 (2024).

Liang, B. H., Jin, E. L., Wei, L. F. & Hu, R. Y. Knowledge granularity attribute reduction algorithm for incomplete systems in a clustering context. Mathematics 12(2), 333 (2024).

Ni, P., Zhao, S. Y. & Wang, X. Z. PARA: A positive-region based attribute reduction accelerator. Inf. Sci. 503, 533–550 (2019).

Pawlak, Z. Rough sets: Theoretical aspects of reasoning about data (Kluwer Academic Publishers, 1991).

Pawlak, Z., Wong, S. K. M. & Ziarko, W. Rough sets: Probabilistic versus deterministic approach. Int. J. Man-Mach. Stud. 29, 81–95 (1988).

Shu, W. H. & Shen, H. Incremental feature selection based on rough set in dynamic incomplete data. Pattern Recognit. 47, 3890–3906 (2014).

Wei, W., Wu, X. Y. & Liang, J. Y. Discernibility matrix based incremental attribute reduction for dynamic data. Knowledge Based Syst. 140, 142–157 (2018).

Yang, Y. Y., Chen, D. G. & Wang, H. Y. Incremental perspective for feature selection based on fuzzy rough sets. IEEE Trans. Fuzzy Syst. 26, 1257–1273 (2018).

Liang, J. Y., Wang, F. & Dang, C. Y. A group incremental approach to feature selection applying rough set technique. IEEE Trans. Knowl. Data Eng. 26, 294–308 (2013).

Xiang, W. Dynamic attribute reduction algorithm for object changes in neighborhood systems. Comput. Appl. Softw. 35, 278–282+329 (2018).

Sheng, K., Wang, W. & Bian, H. Neighborhood-distinctive incremental attribute reduction algorithm for mixed data. Electron. Lett. 48, 682–696 (2020).

Sun, L., Li, M. M. & Xu, J. C. Attribute reduction method for incomplete mixed data based on neighborhood distinctiveness. J. Jiangsu Univ. Sci. Technol. (Nat. Sci. Edition) 36, 82–89 (2022).

Cheng, L., Qian, W. B. & Wang, Y. L. Incremental attribute reduction algorithm for weakly labeled incomplete decision systems. J. Intell. Syst. 15, 1079–1090 (2020).

Yuxin, Z., Da, M. S. & Fei, L. An incremental attribute reduction algorithm for incomplete hybrid data based on granular monotone. Comput. Appl. Softw. 38, 279–286 (2021).

UCI Machine Learning Repository, http://archive.ics.uci.edu/ml/datasets.html.

Dai, J. H., Hu, Q. H. & Zhang, J. H. Attribute selection for partially labeled categorical data by rough set approach. IEEE Trans. Cybern. 47, 2460–2471 (2017).

Liu, K. Y., Yang, X. B. & Yu, H. L. Rough set based semi-supervised feature selection via ensemble selector. Knowledge Based Syst. 165, 282–296 (2019).

Wan, J. & Chen, H. A novel hybrid feature selection method considering feature interaction in neighborhood rough set. Knowledge Based Syst. 227, 107167 (2021).

Shu, W. & Yan, Z. Information granularity-based incremental feature selection for partially labeled hybrid data. Intell. Data Anal. 26, 33–56 (2022).

Kim, K. & Jun, C. Rough set model-based feature selection for mixed-type data with feature space decomposition. Expert Syst. Appl. 103, 196–205 (2018).

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 12172280 and in part by the Natural Science Foundation of Shaanxi Province under Grant 2020CGXNG-013.

Author information


Contributions

F, Methodology, writing–review and editing. S, Conceptualization, writing–original draft preparation.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Feng, W., Sun, T. A dynamic attribute reduction algorithm based on relative neighborhood discernibility degree. Sci Rep 14, 15637 (2024). https://doi.org/10.1038/s41598-024-66264-x


DOI: https://doi.org/10.1038/s41598-024-66264-x
