Object Tracking Algorithms in OpenCV

Overview

In computer vision, object tracking is the task of following a particular object through an image sequence or a video over time. Object tracking is challenging because the object's appearance can change due to lighting conditions, occlusion, and other factors. OpenCV is a widely used computer vision library that provides a variety of object-tracking algorithms.

Introduction

Object tracking is a fundamental task in computer vision, with applications in security, surveillance, robotics, and self-driving cars. The goal is to locate a particular object and follow it through a video sequence over time, which is difficult because the object moves, becomes occluded, and changes in appearance.

This blog post discusses the object-tracking algorithms available in OpenCV and how to implement them.

Pre-requisites

- To understand this blog post, you should have basic knowledge of the Python programming language and the OpenCV library.

- It is helpful to have a foundational understanding of computer vision principles, including image processing, feature extraction, and image analysis techniques.

- While not mandatory, a basic understanding of mathematics and statistics, particularly concepts related to linear algebra, probability, and optimization, can help comprehend certain algorithms and their underlying principles.

Object Tracking Algorithms in OpenCV

Object tracking is locating and following a particular object or multiple objects over a sequence of frames in a video. It involves detecting an object in a frame and then estimating its location in the subsequent frames based on its appearance, motion, and other features.

The main objective of object tracking is to maintain the identity of the object(s) being tracked over time, even when there are changes in their size, orientation, lighting conditions, or occlusions.

There are different approaches to object tracking, such as template matching, optical flow, feature-based tracking, and deep learning-based tracking. These approaches use various algorithms and techniques to detect and track objects in different environments and conditions.


Need for Object Tracking Algorithms

Object tracking is essential in many applications, such as security, surveillance, robotics, and self-driving cars. Object tracking algorithms help detect and follow moving objects in a video sequence. They can also be used to count the objects in a video sequence, which is useful in traffic monitoring and crowd analysis.

- Real-time monitoring and surveillance: Object tracking algorithms are crucial in surveillance systems where real-time monitoring is required. They enable the automated tracking of objects of interest, such as individuals or vehicles, allowing for efficient and automated surveillance tasks.

- Video analysis and understanding: Object tracking algorithms play a vital role in video analysis and understanding applications. By tracking objects across frames, these algorithms can provide valuable information about object behaviour, motion patterns, interactions, and scene dynamics.

- Augmented reality and virtual reality: Object tracking algorithms are essential in augmented reality (AR) and virtual reality (VR) applications. They enable the accurate overlay of virtual objects onto the real-world environment, ensuring that the virtual objects align and interact seamlessly with the real objects being tracked.

- Human-computer interaction: Object tracking algorithms contribute to various human-computer interaction systems. They enable gesture recognition, hand tracking, and object manipulation in interactive displays, gaming, and robotics applications, enhancing user interaction and control.

- Autonomous vehicles and robotics: Object tracking is crucial for autonomous vehicles and robotic systems. It allows them to perceive and track other vehicles, pedestrians, or objects in their surroundings, enabling tasks such as obstacle avoidance, path planning, and object manipulation in robotic arms.

Different Object Tracking Algorithms in OpenCV

OpenCV provides a variety of object-tracking algorithms. These algorithms can be categorized into two types:

- Template-based trackers: These trackers use a template of the object to track it in the video sequence. The template is a region of interest (ROI) in the first frame of the video sequence, and the tracker tracks this template in subsequent frames. Template-based trackers are fast but can be affected by changes in the object's appearance.

- Feature-based trackers: These trackers use features such as corners, edges, and blobs to track the object in the video sequence. Feature-based trackers are more robust to changes in the object's appearance but can be slower than template-based trackers.

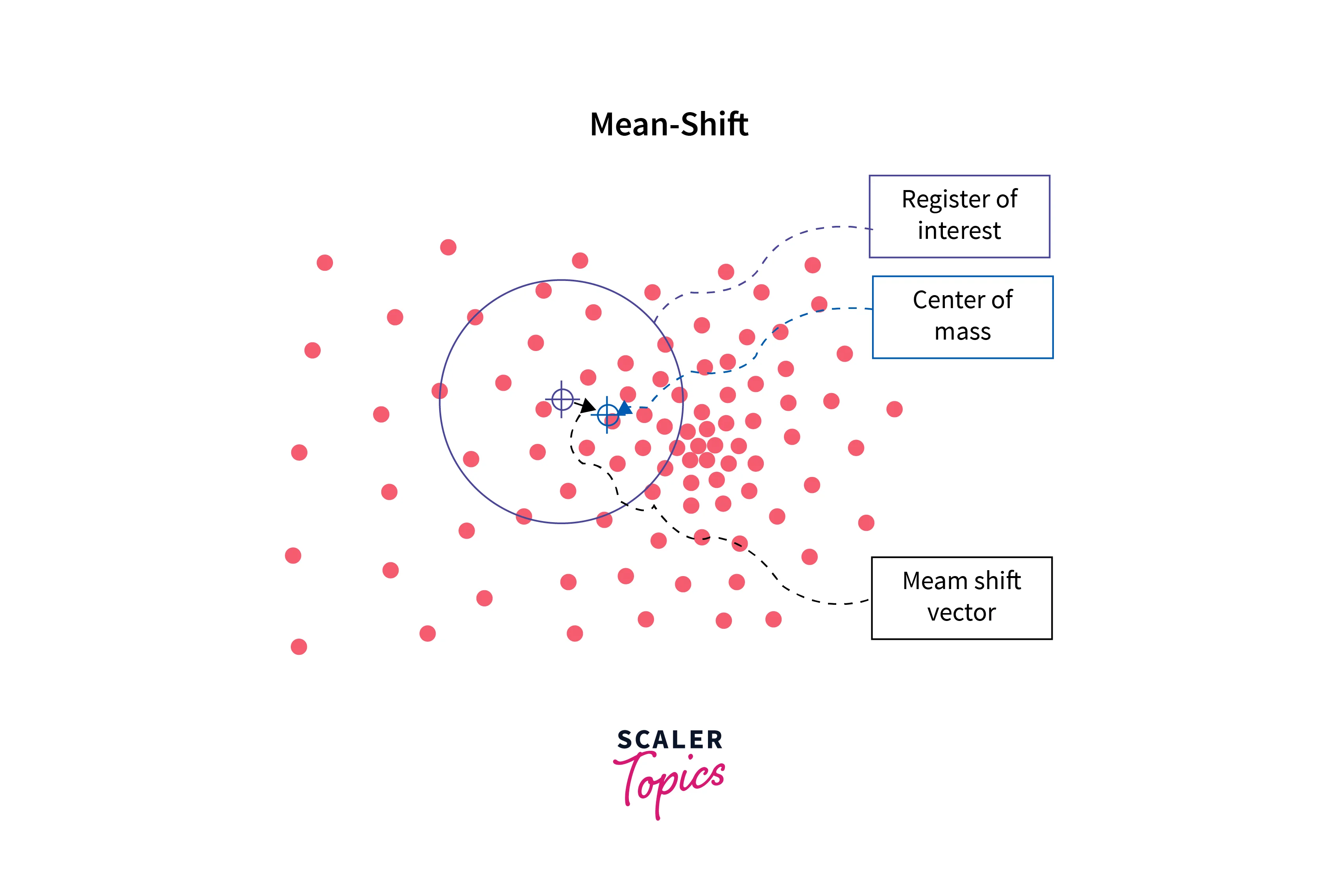

MeanShift Tracker

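MeanShift is a template-based tracker that uses the colour histogram of the ROI in the first frame to track the object in subsequent frames. In each frame, the histogram is back-projected onto the image to give a per-pixel probability of belonging to the object. MeanShift then computes the centroid of that probability inside the current window and shifts the window to it, repeating until the window stops moving. MeanShift is fast, but its window size is fixed and it can be affected by changes in the object's appearance.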
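
To make the window-shifting step concrete, here is a minimal NumPy sketch of a mean shift iteration on a probability map. The function name `mean_shift` and its parameters are illustrative assumptions and not part of OpenCV; in practice `cv2.meanShift` performs this kind of iteration for you.

```python
import numpy as np


def mean_shift(prob, window, max_iter=10):
    """Shift an (x, y, w, h) window toward the local centroid of `prob`."""
    x, y, w, h = window
    rows, cols = np.mgrid[0:h, 0:w]  # pixel coordinates inside the window
    for _ in range(max_iter):
        patch = prob[y:y + h, x:x + w]
        mass = patch.sum()
        if mass == 0:  # nothing to follow inside the window
            break
        # Offset of the probability centroid from the window centre.
        dx = int(round((cols * patch).sum() / mass - (w - 1) / 2))
        dy = int(round((rows * patch).sum() / mass - (h - 1) / 2))
        if dx == 0 and dy == 0:  # converged: the window no longer moves
            break
        # Move the window, keeping it inside the image.
        x = min(max(x + dx, 0), prob.shape[1] - w)
        y = min(max(y + dy, 0), prob.shape[0] - h)
    return x, y, w, h
```

The sketch assumes the initial window lies fully inside the image. Because the window only moves toward probability mass it already overlaps, the object must not jump farther than roughly one window size between frames.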

CAMShift Tracker

CAMShift (Continuously Adaptive Mean Shift) is an extension of the MeanShift tracker that can handle changes in the object's size and orientation. In each frame, CAMShift first runs the mean shift algorithm to find the centre of the back-projected probability distribution, then adjusts the window's size and orientation to fit the object.


KCF Tracker

KCF (Kernelized Correlation Filters) is a feature-based tracker that uses correlation filters to track the object in the video sequence. KCF uses the kernel function to map the features into a higher-dimensional space, where the correlation operation is performed. KCF is more robust to changes in the object's appearance but can be slower than MeanShift and CAMShift trackers.
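
The kernel trick described above can be sketched in a few lines of NumPy. This is a simplified, single-channel, single-frame illustration with a Gaussian kernel, not the code OpenCV runs: the function names, `sigma`, and `lam` are assumptions chosen for the example, and a real tracker adds feature extraction, windowing, and model updates over time.

```python
import numpy as np


def gaussian_label(shape, sigma=2.0):
    """Desired response: a Gaussian peak at the origin, wrapping around the edges."""
    h, w = shape
    ys = np.minimum(np.arange(h), h - np.arange(h))[:, None]
    xs = np.minimum(np.arange(w), w - np.arange(w))[None, :]
    return np.exp(-(ys ** 2 + xs ** 2) / (2 * sigma ** 2))


def gaussian_correlation(x, z, sigma=0.2):
    """Gaussian kernel between z and every cyclic shift of x, computed via the FFT."""
    cross = np.real(np.fft.ifft2(np.conj(np.fft.fft2(x)) * np.fft.fft2(z)))
    dist = (x ** 2).sum() + (z ** 2).sum() - 2.0 * cross
    return np.exp(-np.maximum(dist, 0) / (sigma ** 2 * x.size))


def train(x, y, lam=1e-4):
    """Kernel ridge regression over all cyclic shifts of x (solved in the Fourier domain)."""
    k = gaussian_correlation(x, x)
    return np.fft.fft2(y) / (np.fft.fft2(k) + lam)


def detect(alpha_f, x, z):
    """Response map for a new patch z; the peak location is the estimated shift."""
    k = gaussian_correlation(x, z)
    return np.real(np.fft.ifft2(np.fft.fft2(k) * alpha_f))
```

Training on a patch `x` and calling `detect` on a cyclically shifted copy of it produces a response map whose peak sits at the shift (modulo the patch size), which is how the tracker reads off the object's motion between frames.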


MOSSE Tracker

MOSSE (Minimum Output Sum of Squared Error) is a feature-based tracker that uses correlation filters to track the object in the video sequence. MOSSE is similar to the KCF tracker but uses the Minimum Output Sum of Squared Error (MOSSE) metric to train the correlation filters, which makes it faster than KCF. MOSSE is a lightweight tracker that can be used in real-time applications.
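
As a rough illustration of how such a filter is trained, the sketch below builds a MOSSE-style filter from a single patch with NumPy. It is a simplification under stated assumptions: the function names and the `eps` regularizer are chosen for this example, and the full algorithm also preprocesses the patch, averages over several perturbed training samples, and updates the filter with a learning rate.

```python
import numpy as np


def gaussian_peak(shape, sigma=2.0):
    """Desired filter output: a Gaussian centred on the patch."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2 * sigma ** 2))


def train_mosse(patch, target, eps=1e-3):
    """Closed-form filter (complex conjugate, Fourier domain) minimizing squared output error."""
    F = np.fft.fft2(patch)
    G = np.fft.fft2(target)
    return (G * np.conj(F)) / (F * np.conj(F) + eps)


def locate(filter_conj, patch):
    """Correlate a new patch with the filter and return the (row, col) of the response peak."""
    response = np.real(np.fft.ifft2(np.fft.fft2(patch) * filter_conj))
    return np.unravel_index(np.argmax(response), response.shape)
```

If the object moves between frames, the response peak moves away from the patch centre by the same offset, so the tracker can recentre its window on the peak. Everything is an element-wise operation on FFTs, which is why this family of trackers is fast.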


CSRT Tracker

CSRT (Channel and Spatial Reliability Tracking) is a feature-based tracker that uses spatial and channel information to track the object in the video sequence. CSRT is a more advanced version of the KCF tracker and is more robust to changes in the object's appearance.


Comparison of Different Trackers

The choice of tracker depends on the application's requirements, such as speed, robustness, and accuracy.

MeanShift and CAMShift trackers are fast but can be affected by changes in the object's appearance. KCF and MOSSE trackers are more robust to changes in the object's appearance but can be slower than MeanShift and CAMShift trackers. The CSRT tracker is a more advanced version of the KCF tracker and is more robust to changes in the object's appearance, but it can be slower than the KCF tracker.

| Tracker Algorithm | Speed | Robustness | Accuracy | Parameters |
|---|---|---|---|---|
| MeanShift | Fast | Low | Medium | Window Size |
| CAMShift | Fast | Low | Medium | Window Size, Hue Range |
| KCF | Medium | Medium | High | Kernel Type, Padding |
| MOSSE | Fast | Low | Medium | Learning Rate |
| CSRT | Slow | High | High | Kernel Type, Padding, Channel Weight |

This table compares tracker algorithms based on four criteria: speed, robustness, accuracy, and parameters. The first column lists the names of the tracker algorithms being compared.

- MeanShift: This algorithm is fast but has low robustness and medium accuracy. It relies on a window size parameter to define the target region.

- CAMShift: Similar to MeanShift, CAMShift is fast but lacks robustness and offers medium accuracy. It uses window size and hue range parameters to track objects.

- KCF: This algorithm offers medium speed and medium robustness but excels in accuracy. It lets users specify the kernel type and padding for better tracking performance.

- MOSSE: MOSSE is a fast algorithm with low robustness and medium accuracy. It relies on a learning rate parameter to improve tracking results.

- CSRT: While CSRT is slow, it compensates with high robustness and accuracy. It offers options to specify kernel type, padding, and channel weight, allowing for fine-tuning of tracking behaviour.

How to Track Multiple Objects in OpenCV?

To track multiple objects in OpenCV, we can run the same tracking algorithm on each object separately. Object detection algorithms such as Haar cascades or HOG+SVM can find the objects in the video sequence, and the MultiTracker API can then manage one tracker per detected object.

Here's an example implementation of multiple object tracking using OpenCV's MultiTracker API:

In the first part of the code, we import the OpenCV library and initialize a MultiTracker object using the cv2.MultiTracker_create() method. In OpenCV 4.5.1 and later, this function lives in the cv2.legacy module of the opencv-contrib-python package, so the call becomes cv2.legacy.MultiTracker_create(). We then open a video file using the cv2.VideoCapture() method and define a list of tracker types we will use in our implementation. The available tracker types in OpenCV are 'BOOSTING', 'MIL', 'KCF', 'TLD', 'MEDIANFLOW', 'GOTURN', 'MOSSE', and 'CSRT'.

In this part of the code, we read the first frame of the video using the cap.read() method and select a region of interest (ROI) using the cv2.selectROI() method. The ROI is a rectangular region that defines the object we want to track.

We then initialize a tracker using the selected ROI and the cv2.TrackerMOSSE_create() method. MOSSE stands for Minimum Output Sum of Squared Error, which is a fast, lightweight tracking algorithm. We add the tracker to the list of trackers using the trackers.append(tracker) method.

In this part of the code, we loop through the frames of the video using a while loop. We read each frame using the cap.read() method and update the trackers using the multi_tracker.update(frame) method. The update() method returns a Boolean value indicating whether the tracking was successful or not, and a list of bounding boxes around the tracked objects.

We then draw a rectangle around each tracked object using the cv2.rectangle() method and display the frame using the cv2.imshow() method. We exit the loop if the user presses the 'q' key using the cv2.waitKey() method.

In the final part of the code, we release the video file using the cap.release() method and close the window using the cv2.destroyAllWindows() method.


Implementing Different Object Tracking Algorithms in OpenCV

In this section, let's implement the MeanShift and CAMShift object tracking algorithms using Python.

Meanshift Algorithm

Here is the code for the Meanshift Algorithm:

Import OpenCV and NumPy to implement the Meanshift Algorithm.

Step 1: Load the input video using the cv2.VideoCapture() method.

Step 2: Initialize the tracker

Initialize the tracker by specifying the initial location of the object to be tracked using a bounding box. Set the termination criteria for the Mean Shift algorithm.

Step 3: Extract the region of interest (ROI)

Extract the region of interest (ROI) from the first frame of the video using the bounding box coordinates. Convert the ROI to the HSV color space.

Step 4: Calculate the histogram of the ROI

Calculate the histogram of the ROI in the Hue channel. Normalize the histogram to a range of 0 to 255.

Step 5: Convert each frame to the HSV color space and backproject the histogram

Convert each frame of the video to the HSV color space. Backproject the histogram over the entire frame to obtain a probability distribution.

Step 6: Apply the Mean Shift algorithm

Apply the Mean Shift algorithm to the probability distribution to obtain the new location of the object.

Step 7: Draw a rectangle around the new location of the object

Step 8: Close the windows


Camshift Algorithm

The Continuously Adaptive Mean Shift (CAMShift) algorithm is a color-based object tracking algorithm that extends the Mean Shift algorithm. It back-projects the histogram of the target object's color distribution onto each frame and uses the result to track the object in subsequent frames.

Here is the code to implement the CAMShift object tracking algorithm in OpenCV Python:

We start by importing the OpenCV module to implement the camshift algorithm.

Step 1: Load the video or image sequence and initialize the video writer if needed.

Step 2: Select the object to be tracked by drawing a bounding box around it.

Step 3: Convert the selected region to the HSV color space.

Step 4: Calculate the histogram of the selected region in the Hue channel.

Step 5: Normalize the histogram to a range of 0 to 255.

Step 6: Backproject the histogram over the entire frame to obtain a probability distribution.

Step 7: Apply the CAMshift algorithm to the probability distribution to obtain the new location of the object.

Step 8: Draw a rectangle around the new location of the object.

Step 9: Display the frame

Step 10: Close all the windows


Conclusion

- Object tracking is a challenging task in computer vision, but OpenCV provides a variety of object tracking algorithms that can be used in different applications.

- In this blog post, we discussed different object tracking algorithms available in OpenCV, their implementation, and how to track multiple objects in OpenCV.

- We also compared different trackers based on their speed, robustness, and accuracy.

- By using object tracking algorithms, we can detect and track moving objects in a video sequence, which can be useful in various applications such as security, surveillance, and self-driving cars.