
Lecture Set 2, EE 679, Copyright 2000, Sanjay K. Bose

Review of Probability Theory

Conditional Probability

$P\{B \mid A\}$ = P{event B occurs given that A has already occurred}

If $B \perp A$ (i.e. event B is independent of A), then $P\{B \mid A\} = P\{B\}$, since the occurrence of A does not affect the chances of occurrence of B.

Bayes Rule

Denoting $P\{AB\}$ = P{events A and B both occur}, we get that

$$P\{AB\} = P\{A\}\,P\{B \mid A\} = P\{B\}\,P\{A \mid B\}$$

This leads to Bayes Rule

$$P\{B \mid A\} = \frac{P\{B\}\,P\{A \mid B\}}{P\{A\}}$$

This relationship is extremely useful in probability calculations, such as in changing the conditioning of events (for example, changing from a priori to a posteriori probabilities).

Mutually Exclusive Events

Note that if A and B are mutually exclusive events, then $P\{AB\} = 0$, as in that case, probabilistically, events A and B do not occur together.

We define $P\{A \cup B\}$ as the probability of the union of events A and B. This is the event where either A occurs or B occurs or both occur. By definition,

$$P\{A \cup B\} = P\{A\} + P\{B\} - P\{AB\}$$

$P\{A \cup B\} = P\{A\} + P\{B\}$ if A and B are mutually exclusive events

$P\{A \cup B\} = P\{A\} + P\{B\} - P\{A\}P\{B\}$ if $A \perp B$, i.e. A and B are independent events

Complementary Event

For an event A, the complementary event $A^C$ refers to the event where A does not occur.

$$P\{A^C\} = 1 - P\{A\}$$

Note also that, for any event B,

$$P\{B\} = P\{B \mid A\}P\{A\} + P\{B \mid A^C\}P\{A^C\}$$
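As a hedged illustration of how Bayes Rule and the relation P{B} = P{B|A}P{A} + P{B|A^C}P{A^C} combine to move from a priori to a posteriori probabilities, the Python sketch below considers a hypothetical binary channel. The function name `posterior` and every numerical value are assumptions made for this example; none of them come from the lecture notes.

```python
def posterior(prior_b, p_a_given_b, p_a_given_not_b):
    """Return P{B|A} from the a priori P{B}, P{A|B} and P{A|B^C}."""
    # Total probability over B and its complement gives P{A}.
    p_a = p_a_given_b * prior_b + p_a_given_not_b * (1.0 - prior_b)
    # Bayes Rule: P{B|A} = P{B} P{A|B} / P{A}
    return prior_b * p_a_given_b / p_a


# Hypothetical channel: B = "a 1 was sent", A = "a 1 was received".
p_sent_1 = 0.4          # a priori P{B} (assumed value)
p_rx1_given_tx1 = 0.9   # P{A|B} (assumed value)
p_rx1_given_tx0 = 0.2   # P{A|B^C} (assumed value)

p_post = posterior(p_sent_1, p_rx1_given_tx1, p_rx1_given_tx0)
print(f"P{{1 sent | 1 received}} = {p_post:.4f}")
```

With these assumed numbers the a posteriori probability works out to 0.36/0.48 = 0.75. When P{A|B} equals P{A|B^C}, observing A carries no information and the function returns the a priori value unchanged.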

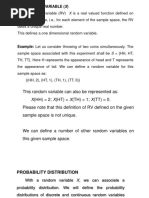

Discrete Random Variables

A discrete random variable X takes on discrete values $x_i$ with probabilities $P\{X = x_i\} > 0$ for i = 1, 2, 3, ... and $P\{X \in (x_1, x_2, \ldots)\} = 1$.

Examples of Distributions for Discrete Random Variables

1. Binomial Distribution

$$P\{X = x\} = \binom{n}{x} p^x (1-p)^{n-x} \qquad x = 0, 1, \ldots, n$$

2. Poisson Distribution

$$P\{X = x\} = \frac{\lambda^x e^{-\lambda}}{x!} \qquad \text{for } x = 0, 1, 2, \ldots$$
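The two probability mass functions above can be evaluated directly. The following is a minimal Python sketch, with the function names `binomial_pmf` and `poisson_pmf` and the parameter values chosen here purely for illustration. It checks that each distribution sums to (approximately) one.

```python
from math import comb, exp, factorial


def binomial_pmf(x, n, p):
    """P{X = x} for a Binomial(n, p) random variable."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def poisson_pmf(x, lam):
    """P{X = x} for a Poisson random variable with parameter lam."""
    return lam**x * exp(-lam) / factorial(x)


n, p = 10, 0.3      # assumed example parameters
lam = 2.5           # assumed example parameter

total_binomial = sum(binomial_pmf(x, n, p) for x in range(n + 1))
# The Poisson sum is infinite; 60 terms is ample for lam = 2.5.
total_poisson = sum(poisson_pmf(x, lam) for x in range(60))
mean_binomial = sum(x * binomial_pmf(x, n, p) for x in range(n + 1))

print(total_binomial, total_poisson, mean_binomial)
```

The last quantity is the mean of the binomial distribution computed from its pmf, which should agree with np up to floating-point rounding.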


Continuous Random Variables

In this case, the random variable X is not limited to discrete values but can take on any value x in a range $[x_1, x_2]$, i.e. $x \in [x_1, x_2]$, where the probability of the random variable X lying between x and x+dx is given by

$$P\{x \le X \le x+dx\} = f_X(x)\,dx$$

where $f_X(x)$ is referred to as the Probability Density Function (pdf) of the random variable X.

The Cumulative Distribution Function $F_X(x)$ may also be used to describe the probability distribution of a continuous random variable. This is defined as

$$F_X(x) = P\{X \le x\} = \int_{-\infty}^{x} f_X(x)\,dx$$

Note also that $f_X(x) = \dfrac{dF_X(x)}{dx}$.

Examples of Distributions of Continuous Random Variables

1. Normal Distribution

$$f_X(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}} \qquad \text{for } -\infty < x < \infty$$

2. Uniform Distribution

$$f_X(x) = \begin{cases} \dfrac{1}{b-a} & \text{for } a \le x \le b \\ 0 & \text{otherwise} \end{cases}$$

3. Exponential Distribution

$$f_X(x) = \begin{cases} \lambda e^{-\lambda x} & \text{for } 0 \le x < \infty \\ 0 & \text{otherwise} \end{cases}$$
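The relation between a pdf and its cumulative distribution function can be checked numerically. The sketch below is only an illustration under assumed parameter values: it integrates the normal pdf over a wide range (the area should be close to one) and integrates the exponential pdf from 0 to x, comparing the result with the closed form 1 - e^{-λx}. The helper `integrate` is a plain trapezoidal rule written for this example.

```python
from math import exp, pi, sqrt


def normal_pdf(x, mu, sigma):
    return exp(-((x - mu) ** 2) / (2 * sigma**2)) / (sqrt(2 * pi) * sigma)


def exponential_pdf(x, lam):
    return lam * exp(-lam * x) if x >= 0 else 0.0


def integrate(f, a, b, steps=20_000):
    """Trapezoidal approximation of the integral of f over [a, b]."""
    h = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for k in range(1, steps):
        total += f(a + k * h)
    return total * h


mu, sigma, lam, x = 1.0, 2.0, 2.0, 1.5   # assumed example values

# Total area under the normal pdf, truncated at mu +/- 10 sigma.
normal_area = integrate(lambda v: normal_pdf(v, mu, sigma), mu - 10 * sigma, mu + 10 * sigma)
# F_X(x) for the exponential distribution, by integrating its pdf.
exp_cdf_numeric = integrate(lambda v: exponential_pdf(v, lam), 0.0, x)
exp_cdf_closed = 1.0 - exp(-lam * x)

print(normal_area, exp_cdf_numeric, exp_cdf_closed)
```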

Memoryless Property

Let the random variable $X \ge 0$ be the length of service provided to a customer when service starts from the time instant t=0. Consider a customer who is still in service at time t and let {(X-t) | X>t} be the remaining service time for that customer. [Note that this random variable is the remaining service time when the customer is examined at time t, given (of course) that the customer is still in service at time t, i.e. the customer's service time X is greater than t.]

Note that we can write

$$P\{(X-t)>x,\ X>t\} = P\{(X-t)>x \mid X>t\}\,P\{X>t\}$$

and that trivially $P\{(X-t)>x,\ X>t\} = P\{(X-t)>x\}$, since x and t are both positive (so that X > x+t already implies X > t). Therefore

$$P\{(X-t)>x \mid X>t\} = \frac{P\{(X-t)>x\}}{P\{X>t\}} = \frac{P\{X>x+t\}}{P\{X>t\}} = \frac{1-F_X(t+x)}{1-F_X(t)}$$

If the service distribution is memoryless, then when we examine the customer (who started service at t=0 and is still in service) at time t, the service given in the past during the interval (0,t) is forgotten! If this is indeed the case, then it follows that

$$P\{(X-t)>x \mid X>t\} = P\{X>x\} = 1-F_X(x)$$

Using this, we get that for a memoryless distribution,

$$[1-F_X(t+x)] = [1-F_X(x)][1-F_X(t)]$$

The Exponential Distribution is an example of a memoryless distribution. Note that for this distribution,


$$f_X(x) = \lambda e^{-\lambda x} \quad \text{and} \quad F_X(x) = 1 - e^{-\lambda x} \quad \text{for } x \ge 0$$

Therefore,

$$[1-F_X(t+x)] = e^{-\lambda(t+x)} = e^{-\lambda x}e^{-\lambda t} = [1-F_X(x)][1-F_X(t)]$$

as required by the Memoryless Property.

For an integer valued random variable X = {0, 1, 2, ...}, the corresponding memoryless distribution is the Geometric Distribution, where $P\{X=n\} = q^n(1-q)$, leading to $P\{X \ge x\} = q^x$ for x = 0, 1, 2, ... This may be verified by noting that $P\{X \ge x+N\} = q^{x+N} = q^x q^N = P\{X \ge x\}P\{X \ge N\}$, as required.
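The memoryless condition can be tested numerically for any distribution whose tail probability is known. The sketch below, with assumed parameter values and a helper name `conditional_tail` introduced only for this example, evaluates the conditional tail for the exponential and geometric distributions and, as a contrast, for a uniform distribution on [0, b], which is not memoryless.

```python
from math import exp, isclose


def conditional_tail(tail, t, x):
    """Tail probability at t+x divided by the tail probability at t."""
    return tail(t + x) / tail(t)


lam, q, b = 0.5, 0.8, 10.0   # assumed example parameters


def exp_tail(x):
    return exp(-lam * x)      # exponential: 1 - F_X(x)


def geo_tail(x):
    return q**x               # geometric: P{X >= x}


def uni_tail(x):
    return 1.0 - x / b        # uniform on [0, b], valid for 0 <= x <= b


t, x = 2, 3
exp_ok = isclose(conditional_tail(exp_tail, t, x), exp_tail(x))
geo_ok = isclose(conditional_tail(geo_tail, t, x), geo_tail(x))
uni_ok = isclose(conditional_tail(uni_tail, t, x), uni_tail(x))
print(exp_ok, geo_ok, uni_ok)
```

The first two comparisons should hold and the third should not: for the uniform case the conditional tail is 0.5/0.8 = 0.625, whereas the unconditional tail at x = 3 is 0.7.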

Note that the memoryless property of the exponential and geometric distributions makes them easy to handle. These are therefore very useful in the analytical modeling of queuing systems and computer communications.

Joint Distributions

The joint distribution for continuous random variables X and Y is given in the following form:

cumulative distribution function (cdf)

$$F_{XY}(x,y) = P\{X \le x,\ Y \le y\}$$

probability density function (pdf)

$$f_{XY}(x,y) = \frac{\partial^2 F_{XY}(x,y)}{\partial x\,\partial y}, \qquad f_{XY}(x,y)\,dx\,dy = P\{x \le X \le x+dx,\ y \le Y \le y+dy\}$$

Note that

$$F_Y(y) = F_{XY}(\infty, y) \qquad F_X(x) = F_{XY}(x, \infty)$$

$$f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dy \quad \text{and} \quad f_Y(y) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dx$$

Note also that if $X \perp Y$, then $f_{XY}(x,y) = f_X(x)f_Y(y)$ and $F_{XY}(x,y) = F_X(x)F_Y(y)$.

Functions of Random Variables

For a random variable X, U=u(X) may be defined as another random variable which is a function of the random variable X. If u(x) is differentiable and monotone, then the pdf of the random variable U may be easily found as $f_U(u) = f_X(x)/|u'(x)|$ or $f_U(u)|du| = f_X(x)|dx|$.

If u(x) is not monotone, then one has to be more careful, as the function $X = u^{-1}(U)$ may have multiple roots and these should be accounted for while finding $f_U(u)$. If u(x) is not differentiable at some point in the range, then delta functions will arise in the pdf of U.

Example: Consider $u(x) = x^2$ to generate the random variable U from the random variable X, where $X \in [-1, 1]$.

From the form of the function u(x) and the range of X, we can see that $f_U(u)\,du = f_X(x)|dx| + f_X(-x)|dx|$. Since $du = 2x\,dx$, we get that $|dx| = du/(2\sqrt{u})$. Therefore,

$$f_U(u) = \frac{f_X(\sqrt{u}) + f_X(-\sqrt{u})}{2\sqrt{u}}$$


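If we consider the case where the random variable X is uniformly distributed in [-1, 1], then

$$f_X(x) = \begin{cases} 0.5 & \text{for } -1 \le x \le 1 \\ 0 & \text{otherwise} \end{cases}$$

This will then give

$$f_U(u) = \frac{1}{2\sqrt{u}} \quad \text{and} \quad F_U(u) = \sqrt{u} \quad \text{for } 0 \le u \le 1$$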
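The result for U = X² with X uniform in [-1, 1] can be sanity-checked by simulation. The following Python sketch is a Monte Carlo check, not part of the original derivation; the function name `empirical_cdf_of_square`, the sample size and the seed are arbitrary choices made here. It estimates P{U ≤ u} from samples and compares it with the analytical value √u.

```python
import random
from math import sqrt


def empirical_cdf_of_square(u, samples=200_000, seed=1):
    """Estimate P{X^2 <= u} for X uniform in [-1, 1] by simulation."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(samples) if rng.uniform(-1.0, 1.0) ** 2 <= u)
    return hits / samples


for u in (0.04, 0.25, 0.81):
    print(u, empirical_cdf_of_square(u), sqrt(u))
```

Each estimate should lie close to √u; the residual difference is sampling noise, which shrinks as the number of samples grows.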

This approach may be extended using the Jacobian for the case of functions of more than one variable. As an example of this, consider z=g(x,y) and w=h(x,y). [Note that if only one function is given, then the second function may be arbitrarily defined.]

Let $g(x_i, y_i) = z$ and $h(x_i, y_i) = w$, i.e. $(x_i, y_i)$ are the real solutions to these equations for given (z,w).

The Jacobian J(x,y) is then defined as

$$J(x,y) = \begin{vmatrix} \dfrac{\partial g}{\partial x} & \dfrac{\partial g}{\partial y} \\[2mm] \dfrac{\partial h}{\partial x} & \dfrac{\partial h}{\partial y} \end{vmatrix}$$

which may be used to get

$$f_{ZW}(z,w) = \frac{f_{XY}(x_1,y_1)}{|J(x_1,y_1)|} + \cdots + \frac{f_{XY}(x_n,y_n)}{|J(x_n,y_n)|} + \cdots$$

where $\{x_i, y_i\}$, i = 1, 2, ..., n, ... are the roots of z=g(x,y) and w=h(x,y).

Note that if there are no real solutions for some values of (z,w), then for these $f_{ZW}(z,w) = 0$.
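A short worked case may help fix the notation. The choice z = x + y, w = x - y below is an assumption made purely for illustration; it is not an example taken from the lecture notes. For given (z, w) there is exactly one real solution,

$$x_1 = \frac{z+w}{2}, \qquad y_1 = \frac{z-w}{2}$$

and the Jacobian is constant:

$$J(x,y) = \begin{vmatrix} 1 & 1 \\ 1 & -1 \end{vmatrix} = -2, \qquad |J(x_1,y_1)| = 2$$

so that

$$f_{ZW}(z,w) = \frac{1}{2}\, f_{XY}\!\left(\frac{z+w}{2},\ \frac{z-w}{2}\right)$$

Integrating out w (with the substitution x = (z+w)/2, dw = 2 dx) gives the pdf of the sum alone:

$$f_Z(z) = \int_{-\infty}^{\infty} f_{ZW}(z,w)\,dw = \int_{-\infty}^{\infty} f_{XY}(x,\ z-x)\,dx$$

Here w plays the role of the arbitrarily defined second function when only z = x + y is of interest.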


Using Expectations and Transforms

Expectations

If X is a random variable, then we can define expectations for various functions of X as

$$\overline{g(X)} = E\{g(X)\} = \begin{cases} \displaystyle\int_{-\infty}^{\infty} g(x) f_X(x)\,dx & \text{for Continuous Random Variables} \\[3mm] \displaystyle\sum_{x} g(x) P\{X=x\} & \text{for Discrete Random Variables} \end{cases}$$

Some useful results:

E{cg(X)} = cE{g(X)}

E{g(X)+h(Y)} = E{g(X)} + E{h(Y)}

For $X \perp Y$, E{g(X)h(Y)} = E{g(X)}E{h(Y)}

The nth moment of the random variable X is defined as $E\{X^n\} = \overline{X^n}$. Specifically,

$\overline{X}$ = mean

$\sigma_X^2$ = variance = $E\{(X-\overline{X})^2\} = \overline{X^2} - (\overline{X})^2$

$\sigma_X$ is referred to as the standard deviation of X.
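As a small illustration of these definitions for a discrete random variable, the Python sketch below computes the mean, the second moment and the variance of a fair six-sided die, and checks that the two expressions for the variance agree. The die example and the helper name `expectation` are assumptions introduced here; exact fractions are used so that the comparison is not affected by rounding.

```python
from fractions import Fraction


def expectation(pmf, g=lambda x: x):
    """E{g(X)} = sum over x of g(x) P{X = x} for a discrete pmf given as a dict."""
    return sum(g(x) * p for x, p in pmf.items())


# Hypothetical example: a fair die, P{X = x} = 1/6 for x = 1..6.
die = {x: Fraction(1, 6) for x in range(1, 7)}

mean = expectation(die)                                       # first moment
second_moment = expectation(die, lambda x: x * x)             # second moment
var_from_moments = second_moment - mean**2                    # second moment minus squared mean
var_direct = expectation(die, lambda x: (x - mean) ** 2)      # E{(X - mean)^2}

print(mean, second_moment, var_from_moments, var_direct)
```

For the fair die the mean is 7/2, the second moment is 91/6 and both variance expressions give 35/12.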

Note that $\sigma_X$ is really indicative of the dispersion of the random variable X around its mean.

Laplace Transform

This is a convenient tool to use for continuous random variables X such that $X \ge 0$ and is defined as

$$\tilde{F}_X(s) = L(f_X(x)) = E\{e^{-sX}\} = \int_0^\infty e^{-sx} f_X(x)\,dx$$

Some useful properties of this transform are:

(a) Moment Generating Property

$$\overline{X^n} = (-1)^n \left.\frac{d^n \tilde{F}_X(s)}{ds^n}\right|_{s=0}$$

(b) Given a transform, inverting it will provide the corresponding pdf $f_X(x)$ of X.

(c) Multiplication in the Transform Domain corresponds to Convolution in the random variable domain and vice versa. For example,

$$L\big(f_1(x) * f_2(x)\big) = L\left(\int_0^x f_1(\tau) f_2(x-\tau)\,d\tau\right) = \tilde{F}_1(s)\tilde{F}_2(s)$$

$$L^{-1}\big(\tilde{F}_1(s) * \tilde{F}_2(s)\big) = f_1(x) f_2(x)$$

(d) Transform of the sum of independent random variables = Product of the individual transforms. If random variables X and Y are such that $X \perp Y$, then we can show that


$$\tilde{F}_{X+Y}(s) = E\{e^{-s(X+Y)}\} = \tilde{F}_X(s)\tilde{F}_Y(s)$$
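As a worked illustration of properties (a) and (d), consider the exponential distribution with parameter λ. This particular example is added here and is not part of the original notes. Its transform is

$$\tilde{F}_X(s) = \int_0^\infty e^{-sx}\,\lambda e^{-\lambda x}\,dx = \frac{\lambda}{s+\lambda}$$

The moment generating property then gives

$$\overline{X} = -\left.\frac{d}{ds}\frac{\lambda}{s+\lambda}\right|_{s=0} = \left.\frac{\lambda}{(s+\lambda)^2}\right|_{s=0} = \frac{1}{\lambda}, \qquad \overline{X^2} = \left.\frac{d^2}{ds^2}\frac{\lambda}{s+\lambda}\right|_{s=0} = \left.\frac{2\lambda}{(s+\lambda)^3}\right|_{s=0} = \frac{2}{\lambda^2}$$

so that the variance is $\overline{X^2} - (\overline{X})^2 = 1/\lambda^2$. For the sum of two independent random variables X and Y, each exponential with the same parameter λ, property (d) gives

$$\tilde{F}_{X+Y}(s) = \left(\frac{\lambda}{s+\lambda}\right)^2$$

which is the transform of the pdf $\lambda^2 x e^{-\lambda x}$ for x ≥ 0, i.e. the convolution of the two exponential pdfs.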

Characteristic Function (Fourier Transform)

This type of transform is useful for continuous random variables where X may take on negative values, i.e. $-\infty \le X \le \infty$. This is defined as

$$\Phi_X(\omega) = E\{e^{j\omega X}\} = F[f_X(x)]$$

i.e. the Fourier Transform of $f_X(x)$.

Properties similar to those described for Laplace Transforms above are also applicable here.

(a) Moment Generating Property

$$\overline{X^n} = (-j)^n \left.\frac{d^n \Phi_X(\omega)}{d\omega^n}\right|_{\omega=0}$$

(b) Multiplication in the Transform Domain corresponds to Convolution in the random variable domain and vice versa.

(c) The characteristic function of the sum of independent random variables is the product of the characteristic functions of the individual random variables.

Generating Function or Probability Generating Function (Z-Transform of the Probability Distribution)

This transform is used for a discrete random variable X such that $X \ge 0$. It is defined as

$$G_X(z) = E\{z^X\} = \sum_{i=0}^{\infty} p_i z^i = Z[P\{X=i\}] \qquad \text{where } p_i = P\{X=i\}$$

This also has properties similar to the transforms given earlier.

(a) Moment Generating Property

$$\overline{X} = G_X'(1), \qquad \overline{X(X-1)} = G_X''(1), \quad \ldots \text{ etc.}$$

(b) If $X_1 \perp X_2$ and $Y = X_1 + X_2$, then $G_Y(z) = G_{X_1}(z)G_{X_2}(z)$ and

$$p_Y(y) = P\{Y=y\} = p_{X_1+X_2}(y) = \sum_{x_1=0}^{y} p_{X_1}(x_1)\,p_{X_2}(y-x_1)$$
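Property (b) can be checked numerically on small distributions. In the Python sketch below, the two pmfs are hypothetical values chosen for illustration, and `convolve_pmfs` and `pgf` are helper names introduced here. The code forms the pmf of Y = X1 + X2 by discrete convolution and compares the generating function of Y with the product of the individual generating functions at an arbitrary point z.

```python
def convolve_pmfs(p1, p2):
    """pmf of X1 + X2 for independent X1, X2; p[i] holds P{X = i}."""
    out = [0.0] * (len(p1) + len(p2) - 1)
    for i, a in enumerate(p1):
        for j, b in enumerate(p2):
            out[i + j] += a * b
    return out


def pgf(pmf, z):
    """G_X(z) = sum over i of p_i z^i."""
    return sum(p * z**i for i, p in enumerate(pmf))


p_x1 = [0.5, 0.5]          # assumed pmf of X1 on {0, 1}
p_x2 = [0.2, 0.3, 0.5]     # assumed pmf of X2 on {0, 1, 2}
p_y = convolve_pmfs(p_x1, p_x2)

z = 0.7                    # arbitrary evaluation point
print(p_y)
print(pgf(p_y, z), pgf(p_x1, z) * pgf(p_x2, z))
```

The two printed generating function values should agree up to floating-point rounding, and the entries of `p_y` should sum to one.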

Covariance & Correlation

Consider the random variables X and Y.

$$\sigma_{X+Y}^2 = E\{(X+Y-\overline{X}-\overline{Y})^2\} = E\{(X-\overline{X})^2\} + E\{(Y-\overline{Y})^2\} + 2E\{(X-\overline{X})(Y-\overline{Y})\} = \sigma_X^2 + \sigma_Y^2 + 2\,Cov(X,Y)$$

Note that the Covariance of X and Y, Cov(X, Y), is defined as

$$Cov(X,Y) = E\{(X-\overline{X})(Y-\overline{Y})\} = \overline{XY} - \overline{X}\cdot\overline{Y}$$

(a) If $X \perp Y$, then Cov(X, Y) = 0 since $\overline{XY} = \overline{X}\cdot\overline{Y}$.

(b) If Cov(X, Y) = 0, then the random variables X and Y are uncorrelated.

Note that $X \perp Y$ implies Cov(X, Y) = 0. However, Cov(X, Y) = 0 does not imply $X \perp Y$, but only the much weaker condition that X and Y are uncorrelated.
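A standard small example shows that zero covariance does not imply independence. The construction below is an illustration added here, not taken from the notes: X takes the values -1, 0, 1 with equal probability and Y = X². The Python sketch computes the covariance exactly and then compares a joint probability with the product of the corresponding marginals.

```python
from fractions import Fraction

third = Fraction(1, 3)
# Joint pmf P{X = x, Y = y} for the assumed example Y = X^2.
joint = {(-1, 1): third, (0, 0): third, (1, 1): third}


def expect(g):
    """E{g(X, Y)} under the joint pmf above."""
    return sum(g(x, y) * p for (x, y), p in joint.items())


mean_x = expect(lambda x, y: x)
mean_y = expect(lambda x, y: y)
cov = expect(lambda x, y: (x - mean_x) * (y - mean_y))

p_x1 = sum(p for (x, _), p in joint.items() if x == 1)   # P{X = 1}
p_y1 = sum(p for (_, y), p in joint.items() if y == 1)   # P{Y = 1}
p_x1_y1 = joint.get((1, 1), Fraction(0))                 # P{X = 1, Y = 1}

print(cov, p_x1_y1, p_x1 * p_y1)
```

The covariance is exactly zero, yet P{X=1, Y=1} = 1/3 differs from P{X=1}P{Y=1} = 2/9, so X and Y are uncorrelated but not independent.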


Stochastic (Random) Processes

Definition of a Stochastic Process: $\{X(t);\ t \in T\}$ is a stochastic process if X(t) is a random variable for each t in the index set T. Usually t indicates time.

Different ways of classifying Stochastic Processes:

Continuous Time Processes: when T is an interval and all times in that interval are possible choices for t.

Discrete Time Processes: when T is a set of discrete time points with $X_n = X(t_n)$ constituting the process.

Continuous State Process: when X(t) can have a continuum of values, possibly within a fixed range.

Discrete State Process: when X(t) can only have one of a discrete set of values.

Note that X(t)=x implies that the random process is in state x at time t.

The Stochastic Process X(t) is said to be well defined if the joint distribution of the random variables $X(t_1), X(t_2), \ldots, X(t_k)$ can be determined for every finite set of time instants $t_1, t_2, \ldots, t_k$.

In a stochastic sense, the random process is completely specified if the joint distribution

$$F_{\tilde{X}}(\tilde{x}; \tilde{t}) = P\{X(t_1) \le x_1, \ldots, X(t_n) \le x_n\}$$

exists for

(a) all $\tilde{x} = (x_1, \ldots, x_n)$

(b) all $\tilde{t} = (t_1, \ldots, t_n)$

(c) all possible values of n

If $F_{\tilde{X}}(\tilde{x}; \tilde{t})$ is known as above, then all possible stochastic dependencies between sample values of X(t) may be found. This, however, is usually hard to get. Typically, only some limited dependencies will be known and different Stochastic Processes are classified based on these known dependencies. Examples of this leading to special processes are given next.

(a) Independent Process: For this type of process, $\{X(t_n)\}$ or $\{X_n\}$ are independent random variables, and therefore,

$$f_{\tilde{X}}(\tilde{x}; \tilde{t}) = \prod_{i=1}^{n} f_{X_i}(x_i; t_i)$$

Note that this is really a trivial case of a process, as there are no dependencies between the various $X_i$'s.

(b) Stationary Processes: These are processes where the joint distribution of the random variables corresponding to a set of time points is invariant to a time shift of all the time points. The process is considered Strictly Stationary if the property holds for any choice of the number of time points. If the property holds for any choice of n time points or less, but not for any choice of n+1 time points, then the process is referred to as being Stationary of Order n. The process is referred to as being Wide Sense Stationary (WSS) if (i) E{X(t)} is independent of t and (ii) $E\{X(t)X(t+\tau)\}$ depends only on $\tau$ and not on t. Stationary Processes will not be used in our description of queues and will not be considered further here.

(c) Markov Processes: Markov Processes are ones for which the Markov Property (given below) holds. This property states that


$$P\{X(t_{n+1}) = x_{n+1} \mid X(t_n) = x_n, \ldots, X(t_1) = x_1\} = P\{X(t_{n+1}) = x_{n+1} \mid X(t_n) = x_n\}$$

Note that when this property is satisfied, the state of the process/system at time instant $t_{n+1}$ depends only on the state of the process/system at the previous instant $t_n$ and not on any of the earlier time instants. Alternatively, one can say that a process is termed a Markov Process if, given the present state of the process, its future evolution is independent of the past of the process. This effectively implies a one-step dependence feature for the Markov Process, where older values are forgotten.

Restricted versions of this property lead to special cases, such as

(a) Markov Chains over a Discrete State Space

(b) Discrete-Time and Continuous-Time Markov Processes and Markov Chains

Markov Chains: The discrete random variables $\{X_n\}$ form a Markov Chain if the probability that the next state is $x_{n+1}$ depends only on the current state $x_n$ and not on any previous values. For the Discrete Time case, state changes are pre-ordained to occur only at the integer points 0, 1, 2, ..., n (that is, at the time points $t_0, t_1, t_2, \ldots, t_n$). For the Continuous Time case, state changes may occur anywhere in time.

Homogeneous Markov Chain: A Homogeneous Markov Chain is one where the transition probabilities $P\{X_{n+1}=j \mid X_n=i\}$ are the same for all n. Note that one can then write

Transition Probability from state i to state j = $p_{ij} = P\{X_{n+1}=j \mid X_n=i\} \quad \forall n$

It should be noted that for a homogeneous Markov chain, the transition probability depends only on the terminal states (i.e. the initial state i and the final state j) but does not depend on when the transition $(i \to j)$ actually occurs.
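For a homogeneous discrete-time Markov chain, the transition probabilities p_ij can be collected into a matrix and used to advance the state probabilities one step at a time. The Python sketch below is a minimal illustration under assumed values: the two-state matrix `P`, the starting distribution and the helper names `step` and `n_step` are all hypothetical and are not taken from the notes.

```python
def step(dist, P):
    """One transition: new_dist[j] = sum over i of dist[i] * p_ij."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def n_step(dist, P, n):
    """Apply the same transition matrix n times (homogeneous chain)."""
    for _ in range(n):
        dist = step(dist, P)
    return dist


# Assumed transition matrix: row i holds p_ij for j = 0, 1; each row sums to 1.
P = [[0.9, 0.1],
     [0.5, 0.5]]
start = [1.0, 0.0]          # the chain starts in state 0 with probability 1

after_one = n_step(start, P, 1)
after_many = n_step(start, P, 50)
print(after_one, after_many)
```

Because the same matrix is applied at every step, only the current distribution is needed to compute the next one, which mirrors the one-step dependence described above. For this particular matrix the distribution after many steps settles close to (5/6, 1/6).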

(d) Semi-Markov Processes:

In a Semi-Markov Process, the time spent in a state can have an arbitrary distribution, but the one-step memory feature of the Markovian property is retained. We will find processes of this type useful in some of our analyses.

(e) Birth-Death Process:

A Birth-Death Process is a special type of discrete-time or continuous-time Markov Chain with the restriction that at each step, the state transitions, if any, can occur only between neighboring states.

(g) Renewal Processes:

These are related to random walks, except that our interest here lies in counting the number of transitions that take place as a function of time.

State at time t = Number of transitions in (0, t)

where the intervals $(t_i - t_{i-1})\ \forall i$ between successive transitions are i.i.d. random variables. Let $X_i = (t_i - t_{i-1})\ \forall i$ be this set of i.i.d. random variables. Subject to the conditions that they are independent and have identical distributions, the random variables $\{X_i\}$ can have any distribution. Note that this corresponds to a Semi-Markov Process.
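The counting view of a renewal process can be sketched by simulation. In the Python example below, the function name `count_renewals`, the seed, the time horizon and the two interval distributions are assumptions made for illustration. The state at time t is obtained by adding i.i.d. intervals until the running total exceeds t.

```python
import random


def count_renewals(t_end, draw_interval):
    """Number of transitions in (0, t_end) when successive intervals are i.i.d."""
    t, count = 0.0, 0
    while True:
        t += draw_interval()          # next interval t_i - t_(i-1)
        if t > t_end:
            return count
        count += 1


rng = random.Random(7)                # fixed seed so that runs are repeatable
lam, t_end, runs = 2.0, 10.0, 2000    # assumed example values

# Exponentially distributed intervals with rate lam.
exp_counts = [count_renewals(t_end, lambda: rng.expovariate(lam)) for _ in range(runs)]
# Intervals uniform on [0, 1]: any distribution may be used for the i.i.d. intervals.
uni_counts = [count_renewals(t_end, lambda: rng.uniform(0.0, 1.0)) for _ in range(runs)]

mean_exp = sum(exp_counts) / runs
mean_uni = sum(uni_counts) / runs
print(mean_exp, mean_uni)
```

For exponentially distributed intervals the average count should come out close to λt (here 20). The uniform case only shows that the same counting procedure applies to any i.i.d. interval distribution.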

You might also like

- TOBB ETU ELE471: Lecture 1Document7 pagesTOBB ETU ELE471: Lecture 1Umit GudenNo ratings yet

- Second Order Partial Derivatives The Hessian Matrix Minima and MaximaDocument12 pagesSecond Order Partial Derivatives The Hessian Matrix Minima and MaximaSyed Wajahat AliNo ratings yet

- Chapter 5Document19 pagesChapter 5chilledkarthikNo ratings yet

- An Informal Introduction To Stochastic Calculus With ApplicationsDocument10 pagesAn Informal Introduction To Stochastic Calculus With ApplicationsMarjo KaciNo ratings yet

- RV IntroDocument5 pagesRV IntroBalajiNo ratings yet

- Advanced StatisticsDocument131 pagesAdvanced Statisticsmurlder100% (1)

- Prob ReviewDocument19 pagesProb ReviewSiva Kumar GaniNo ratings yet

- Theories Joint DistributionDocument25 pagesTheories Joint DistributionPaul GokoolNo ratings yet

- Chap2 PDFDocument20 pagesChap2 PDFJacobNo ratings yet

- Elementary Mathematics: WwlchenandxtduongDocument16 pagesElementary Mathematics: WwlchenandxtduongMauro PérezNo ratings yet

- PRP - Unit 2Document41 pagesPRP - Unit 2Rahul SinghNo ratings yet

- Lecture Notes Week 1Document10 pagesLecture Notes Week 1tarik BenseddikNo ratings yet

- Elements of Probability Theory: 2.1 Probability, Random Variables and Random MatricesDocument7 pagesElements of Probability Theory: 2.1 Probability, Random Variables and Random MatricesGerardOo Alexander SNo ratings yet

- CHAPTER TWO (2) SDocument69 pagesCHAPTER TWO (2) Skaleabs321No ratings yet

- Some Common Probability DistributionsDocument92 pagesSome Common Probability DistributionsAnonymous KS0gHXNo ratings yet

- CH 3Document22 pagesCH 3Agam Reddy MNo ratings yet

- Mass Functions and Density FunctionsDocument4 pagesMass Functions and Density FunctionsImdadul HaqueNo ratings yet

- Distribution Theory IDocument25 pagesDistribution Theory IDon ShineshNo ratings yet

- Vectors of Random Variables: Guy Lebanon January 6, 2006Document2 pagesVectors of Random Variables: Guy Lebanon January 6, 2006Ngọc ChiếnNo ratings yet

- Set1 Probability Distribution RK PDFDocument38 pagesSet1 Probability Distribution RK PDFrajesh adhikariNo ratings yet

- Partial DerivativeDocument71 pagesPartial Derivative9898171006No ratings yet

- Review of Basic Probability: 1.1 Random Variables and DistributionsDocument8 pagesReview of Basic Probability: 1.1 Random Variables and DistributionsJung Yoon SongNo ratings yet

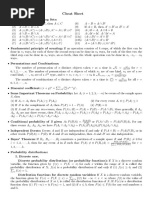

- Cheat SheetDocument2 pagesCheat SheetVarun NagpalNo ratings yet

- Stochastic ProcessesDocument46 pagesStochastic ProcessesforasepNo ratings yet

- Week 3Document20 pagesWeek 3Venkat KarthikeyaNo ratings yet

- Learning Hessian Matrix PDFDocument100 pagesLearning Hessian Matrix PDFSirajus SalekinNo ratings yet

- Practice Final Exam Solutions: 2 SN CF N N N N 2 N N NDocument7 pagesPractice Final Exam Solutions: 2 SN CF N N N N 2 N N NGilberth Barrera OrtegaNo ratings yet

- Stationary Points Minima and Maxima Gradient MethodDocument8 pagesStationary Points Minima and Maxima Gradient MethodmanojituuuNo ratings yet

- Chapter 2 - Random VariablesDocument26 pagesChapter 2 - Random VariablesNikhil SharmaNo ratings yet

- RVSP NotesDocument123 pagesRVSP NotesSatish Kumar89% (9)

- Homogeneous and Homothetic Functions PDFDocument8 pagesHomogeneous and Homothetic Functions PDFSatyabrota DasNo ratings yet

- KJM 2013 282Document12 pagesKJM 2013 282satitz chongNo ratings yet

- Probability Distribution Function-1Document22 pagesProbability Distribution Function-1Soumen KunduNo ratings yet

- Elementary Probability TheoryDocument18 pagesElementary Probability TheoryIkhwan ZulhilmiNo ratings yet

- Solutions To Sheldon Ross SimulationDocument66 pagesSolutions To Sheldon Ross SimulationSarah Koblick50% (12)

- Probability ReviewDocument12 pagesProbability Reviewavi_weberNo ratings yet

- Lectures 26-27: Functions of Several Variables (Continuity, Differentiability, Increment Theorem and Chain Rule)Document4 pagesLectures 26-27: Functions of Several Variables (Continuity, Differentiability, Increment Theorem and Chain Rule)Saurabh TomarNo ratings yet

- MIT18 S096F13 Lecnote3Document7 pagesMIT18 S096F13 Lecnote3Yacim GenNo ratings yet

- 1.10 Two-Dimensional Random Variables: Chapter 1. Elements of Probability Distribution TheoryDocument13 pages1.10 Two-Dimensional Random Variables: Chapter 1. Elements of Probability Distribution TheoryAllen ChandlerNo ratings yet

- MIT14 381F13 Lec1 PDFDocument8 pagesMIT14 381F13 Lec1 PDFDevendraReddyPoreddyNo ratings yet

- Joint Random Variables 1Document11 pagesJoint Random Variables 1Letsogile BaloiNo ratings yet

- Ade NPTL NotesDocument207 pagesAde NPTL NotesSaad MalikNo ratings yet

- UNIT I - Random VariablesDocument12 pagesUNIT I - Random VariablesShubham VishnoiNo ratings yet

- Math 319 Notes, A.SDocument13 pagesMath 319 Notes, A.SNahitMacitGuntepeNo ratings yet

- Chapter 4-6Document39 pagesChapter 4-6abiysemagn460No ratings yet

- Random Variable and Mathematical ExpectationDocument31 pagesRandom Variable and Mathematical ExpectationBhawna JoshiNo ratings yet

- The Fundamental Postulates of Quantum MechanicsDocument11 pagesThe Fundamental Postulates of Quantum MechanicsMohsin MuhammadNo ratings yet

- LECT3 Probability TheoryDocument42 pagesLECT3 Probability Theoryhimaanshu09No ratings yet

- Differentials, Slopes, and Rates of ChangeDocument4 pagesDifferentials, Slopes, and Rates of ChangeManisha AdliNo ratings yet

- Distributions and Normal Random VariablesDocument8 pagesDistributions and Normal Random Variablesaurelio.fdezNo ratings yet

- Introductory Probability and The Central Limit TheoremDocument11 pagesIntroductory Probability and The Central Limit TheoremAnonymous fwgFo3e77No ratings yet

- Mca4020 SLM Unit 02Document27 pagesMca4020 SLM Unit 02AppTest PINo ratings yet

- STA124 Complete Note (Edward Cares)Document41 pagesSTA124 Complete Note (Edward Cares)kudiratomolaraadekolaNo ratings yet

- SI_Chapter-1Document30 pagesSI_Chapter-1Sangeetha VNo ratings yet

- UNIT-3: 1. Explain The Terms Following Terms: (A) Mean (B) Mean Square Value. AnsDocument13 pagesUNIT-3: 1. Explain The Terms Following Terms: (A) Mean (B) Mean Square Value. AnssrinivasNo ratings yet

- Elgenfunction Expansions Associated with Second Order Differential EquationsFrom EverandElgenfunction Expansions Associated with Second Order Differential EquationsNo ratings yet

- Differentiation (Calculus) Mathematics Question BankFrom EverandDifferentiation (Calculus) Mathematics Question BankRating: 4 out of 5 stars4/5 (1)

- CXA Series Signal AnalyzerDocument60 pagesCXA Series Signal Analyzergirithik14No ratings yet

- SCADE Training Catalogue 2014Document33 pagesSCADE Training Catalogue 2014girithik14No ratings yet

- Tutorial Antenna Design CSTDocument37 pagesTutorial Antenna Design CSTLiz BenhamouNo ratings yet

- AFDX Training October 2010 FullDocument55 pagesAFDX Training October 2010 FullHugues de PayensNo ratings yet

- Case Study DO-254Document9 pagesCase Study DO-254girithik14No ratings yet

- Safety-Critical Software Development: DO-178B: Prof. Chris Johnson, School of Computing Science, University of GlasgowDocument38 pagesSafety-Critical Software Development: DO-178B: Prof. Chris Johnson, School of Computing Science, University of Glasgowgirithik14No ratings yet

- Experimental Design, Sensitivity Analysis, and OptimizationDocument24 pagesExperimental Design, Sensitivity Analysis, and Optimizationgirithik14No ratings yet

- Orbital Rendezvous Using An Augmented Lambert Guidance SchemeDocument0 pagesOrbital Rendezvous Using An Augmented Lambert Guidance Schemegirithik14No ratings yet

- ECE 461: Homework #5: Water Level Control: 1) The Dynamics of A 2-Tank System With Proportional (P K) Feedback AreDocument1 pageECE 461: Homework #5: Water Level Control: 1) The Dynamics of A 2-Tank System With Proportional (P K) Feedback Aregirithik14No ratings yet

- EE 461 - Homework Set #4Document4 pagesEE 461 - Homework Set #4girithik14No ratings yet

- HW3 2003Document2 pagesHW3 2003girithik14No ratings yet