Sādhanā Vol. 31, Part 2, April 2006, pp. 81–104.

Printed in India

On the Markov Chain Monte Carlo (MCMC) method

RAJEEVA L KARANDIKAR

7, SJS Sansanwal Marg, Indian Statistical Institute, New Delhi 110 016, India

e-mail: rlk@isid.ac.in

Abstract. Markov Chain Monte Carlo (MCMC) is a popular method used to generate samples from arbitrary distributions, which may be specified indirectly. In this article, we give an introduction to this method along with some examples.

Keywords. Markov chains; Monte Carlo method; random number generator; simulation.

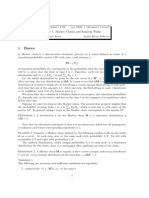

1. Introduction

In this article, we give an introduction to Monte Carlo techniques with special emphasis on Markov Chain Monte Carlo (MCMC). Since the latter needs Markov chains with state space that is $\mathbb{R}$ or $\mathbb{R}^d$ and most textbooks on Markov chains do not discuss such chains, we have included a short appendix that gives basic definitions and results in this case.

Suppose $X$ is a random variable (with known distribution, say with density $f$) and we are interested in computing the expected value

$$E[g(X)] = \int g(x)\,f(x)\,dx \qquad (1)$$

for a given function $g$. If the functions $f, g$ are such that the integral in (1) cannot be computed explicitly (as a formula for the indefinite integral may not be available in closed form) then we can do as follows.

Assuming that we can generate a random sample from the distribution of $X$, generate a random sample of size $n$,

$$x_1, x_2, \ldots, x_n,$$

from this distribution and compute

$$a_n = \frac{1}{n} \sum_{i=1}^{n} g(x_i).$$

Then by the law of large numbers, $a_n$ approximates $E[g(X)]$. Moreover, the central limit theorem gives the order of error; the error here is of the order of $O(n^{-1/2})$.
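The estimate $a_n$ can be sketched in a few lines of Python. This is only an illustration: the function name `monte_carlo_mean`, the choice $X \sim N(0, 1)$ and $g(x) = x^2$ (for which $E[g(X)] = 1$), and the seed are assumptions made for the example, not part of the article.

```python
import random

def monte_carlo_mean(g, sampler, n, rng):
    """Return a_n = (1/n) * sum_i g(x_i), with each x_i drawn by sampler(rng)."""
    return sum(g(sampler(rng)) for _ in range(n)) / n

rng = random.Random(0)  # fixed seed so that the run is reproducible
# Illustrative choice: X ~ N(0, 1) and g(x) = x^2, for which E[g(X)] = 1.
a_n = monte_carlo_mean(lambda x: x * x, lambda r: r.gauss(0.0, 1.0), 100_000, rng)
print(a_n)
```

With $n = 100000$ the error should be of the order of $n^{-1/2}$, that is, a few thousandths here.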


As of now we have not said anything about the random variable $X$; it could be taking values in $\mathbb{R}$ or $\mathbb{R}^d$ for any dimension $d$. The important thing to note is that the order of error does not depend upon the dimension. This is crucial if $d$ is high, as most numerical analysis techniques do not fare well in higher dimensions. This technique of generating $x_1, x_2, \ldots, x_n$ to approximate quantities associated with the distribution of $X$ is called Monte Carlo simulation or just simulation.

The most crucial part of the procedure described above is the generation of a random sample from the distribution of $X$. We deal here with the case where the state space is a subset of $\mathbb{R}$ or $\mathbb{R}^d$ and the distribution is given by its density $f$.

We assume that we have access to a good random number generator which gives us a way of generating a random sample from Uniform(0,1). For example, one could use the Mersenne Twister random number generator (see http://www.math.keio.ac.jp/matumoto/emt.html).

There is a canonical way of generating a univariate random variable from any distribution $F$: let $F^{-1}$ be the inverse of $F$ and let $U$ be a sample from Uniform(0,1). Then $X = F^{-1}(U)$ is a random sample from $F$.
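As a small illustration of the inverse method, here is a sketch for the exponential distribution, where $F(x) = 1 - e^{-\lambda x}$ has the explicit inverse $F^{-1}(u) = -\log(1 - u)/\lambda$. The choice of distribution, the function name `sample_exponential` and the rate 2 are assumptions made for the example.

```python
import math
import random

def sample_exponential(rate, rng):
    """Draw from the exponential distribution via X = F^{-1}(U)."""
    u = rng.random()                   # U ~ Uniform[0, 1)
    return -math.log(1.0 - u) / rate   # F^{-1}(u) for F(x) = 1 - exp(-rate * x)

rng = random.Random(1)
draws = [sample_exponential(2.0, rng) for _ in range(100_000)]
sample_mean = sum(draws) / len(draws)  # should be near 1 / rate = 0.5
print(sample_mean)
```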

Often, the distribution is described by a density and a closed form for the distribution function $F$ is not available, and so the method described above fails. Another method is transformation of variables: if we have a method to generate $N(0, 1)$ random variables, then to generate a sample from the $t$ distribution with $k$ degrees of freedom, we can generate independent samples $X, Y_1, \ldots, Y_k$ from $N(0, 1)$ and then take

$$t = X \Big/ \Big( \sum_{j=1}^{k} Y_j^2 / k \Big)^{1/2}.$$

Indeed, the common method to generate samples from $N(0, 1)$ also uses the idea of transformation of variables: generate $U, V$ from Uniform(0,1) (with $U, V$ independent) and define

$$X = [-2 \log(U)]^{1/2} \cos(2\pi V),$$

$$Y = [-2 \log(U)]^{1/2} \sin(2\pi V).$$

Then $X, Y$ are independent samples from $N(0, 1)$.
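The transformation above (usually called the Box–Muller transform) can be sketched as follows. The function name `box_muller` and the seed are illustrative; $U$ is taken in $(0, 1]$ rather than $[0, 1)$ only to avoid evaluating $\log(0)$.

```python
import math
import random

def box_muller(rng):
    """Return a pair (X, Y) of independent N(0, 1) draws from two uniforms."""
    u = 1.0 - rng.random()   # U in (0, 1], so log(u) is always finite
    v = rng.random()         # V ~ Uniform[0, 1)
    r = math.sqrt(-2.0 * math.log(u))
    return r * math.cos(2.0 * math.pi * v), r * math.sin(2.0 * math.pi * v)

rng = random.Random(2)
xs = [box_muller(rng)[0] for _ in range(50_000)]
mean_x = sum(xs) / len(xs)
var_x = sum((x - mean_x) ** 2 for x in xs) / len(xs)
print(mean_x, var_x)   # should be close to 0 and 1
```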

Of course, transformation of variables is a powerful method, but given a distribution, it is not clear how to use this method. So even when the density is known we may have difficulty in generating samples from the distribution corresponding to it.

There are many situations where $f$ may not be explicitly known but is described indirectly. For example, $f$ may be known only up to a normalizing constant. Another possibility is that the distribution of interest is a multivariate distribution that is not known, but all the conditional distributions are specified.

Suppose we know $f_1(x) = K f(x)$ but do not explicitly know $K$. Of course, $K$ equals the integral of $f_1$. Numerically computing $K$ and then proceeding to numerically compute $\int g(x)\,f(x)\,dx$ can inflate the error. It would be much better if, just knowing $f_1$, we could devise a scheme to generate a random sample from $f = (1/K) f_1$ and thereby compute $\int g(x)\,f(x)\,dx$ approximately.

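Bayesian framework: Suppose that given $\theta$, $X$ has a density $p(x \mid \theta)$ and the prior on $\theta$ is given by a density $\pi(\theta)$. Then the posterior density $\pi(\theta \mid x)$ of $\theta$ given an observation $X = x$ is given by

$$\pi(\theta \mid x) = \frac{p(x \mid \theta)\,\pi(\theta)}{\int p(x \mid \theta)\,\pi(\theta)\,d\theta}.$$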


Often it is difficult to obtain an exact expression for

$$\int p(x \mid \theta)\,\pi(\theta)\,d\theta,$$

but given $p(x \mid \theta)$ and $\pi(\theta)$ we know $\pi(\theta \mid x)$ up to a normalizing constant!

Note that once there is a method to generate samples from the posterior density, there is no need for a practitioner to restrict the choice of prior to a conjugate prior (roughly, these are priors for which the posterior can be computed in closed form). Even though the posterior density of $\theta$ may not be available in closed form, all quantities of interest could be obtained by simulation.

2. Rejection sampling

In 1951 von Neumann gave a method to generate samples from a density $f = (1/K) f_1$ knowing only $f_1$, provided there is a density $h$ (from which it is possible to generate samples) and a constant $M$ such that

$$f_1(x) \le M h(x) \quad \forall x.$$

The algorithm, given below, is known as rejection sampling.

(1) Generate a random sample from the distribution with density h. Let it be y.

(2) Accept y as the sample with probability $f_1(y)/(M h(y))$.

(3) If step (2) is not a success, then go to step (1).

Here $f$ (or $f_1$) is called the target density and $h(x)$ is called a majorizing function or an envelope or in some contexts the proposal density. We can repeat the steps (1)–(3) several times to generate i.i.d. samples from $f$.

The algorithm can be described as follows (as pseudo-code). (Here and in the sequel, successive calls to the random number generator are assumed to yield independent observations.) The following algorithm generates a random sample $z_1, \ldots, z_N$ from the distribution $f$.

Rejection sampler algorithm:

i = 0

do

{

    i = i + 1

    k = 0

    do

    {

        k = k + 1;

        Generate u_k from Uniform(0,1) and x_k from h

    } while ( u_k > f_1(x_k) / (M h(x_k)) )

    z_i = x_k

} while i < N
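The pseudo-code above can be turned into a short runnable sketch. The concrete target and envelope are assumptions chosen for illustration: $f_1(x) = x(1 - x)$ on $[0, 1]$ (an unnormalised Beta(2, 2) density, so $K = 1/6$), $h$ the Uniform(0,1) density and $M = 1/4$, which satisfies $f_1 \le M h$. The function name `rejection_sample` is likewise hypothetical.

```python
import random

def rejection_sample(f1, sample_h, h, M, n, rng):
    """Return n draws from f = f1 / K, plus the number of proposals used."""
    samples, trials = [], 0
    while len(samples) < n:
        x = sample_h(rng)             # proposal x_k from h
        u = rng.random()              # u_k from Uniform(0, 1)
        trials += 1
        if u <= f1(x) / (M * h(x)):   # accept with probability f1(x) / (M h(x))
            samples.append(x)
    return samples, trials

rng = random.Random(3)
samples, trials = rejection_sample(
    f1=lambda x: x * (1.0 - x),      # unnormalised Beta(2, 2) density on [0, 1]
    sample_h=lambda r: r.random(),   # h = Uniform(0, 1)
    h=lambda x: 1.0,
    M=0.25,                          # max of x(1 - x), so that f1 <= M h
    n=20_000,
    rng=rng,
)
mean = sum(samples) / len(samples)        # Beta(2, 2) has mean 1/2
acceptance_rate = len(samples) / trials   # theory: K / M = (1/6) / (1/4) = 2/3
print(mean, acceptance_rate)
```

Tracking `trials` alongside the samples makes the cost of a loose envelope visible: the larger $M$ is relative to $K$, the more proposals are wasted.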

We will now prove that the algorithm described above yields a random sample from f .


Theorem 1 (Rejection sampling). Suppose we are given $f_1$ such that $f_1(x) = K f(x)$ for a density $f$. Suppose there exists a density $h(x)$ and a constant $M$ such that

$$f_1(x) \le M h(x) \quad \forall x. \qquad (2)$$

Let $X_k$ be i.i.d. with common density $h$, and $U_k$ be i.i.d. Uniform(0,1). Let $B$ be given by

$$B = \{(x, u) : u \le f_1(x)/(M h(x))\},$$

let $\tau$ be the first $m$ such that $(X_m, U_m) \in B$, and let $W = X_\tau$. Then $W$ has density $f$.

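Proof. Take $Z_k = (X_k, U_k)$. Note that

$$\begin{aligned}
P(\tau = m) &= P(Z_1 \notin B, \ldots, Z_{m-1} \notin B, Z_m \in B) \\
&= P(Z_1 \notin B)^{m-1}\, P(Z_m \in B) \\
&= (1 - P(Z_1 \in B))^{m-1}\, P(Z_1 \in B),
\end{aligned}$$

and hence $P(\tau < \infty) = 1$. Now

$$\begin{aligned}
P(Z_m \in A \mid \tau = m) &= P(Z_m \in A \mid Z_1 \notin B, \ldots, Z_{m-1} \notin B, Z_m \in B) \\
&= P(Z_m \in A \mid Z_m \in B) \\
&= P(Z_1 \in A \mid Z_1 \in B),
\end{aligned}$$

and hence

$$\begin{aligned}
P(Z_\tau \in A) &= \sum_{m} P(Z_m \in A \mid \tau = m)\, P(\tau = m) \\
&= \sum_{m} P(Z_1 \in A \mid Z_1 \in B)\, P(\tau = m) \\
&= P(Z_1 \in A \mid Z_1 \in B).
\end{aligned}$$

Taking $A = (-\infty, a] \times [0, 1]$ for $a \in \mathbb{R}$, we have (using $\{Z_\tau \in A\} = \{W \le a\}$),

$$\begin{aligned}
P(W \le a) &= P(X_1 \le a \mid Z_1 \in B) \\
&= \frac{P(X_1 \le a, Z_1 \in B)}{P(Z_1 \in B)} \\
&= \Big[ \int_{-\infty}^{a} \int_0^1 1_B(x, u)\, h(x)\,du\,dx \Big] \Big/ \Big[ \int_{-\infty}^{\infty} \int_0^1 1_B(x, u)\, h(x)\,du\,dx \Big] \\
&= \Big[ \int_{-\infty}^{a} \frac{f_1(x)}{M h(x)}\, h(x)\,dx \Big] \Big/ \Big[ \int_{-\infty}^{\infty} \frac{f_1(x)}{M h(x)}\, h(x)\,dx \Big] \\
&= \Big[ \int_{-\infty}^{a} f_1(x)\,dx \Big] \Big/ \Big[ \int_{-\infty}^{\infty} f_1(x)\,dx \Big] \\
&= \int_{-\infty}^{a} f(x)\,dx.
\end{aligned}$$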


We have used $\int_0^1 1_B(x, u)\,du = f_1(x)/(M h(x))$ and also that $f_1$ is proportional to the density $f$. This completes the proof.

Let us examine what happens if we use the rejection sampling algorithm when the envelope condition (2) is not true. The integral $\int_{-\infty}^{a} \int_0^1 1_B(x, u)\, h(x)\,du\,dx$ now equals

$$\int_{-\infty}^{a} \min\Big( \frac{f_1(x)}{M h(x)}, 1 \Big)\, h(x)\,dx,$$

which simplifies to

$$\int_{-\infty}^{a} \frac{1}{M} \min(f_1(x), M h(x))\,dx.$$

Thus the density of the output $W$ is proportional to

$$\tilde{f}_1(x) = \min(f_1(x), M h(x)),$$

rather than to $f_1(x)$.

The rejection method is a good method if a suitable envelope can be found for the target density. Suppose the target density is $f(x)$ and $f_1(x) = K f(x)$ is known. Suppose $g(x)$ is the proposal or majorizing density and suppose that

$$f(x) \le M g(x)$$

(so that $f_1(x) \le K M g(x)$). The probability of accepting a sample is $1/M$ and the distribution of the number of trials needed for one acceptance is geometric. Thus, on average, $M$ trials would be needed for accepting one sample. This can be a problem if $M$ is large.
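The acceptance probability $1/M$ can be checked in one line. The following short derivation is added for illustration; it uses only the symbols already defined ($f_1 = K f$, envelope $K M g$) and the acceptance rule of the algorithm, with a proposal $Y$ drawn from $g$ and an independent $U$ drawn from Uniform(0,1):

$$P(\text{accept}) = \int P\Big( U \le \frac{f_1(y)}{K M g(y)} \Big)\, g(y)\,dy = \int \frac{f_1(y)}{K M g(y)}\, g(y)\,dy = \frac{1}{K M} \int f_1(y)\,dy = \frac{1}{M}.$$

The number of trials until the first acceptance is therefore geometric with success probability $1/M$, whose mean is $M$.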

3. Markov Chain Monte Carlo

The Monte Carlo methods discussed above were based on generating independent samples from the specified distribution. Metropolis and others, in a paper published in the Journal of Chemical Physics in 1953, use a very different approach for simulation. The paper deals with computation of certain properties of chemical substances, and uses Monte Carlo techniques for the same but in a novel way:

For the distribution of interest whose density is $\pi$, they construct a Markov Chain $\{X_n\}$ in such a way that the given distribution $\pi$ is the stationary distribution for the chain. The chain constructed is aperiodic and irreducible so that the stationary distribution is unique. Then the ergodic theorem ensures that

$$\frac{1}{N} \sum_{n=1}^{N} g(X_n) \to \int g(x)\,\pi(x)\,dx$$

as $N \to \infty$. This can be used to estimate $\int g(x)\,\pi(x)\,dx$.

Given a distribution $\pi$, how does one construct a Markov Chain with $\pi$ as the stationary distribution?


The answer to this question is surprisingly simple. We begin with a simple example. The 3-

dimensional analogue of this example was introduced in statistical physics to study behaviour

of a gas whose particles have non-negligible radii and thus cannot overlap.

Consider an $N \times N$ chessboard. Each square is assigned a 1 or 0: 1 means the square is occupied and 0 means that the square is unoccupied. Each such assignment is called a configuration. A configuration is said to be feasible if all the neighbours of every square that is occupied are unoccupied. (Every square that is not in the first or last row or column has 8 neighbours.) Thus a configuration is feasible if for every pair of adjacent squares, at most one square has a 1.

For a feasible configuration (denoted by $\xi$), let $n(\xi)$ denote the number of 1s in $\xi$. The quantity of interest to physicists is the average of $n(\xi)$ where the average is taken over the uniform distribution over all the feasible configurations.

The total number of configurations is $2^{N \times N}$ and even when $N = 25$, this number is $2^{625}$, so it is not computationally feasible to scan all configurations, count the feasible ones and take the average of $n(\xi)$ over them.

Assume that a powerful computer can sequentially scan the configurations $\xi$, decide if it is feasible and, if so, count $n(\xi)$ in one cycle, and suppose the clock speed is 1000 GHz. Suppose there are a million such machines working in parallel. Then in one second, $2^{10+30+20} = 2^{60}$ configurations will be scanned. In one year, there are $24 \times 3600 \times 365 = 31536000$ seconds, which is approximately $2^{25} = 33554432$. Thus, we can scan $2^{85}$ configurations in one year, when we have a million computers working at 1000 GHz speed. It will still take $2^{540}$ years. Even if the size is 10, the number of configurations is $2^{100}$ and it would take $2^{15} = 32768$ years.
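The back-of-the-envelope count above rounds each factor to a power of 2. A quick check without the rounding, written as a small sketch (the variable names are illustrative), gives exponents close to the quoted $2^{85}$, $2^{540}$ and $2^{15}$:

```python
import math

# 1000 GHz clock, one million machines, one configuration scanned per cycle.
configs_per_second = 1000 * 10**9 * 10**6
seconds_per_year = 24 * 3600 * 365
configs_per_year = configs_per_second * seconds_per_year

log2_per_year = math.log2(configs_per_year)   # compare with 85
log2_years_25 = 625 - log2_per_year           # log2 of the years needed when N = 25
log2_years_10 = 100 - log2_per_year           # log2 of the years needed when N = 10
print(log2_per_year, log2_years_25, log2_years_10)
```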

It is easy to see that when $N = 25$, the total number of feasible configurations is at least $2^{169}$. To see this, assign 0 to all squares that have one of the coordinates even (the squares are indexed from 1 to 25). In the remaining $13 \times 13 = 169$ positions, we can assign a 1 or 0. It is clear that each such configuration is feasible and the total number of such configurations is $2^{169}$.

Let $\pi$ denote the discrete uniform distribution on the set of feasible configurations:

$$\pi(\xi) = 1/M,$$

where $M$ is the total number of feasible configurations. We construct a Markov Chain $\{X_k\}$ on the set of feasible configurations in such a way that it is aperiodic and irreducible and $\pi$ is the stationary distribution for the chain. Then, as the number of steps $L \to \infty$,

$$\frac{1}{L} \sum_{k=1}^{L} n(X_k) \to \sum_{\xi} n(\xi)\,\pi(\xi).$$

The transition function $p(\xi, \eta)$ is described as follows: Fix $0 < p < 1$. Given a feasible configuration $\xi$, choose a square $s$ (out of the $N^2$ squares) with equal probability. If any of the neighbours of $s$ is occupied (has 1) then $\eta = \xi$; if all the neighbours of $s$ are unoccupied (have 0) then with probability $p$, flip the state of the square $s$ and otherwise do nothing. (Since the chain is slow moving, it would be better to take $p$ close to 1.)
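The transition rule just described can be sketched in Python as follows. The function names, the value $p = 0.9$, the null starting configuration and the run lengths are assumptions made for illustration; the sketch keeps a running count of occupied squares instead of recounting the board at every step.

```python
import random

def neighbours(i, j, n):
    """Yield the squares adjacent to (i, j), diagonals included, on an n x n board."""
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if (di, dj) != (0, 0) and 0 <= i + di < n and 0 <= j + dj < n:
                yield i + di, j + dj

def step(board, p, rng):
    """One transition of the chain; returns the change in the number of 1s."""
    n = len(board)
    i, j = rng.randrange(n), rng.randrange(n)   # square s chosen uniformly
    if any(board[a][b] for a, b in neighbours(i, j, n)):
        return 0                                # an occupied neighbour: stay put
    if rng.random() < p:                        # flip s with probability p
        board[i][j] ^= 1
        return 1 if board[i][j] else -1
    return 0

def is_feasible(board):
    """Check that no occupied square has an occupied neighbour."""
    n = len(board)
    return all(not board[i][j] or not any(board[a][b] for a, b in neighbours(i, j, n))
               for i in range(n) for j in range(n))

def average_occupied(n, p, burn_in, length, rng):
    """Ergodic average of n(X_k) over `length` steps taken after `burn_in` steps."""
    board = [[0] * n for _ in range(n)]         # start from the null configuration
    count = 0
    for _ in range(burn_in):
        count += step(board, p, rng)
    total = 0
    for _ in range(length):
        count += step(board, p, rng)
        total += count
    return total / length, board

rng = random.Random(4)
estimate, final_board = average_occupied(n=25, p=0.9, burn_in=1000, length=20_000, rng=rng)
print(estimate)
```

A convenient sanity check is the $2 \times 2$ board, where all four squares are mutually adjacent: the feasible configurations are the null one and the four with a single 1, so the exact average is $4/5$.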

Let us observe that the chain is irreducible. First note that the transition function is symmetric:

$$p(\xi, \eta) = p(\eta, \xi).$$

If $\xi, \eta$ differ at more than one square, then the above equality holds as both the probabilities are zero. If they differ at exactly one square, then all the squares adjacent to it must have 0 (both configurations are feasible and one of them has a 1 at that square), and then both the terms above are

$$p/(N \times N).$$

Thus the transition function is symmetric.

Thus, to prove that the chain is irreducible, it suffices to prove that the null configuration (where every square has a 0) leads to any other configuration. If a configuration has exactly one 1 then it is clear that it can be reached from the null configuration in one step. It follows that any feasible configuration $\xi$ can be reached from the null configuration in $n(\xi)$ steps. Hence, the chain is irreducible.

Since $p(\xi, \xi) > 0$ for every feasible $\xi$, it follows that the chain is aperiodic. Thus the chain is a finite state Markov Chain that is irreducible and aperiodic. Hence it is positive recurrent and admits a unique stationary distribution. Note that

$$\sum_{\xi} \pi(\xi)\,p(\xi, \eta) = \frac{1}{M} \sum_{\xi} p(\xi, \eta) = \frac{1}{M} \sum_{\xi} p(\eta, \xi) = \frac{1}{M} = \pi(\eta).$$

Thus $\pi$ is the unique stationary distribution.

Hence as $L \to \infty$,

$$\frac{1}{L} \sum_{k=1}^{L} n(X_k) \to \sum_{\xi} n(\xi)\,\pi(\xi), \qquad (3)$$

where $\{X_k\}$ is the Markov Chain described above. Thus to approximate $\sum_{\xi} n(\xi)\,\pi(\xi)$, we can choose a large $L$ and take $(1/L) \sum_{k=1}^{L} n(X_k)$ as an approximation. Even better, to reduce dependence on the initial state $X_0$, we can first choose integers $J, L$ and then take

$$\frac{1}{L} \sum_{k=J+1}^{J+L} n(X_k)$$

as an approximation for $\sum_{\xi} n(\xi)\,\pi(\xi)$.

Thus by generating the Markov Chain as described above, we can estimate the average

number of occupied sites. This is an example of the MCMC technique.

How large should $J, L$ be for

$$\frac{1}{L} \sum_{k=J+1}^{J+L} n(X_k)$$

to give a good approximation to $\sum_{\xi} n(\xi)\,\pi(\xi)$?

Consider a simple random walk on $N = 2^{100}$ points placed on a (large) circle, so that

$$p_{ij} = 0.5 \quad \text{if } j = i + 1 \bmod N \text{ or } j = i - 1 \bmod N,$$

and zero otherwise. Here also, the chain is irreducible and the transition probability matrix is doubly stochastic and thus the unique invariant probability distribution is the uniform distribution on the $N$ points. Let $h$ be a function on $\{0, 1, 2, \ldots, N-1\}$ and $X_n$ be the Markov Chain. Since in $L$ steps this Markov Chain will move at most $L$ steps to the right or $L$ steps to the left (and, with very high probability, does not go more than $10\sqrt{L}$ steps away from $X_0$), it is clear that $L$ must be much larger than $N$ for the ergodic average

$$\frac{1}{L} \sum_{k=J+1}^{J+L} h(X_k)$$

to be a good approximation of

$$\frac{1}{N} \sum_{j=0}^{N-1} h(j).$$

Therefore, in this case, the MCMC technique does not yield a good answer.

One has to be careful in choosing an appropriate $L$. As a rule of thumb, let $M$ be the smallest integer such that

$$P(X_M = j \mid X_0 = i) > 0 \quad \forall \text{ states } i, j.$$

Then $J$ should be of the order of $M$ and $L$ should be much larger. In the chessboard example with $N = 25$, we can see that $M \le 2 \times 169$.

In the chessboard example with $N = 25$, what $J, L$ would suffice? To see this, we can generate the Markov Chain and compute the approximation several times, say 1000 times, and compute the variance of the estimate for various choices of $J, L$ (table 1).

It can be seen that $J = 1000$ and $L = 100000$ gives a very good approximation.

Table 1. Monte Carlo results for Uniform distribution.

| J | L | Mean | Variance |
|---|---|---|---|
| 1000 | 1000 | 89.618 | 7.17764 |
| 1000 | 2000 | 89.8109 | 3.6315 |
| 1000 | 4000 | 89.9856 | 2.31912 |
| 1000 | 5000 | 90.0753 | 1.79497 |
| 1000 | 10000 | 90.2991 | 0.918608 |
| 1000 | 20000 | 90.3284 | 0.475608 |
| 1000 | 40000 | 90.389 | 0.243295 |
| 1000 | 50000 | 90.4042 | 0.21296 |
| 1000 | 100000 | 90.4365 | 0.0999061 |
| 1000 | 200000 | 90.4486 | 0.0527094 |
| 1000 | 400000 | 90.4464 | 0.0261528 |
| 1000 | 500000 | 90.4449 | 0.0207095 |
| 1000 | 1000000 | 90.4499 | 0.00986929 |
| 1000 | 2000000 | 90.4519 | 0.00535309 |
| 1000 | 4000000 | 90.4486 | 0.00245182 |
| 1000 | 5000000 | 90.4506 | 0.00195814 |
| 1000 | 10000000 | 90.4515 | 0.00104744 |


What if for the chessboard example we were interested in computing

$$\sum_{\xi} n(\xi)\,\pi(\xi),$$

with stationary invariant distribution $\pi(\xi)$ that is no longer the uniform distribution, but another distribution, say

$$\pi(\xi) = c \exp\{K n(\xi)\},$$

where $K$ is a constant and $c$ is the normalising constant?

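One possibility is to use the chain with uniform stationary distribution and estimate $c^{-1}$, up to a constant factor that cancels below, by (for suitable $J, L$)

$$\sum_{k=J+1}^{J+L} \exp\{K n(X_k)\},$$

and then estimate $\sum_{\xi} n(\xi) \exp\{K n(\xi)\}$, up to the same factor, by

$$\sum_{k=J+1}^{J+L} n(X_k) \exp\{K n(X_k)\},$$

so that the required approximation is

$$\Big[ \sum_{k=J+1}^{J+L} n(X_k) \exp\{K n(X_k)\} \Big] \Big/ \Big[ \sum_{k=J+1}^{J+L} \exp\{K n(X_k)\} \Big].$$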
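The ratio above is easy to compute from the recorded values $n(X_k)$. A minimal sketch follows; the function name `ratio_estimate` is hypothetical, and subtracting the largest exponent before exponentiating is a numerical precaution added here (the common factor cancels in the ratio), not something the article prescribes.

```python
import math

def ratio_estimate(counts, K):
    """Return sum(n * exp(K n)) / sum(exp(K n)) over the recorded values n = n(X_k)."""
    shift = max(K * n for n in counts)   # common factor, cancels in the ratio
    weights = [math.exp(K * n - shift) for n in counts]
    return sum(n * w for n, w in zip(counts, weights)) / sum(weights)

# Toy input: two recorded values with weights 1 and 3, so the ratio is 3 / 4.
example = ratio_estimate([0, 1], math.log(3.0))
print(example)
```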

Here again, we can generate the estimate for J, L several times and compute the variance

of the estimate (table 2).

Table 2. Monte Carlo results for Gibbs distribution: Ratio Method.

| J | L | Mean | Variance |
|---|---|---|---|
| 1000 | 1000 | 82.2744 | 12.4061 |
| 1000 | 2000 | 80.6866 | 8.93338 |
| 1000 | 4000 | 79.4026 | 7.99263 |
| 1000 | 5000 | 78.9912 | 7.55298 |
| 1000 | 10000 | 78.055 | 6.26682 |
| 1000 | 20000 | 76.8683 | 5.64003 |
| 1000 | 40000 | 75.9593 | 4.79803 |
| 1000 | 50000 | 75.6392 | 4.37511 |
| 1000 | 100000 | 74.6713 | 4.81159 |
| 1000 | 200000 | 73.9778 | 3.99164 |
| 1000 | 400000 | 73.1445 | 3.85673 |
| 1000 | 500000 | 72.9314 | 3.8598 |
| 1000 | 1000000 | 72.2698 | 3.81293 |
| 1000 | 2000000 | 71.6584 | 3.49804 |
| 1000 | 4000000 | 71.0077 | 3.54857 |
| 1000 | 5000000 | 70.8849 | 3.3355 |
| 1000 | 10000000 | 70.304 | 3.60938 |


Here, we can see that the variance of the estimate does not go down as expected, even when

we take L = 1000000 and more.

Instead, can we construct a Markov Chain $\{X_n\}$ whose invariant distribution is $\pi(\xi)$ so that (3) is valid?

Let $p(\xi, \eta)$ denote the transition function described in the earlier discussion. Recall that it is symmetric. Let

$$\alpha(\xi, \eta) = \min\Big\{ 1, \frac{\pi(\eta)}{\pi(\xi)} \Big\}.$$

Define

$$q(\xi, \eta) = p(\xi, \eta)\,\alpha(\xi, \eta)$$

if $\xi \ne \eta$, and

$$q(\xi, \xi) = 1 - \sum_{\eta \ne \xi} q(\xi, \eta).$$

For configurations $\xi, \eta$, if $\pi(\xi) \le \pi(\eta)$,

$$q(\xi, \eta) = p(\xi, \eta)$$

and

$$q(\eta, \xi) = p(\eta, \xi)\,[\pi(\xi)/\pi(\eta)],$$

and hence

$$\pi(\xi)\,q(\xi, \eta) = \pi(\eta)\,q(\eta, \xi). \qquad (4)$$

By interchanging the roles of $\xi, \eta$, it follows that (4) is true in the other case, $\pi(\eta) \le \pi(\xi)$, as well. As a consequence of (4), it follows that $\pi$ is an invariant distribution for the transition function $q$. (Equation (4) is known as the detailed balance equation.) Since $p$ is irreducible and aperiodic, it follows that so is $q$, and hence that $\pi$ is the unique invariant measure and that the $q$ chain is ergodic (table 3).

Observe that here the variance reduces as expected and the mean is very stable for L =

100000 as in the uniform distribution case. Thus we have reason to believe that this method

gives a good approximation while the earlier method is way off the mark even with L =

10000000.

This construction shows that given any symmetric transition kernel $p(\xi, \eta)$ such that the underlying Markov Chain is an irreducible aperiodic chain and it is easy to simulate from $p(\xi, \cdot)$, we can create a transition kernel $q$ for which the stationary invariant distribution is $\pi$. As we will see, it is easy to simulate from $q(\xi, \cdot)$: first we simulate a move from the distribution $p(\xi, \cdot)$ (to, say, $\eta$) and then accept the move with probability $\alpha(\xi, \eta)$; otherwise we stay put at $\xi$. As in rejection sampling, accepting the move with probability $\alpha(\xi, \eta)$ is implemented by simulating an observation, say $u$, from the Uniform(0,1) distribution and then accepting the move if $u < \alpha(\xi, \eta)$, and otherwise not moving from $\xi$ in that step.
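For the chessboard chain with target $\pi(\xi) = c \exp\{K n(\xi)\}$, a proposed flip changes $n$ by $\pm 1$, so the acceptance probability is $\min\{1, \exp(K\,\delta)\}$ with $\delta = n(\eta) - n(\xi)$. The sketch below implements this accept/reject step; the function names, the values $p = 0.9$ and $K = -1$, and the use of a $2 \times 2$ board (where the exact answer $4e^{K}/(1 + 4e^{K})$ is available for comparison) are assumptions made for illustration.

```python
import math
import random

def neighbours(i, j, n):
    """Yield the squares adjacent to (i, j), diagonals included, on an n x n board."""
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if (di, dj) != (0, 0) and 0 <= i + di < n and 0 <= j + dj < n:
                yield i + di, j + dj

def metropolis_step(board, count, p, K, rng):
    """One step of the q chain; returns the new number of occupied squares."""
    n = len(board)
    i, j = rng.randrange(n), rng.randrange(n)
    if any(board[a][b] for a, b in neighbours(i, j, n)) or rng.random() >= p:
        return count                        # the proposal p(xi, .) stays at xi
    delta = -1 if board[i][j] else 1        # n(eta) - n(xi) for the proposed flip
    alpha = min(1.0, math.exp(K * delta))   # pi(eta) / pi(xi) = exp(K * delta)
    if rng.random() < alpha:                # accept the move if u < alpha
        board[i][j] ^= 1
        return count + delta
    return count

def gibbs_average(n, p, K, burn_in, length, rng):
    """Ergodic average of n(X_k) for the chain with stationary law c * exp(K n)."""
    board = [[0] * n for _ in range(n)]
    count = 0
    for _ in range(burn_in):
        count = metropolis_step(board, count, p, K, rng)
    total = 0
    for _ in range(length):
        count = metropolis_step(board, count, p, K, rng)
        total += count
    return total / length

estimate = gibbs_average(n=2, p=0.9, K=-1.0, burn_in=1000, length=200_000,
                         rng=random.Random(6))
exact = 4 * math.exp(-1.0) / (1 + 4 * math.exp(-1.0))   # 2 x 2 board, K = -1
print(estimate, exact)
```

Note that only the ratio $\pi(\eta)/\pi(\xi)$ enters, so the normalising constant $c$ is never needed.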

Table 3. Monte Carlo results for Gibbs distribution: MCMC technique.

| J | L | Mean | Variance |
|---|---|---|---|
| 1000 | 1000 | 66.3028 | 9.1541 |
| 1000 | 2000 | 66.4575 | 5.76939 |
| 1000 | 4000 | 66.4792 | 2.93947 |
| 1000 | 5000 | 66.625 | 2.56194 |
| 1000 | 10000 | 66.5286 | 1.36421 |
| 1000 | 20000 | 66.6623 | 0.678447 |
| 1000 | 40000 | 66.6348 | 0.326311 |
| 1000 | 50000 | 66.6676 | 0.267248 |
| 1000 | 100000 | 66.6442 | 0.127588 |
| 1000 | 200000 | 66.6476 | 0.0629443 |
| 1000 | 400000 | 66.6632 | 0.0309451 |
| 1000 | 500000 | 66.66 | 0.0256965 |
| 1000 | 1000000 | 66.6581 | 0.0138524 |
| 1000 | 2000000 | 66.6539 | 0.00687151 |
| 1000 | 4000000 | 66.655 | 0.00344363 |
| 1000 | 5000000 | 66.6546 | 0.00289359 |
| 1000 | 10000000 | 66.6586 | 0.00133429 |

Let us now move to the continuous case (see Robert & Casella 1999; Roberts & Rosenthal 2004). For now, let us look at real valued random variables. Again, we are given a target function $f_1(x) = K f(x)$ with $f$ being a density; $K$ is not known and we want to generate samples from $f$. The starting point is to get a Markov Chain with good properties (irreducible, aperiodic) with probability transition density function $q(x, y)$, assumed to be symmetric and such that it is possible to simulate from $q(x, \cdot)$ for every $x$; $q$ is called the proposal.

Then define (as in the finite case)

$$\alpha(x, y) = \min\{1, f_1(y)/f_1(x)\}$$

(with the usual convention: $\alpha(x, y) = 0$ if $f_1(y) = 0$, and $\alpha(x, y) = 1$ if $f_1(y) > 0$ but $f_1(x) = 0$) and then

$$p(x, y) = q(x, y)\,\alpha(x, y).$$

It is easy to check that

$$\alpha(x, y)/\alpha(y, x) = f_1(y)/f_1(x),$$

and hence that the detailed balance equation holds:

$$f(x)\,p(x, y) = f(y)\,p(y, x) \quad \forall x, y. \qquad (5)$$

We can now define a Markov Chain $\{X_n\}$ that has $f$ as its stationary distribution as follows. Given that $X_n = x$, the chain does not move (i.e., $X_{n+1} = x$) with probability $1 - \alpha(x)$ where

$$\alpha(x) = \int p(x, y)\,dy,$$

and given that it is going to move, it moves to a point $y$ chosen according to the density $p(x, y)/\alpha(x)$.


The transition kernel $P(x, A)$ for this chain is given by, for a bounded measurable function $g$,

$$\int g(z)\,P(x, dz) = (1 - \alpha(x))\,g(x) + \int g(z)\,p(x, z)\,dz. \qquad (6)$$

This can be implemented as follows: given $X_k = x$, we first propose a move to a point $y$ chosen according to the law $q(x, \cdot)$ and then choose $u$ according to the Uniform distribution on $(0, 1)$ and then set $X_{k+1} = y$ if $u < \alpha(x, y)$ and $X_{k+1} = x$ if $u \ge \alpha(x, y)$. Once again we can verify the detailed balance equation

$$f(x)\,p(x, y) = f(y)\,p(y, x) \quad \forall x, y,$$

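and hence (on integration w.r.t. $x$) it follows that

$$\int f(x)\,p(x, y)\,dx = \alpha(y)\,f(y), \qquad (7)$$

and hence using (6), (7) and Fubini's theorem we can verify that

$$\begin{aligned}
\int \Big[ \int g(z)\,P(x, dz) \Big] f(x)\,dx &= \int (1 - \alpha(x))\,g(x)\,f(x)\,dx + \int \Big[ \int g(y)\,p(x, y)\,dy \Big] f(x)\,dx \\
&= \int (1 - \alpha(x))\,g(x)\,f(x)\,dx + \int g(y)\,\alpha(y)\,f(y)\,dy \\
&= \int g(y)\,f(y)\,dy.
\end{aligned}$$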

Thus, f (x) is the density of a stationary invariant distribution of the constructed Markov

Chain. Note that here the transition probability function is a mixture of a point mass and a

density w.r.t. the Lebesgue measure.

Let us note that in the procedure described above, if $f_1(X_k) > 0$ then $f_1(X_{k+1}) > 0$, and hence if we choose the starting point carefully (so that $f_1(X_0) > 0$), we move only in the set $\{y : f_1(y) > 0\}$. Usually, the starting point $X_0$ is chosen according to a suitable initial distribution.

We can choose the proposal chain in many ways. One simple choice: take a continuous symmetric density $q_0$ on $\mathbb{R}$ with $q_0(0) > 0$ and then define

$$q(x, y) = q_0(y - x).$$

The chain $\{W_n\}$ corresponding to this is simply the random walk where each step is chosen according to the density $q_0$, which is chosen so that it has a finite mean (which then has to be zero since $q_0$ is symmetric) and such that an efficient algorithm is available to generate samples from $q_0$. We also need to specify a starting point, which could be chosen according to a specified density $g_0$.


The resulting procedure is known as the Metropolis–Hastings Random Walk MCMC. Here is the algorithm as pseudo-code, to simulate $\{X_k : 0 \le k \le N\}$:

(1) generate $X_0$ from the distribution with density $g_0$ and set $n = 0$;

(2) $n = n + 1$;

(3) generate $W_n$ from the distribution with density $q_0$;

(4) $Y_n = X_{n-1} + W_n$ (proposed move);

(5) generate $U_n$ from Uniform(0,1);

(6) if $U_n f_1(X_{n-1}) \le f_1(Y_n)$, then $X_n = Y_n$, otherwise $X_n = X_{n-1}$;

(7) if $n < N$, go to (2), else stop.

Then the generated Markov Chain $\{X_k : 0 \le k \le N\}$ has $f$ as its stationary distribution.

As in the discrete case, here too for a function $g$ such that $\int |g(x)|\,f(x)\,dx < \infty$,

$$\lim_{N \to \infty} \frac{1}{N} \sum_{i=1}^{N} g(X_i) = \int g(x)\,f(x)\,dx.$$

However, since the aim is to approximate the integral based on a finite sample, we ignore an initial segment of the chain in the hope that the distribution of the chain may then be closer to the limiting distribution. Also, in order to reduce dependence between successive values (we are going to have lots of instances where the chain does not move), one records the chain only after a suitable gap $G$. Thus we record

$$Z_k = X_{B + kG}$$

for a suitable burn-in $B$ and gap $G$, for $k = 1, 2, \ldots, L$, and then use

$$\frac{1}{L} \sum_{i=1}^{L} g(Z_i)$$

as an approximation to $\int g(x)\,f(x)\,dx$.

Example: The target is a mixture of two normals, with equal weights,

$$f_1(x) = \exp\{-(x - 4)^2/8\} + \exp\{-(x - 16)^2/8\}.$$

This is bi-modal, with the two distributions having almost disjoint supports. The mean of $f$ is 10 and the variance is 40 (so that the standard deviation is 6.324).

The random walk proposal distribution is taken as double exponential with parameters 0, 1 (we must ensure that the mean of the proposal is 0), the burn-in is taken as 5000 and the gap as 50. We generate 10 samples of size 10000, and the mean, standard deviation and variance of each sample are given in table 4.
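A run of this kind can be sketched as follows. This is an illustrative reimplementation under stated assumptions, not the program used for the article: the function names are hypothetical, the starting point is fixed at $x_0 = 10$ instead of being drawn from an initial density, and a single sample is generated rather than ten. The burn-in, gap and sample size match the values quoted above.

```python
import math
import random

def f1(x):
    """Unnormalised target: equal mixture of N(4, 4) and N(16, 4)."""
    return math.exp(-(x - 4.0) ** 2 / 8.0) + math.exp(-(x - 16.0) ** 2 / 8.0)

def laplace_step(rng):
    """Draw from the double exponential density with location 0 and scale 1."""
    magnitude = rng.expovariate(1.0)
    return magnitude if rng.random() < 0.5 else -magnitude

def random_walk_metropolis(f1, x0, burn_in, gap, size, rng):
    """Return Z_k = X_{B + kG}, k = 1..size, for the random walk Metropolis chain."""
    x, fx = x0, f1(x0)
    recorded = []
    for step in range(1, burn_in + gap * size + 1):
        y = x + laplace_step(rng)    # proposed move
        fy = f1(y)
        if rng.random() * fx < fy:   # accept if u < f1(y) / f1(x)
            x, fx = y, fy
        if step > burn_in and (step - burn_in) % gap == 0:
            recorded.append(x)
    return recorded

rng = random.Random(7)
z = random_walk_metropolis(f1, x0=10.0, burn_in=5000, gap=50, size=10_000, rng=rng)
mean = sum(z) / len(z)
sd = math.sqrt(sum((v - mean) ** 2 for v in z) / len(z))
print(mean, sd)   # compare with the theoretical values 10 and 6.324
```

As in table 4, the sample mean can be expected to fluctuate more than the standard deviation from run to run, since it depends on how the chain's time happens to be split between the two modes.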

Table 4. Monte Carlo results for Mixture of Normal distributions.

| Mean | SD | VAR |
|---|---|---|
| 10.173955 | 6.283718 | 39.485116 |
| 9.719518 | 6.317257 | 39.90773 |
| 9.783166 | 6.326743 | 40.02768 |
| 9.799898 | 6.300697 | 39.698783 |
| 10.124491 | 6.308687 | 39.799526 |
| 10.100052 | 6.326426 | 40.023664 |
| 9.309102 | 6.297279 | 39.655727 |
| 10.258374 | 6.322546 | 39.974584 |
| 9.819395 | 6.3241 | 39.994238 |
| 10.204787 | 6.303478 | 39.733837 |

The above algorithm is an adaptation of the Metropolis algorithm. Hastings in 1970 suggested a modification that does not require the proposal kernel to be symmetric. This allows us to consider the proposal chain to be an i.i.d. sequence. Thus the chain is just a sequence of independent random variables $W_n$ with common distribution having a density $q_1$, and we take the transition function as

$$q(x, y) = q_1(y).$$

The multiplier $\alpha(x, y)$ is now given by the formula

$$\alpha(x, y) = \min\{1, [f_1(y)\,q_1(x)]/[f_1(x)\,q_1(y)]\},$$

and the transition kernel $p(x, y)$ is given by

$$p(x, y) = q(x, y)\,\alpha(x, y).$$

As in the random walk case, we can define a Markov Chain $\{X_n\}$ that has $f$ as its stationary distribution as follows.

Given that $X_n = x$, the chain does not move (i.e. $X_{n+1} = x$) with probability $1 - \alpha(x)$ where

$$\alpha(x) = \int p(x, y)\,dy,$$

and given that it is going to move, it moves to a point $y$ chosen according to the density $p(x, y)/\alpha(x)$.

This can be implemented as follows: given $X_k = x$, we first propose a move to a point $y$ chosen according to the law $q(x, \cdot)$ and then choose $u$ according to the uniform distribution on $(0, 1)$ and then set $X_{k+1} = y$ if $u < \alpha(x, y)$ and $X_{k+1} = x$ if $u \ge \alpha(x, y)$. Once again we can verify the detailed balance equation

$$f(x)\,p(x, y) = f(y)\,p(y, x) \quad \forall x, y,$$

and as in the random walk case, it follows that $f(x)$ is the density of a stationary invariant distribution of the constructed Markov Chain. Note that here the transition probability function is a mixture of a point mass and an absolutely continuous density.


Metropolis–Hastings independence chain: Here is the algorithm as pseudo-code, to simulate $\{X_k : 0 \le k \le N\}$:

(1) generate $X_0$ from the distribution with density $g_0$ and set $n = 0$;

(2) $n = n + 1$;

(3) generate $W_n$ from the distribution with density $q_1$;

(4) $Y_n = W_n$ (proposed move);

(5) generate $U_n$ from Uniform(0,1);

(6) if $U_n f_1(X_{n-1})\,q_1(Y_n) \le f_1(Y_n)\,q_1(X_{n-1})$, then $X_n = Y_n$, otherwise $X_n = X_{n-1}$;

(7) if $n < N$, go to (2), else stop.

Then the generated Markov Chain $\{X_k : 0 \le k \le N\}$ has $f$ as its stationary distribution.
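The independence chain can be sketched as follows. The concrete choices are assumptions made for illustration: the target is $f_1(x) = \exp(-x^2/2)$ (an unnormalised $N(0, 1)$ density), the proposal density is double exponential with scale 2, the chain starts at $x_0 = 0$ and the first 1000 states are discarded. The function names are hypothetical.

```python
import math
import random

def independence_chain(f1, q1, sample_q1, x0, n_steps, rng):
    """Metropolis-Hastings chain whose proposals are i.i.d. draws from q1."""
    x = x0
    chain = [x]
    for _ in range(n_steps):
        y = sample_q1(rng)   # proposed move, independent of the current state
        if rng.random() * f1(x) * q1(y) < f1(y) * q1(x):
            x = y            # accept
        chain.append(x)
    return chain

def f1(x):
    """Unnormalised N(0, 1) density (illustrative target)."""
    return math.exp(-x * x / 2.0)

def q1(y):
    """Double exponential density with location 0 and scale 2."""
    return math.exp(-abs(y) / 2.0) / 4.0

def sample_q1(rng):
    magnitude = rng.expovariate(0.5)   # exponential with mean 2
    return magnitude if rng.random() < 0.5 else -magnitude

chain = independence_chain(f1, q1, sample_q1, x0=0.0, n_steps=50_000,
                           rng=random.Random(8))
kept = chain[1000:]   # drop an initial segment as burn-in
mean = sum(kept) / len(kept)
var = sum((v - mean) ** 2 for v in kept) / len(kept)
print(mean, var)      # should be close to 0 and 1
```

The proposal here has heavier tails than the target, so the ratio $f_1/q_1$ stays bounded; an independence chain with a lighter-tailed proposal can be expected to behave much worse.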

When we have two Markov chains with the same stationary distribution, we can generate yet another chain where at each step we move according to one chain, say with probability 0.5, and according to the other chain with probability 0.5.

This has the advantage that, for the given target, if even one of the two chains is well behaved then the hybrid chain is also well behaved.

How does the algorithm behave for fat-tailed distributions?

We ran the programme (hybrid version) for the Cauchy distribution and found that even with a sample size of 50000, burn-in of 50000 and gap of 20, the results were not encouraging. And this when the Cauchy distribution is taken with median 0, and the proposal and RW proposal also have mean 0 (double exponential (0, 8) and Uniform(−5, 5) respectively).

To see if a larger burn-in and gap (with the same sample size) improve the situation, we ran the hybrid algorithm with a burn-in of 50,000,000 and a gap of 10000. Five samples each of size 50000 meant a total of 2,750,000,000 steps. This took 7293 seconds (a little more than 2 hours).

Also, with a burn-in of 50,000,000 and a gap of 20000, the total number of samples generated was 5,250,000,000 (over 5 billion). The time taken was a little under 4 hours.

With both these runs, the outputs seem to be stable. It appears that for fat-tailed distributions, we need a large burn-in and a large gap.

Gibbs sampler

Suppose it is given that $X, Y$ are real-valued random variables such that the conditional distribution of $Y$ given $X$ is normal with mean $0.3X$ and variance 4 and the conditional distribution of $X$ given $Y$ is normal with mean $0.3Y$ and variance 4. Does this determine the joint distribution of $X, Y$ uniquely?

More general question: Let $\pi(x, y)$ be the joint density of $X, Y$; $f(y; x)$ be the conditional density of $Y$ given $X = x$ and $g(x; y)$ be the conditional density of $X$ given $Y = y$. Do $f(y; x)$, $g(x; y)$ determine $\pi(x, y)$?

Consider the one-step transition function $P((x, y), A)$ with density

$$h((u, v); (x, y)) = f(v; x)\,g(u; v).$$

This corresponds to the following: starting from $(x, y)$, first update the second component from $y$ to $v$ by sampling from the distribution with density $f(v; x)$ and then update the first component from $x$ to $u$ by sampling from the distribution with density $g(u; v)$.

Let us note that if $f^*$, $g^*$ denote the marginal densities of $X, Y$ respectively, then

$$f(y; x) = \pi(x, y)/f^*(x), \qquad g(x; y) = \pi(x, y)/g^*(y),$$

and hence

$$\begin{aligned}
\int\!\!\int h((u, v); (x, y))\,\pi(x, y)\,dy\,dx &= \int\!\!\int f(v; x)\,g(u; v)\,\pi(x, y)\,dy\,dx \\
&= \int f(v; x)\,g(u; v) \Big[ \int \pi(x, y)\,dy \Big] dx \\
&= \int f(v; x)\,g(u; v)\,f^*(x)\,dx \\
&= \int g(u; v)\,\pi(x, v)\,dx \\
&= g(u; v)\,g^*(v) \\
&= \pi(u, v).
\end{aligned}$$

Now if $f(y; x)$ and $g(x; y)$ are continuous and (strictly) positive for all $x, y$, then this chain is irreducible and aperiodic (see appendix for definition) and has a stationary distribution $\pi(x, y)$ which must then be unique.

This answers the question posed above in the affirmative. Further, if we have algorithms to generate samples from the univariate densities $f(y; x)$ and $g(x; y)$, this gives an algorithm to (approximately) generate samples from $\pi(x, y)$: run the chain for a sufficiently long time. This is an MCMC algorithm.

Note that we could have instead taken

$$h((u, v); (x, y)) = f(v; u)\, g(u; y),$$

or

$$h((u, v); (x, y)) = 0.5\,\bigl(f(v; x)\, g(u; v) + f(v; u)\, g(u; y)\bigr).$$

In either case, the resulting Markov chain would have $\pi(x, y)$ as its stationary (invariant) distribution.
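The two-component scheme above can be tried on the normal example that opened this section. The following is a minimal sketch, not the author's code: it reads the example as having conditional means 0.3x and 0.3y with variance 4, and the function names, starting point, burn-in and seed are illustrative assumptions. If the stationary law is the bivariate normal consistent with these conditionals, its marginal variance is 4/(1 - 0.09), about 4.40, and its correlation is 0.3, which gives something to compare the output against.

```python
import random


def gibbs_two_component(n_samples, burn_in=1_000, slope=0.3, cond_var=4.0, seed=0):
    """Systematic-scan Gibbs sampler for the normal-conditionals example.

    One sweep applies h((u, v); (x, y)) = f(v; x) g(u; v):
    draw v ~ N(slope * x, cond_var), then u ~ N(slope * v, cond_var).
    """
    rng = random.Random(seed)
    sd = cond_var ** 0.5
    x, y = 0.0, 0.0                      # arbitrary starting point (assumption)
    xs, ys = [], []
    for t in range(burn_in + n_samples):
        y = rng.gauss(slope * x, sd)     # update second component given x
        x = rng.gauss(slope * y, sd)     # update first component given the new y
        if t >= burn_in:
            xs.append(x)
            ys.append(y)
    return xs, ys


def mean(values):
    return sum(values) / len(values)


def sample_var_and_corr(xs, ys):
    """Return the sample variance of xs and the sample correlation of (xs, ys)."""
    mx, my = mean(xs), mean(ys)
    vx = mean([(a - mx) ** 2 for a in xs])
    vy = mean([(b - my) ** 2 for b in ys])
    cov = mean([(a - mx) * (b - my) for a, b in zip(xs, ys)])
    return vx, cov / (vx * vy) ** 0.5


xs, ys = gibbs_two_component(200_000)
var_x, corr_xy = sample_var_and_corr(xs, ys)
print(f"Var(X) ~ {var_x:.2f}, Corr(X, Y) ~ {corr_xy:.2f}")
```

Swapping the two update lines inside the loop gives the alternative kernel $f(v; u)\, g(u; y)$ mentioned above.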

This can be easily generalized to higher dimensions. The resulting MCMC algorithm is known as the Gibbs sampler. It is useful in situations where we want to sample from a multivariate distribution that is specified indirectly: the distribution of interest is a distribution $\pi$ on $\mathbb{R}^{d}$ (for $d > 1$) and it is prescribed via its full conditional distributions.

Let $X = (X_1, X_2, \ldots, X_d)$ have distribution $\pi$ and let $x = (x_1, x_2, \ldots, x_d)$. Let

$$X_{-i} = (X_1, X_2, \ldots, X_{i-1}, X_{i+1}, \ldots, X_d),$$

$$x_{-i} = (x_1, x_2, \ldots, x_{i-1}, x_{i+1}, \ldots, x_d).$$

The conditional density of $X_i$ given $X_{-i} = x_{-i}$ is denoted by

$$f_i(x_i; x_{-i}).$$

As in the case $d = 2$, the collection $\{f_i : 1 \le i \le d\}$ completely determines $\pi$ if each $f_i$ is a strictly positive continuous function. It should be noted that if, instead of the full conditional densities $f_i$ (the conditional density of the $i$th component given all the rest), the conditional densities of the $i$th component given all the preceding components are available for $i = 2, 3, \ldots, d$, along with the density of the first component, then it is easy to simulate a sample: first we simulate $X_1$, then $X_2$, and so on till $X_d$.

If we only know $f_i$, $1 \le i \le d$, the Gibbs sampler is an algorithm to generate a sample from $\pi$. In $d$ dimensions, we can either update the $d$ components sequentially in some fixed order or, at each step, choose one component at random (drawing from the uniform distribution on $\{1, 2, \ldots, d\}$).

Let $x = (x_1, x_2, \ldots, x_d)$ be a point from the support of the joint distribution. Set $X^{0} = x$. Having simulated $X^{1}, X^{2}, \ldots, X^{n}$, do the following to obtain $X^{n+1}$:

(1) choose $i$ from the discrete uniform distribution on $\{1, 2, \ldots, d\}$;

(2) simulate $w$ from the conditional density $f_i(x_i; X^{n}_{-i})$;

(3) set $X^{n+1}_i = w$ and $X^{n+1}_j = X^{n}_j$ for $j \ne i$.

Note that at each step, only one component is updated. It can be shown that the Markov chain has $\pi$ as its unique invariant measure and hence, for large $n$, $X^{n}$ can be taken to be a sample from $\pi$.

For more details on MCMC, see Robert & Casella (1999) and references therein.
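Steps (1) to (3) of the random-scan Gibbs sampler can be sketched as follows. This is an illustration under stated assumptions rather than a reference implementation: the target is a hypothetical zero-mean Gaussian on $\mathbb{R}^3$ given by a precision matrix `Q` (chosen only because its full conditionals are normal and easy to write down), coordinates are indexed from 0 rather than 1, and all function names are invented for the example.

```python
import random


def gibbs_random_scan(x0, sample_full_conditional, n_steps, rng):
    """Random-scan Gibbs sampler: exactly one coordinate is refreshed per step."""
    x = list(x0)
    d = len(x)
    path = []
    for _ in range(n_steps):
        i = rng.randrange(d)                     # step (1): i uniform on {0, ..., d-1}
        w = sample_full_conditional(i, x, rng)   # step (2): w ~ f_i(. ; x_{-i})
        x[i] = w                                 # step (3): only coordinate i changes
        path.append(tuple(x))
    return path


# Illustrative target (assumption): zero-mean Gaussian with precision matrix Q.
Q = [[1.0, -0.4, 0.0],
     [-0.4, 1.0, -0.4],
     [0.0, -0.4, 1.0]]


def gaussian_full_conditional(i, x, rng):
    """Draw x_i given the rest: N(-(1/Q_ii) * sum_{j != i} Q_ij x_j, 1/Q_ii)."""
    mean = -sum(Q[i][j] * x[j] for j in range(len(x)) if j != i) / Q[i][i]
    return rng.gauss(mean, (1.0 / Q[i][i]) ** 0.5)


rng = random.Random(1)
path = gibbs_random_scan([0.0, 0.0, 0.0], gaussian_full_conditional, 300_000, rng)

kept = path[10_000:]                             # discard an initial stretch
m_mid = sum(p[1] for p in kept) / len(kept)
var_mid = sum((p[1] - m_mid) ** 2 for p in kept) / len(kept)
# For this Q the middle coordinate has variance 1/0.68 under the target.
print(f"sample variance of middle coordinate ~ {var_mid:.3f}")
```

Replacing `rng.randrange(d)` by a loop over `range(d)` gives the fixed-order (sequential) variant mentioned in the text.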

4. Perfect sampling

We have seen some algorithms to simulate Markov chains in order to estimate quantities associated with their limiting distribution. One of the difficulties in this is to decide when to stop, i.e. what sample size to use so as to achieve a close approximation.

Propp & Wilson (1996) proposed a refinement of MCMC yielding an algorithm that generates samples exactly from the stationary distribution.

This algorithm is called Perfect Sampling or Exact Sampling. The algorithm is based on the idea of coupling of Markov chains. Propp & Wilson (1996) called this algorithm Coupling from the past. It consists of simulating several copies of the Markov chain with different starting points, all of them coupled with each other.

Let us now focus on finite state Markov chains. Given a transition matrix $P(i, j)$, we can construct a function $\phi$ such that $X_0 = i_0$ and

$$X_{n+1} = \phi(X_n, U_n),$$

where $\{U_k\}$ is a sequence of independent simulations from uniform (0,1), yields a Markov chain with transition matrix $P$ and starting at $i_0$. This gives us an algorithm for simulating a Markov chain.

There is no unique choice of the function $\phi$ given the matrix $P$. For example, given one function $\phi$, one can define $\tilde{\phi}(x, u) = \phi(x, 1 - u)$ and then

$$Y_{n+1} = \tilde{\phi}(Y_n, U_n)$$

also yields a Markov chain with the same transition probabilities.

For the case of a finite state Markov chain, one choice is as follows. We can assume that the state space is $E = \{1, 2, \ldots, N\}$. Let us define

$$q(i, j) = \sum_{k=1}^{j} p(i, k),$$

where $p(i, k)$ denotes the $(i, k)$ entry of $P$. Define

$$
\phi(i, u) =
\begin{cases}
1 & \text{if } u \le q(i, 1), \\
2 & \text{if } q(i, 1) < u \le q(i, 2), \\
\ \vdots \\
j & \text{if } q(i, j-1) < u \le q(i, j), \\
\ \vdots \\
N & \text{if } q(i, N-1) < u.
\end{cases}
$$

Then it follows that for a uniform (0,1) random variable $U$, the distribution of $\phi(i, U)$ is $\{p(i, j), 1 \le j \le N\}$.
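The canonical update function built from the cumulative sums $q(i, j)$ can be sketched as below. The 3-state matrix `P` is a made-up example, and `make_phi` and `simulate_chain` are illustrative names; states are numbered 1 to N as in the text.

```python
import random
from itertools import accumulate


def make_phi(P):
    """Build phi(i, u) from the cumulative row sums q(i, j) of P (states 1..N)."""
    Q = [list(accumulate(row)) for row in P]     # Q[i-1][j-1] = q(i, j)
    N = len(P)

    def phi(i, u):
        row = Q[i - 1]
        for j in range(1, N):
            if u <= row[j - 1]:                  # q(i, j-1) < u <= q(i, j)
                return j
        return N                                 # q(i, N-1) < u

    return phi


def simulate_chain(phi, i0, uniforms):
    """Return X_0, X_1, ... with X_0 = i0 and X_{n+1} = phi(X_n, U_n)."""
    x = i0
    path = [x]
    for u in uniforms:
        x = phi(x, u)
        path.append(x)
    return path


# Hypothetical transition matrix used only for illustration.
P = [[0.5, 0.5, 0.0],
     [0.25, 0.5, 0.25],
     [0.0, 0.5, 0.5]]
phi = make_phi(P)

rng = random.Random(0)
uniforms = [rng.random() for _ in range(10)]
print(simulate_chain(phi, 1, uniforms))
```

The linear scan over `j` mirrors the case-by-case definition; for a large state space a binary search over each cumulative row would be the natural substitute.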

Now fix $i_0$ and let $\{U_n : n \ge 0\}$ be i.i.d. uniform (0,1). Let $\{X_n\}$ be defined by $X_0 = i_0$ and

$$X_{n+1} = \phi(X_n, U_n), \quad n \ge 0.$$

Then $\{X_n\}$ is a Markov chain with transition probability matrix $P$ and initial state $i_0$.

Let $\{U_n : n \ge 0\}$ be i.i.d. uniform (0,1), and let $\{V_n : n \ge 0\}$ also be i.i.d. uniform (0,1), independent of $\{U_n\}$. Let $i_0$ and $j_0$ be fixed.

Define $\{X_n : n \ge 0\}$, $\{Y_n : n \ge 0\}$ and $\{Z_n : n \ge 0\}$ as follows: $X_0 = i_0$, $Y_0 = j_0$, $Z_0 = j_0$ and

$$
\begin{aligned}
X_{n+1} &= \phi(X_n, U_n), \quad n \ge 0, \\
Y_{n+1} &= \phi(Y_n, V_n), \quad n \ge 0, \\
Z_{n+1} &= \phi(Z_n, U_n), \quad n \ge 0.
\end{aligned}
$$

All three processes are Markov chains with transition probability matrix $P$; $X$ starts at $i_0$ while $Y$ and $Z$ both start at $j_0$.

$\{X_n : n \ge 0\}$ and $\{Y_n : n \ge 0\}$ are independent chains, while $\{Y_n : n \ge 0\}$ and $\{Z_n : n \ge 0\}$ have the same distribution. Hence, if we were required to simulate a chain starting at $j_0$, we can use either $\{Y_n : n \ge 0\}$ or $\{Z_n : n \ge 0\}$.

Since the same sequence $\{U_n\}$ is used in generating the chains $X$ and $Z$, they are obviously correlated.

$X$ and $Z$ above are said to be coupled.
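A small experiment makes the coupling of $X$ and $Z$ concrete: because both chains are driven by the same uniforms through the same update function, once they occupy the same state they move together forever. The sketch below uses a hypothetical 3-state matrix and illustrative helper names; none of it comes from the article.

```python
import random
from itertools import accumulate


def make_phi(P):
    """Canonical update function phi(i, u) for states 1..N (cumulative-sum rule)."""
    Q = [list(accumulate(row)) for row in P]
    N = len(P)

    def phi(i, u):
        for j in range(1, N):
            if u <= Q[i - 1][j - 1]:
                return j
        return N

    return phi


def run(phi, start, uniforms):
    """Path started at `start` and driven by the given uniforms."""
    x, path = start, [start]
    for u in uniforms:
        x = phi(x, u)
        path.append(x)
    return path


P = [[0.5, 0.5, 0.0],        # hypothetical example matrix
     [0.25, 0.5, 0.25],
     [0.0, 0.5, 0.5]]
phi = make_phi(P)

rng = random.Random(42)
U = [rng.random() for _ in range(200)]
V = [rng.random() for _ in range(200)]   # independent of U

X = run(phi, 1, U)   # starts at i0 = 1, driven by U
Y = run(phi, 3, V)   # starts at j0 = 3, driven by V (independent of X)
Z = run(phi, 3, U)   # starts at j0 = 3, driven by U (coupled with X)

# First time the coupled chains agree; afterwards they coincide.
meet = next((n for n, (a, b) in enumerate(zip(X, Z)) if a == b), None)
print("X and Z first meet at n =", meet)
```

The independent pair $X$, $Y$ may also happen to agree at some time, but nothing forces them to stay together afterwards.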

Now let us fix a transition probability matrix $P$. Instead of starting the chain at $n = 0$, we can start the chain at $n = -10000$ or $n = -100000000$!

If the chain begins at $n = -\infty$ (in the infinite past; this can be made precise) with the stationary distribution $\pi$, then at each step its marginal distribution is $\pi$.

The Propp-Wilson algorithm: We will generate samples from uniform (0,1) and, for reasons to be made clear later, number them as $U_0, U_{-1}, \ldots, U_{-m}, \ldots$. Let $m = 1$.

(1) For each starting point $i \in E$, generate a chain $X^{i,m}_n$, $-m \le n \le 0$:

$$X^{i,m}_{-m} = i,$$

$$X^{i,m}_{n+1} = \phi(X^{i,m}_n, U_n), \quad -m \le n < 0.$$

(2) If $X^{i,m}_0 = X^{j,m}_0$ for all $i, j$ (i.e., if all the $N$ chains meet), then stop and return $W = X^{1,m}_0$. Otherwise, set $m = m + 1$ and go to (1).

Note that the chain $\{X^{i,m}_k : -m \le k \le 0\}$ uses the random variables $\{U_{-m}, \ldots, U_{-1}, U_0\}$. Thus as we go from $m$ to $m + 1$, only one new uniform (0,1) variable is generated and we reuse the $m$ samples generated earlier.

If the Propp-Wilson algorithm terminates with probability one, then the sample returned has exact distribution $\pi$.
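The algorithm just described can be sketched for a small chain as follows. This is a minimal illustration under assumptions: the 3-state matrix is hypothetical, the list `us` stores the uniforms so that `us[k]` plays the role of $U_{-(k+1)}$ (the recursion as written never needs $U_0$), and $m$ is increased by one each round exactly as in the text, which is simple but costs on the order of $m^2$ updates.

```python
import random
from itertools import accumulate


def make_phi(P):
    """Canonical update function phi(i, u) for states 1..N (cumulative-sum rule)."""
    Q = [list(accumulate(row)) for row in P]
    N = len(P)

    def phi(i, u):
        for j in range(1, N):
            if u <= Q[i - 1][j - 1]:
                return j
        return N

    return phi


def propp_wilson(P, rng):
    """Coupling from the past; returns (W, m) where m is the stopping value."""
    phi = make_phi(P)
    N = len(P)
    us = []                                 # us[k] stands for U_{-(k+1)}
    m = 0
    while True:
        m += 1
        us.append(rng.random())             # one new uniform; older ones are reused
        states = list(range(1, N + 1))      # X^{i,m}_{-m} = i for every i in E
        for n in range(-m, 0):              # n = -m, ..., -1
            u = us[-n - 1]                  # the uniform attached to time n
            states = [phi(s, u) for s in states]
        if len(set(states)) == 1:           # all N chains agree at time 0
            return states[0], m


P = [[0.5, 0.5, 0.0],                       # hypothetical example matrix
     [0.25, 0.5, 0.25],
     [0.0, 0.5, 0.5]]

rng = random.Random(2024)
draws = [propp_wilson(P, rng)[0] for _ in range(20_000)]
freq = [draws.count(s) / len(draws) for s in (1, 2, 3)]
print("empirical frequencies:", [round(f, 3) for f in freq])
```

For this particular matrix the stationary distribution is (0.25, 0.5, 0.25), as can be checked directly from $\pi P = \pi$, so the printed frequencies should be close to those values.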

To see this, suppose that for the given realization of $U_0, U_{-1}, \ldots, U_{-m}, \ldots$, the algorithm has terminated with $m = 17600$ and has returned a sample $W$.

Let us examine what would happen if we do not stop, but keep generating the $N$ chains. Take $m = 17601$. We will argue that $X^{1,17601}_0$ is still $W$. The chain $X^{1,17601}$ now starts at 1 ($X^{1,17601}_{-17601} = 1$) and goes to some state $i$, $X^{1,17601}_{-17600} = i$. From then on, it follows the trajectory of the chain $X^{i,17600}$, which was initialized with $m = 17600$ at the state $i$ (since both chains are in state $i$ at time $-17600$ and use the same set of uniform variables $U_{-17600}, \ldots, U_0$). Hence $X^{1,17601}_0 = W$.

The same argument can be repeated and we can conclude that if we ran the algorithm for any $m > 17600$, all the $N$ chains $X^{i,m}$ will meet at time 0 and the common value will be $W$. Thus,

$$\lim_{m \to \infty} X^{i,m}_0 = W \quad \forall i.$$

It can be shown that the distribution of $W$ is the stationary distribution $\pi$.

Generating $N$ chains in order to generate one sample seems tedious. Suppose that $P$ is such that

$$q(i, j) \ge q(i + 1, j) \quad \forall j,\ 1 \le j < N,\ \forall i.$$

This means that the conditional distribution of $X_{n+1}$, given $X_n = i + 1$, stochastically dominates the conditional distribution of $X_{n+1}$, given $X_n = i$. The chain is then called stochastically monotone.

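Under this condition, it can be checked that for the canonical choice of the function $\phi$ described earlier,

$$\phi(i, u) \le \phi(i + 1, u),$$

and hence that for $i \le k$,

$$\phi(i, u) \le \phi(k, u).$$

Thus, by induction it follows that if $i_0 < j_0$ and $X$, $Z$ are defined by $X_0 = i_0$, $Z_0 = j_0$ and

$$
\begin{aligned}
X_{n+1} &= \phi(X_n, U_n), \quad n \ge 0, \\
Z_{n+1} &= \phi(Z_n, U_n), \quad n \ge 0,
\end{aligned}
$$

then

$$X_n \le Z_n \quad \forall n \ge 1.$$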


If the Markov chain is stochastically monotone, then instead of generating all the chains and checking if they meet, we can generate only two chains,

$$X^{1,m}_n, \quad X^{N,m}_n,$$

since

$$X^{1,m}_n \le X^{i,m}_n \le X^{N,m}_n \quad \forall i.$$

(This is true because we are generating coupled chains via the special function $\phi$.) So if $X^{1,m}$ and $X^{N,m}$ meet, all the chains meet.

Thus even for a large state space, it is feasible to run the Propp-Wilson algorithm to generate an exact sample from the target stationary distribution. See Propp & Wilson (1996).
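The two-chain shortcut for stochastically monotone chains can be checked numerically. The sketch below is an assumption-laden illustration: `reflecting_walk` builds a hypothetical lazy random walk on {1, ..., N} that happens to be stochastically monotone, `is_stochastically_monotone` tests the condition $q(i, j) \ge q(i+1, j)$, and `cftp` runs coupling from the past from whatever set of starting states it is given, so that the full version (all N states) and the shortcut (states 1 and N only) can be compared on the same random numbers.

```python
import random
from itertools import accumulate


def make_phi(P):
    """Canonical update function phi(i, u) for states 1..N (cumulative-sum rule)."""
    Q = [list(accumulate(row)) for row in P]
    N = len(P)

    def phi(i, u):
        for j in range(1, N):
            if u <= Q[i - 1][j - 1]:
                return j
        return N

    return phi


def is_stochastically_monotone(P, tol=1e-12):
    """Check q(i, j) >= q(i+1, j) for all i and all j < N."""
    Q = [list(accumulate(row)) for row in P]
    N = len(P)
    return all(Q[i][j] >= Q[i + 1][j] - tol
               for i in range(N - 1) for j in range(N - 1))


def cftp(P, rng, starts):
    """Coupling from the past, tracking only the chains started at `starts`."""
    phi = make_phi(P)
    us, m = [], 0
    while True:
        m += 1
        us.append(rng.random())              # us[k] stands for U_{-(k+1)}
        states = list(starts)
        for n in range(-m, 0):
            u = us[-n - 1]
            states = [phi(s, u) for s in states]
        if len(set(states)) == 1:
            return states[0], m


def reflecting_walk(N, down=0.4, up=0.3):
    """Hypothetical lazy walk on {1..N}: step down, step up, or stay."""
    P = [[0.0] * N for _ in range(N)]
    for i in range(N):
        if i > 0:
            P[i][i - 1] = down
        if i < N - 1:
            P[i][i + 1] = up
        P[i][i] = 1.0 - sum(P[i])
    return P


N = 5
P = reflecting_walk(N)
print("monotone:", is_stochastically_monotone(P))

full = [cftp(P, random.Random(s), range(1, N + 1)) for s in range(50)]
two = [cftp(P, random.Random(s), (1, N)) for s in range(50)]
print("two-chain version agrees with full version:", full == two)
```

Tracking two chains instead of N changes only the cost per round, not the value returned, which is what the sandwich inequality above asserts.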

The natural ordering on the state space has no specific role. If there exists an ordering with respect to which the chain is stochastically monotone, we can generate chains starting at the minimum and the maximum and then stop when they meet.

A great deal of research on this theme is ongoing.

Appendix A

Markov chains on a general state space: For more details on the material in this appendix, including proofs, see Meyn & Tweedie (1993). Suppose $E$ is a locally compact separable metric space and suppose $P(x, A)$ is a probability transition function on $E$:

(i) for each $x$, $P(x, \cdot)$ is a probability measure on $E$ (equipped with its Borel sigma field $\mathcal{B}(E)$);

(ii) for each $A \in \mathcal{B}(E)$, $P(\cdot, A)$ is a Borel measurable function on $E$.

Example. $E = \mathbb{R}$ and for $x \in \mathbb{R}$, $A \in \mathcal{B}(\mathbb{R})$,

$$P(x, A) = \int_A \frac{1}{\sqrt{2\pi}} \exp\left\{-\tfrac{1}{2}(y - x)^2\right\} dy.$$

Let $\{X_n\}$ be the Markov chain with $P$ as the transition probability kernel. Let $P_x$ be the distribution of the chain when $X_0 = x$. The $n$-step transition probability function is defined by

$$P^{n}(x, A) = P_x(X_n \in A).$$

Also, for $x \in E$, $A \in \mathcal{B}(E)$, let

$$L(x, A) = P_x(X_n \in A \text{ for some } n \ge 1),$$

$$U(x, A) = \sum_{n=1}^{\infty} P_x(X_n \in A),$$

$$Q(x, A) = P_x(X_n \in A \text{ infinitely often}).$$

Let

$$\eta_A = \sum_{n=1}^{\infty} I_A(X_n).$$

Then

$$U(x, A) = E_x(\eta_A)$$

and

$$Q(x, A) = P_x(\eta_A = \infty).$$

$L(x, A)$ is the probability of reaching the set $A$ starting from $x$, $U(x, A)$ is the expected number of visits to the set $A$ starting from $x$, and $Q(x, A)$ is the probability of infinitely many visits to the set $A$ starting from $x$.

The Markov chain is said to be $\varphi$-irreducible if there exists a positive measure $\varphi$ on $(E, \mathcal{B}(E))$ such that for all $A \in \mathcal{B}(E)$ with $\varphi(A) > 0$,

$$L(x, A) > 0 \quad \forall x \in E.$$

$\varphi$ is said to be an irreducibility measure.

For a $\varphi$-irreducible Markov chain there exists a maximal irreducibility measure $\psi$ such that $\psi$ dominates every other irreducibility measure of the chain. The phrase "The Markov chain is $\varphi$-irreducible with maximal irreducibility measure $\psi$" is often written as "The Markov chain is $\psi$-irreducible".

Suppose $E$ is a finite state space and $P$ is a transition probability matrix such that there is one communicating class $F$ and the rest of the states (belonging to $E \cap F^{C}$) are transient. Then the chain is $\psi$-irreducible, with the maximal irreducibility measure $\psi$ being the uniform measure on $F$ (or any measure equivalent to it).

A $\psi$-irreducible Markov chain is said to be recurrent if for all $A \in \mathcal{B}(E)$ with $\psi(A) > 0$,

$$U(x, A) = \infty \quad \forall x \in E.$$

(Recall: $U(x, A)$ is the expected number of visits to the set $A$ starting from $x$.) A $\psi$-irreducible Markov chain is said to be transient if there exist $A_n \in \mathcal{B}(E)$, $n \ge 1$, such that $E = \bigcup_n A_n$, and constants $M_n < \infty$ with

$$U(x, A_n) \le M_n \quad \forall x \in A_n.$$

As in the countable state space case, we have a dichotomy: a $\psi$-irreducible Markov chain is either recurrent or transient.

A $\psi$-irreducible Markov chain is said to be Harris recurrent if for all $A \in \mathcal{B}(E)$ with $\psi(A) > 0$,

$$Q(x, A) = 1 \quad \forall x \in A.$$

Every recurrent chain is essentially Harris recurrent. We now make this precise.

A set $H \subseteq E$ is said to be absorbing if

$$P(x, H) = 1 \quad \forall x \in H.$$

A set $H \subseteq E$ is said to be full if

$$\psi(H^{C}) = 0.$$

If $H$ is a full absorbing set, we can restrict the chain to $H$, retaining all its properties.


Theorem A1. If a $\psi$-irreducible chain is recurrent, then it admits a full absorbing set $H$ such that, restricted to $H$, the chain is Harris recurrent.

A set $A$ is said to be an atom if $\psi(A) > 0$ and

$$P(x, B) = P(y, B) \quad \forall B \in \mathcal{B}(E),\ \forall x, y \in A.$$

In this case, we can lump all the states in $A$ together and treat the set $A$ as a singleton, retaining the Markov property for the reduced chain.

If the chain has an atom $A$, then every time the chain reaches $A$, the chain regenerates itself and thus we can mimic the usual arguments in the countable state space case for recurrence, ergodicity etc.

Athreya et al (1996) showed how to create a pseudo atom for a large class of chains and

use it to study the chain. We outline the underlying idea in a special case.

A set $C$ is said to be small if there exists $m \ge 1$ and a positive measure $\nu$ such that

$$P^{m}(x, A) \ge \nu(A) \quad \forall x \in C,\ \forall A \in \mathcal{B}(E). \tag{A1}$$

Let $C$ be a small set with $m$, $\nu$ satisfying (A1). Let $d$ be the g.c.d. of the set of integers $k$ such that there exists $\epsilon_k > 0$ with

$$P^{k}(x, A) \ge \epsilon_k\, \nu(A) \quad \forall x \in C,\ \forall A \in \mathcal{B}(E).$$

It can be shown that $d$ does not depend on the small set $C$ or the measure $\nu$. The chain is said to be aperiodic if $d = 1$.

It is said to be strongly aperiodic if (A1) holds for $m = 1$ and some $C$, $\nu$. Consider a strongly aperiodic $\psi$-irreducible chain with a small set $C$. Thus we have a probability measure $\nu$ such that, for some $\epsilon > 0$,

$$P(x, A) \ge \epsilon\, \nu(A) \quad \forall x \in C,\ \forall A \in \mathcal{B}(E). \tag{A2}$$

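The Athreya-Ney and Nummelin idea is as follows (Athreya & Ney 1978; Nummelin 1978):

Define a chain $(Y_n, \delta_n)$ taking values in $E \times \{0, 1\}$ as follows. Given $Y_k = x$: if $\delta_k = 1$, we draw a sample from $\nu$; if $Y_k \in C$, $\delta_k = 0$, we draw a sample from

$$\frac{P(x, \cdot) - \epsilon\, \nu(\cdot)}{1 - \epsilon},$$

and if $Y_k \notin C$, $\delta_k = 0$, we draw a sample from $P(x, \cdot)$; in each case we set the sample as $Y_{k+1}$. Further, if $Y_{k+1} \notin C$, we set $\delta_{k+1} = 0$, and if $Y_{k+1} \in C$, we set $\delta_{k+1} = 0$ with probability $1 - \epsilon$ and equal to 1 with probability $\epsilon$.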

It can be seen that the event $Y_k \notin C$, $\delta_k = 1$ will never occur. Clearly $E \times \{1\}$ is an atom of the split chain $(Y_n, \delta_n)$. A little calculation shows that the marginal chain $Y_n$ is also a Markov chain with transition probability function $P$. As a result, successive hitting times of $E \times \{1\}$ are regeneration times for the chain $Y_n$. Note that since $\psi(A) > 0$ and the chain is $\psi$-irreducible,

$$L(x, A) > 0, \quad \forall x \in E.$$
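The "little calculation" for the marginal chain can be written out as follows. The symbols are a reconstruction: $\delta_k$ denotes the second coordinate of the split chain, and $\epsilon$, $\nu$ are the constant and probability measure in (A2), with $\epsilon < 1$ assumed so that the residual kernel is defined. For $x \in C$ and $A \in \mathcal{B}(E)$, the coordinate $\delta_k$ equals 1 with probability $\epsilon$ and 0 with probability $1 - \epsilon$, so

$$
\begin{aligned}
P(Y_{k+1} \in A \mid Y_k = x)
&= \epsilon\, \nu(A) + (1 - \epsilon)\, \frac{P(x, A) - \epsilon\, \nu(A)}{1 - \epsilon} \\
&= \epsilon\, \nu(A) + P(x, A) - \epsilon\, \nu(A) \\
&= P(x, A).
\end{aligned}
$$

For $x \notin C$ the next state is drawn from $P(x, \cdot)$ directly. Condition (A2) is what makes $[P(x, \cdot) - \epsilon\, \nu(\cdot)]/(1 - \epsilon)$ a probability measure for $x \in C$: it is nonnegative because $P(x, A) \ge \epsilon\, \nu(A)$, and it has total mass $(1 - \epsilon)/(1 - \epsilon) = 1$ because $\nu$ is a probability measure.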

The Athreya-Ney-Nummelin idea also works if we have a set $C$ with $\psi(C) > 0$ and a positive measure $\nu$ such that

$$\sum_{m=1}^{\infty} \frac{1}{2^{m}}\, P^{m}(x, A) \ge \nu(A) \quad \forall x \in C,\ \forall A \in \mathcal{B}(E).$$


The Markov chain is said to be Feller (or weak Feller) if for all bounded continuous $f$, the function

$$h(x) = \int f(y)\, P(x, dy)$$

is continuous. The chain is said to be strong Feller if for all bounded measurable $f$, $h$ defined above is continuous.

A probability measure $\pi$ is said to be invariant or stationary if

$$\int P(x, A)\, \pi(dx) = \pi(A) \quad \forall A \in \mathcal{B}(E).$$

A bounded function $f$ on $E$ is said to be harmonic if

$$\int P(x, dy)\, f(y) = f(x).$$

If the Markov chain is $\psi$-irreducible aperiodic and if an invariant probability measure $\pi$ exists, then it is recurrent and every bounded harmonic function is constant a.s. Further, in this case the chain is Harris recurrent if and only if every bounded harmonic function is constant (everywhere).

Ergodic theorem: If the Markov chain is $\psi$-irreducible aperiodic and if an invariant probability measure $\pi$ exists, then for a bounded measurable function $g$ on $E$, for all $x$ outside a $\pi$-null set,

$$\frac{1}{N} \sum_{j=1}^{N} g(X_j) \to \int g\, d\pi, \quad P_x \text{ a.s.}$$

Further, if the chain is Harris recurrent, then the relation above holds for all $x$.
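The ergodic theorem can be illustrated numerically on a simple general-state-space chain. The example below is an assumption made for illustration and does not appear in the article: the kernel is $P(x, \cdot) = N(ax, 1)$ with $a = 0.5$, whose invariant distribution is $N(0, 1/(1 - a^2))$, and the bounded function is $g = \cos$, for which $\int g\, d\pi = \exp\{-\tfrac{1}{2} \cdot \tfrac{1}{1 - a^2}\}$. The averages are computed from three different starting points.

```python
import math
import random


def ergodic_average(g, x0, n_steps, rng, a=0.5):
    """Return (1/N) * sum_{j=1}^{N} g(X_j) for the chain X_{j} ~ N(a * X_{j-1}, 1)."""
    x = x0
    total = 0.0
    for _ in range(n_steps):
        x = rng.gauss(a * x, 1.0)    # one step of the kernel P(x, .) = N(a x, 1)
        total += g(x)
    return total / n_steps


a = 0.5
stationary_var = 1.0 / (1.0 - a ** 2)         # variance of the invariant law
target = math.exp(-0.5 * stationary_var)      # E[cos(X)] for X ~ N(0, stationary_var)

rng = random.Random(7)
averages = [ergodic_average(math.cos, x0, 200_000, rng) for x0 in (0.0, 25.0, -100.0)]
for x0, avg in zip((0.0, 25.0, -100.0), averages):
    print(f"start {x0:7.1f}: average {avg:.4f}  (limit {target:.4f})")
```

The starting point matters only through a short initial transient here, so all three averages settle near the same limit.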

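Suppose $E = \mathbb{R}^{d}$ or a connected subset of $\mathbb{R}^{d}$. Assume that there exists a continuous function $u$ on $E \times E$ and a probability measure $\mu$ on $(E, \mathcal{B}(E))$ such that

$$P(x, A) = \int_A u(x, y)\, \mu(dy) + (1 - \alpha(x))\, \delta_{\{x\}}(A),$$

for all $x \in E$, $A \in \mathcal{B}(E)$, with

$$\alpha(x) = \int u(x, y)\, \mu(dy).$$

Suppose that

$$u(x, y) > 0 \quad \forall x \in E,\ y \in E.$$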

Then the Markov chain $\{X_n\}$ is $\mu$-irreducible aperiodic. For such a chain, if there exists a positive function $f$ such that

$$\alpha(y)\, f(y) = \int u(x, y)\, f(x)\, \mu(dx),$$

then (up to normalization) $f$ is the density of the unique stationary invariant distribution, the chain is Harris positive recurrent and for any bounded $g$

$$\frac{1}{N} \sum_{i=1}^{N} g(X_i) \to \int g(x)\, f(x)\, \mu(dx).$$

The Markov chains appearing in the Metropolis-Hastings algorithm often satisfy these conditions.

References

Athreya K B, Ney P 1978 A new approach to the limit theory of recurrent Markov chains. Trans. Am. Math. Soc. 245: 493-501

Athreya K B, Doss H, Sethuraman J 1996 On the convergence of the Markov chain simulation method. Ann. Stat. 24: 69-100

Meyn S P, Tweedie R L 1993 Markov chains and stochastic stability (Berlin: Springer-Verlag)

Nummelin E 1978 A splitting technique for Harris recurrent Markov chains. Z. Wahrsch. Verw. Gebiete 43: 309-318

Propp J G, Wilson D B 1996 Exact sampling with coupled Markov chains and applications to statistical mechanics. Random Struct. Algorithms 9: 223-252

Robert C P, Casella G 1999 Monte Carlo statistical methods (Berlin: Springer-Verlag)

Roberts G O, Rosenthal J S 2004 General state space Markov chains and MCMC algorithms. Probab. Surv. 1: 20-71
