Lecture Notes On Ordinary Differential Equations: Annual Foundation School, IIT Kanpur, Dec.3-28, 2007


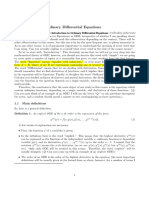

Lecture notes on Ordinary Differential Equations

Annual Foundation School, IIT Kanpur,

Dec.3-28, 2007.

by

S. Sivaji Ganesh

Dept. of Mathematics, IIT Bombay,

Mumbai-76.

e-mail: sivaji.ganesh@gmail.com

Plan of lectures

(1) First order equations: Variable-Separable Method.

(2) Existence and uniqueness of solutions to initial value problems.

(3) Continuation of solutions, Saturated solutions, and Maximal interval of existence.

(4) Continuous dependence on data, Global existence theorem.

(5) Linear systems, Fundamental pairs of solutions, Wronskian.

References

1. V.I. Arnold, Ordinary differential equations, translated by Silverman, (Prentice-Hall of India, 1998).

2. E.A. Coddington, Introduction to ordinary differential equations, (Prentice-Hall of India, 1974).

3. P. Hartman, Ordinary differential equations, (Wiley, 1964).

4. M.W. Hirsch, S. Smale and R.L. Devaney, Differential equations, dynamical systems & Chaos, (Academic Press, 2004).

5. L.C. Piccinini, G. Stampacchia and G. Vidossich, Ordinary differential equations in $\mathbb{R}^N$: problems and methods, (Springer-Verlag, 1984).

6. M.R.M. Rao, Ordinary differential equations: theory and applications, (Affiliated East-West, 1980).

7. D.A. Sanchez, Ordinary differential equations: A brief eclectic tour, (Mathematical Association of America, 2002).

8. I.I. Vrabie, Differential equations, (World Scientific, 2004).

9. W. Walter, Ordinary differential equations, (Springer-Verlag, 1998).


Lecture-1

First order equations: Basic concepts

We introduce the basic concepts of the theory of ordinary differential equations. A scalar ODE is given a geometric interpretation, through which we try to gain a geometric understanding of the solution structure of an ODE whose vector field has some invariance. This understanding is then used to solve equations of variable-separable type.

1.1 Basic concepts

We want to translate the feeling of what should be or what is an Ordinary Differential Equation (ODE) into mathematical terms. Defining an object like an ODE, for which we have some rough feeling, in English words is not really useful unless we know how to put it mathematically. As such we could start by saying "Let us look at the following differential equation ...". But since many books give this definition, let us also have one such. The reader is referred to Remark 1.2 for an example of an "ODE" that we really do not want to be an ODE.

Let us start with

Hypothesis

Let $\Omega \subseteq \mathbb{R}^{n+1}$ be a domain and $I \subseteq \mathbb{R}$ be an interval. Let $F : I \times \Omega \to \mathbb{R}$ be a function defined by $(x, z, z_1, \dots, z_n) \mapsto F(x, z, z_1, \dots, z_n)$ such that $F$ is not a constant function in the variable $z_n$.

With this notation and hypothesis on $F$ we define the basic object in our study, namely, an ordinary differential equation.

Definition 1.1 (ODE) Assume the above hypothesis. An ordinary differential equation of order $n$ is defined by the relation

$$F\left(x, y, y^{(1)}, y^{(2)}, \dots, y^{(n)}\right) = 0, \tag{1.1}$$

where $y^{(n)}$ stands for the $n$th derivative of the unknown function $x \mapsto y(x)$ with respect to the independent variable $x$.

Remark 1.2

1. As we are going to deal with only one independent variable throughout this course, we use the terminology "differential equation" in place of "ordinary differential equation" at times. We also use the abbreviation ODE, which stands for Ordinary Differential Equation(s). Wherever convenient, we use the prime notation $'$ to denote a derivative w.r.t. the independent variable $x$; for example, $y'$ is used to denote $y^{(1)}$.

2. Note that the highest order of derivative of the unknown function $y$ appearing in the relation (1.1) is called the order of the ordinary differential equation. Look at the carefully framed hypothesis above, which ensures the appearance of the $n$th derivative of $y$ in (1.1).

3. (Arnold) If we define an ODE as a relation between an unknown function and its derivatives, then the following equation will also be an ODE.

$$\frac{dy}{dx}(x) = y \circ y(x). \tag{1.2}$$

However, note that our Definition 1.1 does not admit (1.2) as an ODE. Also, we do not like to admit (1.2) as an ODE since it is a non-local relation due to the presence of the non-local operator "composition". On the other hand, recall that the derivative is a local operator in the sense that the derivative of a function at a point depends only on the values of the function in a neighbourhood of the point.


Having defined an ODE, we are interested in its solutions. This brings us to the question of existence of solutions and of finding all the solutions. We make clear what we mean by a solution of an ODE.

Definition 1.3 (Solution of an ODE) A real valued function $\phi$ is said to be a solution of ODE (1.1) if $\phi \in C^n(I)$ and

$$F\left(x, \phi(x), \phi^{(1)}(x), \phi^{(2)}(x), \dots, \phi^{(n)}(x)\right) = 0, \quad \forall\, x \in I. \tag{1.3}$$

Remark 1.4

(1) There is no guarantee that an equation such as (1.1) will have a solution.

(i) The equation defined by $F(x, y, y') = (y')^2 + y^2 + 1 = 0$ has no solution. Thus we cannot hope to have a general theory for equations of type (1.1). Note that $F$ is a smooth function of its arguments.

(ii) The equation

$$y' = \begin{cases} 1 & \text{if } x \ge 0 \\ -1 & \text{if } x < 0, \end{cases}$$

does not have a solution on any interval containing 0. This follows from Darboux's theorem about derivative functions.

(2) To convince ourselves that we should not expect every ODE to have a solution, let us recall the situation with other types of equations involving polynomials, systems of linear equations, and implicit functions. In each of these cases, existence of solutions was proved under some conditions. Some of those results also characterised equations that have solution(s); for example, for systems of linear equations the characterisation was in terms of the ranks of the matrix defining the linear system and the corresponding augmented matrix.

(3) In the context of ODE, there are two basic existence theorems that hold for equations in a special form called normal form. We state them in Section 3.1.

As observed in the last remark, we need to work with a less general class of ODE if we expect them to have solutions. One such class is called ODE in normal form and is defined below.

Hypothesis (H)

Let $\Omega \subseteq \mathbb{R}^n$ be a domain and $I \subseteq \mathbb{R}$ be an interval. Let $f : I \times \Omega \to \mathbb{R}$ be a continuous function defined by $(x, z, z_1, \dots, z_{n-1}) \mapsto f(x, z, z_1, \dots, z_{n-1})$.

Definition 1.5 (ODE in Normal form) Assume Hypothesis (H) on $f$. An ordinary differential equation of order $n$ is said to be in normal form if

$$y^{(n)} = f\left(x, y, y^{(1)}, y^{(2)}, \dots, y^{(n-1)}\right). \tag{1.4}$$

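Definition 1.6 (Solution of ODE in Normal form) A function $\phi \in C^n(I_0)$, where $I_0 \subseteq I$ is a subinterval, is called a solution of ODE (1.4) if for every $x \in I_0$, the $(n + 1)$-tuple $\left(x, \phi(x), \phi^{(1)}(x), \phi^{(2)}(x), \dots, \phi^{(n-1)}(x)\right) \in I \times \Omega$ and

$$\phi^{(n)}(x) = f\left(x, \phi(x), \phi^{(1)}(x), \phi^{(2)}(x), \dots, \phi^{(n-1)}(x)\right), \quad \forall\, x \in I_0. \tag{1.5}$$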

Remark 1.7


1. Observe that we want equation (1.5) to be satisfied "for all $x \in I_0$" instead of "for all $x \in I$". Compare this with the definition of solution given in Definition 1.3, which is more stringent. We modified the concept of solution, by not requiring that the equation be satisfied by the solution on the entire interval $I$, because of various examples of ODEs that we shall see later which have solutions only on a subinterval of $I$. We do not want to miss them! Note that the equation

$$y' = \begin{cases} 1 & \text{if } y \ge 0 \\ -1 & \text{if } y < 0, \end{cases}$$

does not admit a solution defined on $\mathbb{R}$. However it has solutions defined on the intervals $(0, \infty)$ and $(-\infty, 0)$. (Find them!)

2. Compare Definition 1.5 with Definition 1.1. See item 2 of Remark 1.2; observe that we did not need any special effort in formulating Hypothesis (H) to ensure that the $n$th derivative makes an appearance in the equation (1.4).

Convention From now onwards an ODE in normal form will simply be called ODE for brevity.

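Hypothesis ($H_S$)

Let $\Omega \subseteq \mathbb{R}^n$ be a domain and $I \subseteq \mathbb{R}$ be an interval. Let $\mathbf{f} : I \times \Omega \to \mathbb{R}^n$ be a continuous function defined by $(x, \mathbf{z}) \mapsto \mathbf{f}(x, \mathbf{z})$ where $\mathbf{z} = (z_1, \dots, z_n)$.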

Definition 1.8 (System of ODEs) Assume Hypothesis ($H_S$) on $\mathbf{f}$. A first order system of $n$ ordinary differential equations is given by

$$\mathbf{y}' = \mathbf{f}(x, \mathbf{y}). \tag{1.6}$$

The notion of solution for the above system is defined analogously to Definition 1.6. A result due to D'Alembert enables us to restrict a general study of any ODE in normal form to that of a first order system in the sense of the following lemma.

Lemma 1.9 (D'Alembert) An $n$th order ODE (1.4) is equivalent to a system of $n$ first order ODEs.

Proof:

Introducing a transformation $\mathbf{z} = (z_1, z_2, \dots, z_n) := \left(y, y^{(1)}, y^{(2)}, \dots, y^{(n-1)}\right)$, we see that $\mathbf{z}$ satisfies the first order system

$$\mathbf{z}' = \left(z_2, \dots, z_n, f(x, \mathbf{z})\right). \tag{1.7}$$

Equivalence of (1.4) and (1.7) means that starting from a solution of either of these ODEs we can produce a solution of the other. This is a simple calculation and is left as an exercise.

Note that the first order system for $\mathbf{z}$ consists of $n$ equations. This $n$ is the order of (1.4).
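The reduction in Lemma 1.9 can be sketched numerically. The following is a minimal illustration and not part of the original notes: the helper names `to_first_order_system` and `rk4_step`, the classical Runge-Kutta stepping scheme, and the test equation $y'' = -y$ are all assumptions chosen for the example.

```python
import math

def to_first_order_system(f):
    """Given f(x, z) for y^(n) = f(x, y, ..., y^(n-1)), return the right-hand
    side of the system z' = (z_2, ..., z_n, f(x, z)) from (1.7)."""
    def rhs(x, z):
        return list(z[1:]) + [f(x, z)]
    return rhs

def rk4_step(rhs, x, z, step):
    """One classical Runge-Kutta step for z' = rhs(x, z) (an assumed scheme)."""
    def shifted(base, slope, factor):
        return [b + factor * s for b, s in zip(base, slope)]
    k1 = rhs(x, z)
    k2 = rhs(x + step / 2, shifted(z, k1, step / 2))
    k3 = rhs(x + step / 2, shifted(z, k2, step / 2))
    k4 = rhs(x + step, shifted(z, k3, step))
    return [zi + step / 6 * (a + 2 * b + 2 * c + d)
            for zi, a, b, c, d in zip(z, k1, k2, k3, k4)]

# Hypothetical example: y'' = -y, y(0) = 0, y'(0) = 1, whose solution is sin x.
rhs = to_first_order_system(lambda x, z: -z[0])
x, z, step = 0.0, [0.0, 1.0], 0.01
for _ in range(100):
    z = rk4_step(rhs, x, z, step)
    x += step
print(z[0], math.sin(1.0))  # first component approximates y(1)
```

The first component of `z` tracks $y$ and the second tracks $y'$, which is exactly the correspondence $z_1 = y$, $z_2 = y^{(1)}$ used in the proof.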

Exercise 1.10 Define higher order systems of ordinary differential equations and define the corresponding notion of solution. Reduce the higher order system to a first order system.

1.2 Geometric interpretation of a first order ODE and its solution

We now define some terminology that we use while giving a geometric meaning of an ODE given by

$$\frac{dy}{dx} = f(x, y). \tag{1.8}$$

We recall that $f$ is defined on a domain $D$ in $\mathbb{R}^2$. In fact, $D = I \times J$ where $I$, $J$ are sub-intervals of $\mathbb{R}$.


Definition 1.11 (Line element) A line element associated to a point $(x, y) \in D$ is a line passing through the point $(x, y)$ with slope $p$. We use the triple $(x, y, p)$ to denote a line element.

Definition 1.12 (Direction field / Vector field) A direction field (sometimes called vector field) associated to the ODE (1.8) is the collection of all line elements in the domain $D$ where the line element associated to the point $(x, y)$ has slope equal to $f(x, y)$. In other words, a direction field is the collection

$$\left\{ (x, y, f(x, y)) : (x, y) \in D \right\}.$$

Remark 1.13 (Interpretations)

1. The ODE (1.8) can be thought of as prescribing line elements in the domain $D$.

2. Solving an ODE can be geometrically interpreted as finding curves in $D$ that fit the direction field prescribed by the ODE. A solution (say $\phi$) of the ODE passing through a point $(x_0, y_0) \in D$ (i.e., $\phi(x_0) = y_0$) must satisfy $\phi'(x_0) = f(x_0, y_0)$. In other words,

$$(x_0, y_0, \phi'(x_0)) = (x_0, y_0, f(x_0, y_0)).$$

3. That is, the ODE prescribes the slope of the tangent to the graph of any solution (which is equal to $\phi'(x_0)$). This can be seen by looking at the graph of a solution.

4. Drawing the direction field corresponding to a given ODE and fitting some curve to it will end up in finding a solution, at least graphically. However, note that it may be possible to fit more than one curve passing through some points in $D$, which is the case where there is more than one solution to the ODE around those points. Thus this activity (of drawing and fitting curves) helps to get a rough idea of the nature of solutions of the ODE.

5. A big challenge is to draw the direction field for a given ODE. One good starting point is to identify all the points in the domain $D$ at which the line element has the same slope; it is then easy to draw all these line elements. Such sets of points are called isoclines; the word means "leaning equally".
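The notions of direction field and isocline from the remark above can be made concrete on a finite grid. This is only a sketch: the function names `direction_field` and `isocline_points` and the grid are hypothetical choices, and the right-hand side $f(x, y) = x$ is borrowed from Exercise 1.14.

```python
def direction_field(f, xs, ys):
    """Return the line elements (x, y, f(x, y)) on the grid xs x ys."""
    return [(x, y, f(x, y)) for x in xs for y in ys]

def isocline_points(field, slope, tol=1e-9):
    """Grid points whose line element has (numerically) the given slope."""
    return [(x, y) for x, y, p in field if abs(p - slope) <= tol]

xs = [i * 0.5 for i in range(-4, 5)]   # -2.0, -1.5, ..., 2.0
ys = [j * 0.5 for j in range(-4, 5)]
field = direction_field(lambda x, y: x, xs, ys)   # f(x, y) = x

# For f(x, y) = x the isocline of slope 1 is the vertical line x = 1.
print(isocline_points(field, 1.0))
```

Each triple in `field` is a line element in the sense of Definition 1.11; plotting short segments with these slopes would give the picture one draws by hand.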

Exercise 1.14 Draw the direction field prescribed by ODEs where $f(x, y) = 1$, $f(x, y) = x$, $f(x, y) = y$, $f(x, y) = y^2$, $f(x, y) = 3y^{2/3}$ and fit solution curves to them.

Finding a solution of an ODE passing through a point in $D$ is known as an Initial Value Problem. We address this in the next section.

1.3 Initial Value Problems

We consider an Initial Value Problem (also called Cauchy problem) for an ODE (1.4). It consists of solving (1.4) subject to what are called initial conditions. The two basic theorems we are going to present concern an IVP for a first order ODE.

Definition 1.15 (Initial Value Problem for an ODE) Let $x_0 \in I$ and $(y_0, y_1, \dots, y_{n-1}) \in \Omega$ be given. An Initial Value Problem (IVP) for an ODE in normal form is a relation satisfied by an unknown function $y$ given by

$$y^{(n)} = f\left(x, y, y^{(1)}, y^{(2)}, \dots, y^{(n-1)}\right), \quad y^{(i)}(x_0) = y_i, \quad i = 0, \dots, (n-1). \tag{1.9}$$

Definition 1.16 (Solution of an IVP for an ODE) A solution $\phi$ of ODE (1.4) (see Definition 1.6) is said to be a solution of the IVP if $x_0 \in I_0$ and

$$\phi^{(i)}(x_0) = y_i, \quad i = 0, \dots, (n-1).$$

This solution is denoted by $\phi(\,\cdot\,; x_0, y_0, y_1, \dots, y_{n-1})$ to remember the IVP solved by $\phi$.


Definition 1.17 (Local and Global solutions of an IVP) Let $\phi$ be a solution of an IVP for ODE (1.4) according to Definition 1.16.

1. If $I_0 \subset I$, then $\phi$ is called a local solution of the IVP.

2. If $I_0 = I$, then $\phi$ is called a global solution of the IVP.

Remark 1.18

1. Note that in all our definitions of solutions, a solution always comes with its domain of definition. Sometimes it may be possible to extend the given solution to a bigger domain. We address this issue in the next lecture.

2. When $n = 1$, geometrically speaking, the graph of a solution of an IVP is a curve passing through the point $(x_0, y_0)$.

Exercise 1.19 Define an IVP for a first order system. Reduce an IVP for an $n$th order ODE to that of an equivalent first order system.


Lecture-2

First order equations: Variable-Separable method

Hypothesis ($H_{VS}$)

Let $I \subseteq \mathbb{R}$ and $J \subseteq \mathbb{R}$ be intervals. Let $g : I \to \mathbb{R}$ and $h : J \to \mathbb{R} \setminus \{0\}$ be continuous functions.

If domains of functions are not specified, then they are assumed to be their natural domains.

We consider a first order ODE in variable-separable form given by

$$\frac{dy}{dx} = g(x)\,h(y). \tag{2.1}$$

We gain an understanding of the equation (2.1) and its solution in three simple steps.

2.1 Direction field independent of y

The equation (2.1) takes the form

$$\frac{dy}{dx} = g(x). \tag{2.2}$$

Geometric understanding: Observe that the slope of the line element associated to a point depends only on its $x$ coordinate. Thus the direction field is defined on the strip $I \times \mathbb{R}$ and is invariant under translation in the direction of the $Y$ axis. Therefore it is enough to draw line elements for points in the set $I \times \{0\}$. This suggests that if we know a solution curve then its translates in the $Y$ axis direction give all solution curves.

Let $x_0 \in I$ be fixed and let us define a primitive of $g$ on $I$ by

$$G(x) := \int_{x_0}^{x} g(s)\, ds, \tag{2.3}$$

with the understanding that, if $x < x_0$, we define $G(x) := -\int_{x}^{x_0} g(s)\, ds$.

The function $G$ is a solution of ODE (2.2) by the fundamental theorem of integral calculus, since $G'(x) = g(x)$ on $I$. Moreover, $G(x_0) = 0$.

A solution curve passing through an arbitrary point $(\xi, \eta) \in I \times \mathbb{R}$ can be obtained from $G$ and is given by

$$y(x; \xi, \eta) = G(x) + (\eta - G(\xi)), \tag{2.4}$$

which is of the form $G(x) + C$ where $C = \eta - G(\xi)$. Thus all solutions of ODE (2.2) are determined. Moreover, all the solutions are global.
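Formula (2.4) can be tried out numerically when no closed form for $G$ is at hand. The sketch below is an illustration under stated assumptions: the composite Simpson rule, the helper names `simpson` and `solution_through`, and the point $(\xi, \eta) = (1, 2)$ are not from the notes; the function $g$ is item (i) of Example 2.1.

```python
import math

def simpson(func, a, b, n=200):
    """Composite Simpson rule (n even) for the oriented integral from a to b.
    For b < a the result is negative of the integral over [b, a], matching
    the convention stated after (2.3)."""
    if a == b:
        return 0.0
    step = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * step)
    return total * step / 3

def solution_through(g, x0, xi, eta):
    """Return x -> G(x) + (eta - G(xi)) as in (2.4), with G as in (2.3)."""
    G = lambda x: simpson(g, x0, x)
    return lambda x: G(x) + (eta - G(xi))

g = lambda x: x**2 + math.sin(x)
y = solution_through(g, x0=0.0, xi=1.0, eta=2.0)

# Closed form for comparison: x^3/3 - cos x + C with C fixed by y(1) = 2.
exact = lambda x: x**3 / 3 - math.cos(x) + (2.0 - 1.0 / 3 + math.cos(1.0))
print(y(1.0), y(-0.5), exact(-0.5))
```

Changing `x0` changes $G$ by a constant but leaves the returned solution unchanged, which reflects the remark that all solutions differ by translation along the $Y$ axis.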

Example 2.1 Solve the ODE (2.2) with $g$ given by (i) $x^2 + \sin x$ (ii) $x\sqrt{1 - x^2}$ (iii) $\sin x^2$ (iv) Have you run out of patience? An explicit formula for the solution does not mean having it explicitly!


2.2 Direction field independent of x

The equation (2.1) takes the form

$$\frac{dy}{dx} = h(y). \tag{2.5}$$

Equations of this type are called autonomous since the RHS of the equation does not depend on the independent variable $x$.

Geometric understanding: Observe that the slope of the line element associated to a point depends only on its $y$ coordinate. Thus the direction field is defined on the strip $\mathbb{R} \times J$ and is invariant under translation in the direction of the $X$ axis. Therefore it is enough to draw line elements for points in the set $\{0\} \times J$. This suggests that if we know a solution curve then its translates in the $X$ axis direction give solution curves. Note that this is one of the main features of autonomous equations.

Exercise 2.2 Verify that $x^3$ and $(x - c)^3$, with $c \in \mathbb{R}$ arbitrary, are solutions of the ODE $y' = 3y^{2/3}$. Verify that $\phi(x) \equiv 0$ is also a solution. However, this cannot be obtained by translating any of the other solutions. Does this contradict the observation preceding this exercise?

Exercise 2.3 Formulate the geometric observation "if we know a solution curve then its translates in the X axis direction give solution curves" as a mathematical statement and prove it. Compare and contrast with a similar statement made in Step 1.

This case is considerably different from that of Step 1, where solutions are defined on the entire interval $I$. To anticipate the difficulties awaiting us in the present case, it is advisable to solve the following exercise.

Exercise 2.4 Verify that $\phi(x) = \frac{1}{x + c}$ are solutions of $y' = -y^2$ on certain intervals. Graph the solutions for $c = 0, 1, 2$. Verify that $\phi(x) \equiv 0$ is also a solution on $\mathbb{R}$.

Note from the last exercise that the equation has a constant solution (also called rest/equilibrium point, since it does not move!) defined globally on $\mathbb{R}$, and also non-constant solutions defined only on a subinterval of the real line that varies with $c$. There is no way we can get a non-zero solution from the zero solution by translation. Thus it is a good time to review Exercise 2.3 and find out if there is any contradiction.
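A quick numerical sanity check of the functions in Exercise 2.4 may help before attempting the verification by hand. The sketch assumes a central-difference approximation of the derivative; the names `phi` and `residual` and the sample points are hypothetical choices.

```python
def phi(x, c):
    """Candidate solution 1 / (x + c), undefined at x = -c."""
    return 1.0 / (x + c)

def residual(x, c, eps=1e-5):
    """Central-difference estimate of phi'(x) + phi(x)^2, which should vanish
    if phi solves y' = -y^2."""
    derivative = (phi(x + eps, c) - phi(x - eps, c)) / (2 * eps)
    return derivative + phi(x, c) ** 2

for c in (0, 1, 2):
    # One sample point on each side of the singularity x = -c.
    print(c, residual(1.0, c), residual(-c - 1.0, c))
```

The residual is small on both sides of $x = -c$, but no single solution crosses that point, which is why the interval of definition varies with $c$.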

Observation: If $\xi \in J$ satisfies $h(\xi) = 0$, then $\xi$ is a rest point and $\phi(x) = \xi$ is a solution of (2.5) for $x \in \mathbb{R}$.

Since the function $h$ is continuous and, by Hypothesis ($H_{VS}$), never vanishes on the interval $J$, it does not change sign. Therefore we may assume, without loss of generality (WLOG), that $h(y) > 0$ for $y \in J$. There is no loss of generality because the other case, namely $h(y) < 0$ for $y \in J$, can be disposed of in a similar manner.

Formally speaking, ODE (2.5) may be written as

$$\frac{dx}{dy} = \frac{1}{h(y)}.$$

Thus all the conclusions of Step 1 can be translated to the current problem. But the inversion of the roles of $x$ and $y$ needs to be justified!

Let $y_0 \in J$ be fixed and let us define a primitive of $1/h$ on $J$ by

$$H(y) := \int_{y_0}^{y} \frac{1}{h(s)}\, ds. \tag{2.6}$$

We record some properties of $H$ below.

1. The function $H : J \to \mathbb{R}$ is differentiable (this follows from the fundamental theorem of integral calculus).


2. Since $h > 0$ on $J$, the function $H$ is strictly monotonically increasing. Consequently, $H$ is one-one.

3. The function $H : J \to H(J)$ is invertible. By definition, $H^{-1} : H(J) \to J$ is onto, and is also differentiable.

4. A finer observation yields that $H(J)$ is an interval containing 0.

We write

$$H \circ H^{-1}(x) = x.$$

A particular solution

The function $H$ gives rise to an implicit expression for a solution $y$ of ODE (2.5) given by

$$H(y) = x. \tag{2.7}$$

This assertion follows from the simple calculation

$$\frac{d}{dx} H(y) = \frac{dH}{dy}\,\frac{dy}{dx} = \frac{1}{h(y)}\,\frac{dy}{dx} = 1.$$

Note that $H(y_0) = 0$ and hence $x = 0$ when $y = y_0$. This means that the graph of the solution $y(x) := H^{-1}(x)$ passes through the point $(0, y_0)$. Note that this solution is defined on the interval $H(J)$, which may not be equal to $\mathbb{R}$.

Exercise 2.5 When will the equality $H(J) = \mathbb{R}$ hold? Think about it. Try to find some examples of $h$ for which the equality $H(J) = \mathbb{R}$ holds.

Some more solutions

Note that the function $H^{-1}(x - c)$ is also a solution on the interval $c + H(J)$ (verify). Moreover, these are all the solutions of ODE (2.5).

All solutions

Let $z$ be any solution of ODE (2.5) defined on some interval $I_z$. Then we have

$$\frac{dz}{dx} = z'(x) = h(z(x)).$$

Let $x_0 \in I_z$ and let $z(x_0) = z_0$. Since $h \neq 0$ on $J$, dividing by $h(z(x))$ and integrating both sides from $x_0$ to $x$ yields

$$\int_{x_0}^{x} \frac{z'(x)}{h(z(x))}\, dx = \int_{x_0}^{x} 1\, dx,$$

which reduces to

$$\int_{x_0}^{x} \frac{z'(x)}{h(z(x))}\, dx = x - x_0.$$

The last equality, in terms of $H$ defined by equation (2.6), reduces to

$$H(z) - \int_{y_0}^{z_0} \frac{1}{h(s)}\, ds = x - x_0, \quad \text{i.e.,} \quad H(z) - H(z_0) = x - x_0. \tag{2.8}$$

From the equation (2.8), we conclude that $x - x_0 + H(z_0) \in H(J)$. Thus, for $x$ belonging to the interval $x_0 - H(z_0) + H(J)$, we can write the solution as

$$z(x) = H^{-1}\left(x - x_0 + H(z_0)\right). \tag{2.9}$$

Note that solutions of ODE (2.5) are not global in general.
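Formula (2.9) can be evaluated numerically even when $H^{-1}$ has no closed form. The sketch below is illustrative only: it assumes $h > 0$, computes $H$ from (2.6) with a composite Simpson rule, and inverts it by bisection, which is legitimate because $H$ is strictly increasing. The names `simpson` and `solve_autonomous` and the bracket `[lo, hi]` are hypothetical; the test case $h(y) = 1 + y^2$ is item (iii) of Exercise 2.9.

```python
import math

def simpson(func, a, b, n=200):
    """Composite Simpson rule (n even) for the oriented integral from a to b."""
    if a == b:
        return 0.0
    step = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * step)
    return total * step / 3

def solve_autonomous(h, y0, x0, z0, x, lo, hi, tol=1e-12):
    """Evaluate z(x) = H^{-1}(x - x0 + H(z0)) from (2.9).

    The caller must choose [lo, hi] inside J so that the target value
    x - x0 + H(z0) lies in H([lo, hi]); otherwise x is outside the interval
    on which the solution is defined.
    """
    H = lambda y: simpson(lambda s: 1.0 / h(s), y0, y)
    target = x - x0 + H(z0)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if H(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# With y0 = 0 we have H(y) = arctan(y), so z(x) = tan(x - x0 + arctan(z0)).
h = lambda y: 1.0 + y * y
z = solve_autonomous(h, y0=0.0, x0=0.0, z0=1.0, x=0.3, lo=-10.0, hi=10.0)
print(z, math.tan(0.3 + math.atan(1.0)))
```

In this example $H(J) = (-\pi/2, \pi/2)$, so the solution through $(0, 1)$ lives only on $(-3\pi/4, \pi/4)$; this is an instance of the remark that solutions of (2.5) need not be global.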


Exercise 2.6 Give a precise statement of what we proved about solutions of ODE (2.5).

Exercise 2.7 Prove that a solution curve passes through every point of the domain $\mathbb{R} \times J$.

Exercise 2.8 In view of what we have proved under the assumption of non-vanishing of $h$, revisit Exercise 2.4, Exercise 2.3 and comment.

Exercise 2.9 Solve the ODE (2.5) with $h$ given by (i) $ky$ (ii) $\sqrt{|y|}$ (iii) $1 + y^2$ (iv) $3y^{2/3}$ (v) $y^4 + 4$ (vi) $\frac{1}{y^4 + 4}$

2.3 General case

We justify the formal calculation used to obtain solutions of ODE (2.1), which we recall here for convenience.

$$\frac{dy}{dx} = g(x)\,h(y). \tag{2.10}$$

The formal calculation to solve (2.10) is

$$\int \frac{dy}{h(y)} = \int g(x)\, dx + C. \tag{2.11}$$

We put this formal calculation on a rigorous footing. We introduce two auxiliary ODEs:

$$\frac{dy}{du} = h(y) \quad \text{and} \quad \frac{du}{dx} = g(x). \tag{2.12}$$

Notation: We refer to the first and second equations of (2.12) as equations (2.12a) and (2.12b) respectively. We did all the hard work in Steps 1-2 and we can easily deduce results concerning ODE (2.10) using the auxiliary equations (2.12) introduced above.

Claim: Let $(x_1, y_1) \in I \times J$ be an arbitrary point. A solution $y$ satisfying $y(x_1) = y_1$ is given by

$$y(x) = H^{-1}\left(G(x) - G(x_1) + H(y_1)\right). \tag{2.13}$$

Proof:

Recalling from Step 2 that the function $H^{-1}$ is onto $J$, there exists a unique $u_1$ such that $H^{-1}(u_1) = y_1$. Now the solution of (2.12a) satisfying $y(u_1) = y_1$ is given by (see formula (2.9))

$$y(u) = H^{-1}\left(u - u_1 + H(y_1)\right) \tag{2.14}$$

and is defined on the interval $u_1 - H(y_1) + H(J)$.

The solution of (2.12b), defined on $I$, satisfying $u(x_1) = u_1$ is given by (see formula (2.4))

$$u(x; x_1, u_1) = G(x) + (u_1 - G(x_1)). \tag{2.15}$$

Combining the two formulae (2.14)-(2.15), we get (2.13). This formula makes sense for $x \in I$ such that $G(x) + (u_1 - G(x_1)) \in u_1 - H(y_1) + H(J)$. That is, $G(x) - G(x_1) \in -H(y_1) + H(J)$.

Thus a general solution of (2.1) is given by

$$y(x) = H^{-1}\left(G(x) - c\right). \tag{2.16}$$

This ends the analysis of variable-separable equations modulo the following exercise.

Exercise 2.10 Prove that the set $\left\{ x \in I : G(x) - G(x_1) \in -H(y_1) + H(J) \right\}$ is non-empty and contains a non-trivial interval.

Exercise 2.11 Prove: Initial value problems for variable-separable equations under Hypothesis ($H_{VS}$) have unique solutions. Why does it not contradict observations made regarding solutions of $y' = 3y^{2/3}$ satisfying $y(0) = 0$?
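As an illustration of formula (2.13), here is a short worked example. The particular choice $g(x) = 2x$, $h(y) = 1 + y^2$, with base points $x_0 = 0$ and $y_0 = 0$, is an assumption made for the example and does not come from the notes.

```latex
% Assumed data: g(x) = 2x on I = \mathbb{R}, h(y) = 1 + y^2 on J = \mathbb{R}, x_0 = 0, y_0 = 0.
\begin{align*}
G(x) &= \int_0^x 2s \, ds = x^2, \qquad
H(y) = \int_0^y \frac{ds}{1+s^2} = \arctan y, \qquad
H(J) = \left(-\tfrac{\pi}{2}, \tfrac{\pi}{2}\right), \\
y(x) &= H^{-1}\bigl(G(x) - G(x_1) + H(y_1)\bigr)
      = \tan\bigl(x^2 - x_1^2 + \arctan y_1\bigr), \\
y'(x) &= 2x \sec^2\bigl(x^2 - x_1^2 + \arctan y_1\bigr)
       = 2x \bigl(1 + y(x)^2\bigr) = g(x)\,h(y(x)).
\end{align*}
```

The formula is meaningful exactly when $x^2 - x_1^2 + \arctan y_1 \in (-\pi/2, \pi/2)$, which is the condition $G(x) - G(x_1) \in -H(y_1) + H(J)$ from the proof of the Claim. Although $g$ and $h$ are defined on all of $\mathbb{R}$, the solution is therefore only local.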


Lecture-3

First order equations: Local existence & Uniqueness theory

We discuss the twin issues of existence and uniqueness for initial value problems corresponding to first order systems of ODE. This discussion includes the case of scalar first order ODE and also general scalar ODE of higher order in view of Exercise 1.19. However, certain properties like boundedness of solutions, which are used in the discussion of extensibility of local solutions to an IVP, do not carry over under the equivalence of Exercise 1.19.

We complement the theory with examples from the class of first order scalar equations. We recall the basic setting of IVP for systems of ODE, which is in force throughout our discussion. Later on, only the additional hypotheses are mentioned if and when they are made.

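Hypothesis ($H_{IVPS}$)

Let $\Omega \subseteq \mathbb{R}^n$ be a domain and $I \subseteq \mathbb{R}$ be an interval. Let $\mathbf{f} : I \times \Omega \to \mathbb{R}^n$ be a continuous function defined by $(x, \mathbf{y}) \mapsto \mathbf{f}(x, \mathbf{y})$ where $\mathbf{y} = (y_1, \dots, y_n)$. Let $(x_0, \mathbf{y}_0) \in I \times \Omega$ be an arbitrary point.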

Definition 3.1 Assume Hypothesis ($H_{IVPS}$) on $\mathbf{f}$. An IVP for a first order system of $n$ ordinary differential equations is given by

$$\mathbf{y}' = \mathbf{f}(x, \mathbf{y}), \quad \mathbf{y}(x_0) = \mathbf{y}_0. \tag{3.1}$$

As we saw before (Exercise 2.4), we do not expect a solution to be defined globally on the entire interval $I$. Recall Remark 1.7 in this context. This motivates the following definition of solution of an IVP for systems of ODE.

Definition 3.2 (Solution of an IVP for systems of ODE) An $n$-tuple of functions $\mathbf{u} = (u_1, \dots, u_n) \in C^1(I_0)$, where $I_0 \subseteq I$ is a subinterval containing the point $x_0 \in I$, is called a solution of IVP (3.1) if for every $x \in I_0$, the $(n + 1)$-tuple $(x, u_1(x), u_2(x), \dots, u_n(x)) \in I \times \Omega$,

$$\mathbf{u}'(x) = \mathbf{f}(x, \mathbf{u}(x)), \quad \forall\, x \in I_0 \quad \text{and} \quad \mathbf{u}(x_0) = \mathbf{y}_0. \tag{3.2}$$

We denote this solution by $\mathbf{u} = \mathbf{u}(x; \mathbf{f}, x_0, \mathbf{y}_0)$ to remind us that the solution depends on $\mathbf{f}$, $\mathbf{y}_0$ and that $\mathbf{u}(x_0) = \mathbf{y}_0$.

The boldface notation is used to denote vector quantities and we drop boldface for scalar quantities.

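Remark 3.3 The IVP (3.1) involves an interval $I$, a domain $\Omega$, a continuous function $\mathbf{f}$ on $I \times \Omega$, $x_0 \in I$, and $\mathbf{y}_0 \in \Omega$. Given $I$, $x_0 \in I$ and $\Omega$, we may pose many IVPs by varying the data $(\mathbf{f}, \mathbf{y}_0)$ belonging to the set $C(I \times \Omega) \times \Omega$.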

Basic questions

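There are three basic questions associated to initial value problems. They are

(i) Given $(\mathbf{f}, \mathbf{y}_0) \in C(I \times \Omega) \times \Omega$, does the IVP (3.1) admit at least one solution?

(ii) Assuming that for a given $(\mathbf{f}, \mathbf{y}_0) \in C(I \times \Omega) \times \Omega$, the IVP (3.1) has a solution, is the solution unique?

(iii) Assuming that for each $(\mathbf{f}, \mathbf{y}_0) \in C(I \times \Omega) \times \Omega$ the IVP admits a unique solution $\mathbf{y}(x; \mathbf{f}, x_0, \mathbf{y}_0)$ on a common interval $I_0$ containing $x_0$, what is the nature of the following function?

$$\mathcal{S} : C(I \times \Omega) \times \Omega \to C^1(I_0) \tag{3.3}$$

defined by

$$(\mathbf{f}, \mathbf{y}_0) \mapsto \mathbf{y}(x; \mathbf{f}, x_0, \mathbf{y}_0). \tag{3.4}$$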


We address questions (i), (ii) and (iii) in Sections 3.1, 3.2 and 4.3 respectively. Note that we do not require a solution to the IVP to be defined on the entire interval $I$ but only on a subinterval containing the point $x_0$ at which the initial condition is prescribed. Thus it is interesting to find out if every solution can be extended to $I$ and the possible obstructions for such an extension. We discuss this issue in the next lecture.

3.1 Existence of local solutions

There are two important results concerning existence of solutions for IVP (3.1). One of them is proved for any function $\mathbf{f}$ satisfying Hypothesis ($H_{IVPS}$) and the second assumes Lipschitz continuity of the function $\mathbf{f}$ in addition. As we shall see in Section 3.2, this extra assumption on $\mathbf{f}$ gives rise not only to another proof of existence but also to uniqueness of solutions.

Both proofs are based on the equivalence of IVP (3.1) and an integral equation.

Lemma 3.4 A continuous function $\mathbf{y}$ defined on an interval $I_0$ containing the point $x_0$ is a solution of IVP (3.1) if and only if $\mathbf{y}$ satisfies the integral equation

$$\mathbf{y}(x) = \mathbf{y}_0 + \int_{x_0}^{x} \mathbf{f}(s, \mathbf{y}(s))\, ds \quad \forall\, x \in I_0. \tag{3.5}$$

Proof:

If $\mathbf{y}$ is a solution of IVP (3.1), then by definition of solution we have

$$\mathbf{y}'(x) = \mathbf{f}(x, \mathbf{y}(x)). \tag{3.6}$$

Integrating the above equation from $x_0$ to $x$ and using $\mathbf{y}(x_0) = \mathbf{y}_0$ yields the integral equation (3.5).

On the other hand, let $\mathbf{y}$ be a solution of the integral equation (3.5). Observe that, due to continuity of the function $t \mapsto \mathbf{y}(t)$, the function $t \mapsto \mathbf{f}(t, \mathbf{y}(t))$ is continuous on $I_0$. Thus the RHS of (3.5) is a differentiable function w.r.t. $x$ by the fundamental theorem of integral calculus, and its derivative is given by the function $x \mapsto \mathbf{f}(x, \mathbf{y}(x))$, which is a continuous function. Thus $\mathbf{y}$, being equal to a continuously differentiable function via equation (3.5), is also continuously differentiable. That the function $\mathbf{y}$ is a solution of ODE (3.1) follows by differentiating the equation (3.5). Evaluating (3.5) at $x = x_0$ gives the initial condition $\mathbf{y}(x_0) = \mathbf{y}_0$.

Thus it is enough to prove the existence of a solution to the integral equation (3.5) for showing the existence of a solution to the IVP (3.1). Both the existence theorems that we are going to prove, besides making use of the above equivalence, are proved by an approximation procedure.

The following result asserts that joining two solution curves in the extended phase space $I \times \Omega$ gives rise to another solution curve. This result is very useful in establishing existence of solutions to IVPs; one usually proves the existence of a solution to the right and left of the point $x_0$ at which the initial condition is prescribed, and then one gets a solution (which should be defined in an open interval containing $x_0$) by joining the right and left solutions at the point $(x_0, \mathbf{y}_0)$.

Lemma 3.5 (Concatenation of two solutions) Assume Hypothesis ($H_{IVPS}$). Let $[a, b]$ and $[b, c]$ be two subintervals of $I$. Let $\mathbf{u}$ and $\mathbf{w}$ defined on intervals $[a, b]$ and $[b, c]$ respectively be solutions of IVP with initial data $(a, \boldsymbol{\xi})$ and $(b, \mathbf{u}(b))$ respectively. Then the concatenated function $\mathbf{z}$ defined on the interval $[a, c]$ by

$$\mathbf{z}(x) = \begin{cases} \mathbf{u}(x) & \text{if } x \in [a, b], \\ \mathbf{w}(x) & \text{if } x \in (b, c], \end{cases} \tag{3.7}$$

is a solution of IVP with initial data $(a, \boldsymbol{\xi})$.


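Proof:

It is easy to see that the function $\mathbf{z}$ is continuous on $[a, c]$. Therefore, by Lemma 3.4, it is enough to show that $\mathbf{z}$ satisfies the integral equation

$$\mathbf{z}(x) = \boldsymbol{\xi} + \int_{a}^{x} \mathbf{f}(s, \mathbf{z}(s))\, ds \quad \forall\, x \in [a, c]. \tag{3.8}$$

Clearly the equation (3.8) is satisfied for $x \in [a, b]$, once again by Lemma 3.4, since $\mathbf{u}$ solves the IVP with initial data $(a, \boldsymbol{\xi})$ and $\mathbf{z}(x) = \mathbf{u}(x)$ for $x \in [a, b]$. Thus it remains to prove (3.8) for $x \in (b, c]$. For $x \in (b, c]$, once again by Lemma 3.4, we get

$$\mathbf{z}(x) = \mathbf{w}(x) = \mathbf{u}(b) + \int_{b}^{x} \mathbf{f}(s, \mathbf{w}(s))\, ds = \mathbf{u}(b) + \int_{b}^{x} \mathbf{f}(s, \mathbf{z}(s))\, ds. \tag{3.9}$$

Since

$$\mathbf{u}(b) = \boldsymbol{\xi} + \int_{a}^{b} \mathbf{f}(s, \mathbf{u}(s))\, ds = \boldsymbol{\xi} + \int_{a}^{b} \mathbf{f}(s, \mathbf{z}(s))\, ds, \tag{3.10}$$

substituting for $\mathbf{u}(b)$ in (3.9) finishes the proof of the lemma.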

Rectangles

As $I \times \Omega$ is an open set, we do not know if functions defined on this set are bounded; also we do not know the shape or size of $\Omega$. If we are looking to solve IVP (3.1) (i.e., to find a solution curve passing through the point $(x_0, \mathbf{y}_0)$), we must know how long a solution (if and when it exists) may live. In some sense this depends on the size of a rectangle $R \subseteq I \times \Omega$ centred at $(x_0, \mathbf{y}_0)$ defined by two positive real numbers $a$, $b$:

$$R = \{x : |x - x_0| \le a\} \times \{\mathbf{y} : \|\mathbf{y} - \mathbf{y}_0\| \le b\}. \tag{3.11}$$

Since $I \times \Omega$ is an open set, we can find such an $R$ (for some positive real numbers $a$, $b$) for each point $(x_0, \mathbf{y}_0) \in I \times \Omega$. Let $M$ be defined by $M = \sup_{R} \|\mathbf{f}(x, \mathbf{y})\|$.

Note that the rectangle $R$ in (3.11) is symmetric in the $x$ space as well. However, a solution may be defined on an interval that is not symmetric about $x_0$. Thus it looks restrictive to consider $R$ as above. It is definitely the case when $x_0$ is very close to one of the end points of the interval $I$. Indeed, results addressing the existence of solutions for an IVP separately on intervals to the left and right of $x_0$ consider rectangles $R \subseteq I \times \Omega$ of the form

$$R = [x_0, x_0 + a] \times \{\mathbf{y} : \|\mathbf{y} - \mathbf{y}_0\| \le b\}. \tag{3.12}$$

3.1.1 Existence theorem of Peano

Theorem 3.6 (Peano) Assume Hypothesis ($H_{IVPS}$). Then the IVP (3.1) has at least one solution on the interval $|x - x_0| \le \delta$ where $\delta = \min\left\{a, \frac{b}{M}\right\}$.
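To get a feeling for the size of the interval guaranteed by the theorem, one can compute $\delta$ for a concrete problem. The scalar IVP $y' = y^2$, $y(0) = 1$ used below is an assumed example, not one worked in the notes at this point.

```latex
% Assumed example: f(x, y) = y^2 on I \times \Omega = \mathbb{R} \times \mathbb{R}, (x_0, y_0) = (0, 1),
% rectangle R = \{ |x| \le a \} \times \{ |y - 1| \le b \}.
\begin{align*}
M &= \sup_{R} |y^2| = (1 + b)^2, \\
\delta &= \min\left\{ a, \frac{b}{(1+b)^2} \right\}, \\
\max_{b > 0} \frac{b}{(1+b)^2} &= \frac{1}{4} \quad \text{(attained at } b = 1\text{)}.
\end{align*}
```

So no choice of rectangle yields more than $|x| \le 1/4$ from the formula for $\delta$, whereas the function $y(x) = 1/(1 - x)$ solves this IVP on $(-\infty, 1)$. The guaranteed interval is therefore a safe lower estimate and not the maximal interval of existence.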

3.1.2 Cauchy-Lipschitz-Picard existence theorem

From real analysis, we know that continuity of a function at a point is a local concept (as it involves values of the function in a neighbourhood of the point at which continuity of the function is in question). We talk about uniform continuity of a function with respect to a domain. Similarly we can define Lipschitz continuity at a point and on a domain of a function defined on subsets of $\mathbb{R}^n$. For ODE purposes we need functions of $(n + 1)$ variables and Lipschitz continuity w.r.t. the last $n$ variables. Thus we straight away define the concept of Lipschitz continuity for such functions.

Let $R \subseteq I \times \Omega$ be a rectangle centred at $(x_0, \mathbf{y}_0)$ defined by two positive real numbers $a$, $b$ (see equation (3.11)).


Definition 3.7 (Lipschitz continuity) A function $f$ is said to be Lipschitz continuous on a rectangle $R$ with respect to the variable $y$ if there exists a $K > 0$ such that

\[ \|f(x, y_1) - f(x, y_2)\| \le K \|y_1 - y_2\| \quad \forall\, (x, y_1), (x, y_2) \in R. \tag{3.13} \]

Exercise 3.8

1. Let $n = 1$ and let $f$ be differentiable w.r.t. the variable $y$ with a continuous derivative defined on $I \times \Omega$. Show that $f$ is Lipschitz continuous on any rectangle $R \subset I \times \Omega$.

2. If $f$ is Lipschitz continuous on every rectangle $R \subset I \times \Omega$, is $f$ differentiable w.r.t. the variable $y$?

3. Prove that the function $h$ defined by $h(y) = y^{2/3}$ on $[0, \infty)$ is not Lipschitz continuous on any interval containing 0.

4. Prove that the function $f(x, y) = y^2$ defined on the domain $\mathbb{R} \times \mathbb{R}$ is not Lipschitz continuous. (This gives yet another reason to define Lipschitz continuity on rectangles.)
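A numerical experiment can make items 3 and 4 plausible before proving them. The sketch below (function names are illustrative assumptions) evaluates the difference quotient $|g(y_1) - g(y_2)| / |y_1 - y_2|$, which any Lipschitz constant must dominate. It is evidence, not a proof.

```python
def difference_quotient(func, y1, y2):
    """Return |func(y1) - func(y2)| / |y1 - y2|; a Lipschitz constant must be at least this."""
    return abs(func(y1) - func(y2)) / abs(y1 - y2)

def h(y):
    # h(y) = y^(2/3) on [0, infinity), as in item 3
    return y ** (2.0 / 3.0)

def square(y):
    # y -> y^2, as in item 4
    return y * y

# Near 0 the quotient of h against the point 0 behaves like y^(-1/3) and grows without bound.
small_points = [10.0 ** (-3 * k) for k in range(1, 5)]
h_quotients = [difference_quotient(h, y, 0.0) for y in small_points]

# For y^2 the quotient between y + 1 and y equals 2y + 1, unbounded on all of R.
large_points = [10.0 ** k for k in range(1, 5)]
square_quotients = [difference_quotient(square, y + 1.0, y) for y in large_points]

print(h_quotients)
print(square_quotients)
```

On a bounded rectangle the quotients of $y^2$ stay bounded, which is why the definition is stated on rectangles.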

We now state the existence theorem. Its method of proof is different from that of Peano's theorem and yields a bilateral interval containing $x_0$ on which existence of a solution is asserted.

Theorem 3.9 (Cauchy-Lipschitz-Picard) Assume Hypothesis (H_IVPS). Let $f$ be Lipschitz continuous with respect to the variable $y$ on $R$. Then the IVP (3.1) has at least one solution on the interval $J \equiv \{x : |x - x_0| \le \delta\}$ where $\delta = \min\{a, b/M\}$.

Proof :

Step 1: Equivalent integral equation. We recall (3.5), which is equivalent to the given IVP:

\[ y(x) = y_0 + \int_{x_0}^{x} f(s, y(s))\,ds \quad \forall\, x \in I. \tag{3.14} \]

By the equivalence of the above integral equation with the IVP, it is enough to prove that the integral equation has a solution. This is accomplished by constructing what are known as Picard approximations, a sequence of functions that converges to a solution of the integral equation (3.14).

Step 2: Construction of Picard approximations

Define the first function $y_0(x)$, for $x \in I$, by

\[ y_0(x) := y_0. \tag{3.15} \]

Define $y_1(x)$, for $x \in I$, by

\[ y_1(x) := y_0 + \int_{x_0}^{x} f(s, y_0(s))\,ds. \tag{3.16} \]

Note that the function $y_1(x)$ is well-defined for $x \in I$. However, when we try to define the next member of the sequence, $y_2(x)$, for $x \in I$, by

\[ y_2(x) := y_0 + \int_{x_0}^{x} f(s, y_1(s))\,ds, \tag{3.17} \]

caution needs to be exercised. This is because we do not know the values that the function $y_1(x)$ assumes for $x \in I$; there is no reason for those values to lie inside $\Omega$. However, it happens that for $x \in J$, where the interval $J$ is as in the statement of the theorem, the expression on the RHS of (3.17), which defines the function $y_2(x)$, is meaningful, and hence $y_2(x)$ is well-defined for $x \in J$. By restricting to the interval $J$, we can prove that the following sequence of functions is well-defined: for $k \ge 1$ and $x \in J$,

\[ y_k(x) = y_0 + \int_{x_0}^{x} f(s, y_{k-1}(s))\,ds. \tag{3.18} \]
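To see the recursion (3.18) at work, here is a minimal sketch for the scalar test problem $y' = y$, $y(0) = 1$, which is an illustrative choice and not an example from the notes. Polynomials are stored as coefficient lists, and the helper names are hypothetical. The iterates come out as the Taylor partial sums of $e^x$.

```python
from fractions import Fraction

def integrate_from_zero(coeffs):
    """Antiderivative, vanishing at 0, of the polynomial sum(coeffs[i] * x**i)."""
    return [Fraction(0)] + [c / (i + 1) for i, c in enumerate(coeffs)]

def picard_step(previous, y0):
    """One step of (3.18) for f(x, y) = y and x0 = 0: y_k = y0 + integral of y_{k-1}."""
    result = integrate_from_zero(previous)
    result[0] += y0
    return result

def picard_iterates(y0, steps):
    """Return [y_0, y_1, ..., y_steps] as coefficient lists, starting from the constant y0."""
    iterates = [[Fraction(y0)]]
    for _ in range(steps):
        iterates.append(picard_step(iterates[-1], Fraction(y0)))
    return iterates

iterates = picard_iterates(1, 4)
for k, coeffs in enumerate(iterates):
    print(k, [str(c) for c in coeffs])
```

Exact rational arithmetic is used so that the coefficients $1/l!$ are visible without rounding.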


Proving the well-definedness of Picard approximations is left as an exercise, by induction. In fact, the graph of each Picard approximant lies inside the rectangle $R$ (see the statement of our theorem). That is,

\[ \|y_k(x) - y_0\| \le b, \quad \forall\, x \in [x_0 - \delta, x_0 + \delta]. \tag{3.19} \]

The proof is immediate from

\[ y_k(x) - y_0 = \int_{x_0}^{x} f(s, y_{k-1}(s))\,ds, \quad \forall\, x \in J. \tag{3.20} \]

Therefore,

\[ \|y_k(x) - y_0\| \le M |x - x_0|, \quad \forall\, x \in J, \tag{3.21} \]

and for $x \in J$ we have $|x - x_0| \le \delta$, so that $M |x - x_0| \le M \delta \le b$.

Step 3: Convergence of Picard approximations

We prove the uniform convergence of the sequence of Picard approximations $y_k$ on the interval $J$ by proving that this sequence corresponds to the partial sums of a uniformly convergent series of functions, given by

\[ y_0 + \sum_{l=0}^{\infty} \big[ y_{l+1}(x) - y_l(x) \big]. \tag{3.22} \]

Note that the sequence $y_{k+1}$ corresponds to the partial sums of the series (3.22). That is,

\[ y_{k+1}(x) = y_0 + \sum_{l=0}^{k} \big[ y_{l+1}(x) - y_l(x) \big]. \tag{3.23} \]

Step 3A: Uniform convergence of series (3.22) on $x \in J$

We are going to compare the series (3.22) with a convergent series of real numbers, uniformly in $x \in J$, thereby proving uniform convergence of the series. From the expression

\[ y_{l+1}(x) - y_l(x) = \int_{x_0}^{x} \big\{ f(s, y_l(s)) - f(s, y_{l-1}(s)) \big\}\,ds, \quad \forall\, x \in J, \tag{3.24} \]

we can prove by induction the estimate (this is left as an exercise):

\[ \|y_{l+1}(x) - y_l(x)\| \le M L^{l} \frac{|x - x_0|^{l+1}}{(l+1)!} \le \frac{M}{L} \frac{L^{l+1} \delta^{l+1}}{(l+1)!}. \tag{3.25} \]
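A possible outline of the induction behind (3.25) is sketched below. It assumes, as in Step 4, that $L$ denotes a Lipschitz constant of $f$ w.r.t. $y$ on $R$, and it uses (3.21) with $k = 1$ for the base case. It is meant as a guide to the exercise rather than as the author's intended solution.

```latex
% Base case l = 0, from (3.21) with k = 1:
\[
  \|y_1(x) - y_0(x)\| \le M\,|x - x_0| .
\]
% Induction step: assume the estimate for l - 1, that is,
\[
  \|y_l(s) - y_{l-1}(s)\| \le M L^{l-1}\,\frac{|s - x_0|^{l}}{l!},
  \qquad s \in J .
\]
% Then (3.24) and the Lipschitz condition give
\[
  \|y_{l+1}(x) - y_l(x)\|
  \le L \left| \int_{x_0}^{x} \|y_l(s) - y_{l-1}(s)\|\,ds \right|
  \le \frac{M L^{l}}{l!} \left| \int_{x_0}^{x} |s - x_0|^{l}\,ds \right|
  = M L^{l}\,\frac{|x - x_0|^{l+1}}{(l+1)!} .
\]
```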

We conclude that the series (3.22), and hence the sequence of Picard iterates, converges uniformly on $J$. This is because the estimate (3.25) says that the general term of the series (3.22) is uniformly smaller than that of a convergent series, namely the series for $e^{L\delta}$, times a constant.

Let $y(x)$ denote the uniform limit of the sequence of Picard iterates $y_k(x)$ on $J$.

Step 4: The limit function y(x) solves integral equation (3.14)

We want to take the limit as $k \to \infty$ in

\[ y_k(x) = y_0 + \int_{x_0}^{x} f(s, y_{k-1}(s))\,ds. \tag{3.26} \]

Taking the limit on the LHS of (3.26) is trivial. Therefore, for $x \in J$, if we prove that

\[ \int_{x_0}^{x} f(s, y_{k-1}(s))\,ds \longrightarrow \int_{x_0}^{x} f(s, y(s))\,ds, \tag{3.27} \]


then we would obtain, for $x \in J$,

\[ y(x) = y_0 + \int_{x_0}^{x} f(s, y(s))\,ds, \tag{3.28} \]

and this finishes the proof. Therefore, it remains to prove (3.27). Let us estimate, for $x \in J$, the quantity

\[ \int_{x_0}^{x} f(s, y_{k-1}(s))\,ds - \int_{x_0}^{x} f(s, y(s))\,ds = \int_{x_0}^{x} \big\{ f(s, y_{k-1}(s)) - f(s, y(s)) \big\}\,ds. \tag{3.29} \]

Since the graphs of $y_k$ lie inside the rectangle $R$, so does the graph of $y$, because the rectangle $R$ is closed. Using that the vector field $f$ is Lipschitz continuous (with Lipschitz constant $L$) in the variable $y$ on $R$, we get

\[ \left\| \int_{x_0}^{x} \big\{ f(s, y_{k-1}(s)) - f(s, y(s)) \big\}\,ds \right\| \le \int_{x_0}^{x} \big\| f(s, y_{k-1}(s)) - f(s, y(s)) \big\|\,ds \tag{3.30} \]

\[ \le L \int_{x_0}^{x} \|y_{k-1}(s) - y(s)\|\,ds \le L |x - x_0| \sup_{J} \|y_{k-1}(x) - y(x)\| \tag{3.31} \]

\[ \le L \delta \sup_{J} \|y_{k-1}(x) - y(x)\|. \tag{3.32} \]

This estimate finishes the proof of (3.27), since $y(x)$ is the uniform limit of the sequence of Picard iterates $y_k(x)$ on $J$, and hence for sufficiently large $k$ the quantity $\sup_J \|y_{k-1}(x) - y(x)\|$ can be made arbitrarily small.

Some comments on existence theorems

Remark 3.10 (i) Note that the interval of existence depends only on the bound $M$ and not on the specific function.

(ii) If $g$ is any Lipschitz continuous function (with respect to the variable $y$ on $R$) in an $\epsilon$-neighbourhood of $f$, and $\zeta$ is any vector in an $\epsilon$-neighbourhood of $y_0$, then a solution to the IVP with data $(g, \zeta)$ exists on the interval given by $\delta = \min\{a, b/(M + \epsilon)\}$. Note that this interval depends on the data $(g, \zeta)$ only in terms of its distance to $(f, y_0)$.

Exercise 3.11 Prove that Picard's iterates need not converge if the vector field is not locally Lipschitz. Compute successive approximations for the IVP

\[ y' = 2x - 2\sqrt{y_+}, \quad y(0) = 0, \quad \text{with } y_+ = \max\{y, 0\}, \]

and show that they do not converge, and also show that the IVP has a unique solution. (Hint: $y_{2n} = 0$, $y_{2n+1} = x^2$, $n \in \mathbb{N}$.)
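The oscillation in the hint can be checked with a short computation, assuming the equation reads $y' = 2x - 2\sqrt{y_+}$ (the form consistent with the hint) and restricting to $x \ge 0$. Under that assumption, if $y_{k-1}(x) = c\,x^2$ with $c \ge 0$, then (3.18) gives $y_k(x) = (1 - \sqrt{c})\,x^2$, so only the coefficient needs to be tracked. The function name below is hypothetical.

```python
import math

def next_coefficient(c):
    """Map y_{k-1}(x) = c * x**2 (c >= 0, x >= 0) to the coefficient of y_k.

    Integrating 2s - 2*sqrt(c * s**2) = (2 - 2*sqrt(c)) * s from 0 to x
    gives (1 - sqrt(c)) * x**2.
    """
    return 1.0 - math.sqrt(c)

# Start from y_0 = 0, i.e. coefficient 0, and iterate.
coefficients = [0.0]
for _ in range(6):
    coefficients.append(next_coefficient(coefficients[-1]))
print(coefficients)

# A fixed point c = 1 - sqrt(c) corresponds to an actual solution c * x**2 on x >= 0.
fixed = (3.0 - math.sqrt(5.0)) / 2.0
print(fixed, next_coefficient(fixed))
```

The iterates alternate between $0$ and $x^2$ and never approach the solution $\tfrac{3 - \sqrt{5}}{2}\,x^2$, even though that solution exists.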

3.2 Uniqueness

Recalling the definition of a solution, we note that if $u$ solves IVP (3.1) on an interval $I_0$ then $w \overset{\text{def}}{=} u|_{I_1}$ is also a solution to the same IVP, where $I_1$ is any subinterval of $I_0$ containing the point $x_0$. In principle we do not want to consider the latter as a different solution. Thus we are led to define a concept of equivalence of solutions of an IVP that does not distinguish $w$ from $u$ near the point $x_0$. Roughly speaking, two solutions of IVP are said to be equivalent if they agree on some interval containing $x_0$ (a neighbourhood of $x_0$). This neighbourhood itself may depend on the given two solutions.

Definition 3.12 (local uniqueness) An IVP is said to have the local uniqueness property if for each $(x_0, y_0) \in I \times \Omega$ and for any two solutions $y_1$ and $y_2$ of IVP (3.1) defined on intervals $I_1$ and $I_2$ respectively, there exists an open interval $I_{\delta_l \delta_r} := (x_0 - \delta_l, x_0 + \delta_r)$ containing the point $x_0$ such that $y_1(x) = y_2(x)$ for all $x \in I_{\delta_l \delta_r}$.


Definition 3.13 (global uniqueness) An IVP is said to have the global uniqueness property if for each $(x_0, y_0) \in I \times \Omega$ and for any two solutions $y_1$ and $y_2$ of IVP (3.1) defined on intervals $I_1$ and $I_2$ respectively, the equality $y_1(x) = y_2(x)$ holds for all $x \in I_1 \cap I_2$.

Remark 3.14 1. It is easy to understand the presence of the adjectives local and global in Definition 3.12 and Definition 3.13 respectively.

2. There is no loss of generality in assuming that the interval appearing in Definition 3.12, namely $I_{\delta_l \delta_r}$, is of the form $J_{\delta_l \delta_r} := [x_0 - \delta_l, x_0 + \delta_r]$. This is because in any open interval containing a point $x_0$ there is a closed interval containing the same point $x_0$, and vice versa.

Though it may appear that the local and global uniqueness properties are quite different from each other, they are in fact the same. This is the content of the next result.

Lemma 3.15 The following are equivalent.

1. An IVP has local uniqueness property.

2. An IVP has global uniqueness property.

Proof :

From the definitions, clearly (2) $\Longrightarrow$ (1). We turn to the proof of (1) $\Longrightarrow$ (2).

Let $y_1$ and $y_2$ be two solutions of IVP (3.1) defined on intervals $I_1$ and $I_2$ respectively. We prove that $y_1 = y_2$ on the interval $I_1 \cap I_2$; we split the proof into two parts. We first prove the equality to the right of $x_0$, i.e., on the interval $[x_0, \sup(I_1 \cap I_2))$; the equality to the left of $x_0$ (i.e., on the interval $(\inf(I_1 \cap I_2), x_0]$) follows by a canonically modified argument. Let us consider the following set

\[ \mathcal{K}_r = \{ t \in I_1 \cap I_2 : y_1(x) = y_2(x) \ \ \forall\, x \in [x_0, t] \}. \tag{3.33} \]

The set $\mathcal{K}_r$ has the following properties:

1. The set $\mathcal{K}_r$ is non-empty. This follows by applying the local uniqueness property of the IVP with initial data $(x_0, y_0)$.

2. The equality $\sup \mathcal{K}_r = \sup(I_1 \cap I_2)$ holds.

Proof :

Note that the infimum and supremum of an open interval equal the left and right end points of its closure (the closed interval) whenever they are finite. Observe that $\sup \mathcal{K}_r \le \sup(I_1 \cap I_2)$ since $\mathcal{K}_r \subseteq I_1 \cap I_2$. Thus it is enough to prove that strict inequality cannot hold. On the contrary, let us assume that $a_r := \sup \mathcal{K}_r < \sup(I_1 \cap I_2)$. This means that $a_r \in I_1 \cap I_2$ and hence $y_1(a_r)$, $y_2(a_r)$ are defined. Since $a_r$ is the supremum (in particular, a limit point) of the set $\mathcal{K}_r$ on which $y_1$ and $y_2$ coincide, by continuity of the functions $y_1$ and $y_2$ on $I_1 \cap I_2$, we get $y_1(a_r) = y_2(a_r)$.

Thus we find that the functions $y_1$ and $y_2$ are still solutions of the ODE on the interval $I_1 \cap I_2$, and also that $y_1(a_r) = y_2(a_r)$. Applying once again the local uniqueness property of the IVP, but with initial data $(a_r, y_1(a_r))$, we conclude that $y_1 = y_2$ on an interval $J_{\delta_l \delta_r} := [a_r - \delta_l, a_r + \delta_r]$ (see Remark 3.14). Combining this with the arguments of the previous paragraph, we obtain the equality of the functions $y_1 = y_2$ on the interval $[x_0, a_r + \delta_r]$. This means that $a_r + \delta_r \in \mathcal{K}_r$ and thus $a_r$ is not an upper bound for $\mathcal{K}_r$. This contradiction to the definition of $a_r$ finishes the proof of 2.

As mentioned at the beginning of the proof, similar statements to the left of $x_0$ follow. This finishes the proof of the lemma.


Remark 3.16 1. By Lemma 3.15 we can use either of the two definitions, Definition 3.12 and Definition 3.13, while dealing with questions of uniqueness. Henceforth we use the word uniqueness instead of using the adjectives local or global, since both of them are equivalent.

2. One may wonder, then, why there is a need to define both local and global uniqueness properties. The reason is that it is easier to prove local uniqueness than global uniqueness, while at the same time retaining what we intuitively feel about uniqueness.

Example 3.17 (Peano) The initial value problem

\[ y' = 3\,y^{2/3}, \quad y(0) = 0 \tag{3.34} \]

has infinitely many solutions.
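One standard way to exhibit infinitely many solutions of (3.34) is the family $y_c(x) = 0$ for $x \le c$ and $y_c(x) = (x - c)^3$ for $x > c$, with $c \ge 0$. The notes do not spell out this family, so it is stated here as an assumption. The sketch below checks numerically that each member satisfies the ODE on a sample grid; the function names are illustrative.

```python
def solution(c, x):
    """Candidate solution y_c: zero up to x = c, then the cubic (x - c)**3."""
    return 0.0 if x <= c else (x - c) ** 3

def solution_derivative(c, x):
    """Derivative of y_c, computed by hand: 0 for x <= c and 3 * (x - c)**2 for x > c."""
    return 0.0 if x <= c else 3.0 * (x - c) ** 2

def rhs(y):
    """Right-hand side 3 * y**(2/3) of (3.34), evaluated for y >= 0."""
    return 3.0 * y ** (2.0 / 3.0)

def max_residual(c, xs):
    """Largest value of |y_c'(x) - 3 * y_c(x)**(2/3)| over the sample points xs."""
    return max(abs(solution_derivative(c, x) - rhs(solution(c, x))) for x in xs)

xs = [i / 10.0 for i in range(-20, 21)]
residuals = {c: max_residual(c, xs) for c in (0.0, 0.5, 1.0)}
print(residuals)
```

All members pass through $(0, 0)$, yet they differ for $x > c$, which is exactly the failure of uniqueness.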

However, there are sufficient conditions on $f$ so that the corresponding IVP has a unique solution. One such condition is that of Lipschitz continuity w.r.t. the variable $y$.

Lemma 3.18 Assume Hypothesis (H_IVPS). If $f$ is Lipschitz continuous on every rectangle $R$ contained in $I \times \Omega$, then we have global uniqueness.

Proof :

Let $y_1$ and $y_2$ be two solutions of IVP (3.1) defined on intervals $I_1$ and $I_2$ respectively. By Lemma 3.4, we have

\[ y_i(x) = y_0 + \int_{x_0}^{x} f(s, y_i(s))\,ds, \quad \forall\, x \in I_i \text{ and } i = 1, 2. \tag{3.35} \]

Subtracting one equation from the other we get

\[ y_1(x) - y_2(x) = \int_{x_0}^{x} \big\{ f(s, y_1(s)) - f(s, y_2(s)) \big\}\,ds, \quad \forall\, x \in I_1 \cap I_2. \tag{3.36} \]

Applying the norm on both sides yields, for $x \in I_1 \cap I_2$,

\[ \|y_1(x) - y_2(x)\| = \left\| \int_{x_0}^{x} \big\{ f(s, y_1(s)) - f(s, y_2(s)) \big\}\,ds \right\| \tag{3.37} \]

\[ \le \int_{x_0}^{x} \big\| f(s, y_1(s)) - f(s, y_2(s)) \big\|\,ds. \tag{3.38} \]

Choose $\delta$ such that $\|y_1(s) - y_0\| \le b$ and $\|y_2(s) - y_0\| \le b$ for $|s - x_0| \le \delta$. Since we know that $f$ is locally Lipschitz, it is Lipschitz on the rectangle $R$ with some Lipschitz constant $L > 0$. As a consequence we get

\[ \|y_1(x) - y_2(x)\| \le L \sup_{|x - x_0| \le \delta} \|y_1(x) - y_2(x)\| \; |x - x_0| \tag{3.39} \]

\[ \le L \delta \sup_{|x - x_0| \le \delta} \|y_1(x) - y_2(x)\|. \tag{3.40} \]

It is possible to arrange $\delta$ such that $L\delta < 1$. If the supremum were positive, we would conclude from here that

\[ \sup_{|x - x_0| \le \delta} \|y_1(x) - y_2(x)\| < \sup_{|x - x_0| \le \delta} \|y_1(x) - y_2(x)\|, \tag{3.41} \]

which is impossible. Thus we conclude $\sup_{|x - x_0| \le \delta} \|y_1(x) - y_2(x)\| = 0$. This establishes local uniqueness, and global uniqueness follows from their equivalence.

Example 3.19 The IVP

\[ y' = \begin{cases} y \sin \dfrac{1}{y} & \text{if } y \ne 0, \\ 0 & \text{if } y = 0, \end{cases} \qquad y(0) = 0 \]

has a unique solution, despite the RHS not being Lipschitz continuous w.r.t. the variable $y$ on any rectangle containing $(0, 0)$.


Lecture-4

Saturated solutions & Maximal interval of existence

4.1 Continuation

We answer the following two questions in this section.

1. When can a given solution be continued?

2. If a solution can not be continued to a bigger interval, what prevents a continuation?

The existential results of Section 3.1 provide us with an interval containing the initial point on which a solution of the IVP exists, and the length of the interval depends on the data of the problem, as can be seen from the expression for its length. However, this does not rule out solutions of the IVP defined on bigger intervals, if not on the whole of $I$. In this section we address the issue of extending a given solution to a bigger interval and the difficulties that arise in extending.

Intuitive idea for extension. Take a local solution $u$ defined on an interval $I_0 = (x_1, x_2)$ containing the point $x_0$. An intuitive idea to extend $u$ is to take the value of $u$ at the point $x = x_2$ and consider a new IVP for the same ODE by prescribing the initial condition at the point $x = x_2$ to be equal to $u(x_2)$. Consider this IVP on a rectangle containing the point $(x_2, u(x_2))$. Now apply any of the existence theorems of Section 3.1 and conclude the existence of a solution on a bigger interval. Repeat the previous steps and obtain a solution on the entire interval $I$.

However, note that this intuitive idea may fail for either of the following reasons: (i) the limit of the function $u$ may not exist as $x \to x_2$; (ii) the limit in (i) may exist but there may not be any rectangle around the point $(x_2, u(x_2))$ as required by the existence theorems. In fact these are the principal difficulties in extending a solution to a bigger interval. In any case we show, via Zorn's lemma, that for any solution there is a biggest interval beyond which it cannot be extended as a solution of the IVP; it is called the maximal interval of existence.

We start with a few definitions.

Definition 4.1 (Continuation, Saturated solutions) Let the function $u$ defined on an interval $I_0$ be a solution of IVP (3.1). Then

1. The solution $u$ is called continuable at the right if there exists a solution $w$ of the IVP defined on an interval $J_0$ satisfying $\sup I_0 \le \sup J_0$ and the equality $u(x) = w(x)$ holds for $x \in I_0 \cap J_0$. Any such $w$ is called an extension of $u$. Further, if $\sup I_0 < \sup J_0$, then $w$ is called a non-trivial extension of $u$. The solution $u$ is called saturated at the right if there is no non-trivial right-extension.

2. The solution $u$ is called continuable at the left if there exists a solution $z$ of the IVP defined on an interval $K_0$ satisfying $\inf K_0 < \inf I_0$ and the equality $u(x) = z(x)$ holds for $x \in I_0 \cap K_0$. The solution $u$ is called saturated at the left if it is not continuable at the left.

3. The solution $u$ is called global at the right if $I_0 \supseteq \{x \in I : x \ge x_0\}$. Similarly, $u$ is called global at the left if $I_0 \supseteq \{x \in I : x \le x_0\}$.

Remark 4.2 If the solution $u$ is continuable at the right, then by concatenating the solutions $u$ and $w$ we obtain a solution of the IVP on the bigger interval $(\inf I_0, \sup J_0)$, where $w$ defined on $J_0$ is a right extension of $u$. A similar statement holds if $u$ is continuable at the left.

Let us define the notion of a right (left) solution to the IVP (3.1).

Definition 4.3 A function $u$ defined on an interval $[x_0, b)$ (respectively, on $(a, x_0]$) is said to be a right solution (respectively, a left solution) if $u$ is a solution of the ODE $y' = f(x, y)$ on $(x_0, b)$ (respectively, on $(a, x_0)$) and $u(x_0) = y_0$.


Note that if $u$ is a solution of IVP (3.1) defined on an interval $(a, b)$, then $u$ restricted to $[x_0, b)$ (respectively, to $(a, x_0]$) is a right solution (respectively, a left solution) to the IVP.

For right and left solutions, the notions of continuation and saturated solutions become the following.

Definition 4.4 (Continuation, Saturated solutions) Let the function $u$ defined on an interval $[x_0, b)$ be a right solution of the IVP and let $v$ defined on an interval $(a, x_0]$ be a left solution of IVP (3.1). Then

1. The solution $u$ is called continuable at the right if there exists a right solution $w$ of the IVP defined on an interval $[x_0, d)$ satisfying $b < d$ and the equality $u(x) = w(x)$ holds for $x \in [x_0, b)$. Any such $w$ is called a right extension of $u$. The right solution $u$ is called saturated at the right if it is not continuable at the right.

2. The solution $v$ is called continuable at the left if there exists a left solution $z$ of the IVP defined on an interval $(c, x_0]$ satisfying $c < a$ and the equality $v(x) = z(x)$ holds for $x \in (a, x_0]$. The solution $v$ is called saturated at the left if it is not continuable at the left.

3. The solution $u$ is called global at the right if $[x_0, b) = \{x \in I : x \ge x_0\}$. Similarly, $v$ is called global at the left if $(a, x_0] = \{x \in I : x \le x_0\}$.

The rest of the discussion in this section is devoted to analysing continuability at the right and saturation at the right, as the analysis for the corresponding notions at the left is similar. To save space, from now on we drop the suffix "at the right" from the notions of continuability and saturation of a solution.

4.1.1 Characterisation of continuable solutions

Lemma 4.5 Assume Hypothesis (H_IVPS). Let $u : I_0 \to \mathbb{R}^n$ be a right solution of IVP (3.1) defined on the interval $[x_0, d)$. Then the following statements are equivalent.

(1) The solution $u$ is continuable.

(2) (i) $d < \sup I$, and (ii) there exists $y^* = \lim_{x \to d^-} u(x)$ and $y^* \in \Omega$.

(3) The graph of $u$, i.e.,

\[ \operatorname{graph} u = \big\{ (x, u(x)) : x \in [x_0, d) \big\}, \tag{4.1} \]

is contained in a compact subset of $I \times \Omega$.

Proof :

We prove (1) $\Longrightarrow$ (2) $\Longrightarrow$ (3) $\Longrightarrow$ (2) $\Longrightarrow$ (1). The implication (1) $\Longrightarrow$ (2) is obvious.

Proof of (2) $\Longrightarrow$ (3)

In view of (2), we can extend the function $u$ to the interval $[x_0, d]$; let us call this extended function $\tilde{u}$. Note that the function $x \mapsto (x, \tilde{u}(x))$ is continuous on the interval $[x_0, d]$, and the image of $[x_0, d]$ under this map, which is the graph of $\tilde{u}$ and is denoted by $\operatorname{graph} \tilde{u}$, is compact. But $\operatorname{graph} u \subset \operatorname{graph} \tilde{u} \subset I \times \Omega$. Thus (3) is proved.

Proof of (3) $\Longrightarrow$ (2)

Assume that $\operatorname{graph} u$ is contained in a compact subset of $I \times \Omega$. As a consequence, owing to continuity of the function $f$ on $I \times \Omega$, there exists $M > 0$ such that $\|f(x, u(x))\| < M$ for all $x \in [x_0, d)$. Also, since $I$ is an open interval, necessarily $d < \sup I$. We will now prove that the limit in (2)(ii) exists.

Since $u$ is a solution of IVP (3.1), by Lemma 3.4, we have

\[ u(x) = y_0 + \int_{x_0}^{x} f(s, u(s))\,ds \quad \forall\, x \in [x_0, d). \tag{4.2} \]


Thus for $\xi, \eta \in [x_0, d)$, we get

\[ \|u(\xi) - u(\eta)\| \le \left| \int_{\xi}^{\eta} \|f(s, u(s))\|\,ds \right| \le M |\xi - \eta|. \tag{4.3} \]

Thus $u$ satisfies the hypothesis of the Cauchy test on the existence of a finite limit at $d$. Indeed, the inequality (4.3) says that $u$ is uniformly continuous on $[x_0, d)$ and hence the limit of $u(x)$ as $x \to d$ is finite. This follows from a property of uniformly continuous functions, namely that they map Cauchy sequences to Cauchy sequences. Let us denote the limit by $y^*$. In principle, being a limit, $y^* \in \overline{\Omega}$. To complete the proof we need to show that $y^* \in \Omega$. This is a consequence of the hypothesis that the graph of $u$ is contained in a compact subset of $I \times \Omega$ and the fact that $I$ and $\Omega$ are open sets.

Proof of (2) $\Longrightarrow$ (1)

As we shall see, the implication (2) $\Longrightarrow$ (1) is a consequence of the existence theorem for IVP (Theorem 3.6) and the concatenation lemma (Lemma 3.5).

Let $w$ be a solution to the IVP corresponding to the initial data $(d, y^*) \in I \times \Omega$, defined on an interval $(e, f)$ containing the point $d$. Let $w|_{[d, f)}$ be the restriction of $w$ to the interval $[d, f) \subseteq I$. Let $\tilde{u}$ be defined as the continuous extension of $u$ to the interval $[x_0, d]$, which makes sense due to the existence of the limit in (2)(ii). Concatenating $\tilde{u}$ and $w|_{[d, f)}$ yields a solution of the original IVP (3.1) that is defined on the interval $[x_0, f)$, with $d < f$.

Remark 4.6 The important message of the above result is that a solution can be extended to a bigger interval provided the solution curve remains well within the domain $I \times \Omega$, i.e., its right end-point lies in $\Omega$.

4.1.2 Existence and Classification of saturated solutions

The following result concerns the existence of saturated solutions for an IVP. Once again we study saturation at the right; the corresponding results for saturation at the left can be obtained by similar arguments. Thus for this discussion we always consider a solution as defined on an interval of the form $[x_0, d)$.

Theorem 4.7 (Existence of saturated solutions) If $u$ defined on an interval $[x_0, d)$ is a right solution of IVP (3.1), then either $u$ is saturated, or $u$ can be continued up to a saturated one.

Proof :

If $u$ is saturated, then there is nothing to prove. Therefore, we assume $u$ is not saturated. By the definition of saturatedness of a solution, $u$ is continuable. Thus the set $\mathcal{S}$, defined below, is non-empty.

\[ \mathcal{S} = \text{Set of all solutions of IVP (3.1) which extend } u. \tag{4.4} \]

We define a relation $\preceq$ on the set $\mathcal{S}$, called a partial order, as follows. For $w, z \in \mathcal{S}$ defined on intervals $[x_0, d_w)$ and $[x_0, d_z)$ respectively, we say that $w \preceq z$ if $z$ is a continuation of $w$.

Roughly speaking, if we take the largest (w.r.t. the order $\preceq$) element of $\mathcal{S}$, then it cannot be further continued. To implement this idea, we need to apply Zorn's lemma. Zorn's lemma is equivalent to the axiom of choice (see the book on Topology by J.L. Kelley for more) and helps in asserting the existence of maximal elements provided the totally ordered subsets of $\mathcal{S}$ have an upper bound (an upper bound for a subset $\mathcal{T} \subseteq \mathcal{S}$ is an element $h \in \mathcal{S}$ such that $w \preceq h$ for all $w \in \mathcal{T}$).

Exercise 4.8 Show that the relation $\preceq$ defines a partial order on the set $\mathcal{S}$. Prove that each totally ordered subset of $\mathcal{S}$ has an upper bound.

By Zorn's lemma, there exists a maximal element $q$ in $\mathcal{S}$. Note that this maximal solution is saturated in view of the definition of $\preceq$ and the maximality of $q$.


Remark 4.9 Under the hypothesis of the previous theorem, if a solution $u$ of IVP (3.1) is continuable, then there may be more than one saturated solution extending $u$. This possibility is due to non-uniqueness of solutions to IVP (3.1); the following exercise is concerned with this phenomenon. Further note that if the solution $u$ to IVP (3.1) is unique, then there will be a unique saturated solution extending it.

Exercise 4.10 Let $f : \mathbb{R} \times \mathbb{R} \to \mathbb{R}$ be defined by $f(x, y) = 3\,y^{2/3}$. Show that the solution $y : [-1, 0] \to \mathbb{R}$ defined by $y(x) = 0$ for all $x \in [-1, 0]$ of the IVP satisfying the initial condition $y(-1) = 0$ has at least two saturated solutions extending it.

The longevity of a solution (the extent to which a solution can be extended) is independent of the smoothness of the vector field, whereas existence and uniqueness of solutions are guaranteed for smooth vector fields.

Theorem 4.11 (Classification of saturated solutions) Let $u$ be a saturated right solution of IVP (3.1), and let its domain of definition be the interval $[x_0, d)$. Then one of the following alternatives holds.

(1) The function $u$ is unbounded on the interval $[x_0, d)$.

(2) The function $u$ is bounded on the interval $[x_0, d)$, and $u$ is global, i.e., $d = \sup I$.

(3) The function $u$ is bounded on the interval $[x_0, d)$, and $u$ is not global, i.e., $d < \sup I$, and each limit point of $u$ as $x \to d^-$ lies on the boundary of $\Omega$.

Proof :

If (1) holds, there is nothing to prove; if (1) does not hold, we must show that (2) or (3) holds. Therefore we assume that both (1) and (2) do not hold. Thus we assume that $u$ is bounded on the interval $[x_0, d)$ and $d < \sup I$. We need to show that each limit point of $u$ as $x \to d^-$ lies on the boundary of $\Omega$.

Our proof is by contradiction. We assume that there exists a limit point $u^*$ of $u$ as $x \to d^-$ in $\Omega$. We are going to prove that $\lim_{x \to d^-} u(x)$ exists. Note that, once the limit exists, it must be equal to $u^*$, which is one of its limit points. Applying Lemma 4.5, we then infer that the solution $u$ is continuable, contradicting the hypothesis that $u$ is a saturated solution.

Thus it remains to prove that $\lim_{x \to d^-} u(x) = u^*$, i.e., that $\|u(x) - u^*\|$ can be made arbitrarily small for $x$ near $x = d$.

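Since $\Omega$ is an open set and $u^* \in \Omega$, there exists $r > 0$ such that $B[u^*, r] \subset \Omega$. As a consequence, $B[u^*, \epsilon] \subset \Omega$ for every $\epsilon < r$. Thus on the rectangle $R \subset I \times \Omega$ defined by

\[ R = [x_0, d] \times \{ y : \|y - u^*\| \le r \}, \tag{4.5} \]

$\|f(x, y)\| \le M$ for some $M > 0$, since $R$ is a compact set and $f$ is continuous.

Since $u^*$ is a limit point of $u$ as $x \to d^-$, there exists a sequence $(x_m)$ in $[x_0, d)$ such that $x_m \to d$ and $u(x_m) \to u^*$. As a consequence of the definition of limit, we can find a $k \in \mathbb{N}$ such that

\[ |x_k - d| < \min\left\{ \frac{\epsilon}{2M}, \frac{\epsilon}{2} \right\} \quad \text{and} \quad \|u(x_k) - u^*\| < \min\left\{ \frac{\epsilon}{2M}, \frac{\epsilon}{2} \right\}. \tag{4.6} \]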

Claim: $\{ (x, u(x)) : x \in [x_k, d) \} \subset I \times B[u^*, \epsilon]$.

PROOF OF CLAIM: If the inclusion in the claim were false, then there would exist a point on the graph of $u$ (on the interval $[x_k, d)$) lying outside the set $I \times B[u^*, \epsilon]$. Owing to the continuity of $u$, the graph must meet the boundary of $B[u^*, \epsilon]$. Let $x^* > x_k$ be the first instance at which the trajectory touches the boundary of $B[u^*, \epsilon]$. That is, $\epsilon = \|u(x^*) - u^*\|$ and $\|u(x) - u^*\| < \epsilon$ for $x_k \le x < x^*$. Thus

\[ \epsilon = \|u(x^*) - u^*\| \le \|u(x^*) - u(x_k)\| + \|u(x_k) - u^*\| < \int_{x_k}^{x^*} \|f(s, u(s))\|\,ds + \frac{\epsilon}{2} \tag{4.7} \]

\[ \le M (x^* - x_k) + \frac{\epsilon}{2} < M (d - x_k) + \frac{\epsilon}{2} < \epsilon. \tag{4.8} \]


This contradiction finishes the proof of the Claim.

Therefore, the limit of $u(x)$ as $x \to d$ exists. As noted at the beginning of this proof, it follows that $u$ is continuable, which contradicts the saturation of $u$. This finishes the proof of the theorem.

Theorem 4.12 (Classification of saturated solutions) Let $f : I \times \Omega \to \mathbb{R}^n$ be continuous on $I \times \Omega$ and assume that it maps bounded subsets of $I \times \Omega$ into bounded subsets of $\mathbb{R}^n$. Let $u : [x_0, d) \to \mathbb{R}^n$ be a saturated right solution of the IVP. Then one of the following alternatives holds.

(1) The function $u$ is unbounded on the interval $[x_0, d)$. If $d < \infty$, then $\lim_{x \to d^-} \|u(x)\| = \infty$.

(2) The function $u$ is bounded on the interval $[x_0, d)$, and $u$ is global, i.e., $d = \sup I$.

(3) The function $u$ is bounded on the interval $[x_0, d)$, and $u$ is not global, i.e., $d < \sup I$, and the limit of $u$ as $x \to d^-$ exists and lies on the boundary of $\Omega$.

Corollary 4.13 Let $f : \mathbb{R} \times \mathbb{R}^n \to \mathbb{R}^n$ be continuous. Let $u : [x_0, d) \to \mathbb{R}^n$ be a saturated right solution of the IVP. Use the previous theorem to conclude that one of the following two alternatives holds.

(1) The function $u$ is global, i.e., $d = \infty$.

(2) The function $u$ is not global, i.e., $d < \infty$, and $\lim_{x \to d^-} \|u(x)\| = \infty$. This phenomenon is often referred to as "$u$ blows up in finite time".
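A standard illustration of alternative (2), not taken from the notes, is the scalar IVP $y' = y^2$, $y(0) = 1$, whose saturated right solution is $u(x) = 1/(1 - x)$ on $[0, 1)$, so that $d = 1$. The sketch below, with hypothetical function names, checks the ODE by a central difference and tabulates the growth near $d$.

```python
def solution(x):
    """Saturated right solution u(x) = 1 / (1 - x) of y' = y**2, y(0) = 1, on [0, 1)."""
    if not 0.0 <= x < 1.0:
        raise ValueError("the solution is only defined on [0, 1)")
    return 1.0 / (1.0 - x)

def residual(x, h=1e-6):
    """Central-difference check: |u'(x) - u(x)**2| should be close to zero."""
    derivative = (solution(x + h) - solution(x - h)) / (2.0 * h)
    return abs(derivative - solution(x) ** 2)

# Sample points approaching the blow-up time d = 1 from the left.
samples = [1.0 - 10.0 ** (-k) for k in range(1, 7)]
values = [solution(x) for x in samples]

print(residual(0.5))
print(values)
```

Here $f(x, y) = y^2$ is continuous on all of $\mathbb{R} \times \mathbb{R}$, so the solution is not stopped by the boundary of the domain; it fails to be global only because it becomes unbounded.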

4.2 Global Existence theorem

In this section we give some sufficient conditions under which every local solution of an IVP is global. One of them concerns the growth of $f$ w.r.t. $y$: if the growth is at most linear, then we have a global solution.

Theorem 4.14 Let $f : I \times \mathbb{R}^n \to \mathbb{R}^n$ be continuous. Assume that there exist two continuous functions $h, k : I \to \mathbb{R}_+$ (non-negative real-valued) such that

\[ \|f(x, y)\| \le k(x) \|y\| + h(x), \quad \forall\, (x, y) \in I \times \mathbb{R}^n. \tag{4.9} \]

Then for every initial data $(x_0, y_0) \in I \times \mathbb{R}^n$, the IVP has at least one global solution.

Exercise 4.15 Using the global existence theorem, prove that any IVP corresponding to a first order linear system has a unique global solution.

4.3 Continuous dependence

In situations where a physical process is described (modelled) by an initial value problem for a system of ODEs, it is desirable that any errors made in the measurement of either the initial data or the vector field do not influence the solution very much. In mathematical terms, this is known as continuous dependence of the solution of an IVP on the data present in the problem. In fact, the following result asserts that the solution to an IVP has continuous dependence not only on the initial data but also on the vector field $f$.

Exercise 4.16 Try to carefully formulate a mathematical statement on continuous dependence of the solution of an IVP on initial conditions and vector fields.


An honest effort to answer the above exercise would make us understand the difficulty in formulating such a statement. In fact, many introductory books on ODEs do not address this subtle issue, and rather give a weak version of it without warning the reader about the principal difficulties. See, however, the books of Wolfgang [33], Piccinini et al. [23]. We now state a result on continuous dependence, following Wolfgang [33].

Theorem 4.17 (Continuous dependence) Let $\Omega \subseteq \mathbb{R}^n$ be a domain and $I \subseteq \mathbb{R}$ be an interval containing the point $x_0$. Let $J$ be a closed and bounded subinterval of $I$ such that $x_0 \in J$. Let $f : I \times \Omega \to \mathbb{R}^n$ be a continuous function. Let $y(x; x_0, y_0)$ be a solution on $J$ of the initial value problem

\[ y' = f(x, y), \quad y(x_0) = y_0. \tag{4.10} \]

Let $S_\alpha$ denote the $\alpha$-neighbourhood of the graph of $y$, i.e.,

\[ S_\alpha := \big\{ (x, y) : \|y - y(x; x_0, y_0)\| \le \alpha, \ x \in J \big\}. \tag{4.11} \]

Suppose that there exists an $\alpha > 0$ such that $f$ satisfies a Lipschitz condition w.r.t. the variable $y$ on $S_\alpha$. Then the solution $y(x; x_0, y_0)$ depends continuously on the initial values and on the vector field $f$.

That is: given $\epsilon > 0$, there exists a $\delta > 0$ such that if $g$ is continuous on $S_\alpha$ and the inequalities

\[ \|g(x, y) - f(x, y)\| \le \delta \ \text{ on } S_\alpha, \qquad \|\zeta - y_0\| \le \delta \tag{4.12} \]

are satisfied, then every solution $z(x; x_0, \zeta)$ of the IVP

\[ z' = g(x, z), \quad z(x_0) = \zeta \tag{4.13} \]

exists on all of $J$ and satisfies the inequality

\[ \|z(x; x_0, \zeta) - y(x; x_0, y_0)\| \le \epsilon, \quad x \in J. \tag{4.14} \]

Remark 4.18 (i) In words, the above theorem says: any solution of an IVP whose vector field $g$ is near a Lipschitz continuous vector field $f$ and whose initial data $(x_0, \zeta)$ is near $(x_0, y_0)$ stays near the unique solution of the IVP with vector field $f$ and initial data $(x_0, y_0)$.

(ii) Note that, under the hypothesis of the theorem, any IVP with a vector field $g$ which is only continuous also has a solution defined on $J$.

(iii) Note that the above theorem does not answer the third question we posed at the beginning of this chapter. The above theorem does not say anything about the function in (3.3).
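The statement of continuous dependence can be watched in a case where both solutions are known in closed form. The example below is an illustrative assumption, not one from the notes: $f(x, y) = y$ with $y(0) = 1$ on $J = [0, 1]$, perturbed to $g(x, z) = z + \delta$ with $z(0) = 1 + \delta$, so that $|g - f| = \delta$ and the initial values differ by $\delta$. The exact solutions are $y(x) = e^x$ and $z(x) = (1 + 2\delta)e^x - \delta$.

```python
import math

def y(x):
    """Solution of y' = y, y(0) = 1."""
    return math.exp(x)

def z(x, delta):
    """Solution of z' = z + delta, z(0) = 1 + delta (perturbed field and initial value)."""
    return (1.0 + 2.0 * delta) * math.exp(x) - delta

def max_gap(delta, points=101):
    """Largest |z(x) - y(x)| over an equally spaced grid on J = [0, 1]."""
    xs = [i / (points - 1) for i in range(points)]
    return max(abs(z(x, delta) - y(x)) for x in xs)

gaps = {delta: max_gap(delta) for delta in (1e-1, 1e-2, 1e-3)}
for delta, gap in gaps.items():
    # The gap equals delta * (2e - 1): it shrinks linearly with delta.
    print(delta, gap, delta * (2.0 * math.e - 1.0))
```

In this example, given $\epsilon > 0$, the choice $\delta = \epsilon / (2e - 1)$ works on $J = [0, 1]$; on a longer interval the admissible $\delta$ would be smaller.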

4.4 Well-posed problems

A mathematical problem is said to be well-posed (or, properly posed) if it has the EUC property.

(1) Existence: The problem should have at least one solution.

(2) Uniqueness: The problem has at most one solution.

(3) Continuous dependence: The solution depends continuously on the data that are

present in the problem.

Theorem 4.19 The initial value problem for an ODE $y' = f(x, y)$, where $f$ is Lipschitz continuous on a rectangle containing the initial data $(x_0, y_0)$, is well-posed.

Example 4.20 Solving Ax = b is not well-posed. Think why such a statement could be true.
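One way to think about this example is to look at small matrices where each of the three requirements can fail. The sketch below is only an illustration under assumed data: the $2 \times 2$ matrices, the value of `eps`, and the helper `solve_2x2` are hypothetical choices. It uses exact rational arithmetic so that the effect is not blurred by rounding.

```python
from fractions import Fraction

def solve_2x2(matrix, rhs):
    """Solve a 2x2 system by Cramer's rule; return None when the determinant is zero."""
    (a11, a12), (a21, a22) = matrix
    b1, b2 = rhs
    det = a11 * a22 - a12 * a21
    if det == 0:
        return None  # no solution or infinitely many: existence or uniqueness fails
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - b1 * a21) / det)

eps = Fraction(1, 10 ** 8)

# Nearly singular matrix: a change of size eps in b moves the solution by order 1.
nearly_singular = ((Fraction(1), Fraction(1)), (Fraction(1), 1 + eps))
x_a = solve_2x2(nearly_singular, (Fraction(2), Fraction(2)))
x_b = solve_2x2(nearly_singular, (Fraction(2), 2 + eps))

# Singular matrix: the system has infinitely many solutions for this right-hand side.
singular = ((Fraction(1), Fraction(2)), (Fraction(2), Fraction(4)))
x_c = solve_2x2(singular, (Fraction(3), Fraction(6)))

print(x_a, x_b, x_c)
```

For a singular $A$ existence or uniqueness fails, and for a nearly singular $A$ the solution is very sensitive to the data $b$, which is the practical face of a failure of continuous dependence.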


Lecture-5

$2 \times 2$ Linear systems & Fundamental pairs of solutions

5.1 The Linear System

We consider the two-dimensional, linear homogeneous first order system of ODE