
International Journal of Computer Trends and Technology (IJCTT) volume 6 number 1 Dec 2013

ISSN: 2231-2803 http://www.ijcttjournal.org Page1

Feature Subset Selection with Fast Algorithm Implementation

K. Suman#1, S. Thirumagal#2

#1 M.E., Computer Science and Engineering, Chendhuran College of Engineering and Technology, Anna University, Tamilnadu, India

#2 Assistant Professor, Department of IT, Chendhuran College of Engineering and Technology, Anna University, Tamilnadu, India

Abstract-- This paper, Feature Subset Selection with FAST Algorithm Implementation, aims to show what happens between client and server during a search. Users know how to send a request for a particular item, how to receive a response to that request, and how the system displays the results, but the internal process of searching records in a large database is usually hidden from them. This system clearly shows how that internal search process works. In text classification, the dimensionality of the feature vector is usually too large to manage. The problems to be handled are: a) the shortcomings of existing feature clustering methods; b) the desired number of extracted features has to be specified in advance; c) the variance of the underlying cluster is not considered when calculating similarities; d) the dimensionality of feature vectors for text classification must be reduced so that classification runs faster. These problems are handled by applying the FAST algorithm together with association rule mining.

Index Terms: FAST algorithm, feature selection, redundant data, text classification, clustering, rule mining.

1. INTRODUCTION

In machine learning and statistics, feature selection, also known as variable selection, attribute selection or variable subset selection, is the process of selecting a subset of relevant features for use in model construction. The central assumption when using a feature selection technique is that the data contain many redundant or irrelevant features. Redundant features are those that provide no more information than the currently selected features, and irrelevant features provide no useful information in any context. Feature selection techniques are a subset of the more general field of feature extraction. Feature extraction creates new features from functions of the original features, whereas feature selection returns a subset of the features. Feature selection techniques are often used in domains where there are many features and comparatively few samples (data points). The archetypal case is the use of feature selection in analysing DNA microarrays, where there are many thousands of features and a few tens to hundreds of samples. Feature selection techniques provide three main benefits when constructing predictive models: improved model interpretability, shorter training times, and enhanced generalisation by reducing overfitting.

A feature selection algorithm can be seen as the combination of a search technique for proposing new feature subsets and an evaluation measure that scores the different feature subsets. The simplest algorithm tests each possible subset of features and finds the one that minimizes the error rate. This is an exhaustive search of the space, and it is computationally intractable for all but the smallest feature sets. The choice of evaluation metric heavily influences the algorithm, and it is these evaluation metrics that distinguish the three main categories of feature selection algorithms: wrappers, filters and embedded methods.
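As a minimal sketch of the exhaustive search described above, the Python snippet below scores every non-empty subset of n features, which costs 2^n - 1 evaluations: 15 for 4 features, but already more than a million for 20. The `error_rate` callback and the toy scorer are hypothetical stand-ins for training and testing a real model on each subset.

```python
from itertools import combinations
from typing import Callable, Tuple


def exhaustive_search(
    n_features: int, error_rate: Callable[[Tuple[int, ...]], float]
) -> Tuple[Tuple[int, ...], float]:
    """Score every non-empty subset of feature indices and keep the one with
    the lowest error. `error_rate` is a hypothetical, caller-supplied scorer."""
    best_subset: Tuple[int, ...] = ()
    best_error = float("inf")
    for size in range(1, n_features + 1):
        for subset in combinations(range(n_features), size):
            err = error_rate(subset)
            # Strict '<' keeps the first subset found on ties, which is also the smallest.
            if err < best_error:
                best_subset, best_error = subset, err
    return best_subset, best_error


# Toy scorer (assumption): pretend the ideal subset is {3}, with a small
# penalty per feature so that smaller subsets are preferred.
calls = []


def toy_error(subset):
    calls.append(subset)  # record every evaluation to show the 2^n - 1 cost
    return abs(sum(subset) - 3) + 0.1 * len(subset)


best, err = exhaustive_search(4, toy_error)
print(best, len(calls))  # (3,) 15
```

The call count grows exponentially with the number of features, which is why the search and evaluation strategies below exist.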

Wrapper methods use a predictive model to score feature subsets. Each new subset is used to train a model, which is tested on a hold-out set. Counting the number of mistakes made on that hold-out set (the error rate of the model) gives the score for that subset. As wrapper methods train a new model for each subset, they are very computationally intensive, but they usually provide the best-performing feature set for that particular type of model.
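The wrapper scoring step can be sketched as follows. The choice of a nearest-centroid classifier is an assumption made only to keep the example self-contained; any predictive model could be trained in its place. The function name `holdout_errors` and the toy data are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, class label)


def holdout_errors(subset: Sequence[int], train: List[Sample], holdout: List[Sample]) -> int:
    """Wrapper score: train a nearest-centroid classifier on the chosen
    features and count its mistakes on the hold-out set (lower is better)."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, label in train:
        acc = sums.setdefault(label, [0.0] * len(subset))
        for k, i in enumerate(subset):
            acc[k] += x[i]
        counts[label] = counts.get(label, 0) + 1
    # Class centroid = mean of the selected features over that class's samples.
    centroids = {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}

    def predict(x: Sequence[float]) -> str:
        proj = [x[i] for i in subset]
        return min(centroids,
                   key=lambda lab: sum((a - b) ** 2 for a, b in zip(proj, centroids[lab])))

    return sum(1 for x, label in holdout if predict(x) != label)


train = [((0.0, 5.0), "a"), ((0.2, 1.0), "a"), ((1.0, 4.0), "b"), ((1.2, 0.0), "b")]
holdout = [((0.1, 0.5), "a"), ((1.1, 5.0), "b")]
print(holdout_errors((0,), train, holdout), holdout_errors((1,), train, holdout))  # 0 2
```

Here feature 0 separates the classes and scores 0 mistakes, while feature 1 scores 2; a wrapper would pair this scorer with a search strategy such as the exhaustive search above.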

Filter methods use a proxy measure instead of the error rate to score a feature subset. This measure is chosen to be fast to compute, whilst still capturing the usefulness of the feature set. Common measures include mutual information, the Pearson product-moment correlation coefficient, and inter/intra-class distance. Filters are usually less computationally intensive than wrappers, but they produce a feature set that is not tuned to a specific type of predictive model. Many filters provide a feature ranking rather than an explicit best feature subset, and the cutoff point in the ranking is chosen via cross-validation.
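A filter that ranks features by the absolute Pearson correlation with the target can be sketched in a few lines. The function names and toy columns are hypothetical, and choosing the cutoff in the ranking (for example by cross-validation) is left out of this sketch.

```python
from math import sqrt
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0  # a constant column scores 0


def rank_features(columns: List[Sequence[float]], target: Sequence[float]) -> List[int]:
    """Filter method: rank feature indices by |r| with the target, best first."""
    scores = [abs(pearson(col, target)) for col in columns]
    return sorted(range(len(columns)), key=lambda i: scores[i], reverse=True)


target = [1, 2, 3, 4, 5]
columns = [[5, 4, 3, 2, 1], [1, 3, 2, 5, 4], [2, 2, 2, 2, 2]]
print(rank_features(columns, target))  # [0, 1, 2]
```

No model is trained here, which is what makes filters cheap; the price is that the ranking is not tuned to any particular classifier.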

Embedded methods are a catch-all group of techniques that perform feature selection as part of the model construction process. A popular example is the Recursive Feature Elimination algorithm, commonly used with Support Vector Machines to repeatedly construct a model and remove features with low weights. These approaches tend to lie between filters and wrappers in terms of computational complexity.
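The Recursive Feature Elimination loop can be sketched as below. Instead of a Support Vector Machine, this sketch swaps in an ordinary least-squares linear model (no intercept) so that it runs with the standard library only; the function names and data are hypothetical. Raw weights depend on feature scale, so in practice features would normally be standardised first.

```python
from typing import List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def least_squares(columns: List[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Linear-model weights (no intercept) from the normal equations X^T X w = X^T y."""
    k = len(columns)
    xtx = [[sum(p * q for p, q in zip(columns[i], columns[j])) for j in range(k)]
           for i in range(k)]
    xty = [sum(p * q for p, q in zip(columns[i], y)) for i in range(k)]
    return solve(xtx, xty)


def rfe(columns: List[Sequence[float]], y: Sequence[float], n_keep: int) -> List[int]:
    """Recursive feature elimination: refit, drop the feature with the smallest |weight|, repeat."""
    remaining = list(range(len(columns)))
    while len(remaining) > n_keep:
        w = least_squares([columns[i] for i in remaining], y)
        weakest = min(range(len(remaining)), key=lambda k: abs(w[k]))
        remaining.pop(weakest)
    return remaining


x0, x1, x2 = [1, 2, 3, 4], [1, 0, 1, 0], [0, 1, 1, 3]
y = [3 * a + 2 * c for a, c in zip(x0, x2)]  # x1 plays no role in y
print(rfe([x0, x1, x2], y, n_keep=2))  # [0, 2]
```

The selection happens inside model fitting (through the learned weights), which is what places RFE among the embedded methods.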

Subset selection evaluates a subset of features as a group for suitability. Subset selection algorithms can be broken into wrappers, filters and embedded methods. Wrappers use a search algorithm to search through the space of possible features and evaluate each subset by running a model on the subset. Wrappers can be computationally expensive and carry a risk of overfitting to the model. Filters are similar to wrappers in the search approach, but instead of evaluating against a model, a simpler filter is evaluated. Embedded techniques are embedded in, and specific to, a model.

The Fast clustering-based feature selection algorithm (FAST) works in two steps. In the first step, features are divided into clusters using graph-theoretic clustering methods. In the second step, the most representative feature of each cluster, i.e. the one most strongly related to the target class, is selected to form the final subset of features. Because features in different clusters are relatively independent, the clustering-based strategy of FAST has a high probability of producing a subset of useful and independent features. The experimental results show that, compared with five other types of feature subset selection algorithms, the proposed algorithm not only reduces the number of features but also improves the performance of four well-known types of classifiers. A good feature subset is one that contains features highly correlated with the target, yet uncorrelated with each other. Unlike the algorithms discussed above, the proposed FAST algorithm applies a clustering-based method to the features.
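The two steps of FAST can be sketched as follows. This is not the authors' implementation: it assumes discrete features (for example binarised term occurrences), uses symmetric uncertainty (SU) as the correlation measure, builds the minimum spanning tree (mentioned in the conclusion) with Prim's algorithm, and cuts every tree edge whose weight is smaller than the relevance of both of its endpoints. These choices follow how FAST is commonly described, but they are assumptions here, as are the function names and the relevance threshold `theta`.

```python
from collections import Counter
from math import log2
from typing import Callable, Dict, Hashable, List, Sequence, Tuple


def entropy(values: Sequence[Hashable]) -> float:
    """Shannon entropy (in bits) of a sequence of discrete values."""
    n = len(values)
    return -sum((c / n) * log2(c / n) for c in Counter(values).values())


def symmetric_uncertainty(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """SU(X, Y) = 2 * IG(X | Y) / (H(X) + H(Y)), a correlation measure in [0, 1]."""
    hx, hy = entropy(x), entropy(y)
    if hx + hy == 0:
        return 0.0
    gain = hx + hy - entropy(list(zip(x, y)))  # information gain (mutual information)
    return 2.0 * gain / (hx + hy)


def prim_mst(nodes: List[int], weight: Callable[[int, int], float]) -> List[Tuple[int, int]]:
    """Prim's algorithm on the complete graph over `nodes`; returns the tree edges."""
    if not nodes:
        return []
    in_tree, edges = [nodes[0]], []
    while len(in_tree) < len(nodes):
        u, v = min(((u, v) for u in in_tree for v in nodes if v not in in_tree),
                   key=lambda e: weight(*e))
        in_tree.append(v)
        edges.append((u, v))
    return edges


def fast_select(features: List[Sequence[Hashable]], target: Sequence[Hashable],
                theta: float = 0.0) -> List[int]:
    """Return the indices of one representative feature per cluster."""
    su_c = [symmetric_uncertainty(f, target) for f in features]  # relevance to the class
    relevant = [i for i, s in enumerate(su_c) if s > theta]       # drop irrelevant features

    def su(i: int, j: int) -> float:
        return symmetric_uncertainty(features[i], features[j])  # feature-feature correlation

    # Step 1: MST over the relevant features, then cut each edge that is
    # weaker than the class relevance of both of its endpoints.
    kept = [(i, j) for i, j in prim_mst(relevant, su)
            if not (su(i, j) < su_c[i] and su(i, j) < su_c[j])]

    # Each remaining tree of the forest is one cluster (union-find for components).
    parent: Dict[int, int] = {i: i for i in relevant}

    def find(i: int) -> int:
        while parent[i] != i:
            i = parent[i]
        return i

    for i, j in kept:
        parent[find(i)] = find(j)
    clusters: Dict[int, List[int]] = {}
    for i in relevant:
        clusters.setdefault(find(i), []).append(i)

    # Step 2: keep the feature most strongly related to the class from each cluster.
    return sorted(max(members, key=lambda i: su_c[i]) for members in clusters.values())


a = [0, 0, 1, 1, 0, 0, 1, 1]
b = [0, 1, 0, 1, 0, 1, 0, 1]
noise = [0, 0, 0, 0, 1, 1, 1, 1]
label = [2 * p + q for p, q in zip(a, b)]
print(fast_select([a, b, noise], label))  # [0, 1]: two independent useful features
print(fast_select([a, list(a), b], a))    # [0]: the duplicate of feature 0 is redundant
```

In the first call the irrelevant `noise` feature is filtered out and `a` and `b` end up in separate clusters; in the second, the exact copy of `a` stays in the same cluster and only one representative survives.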

This paper discusses feature selection, the FAST algorithm, text classification and related topics. The next section reviews the literature.

2. LITERATURE REVIEW

This section provides an overview and a critical evaluation of the literature relating to the research problem. A literature review is an important step in the software development process.

Paper [1] presents a temporal association rule mining approach named T-patterns, applied to highly challenging floating train data. The aim is to discover temporal associations between pairs of time-stamped alarms, called events, which can predict the occurrence of severe failures within a complex, bursty environment. Its main advantage is that association rule mining produces efficient cluster-wise data maintenance; its main drawback is that the cluster set is too large to handle.

Paper [2] proposes an algorithm for mining highly coherent rules that takes the properties of propositional logic into consideration, so the derived association rules may be more meaningful and reliable. Its advantage is that the cluster set is reduced by applying feature subset manipulations; its drawback is that the resulting probability is low.

In paper [3], the basic objective of feature subset selection is to reduce the dimensionality of the problem while retaining the most discriminatory information necessary for accurate classification. Feature subsets must therefore be evaluated for their ability to discriminate between different classes of patterns. Because the two individually best features do not necessarily form the best two-feature subset, finding the best subset requires evaluating all possible subsets of features. As the number of features increases, the number of possible feature subsets grows exponentially, leading to a combinatorial optimization problem. The advantage of this paper is that an optimal subset is created, so less time is required for manipulation; the drawbacks are that data security is an issue and that there is a high possibility of data loss.

Paper [4] presents a new population-based feature selection method that uses the dependency between features to guide the search. For feature selection, population-based methods aim to produce better, or fitter, future generations that contain more informative subsets of features. Feature subset selection is well known to be a very challenging optimization problem, especially for datasets that contain a large number of features. The main advantage of this paper is that it applies a subset classification algorithm to simplify the data; the drawback is that it requires more time to manipulate the data.

Paper [5] proposes a new hybrid ant colony optimization (ACO) algorithm for feature selection (FS), called ACOFS, which uses a neural network. A key aspect of this algorithm is the selection of a reduced-size subset of salient features. ACOFS uses a hybrid search technique that combines the advantages of the wrapper and filter approaches. Its performance is evaluated on eight benchmark classification datasets and one gene expression dataset, with dimensions varying from 9 to 2000. Its advantage is that a hybrid methodology is applied to optimize the data; its drawback is that it is inefficient for large data sets.

Paper [6] develops a feature subset selection algorithm based on branch and bound techniques to select the best subset of m features from an n-feature set. Existing procedures for feature subset selection, such as sequential selection and dynamic programming, do not guarantee the optimality of the selected feature subset, while exhaustive search is generally computationally infeasible. The algorithm is very efficient and selects the best subset without exhaustive search. Its advantage is that pointers are maintained to reach the data efficiently; its drawback is that the inner looping becomes very confusing for large clusters.
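The branch and bound idea summarised for [6] relies on a monotonic criterion: removing a feature never increases the score, so once a partial subset already scores no better than the best complete m-subset found, none of its own subsets can win and the whole branch is skipped. The sketch below is a generic illustration under that assumption, not necessarily the exact procedure of [6]; the additive criterion and the `weights` values are hypothetical.

```python
from typing import Callable, Optional, Tuple

Subset = Tuple[int, ...]


def branch_and_bound(n: int, m: int,
                     criterion: Callable[[Subset], float]) -> Tuple[Optional[Subset], float]:
    """Find the best m-feature subset of n features, assuming `criterion` is
    monotonic: removing a feature never increases the score."""
    best_subset: Optional[Subset] = None
    best_score = float("-inf")

    def search(subset: Subset, start: int) -> None:
        nonlocal best_subset, best_score
        score = criterion(subset)
        if score <= best_score:
            return  # bound: by monotonicity no subset of `subset` can do better
        if len(subset) == m:
            best_subset, best_score = subset, score
            return
        # Branch: remove one more feature, only at positions >= start, so each
        # m-subset is reached by exactly one removal order.
        for k in range(start, len(subset)):
            search(subset[:k] + subset[k + 1:], k)

    search(tuple(range(n)), 0)
    return best_subset, best_score


weights = [3, 1, 4, 1, 5]  # hypothetical per-feature merits
print(branch_and_bound(5, 2, lambda s: sum(weights[i] for i in s)))  # ((2, 4), 9)
```

The result is the same as an exhaustive search over all m-subsets would give, but pruned branches are never expanded; how much is saved depends on the criterion and the data.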

3. PROPOSED APPROACH

In the proposed approach, feature subset selection is viewed as the process of identifying and eliminating as many irrelevant and redundant features as possible. This is because irrelevant features do not contribute to predictive accuracy, and redundant features do not help to obtain a better predictor, since they mostly provide information that is already present in other features.

In past systems, users could store data on a server and retrieve it without any knowledge of how it is maintained or processed. In the present system, users know how to send a request for a particular item, how to receive a response to that request, and how the system displays the results, but the internal process of searching records in a large database is normally hidden. This system clearly shows how that internal search process works, which is its main advantage. In text classification, the dimensionality of the feature vector is usually too large to manage. The present system therefore eliminates redundant data by finding the representative data, reduces the dimensions of the feature sets, and finds the set of vectors that best separates the patterns. There are two ways of performing feature reduction: feature selection and feature extraction. Good feature subsets contain features that are highly correlated with (predictive of) the class, yet uncorrelated with each other. The system aims to deal efficiently and effectively with both irrelevant and redundant features and to obtain a good feature subset. In the future, the same system could be developed as a mobile application to support the same procedure in mobile environments, so that users can easily manipulate their data from any place.

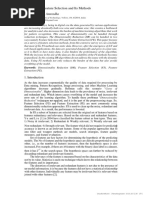

4. SYSTEM DESIGN

4.1 SYSTEM ARCHITECTURE

Algorithms generally show results for a single aspect, such as performance, speed or accuracy. Here, however, the requirement is to deliver all of these in a single algorithm. The system starts by collecting a transactional database, processes it with association rules, proceeds with rule mining, and finally ends with sensitive rule mining. Overall, the goal is to apply various association rules to generate results more quickly and so improve the algorithm's performance.

Fig. a. System Architecture

4.2 MODULES

A. User Module

B. Circulated Clustering

C. Text Detachment

D. Association Rule Mining

E. Text Organization

F. Data Representation

A. USER MODULE

In this module, users are authenticated before they can access the details presented in the ontology system. Before accessing or searching the details, a user must have an account; otherwise they must register first.

B. CIRCULATED CLUSTERING

A cluster is a combination of various features, including text subsets. Clustering has been used to group words based on their participation in particular grammatical relations with other words. Here, distributed clustering focuses on clusters with various text subsets. In this module, the system can manage clusters with various classifications of data.

C. TEXT DETACHMENT

Text detachment is a filtration process that uses the k-Means algorithm to filter the combinations of subsets present in a cluster into matching clusters belonging to the text head. The aim is an algorithm that can efficiently and effectively deal with both irrelevant and redundant features and acquire a good feature subset.
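The k-Means step used by text detachment can be sketched as plain Lloyd iterations over term-frequency vectors. The paper does not specify the initialisation or distance, so seeding with the first k documents and using squared Euclidean distance are assumptions of this sketch, as are the function names and the toy vocabulary.

```python
from typing import List, Sequence, Tuple


def squared_distance(p: Sequence[float], q: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points: List[Sequence[float]], k: int,
           max_iter: int = 100) -> Tuple[List[int], List[Tuple[float, ...]]]:
    """Plain k-Means (Lloyd's algorithm); returns a cluster label per point and the centroids."""
    centroids = [tuple(map(float, p)) for p in points[:k]]  # naive init: first k points
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: squared_distance(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids


# Toy term-frequency vectors over the hypothetical vocabulary
# ["data", "mining", "train", "rail"].
docs = [[3, 2, 0, 0], [0, 0, 2, 3], [2, 3, 0, 0], [0, 1, 3, 2]]
labels, _ = kmeans(docs, k=2)
print(labels)  # [0, 1, 0, 1]
```

The two "data mining" documents and the two "train/rail" documents end up in separate clusters; with real text, a better seeding scheme (for example several random restarts) would normally be used.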

D. ASSOCIATION RULE MINING

Association rule mining is a widely used method for discovering interesting relations between variables in large databases or data warehouses. It is intended to identify strong rules discovered in databases using different measures of interestingness. With the help of this rule mining, the system can associate the text clusters with their respective heads based on the internal features of the data.
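Support and confidence are the most common measures of interestingness for a rule X -> Y: support is the fraction of transactions containing X and Y, and confidence is support(X and Y) / support(X). The sketch below enumerates itemsets by brute force rather than with an optimised miner such as Apriori; the function names, thresholds and item labels are hypothetical.

```python
from itertools import combinations
from typing import FrozenSet, List, Sequence, Tuple

Rule = Tuple[Tuple[str, ...], Tuple[str, ...], float, float]


def support(itemset: Sequence[str], transactions: List[FrozenSet[str]]) -> float:
    """Fraction of transactions that contain every item of `itemset`."""
    items = frozenset(itemset)
    return sum(1 for t in transactions if items <= t) / len(transactions)


def confidence(lhs: Sequence[str], rhs: Sequence[str],
               transactions: List[FrozenSet[str]]) -> float:
    """conf(lhs -> rhs) = support(lhs and rhs together) / support(lhs)."""
    base = support(lhs, transactions)
    return support(tuple(lhs) + tuple(rhs), transactions) / base if base else 0.0


def strong_rules(transactions: List[FrozenSet[str]], min_support: float,
                 min_confidence: float, max_len: int = 3) -> List[Rule]:
    """Brute-force rule generation: frequent itemsets, then every lhs -> rhs split."""
    items = sorted(set().union(*transactions))
    rules: List[Rule] = []
    for size in range(2, max_len + 1):
        for itemset in combinations(items, size):
            sup = support(itemset, transactions)
            if sup < min_support:
                continue  # not frequent: no rule from this itemset can be strong
            for k in range(1, size):
                for lhs in combinations(itemset, k):
                    rhs = tuple(i for i in itemset if i not in lhs)
                    conf = confidence(lhs, rhs, transactions)
                    if conf >= min_confidence:
                        rules.append((lhs, rhs, sup, conf))
    return rules


baskets = [frozenset(t) for t in ({"a", "b"}, {"a", "b", "c"}, {"a", "c"}, {"b", "c"}, {"a", "b"})]
for lhs, rhs, sup, conf in strong_rules(baskets, min_support=0.5, min_confidence=0.7):
    print(lhs, "->", rhs, round(sup, 2), round(conf, 2))
# ('a',) -> ('b',) 0.6 0.75
# ('b',) -> ('a',) 0.6 0.75
```

Only the itemset {a, b} reaches the 0.5 support threshold, and both rules derived from it pass the confidence threshold.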

E. TEXT ORGANIZATION

Text organization has been widely studied in the data mining, machine learning, database, and information retrieval communities, with applications in many diverse domains, such as target marketing, medical diagnosis, newsgroup filtering, and document organization. The text organization technique assumes categorical values for the labels, though it is possible to use continuous values as labels; the latter is referred to as the regression modeling problem. The problem of text organization is closely related to the classification of records with set-valued features. However, that model assumes that only information about the presence or absence of words in a document is used. In reality, the frequency of words also plays a helpful role in the classification process, and the typical domain size of text data (the size of the entire vocabulary) is much greater than that of a typical set-valued classification problem.
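The difference between the set-valued (presence/absence) view and the frequency view of a document can be shown with a bag-of-words sketch. The whitespace tokenisation, the function name and the toy vocabulary are simplifying assumptions.

```python
from collections import Counter
from typing import List, Sequence


def vectorize(document: str, vocabulary: Sequence[str], binary: bool = False) -> List[int]:
    """Bag-of-words vector: word counts, or 0/1 presence flags when binary=True."""
    counts = Counter(document.lower().split())  # naive whitespace tokenisation
    return [min(counts[w], 1) if binary else counts[w] for w in vocabulary]


vocab = ["data", "mining", "rule"]
doc = "Data mining finds data patterns and data rules"
print(vectorize(doc, vocab))               # [3, 1, 0]: word frequencies
print(vectorize(doc, vocab, binary=True))  # [1, 1, 0]: presence/absence only
```

The binary vector discards the fact that "data" occurs three times, which is exactly the information the frequency representation keeps for the classifier.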

F. DATA REPRESENTATION

With the help of text classification and association rule mining, the cluster is assembled with the proper subset and correct header representations. At this stage, the system can easily find the text representation with the maximum threshold value.

4.3 SCREEN SHOTS

Screenshots (images not reproduced): A. Main form; B. Load Transactional Data; C. Select the Data; D. Text Classification.

5. CONCLUSION AND FUTURE ENHANCEMENT

The FAST algorithm combined with association rule mining gives flexible results to users, such as removing irrelevant features from the original subset and constructing a minimum spanning tree from the relevant subset present in the data store. By partitioning the minimum spanning tree, the text representation can easily be identified from the features. Association rule mining yields the final data set with header representation, and the FAST algorithm with the k-Means strategy applied provides efficient data management and faster performance. The informative rule set is significantly smaller than the association rule set, particularly when the minimum support is small. The proposed work has characterized the relationship between the informative rule set and the non-redundant association rule set, and found that the informative rule set is a subset of the non-redundant association rule set.

ACKNOWLEDGMENT

We sincerely thank Assistant Prof. S. Thirumagal for her advice and guidance at the start of this article. Her guidance was also essential during several stages of this article, and her quick, invaluable insights were always very helpful. Her hard work and passion for research have set an example that we would like to follow. We greatly appreciate her interest and enthusiasm during this work. Finally, we thank the Editor-in-Chief, the Associate Editor and the anonymous referees for their comments.

REFERENCES

[1] W. Sammouri, "Temporal association rule mining for the preventive diagnosis of onboard subsystems within floating train data framework," pp. 1351-1356, June 2012.

[2] Chun-Hao Chen, "A High Coherent Association Rule Mining Algorithm," in Proceedings of the IEEE International Conference on Technologies and Applications of Artificial Intelligence, pp. 1-4, Nov 2012.

[3] B. Chakraborty, "Bio-inspired algorithms for optimal feature subset selection," in Proceedings of the Fifth IEEE International Conference on Computers and Devices for Communication, pp. 1-7, Dec 2012.

[4] Ahmed Al-Ani, Rami N. Khushaba, "A Population Based Feature Subset Selection Algorithm Guided by Fuzzy Feature Dependency," vol. 322, pp. 430-438, Dec 2012.

[5] Md. Monirul Kabir, "A new hybrid ant colony optimization algorithm for feature selection," vol. 39, issue 3, pp. 3747-3763, Feb 2012.

[6] P. M. Narendra, K. Fukunaga, "A Branch and Bound Algorithm for Feature Subset Selection," vol. C-26, issue 9, pp. 917-922, Aug 2006.

AUTHORS PROFILE

K. Suman is currently a PG scholar in Computer Science and Engineering in the Department of Computer Science and Engineering at Chendhuran College of Engineering and Technology, Pudukkottai. He received his Bachelor's degree in Information Technology from Sudharsan Engineering College, Pudukkottai, Tamilnadu. His research areas include data mining, grid computing and wireless sensor networks.

S. Thirumagal is currently working as an Assistant Professor in the Department of Information Technology at Chendhuran College of Engineering and Technology, Pudukkottai. She received her Bachelor's degree from Shanmuganathan Engineering College, Pudukkottai, and her Master's degree from Infant Jesus College of Engineering, Thoothukudi, Tamilnadu. She has published one national conference paper and one international journal paper. Her main research interests lie in the area of data mining and data warehousing.

You might also like

- Fast Clustering Based Feature Selection: Ubed S. Attar, Ajinkya N. Bapat, Nilesh S. Bhagure, Popat A. BhesarNo ratings yetFast Clustering Based Feature Selection: Ubed S. Attar, Ajinkya N. Bapat, Nilesh S. Bhagure, Popat A. Bhesar7 pages

- Literature Review On Feature Subset Selection TechniquesNo ratings yetLiterature Review On Feature Subset Selection Techniques3 pages

- International Journal of Engineering Research and Development (IJERD)No ratings yetInternational Journal of Engineering Research and Development (IJERD)5 pages

- An Improved Fast Clustering Method For Feature Subset Selection On High-Dimensional Data ClusteringNo ratings yetAn Improved Fast Clustering Method For Feature Subset Selection On High-Dimensional Data Clustering5 pages

- A Review of Feature Selection and Its Methods: Cybernetics and Information Technologies March 2019No ratings yetA Review of Feature Selection and Its Methods: Cybernetics and Information Technologies March 201925 pages

- A Novel Approach For Feature Selection Based On Correlation Measures CFS and Chi SquareNo ratings yetA Novel Approach For Feature Selection Based On Correlation Measures CFS and Chi Square13 pages

- A Review of Feature Selection Methods On Synthetic DataNo ratings yetA Review of Feature Selection Methods On Synthetic Data37 pages

- A Comparative Study Between Feature Selection Algorithms - OkNo ratings yetA Comparative Study Between Feature Selection Algorithms - Ok10 pages

- Optimal Feature Selection from VMware ESXi 5.1 Feature SetNo ratings yetOptimal Feature Selection from VMware ESXi 5.1 Feature Set8 pages

- 1.a Faster Clustering-Based Feature Subset Selection Algorithm For High Dimensional DataNo ratings yet1.a Faster Clustering-Based Feature Subset Selection Algorithm For High Dimensional Data3 pages

- Feature Selection Techniques For Microarray Dataset: A ReviewNo ratings yetFeature Selection Techniques For Microarray Dataset: A Review8 pages

- Feature Subset Selection: A Correlation Based Filter ApproachNo ratings yetFeature Subset Selection: A Correlation Based Filter Approach4 pages

- A Study On Feature Selection Techniques in Bio Informatics100% (1)A Study On Feature Selection Techniques in Bio Informatics7 pages

- Toward Integrating Feature Selection Algorithms For Classification and Clustering-M7s PDFNo ratings yetToward Integrating Feature Selection Algorithms For Classification and Clustering-M7s PDF12 pages

- E-Note 14653 Content Document 20231228101402AMNo ratings yetE-Note 14653 Content Document 20231228101402AM10 pages

- Kernels, Model Selection and Feature SelectionNo ratings yetKernels, Model Selection and Feature Selection5 pages

- 2015-Elsevier-Multi-objective-optimization-of-shared-nearest-neighbor-similarity-for-feature-selectionNo ratings yet2015-Elsevier-Multi-objective-optimization-of-shared-nearest-neighbor-similarity-for-feature-selection12 pages

- A Review of Feature Selection and Its MethodsNo ratings yetA Review of Feature Selection and Its Methods15 pages

- Booster in High Dimensional Data ClassificationNo ratings yetBooster in High Dimensional Data Classification5 pages

- A Fast Clustering-Based Feature Subset Selection Algorithm For High Dimensional DataNo ratings yetA Fast Clustering-Based Feature Subset Selection Algorithm For High Dimensional Data8 pages

- Improving Floating Search Feature Selection Using Genetic AlgorithmNo ratings yetImproving Floating Search Feature Selection Using Genetic Algorithm19 pages

- 1-2 The Problem 3-4 Proposed Solution 5-7 The Experiment 8-9 Experimental Results 10-11 Conclusion 12 References 13No ratings yet1-2 The Problem 3-4 Proposed Solution 5-7 The Experiment 8-9 Experimental Results 10-11 Conclusion 12 References 1314 pages

- Independent Feature Elimination in High Dimensional Data: Empirical Study by Applying Learning Vector Quantization MethodNo ratings yetIndependent Feature Elimination in High Dimensional Data: Empirical Study by Applying Learning Vector Quantization Method6 pages

- Clustering Based Attribute Subset Selection Using Fast AlgorithmNo ratings yetClustering Based Attribute Subset Selection Using Fast Algorithm8 pages

- A Review of Feature Selection Methods With ApplicationsNo ratings yetA Review of Feature Selection Methods With Applications6 pages

- Feature Selection Techniques in Machine LearningNo ratings yetFeature Selection Techniques in Machine Learning9 pages

- On_the_behavior_of_feature_selection_methods_dealing_with_noise_and_relevance_over_synthetic_scenariosNo ratings yetOn_the_behavior_of_feature_selection_methods_dealing_with_noise_and_relevance_over_synthetic_scenarios8 pages

- DATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABFrom EverandDATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABNo ratings yet

- DATA MINING and MACHINE LEARNING. CLASSIFICATION PREDICTIVE TECHNIQUES: SUPPORT VECTOR MACHINE, LOGISTIC REGRESSION, DISCRIMINANT ANALYSIS and DECISION TREES: Examples with MATLABFrom EverandDATA MINING and MACHINE LEARNING. CLASSIFICATION PREDICTIVE TECHNIQUES: SUPPORT VECTOR MACHINE, LOGISTIC REGRESSION, DISCRIMINANT ANALYSIS and DECISION TREES: Examples with MATLABNo ratings yet

- DATA MINING and MACHINE LEARNING: CLUSTER ANALYSIS and kNN CLASSIFIERS. Examples with MATLABFrom EverandDATA MINING and MACHINE LEARNING: CLUSTER ANALYSIS and kNN CLASSIFIERS. Examples with MATLABNo ratings yet

- Fabrication of High Speed Indication and Automatic Pneumatic Braking System0% (1)Fabrication of High Speed Indication and Automatic Pneumatic Braking System7 pages

- Design, Development and Performance Evaluation of Solar Dryer With Mirror Booster For Red Chilli (Capsicum Annum)No ratings yetDesign, Development and Performance Evaluation of Solar Dryer With Mirror Booster For Red Chilli (Capsicum Annum)7 pages

- Implementation of Single Stage Three Level Power Factor Correction AC-DC Converter With Phase Shift ModulationNo ratings yetImplementation of Single Stage Three Level Power Factor Correction AC-DC Converter With Phase Shift Modulation6 pages

- Non-Linear Static Analysis of Multi-Storied Building100% (1)Non-Linear Static Analysis of Multi-Storied Building5 pages

- FPGA Based Design and Implementation of Image Edge Detection Using Xilinx System Generator

- An Efficient and Empirical Model of Distributed Clustering

- Design and Implementation of Multiple Output Switch Mode Power Supply

- Experimental Analysis of Tobacco Seed Oil Blends With Diesel in Single Cylinder Ci-Engine

- Analysis of The Fixed Window Functions in The Fractional Fourier Domain

- Statistics A Tool for Social Research Canadian 3rd Edition Healey Test Bank - Read Now With The Full Version Of All Chapters

- Artgo - Intrinsic Viscosity - Single Point

- Introductory Statistics 8th Ed by Mann ( PDFDrive )-16-20

- Metropolitan University, Sylhet: (Answer All The Questions)

- Gringarten Deutsch 2001 - Variogram Interpretation and Modeling

- M.Sc. Course in Applied Mathematics With Oceanology and Computer Programming Semester-IV Paper-MTM402 Unit-1

- 1-Quantitative Decision Making and Overview PDF

- Stata Item Response Theory Reference Manual: Release 17

- Subject: Statistics and Probability Grade Level: 11 Quarter: FIRST Week: 1

- Full Download of Design and Analysis of Experiments 8th Edition Montgomery Solutions Manual in PDF DOCX Format

- Lembar Jawaban Skillab Evidence Based Edicine (Ebm) : Parameter Rerata SD RERATA+2sd Nilai Abnormalitas

- The Locomotion of The Cockroach Periplaneta Americana

- Download full Introduction to Experimental Linguistics Christelle Gillioz ebook all chapters

- Classification Through Machine Learning Technique: C4.5 Algorithm Based On Various Entropies

- 6 The Korean Wave - A Quantitative Study On K-Pop's Aesthetic Presence in Philippine Multi-Media

- ALY6010 - Project 3 Document - Electronic Keno - v1 PDF

- Fast Clustering Based Feature Selection: Ubed S. Attar, Ajinkya N. Bapat, Nilesh S. Bhagure, Popat A. Bhesar

- Literature Review On Feature Subset Selection Techniques

- International Journal of Engineering Research and Development (IJERD)

- An Improved Fast Clustering Method For Feature Subset Selection On High-Dimensional Data Clustering

- A Review of Feature Selection and Its Methods: Cybernetics and Information Technologies March 2019

- A Novel Approach For Feature Selection Based On Correlation Measures CFS and Chi Square

- A Review of Feature Selection Methods On Synthetic Data

- A Comparative Study Between Feature Selection Algorithms - Ok

- Optimal Feature Selection from VMware ESXi 5.1 Feature Set

- 1.a Faster Clustering-Based Feature Subset Selection Algorithm For High Dimensional Data

- Feature Selection Techniques For Microarray Dataset: A Review

- Feature Subset Selection: A Correlation Based Filter Approach

- A Study On Feature Selection Techniques in Bio Informatics

- Toward Integrating Feature Selection Algorithms For Classification and Clustering-M7s PDF

- 2015-Elsevier-Multi-objective-optimization-of-shared-nearest-neighbor-similarity-for-feature-selection

- A Fast Clustering-Based Feature Subset Selection Algorithm For High Dimensional Data

- Improving Floating Search Feature Selection Using Genetic Algorithm

- 1-2 The Problem 3-4 Proposed Solution 5-7 The Experiment 8-9 Experimental Results 10-11 Conclusion 12 References 13

- Independent Feature Elimination in High Dimensional Data: Empirical Study by Applying Learning Vector Quantization Method

- Clustering Based Attribute Subset Selection Using Fast Algorithm

- A Review of Feature Selection Methods With Applications

- On_the_behavior_of_feature_selection_methods_dealing_with_noise_and_relevance_over_synthetic_scenarios

- DATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLAB

- DATA MINING and MACHINE LEARNING. CLASSIFICATION PREDICTIVE TECHNIQUES: SUPPORT VECTOR MACHINE, LOGISTIC REGRESSION, DISCRIMINANT ANALYSIS and DECISION TREES: Examples with MATLAB

- DATA MINING and MACHINE LEARNING: CLUSTER ANALYSIS and kNN CLASSIFIERS. Examples with MATLAB

- Fabrication of High Speed Indication and Automatic Pneumatic Braking System

- Design, Development and Performance Evaluation of Solar Dryer With Mirror Booster For Red Chilli (Capsicum Annum)

- Implementation of Single Stage Three Level Power Factor Correction AC-DC Converter With Phase Shift Modulation

- Non-Linear Static Analysis of Multi-Storied Building
