Orthogonal Biorthogonal and Simplex Signals


Uploaded by indameantime5655


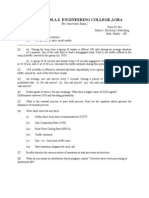

4.

2-21

4.2.4 Orthogonal, Biorthogonal and Simplex Signals

In PAM, QAM and PSK, we had only one basis function. For orthogonal, biorthogonal and simplex signals, however, we use more than one orthogonal basis function, so the signal space is N-dimensional. Examples: the Fourier basis; time-translated pulses; the Walsh-Hadamard basis.

o We've become used to SER getting worse quickly as we add bits to the symbol, but with these orthogonal signals it actually gets better.

o The drawback is bandwidth occupancy; the number of dimensions in bandwidth W and symbol time $T_s$ is

$$N \approx 2 W T_s$$

o So we use these sets when the power budget is tight but there's plenty of bandwidth.

Orthogonal Signals

With orthogonal signals, we select only one of the orthogonal basis functions for transmission:

The number of signals M equals the number of dimensions N.
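To make the bandwidth cost concrete, you can combine the dimension count $N \approx 2WT_s$ with $N = M$ and write $T_s = \log_2(M)/R$ for a bit rate R. R is a symbol introduced here, not in the notes. This gives a spectral efficiency of roughly $R/W \approx 2\log_2(M)/M$. The sketch below assumes the approximation $N = 2WT_s$ holds exactly.

```python
import math

def orthogonal_spectral_efficiency(M: int) -> float:
    """Approximate R/W (bits/s/Hz) for M-ary orthogonal signalling.

    Assumes N = 2*W*Ts exactly (the notes give it only approximately),
    N = M dimensions, and Ts = log2(M)/R for a bit rate R.
    """
    k = math.log2(M)
    # W = N / (2 Ts) = M R / (2 k)  =>  R / W = 2 k / M
    return 2.0 * k / M

for M in (2, 4, 8, 16, 32, 64):
    print(f"M = {M:3d}: R/W ~ {orthogonal_spectral_efficiency(M):.4f} bits/s/Hz")
```

Beyond M = 4 the efficiency falls steadily. This is the bandwidth price paid for the improving SER.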


Examples of orthogonal signals are frequency-shift keying (FSK), pulse position modulation (PPM), and selection of one of the Walsh-Hadamard functions (note that with the Fourier basis, this is FSK, not OFDM).

Energy and distance:

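o The signals are equidistant, as can be seen from the sketch or from

$$\mathbf{s}_i = \sqrt{E_s}\,\mathbf{e}_i \;(\sqrt{E_s} \text{ at location } i), \qquad \mathbf{s}_j = \sqrt{E_s}\,\mathbf{e}_j \;(\sqrt{E_s} \text{ at location } j)$$

so

$$\|\mathbf{s}_i - \mathbf{s}_j\| = \sqrt{2E_s} = \sqrt{2\log_2(M)\,E_b} = \sqrt{2kE_b} = d_{\min}, \quad \forall\, i \ne j$$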
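The equidistance property is easy to check numerically. The sketch below builds two M = 8 orthogonal sets: scaled unit vectors (the coordinates of FSK or PPM) and rows of a Walsh-Hadamard matrix. It then confirms that every pair is $\sqrt{2kE_b}$ apart. The Sylvester construction and the helper names are illustrative choices, not taken from the notes, and real-valued vectors are assumed.

```python
import itertools
import numpy as np

def sylvester_hadamard(n: int) -> np.ndarray:
    """Walsh-Hadamard matrix of order n (n a power of 2), Sylvester construction."""
    H = np.array([[1.0]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H

def pairwise_distances(S: np.ndarray) -> list:
    """Euclidean distances between all distinct pairs of rows of S."""
    return [np.linalg.norm(S[i] - S[j]) for i, j in itertools.combinations(range(len(S)), 2)]

M, Eb = 8, 1.0
k = np.log2(M)
Es = k * Eb
S_std = np.sqrt(Es) * np.eye(M)                    # sqrt(Es) times the standard basis
S_wh = np.sqrt(Es / M) * sylvester_hadamard(M)     # Hadamard rows scaled to energy Es

d_expected = np.sqrt(2 * k * Eb)
for name, S in (("unit vectors", S_std), ("Walsh-Hadamard", S_wh)):
    print(name, "equidistant at sqrt(2 k Eb):", np.allclose(pairwise_distances(S), d_expected))
```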


Effect of adding bits:

o For a fixed energy per bit, adding more bits increases the minimum distance, in strong contrast to PAM, QAM and PSK.

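o But adding each bit doubles the number of signals M, which equals the number of dimensions N, and that doubles the bandwidth!

Error analysis for orthogonal signals. The signals have equal energy and are equiprobable, so the optimum receiver picks the largest correlation,

$$\hat{m} = \arg\max_{m}\, c_m, \qquad c_m = \mathrm{Re}\,\langle \mathbf{r}, \boldsymbol{\phi}_m \rangle$$

where $\boldsymbol{\phi}_m$ is the m-th basis function.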

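o The error probability is the same for all signals. If $\mathbf{s}_1$ was sent, then the correlation vector is

$$\mathbf{c} = \left[\sqrt{E_s} + n_1,\; n_2,\; \dots,\; n_M\right]^T$$

with real components; the noise terms $n_m$ are independent $\mathcal{N}(0, N_0/2)$.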
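A quick Monte Carlo check of this model simulates the correlator outputs directly rather than waveforms. Noise goes into every branch, $\sqrt{E_s}$ is added to the transmitted branch, and the receiver picks the largest output. The normalization $E_b = 1$, the seed and the symbol count are arbitrary assumptions of this sketch.

```python
import numpy as np

def simulate_orthogonal_ser(M: int, gamma_b_db: float, n_symbols: int = 200_000, seed: int = 1) -> float:
    """Monte Carlo SER for coherent M-ary orthogonal signals in real AWGN.

    Simulates c = sqrt(Es) * e_m + n with n ~ N(0, N0/2) i.i.d. per branch,
    then decides for the largest c_m. Eb = 1 is an arbitrary normalization.
    """
    rng = np.random.default_rng(seed)
    Eb = 1.0
    Es = np.log2(M) * Eb
    N0 = Eb / 10 ** (gamma_b_db / 10)
    tx = rng.integers(0, M, size=n_symbols)                     # transmitted indices
    c = rng.normal(0.0, np.sqrt(N0 / 2), size=(n_symbols, M))   # noise in every branch
    c[np.arange(n_symbols), tx] += np.sqrt(Es)                  # signal in the sent branch
    decisions = np.argmax(c, axis=1)                            # largest-correlation rule
    return float(np.mean(decisions != tx))

for M in (2, 4, 8, 16):
    print(f"M = {M:2d}: simulated SER at 6 dB = {simulate_orthogonal_ser(M, 6.0):.3e}")
```

For M = 2 the estimate should sit close to $Q(\sqrt{\gamma_b})$, the binary orthogonal result.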


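o Assume $\mathbf{s}_1$ was sent. The probability of a correct symbol decision, conditioned on the value of the received $c_1$, is

$$P_{cs}(c_1) = P\left[(n_2 < c_1) \cap (n_3 < c_1) \cap \dots \cap (n_M < c_1)\right] = \left[1 - Q\!\left(\frac{c_1}{\sqrt{N_0/2}}\right)\right]^{M-1}$$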

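o Then the unconditional probability of correct symbol detection is

$$P_{cs} = \int_{-\infty}^{\infty} \left[1 - Q\!\left(\frac{c_1}{\sqrt{N_0/2}}\right)\right]^{M-1} p_{c_1}(c_1)\, dc_1$$

$$= \int_{-\infty}^{\infty} \left[1 - Q\!\left(\frac{c_1}{\sqrt{N_0/2}}\right)\right]^{M-1} \frac{1}{\sqrt{2\pi}}\,\frac{1}{\sqrt{N_0/2}}\, \exp\!\left(-\frac{1}{2}\left(\frac{c_1 - \sqrt{E_s}}{\sqrt{N_0/2}}\right)^2\right) dc_1$$

$$= \int_{-\infty}^{\infty} \left(1 - Q(u)\right)^{M-1} \frac{1}{\sqrt{2\pi}}\, \exp\!\left(-\frac{1}{2}\left(u - \sqrt{2\gamma_s}\right)^2\right) du$$

where $u = c_1/\sqrt{N_0/2}$ and $\gamma_s = E_s/N_0$.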

This integral needs a numerical evaluation.

o The unconditional probability of symbol error (SER) is

$$P_{es} = 1 - P_{cs}$$
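Here is one way to do that numerical evaluation. The sketch applies a plain trapezoidal rule to the last form of $P_{cs}$ over a finite window around the Gaussian mean, then takes $P_{es} = 1 - P_{cs}$. The window half-width and grid size are assumptions chosen to make the truncation error negligible, and $\gamma_b$ is passed as a linear ratio, not in dB.

```python
import math

def q_func(x: float) -> float:
    """Gaussian tail probability Q(x) = P[N(0,1) > x]."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def ser_orthogonal(M: int, gamma_b: float, n_points: int = 4001, half_width: float = 10.0) -> float:
    """SER of coherent M-ary orthogonal signals in AWGN (gamma_b linear, not dB).

    Trapezoidal evaluation of P_cs = int (1 - Q(u))^(M-1) phi(u - sqrt(2 gamma_s)) du,
    with phi the standard normal pdf and gamma_s = log2(M) * gamma_b.
    """
    mu = math.sqrt(2.0 * math.log2(M) * gamma_b)
    lo = mu - half_width
    h = 2.0 * half_width / (n_points - 1)
    total = 0.0
    for i in range(n_points):
        u = lo + i * h
        pdf = math.exp(-0.5 * (u - mu) ** 2) / math.sqrt(2.0 * math.pi)
        f = (1.0 - q_func(u)) ** (M - 1) * pdf
        weight = 0.5 if i in (0, n_points - 1) else 1.0   # trapezoidal end weights
        total += weight * f
    p_cs = total * h
    return 1.0 - p_cs

gamma_b = 10 ** (6.0 / 10)   # 6 dB
for M in (2, 4, 8, 16):
    print(f"M = {M:2d}: SER at 6 dB = {ser_orthogonal(M, gamma_b):.3e}")
```

For M = 2 the result reduces to $Q(\sqrt{\gamma_b})$, which makes a convenient sanity check.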


Now for the bit error rate.

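o All errors are equally likely, each with probability

$$\frac{P_{es}}{M-1} = \frac{P_{es}}{2^k - 1}.$$

No point in Gray coding.

o We can get i bit errors in $\binom{k}{i}$ equiprobable ways, so

$$P_{eb} = \frac{1}{k}\sum_{i=1}^{k} i\binom{k}{i}\frac{P_{es}}{2^k-1} = \frac{P_{es}}{2^k-1}\sum_{i=1}^{k}\binom{k-1}{i-1} = \frac{2^{k-1}}{2^k-1}\,P_{es}$$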
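The combinatorial step can be checked with exact rational arithmetic. The sketch evaluates the sum $\frac{1}{k}\sum_i i\binom{k}{i}/(2^k-1)$ for several k and compares it with the closed form $2^{k-1}/(2^k-1)$. The function name is illustrative.

```python
from fractions import Fraction
from math import comb

def ber_over_ser_orthogonal(k: int) -> Fraction:
    """P_eb / P_es for M = 2**k orthogonal signals, all M-1 wrong symbols equally likely."""
    M = 2 ** k
    # expected number of wrong bits per symbol error, divided by k bits per symbol
    total = sum(Fraction(i * comb(k, i), M - 1) for i in range(1, k + 1))
    return total / k

for k in range(1, 7):
    r = ber_over_ser_orthogonal(k)
    closed_form = Fraction(2 ** (k - 1), 2 ** k - 1)
    print(f"k = {k}: P_eb/P_es = {r} = {float(r):.4f}, matches closed form: {r == closed_form}")
```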

About half the bits are in error in the average symbol error, since $2^{k-1}/(2^k-1) \approx 1/2$.

The SER for orthogonal signals is striking:

[Figure: Symbol Error Prob, Orthogonal Signals. Prob of symbol error (log scale, 1 down to 10^-6) vs. SNR per bit, gamma_b (dB), for M = 2, 4, 8, 16. Annotations: "The SER improves as we add bits!" and "But the bandwidth doubles with each additional bit."]


Why does SER drop as M increases?

o All points are separated by the same $d = \sqrt{2\log_2(M)\,E_b}$. Since $d^2$ increases linearly with $k = \log_2(M)$, the pairwise error probability (between two specific points) decreases exponentially with k, the number of bits per symbol.

o Offsetting this is the number M-1 of neighbours of any point: there are M-1 ways of getting it wrong, and M increases exponentially with k.

o Which effect wins? We can't get much analytical traction from the integral expression for $P_{cs}$, so we fall back to a union bound.

Union bound analysis:

o Without loss of generality, assume $\mathbf{s}_1$ was transmitted.

o Define $E_m,\ m = 2, \dots, M$, as the event that $\mathbf{r}$ is closer to $\mathbf{s}_m$ than to $\mathbf{s}_1$.


o Note that $E_m$ is a pairwise error event. It does not imply that the receiver's decision is m. In fact, the events $E_2, \dots, E_M$ are not mutually exclusive, since $\mathbf{r}$ could lie closer to two or more points than to $\mathbf{s}_1$:

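o The overall error event is $E = E_2 \cup E_3 \cup \dots \cup E_M$, so the SER is bounded by the sum of the pairwise event probabilities¹. Using $Q(x) \le \tfrac{1}{2}e^{-x^2/2}$ and $M - 1 < 2^k$ in the last steps,

$$P_{es} = P[E] = P[E_2 \cup E_3 \cup \dots \cup E_M] \le \sum_{m=2}^{M} P[E_m] = (M-1)\,Q\!\left(\sqrt{\frac{d^2}{2N_0}}\right) = (M-1)\,Q\!\left(\sqrt{k\gamma_b}\right) < 2^k \cdot \frac{1}{2}e^{-k\gamma_b/2} < e^{-k\left(\gamma_b/2 - \ln 2\right)}$$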

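¹ A general expansion (inclusion-exclusion) is

$$P[A_1 \cup A_2 \cup \dots \cup A_M] = \sum_{i=1}^{M} P[A_i] - \sum_{i=1}^{M}\sum_{j=i+1}^{M} P[A_i \cap A_j] + \sum_{i=1}^{M}\sum_{j=i+1}^{M}\sum_{l=j+1}^{M} P[A_i \cap A_j \cap A_l] - \dots$$

and so on; keeping only the first sum gives the union bound.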


o From the bound, if $\gamma_b > 2\ln(2) = 1.386$ (about 1.42 dB), then loading more bits onto a symbol causes the upper bound on SER to drop exponentially to zero.

o Conversely, if $\gamma_b < 2\ln(2)$, increasing k causes the upper bound on SER to rise exponentially (the SER itself saturates at 1).

o Too bad that the dimensionality, the number of correlators and the bandwidth also increase exponentially with k.

This scheme is called block orthogonal coding. Thresholds are characteristic of coded systems. Remember that this analysis is based on bounds.
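The threshold behaviour can be tabulated directly. The sketch evaluates the union bound $(M-1)Q(\sqrt{k\gamma_b})$ and the looser exponential bound $e^{-k(\gamma_b/2 - \ln 2)}$ at two values of $\gamma_b$. One value sits below $2\ln 2$ and one above; both are arbitrary example values, given as linear ratios, not dB.

```python
import math

def q_func(x: float) -> float:
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def union_bound_orthogonal(k: int, gamma_b: float) -> float:
    """(M-1) Q(sqrt(k gamma_b)) with M = 2**k; gamma_b is linear (not dB)."""
    return (2 ** k - 1) * q_func(math.sqrt(k * gamma_b))

def exponential_bound(k: int, gamma_b: float) -> float:
    """Looser bound exp(-k (gamma_b/2 - ln 2))."""
    return math.exp(-k * (gamma_b / 2 - math.log(2)))

threshold = 2 * math.log(2)           # about 1.386, i.e. about 1.42 dB
for gamma_b in (1.0, 4.0):            # one value below, one above the threshold
    side = "above" if gamma_b > threshold else "below"
    print(f"gamma_b = {gamma_b} ({side} threshold {threshold:.3f})")
    for k in (1, 5, 10, 20, 40):
        print(f"  k = {k:2d}: union bound = {union_bound_orthogonal(k, gamma_b):.3e}, "
              f"exp bound = {exponential_bound(k, gamma_b):.3e}")
```

Below the threshold both bounds eventually exceed 1 and become useless, even though the true SER stays at or below 1.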

[Figure: SER and Bound, Orthogonal Signals. Prob of symbol error (log scale, 10 down to 10^-6) vs. SNR per bit, gamma_b (dB), from -2 to 16 dB, for M = 2, 4, 8, 16. True SERs are solid lines, bounds are dashed. The upper bound is useful to show the decreasing SER, but is too loose for a good approximation.]


Biorthogonal Signals

If you have coherent detection, why waste the other side of the axis? Double the number of signal points (add one bit) by also using the negated signals $-\mathbf{s}_i$, with amplitude $-\sqrt{E_s}$. Now $M = 2N$, with no increase in bandwidth. Or keep the same M and cut the bandwidth in half.

Energy and distance:

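All signal points are equidistant from $\mathbf{s}_i$,

$$\|\mathbf{s}_i - \mathbf{s}_k\| = \sqrt{2E_s} = d_{\min}, \quad \mathbf{s}_k \ne \pm\mathbf{s}_i \quad \text{(the same as orthogonal signals)}$$

except one, the reflection through the origin, which is farther away:

$$\|\mathbf{s}_i - (-\mathbf{s}_i)\| = 2\sqrt{E_s}$$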
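A small numerical check of these two distances builds a biorthogonal set with N = 4 ($\pm\sqrt{E_s}$ on each axis, so M = 8). It then groups the pairwise distances by whether the pair is antipodal. The choice N = 4 and $E_s = 1$ is arbitrary.

```python
import itertools
import numpy as np

N, Es = 4, 1.0
basis = np.sqrt(Es) * np.eye(N)
S = np.vstack([basis, -basis])          # M = 2N signals: +/- sqrt(Es) on each axis
M = S.shape[0]

dists = {True: [], False: []}           # keyed by "is this pair antipodal?"
for i, j in itertools.combinations(range(M), 2):
    is_antipode = bool(np.allclose(S[i], -S[j]))
    dists[is_antipode].append(np.linalg.norm(S[i] - S[j]))

print("M =", M)
print("non-antipodal pairs at sqrt(2 Es):", np.allclose(dists[False], np.sqrt(2 * Es)))
print("antipodal pairs at 2 sqrt(Es):", np.allclose(dists[True], 2 * np.sqrt(Es)))
```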


Symbol error rate:

The probability of error is messy, but the union bound is easy. A signal is equidistant from all other signals except its own complement $-\mathbf{s}_i$.

o So the union bound on SER is

$$P_s \le (M-2)\,Q\!\left(\sqrt{\gamma_s}\right) + Q\!\left(\sqrt{2\gamma_s}\right) \le (M-2)\,Q\!\left(\sqrt{\gamma_s}\right) = (M-2)\,Q\!\left(\sqrt{\log_2(M)\,\gamma_b}\right)$$

where the second term is redundant (Sec'n 4.5.4). This is slightly less than the bound for orthogonal signaling, for the same M and the same $\gamma_b$. The major benefit is that biorthogonal signaling needs only half the bandwidth of orthogonal, since it has half the number of dimensions.
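To see how small the SER difference is compared with the bandwidth difference, the sketch tabulates both union bounds and the number of dimensions for a few M. The operating point of 8 dB is an arbitrary example, and M starts at 4 because the biorthogonal set needs at least two axes.

```python
import math

def q_func(x: float) -> float:
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def union_bound_orthogonal(M: int, gamma_b: float) -> float:
    """(M-1) Q(sqrt(log2(M) gamma_b)); gamma_b linear."""
    return (M - 1) * q_func(math.sqrt(math.log2(M) * gamma_b))

def union_bound_biorthogonal(M: int, gamma_b: float) -> float:
    """(M-2) Q(sqrt(log2(M) gamma_b)); gamma_b linear."""
    return (M - 2) * q_func(math.sqrt(math.log2(M) * gamma_b))

gamma_b = 10 ** (8 / 10)   # 8 dB, an arbitrary example operating point
for M in (4, 8, 16, 32):
    print(f"M = {M:2d}: dims orth = {M:2d}, dims biorth = {M // 2:2d}, "
          f"bound orth = {union_bound_orthogonal(M, gamma_b):.3e}, "
          f"bound biorth = {union_bound_biorthogonal(M, gamma_b):.3e}")
```

The bounds differ only by the factor (M-2)/(M-1), while the dimension count, and hence the bandwidth, halves.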

o And the bit error probability is

Pb log 2 ( M ) Ps 2


Simplex Signals

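The orthogonal signals can be seen as a mean value, shared by all, plus signal-dependent increments:

The mean signal (the centroid) is $\bar{\mathbf{s}} = \frac{1}{M}\sum_{m=1}^{M}\mathbf{s}_m$.

o The mean does not contribute to the distinguishability of the signals, so why not subtract it?

$$\mathbf{s}'_m = \mathbf{s}_m - \bar{\mathbf{s}} = \sqrt{E_s}\left[-\tfrac{1}{M}, \dots, -\tfrac{1}{M},\; 1 - \tfrac{1}{M},\; -\tfrac{1}{M}, \dots, -\tfrac{1}{M}\right]^T \quad \left(1 - \tfrac{1}{M} \text{ at location } m\right)$$

where $E_s$ is the original signal energy.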


o Removing the mean lowers the dimensionality by one. After rotation into tidier coordinates:

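They still have equal energy (though they are no longer orthogonal). That energy is

$$E'_s = \|\mathbf{s}'_m\|^2 = E_s\left[\left(1 - \frac{1}{M}\right)^2 + \frac{M-1}{M^2}\right] = E_s\left(1 - \frac{1}{M}\right)$$

The energy saving is small for larger M.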
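The construction can be reproduced in a few lines. The sketch subtracts the centroid from an M = 5 orthogonal set and checks three things: the energy becomes $E_s(1 - 1/M)$, all pairwise distances are unchanged, and the centred points span only M-1 dimensions. The values M = 5 and $E_s = 1$ are arbitrary.

```python
import itertools
import numpy as np

M, Es = 5, 1.0
S = np.sqrt(Es) * np.eye(M)          # orthogonal set
centroid = S.mean(axis=0)            # sqrt(Es)/M * [1, ..., 1]
S_simplex = S - centroid             # subtract the mean from every signal

energies = np.sum(S_simplex ** 2, axis=1)
print("energies:", energies, "expected:", Es * (1 - 1 / M))

# A common translation leaves every pairwise distance unchanged
pairs = list(itertools.combinations(range(M), 2))
d_orth = [np.linalg.norm(S[i] - S[j]) for i, j in pairs]
d_simp = [np.linalg.norm(S_simplex[i] - S_simplex[j]) for i, j in pairs]
print("distances unchanged:", np.allclose(d_orth, d_simp))

# The centred points lie in an (M-1)-dimensional subspace
print("rank:", np.linalg.matrix_rank(S_simplex))
```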


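The correlation among signals is

$$\mathrm{Re}[\rho_{mn}] = \frac{\langle \mathbf{s}'_m, \mathbf{s}'_n \rangle}{\|\mathbf{s}'_m\|\,\|\mathbf{s}'_n\|} = \frac{-1/M}{1 - 1/M} = -\frac{1}{M-1}, \quad m \ne n$$

A uniform negative correlation. The SER is easy: translation doesn't change the distances between signals, and hence doesn't change the error rate, so the SER is that of orthogonal signals with an $M/(M-1)$ SNR boost.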
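The sketch below checks the correlation $-1/(M-1)$ from the Gram matrix of the centred set and expresses the $M/(M-1)$ boost in dB. For M = 2 the simplex set is binary antipodal, and the boost is the familiar 3 dB over binary orthogonal. The helper `simplex_set` is a hypothetical name for this illustration.

```python
import numpy as np

def simplex_set(M: int, Es: float = 1.0) -> np.ndarray:
    """Simplex signals obtained by removing the centroid from sqrt(Es) * I_M."""
    S = np.sqrt(Es) * np.eye(M)
    return S - S.mean(axis=0)

for M in (2, 4, 8, 16):
    S = simplex_set(M)
    G = S @ S.T                                          # Gram matrix of inner products
    rho = G / np.sqrt(np.outer(np.diag(G), np.diag(G)))  # normalized correlations
    off_diag = rho[~np.eye(M, dtype=bool)]
    gain_db = 10 * np.log10(M / (M - 1))
    print(f"M = {M:2d}: rho = {off_diag.mean():+.4f} (expected {-1 / (M - 1):+.4f}), "
          f"SNR boost = {gain_db:.2f} dB")
```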

4.2.5 Vertices of a Hypercube and Generalizations

More signals defined on a multidimensional space. Unlike orthogonal signals, we now allow more than one dimension to be used in a symbol. The vertices of a hypercube are straightforward: two-level PAM (binary antipodal) on each of the N dimensions:

o With the Fourier basis, it is BPSK on each frequency at once, a simple OFDM.

o With the time-translate basis, it is classical NRZ transmission:


o Signal vectors:

$$\mathbf{s}_m = \left[s_{m1}, s_{m2}, \dots, s_{mN}\right]^T \quad \text{with} \quad s_{mn} = \pm\sqrt{E_s/N}$$

for $m = 1, \dots, M$ and $M = 2^N$.

o In space, it looks like this

o Every point has the same distance from the origin, hence the same energy

$$\|\mathbf{s}_m\|^2 = E_s = \log_2(M)\,E_b = N E_b$$


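o Minimum distance occurs for differences in a single coordinate, e.g.

$$\mathbf{s}_m = \sqrt{\frac{E_s}{N}}\,[1, 1, \dots, 1, 1]^T, \qquad \mathbf{s}_n = \sqrt{\frac{E_s}{N}}\,[1, 1, \dots, 1, -1]^T$$

$$d_{\min} = \|\mathbf{s}_m - \mathbf{s}_n\| = 2\sqrt{\frac{E_s}{N}} = 2\sqrt{E_b}$$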

More generally, for $d_H$ disagreements (Hamming distance),

$$\|\mathbf{s}_m - \mathbf{s}_n\| = 2\sqrt{\frac{d_H E_s}{N}} = 2\sqrt{d_H E_b}$$
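The distance-versus-Hamming-distance relation can be confirmed by brute force over all $2^N$ vertices. The sketch uses N = 4 and maps bit 0 to $+\sqrt{E_s/N}$ and bit 1 to $-\sqrt{E_s/N}$. Both the labeling and the sizes are assumptions made for illustration.

```python
import itertools
import numpy as np

N, Eb = 4, 1.0
Es = N * Eb
bits = np.array(list(itertools.product([0, 1], repeat=N)))  # all 2**N labels
S = np.sqrt(Es / N) * (1 - 2 * bits)                         # bit 0 -> +, bit 1 -> -

ok = True
for m, n in itertools.combinations(range(len(S)), 2):
    d_H = int(np.sum(bits[m] != bits[n]))                    # Hamming distance of labels
    d = np.linalg.norm(S[m] - S[n])                          # Euclidean distance of points
    ok = ok and bool(np.isclose(d, 2 * np.sqrt(d_H * Eb)))

print("all energies equal N*Eb:", np.allclose(np.sum(S ** 2, axis=1), Es))
print("d = 2 sqrt(d_H Eb) for every pair:", ok)
```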

o What are the effects on $d_{\min}$ and bandwidth if we increase the number of bits, keeping $E_b$ fixed?

It's easy to generalize from binary to PAM, PSK, QAM, etc. on each of the dimensions:

o With the Fourier basis, it is OFDM

o With time translates, it is the usual serial transmission.


Error analysis: all points have the same energy $E_s = N E_b$, and the Euclidean distance for Hamming distance h is $d = 2\sqrt{h E_b}$.

o If labeling is done independently on different dimensions (see sketch), then the independence of the noise lets the detector decompose into N independent detectors. So the joint structure

  $P_s \le N\,Q\!\left(\sqrt{2\gamma_b}\right) = \log_2(M)\,Q\!\left(\sqrt{2\gamma_b}\right)$; $P_b$ depends on the labels

becomes

  $P_s$ is meaningless; $P_b = Q\!\left(\sqrt{2\gamma_b}\right)$, just binary antipodal.
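A Monte Carlo sketch of the decomposed receiver makes a sign decision on each dimension separately. It compares the bit error rate with $Q(\sqrt{2\gamma_b})$, and the symbol error rate with both the exact $1 - (1-p)^N$ and the bound $Np$. The values N = 4, 4 dB, the seed and $E_b = 1$ are arbitrary choices for this illustration.

```python
import math
import numpy as np

def q_func(x: float) -> float:
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def simulate_hypercube(N: int, gamma_b_db: float, n_symbols: int = 200_000, seed: int = 7):
    """Monte Carlo (BER, SER) for hypercube-vertex signalling with independent
    sign detection on each of the N dimensions (Eb = 1 normalization)."""
    rng = np.random.default_rng(seed)
    Eb = 1.0
    N0 = Eb / 10 ** (gamma_b_db / 10)
    bits = rng.integers(0, 2, size=(n_symbols, N))
    tx = np.sqrt(Eb) * (1 - 2 * bits)                  # +/- sqrt(Es/N) = +/- sqrt(Eb)
    rx = tx + rng.normal(0.0, math.sqrt(N0 / 2), size=tx.shape)
    bits_hat = (rx < 0).astype(int)                    # per-dimension sign decision
    bit_errors = bits_hat != bits
    ber = bit_errors.mean()
    ser = bit_errors.any(axis=1).mean()                # symbol wrong if any bit wrong
    return float(ber), float(ser)

N, gamma_b_db = 4, 4.0
ber, ser = simulate_hypercube(N, gamma_b_db)
p = q_func(math.sqrt(2 * 10 ** (gamma_b_db / 10)))
print(f"BER sim = {ber:.4e}, Q(sqrt(2 gamma_b)) = {p:.4e}")
print(f"SER sim = {ser:.4e}, 1-(1-p)^N = {1 - (1 - p) ** N:.4e}, bound N p = {N * p:.4e}")
```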


Generalization: use multilevel signals (PAM) in each dimension. Again, if labeling is independent by dimension, then it's just independent, parallel use, like QAM. Not too exciting. Yet. These signals form a finite lattice.

Generalization: use a subset of the cube vertices, with points selected for greater minimum distance. This is a binary block code.

o Advantage: greater Euclidean distance

o Disadvantage: lower data rate
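The notes don't name a particular code. As an assumed example, take the even-weight (single-parity-check) vertices of the N = 3 cube and compare them with the full cube at the same energy per dimension $E_c$, a normalization introduced here. The trade-off appears directly: $d_{\min}^2$ doubles from $4E_c$ to $8E_c$, while the rate drops from 1 to 2/3 bits per dimension.

```python
import itertools
import numpy as np

def min_distance(points: np.ndarray) -> float:
    """Smallest Euclidean distance between any two rows."""
    return min(np.linalg.norm(a - b) for a, b in itertools.combinations(points, 2))

N, Ec = 3, 1.0   # Ec: energy per dimension (illustrative normalization)
all_bits = np.array(list(itertools.product([0, 1], repeat=N)))
cube = np.sqrt(Ec) * (1 - 2 * all_bits)

# Keep only even-weight vertices (single-parity-check code): 2**(N-1) points
even = all_bits.sum(axis=1) % 2 == 0
subset = cube[even]

for name, pts in (("full cube", cube), ("even-weight subset", subset)):
    print(f"{name:18s}: points = {len(pts)}, d_min^2 = {min_distance(pts) ** 2:.3f}, "
          f"bits/dimension = {np.log2(len(pts)) / N:.3f}")
```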
